ANN-Based Filtering of Drone LiDAR in Coastal Salt Marshes Using Spatial–Spectral Features

Abstract

1. Introduction

2. Materials and Methods

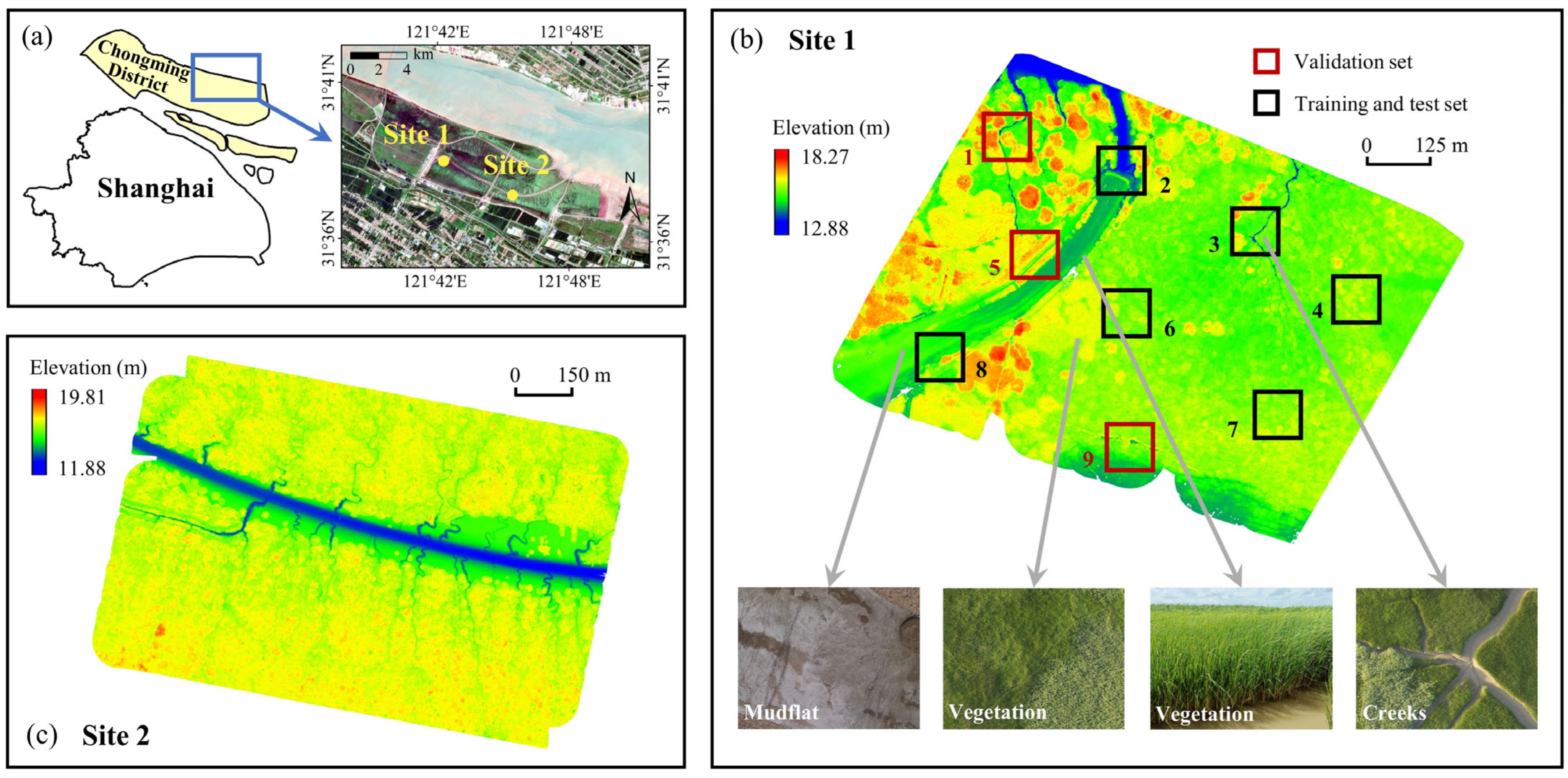

2.1. Materials

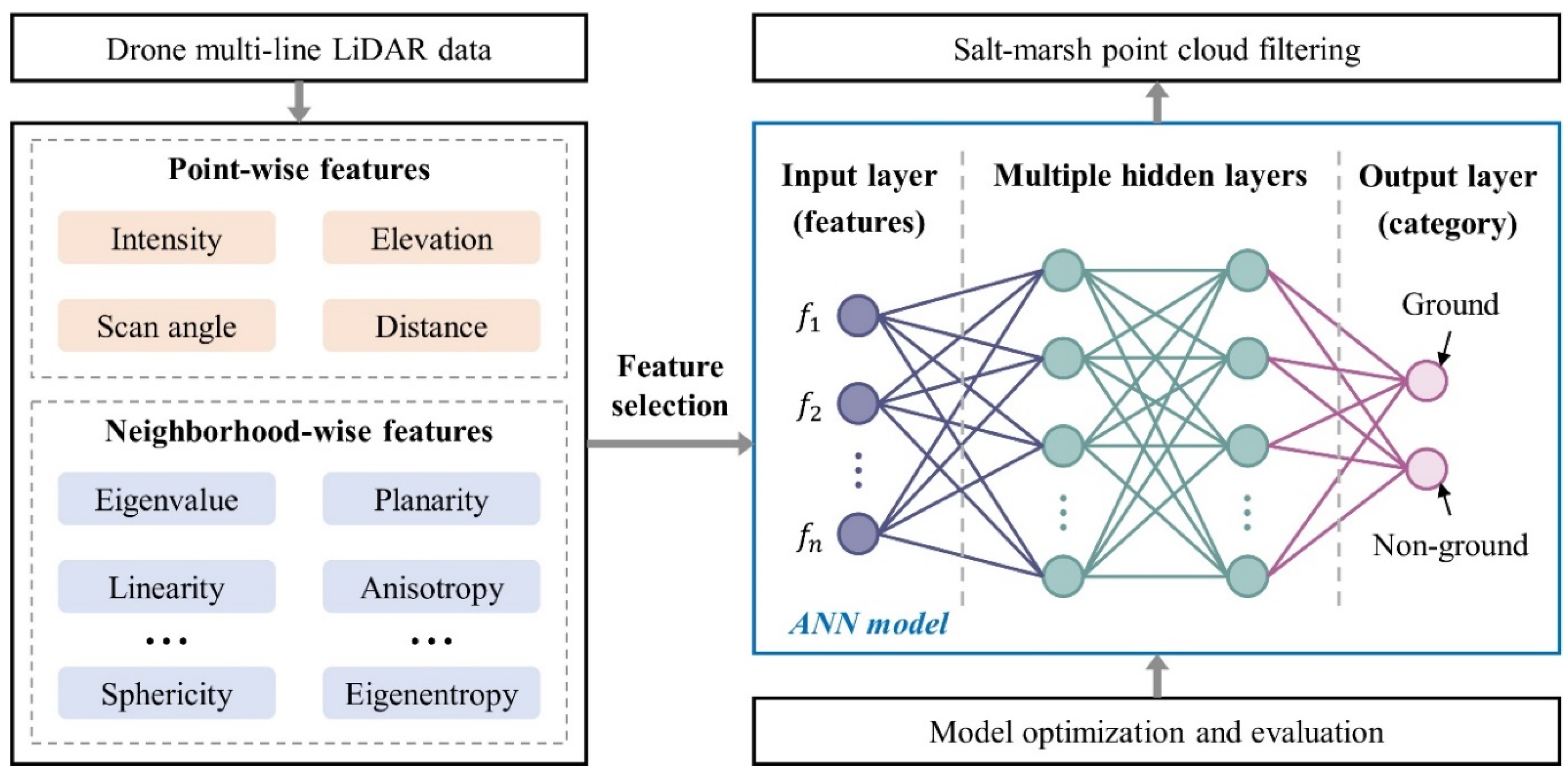

2.2. Overview of the Proposed Method

2.3. Feature Derivation and Selection

2.3.1. Point-Wise Features

2.3.2. Neighborhood-Wise Features

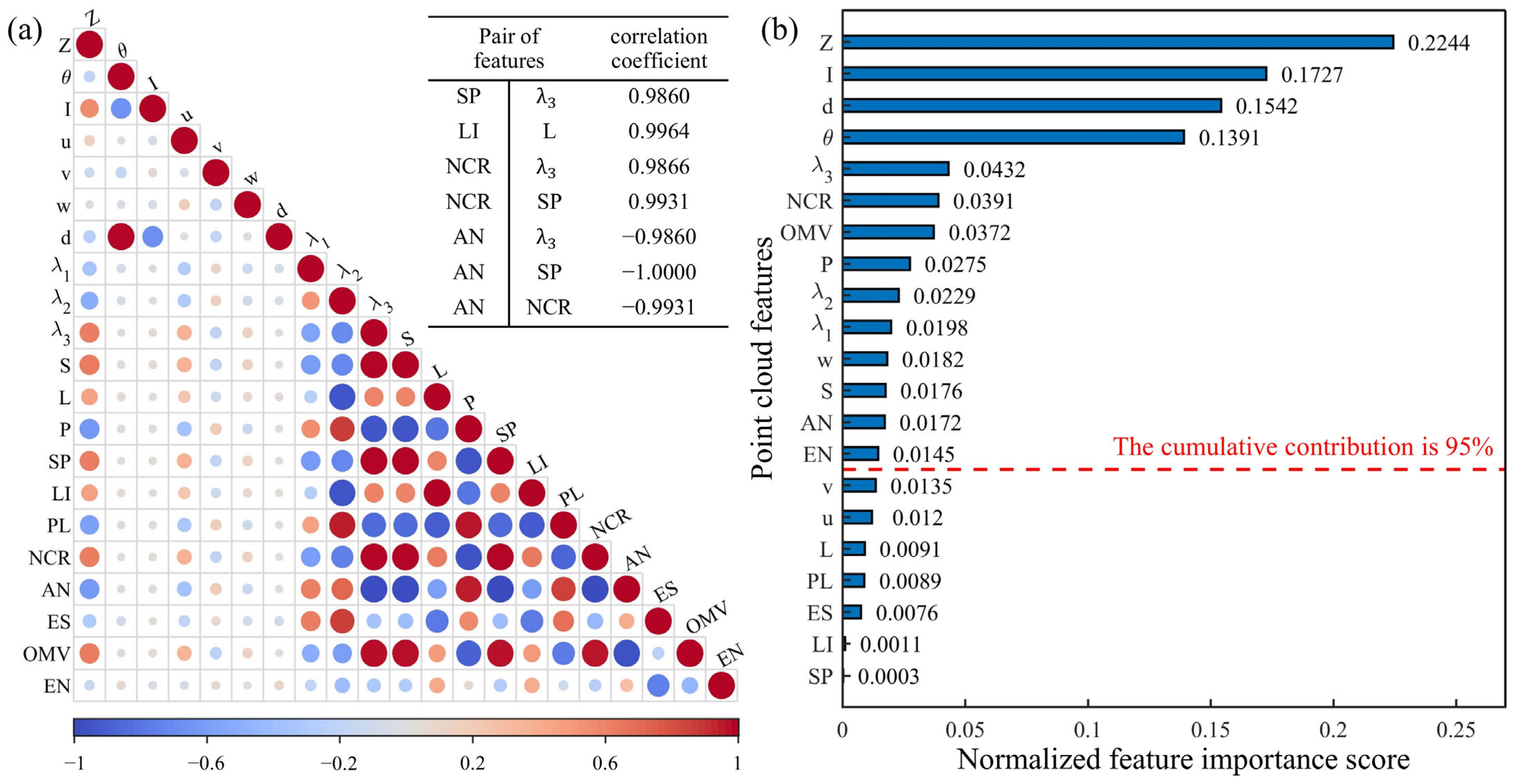

2.3.3. Feature Selection

2.4. ANN-Based Point Cloud Filtering

2.5. Accuracy Evaluation

3. Results

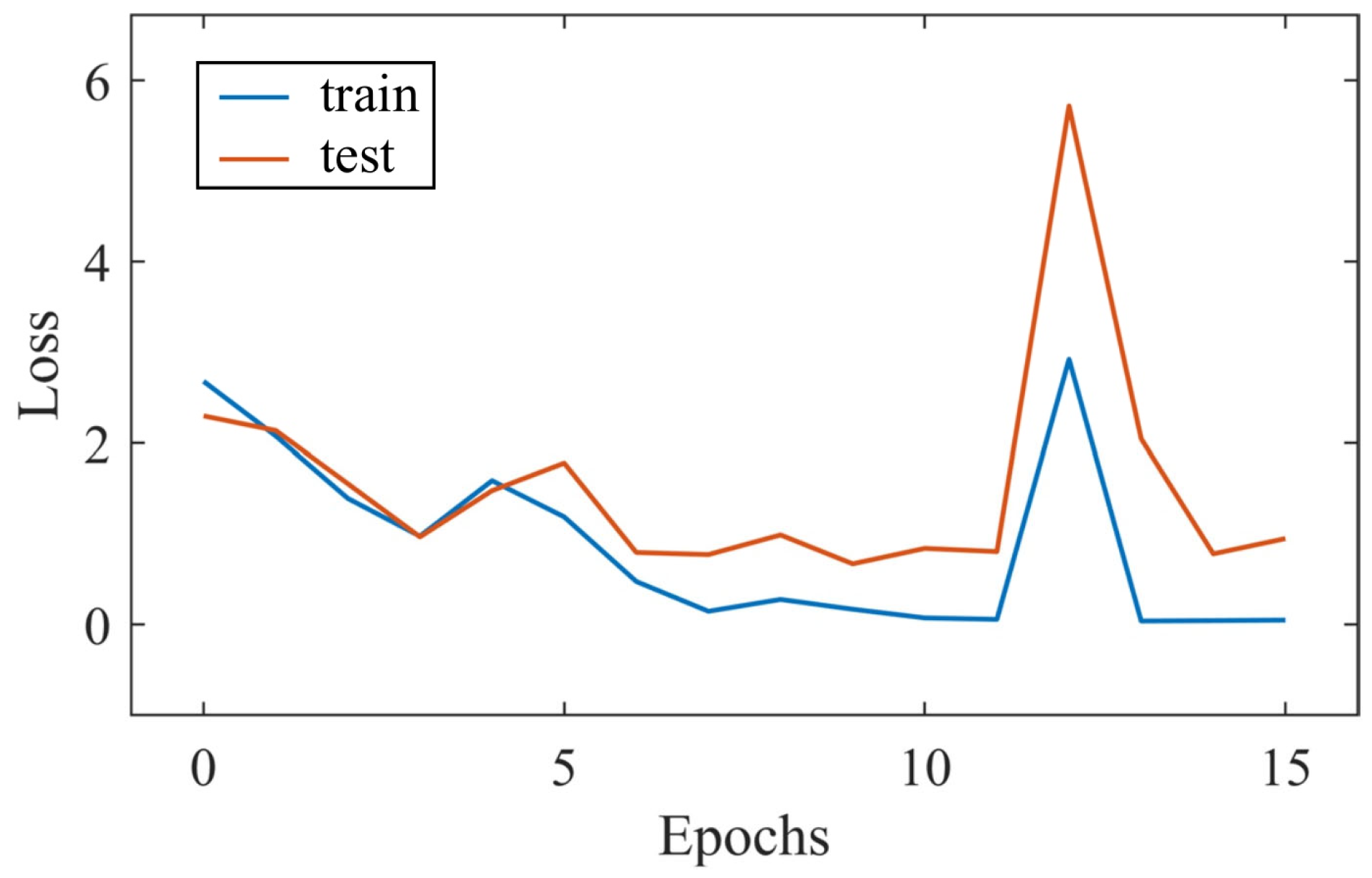

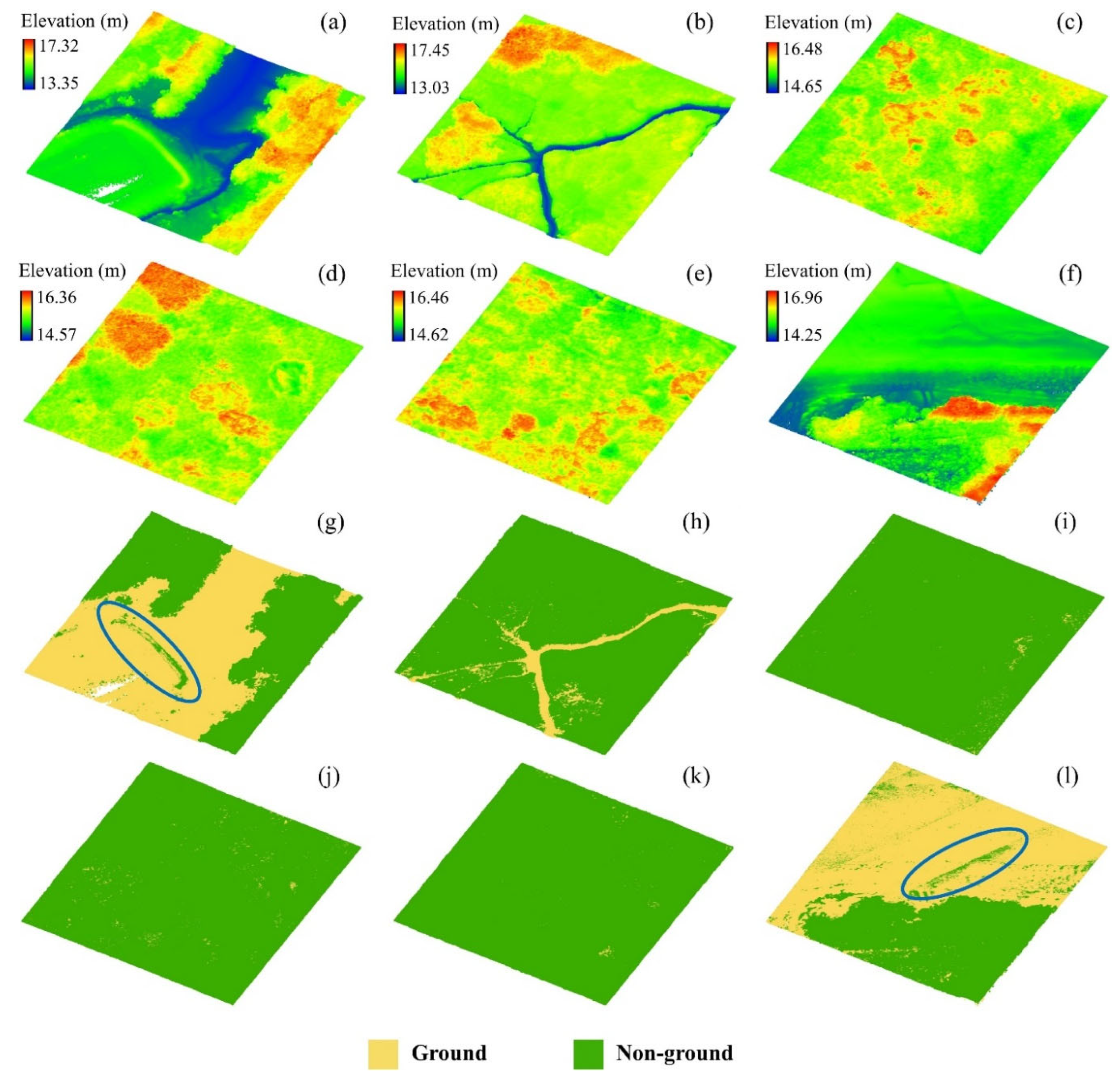

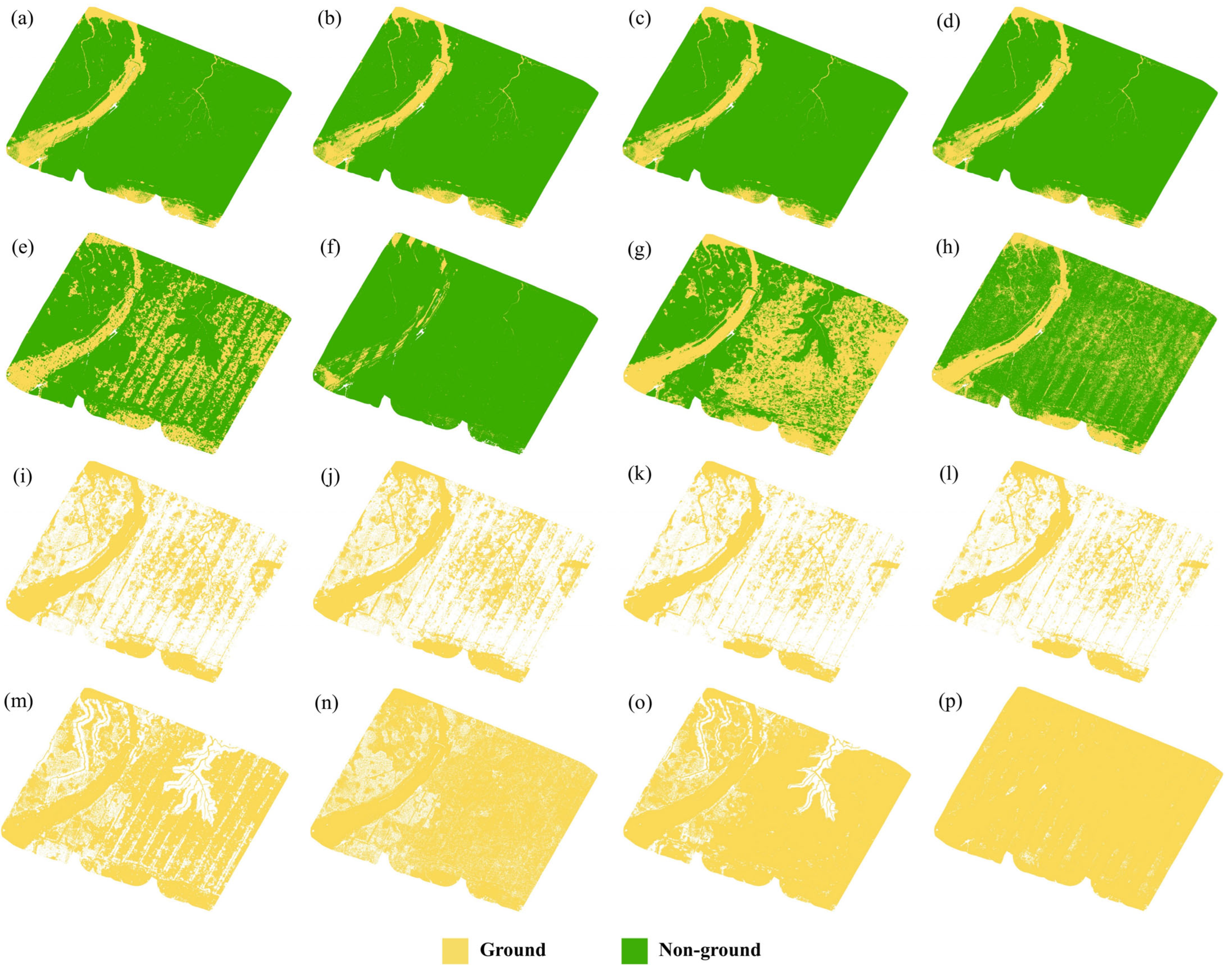

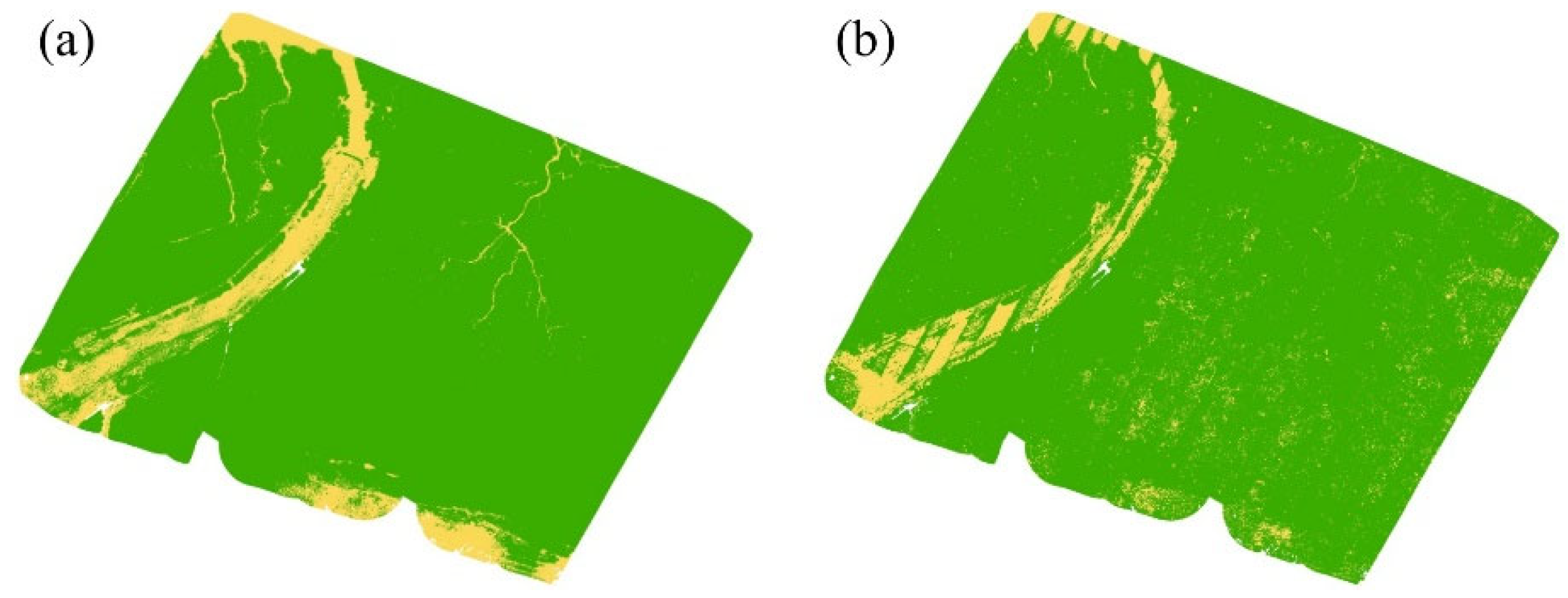

3.1. Results of Training and Test Sets

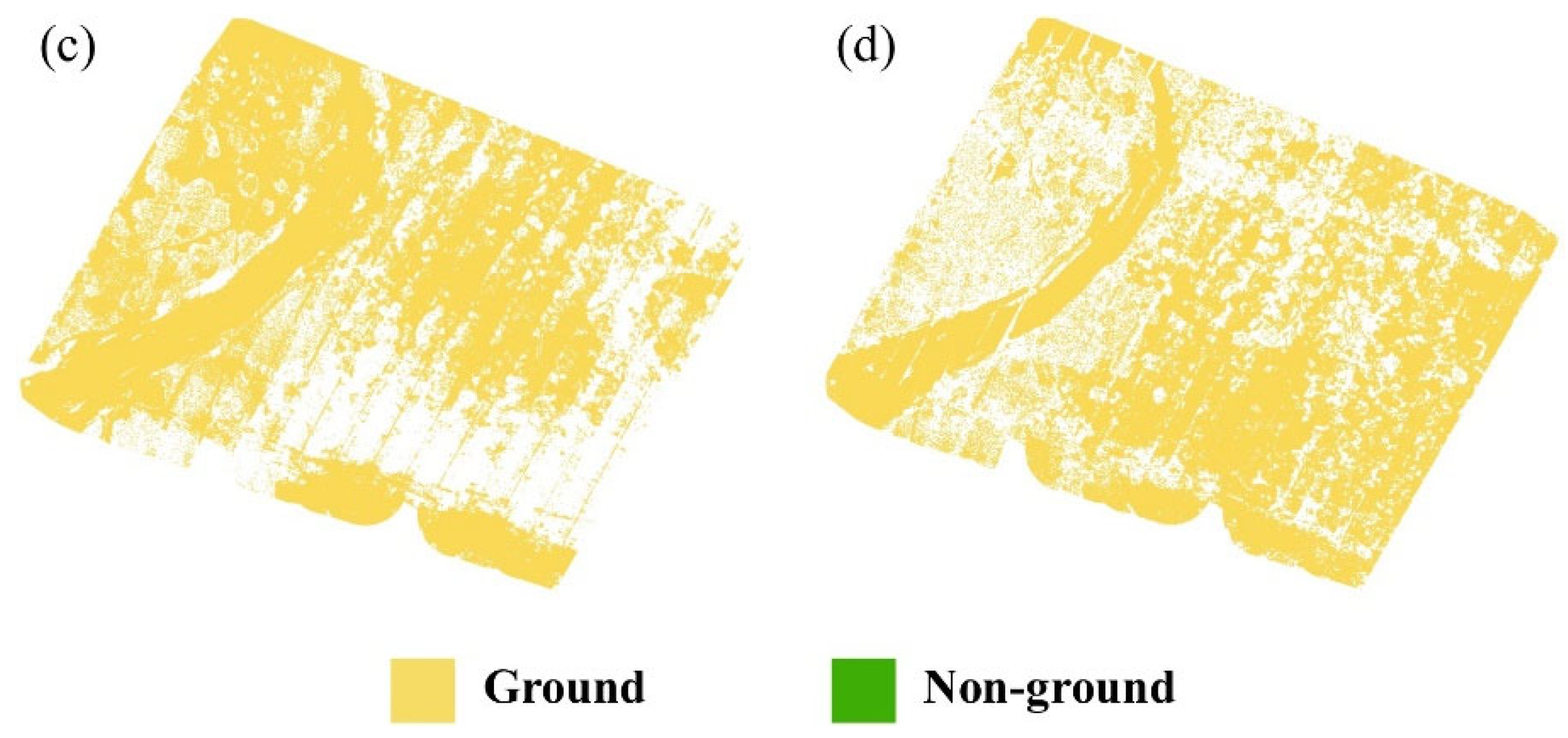

3.2. Results of Validation Set

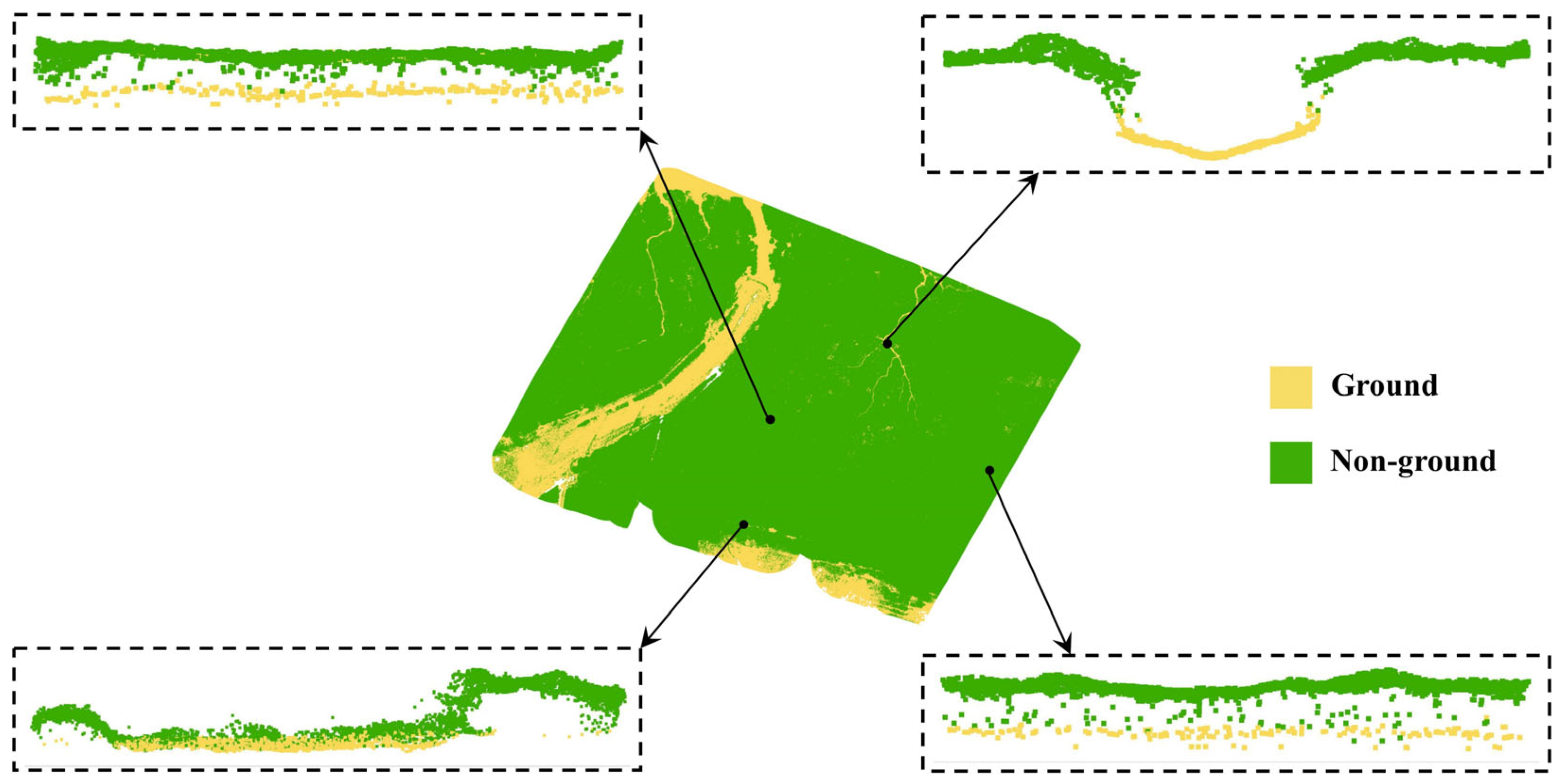

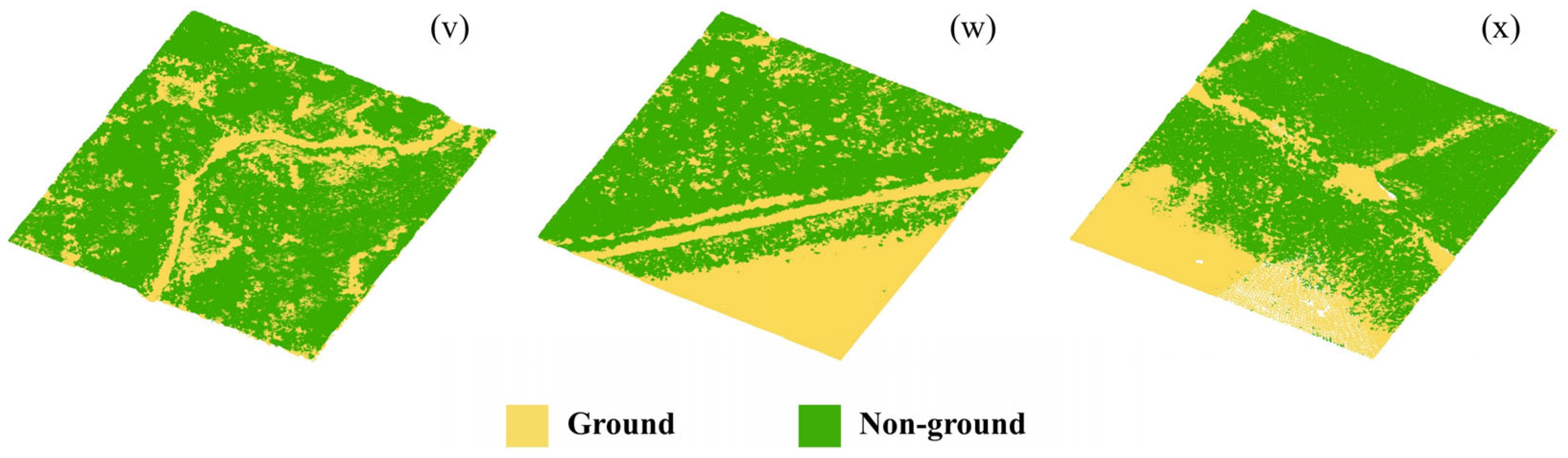

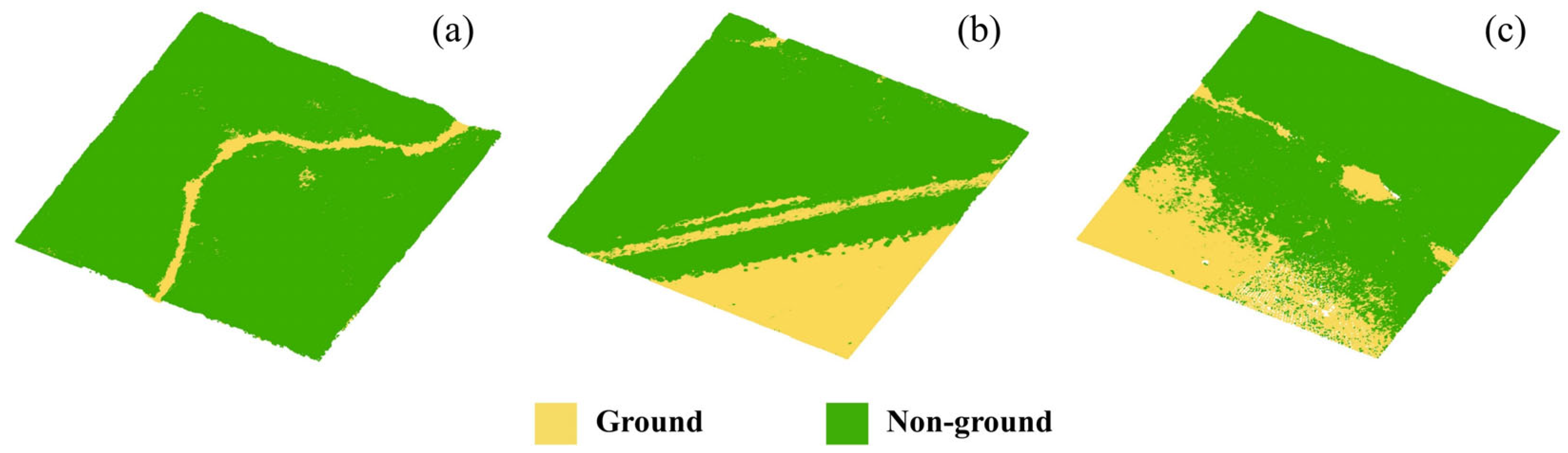

3.3. Filtering Results of Site 1

4. Discussion

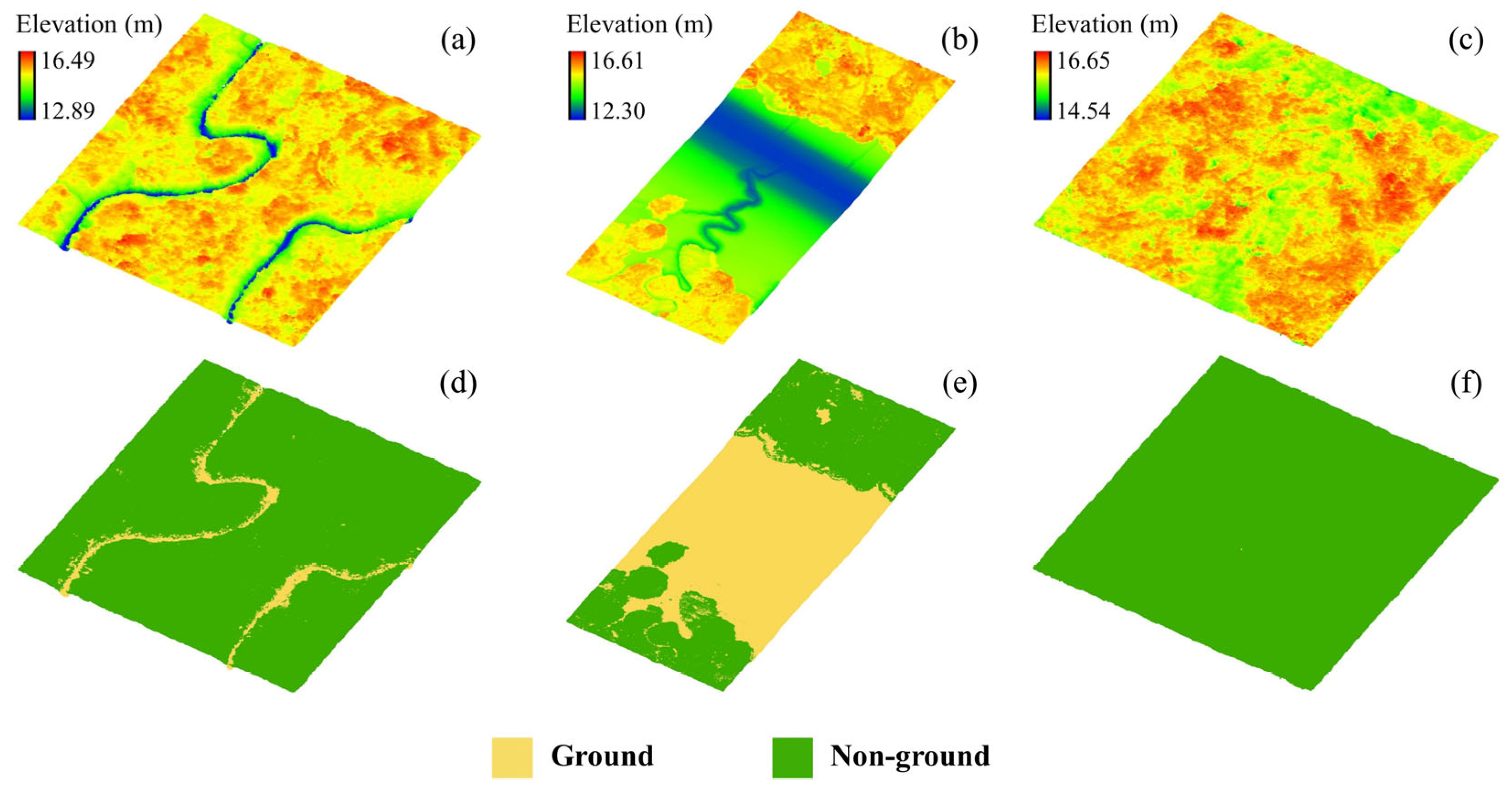

4.1. Comparison of Different Methods

4.2. Comparison of Different Features

4.3. Generalization Performance of the Proposed Method

4.4. Analysis of the Impact of Feature Selection

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix B

References

- Adams, J.B. Salt marsh at the tip of Africa: Patterns, processes and changes in response to climate change. Estuar. Coast. Shelf Sci. 2020, 237, 106650.

- Wang, F.M.; Sanders, C.J.; Santos, I.R.; Tang, J.W.; Schuerch, M.; Kirwan, M.L.; Kopp, R.E.; Zhu, K.; Li, X.Z.; Yuan, J.C.; et al. Global blue carbon accumulation in tidal wetlands increases with climate change. Natl. Sci. Rev. 2021, 8, nwaa296.

- Campbell, A.D.; Fatoyinbo, L.; Goldberg, L.; Lagomasino, D. Global hotspots of salt marsh change and carbon emissions. Nature 2022, 612, 701–706.

- Jin, C.; Gong, Z.; Shi, L.; Zhao, K.; Tinoco, R.O.; San Juan, J.E.; Geng, L.; Coco, G. Medium-term observations of salt marsh morphodynamics. Front. Mar. Sci. 2022, 9, 988240.

- Tan, K.; Chen, J.; Zhang, W.G.; Liu, K.B.; Tao, P.J.; Cheng, X.J. Estimation of soil surface water contents for intertidal mudflats using a near-infrared long-range terrestrial laser scanner. ISPRS-J. Photogramm. Remote Sens. 2020, 159, 129–139.

- Tang, Y.N.; Ma, J.; Xu, J.X.; Wu, W.B.; Wang, Y.C.; Guo, H.Q. Assessing the impacts of tidal creeks on the spatial patterns of coastal salt marsh vegetation and its aboveground biomass. Remote Sens. 2022, 14, 1839.

- Molino, G.D.; Defne, Z.; Aretxabaleta, A.L.; Ganju, N.K.; Carr, J.A. Quantifying slopes as a driver of forest to marsh conversion using geospatial techniques: Application to Chesapeake Bay Coastal-Plain, United States. Front. Environ. Sci. 2021, 9, 616319.

- Sandi, S.G.; Rodríguez, J.F.; Saintilan, N.; Riccardi, G.; Saco, P.M. Rising tides, rising gates: The complex ecogeomorphic response of coastal wetlands to sea-level rise and human interventions. Adv. Water Resour. 2018, 114, 135–148.

- Yi, W.B.; Wang, N.; Yu, H.Y.; Jiang, Y.H.; Zhang, D.; Li, X.Y.; Lv, L.; Xie, Z.L. An enhanced monitoring method for spatio-temporal dynamics of salt marsh vegetation using google earth engine. Estuar. Coast. Shelf Sci. 2024, 298, 108658.

- Gao, W.L.; Shen, F.; Tan, K.; Zhang, W.G.; Liu, Q.X.; Lam, N.S.N.; Ge, J.Z. Monitoring terrain elevation of intertidal wetlands by utilising the spatial-temporal fusion of multi-source satellite data: A case study in the Yangtze (Changjiang) Estuary. Geomorphology 2021, 383, 107683.

- Yang, B.X.; Ali, F.; Zhou, B.; Li, S.L.; Yu, Y.; Yang, T.T.; Liu, X.F.; Liang, Z.Y.; Zhang, K.C. A novel approach of efficient 3D reconstruction for real scene using unmanned aerial vehicle oblique photogrammetry with five cameras. Comput. Electr. Eng. 2022, 99, 107804.

- Taddia, Y.; Pellegrinelli, A.; Corbau, C.; Franchi, G.; Staver, L.W.; Stevenson, J.C.; Nardin, W. High-resolution monitoring of tidal systems using UAV: A case study on Poplar Island, MD (USA). Remote Sens. 2021, 13, 1364.

- An, S.K.; Yuan, L.; Xu, Y.; Wang, X.; Zhou, D.W. Ground subsidence monitoring in based on UAV-LiDAR technology: A case study of a mine in the Ordos, China. Geomech. Geophys. Geo-Energy Geo-Resour. 2024, 10, 57.

- Hodges, E.; Campbell, J.D.; Melebari, A.; Bringer, A.; Johnson, J.T.; Moghaddam, M. Using Lidar digital elevation models for reflectometry land applications. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5800509.

- Jancewicz, K.; Porebna, W. Point cloud does matter. Selected issues of using airborne LiDAR elevation data in geomorphometric studies of rugged sandstone terrain under forest—Case study from Central Europe. Geomorphology 2022, 412, 108316.

- Medeiros, S.C.; Bobinsky, J.S.; Abdelwahab, K. Locality of topographic ground truth data for salt marsh LiDAR DEM elevation bias mitigation. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2022, 15, 5766–5775.

- Tao, P.J.; Tan, K.; Ke, T.; Liu, S.; Zhang, W.G.; Yang, J.R.; Zhu, X.J. Recognition of ecological vegetation fairy circles in intertidal salt marshes from UAV LiDAR point clouds. Int. J. Appl. Earth Obs. Geoinf. 2022, 114, 103029.

- Cai, S.S.; Liang, X.L.; Yu, S.S. A progressive plane detection filtering method for airborne LiDAR data in forested landscapes. Forests 2023, 14, 498.

- Vosselman, G. Slope based filtering of laser altimetry data. Int. Arch. Photogramm. Remote Sens. 2000, 33, 935–942.

- Zhang, W.M.; Qi, J.B.; Wan, P.; Wang, H.T.; Xie, D.H.; Wang, X.Y.; Yan, G.J. An easy-to-use airborne LiDAR data filtering method based on cloth simulation. Remote Sens. 2016, 8, 501.

- Zhang, K.Q.; Chen, S.C.; Whitman, D.; Shyu, M.L.; Yan, J.H.; Zhang, C.C. A progressive morphological filter for removing nonground measurements from airborne LIDAR data. IEEE Trans. Geosci. Remote Sens. 2003, 41, 872–882.

- Cai, S.S.; Yu, S.S.; Hui, Z.Y.; Tang, Z.Z. ICSF: An improved cloth simulation filtering algorithm for airborne LiDAR data based on morphological operations. Forests 2023, 14, 1520.

- Li, H.F.; Ye, C.M.; Guo, Z.X.; Wei, R.L.; Wang, L.X.; Li, J. A fast progressive TIN densification filtering algorithm for airborne LiDAR data using adjacent surface information. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2021, 14, 12492–12503.

- Brodu, N.; Lague, D. 3D terrestrial lidar data classification of complex natural scenes using a multi-scale dimensionality criterion: Applications in geomorphology. ISPRS-J. Photogramm. Remote Sens. 2012, 68, 121–134.

- Stroner, M.; Urban, R.; Lidmila, M.; Kolar, V.; Kremen, T. Vegetation filtering of a steep rugged terrain: The performance of standard algorithms and a newly proposed workflow on an example of a railway ledge. Remote Sens. 2021, 13, 3050.

- Nesbit, P.R.; Hubbard, S.M.; Hugenholtz, C.H. Direct georeferencing UAV-SfM in high-relief topography: Accuracy assessment and alternative ground control strategies along steep inaccessible rock slopes. Remote Sens. 2022, 14, 490.

- Stroner, M.; Urban, R.; Línková, L. Multidirectional shift rasterization (MDSR) algorithm for effective identification of ground in dense point clouds. Remote Sens. 2022, 14, 4916.

- Anders, N.; Valente, J.; Masselink, R.; Keesstra, S. Comparing filtering techniques for removing vegetation from UAV-based photogrammetric point clouds. Drones 2019, 3, 61.

- Qin, N.N.; Tan, W.K.; Guan, H.Y.; Wang, L.Y.; Ma, L.F.; Tao, P.J.; Fatholahi, S.; Hu, X.Y.; Li, J.A.T. Towards intelligent ground filtering of large-scale topographic point clouds: A comprehensive survey. Int. J. Appl. Earth Obs. Geoinf. 2023, 125, 103566.

- Bai, J.; Niu, Z.; Gao, S.; Bi, K.Y.; Wang, J.; Huang, Y.R.; Sun, G. An exploration, analysis, and correction of the distance effect on terrestrial hyperspectral LiDAR data. ISPRS-J. Photogramm. Remote Sens. 2023, 198, 60–83.

- Tan, K.; Cheng, X.J. Correction of incidence angle and distance effects on TLS intensity data based on reference targets. Remote Sens. 2016, 8, 251.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Chen, T.Q.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), San Francisco, CA, USA, 13–17 August 2016.

- Ke, G.L.; Meng, Q.; Finley, T.; Wang, T.F.; Chen, W.; Ma, W.D.; Ye, Q.W.; Liu, T.Y. LightGBM: A highly efficient gradient boosting decision tree. In Proceedings of the 31st Annual Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017.

- Lettvin, J.Y.; Maturana, H.R.; McCulloch, W.S.; Pitts, W.H. What the frog’s eye tells the frog’s brain. Proc. IRE 1959, 47, 1940–1951.

- Duran, Z.; Ozcan, K.; Atik, M.E. Classification of photogrammetric and airborne LiDAR point clouds using machine learning algorithms. Drones 2021, 5, 104.

- Liao, L.F.; Tang, S.J.; Liao, J.H.; Li, X.M.; Wang, W.X.; Li, Y.X.; Guo, R.Z. A supervoxel-based random forest method for robust and effective airborne LiDAR point cloud classification. Remote Sens. 2022, 14, 1516.

- Wu, X.X.; Tan, K.; Liu, S.; Wang, F.; Tao, P.J.; Wang, Y.J.; Cheng, X.L. Drone multiline light detection and ranging data filtering in coastal salt marshes using extreme gradient boosting model. Drones 2024, 8, 13.

- Fareed, N.; Flores, J.P.; Das, A.K. Analysis of UAS-LiDAR ground points classification in agricultural fields using traditional algorithms and PointCNN. Remote Sens. 2023, 15, 483.

- Qin, N.N.; Tan, W.K.; Ma, L.F.; Zhang, D.D.; Guan, H.Y.; Li, J.A.T. Deep learning for filtering the ground from ALS point clouds: A dataset, evaluations and issues. ISPRS-J. Photogramm. Remote Sens. 2023, 202, 246–261.

- Qi, C.R.; Su, H.; Mo, K.C.; Guibas, L.J. PointNet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the 30th IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 77–85.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the 31st Annual Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 5105–5114.

- Hu, Q.Y.; Yang, B.; Xie, L.H.; Rosa, S.; Guo, Y.L.; Wang, Z.H.; Trigoni, N.; Markham, A. RandLA-Net: Efficient semantic segmentation of large-scale point clouds. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11105–11114.

- Li, Y.Y.; Bu, R.; Sun, M.C.; Wu, W.; Di, X.H.; Chen, B.Q. PointCNN: Convolution On X-transformed points. In Proceedings of the 32nd Conference on Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 2–8 December 2018; pp. 828–838.

- Thomas, H.; Qi, C.R.; Deschaud, J.E.; Marcotegui, B.; Goulette, F.; Guibas, L.J. KPConv: Flexible and deformable convolution for point clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 6420–6429.

- Li, Y.; Ma, L.F.; Zhong, Z.L.; Cao, D.P.; Li, O.N.H. TGNet: Geometric graph CNN on 3-D point cloud segmentation. IEEE Trans. Geosci. Remote Sens. 2020, 58, 3588–3600.

- Wang, Y.; Sun, Y.B.; Liu, Z.W.; Sarma, S.E.; Bronstein, M.M.; Solomon, J.M. Dynamic graph CNN for learning on point clouds. ACM Trans. Graph. 2019, 38, 12.

- Wang, L.; Huang, Y.C.; Hou, Y.L.; Zhang, S.M.; Shan, J. Graph attention convolution for point cloud semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 10288–10297.

- Wan, P.; Shao, J.; Jin, S.N.; Wang, T.J.; Yang, S.M.; Yan, G.J.; Zhang, W.M. A novel and efficient method for wood-leaf separation from terrestrial laser scanning point clouds at the forest plot level. Methods Ecol. Evol. 2021, 12, 2473–2486.

- Singh, S.; Sreevalsan-Nair, J. Adaptive multiscale feature extraction in a distributed system for semantic classification of airborne LiDAR point clouds. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6502305.

- Dittrich, A.; Weinmann, M.; Hinz, S. Analytical and numerical investigations on the accuracy and robustness of geometric features extracted from 3D point cloud data. ISPRS-J. Photogramm. Remote Sens. 2017, 126, 195–208.

- Gallwey, J.; Eyre, M.; Coggan, J. A machine learning approach for the detection of supporting rock bolts from laser scan data in an underground mine. Tunn. Undergr. Space Technol. 2021, 107, 103656.

- Gross, J.W.; Heumann, B.W. Can flowers provide better spectral discrimination between herbaceous wetland species than leaves? Remote Sens. Lett. 2014, 5, 892–901.

- Koehrsen, W. Feature-Selector. Available online: https://github.com/WillKoehrsen/feature-selector (accessed on 9 January 2024).

- Bradley, A.P. The use of the area under the ROC curve in the evaluation of machine learning algorithms. Pattern Recognit. 1997, 30, 1145–1159.

- He, H.; Garcia, E.A. Learning from imbalanced data. IEEE Trans. Knowl. Data Eng. 2009, 21, 1263–1284.

| Dataset | Sub-Region | Number of Points | Vegetation | Topographic Features |
|---|---|---|---|---|
| Training and test set | 2 | 8,650,502 | PA, SA, relatively dense | Partly bare mudflat with undulating terrain |
| | 3 | 7,725,041 | PA, SA, highly dense | Several intertidal creeks |
| | 4 | 3,965,034 | SA, highly dense | Flat terrain, no intertidal creeks |
| | 6 | 3,895,731 | PA, SA, highly dense | Flat terrain, no intertidal creeks |
| | 7 | 3,879,095 | SA, highly dense | Flat terrain, no intertidal creeks |
| | 8 | 5,301,961 | PA, SA, relatively sparse | Partly bare mudflat with flat terrain |
| Validation set | 1 | 8,443,890 | PA, SA, highly dense | Several intertidal creeks |
| | 5 | 3,813,431 | PA, relatively dense | Partly bare mudflat with flat terrain |
| | 9 | 4,523,592 | PA, SA, relatively dense | Flat terrain, no intertidal creeks |

| Category | Feature Name | Abbreviation | Formula |
|---|---|---|---|
| Point-wise features | Distance | d | [38] |
| | Scan angle | | [38] |
| | Intensity | I | / |
| | Elevation | Z | / |
| Neighborhood-wise features | Eigenvalue | | [49] |
| | Normal vector | (u, v, w) | Eigenvector corresponding to the minimum eigenvalue |
| | Scattered feature | S | |
| | Linear feature | L | |
| | Planar feature | P | |
| | Normal change rate | NCR | |
| | Anisotropy | AN | |
| | Sphericity | SP | |
| | Linearity | LI | |
| | Planarity | PL | |
| | Sum of eigenvalues | ES | |
| | Omnivariance | OMV | |
| | Eigenentropy | EN | |
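The neighborhood-wise features in the table above are all derived from the eigen-decomposition of a local 3 × 3 covariance matrix, but their formulas did not survive extraction. The sketch below uses common definitions from the point-cloud feature literature, assuming sorted eigenvalues λ1 ≥ λ2 ≥ λ3 and, for omnivariance and eigenentropy, eigenvalues normalized by their sum. These definitions, the reading of NCR as λ3 / (λ1 + λ2 + λ3), and the function name `eigen_features` are assumptions for illustration; the paper's exact normalization, its S, L and P features, and its neighborhood size are not recoverable here, so the function simply takes an already-selected neighborhood.

```python
import numpy as np


def eigen_features(neighbors: np.ndarray) -> dict:
    """Covariance-based features for one point's neighborhood (k x 3 array).

    Hypothetical helper using common literature definitions, not
    necessarily the formulas used in the paper.
    """
    centered = neighbors - neighbors.mean(axis=0)
    cov = centered.T @ centered / len(neighbors)
    # eigh returns eigenvalues of a symmetric matrix in ascending order
    vals, vecs = np.linalg.eigh(cov)
    # Clip to keep ratios and logs finite; clipping preserves the ordering
    l3, l2, l1 = np.clip(vals, 1e-12, None)  # l1 >= l2 >= l3 > 0
    total = l1 + l2 + l3
    e = np.array([l1, l2, l3]) / total  # normalized eigenvalues
    return {
        "normal": vecs[:, 0],  # (u, v, w): eigenvector of the smallest eigenvalue
        "LI": (l1 - l2) / l1,  # linearity
        "PL": (l2 - l3) / l1,  # planarity
        "SP": l3 / l1,  # sphericity
        "AN": (l1 - l3) / l1,  # anisotropy
        "OMV": float(np.prod(e) ** (1 / 3)),  # omnivariance
        "EN": float(-np.sum(e * np.log(e))),  # eigenentropy
        "ES": total,  # sum of eigenvalues
        "NCR": l3 / total,  # assumed: surface variation / change of curvature
    }
```

For a flat patch such as a bare mudflat, λ3 is close to zero, so planarity approaches 1 and the normal points along the vertical axis; a vegetation canopy spreads variance across all three axes and raises sphericity and eigenentropy instead.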

| Method | learning_rate | max_depth | n_estimators | min_samples_leaf (RF) / min_child_weight (XGBoost, LightGBM) | gamma |
|---|---|---|---|---|---|
| RF | NA | 9 | 50 | 1000 | NA |
| XGBoost | 0.1 | 10 | 500 | 1 | 0.1 |
| LightGBM | 0.1 | 10 | 600 | 1 | NA |
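The hyperparameter table above can be expressed as a configuration for the three baseline classifiers. This is a sketch assuming the Python scikit-learn, xgboost and lightgbm packages (the excerpt does not say which implementations the authors used) and assuming the shared column maps to `min_samples_leaf` for RF and `min_child_weight` for the two boosting models. `HYPERPARAMS` and `build_models` are hypothetical names; "NA" entries are simply left to each library's default.

```python
import importlib.util

# Values transcribed from the table; "NA" entries are omitted (library default).
# Assumption: column names map onto the scikit-learn / xgboost / lightgbm APIs.
HYPERPARAMS = {
    "RF": {"max_depth": 9, "n_estimators": 50, "min_samples_leaf": 1000},
    "XGBoost": {"learning_rate": 0.1, "max_depth": 10, "n_estimators": 500,
                "min_child_weight": 1, "gamma": 0.1},
    "LightGBM": {"learning_rate": 0.1, "max_depth": 10, "n_estimators": 600,
                 "min_child_weight": 1},
}


def build_models() -> dict:
    """Instantiate each classifier whose library is installed (hypothetical helper)."""
    models = {}
    if importlib.util.find_spec("sklearn") is not None:
        from sklearn.ensemble import RandomForestClassifier
        models["RF"] = RandomForestClassifier(**HYPERPARAMS["RF"])
    if importlib.util.find_spec("xgboost") is not None:
        from xgboost import XGBClassifier
        models["XGBoost"] = XGBClassifier(**HYPERPARAMS["XGBoost"])
    if importlib.util.find_spec("lightgbm") is not None:
        from lightgbm import LGBMClassifier
        models["LightGBM"] = LGBMClassifier(**HYPERPARAMS["LightGBM"])
    return models
```

Each returned model would then be fitted on the per-point feature matrix and ground/non-ground labels, e.g. `build_models()["RF"].fit(X, y)`. Keeping the values in a plain dictionary makes it easy to compare the three settings side by side with the table.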

| Method / Feature Set | Sub-Region 1 AUC | Sub-Region 1 G-Mean | Sub-Region 5 AUC | Sub-Region 5 G-Mean | Sub-Region 9 AUC | Sub-Region 9 G-Mean |
|---|---|---|---|---|---|---|
| ANN | 0.9895 | 0.9895 | 0.9241 | 0.9219 | 0.9214 | 0.9208 |
| RF | 0.9915 | 0.9915 | 0.9178 | 0.9148 | 0.9205 | 0.9198 |
| XGBoost | 0.9960 | 0.9960 | 0.9115 | 0.9076 | 0.8838 | 0.8804 |
| LightGBM | 0.9961 | 0.9961 | 0.9118 | 0.9079 | 0.8820 | 0.8783 |
| SF | 0.8011 | 0.8009 | 0.9055 | 0.9051 | 0.7657 | 0.7652 |
| PMF | 0.8601 | 0.8522 | 0.9296 | 0.9281 | 0.7921 | 0.7886 |
| CSF | 0.8515 | 0.8408 | 0.9083 | 0.9037 | 0.7029 | 0.6384 |
| RandLA-Net | 0.8556 | 0.8436 | 0.8738 | 0.8683 | 0.9029 | 0.8993 |
| Point-wise features | 0.9815 | 0.9814 | 0.8862 | 0.8824 | 0.9136 | 0.9136 |
| Neighborhood-wise features | 0.5977 | 0.4518 | 0.6909 | 0.6197 | 0.6435 | 0.5675 |
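Both metrics in the table above can be computed from a confusion matrix. The sketch assumes that AUC was evaluated on hard ground/non-ground labels rather than on class scores, which the excerpt does not confirm. Under that assumption the ROC curve has a single operating point, and its area reduces to (TPR + TNR) / 2, while G-Mean is the geometric mean √(TPR · TNR). Since the arithmetic mean never falls below the geometric mean, AUC ≥ G-Mean, with equality when TPR = TNR; this pattern matches every row of the table. The function name `auc_and_gmean` is hypothetical.

```python
import math


def auc_and_gmean(y_true, y_pred):
    """Return (AUC, G-Mean) for hard 0/1 labels (hypothetical helper).

    Both values are symmetric in TPR and TNR, so it does not matter whether
    ground or non-ground is treated as the positive class.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tpr = tp / (tp + fn)  # recall on the positive class
    tnr = tn / (tn + fp)  # recall on the negative class
    # Single operating point: ROC polyline (0,0) -> (FPR, TPR) -> (1,1)
    auc = (tpr + tnr) / 2
    gmean = math.sqrt(tpr * tnr)
    return auc, gmean


# Example: TPR = 0.75, TNR = 0.5, giving AUC = 0.625 and G-Mean = sqrt(0.375)
print(auc_and_gmean([1, 1, 1, 1, 0, 0, 0, 0], [1, 1, 1, 0, 0, 0, 1, 1]))
```

The gap between the two values grows as the classifier favors one class. For example, CSF on Sub-Region 9 (AUC 0.7029, G-Mean 0.6384) is consistent with one class being recovered far better than the other.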

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, K.; Liu, S.; Tan, K.; Yin, M.; Tao, P. ANN-Based Filtering of Drone LiDAR in Coastal Salt Marshes Using Spatial–Spectral Features. Remote Sens. 2024, 16, 3373. https://doi.org/10.3390/rs16183373

Liu K, Liu S, Tan K, Yin M, Tao P. ANN-Based Filtering of Drone LiDAR in Coastal Salt Marshes Using Spatial–Spectral Features. Remote Sensing. 2024; 16(18):3373. https://doi.org/10.3390/rs16183373

Chicago/Turabian Style: Liu, Kunbo, Shuai Liu, Kai Tan, Mingbo Yin, and Pengjie Tao. 2024. "ANN-Based Filtering of Drone LiDAR in Coastal Salt Marshes Using Spatial–Spectral Features" Remote Sensing 16, no. 18: 3373. https://doi.org/10.3390/rs16183373