Segment Anything Model Combined with Multi-Scale Segmentation for Extracting Complex Cultivated Land Parcels in High-Resolution Remote Sensing Images

Abstract

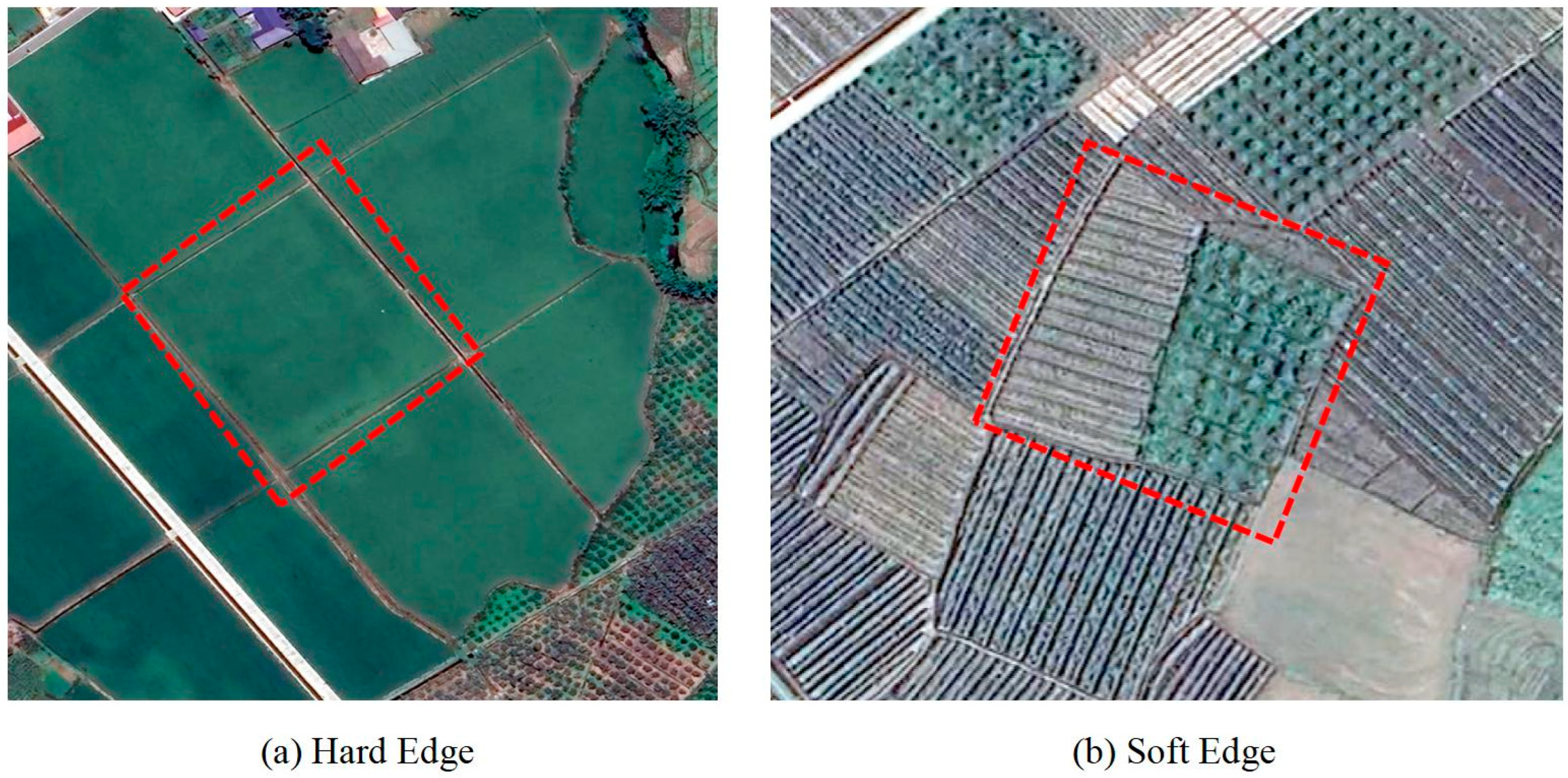

1. Introduction

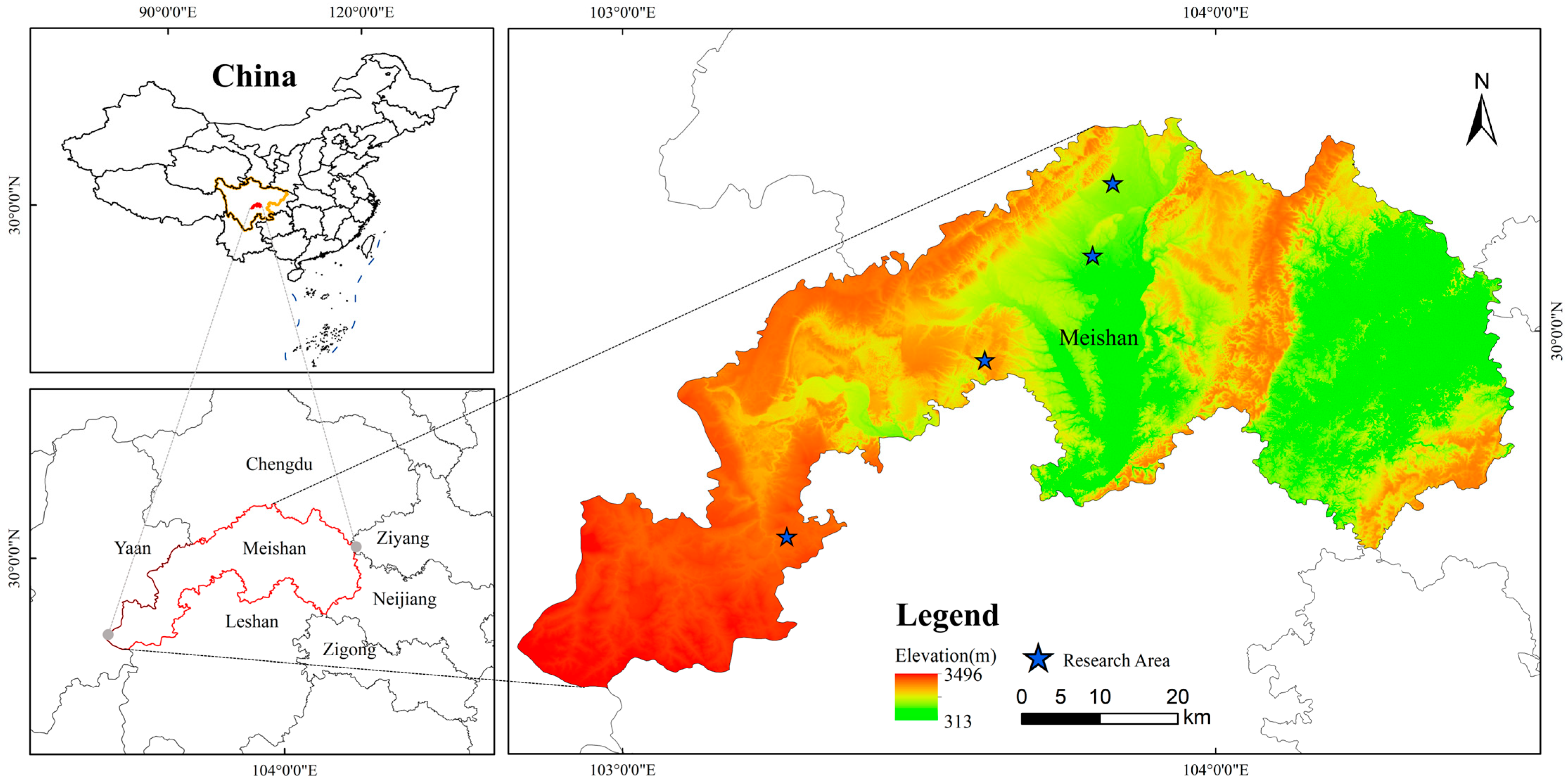

2. Study Area and Data

2.1. Study Area

2.2. High-Resolution Remote Sensing Data

2.3. Other Data

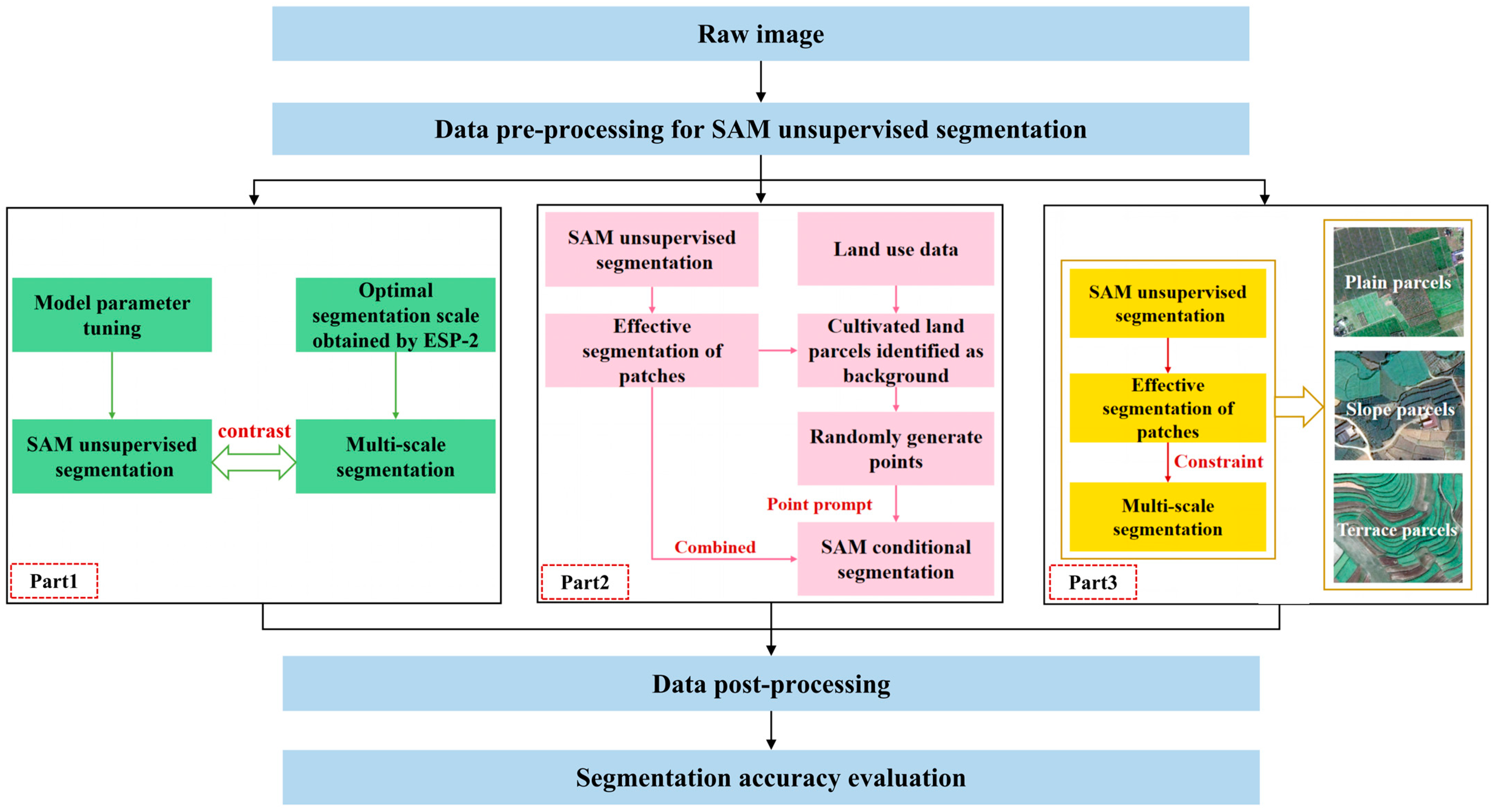

3. Methods for Effective Cultivated Land Parcel Segmentation in Multiple Scenes

3.1. Data Preprocessing for SAM Unsupervised Segmentation

3.2. Experiments on Effective Division of Different Cultivated Land Parcels

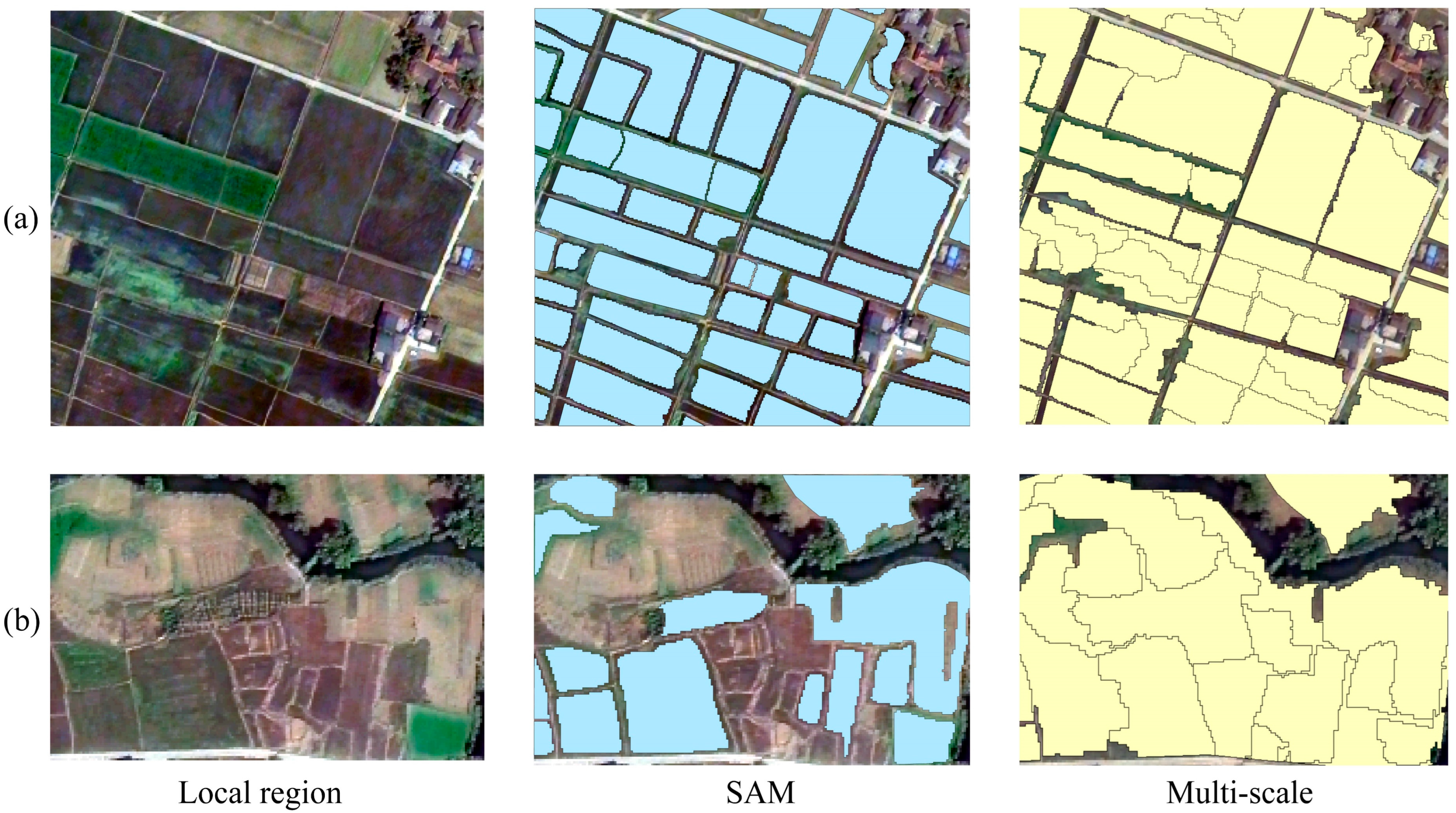

3.2.1. Comparative Experiment of SAM and Multi-Scale Segmentation

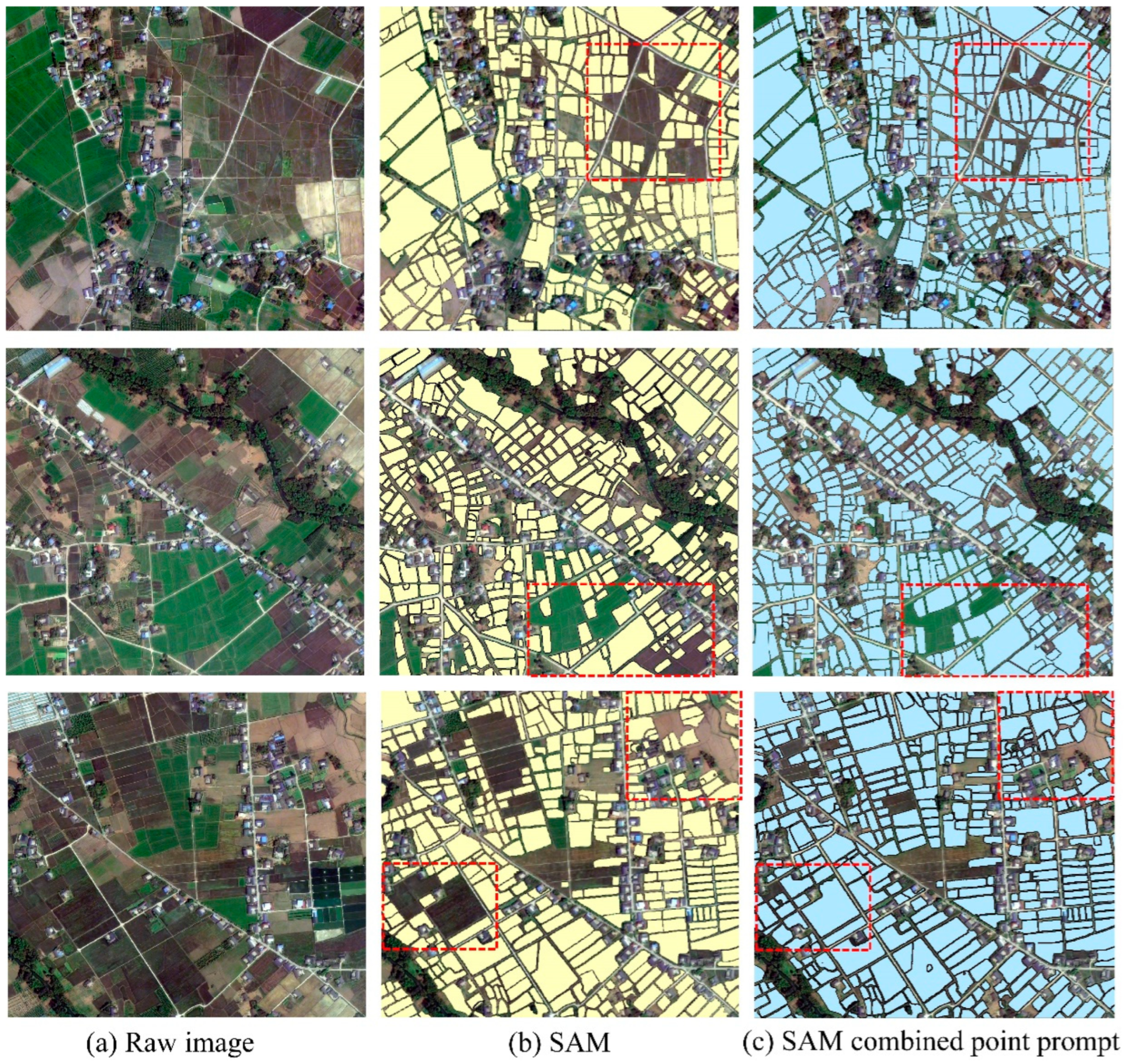

3.2.2. Effectiveness Experiment of SAM Conditional Segmentation with Point Prompts

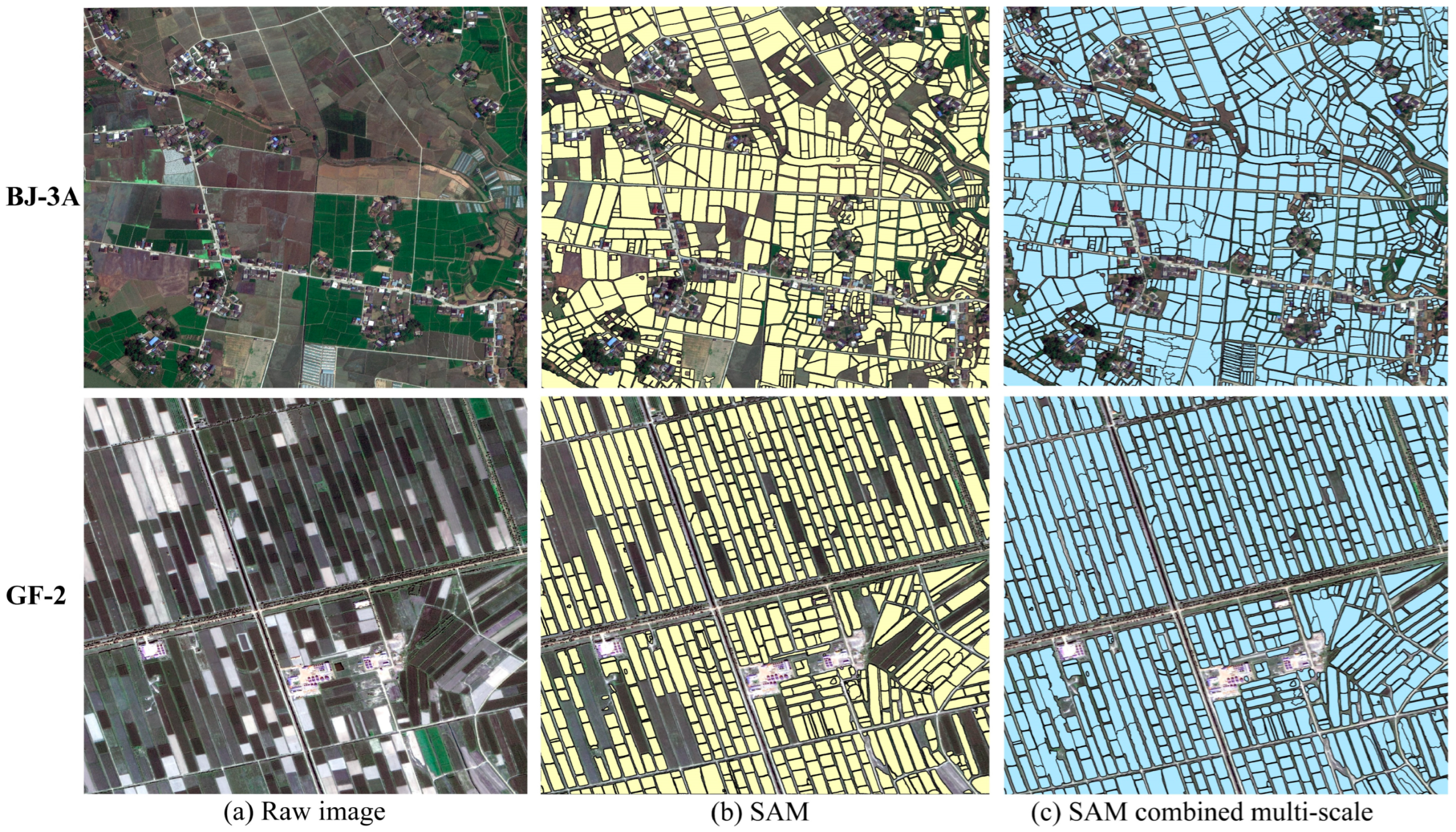

3.2.3. Experiments of SAM Combined with Multi-Scale Segmentation in Various Scenarios

3.3. Data Post-Processing

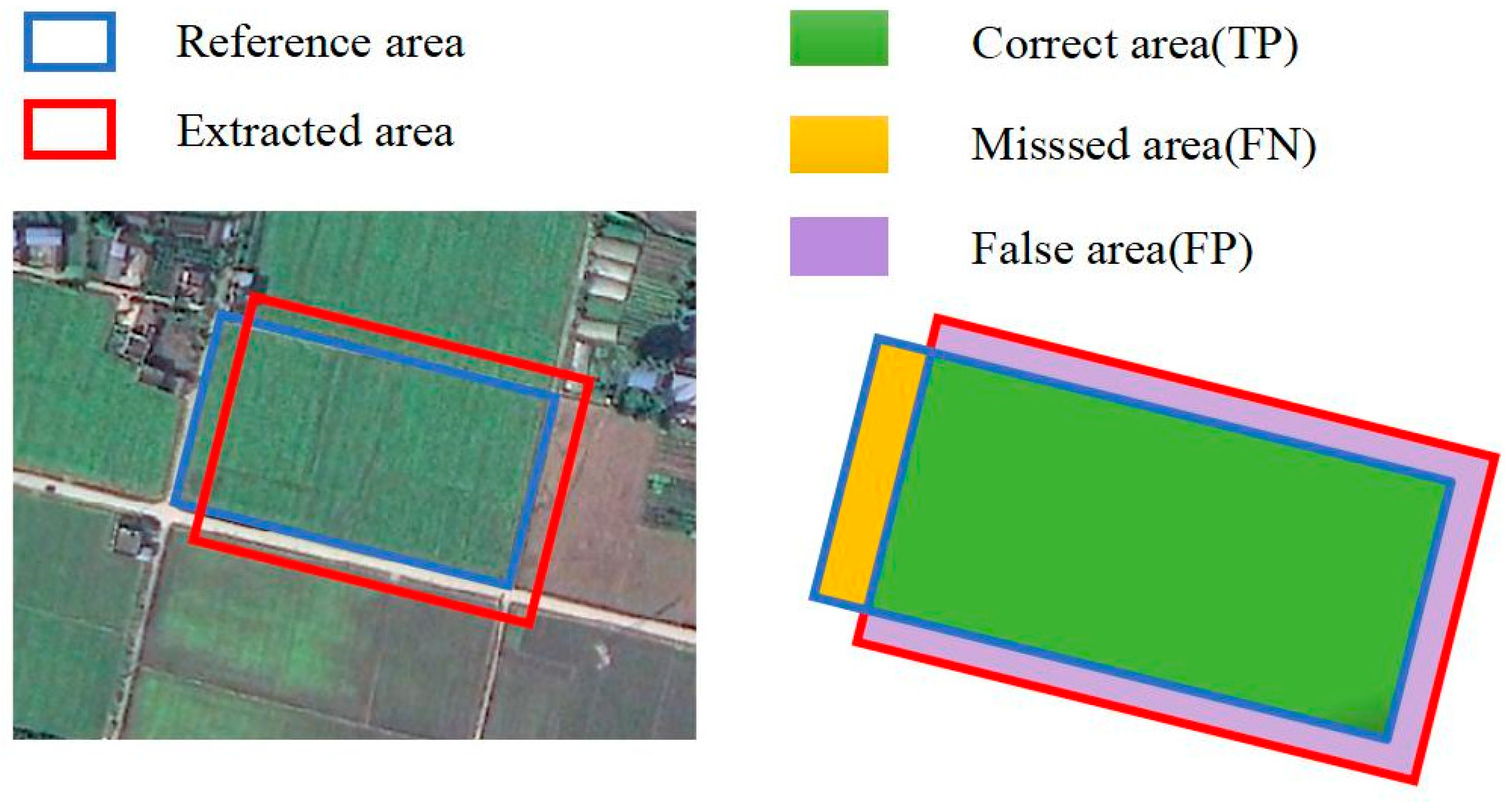

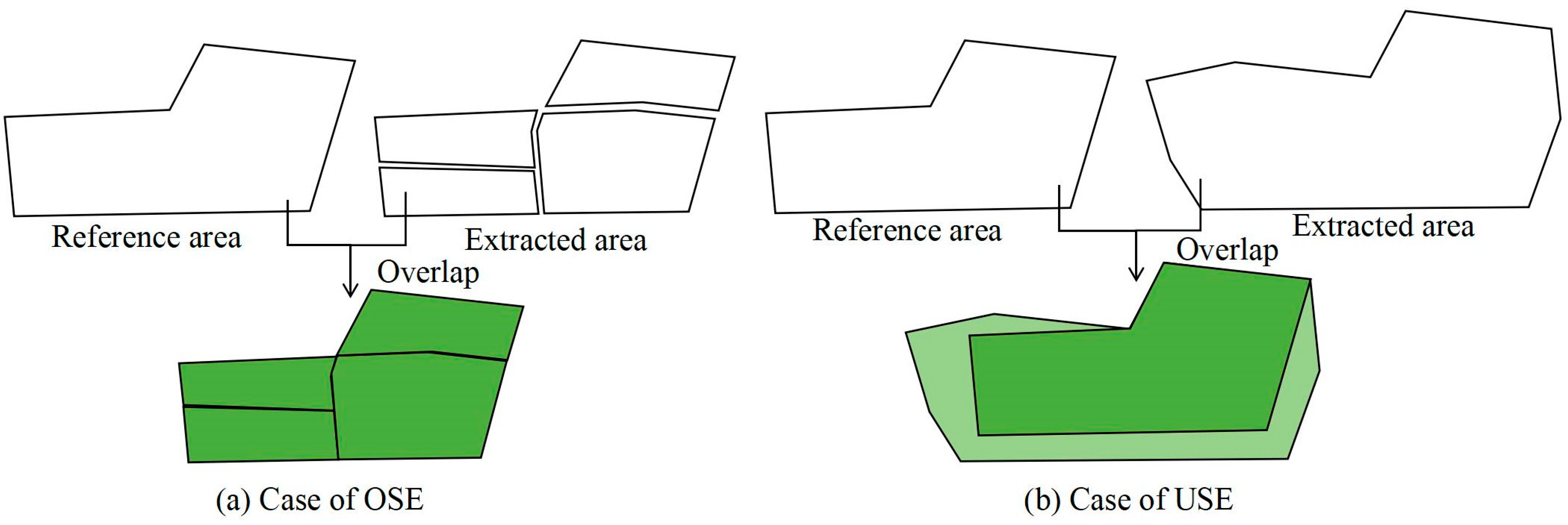

3.4. Evaluation Metric
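
The result tables report Precision, Recall, IoU, GOSE and GUSE. As a minimal sketch, assuming the first three follow the standard pixel-wise definitions on binary cultivated-land masks (the exact formulation used by the authors is not given in this excerpt), they could be computed as below. The function name `pixel_metrics` and the toy masks are hypothetical; GOSE and GUSE are left out because their definitions are not stated here.

```python
import numpy as np


def pixel_metrics(pred, ref):
    """Return (precision, recall, IoU) in percent for two binary masks.

    Assumption: standard pixel-wise definitions; `pred` is the extracted
    cultivated-land mask and `ref` is the reference mask.
    """
    pred = np.asarray(pred, dtype=bool)
    ref = np.asarray(ref, dtype=bool)

    tp = np.count_nonzero(pred & ref)    # cultivated in both masks
    fp = np.count_nonzero(pred & ~ref)   # extracted but not in reference
    fn = np.count_nonzero(~pred & ref)   # in reference but missed

    precision = 100.0 * tp / (tp + fp) if (tp + fp) else 0.0
    recall = 100.0 * tp / (tp + fn) if (tp + fn) else 0.0
    iou = 100.0 * tp / (tp + fp + fn) if (tp + fp + fn) else 0.0
    return precision, recall, iou


# Toy 2 x 3 masks, purely illustrative (not data from the study).
toy_pred = [[1, 1, 0], [0, 1, 0]]
toy_ref = [[1, 0, 0], [0, 1, 1]]
toy_precision, toy_recall, toy_iou = pixel_metrics(toy_pred, toy_ref)
```

On the toy masks there are two true positives, one false positive and one false negative, so precision and recall are both two thirds and IoU is one half.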

4. Results

4.1. Comparison of SAM Unsupervised Segmentation and Multi-Scale Segmentation

4.2. SAM Conditional Segmentation Results for Cultivated Land Extraction under Point Prompts

4.3. Results of SAM Combined with Multi-Scale Segmentation for Cultivated Land Extraction in Multiple Scenarios

5. Discussion

5.1. Data Transferability

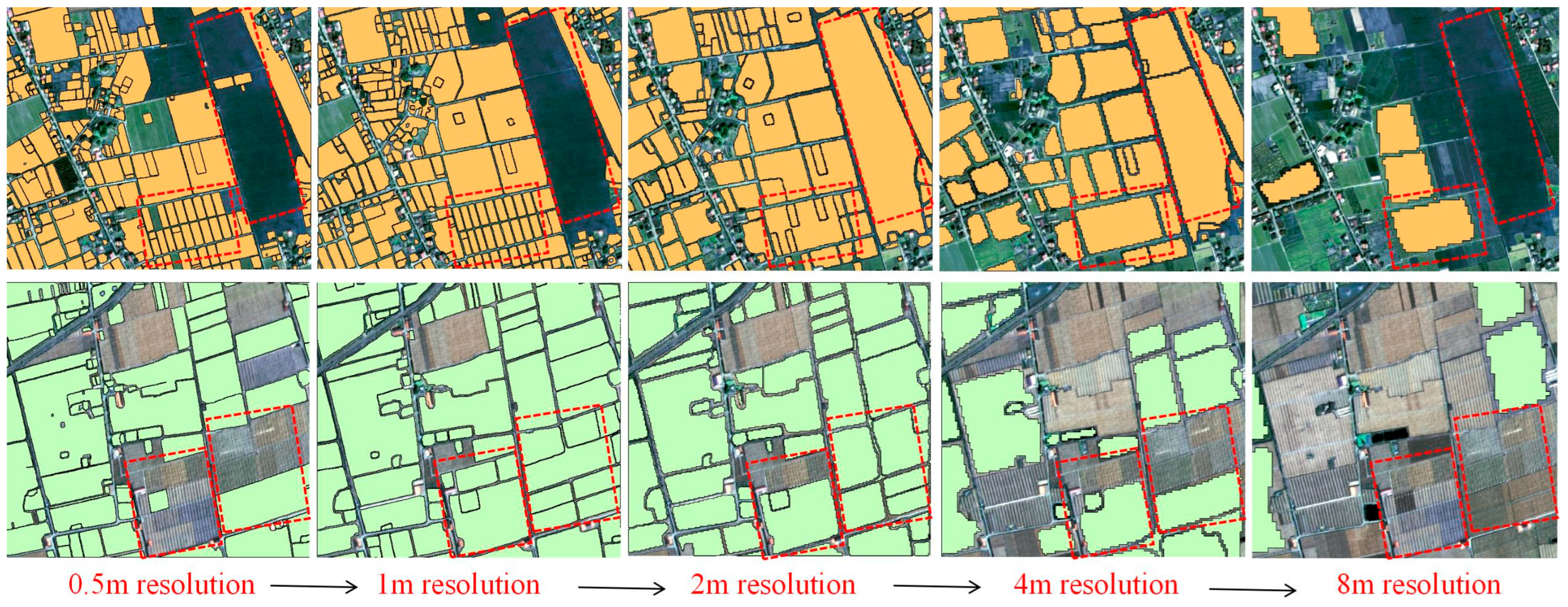

5.2. Influence of Parcels Segmentation Effectiveness in High-Resolution Imagery with Different Resolutions

5.3. Influence of Different Complex Scenes

5.4. Limitations and Future Work

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Method | Precision (%) | Recall (%) | IoU (%) | GOSE (%) | GUSE (%) |
|---|---|---|---|---|---|
| SAM | 85.47 | 74.66 | 69.88 | 16.33 | 12.31 |
| Multi-scale segmentation | 55.56 | 90.25 | 51.55 | 47.52 | 10.75 |

| Method | Precision (%) | Recall (%) | IoU (%) | GOSE (%) | GUSE (%) |
|---|---|---|---|---|---|
| SAM | 84.47 | 74.66 | 69.88 | 16.33 | 12.31 |
| SAM combined point prompt | 86.91 | 77.05 | 71.88 | 15.29 | 12.14 |

| Plain Parcels | Precision (%) | Recall (%) | IoU (%) | GOSE (%) | GUSE (%) |
|---|---|---|---|---|---|
| SAM | 85.72 | 76.28 | 73.25 | 20.13 | 19.46 |
| SAM combined point prompt | 86.86 | 78.30 | 73.52 | 18.58 | 17.47 |
| SAM combined multi-scale | 92.29 | 87.06 | 83.07 | 24.11 | 9.51 |

| Sloped Parcels | Precision (%) | Recall (%) | IoU (%) | GOSE (%) | GUSE (%) |
|---|---|---|---|---|---|
| SAM | 74.06 | 67.23 | 63.31 | 14.73 | 22.94 |
| SAM combined point prompt | 79.78 | 77.15 | 68.35 | 12.09 | 19.77 |
| SAM combined multi-scale | 88.23 | 84.91 | 76.64 | 16.23 | 12.12 |

| Terraced Parcels | Precision (%) | Recall (%) | IoU (%) | GOSE (%) | GUSE (%) |
|---|---|---|---|---|---|
| SAM | 66.63 | 62.28 | 57.53 | 21.88 | 32.79 |
| SAM combined point prompt | 68.22 | 74.25 | 59.45 | 18.02 | 29.76 |
| SAM combined multi-scale | 79.96 | 69.91 | 65.70 | 46.27 | 17.45 |
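
To make the scene-wise comparison in the plain, sloped and terraced tables easier to read, the IoU gain of SAM combined with multi-scale segmentation over SAM alone can be derived directly from the reported values. The snippet below is only an arithmetic sketch; the dictionary layout and the helper name `iou_gain` are hypothetical, while the numbers are copied from the three tables.

```python
# IoU (%) values copied from the plain, sloped and terraced parcel tables.
IOU_BY_SCENE = {
    "plain": {"SAM": 73.25, "SAM combined multi-scale": 83.07},
    "sloped": {"SAM": 63.31, "SAM combined multi-scale": 76.64},
    "terraced": {"SAM": 57.53, "SAM combined multi-scale": 65.70},
}


def iou_gain(scene):
    """IoU gain (percentage points) of the combined method over SAM alone."""
    values = IOU_BY_SCENE[scene]
    return round(values["SAM combined multi-scale"] - values["SAM"], 2)


# Gain per scene, in percentage points.
gains = {scene: iou_gain(scene) for scene in IOU_BY_SCENE}
```

With these values the gain is 9.82 percentage points for plain parcels, 13.33 for sloped parcels and 8.17 for terraced parcels.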

| Method | Precision (%) | Recall (%) | IoU (%) | GOSE (%) | GUSE (%) |
|---|---|---|---|---|---|
| SAM (BJ-3) | 87.02 | 84.85 | 83.74 | 16.21 | 6.15 |
| SAM combined multi-scale (BJ-3) | 95.10 | 90.58 | 89.81 | 19.06 | 3.12 |
| SAM (GF-2) | 84.79 | 83.56 | 78.37 | 18.94 | 13.24 |
| SAM combined multi-scale (GF-2) | 92.39 | 89.21 | 87.09 | 22.16 | 7.48 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huang, Z.; Jing, H.; Liu, Y.; Yang, X.; Wang, Z.; Liu, X.; Gao, K.; Luo, H. Segment Anything Model Combined with Multi-Scale Segmentation for Extracting Complex Cultivated Land Parcels in High-Resolution Remote Sensing Images. Remote Sens. 2024, 16, 3489. https://doi.org/10.3390/rs16183489
