Estimation of Forage Biomass in Oat (Avena sativa) Using Agronomic Variables through UAV Multispectral Imaging

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Site

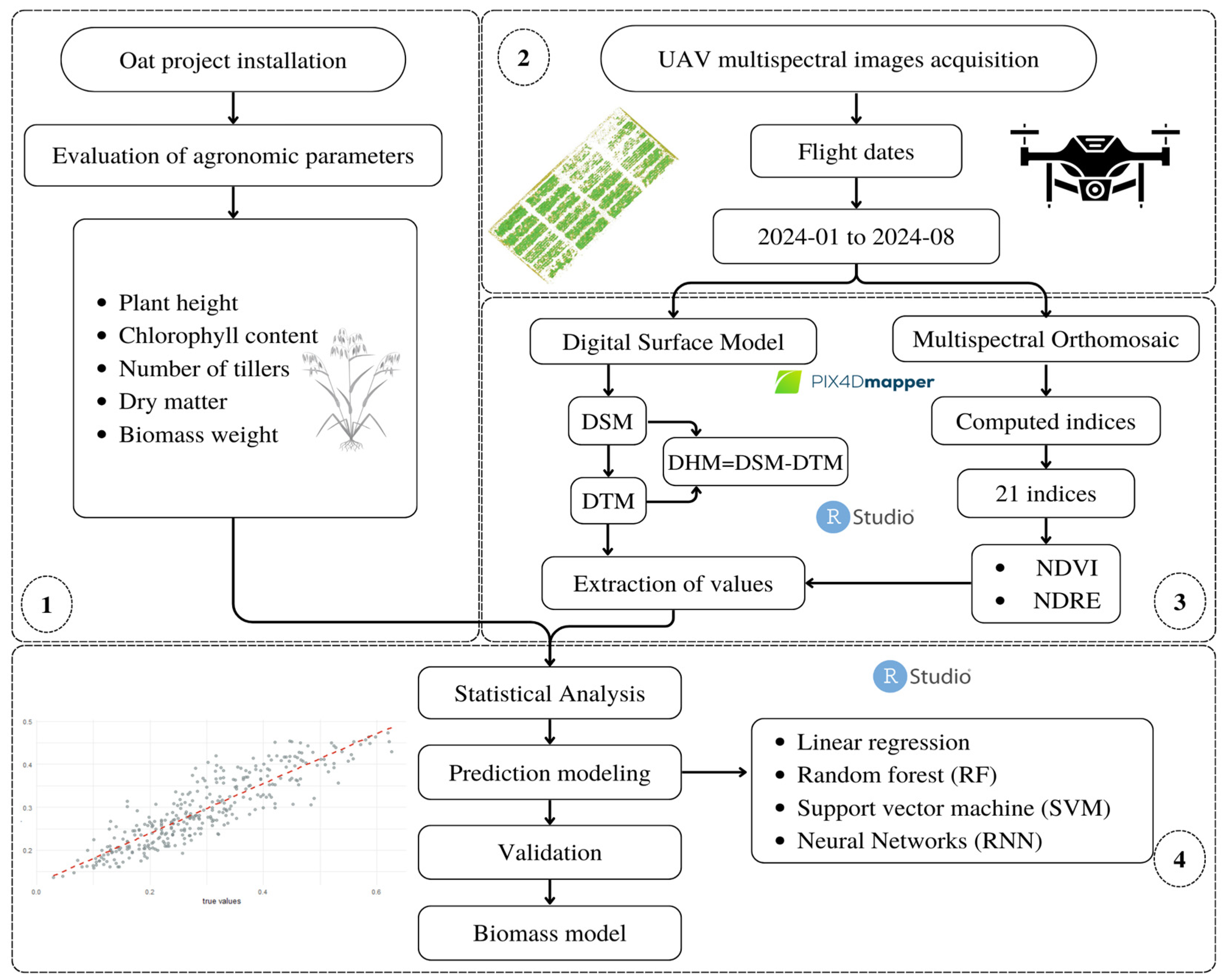

2.2. Methodological Framework

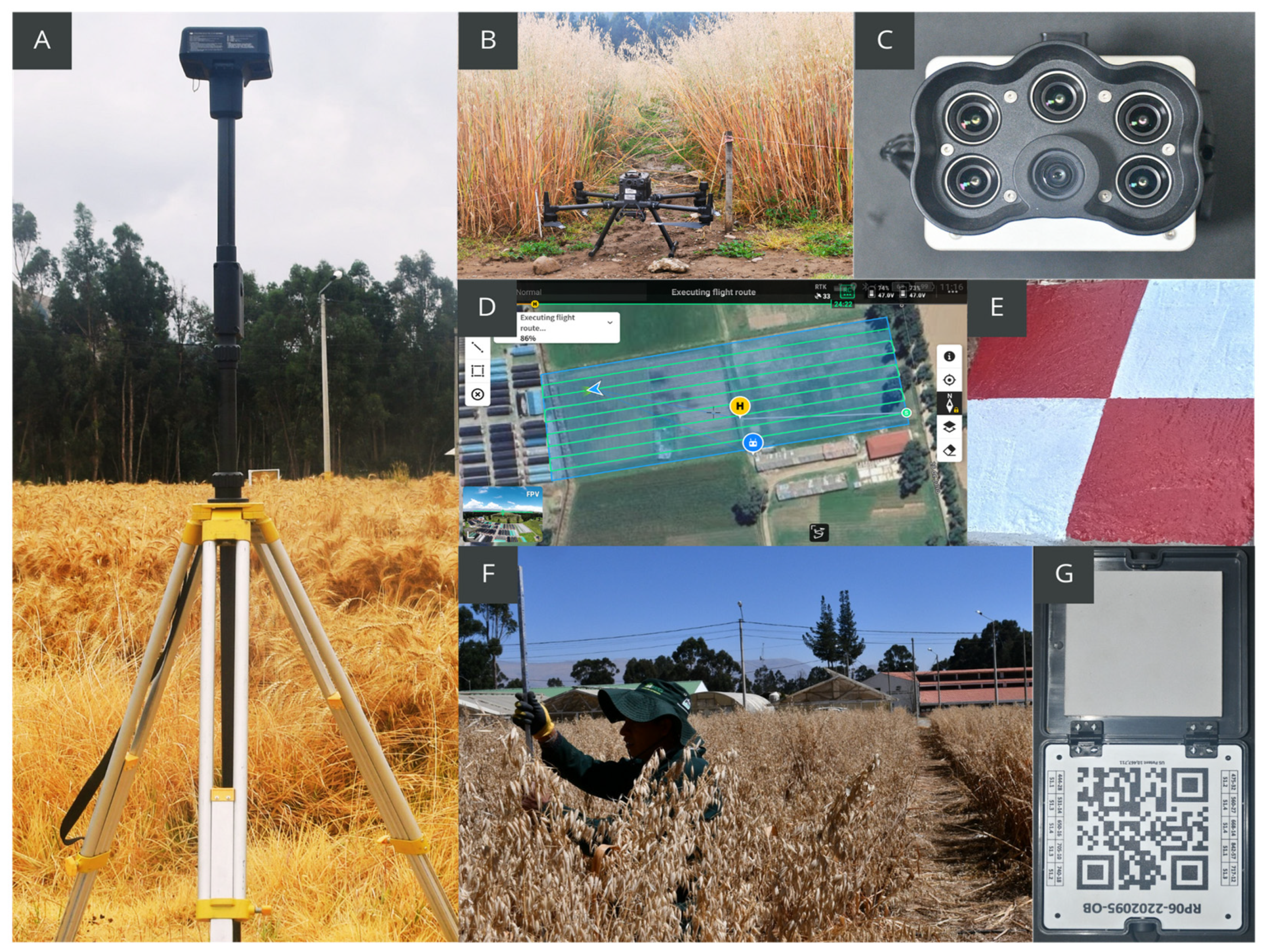

2.3. Image Acquisition and Preprocessing

2.4. Field Data Acquisition

2.5. Extraction and Processing of Multispectral Images

2.6. Data Analysis and Selection of Predictor Variables

2.6.1. Modeling and Estimation Algorithms

2.6.2. Model Tuning and Evaluation

3. Results

3.1. Descriptive Statistics of Agronomic Variables

3.2. Spectral Variable Analysis

3.2.1. Significance Correlation Matrix

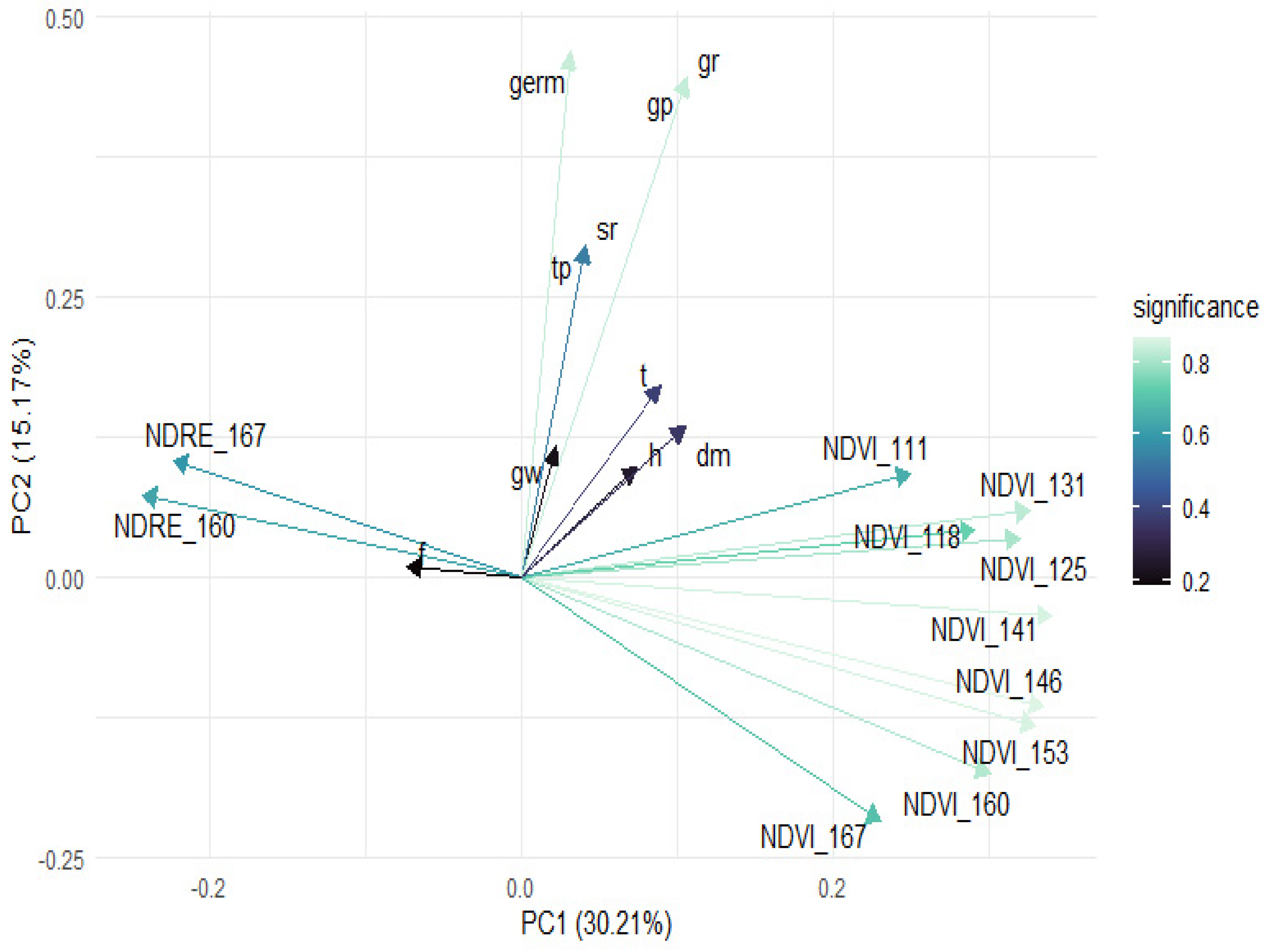

3.2.2. Principal Component Analysis

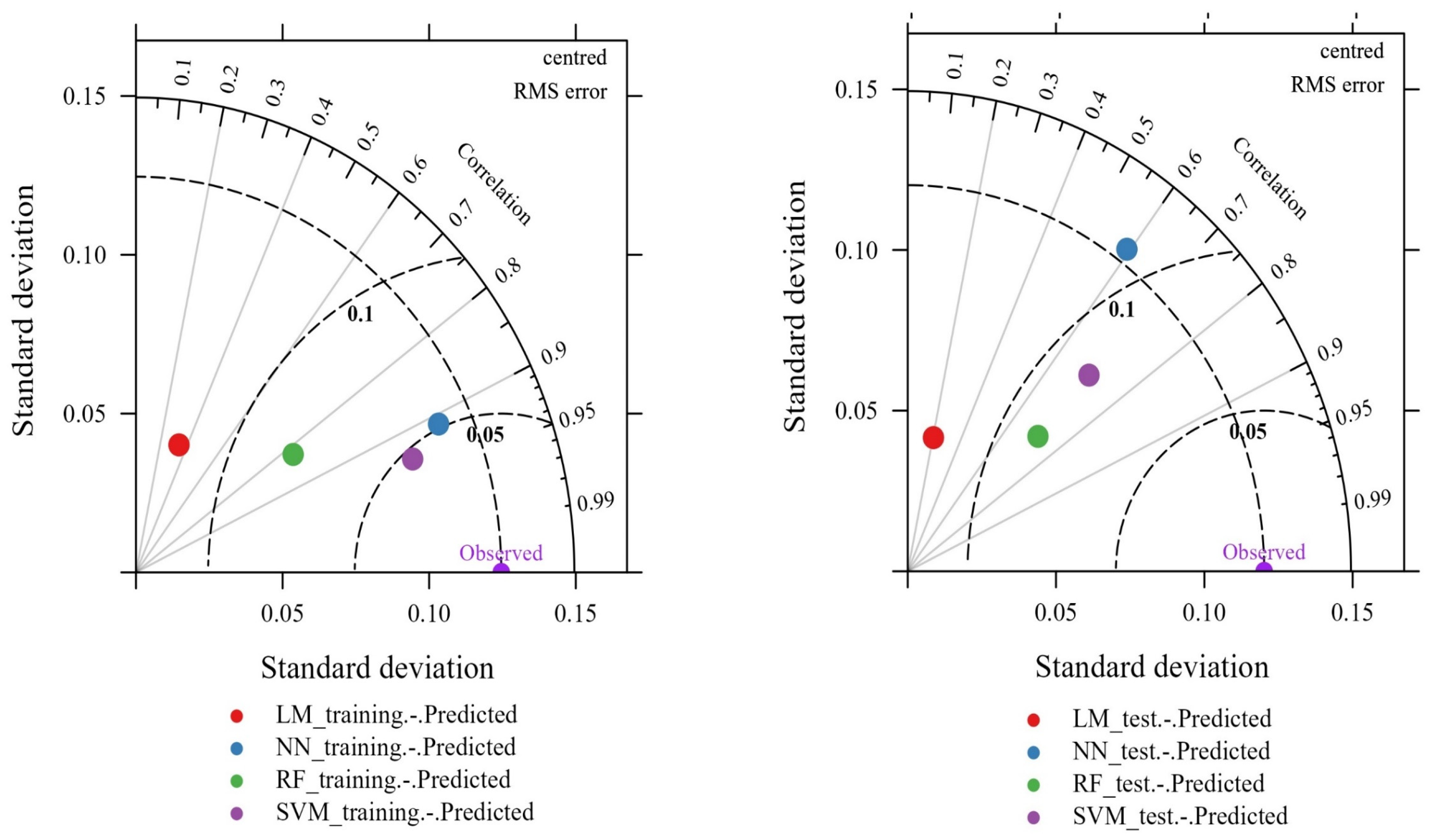

3.3. Model Performance

3.4. Predictor Estimation

3.5. Predictive Model for Biomass Estimation

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Gupta, A.K.; Sharma, M.L.; Khan, M.A.; Pandey, P.K. Promotion of improved forage crop production technologies: Constraints and strategies with special reference to climate change. In Molecular Interventions for Developing Climate-Smart Crops: A Forage Perspective; Springer: Singapore, 2023; pp. 229–236. [Google Scholar] [CrossRef]

- Trevisan, L.R.; Brichi, L.; Gomes, T.M.; Rossi, F. Estimating Black Oat Biomass Using Digital Surface Models and a Vegetation Index Derived from RGB-Based Aerial Images. Remote Sens. 2023, 15, 1363. [Google Scholar] [CrossRef]

- Garland, G. Sustainable management of agricultural soils: Balancing multiple perspectives and tradeoffs. In EGU General Assembly Conference Abstracts; EGU: Vienna, Austria, 2023. [Google Scholar] [CrossRef]

- Francaviglia, R.; Almagro, M.; Vicente-Vicente, J.L. Conservation Agriculture and Soil Organic Carbon: Principles, Processes, Practices and Policy Options. Soil Syst. 2023, 7, 17. [Google Scholar] [CrossRef]

- Sharma, P.; Leigh, L.; Chang, J.; Maimaitijiang, M.; Caffé, M. Above-Ground Biomass Estimation in Oats Using UAV Remote Sensing and Machine Learning. Sensors 2022, 22, 601. [Google Scholar] [CrossRef] [PubMed]

- Fodder, K.; Jimenez-Ballesta, R.; Srinivas Reddy, K.; Samuel, J.; Kumar Pankaj, P.; Gopala Krishna Reddy, A.; Rohit, J.; Reddy, K.S. Fodder Grass Strips for Soil Conservation and Soil Health. Chem. Proc. 2022, 10, 58. [Google Scholar] [CrossRef]

- Katoch, R. Nutritional Quality of Important Forages. In Techniques in Forage Quality Analysis; Springer: Singapore, 2023; pp. 173–185. [Google Scholar] [CrossRef]

- Barrett, B.A.; Faville, M.J.; Nichols, S.N.; Simpson, W.R.; Bryan, G.T.; Conner, A.J. Breaking through the feed barrier: Options for improving forage genetics. Anim. Prod. Sci. 2015, 55, 883–892. [Google Scholar] [CrossRef]

- Kim, K.S.; Tinker, N.A.; Newell, M.A. Improvement of Oat as a Winter Forage Crop in the Southern United States. Crop Sci. 2014, 54, 1336–1346. [Google Scholar] [CrossRef]

- McCartney, D.; Fraser, J.; Ohama, A. Annual cool season crops for grazing by beef cattle. A Canadian Review. Can. J. Anim. Sci. 2011, 88, 517–533. [Google Scholar] [CrossRef]

- Kumar, S.; Vk, S.; Sanjay, K.; Priyanka; Gaurav, S.; Jyoti, K.; Kaushal, R. Identification of stable oat wild relatives among Avena species for seed and forage yield components using joint regression analysis. Ann. Plant Soil Res. 2022, 24, 601–605. [Google Scholar] [CrossRef]

- Espinoza-Montes, F.; Nuñez-Rojas, W.; Ortiz-Guizado, I.; Choque-Quispe, D. Forage production and interspecific competition of oats (Avena sativa) and common vetch (Vicia sativa) association under dry land and high-altitude conditions. Rev. de Investig. Vet. del Perú 2018, 29, 1237–1248. [Google Scholar] [CrossRef]

- INEI. Sistema Estadistico Nacional-Provincia de Lima 2018, 1–508. Available online: https://www.inei.gob.pe/media/MenuRecursivo/publicaciones_digitales/Est/Lib1583/15ATOMO_01.pdf (accessed on 4 September 2024).

- Aníbal, C.; Mayer, F. Producción de Carne y Leche Bovina en Sistemas Silvopastoriles; Instituto Nacional de Tecnología Agropecuaria: Buenos Aires, Argentina, 2017. [Google Scholar]

- Santacoloma-Varón, L.E.; Granados-Moreno, J.E.; Aguirre-Forero, S.E. Evaluación de variables agronómicas, calidad del forraje y contenido de taninos condensados de la leguminosa Lotus corniculatus en respuesta a biofertilizante y fertilización química en condiciones agroecológicas de trópico alto andino colombiano. Entramado 2017, 13, 222–233. [Google Scholar] [CrossRef]

- Mariana, M.P. Determinación de Variables Agronómicas del Cultivo de Maíz Mediante Imágenes Obtenidas Desde un Vehículo Aéreo no Tripulado (VANT). Thesis Instituto Mexicano de Tecnología del Agua, Jiutepec, Mexico, 2017. Available online: http://repositorio.imta.mx/handle/20.500.12013/1750 (accessed on 4 September 2024).

- Watanabe, K.; Guo, W.; Arai, K.; Takanashi, H.; Kajiya-Kanegae, H.; Kobayashi, M.; Yano, K.; Tokunaga, T.; Fujiwara, T.; Tsutsumi, N.; et al. High-throughput phenotyping of sorghum plant height using an unmanned aerial vehicle and its application to genomic prediction modeling. Front. Plant Sci. 2017, 8, 254051. [Google Scholar] [CrossRef] [PubMed]

- Matsuura, Y.; Heming, Z.; Nakao, K.; Qiong, C.; Firmansyah, I.; Kawai, S.; Yamaguchi, Y.; Maruyama, T.; Hayashi, H.; Nobuhara, H. High-precision plant height measurement by drone with RTK-GNSS and single camera for real-time processing. Sci. Rep. 2023, 13, 6329. [Google Scholar] [CrossRef]

- Ji, Y.; Chen, Z.; Cheng, Q.; Liu, R.; Li, M.; Yan, X.; Li, G.; Wang, D.; Fu, L.; Ma, Y.; et al. Estimation of plant height and yield based on UAV imagery in faba bean (Vicia faba L.). Plant Methods 2022, 18, 26. [Google Scholar] [CrossRef]

- Ibiev, G.Z.; Savoskina, O.A.; Chebanenko, S.I.; Beloshapkina, O.O.; Zavertkin, I.A. Unmanned Aerial Vehicles (UAVs)-One of the Digitalization and Effective Development Segments of Agricultural Production in Modern Conditions. In AIP Conference Proceedings; AIP Publishing: Melville, NY, USA, 2022; Volume 2661. [Google Scholar] [CrossRef]

- Hütt, C.; Bolten, A.; Hüging, H.; Bareth, G. UAV LiDAR Metrics for Monitoring Crop Height, Biomass and Nitrogen Uptake: A Case Study on a Winter Wheat Field Trial. PFG-J. Photogramm. Remote Sens. Geoinf. Sci. 2023, 91, 65–76. [Google Scholar] [CrossRef]

- Plaza, J.; Criado, M.; Sánchez, N.; Pérez-Sánchez, R.; Palacios, C.; Charfolé, F. UAV Multispectral Imaging Potential to Monitor and Predict Agronomic Characteristics of Different Forage Associations. Agronomy 2021, 11, 1697. [Google Scholar] [CrossRef]

- Radoglou-Grammatikis, P.; Sarigiannidis, P.; Lagkas, T.; Moscholios, I. A compilation of UAV applications for precision agriculture. Comput. Netw. 2020, 172, 107148. [Google Scholar] [CrossRef]

- Lottes, P.; Khanna, R.; Pfeifer, J.; Siegwart, R.; Stachniss, C. UAV-Based Crop and Weed Classification for Smart Farming. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017. [Google Scholar]

- Munghemezulu, C.; Mashaba-Munghemezulu, Z.; Ratshiedana, P.E.; Economon, E.; Chirima, G.; Sibanda, S. Unmanned Aerial Vehicle (UAV) and Spectral Datasets in South Africa for Precision Agriculture. Data 2023, 8, 98. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Vina, A.; Arkebauer, T.J.; Rundquist, D.C.; Keydan, G.; Leavitt, B. Remote estimation of leaf area index and green leaf biomass in maize canopies. Geophys. Res. Lett. 2003, 30, 1248. [Google Scholar] [CrossRef]

- Belton, D.; Helmholz, P.; Long, J.; Zerihun, A. Crop Height Monitoring Using a Consumer-Grade Camera and UAV Technology. PFG-J. Photogramm. Remote Sens. Geoinf. Sci. 2019, 87, 249–262. [Google Scholar] [CrossRef]

- Hassan, M.A.; Yang, M.; Fu, L.; Rasheed, A.; Zheng, B.; Xia, X.; Xiao, Y.; He, Z. Accuracy assessment of plant height using an unmanned aerial vehicle for quantitative genomic analysis in bread wheat. Plant Methods 2019, 15, 37. [Google Scholar] [CrossRef]

- Schreiber, L.V.; Atkinson Amorim, J.G.; Guimarães, L.; Motta Matos, D.; Maciel da Costa, C.; Parraga, A. Above-ground Biomass Wheat Estimation: Deep Learning with UAV-based RGB Images. Appl. Artif. Intell. 2022, 36. [Google Scholar] [CrossRef]

- Bendig, J.; Bolten, A.; Bennertz, S.; Broscheit, J.; Eichfuss, S.; Bareth, G. Estimating Biomass of Barley Using Crop Surface Models (CSMs) Derived from UAV-Based RGB Imaging. Remote Sens. 2014, 6, 10395–10412. [Google Scholar] [CrossRef]

- Jin, Y.; Yang, X.; Qiu, J.; Li, J.; Gao, T.; Wu, Q.; Zhao, F.; Ma, H.; Yu, H.; Xu, B. Remote Sensing-Based Biomass Estimation and Its Spatio-Temporal Variations in Temperate Grassland, Northern China. Remote Sens. 2014, 6, 1496–1513. [Google Scholar] [CrossRef]

- Zhang, H.; Sun, Y.; Chang, L.; Qin, Y.; Chen, J.; Qin, Y.; Du, J.; Yi, S.; et al. Estimation of Grassland Canopy Height and Aboveground Biomass at the Quadrat Scale Using Unmanned Aerial Vehicle. Remote Sens. 2018, 10, 851. [Google Scholar] [CrossRef]

- Bazzo, C.O.G.; Kamali, B.; Hütt, C.; Bareth, G.; Gaiser, T. A Review of Estimation Methods for Aboveground Biomass in Grasslands Using UAV. Remote Sens. 2023, 15, 639. [Google Scholar] [CrossRef]

- Kurbanov, R.; Panarina, V.; Polukhin, A.; Lobachevsky, Y.; Zakharova, N.; Litvinov, M.; Rebouh, N.Y.; Kucher, D.E.; Gureeva, E.; Golovina, E.; et al. Evaluation of Field Germination of Soybean Breeding Crops Using Multispectral Data from UAV. Agronomy 2023, 13, 1348. [Google Scholar] [CrossRef]

- Chen, R.; Chu, T.; Landivar, J.A.; Yang, C.; Maeda, M.M. Monitoring cotton (Gossypium hirsutum L.) germination using ultrahigh-resolution UAS images. Precis. Agric. 2018, 19, 161–177. [Google Scholar] [CrossRef]

- Zhang, Y.; Liu, T.; He, J.; Yang, X.; Wang, L.; Guo, Y. Estimation of peanut seedling emergence rate of based on UAV visible light image. In Proceedings of the International Conference on Agri-Photonics and Smart Agricultural Sensing Technologies (ICASAST 2022), Zhengzhou, China, 4–6 August 2022; Volume 12349, pp. 259–265. [Google Scholar] [CrossRef]

- Greaves, H.E.; Vierling, L.A.; Eitel, J.U.H.; Boelman, N.T.; Magney, T.S.; Prager, C.M.; Griffin, K.L. Estimating aboveground biomass and leaf area of low-stature Arctic shrubs with terrestrial LiDAR. Remote Sens. Environ. 2015, 164, 26–35. [Google Scholar] [CrossRef]

- Li, K.Y.; de Lima, R.S.; Burnside, N.G.; Vahtmäe, E.; Kutser, T.; Sepp, K.; Pinheiro, V.H.C.; Yang, M.-D.; Vain, A.; Sepp, K. Toward Automated Machine Learning-Based Hyperspectral Image Analysis in Crop Yield and Biomass Estimation. Remote Sens. 2022, 14, 1114. [Google Scholar] [CrossRef]

- Cáceres, Y.Z.; Torres, B.C.; Archi, G.C.; Zanabria Mallqui, R.; Pinedo, L.E.; Trucios, D.C.; Ortega Quispe, K.A. Analysis of Soil Quality through Aerial Biomass Contribution of Three Forest Species in Relict High Andean Forests of Peru. Malays. J. Soil Sci. 2024, 28, 38–52. [Google Scholar]

- Naveed Tahir, M.; Zaigham Abbas Naqvi, S.; Lan, Y.; Zhang, Y.; Wang, Y.; Afzal, M.; Cheema, M.J.M.; Amir, S. Real time estimation of chlorophyll content based on vegetation indices derived from multispectral UAV in the kinnow orchard. Int. J. Precis. Agric. Aviat. 2018, 1, 24–31. [Google Scholar] [CrossRef]

- Fei, S.; Hassan, M.A.; Xiao, Y.; Su, X.; Chen, Z.; Cheng, Q.; Duan, F.; Chen, R.; Ma, Y. UAV-based multi-sensor data fusion and machine learning algorithm for yield prediction in wheat. Precis. Agric. 2023, 24, 187–212. [Google Scholar] [CrossRef]

- Yue, J.; Yang, G.; Tian, Q.; Feng, H.; Xu, K.; Zhou, C. Estimate of winter-wheat above-ground biomass based on UAV ultrahigh-ground-resolution image textures and vegetation indices. ISPRS J. Photogramm. Remote Sens. 2019, 150, 226–244. [Google Scholar] [CrossRef]

- Zhai, W.; Li, C.; Cheng, Q.; Mao, B.; Li, Z.; Li, Y.; Ding, F.; Qin, S.; Fei, S.; Chen, Z. Enhancing Wheat Above-Ground Biomass Estimation Using UAV RGB Images and Machine Learning: Multi-Feature Combinations, Flight Height, and Algorithm Implications. Remote Sens. 2023, 15, 3653. [Google Scholar] [CrossRef]

- Quirós, J.J.; McGee, R.J.; Vandemark, G.J.; Romanelli, T.; Sankaran, S. Field phenotyping using multispectral imaging in pea (Pisum sativum L) and chickpea (Cicer arietinum L). Eng. Agric. Environ. Food 2019, 12, 404–413. [Google Scholar] [CrossRef]

- Ortega Quispe, K.A.; Valerio Deudor, L.L. Captación y almacenamiento pluvial como modelo histórico para conservación del agua en los Andes peruanos. Desafios 2023, 14, e385. [Google Scholar] [CrossRef]

- IGP. Atlas Climático de Precipitación y Temperatura del Aire de la Cuenca del Río Mantaro; Consejo Nacional del Ambiente: Lima, Peru, 2005.

- Ccopi-Trucios, D.; Barzola-Rojas, B.; Ruiz-Soto, S.; Gabriel-Campos, E.; Ortega-Quispe, K.; Cordova-Buiza, F. River Flood Risk Assessment in Communities of the Peruvian Andes: A Semiquantitative Application for Disaster Prevention. Sustainability 2023, 15, 13768. [Google Scholar] [CrossRef]

- ISTA. Reglas Internacionales para el Análisis de las Semillas; International Seed Testing Association: Bassersdorf, Switzerland, 2016; pp. 1–384. [Google Scholar] [CrossRef]

- Hijmans, R.J. Package “terra”: Spatial Data Analysis. 2024. Available online: https://cran.r-project.org/web/packages/terra/terra.pdf (accessed on 4 September 2024).

- Huete, A.R.; Liu, H.Q.; Batchily, K.; Van Leeuwen, W. A comparison of vegetation indices over a global set of TM images for EOS-MODIS. Remote Sens. Environ. 1997, 59, 440–451. [Google Scholar] [CrossRef]

- Koppe, W.; Li, F.; Gnyp, M.L.; Miao, Y.; Jia, L.; Chen, X.; Zhang, F.; Bareth, G. Evaluating multispectral and hyperspectral satellite remote sensing data for estimating winter wheat growth parameters at regional scale in the North China plain. Photogramm. Fernerkund. Geoinf. 2010, 2010, 167–178. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298. [Google Scholar] [CrossRef]

- Strong, C.J.; Burnside, N.G.; Llewellyn, D. The potential of small-Unmanned Aircraft Systems for the rapid detection of threatened unimproved grassland communities using an Enhanced Normalized Difference Vegetation Index. PLoS ONE 2017, 12, e0186193. [Google Scholar] [CrossRef] [PubMed]

- Jordan, C.F. Derivation of Leaf-Area Index from Quality of Light on the Forest Floor. Ecology 1969, 50, 663–666. [Google Scholar] [CrossRef]

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213. [Google Scholar] [CrossRef]

- Wu, C.; Niu, Z.; Gao, S. The potential of the satellite derived green chlorophyll index for estimating midday light use efficiency in maize, coniferous forest and grassland. Ecol. Indic. 2012, 14, 66–73. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Gritz, Y.; Merzlyak, M.N. Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 2003, 160, 271–282. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87. [Google Scholar] [CrossRef]

- Richardson, A.J.; Wiegand, C.L. Distinguishing Vegetation from Soil Background Information. Photogramm. Eng. Remote Sens. 1977, 43, 1541–1552. [Google Scholar]

- Vincini, M.; Frazzi, E.; D’Alessio, P. A broad-band leaf chlorophyll vegetation index at the canopy scale. Precis. Agric. 2008, 9, 303–319. [Google Scholar] [CrossRef]

- Schleicher, T.D.; Bausch, W.C.; Delgado, J.A.; Ayers, P.D. Evaluation and Refinement of the Nitrogen Reflectance Index (NRI) for Site-Specific Fertilizer Management. In Proceedings of the 2001 ASAE Annual Meeting, Sacramento, CA, USA, 29 July–1 August 2001; p. 1. [Google Scholar] [CrossRef]

- Daughtry, C.S.T.; Walthall, C.L.; Kim, M.S.; De Colstoun, E.B.; McMurtrey, J.E. Estimating Corn Leaf Chlorophyll Concentration from Leaf and Canopy Reflectance. Remote Sens. Environ. 2000, 74, 229–239. [Google Scholar] [CrossRef]

- Eitel, J.U.H.; Long, D.S.; Gessler, P.E.; Smith, A.M.S. Using in-situ measurements to evaluate the new RapidEyeTM satellite series for prediction of wheat nitrogen status. Int. J. Remote Sens. 2007, 28, 4183–4190. [Google Scholar] [CrossRef]

- Pereira, F.R.d.S.; de Lima, J.P.; Freitas, R.G.; Dos Reis, A.A.; do Amaral, L.R.; Figueiredo, G.K.D.A.; Lamparelli, R.A.; Magalhães, P.S.G. Nitrogen variability assessment of pasture fields under an integrated crop-livestock system using UAV, PlanetScope, and Sentinel-2 data. Comput. Electron. Agric. 2022, 193, 106645. [Google Scholar] [CrossRef]

- Karnati, R.; Prasad, M.V.S. a Prediction of Crop Monitoring Indices (NDVI,MSAVI,RECI) and Estimation of Nitrogen Concentration on Leaves for Possible of Optimizing the Time of Harvest with the Help of Sensor Networks in Guntur Region, Andhra Pradesh, India. with agent based modeling. Int. J. Adv. Sci. Comput. Appl. 2023, 2, 19–30. [Google Scholar] [CrossRef]

- Kureel, N.; Sarup, J.; Matin, S.; Goswami, S.; Kureel, K. Modelling vegetation health and stress using hyperspectral remote sensing data. Model. Earth Syst. Environ. 2022, 8, 733–748. [Google Scholar] [CrossRef]

- Yang, C.; Everitt, J.H.; Bradford, J.M.; Murden, D. Airborne hyperspectral imagery and yield monitor data for mapping cotton yield variability. Precis. Agric. 2004, 5, 445–461. [Google Scholar] [CrossRef]

- Yang, K.; Tu, J.; Chen, T. Homoscedasticity: An overlooked critical assumption for linear regression. Gen. Psychiatr. 2019, 32, e100148. [Google Scholar] [CrossRef]

- Hope, T.M.H. Linear regression. In Machine Learning: Methods and Applications to Brain Disorders; Academic Press: Cambridge, MA, USA, 2020; pp. 67–81. [Google Scholar] [CrossRef]

- Dhulipala, S.; Patil, G.R. Freight production of agricultural commodities in India using multiple linear regression and generalized additive modelling. Transp. Policy 2020, 97, 245–258. [Google Scholar] [CrossRef]

- Hastie, T.; Tibshirani, R.; Friedman, J. Springer Series in Statistics The Elements of Statistical Learning Data Mining, Inference, and Prediction. Math. Intell. 2008, 27, 83–85. [Google Scholar]

- Bhavsar, H.; Ganatra, A. A Comparative Study of Training Algorithms for Supervised Machine Learning. Int. J. Soft Comput. Eng. (IJSCE) 2012, 2, 2231–2307. [Google Scholar]

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Liaw, A.; Wiener, M. Classification and Regression by randomForest. R News 2002, 2, 18–22. [Google Scholar]

- Mammone, A.; Turchi, M.; Cristianini, N. Support vector machines. Wiley Interdiscip. Rev. Comput. Stat. 2009, 1, 283–289. [Google Scholar] [CrossRef]

- Capparuccia, R.; De Leone, R.; Marchitto, E. Integrating support vector machines and neural networks. Neural Netw. 2007, 20, 590–597. [Google Scholar] [CrossRef] [PubMed]

- Lecun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Leng, Y.; Xu, X.; Qi, G. Combining active learning and semi-supervised learning to construct SVM classifier. Knowl. Based Syst. 2013, 44, 121–131. [Google Scholar] [CrossRef]

- Williams, H.A.M.; Jones, M.H.; Nejati, M.; Seabright, M.J.; Bell, J.; Penhall, N.D.; Barnett, J.J.; Duke, M.D.; Scarfe, A.J.; Ahn, H.S.; et al. Robotic kiwifruit harvesting using machine vision, convolutional neural networks, and robotic arms. Biosyst. Eng. 2019, 181, 140–156. [Google Scholar] [CrossRef]

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297. [Google Scholar]

- Przybyło, J.; Jabłoński, M. Using Deep Convolutional Neural Network for oak acorn viability recognition based on color images of their sections. Comput. Electron. Agric. 2019, 156, 490–499. [Google Scholar] [CrossRef]

- A Ilemobayo, J.; Durodola, O.; Alade, O.; J Awotunde, O.; T Olanrewaju, A.; Falana, O.; Ogungbire, A.; Osinuga, A.; Ogunbiyi, D.; Ifeanyi, A.; et al. Hyperparameter Tuning in Machine Learning: A Comprehensive Review. J. Eng. Res. Rep. 2024, 26, 388–395. [Google Scholar] [CrossRef]

- Defalque, G.; Santos, R.; Bungenstab, D.; Echeverria, D.; Dias, A.; Defalque, C. Machine learning models for dry matter and biomass estimates on cattle grazing systems. Comput. Electron. Agric. 2024, 216, 108520. [Google Scholar] [CrossRef]

- Natekin, A.; Knoll, A. Gradient boosting machines, a tutorial. Front. Neurorobot 2013, 7, 21. [Google Scholar] [CrossRef]

- Hornik, K. Resampling Methods in R: The boot Package. R News 2002, 2, 2–7. [Google Scholar]

- Wei, T.; Simko, V.; Levy, M.; Xie, Y.; Jin, Y.; Zemla, J. Package “Corrplot” Title Visualization of a Correlation Matrix Needs Compilation. 2022. Available online: https://cran.r-project.org/web/packages/corrplot/corrplot.pdf (accessed on 4 September 2024).

- Greenacre, M.; Groenen, P.J.F.; Hastie, T.; D’Enza, A.I.; Markos, A.; Tuzhilina, E. Principal component analysis. Nat. Rev. Methods Primers 2022, 2, 100. [Google Scholar] [CrossRef]

- Gilbertson, J.K.; van Niekerk, A. Value of dimensionality reduction for crop differentiation with multi-temporal imagery and machine learning. Comput. Electron. Agric. 2017, 142, 50–58. [Google Scholar] [CrossRef]

- Jolliffe, I.T.; Cadima, J. Principal component analysis: A review and recent developments. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2016, 374, 20150202. [Google Scholar] [CrossRef]

- Hasan, B.M.S.; Abdulazeez, A.M. A Review of Principal Component Analysis Algorithm for Dimensionality Reduction. J. Soft Comput. Data Min. 2021, 2, 20–30. [Google Scholar] [CrossRef]

- Lussem, U.; Bolten, A.; Menne, J.; Gnyp, M.L.; Schellberg, J.; Bareth, G. Estimating biomass in temperate grassland with high resolution canopy surface models from UAV-based RGB images and vegetation indices. J. Appl. Remote Sens. 2019, 13, 1. [Google Scholar] [CrossRef]

- Coelho, A.P.; de Faria, R.T.; Leal, F.T.; Barbosa, J.d.A.; Dalri, A.B.; Rosalen, D.L. Estimation of irrigated oats yield using spectral indices. Agric. Water Manag. 2019, 223, 105700. [Google Scholar] [CrossRef]

- Chai, T.; Draxler, R.R. Root mean square error (RMSE) or mean absolute error (MAE)? -Arguments against avoiding RMSE in the literature. Geosci. Model. Dev. 2014, 7, 1247–1250. [Google Scholar] [CrossRef]

- Willmott, C.J.; Matsuura, K. Advantages of the mean absolute error (MAE) over the root mean square error (RMSE) in assessing average model performance. Clim. Res. 2005, 30, 79–82. [Google Scholar]

- Hyndman, R.J.; Koehler, A.B. Another look at measures of forecast accuracy. Int. J. Forecast. 2006, 22, 679–688. [Google Scholar] [CrossRef]

- Peters, K.C.; Hughes, M.P.; Daley, O. Field-scale calibration of the PAR Ceptometer and FieldScout CM for real-time estimation of herbage mass and nutritive value of rotationally grazed tropical pasture. Smart Agric. Technol. 2022, 2, 100037. [Google Scholar] [CrossRef]

- Lu, B.; Proctor, C.; He, Y. Investigating different versions of PROSPECT and PROSAIL for estimating spectral and biophysical properties of photosynthetic and non-photosynthetic vegetation in mixed grasslands. GIsci Remote Sens. 2021, 58, 354–371. [Google Scholar] [CrossRef]

- Zhang, Q.; Xu, L.; Zhang, M.; Wang, Z.; Gu, Z.; Wu, Y.; Shi, Y.; Lu, Z. Uncertainty analysis of remote sensing pretreatment for biomass estimation on Landsat OLI and Landsat ETM+. ISPRS Int. J. Geoinf. 2020, 9, 48. [Google Scholar] [CrossRef]

- Lu, D.; Chen, Q.; Wang, G.; Liu, L.; Li, G.; Moran, E. A survey of remote sensing-based aboveground biomass estimation methods in forest ecosystems. Int. J. Digit. Earth 2016, 9, 63–105. [Google Scholar] [CrossRef]

- Song, Q.; Albrecht, C.M.; Xiong, Z.; Zhu, X.X. Biomass Estimation and Uncertainty Quantification From Tree Height. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 4833–4845. [Google Scholar] [CrossRef]

- Grüner, E.; Astor, T.; Wachendorf, M. Biomass prediction of heterogeneous temperate grasslands using an SFM approach based on UAV imaging. Agronomy 2019, 9, 54. [Google Scholar] [CrossRef]

- Barboza, T.O.C.; Ardigueri, M.; Souza, G.F.C.; Ferraz, M.A.J.; Gaudencio, J.R.F.; Santos, A.F.d. Performance of Vegetation Indices to Estimate Green Biomass Accumulation in Common Bean. AgriEngineering 2023, 5, 840–854. [Google Scholar] [CrossRef]

- Pizarro, S.; Pricope, N.G.; Figueroa, D.; Carbajal, C.; Quispe, M.; Vera, J.; Alejandro, L.; Achallma, L.; Gonzalez, I.; Salazar, W.; et al. Implementing Cloud Computing for the Digital Mapping of Agricultural Soil Properties from High Resolution UAV Multispectral Imagery. Remote Sens. 2023, 15, 3203. [Google Scholar] [CrossRef]

- Sinde-González, I.; Gil-Docampo, M.; Arza-García, M.; Grefa-Sánchez, J.; Yánez-Simba, D.; Pérez-Guerrero, P.; Abril-Porras, V. Biomass estimation of pasture plots with multitemporal UAV-based photogrammetric surveys. Int. J. Appl. Earth Obs. Geoinf. 2021, 101, 102355. [Google Scholar] [CrossRef]

| Indices | Equation | Reference |
|---|---|---|
| Difference Vegetation Index (DVI) | | [50] |
| Normalized Difference Vegetation Index (NDVI) | | [51] |
| Green Normalized Difference Vegetation Index (GNDVI) | | [52] |
| Normalized Difference Red Edge (NDRE) | | [52] |
| Enhanced Normalized Difference Vegetation Index (ENDVI) | | [53] |
| Renormalized Difference Vegetation Index (RDVI) | | [54] |
| Enhanced Vegetation Index (EVI) | | [55] |
| Visible Difference Vegetation Index (VDVI) | | [56] |
| Wide Dynamic Range Vegetation Index (WDRDVI) | | [57] |
| Transformed Vegetation Index (TVI) | | [58] |
| Soil-Adjusted Vegetation Index (SAVI) | | [59] |
| Optimized Soil-Adjusted Vegetation Index (OSAVI) | | [59] |
| Chlorophyll Vegetation Index (CVI) | | [60] |
| Modified Soil-Adjusted Vegetation Index (MSAVI) | | [61] |
| Modified Chlorophyll Absorption in Reflectance Index (MCARI) | | [62] |
| Transformed Chlorophyll Absorption in Reflectance Index (TCARI) | | [63] |
| Normalized Pigment Chlorophyll Reflectance (NPCI) | | [60,64] |
| Green Chlorophyll Index (GCI) | | [56] |
| Red-Edge Chlorophyll Index (RECI) | | [65] |
| Structure Insensitive Pigment Index (SIPI) | | [66] |
| Anthocyanin Reflectance Index (ARI) | | [67] |
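
As a rough illustration of how indices like those listed above are computed from band reflectances, the following sketch implements the commonly cited forms of DVI, NDVI, GNDVI, NDRE, SAVI and OSAVI. The function names, the band arguments and the example reflectance values are assumptions made for illustration; the formulations actually used in the study are those of the cited references and may differ in detail (for example, in the soil-adjustment constants).

```python
# Minimal sketch of a few standard vegetation indices.
# All inputs are surface reflectances in [0, 1]; the names are illustrative only.

def normalized_difference(a, b):
    """Generic (a - b) / (a + b); returns NaN when the denominator is zero."""
    denom = a + b
    if denom == 0:
        return float("nan")
    return (a - b) / denom

def dvi(nir, red):
    """Difference Vegetation Index: NIR - Red."""
    return nir - red

def ndvi(nir, red):
    """Normalized Difference Vegetation Index."""
    return normalized_difference(nir, red)

def gndvi(nir, green):
    """Green NDVI: the green band replaces the red band."""
    return normalized_difference(nir, green)

def ndre(nir, red_edge):
    """Normalized Difference Red Edge: the red-edge band replaces the red band."""
    return normalized_difference(nir, red_edge)

def savi(nir, red, soil_factor=0.5):
    """Soil-Adjusted Vegetation Index; soil_factor = 0.5 is the usual default (assumed here)."""
    return (1 + soil_factor) * (nir - red) / (nir + red + soil_factor)

def osavi(nir, red):
    """Optimized SAVI in its common form, with a fixed adjustment of 0.16 (assumed here)."""
    return (nir - red) / (nir + red + 0.16)

# Hypothetical reflectances for a dense green canopy pixel.
pixel = {"nir": 0.45, "red": 0.05, "green": 0.08, "red_edge": 0.30}
print(round(ndvi(pixel["nir"], pixel["red"]), 3))
print(round(savi(pixel["nir"], pixel["red"]), 3))
```

In practice the same expressions would be applied element-wise to whole raster bands rather than to single pixel values.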

| Agronomic Variable | Mean | Median | Min | Max | σ |
|---|---|---|---|---|---|
| Height (m) (h) | 1.50 | 1.50 | 1.13 | 1.88 | 0.15 |
| Tillers (t) | 29.97 | 29.00 | 5.00 | 61.00 | 11.57 |
| Dry matter (kg) (dm) | 0.29 | 0.28 | 0.03 | 0.63 | 0.12 |
| Grain weight (kg) (gw) | 0.04 | 0.04 | 0.00 | 0.10 | 0.02 |
| Flowers (f) | 14.76 | 16.00 | 3.00 | 26.00 | 5.38 |
| Germination percentage (gp) | 0.72 | 0.79 | 0.30 | 0.88 | 0.18 |
| Germination in emergence chamber (gem) | 0.62 | 0.71 | 0.15 | 0.78 | 0.18 |
| Total plants (tp) | 31.87 | 33.00 | 15.00 | 40.00 | 5.47 |
| Survival rate (sr) | 0.64 | 0.66 | 0.30 | 0.80 | 0.11 |
| Germination rate (gr) | 10.27 | 11.29 | 4.29 | 12.57 | 2.55 |

Linear Regression

| Residuals | Min | 1Q | Median | 3Q | Max |
|---|---|---|---|---|---|
| | −0.208 | −0.091 | −0.016 | 0.075 | 0.314 |

| Coefficient | Intercept | NDVI 111 | NDVI 125 | NDVI 131 | h |
|---|---|---|---|---|---|
| Estimate | −0.439 | 0.541 | −1.148 | 1.256 | 0.184 |
| Std. Error | 0.188 | 0.256 | 0.457 | 0.363 | 0.045 |
| t-value | −2.336 | 2.123 | −2.513 | 3.464 | 4.075 |
| p-value | 0.02 | 0.034 | 0.012 | 0.004 | 5.8 |
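
The fitted linear model can be read from the Estimate values of the coefficient table. The sketch below assumes that the response is dry matter in kg (an assumption inferred from the error magnitudes, not stated in the table) and that NDVI 111, NDVI 125 and NDVI 131 are three separate NDVI predictors labelled as in the table. The function name and the example inputs are hypothetical. The sketch also checks that each estimate divided by its standard error reproduces the tabulated t statistic to rounding.

```python
# Coefficients copied from the linear-regression table (Estimate values).
COEFFICIENTS = {
    "intercept": -0.439,
    "ndvi_111": 0.541,
    "ndvi_125": -1.148,
    "ndvi_131": 1.256,
    "h": 0.184,
}

# Standard errors and t statistics as tabulated, in the same order.
STD_ERRORS = {"intercept": 0.188, "ndvi_111": 0.256, "ndvi_125": 0.457,
              "ndvi_131": 0.363, "h": 0.045}
T_VALUES = {"intercept": -2.336, "ndvi_111": 2.123, "ndvi_125": -2.513,
            "ndvi_131": 3.464, "h": 4.075}

def predict_dry_matter(ndvi_111, ndvi_125, ndvi_131, height_m):
    """Linear prediction; the response is ASSUMED to be dry matter in kg."""
    c = COEFFICIENTS
    return (c["intercept"]
            + c["ndvi_111"] * ndvi_111
            + c["ndvi_125"] * ndvi_125
            + c["ndvi_131"] * ndvi_131
            + c["h"] * height_m)

def t_statistic(name):
    """t statistic recomputed as estimate / standard error."""
    return COEFFICIENTS[name] / STD_ERRORS[name]

# Hypothetical plot: NDVI of 0.8 on all three predictors, canopy height 1.5 m.
print(round(predict_dry_matter(0.8, 0.8, 0.8, 1.5), 4))

# Recomputed t statistics should agree with the table up to rounding of the inputs.
for name in COEFFICIENTS:
    print(name, round(t_statistic(name), 3), T_VALUES[name])
```

Because the NDVI coefficients alternate in sign, the prediction depends mostly on the differences between the three NDVI values and on plant height, which is consistent with the low explanatory power reported for the linear model.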

| Parameters | RF | | SVM | | NN |
|---|---|---|---|---|---|
| Hyperparameters | Node size | 100 | Cost | 1 | * HL 2; Neurons 5 |
| | Trees | 500 | γ | 0.1 | Neurons 3 |
| | N° var | 4 | ε | 0.01 | |
| Residual | Mean of squared residuals | 0.011 | N° of vectors | 294 | |
| | % var explained | 52.23 | SVM kernel | Radial | |

| Dataset | Metric | Linear Regression | RF | SVM | NN |
|---|---|---|---|---|---|
| Training | R² | 0.12 | 0.68 | 0.87 | 0.83 |
| | RMSE | 0.117 | 0.080 | 0.047 | 0.051 |
| | MAE | 0.096 | 0.063 | 0.030 | 0.040 |
| Test | R² | 0.04 | 0.52 | 0.50 | 0.35 |
| | RMSE | 0.119 | 0.087 | 0.085 | 0.110 |
| | MAE | 0.097 | 0.069 | 0.066 | 0.086 |
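
For reference, the three metrics reported for each model can be computed as in the sketch below. It uses the usual definitions (R² as one minus the ratio of residual to total sum of squares, RMSE as the root of the mean squared error, MAE as the mean absolute error). The function names and the sample values are illustrative assumptions, and the study may have computed R² differently, for instance as a squared correlation.

```python
import math

def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1 - ss_res / ss_tot

def rmse(observed, predicted):
    """Root mean square error, in the units of the response."""
    mse = sum((o - p) ** 2 for o, p in zip(observed, predicted)) / len(observed)
    return math.sqrt(mse)

def mae(observed, predicted):
    """Mean absolute error, in the units of the response."""
    return sum(abs(o - p) for o, p in zip(observed, predicted)) / len(observed)

# Hypothetical observed and predicted values, for illustration only.
observed = [1.0, 2.0, 3.0, 4.0]
predicted = [1.1, 1.9, 3.2, 3.8]

print(round(r_squared(observed, predicted), 3))
print(round(rmse(observed, predicted), 3))
print(round(mae(observed, predicted), 3))
```

Comparing these metrics between the training and test rows is what exposes overfitting: a model whose training R² is far above its test R² has learned patterns that do not generalize.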

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Urquizo, J.; Ccopi, D.; Ortega, K.; Castañeda, I.; Patricio, S.; Passuni, J.; Figueroa, D.; Enriquez, L.; Ore, Z.; Pizarro, S. Estimation of Forage Biomass in Oat (Avena sativa) Using Agronomic Variables through UAV Multispectral Imaging. Remote Sens. 2024, 16, 3720. https://doi.org/10.3390/rs16193720
