Evaluating Burn Severity and Post-Fire Woody Vegetation Regrowth in the Kalahari Using UAV Imagery and Random Forest Algorithms

Abstract

1. Introduction

2. Materials and Methods

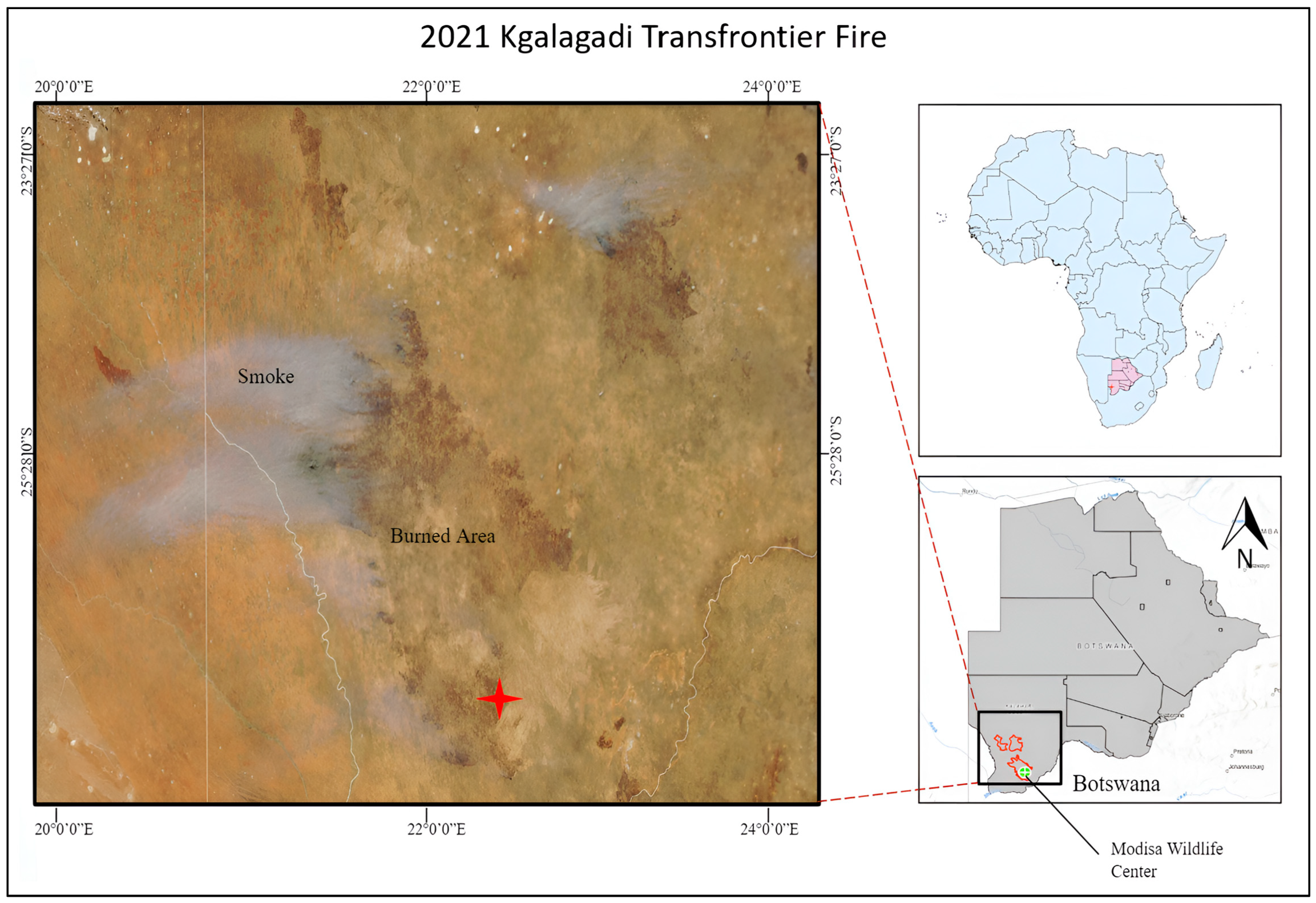

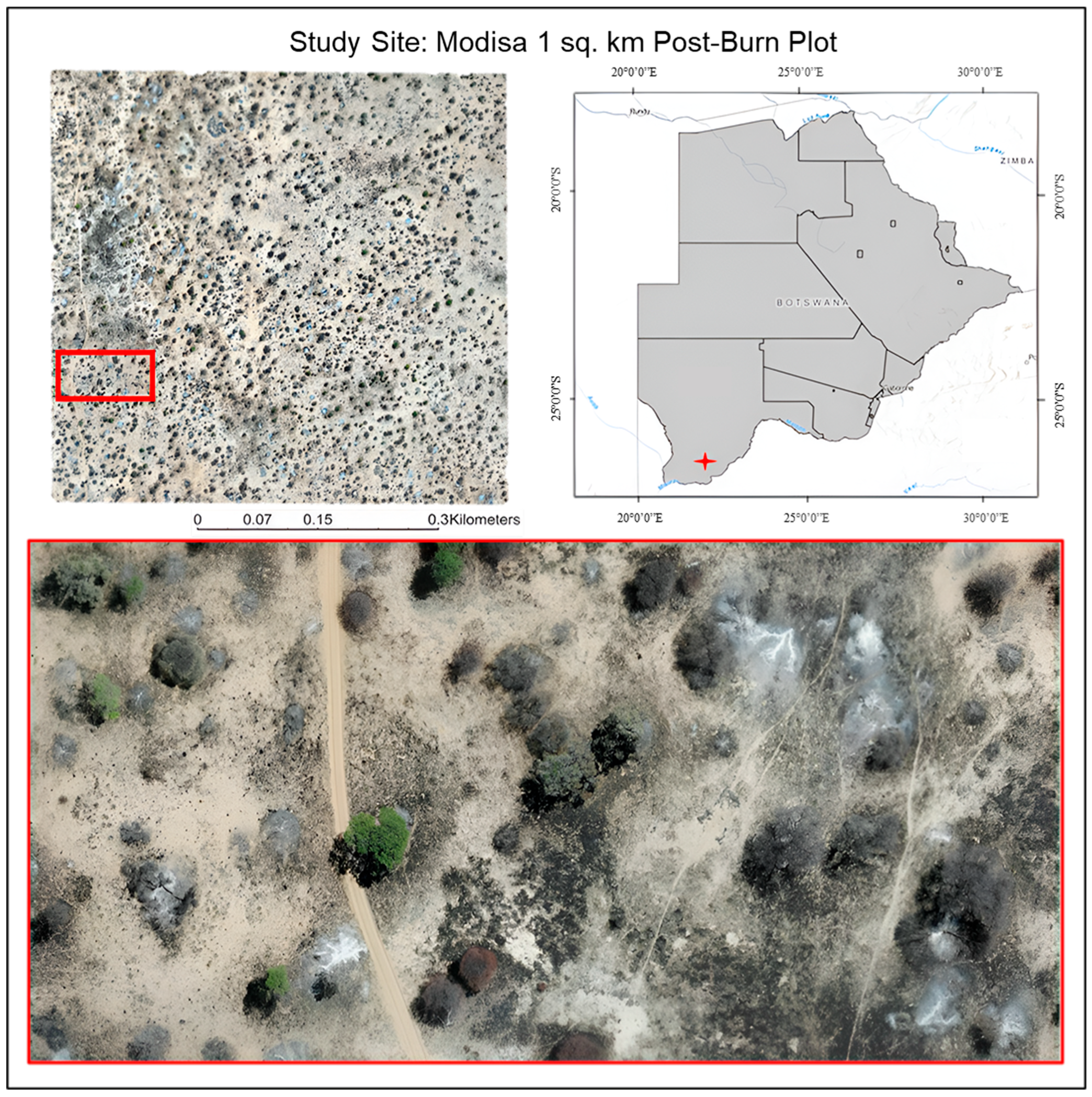

2.1. Study Area

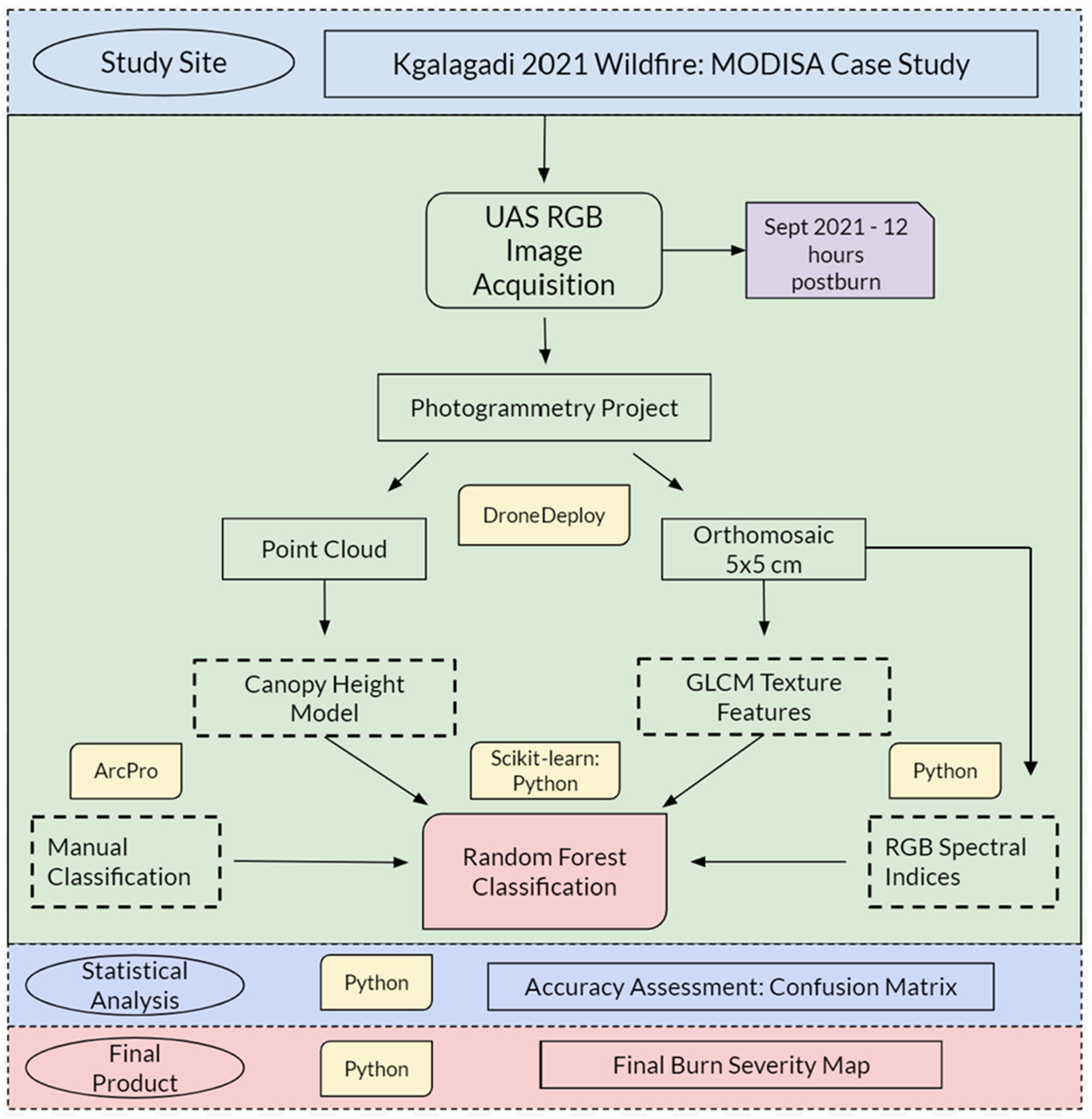

2.2. Approach

2.3. UAV Image Collection and Image Processing

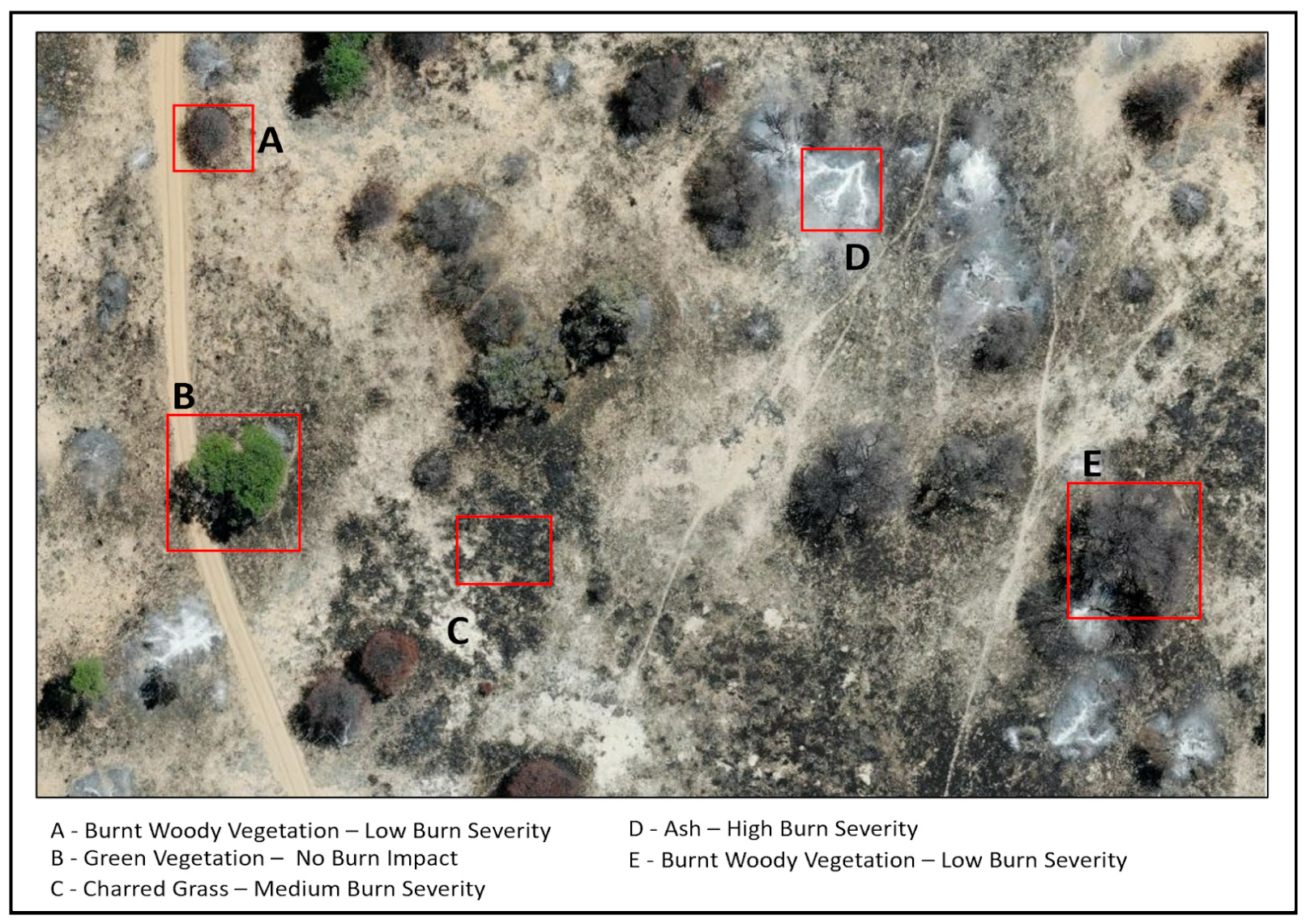

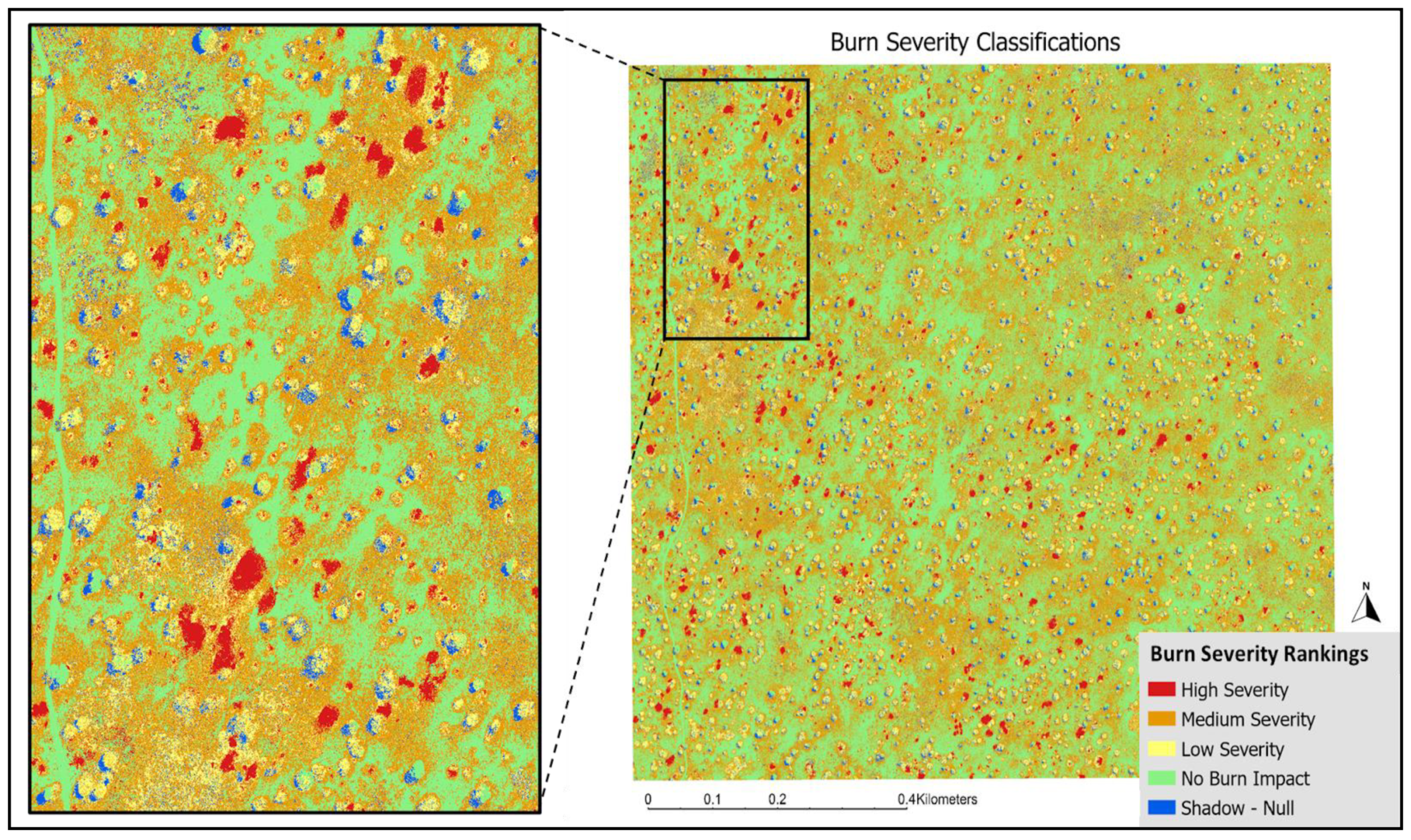

2.4. Burn Severity Classification

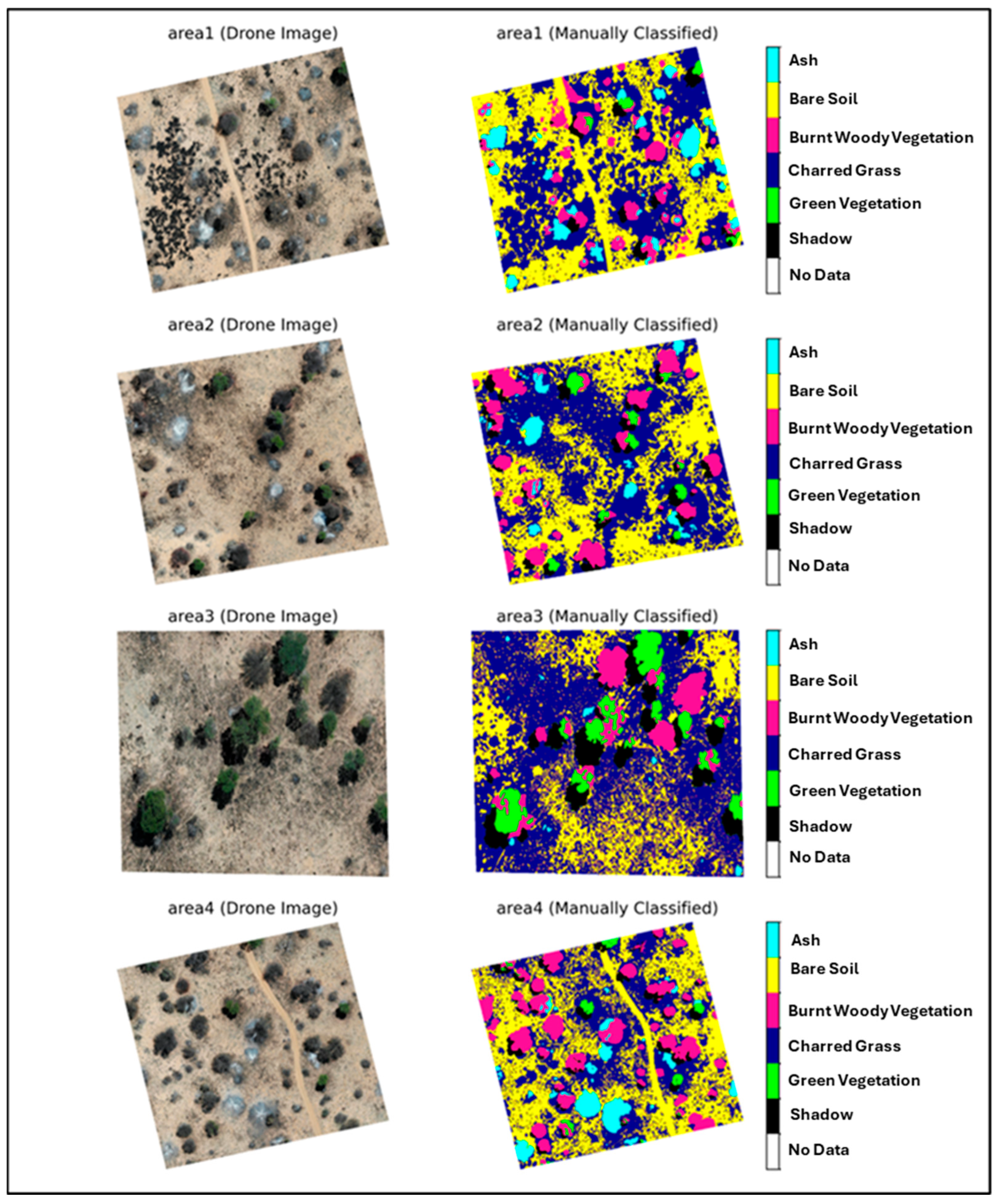

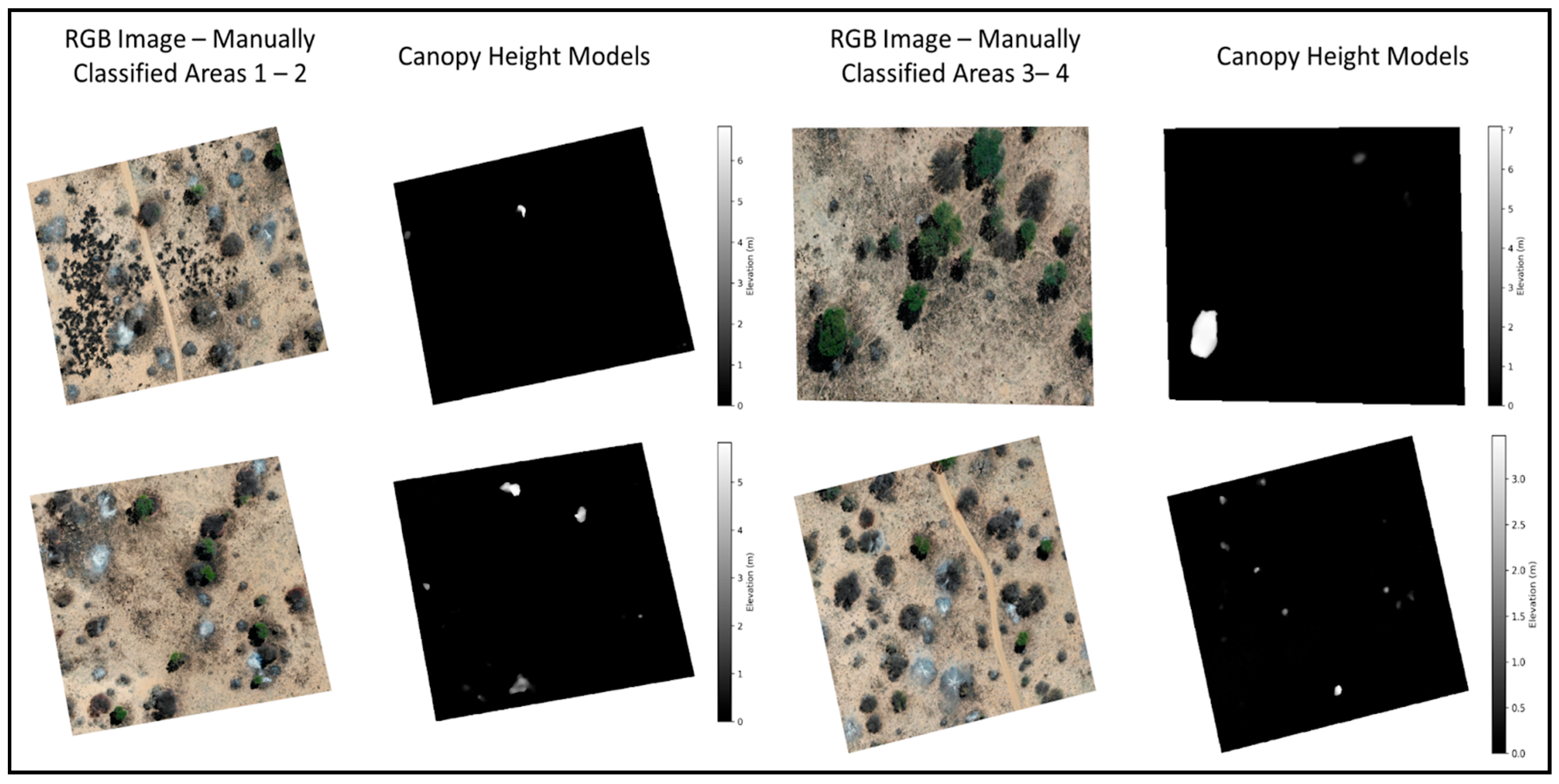

2.5. Supervised Classification—Training Dataset

2.6. Random Forest Classification and Input Variables

2.7. Woody Vegetation Survival/Regrowth Analysis

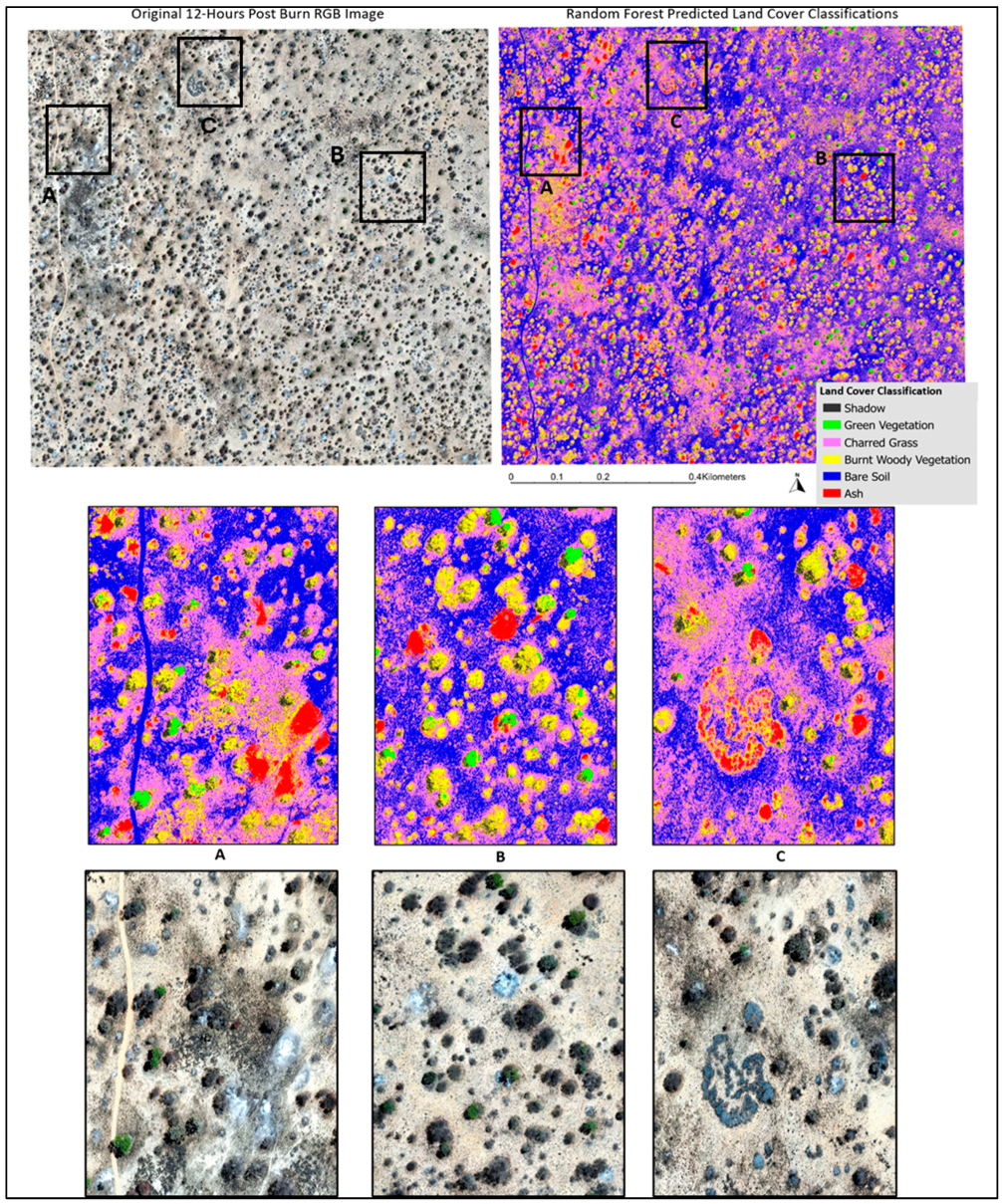

3. Results

3.1. Burn Severity Classification

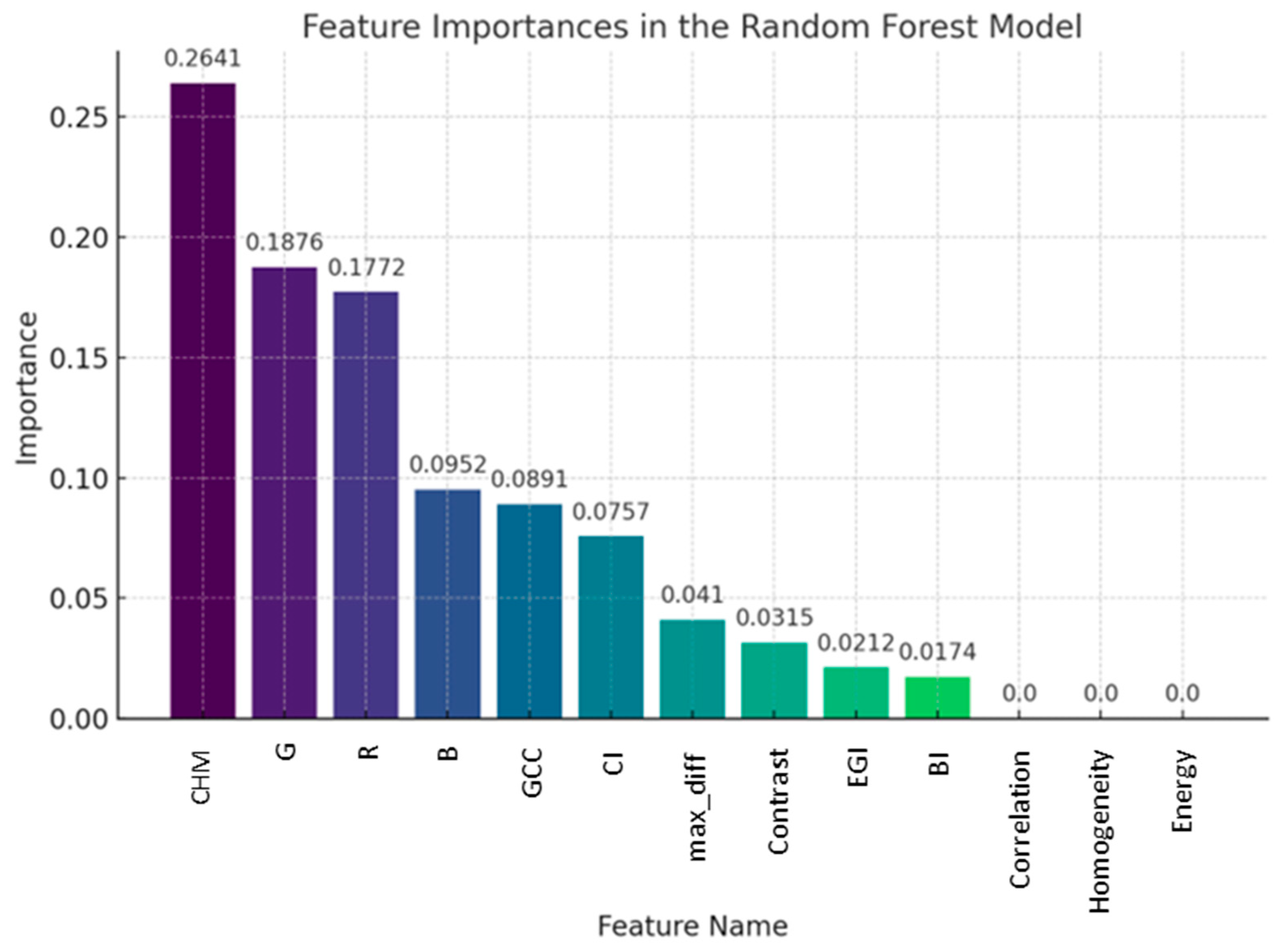

3.2. Relative Importance of Model Predictors

3.3. Woody Vegetation Survival/Regrowth Probabilities

4. Discussion

4.1. Severity Accuracy

4.2. Post-Fire Woody Vegetation Dynamics

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Date of Image Acquisition | Season | Time Since Fire |
|---|---|---|
| 26 September 2021 | Dry | 12 h post-burn |
| 29 December 2021 | Wet | 6 months post-burn |
| 21 July 2022 | Dry | 1 year post-burn |
| 9 August 2023 | Dry | 2 years post-burn |
| 23 November 2023 | Wet | 2.5 years post-burn |

| Land-Cover Class | Burn Severity Ranking |
|---|---|
| Green Vegetation | 0 (No Burn Impact) |
| Bare Soil | 0 (No Burn Impact) |
| Burnt Woody Vegetation | 1 (Low Severity) |
| Charred Grass | 2 (Medium Severity) |
| Ash | 3 (High Severity) |
| Shadow | Null |
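
The schema collapses six land-cover classes into a four-level ordinal severity ranking, with shadow treated as null. A minimal sketch of that lookup in Python, assuming the classifier output is a grid of class names; the helper names and the toy patch are hypothetical, and summarizing severity only over non-null pixels is an assumption rather than a documented step.

```python
from collections import Counter
from typing import Optional

# Class name -> ordinal burn severity rank, as listed in the schema table.
# Shadow maps to None (null) and is ignored when summarizing severity.
SEVERITY_RANK: dict[str, Optional[int]] = {
    "Green Vegetation": 0,
    "Bare Soil": 0,
    "Burnt Woody Vegetation": 1,
    "Charred Grass": 2,
    "Ash": 3,
    "Shadow": None,
}


def to_severity(class_map: list[list[str]]) -> list[list[Optional[int]]]:
    """Translate a 2-D grid of predicted class names into severity ranks."""
    return [[SEVERITY_RANK[label] for label in row] for row in class_map]


def severity_fractions(severity_map: list[list[Optional[int]]]) -> dict[int, float]:
    """Share of each severity rank among non-null (non-shadow) pixels."""
    counts = Counter(v for row in severity_map for v in row if v is not None)
    total = sum(counts.values())
    return {rank: counts[rank] / total for rank in sorted(counts)}


# Hypothetical 2 x 3 patch of classifier output.
patch = [["Ash", "Charred Grass", "Charred Grass"],
         ["Shadow", "Bare Soil", "Burnt Woody Vegetation"]]
severity = to_severity(patch)
print(severity)                      # [[3, 2, 2], [None, 0, 1]]
print(severity_fractions(severity))  # rank 2 covers 2 of the 5 non-null pixels
```

Keeping shadow as `None` rather than 0 avoids counting unobservable pixels as unburnt.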

| Training Indices and Variables | Equations and Descriptions |
|---|---|
| Excess Green Index (EGI) | 2 × G − R − B |
| Green Chromatic Coordinate Index (GCC) | G/(G + R + B) |
| Brightness Index (BI) | R + G + B |
| Maximum RGB Difference (MaxDiff) | Max(\|B − G\|, \|B − R\|, \|R − G\|) |
| Char Index (CI) | BI + (MaxDiff × 15) |
| Red Band | R |
| Green Band | G |
| Blue Band | B |
| Canopy Height Model (CHM) | Height of vegetation above the ground surface, derived by subtracting the digital terrain model (DTM) from the digital surface model (DSM): CHM = DSM − DTM. |
| GLCM Contrast | Measures local intensity variation in the gray-level co-occurrence matrix (GLCM); large gray-level differences between neighboring pixels raise the value. |
| GLCM Energy | Sum of squared elements in the GLCM (also called uniformity or angular second moment); high for texturally uniform areas. |
| GLCM Correlation | Measures how linearly dependent the gray levels of the specified pixel pairs are. |
| GLCM Homogeneity | Measures the closeness of the distribution of elements in the GLCM to the GLCM diagonal. |
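
The spectral indices in the table are simple per-pixel arithmetic on the red, green and blue bands, and the CHM is a cell-by-cell difference of two elevation grids. A minimal pure-Python sketch of those equations; the function names are hypothetical, the band values are assumed to be raw digital numbers (the scaling is not stated here), and returning 0.0 for GCC on a black pixel is an assumption. The factor of 15 on MaxDiff pushes colorful pixels well above dark, grey, low-contrast char.

```python
def rgb_indices(r: float, g: float, b: float) -> dict[str, float]:
    """Return EGI, GCC, BI, MaxDiff and CI for one pixel."""
    bi = r + g + b  # Brightness Index
    max_diff = max(abs(b - g), abs(b - r), abs(r - g))  # Maximum RGB Difference
    return {
        "EGI": 2 * g - r - b,  # Excess Green Index
        # Green Chromatic Coordinate; 0.0 for a black pixel is an assumption
        "GCC": g / bi if bi else 0.0,
        "BI": bi,
        "MaxDiff": max_diff,
        "CI": bi + max_diff * 15,  # Char Index
    }


def canopy_height(dsm: list[list[float]], dtm: list[list[float]]) -> list[list[float]]:
    """CHM = DSM - DTM, cell by cell, for two co-registered grids of equal shape."""
    return [[s - t for s, t in zip(s_row, t_row)] for s_row, t_row in zip(dsm, dtm)]


# Hypothetical pixels: a greyish, low-contrast (char-like) one and a green one.
print(rgb_indices(60, 62, 58))   # CI = 180 + 4 * 15 = 240
print(rgb_indices(70, 120, 50))  # CI = 240 + 70 * 15 = 1290
print(canopy_height([[5.0, 3.5]], [[2.0, 3.0]]))  # [[3.0, 0.5]]
```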

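The four GLCM measures are computed from a normalized co-occurrence matrix of gray-level pairs at a fixed pixel offset. A pure-Python sketch for a small, already quantized patch, assuming a single horizontal offset and symmetric pair counting; the offset, number of gray levels and moving-window size used for the UAV imagery are not given here, so these are illustrative choices. Energy follows the table (sum of squared elements; scikit-image reports its square root), and homogeneity uses the 1/(1 + |i − j|) weighting, whereas some libraries use 1/(1 + (i − j)²).

```python
import math


def glcm(image: list[list[int]], levels: int, dx: int = 1, dy: int = 0,
         symmetric: bool = True) -> list[list[float]]:
    """Normalized GLCM for one offset; `image` holds integers in [0, levels)."""
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for y in range(rows):
        for x in range(cols):
            ny, nx = y + dy, x + dx
            if 0 <= ny < rows and 0 <= nx < cols:  # skip pairs off the edge
                i, j = image[y][x], image[ny][nx]
                counts[i][j] += 1
                if symmetric:  # count each pair in both directions
                    counts[j][i] += 1
    total = sum(map(sum, counts))
    return [[c / total for c in row] for row in counts]


def glcm_features(p: list[list[float]]) -> dict[str, float]:
    """Contrast, energy, correlation and homogeneity of a normalized GLCM."""
    cells = [(i, j, p[i][j]) for i in range(len(p)) for j in range(len(p))]
    mu_i = sum(i * v for i, _, v in cells)
    mu_j = sum(j * v for _, j, v in cells)
    sd_i = math.sqrt(sum((i - mu_i) ** 2 * v for i, _, v in cells))
    sd_j = math.sqrt(sum((j - mu_j) ** 2 * v for _, j, v in cells))
    cov = sum((i - mu_i) * (j - mu_j) * v for i, j, v in cells)
    return {
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        "energy": sum(v * v for _, _, v in cells),  # sum of squared elements
        # Undefined for a constant patch; reporting 1.0 there is a convention.
        "correlation": cov / (sd_i * sd_j) if sd_i and sd_j else 1.0,
        "homogeneity": sum(v / (1 + abs(i - j)) for i, j, v in cells),
    }


# Toy 4 x 4 patch quantized to 4 gray levels.
patch = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 2, 2, 2],
         [2, 2, 3, 3]]
print(glcm_features(glcm(patch, levels=4)))
```
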
| Land Cover Class | Precision | Recall | F1-Score | Support | Percent of Cover |
|---|---|---|---|---|---|
| Shadow | 0.73 | 0.66 | 0.70 | 335,703 | 4.90% |
| Green Vegetation | 0.91 | 0.90 | 0.90 | 210,213 | 3.07% |
| Charred Grass | 0.74 | 0.77 | 0.75 | 3,019,852 | 44.11% |
| Burnt Woody Vegetation | 0.56 | 0.47 | 0.51 | 771,185 | 11.26% |
| Bare Soil | 0.77 | 0.78 | 0.78 | 2,285,950 | 33.39% |
| Ash | 0.78 | 0.74 | 0.75 | 223,648 | 3.27% |
| Weighted Average | 0.75 | 0.74 | 0.75 | | |

Overall Accuracy (OA): 0.79717
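
The F1-score is the harmonic mean of precision and recall, and the Percent of Cover column equals each class's share of the total support (6,846,551). A minimal sketch that recomputes these from the table, assuming the weighted-average row weights each class by its support (the scikit-learn convention). Because the inputs are already rounded to two decimals, recomputed values can differ from the printed ones by about 0.01; Shadow's F1, for instance, recomputes to 0.69 rather than 0.70.

```python
# (precision, recall, support) per class, copied from the accuracy table.
REPORT: dict[str, tuple[float, float, int]] = {
    "Shadow": (0.73, 0.66, 335_703),
    "Green Vegetation": (0.91, 0.90, 210_213),
    "Charred Grass": (0.74, 0.77, 3_019_852),
    "Burnt Woody Vegetation": (0.56, 0.47, 771_185),
    "Bare Soil": (0.77, 0.78, 2_285_950),
    "Ash": (0.78, 0.74, 223_648),
}

TOTAL = sum(support for _, _, support in REPORT.values())


def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def support_weighted(index: int) -> float:
    """Support-weighted mean of column `index` (0 = precision, 1 = recall)."""
    return sum(row[index] * row[2] for row in REPORT.values()) / TOTAL


def percent_cover(name: str) -> float:
    """A class's share of the total support, in percent."""
    return 100 * REPORT[name][2] / TOTAL


for name, (p, r, _) in REPORT.items():
    print(f"{name:24s} F1={f1(p, r):.2f} cover={percent_cover(name):.2f}%")
print(f"weighted recall = {support_weighted(1):.2f}")
```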

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gillespie, M.; Okin, G.S.; Meyer, T.; Ochoa, F. Evaluating Burn Severity and Post-Fire Woody Vegetation Regrowth in the Kalahari Using UAV Imagery and Random Forest Algorithms. Remote Sens. 2024, 16, 3943. https://doi.org/10.3390/rs16213943
