Recent Trends and Advances in Utilizing Digital Image Processing for Crop Nitrogen Management

Abstract

1. Introduction

2. Materials and Methods

- ‘Nitrogen’, ‘Machine Learning’, and ‘Computer Vision’;

- ‘Nitrogen’, ‘Deep Learning’, and ‘RGB images’;

- ‘Nitrogen’, ‘Agriculture’, and ‘Digital Image Processing’;

- ‘Nitrogen’, ‘Artificial Intelligence’, and ‘Images’.

3. Overview of Digital Image Processing

3.1. Digital Image Processing System

3.2. Image Acquisition and Preprocessing

3.2.1. Radiometric and Geometric Error Correction

3.2.2. Noise Reduction

3.3. Image Enhancement

3.4. Thematic Information Extraction

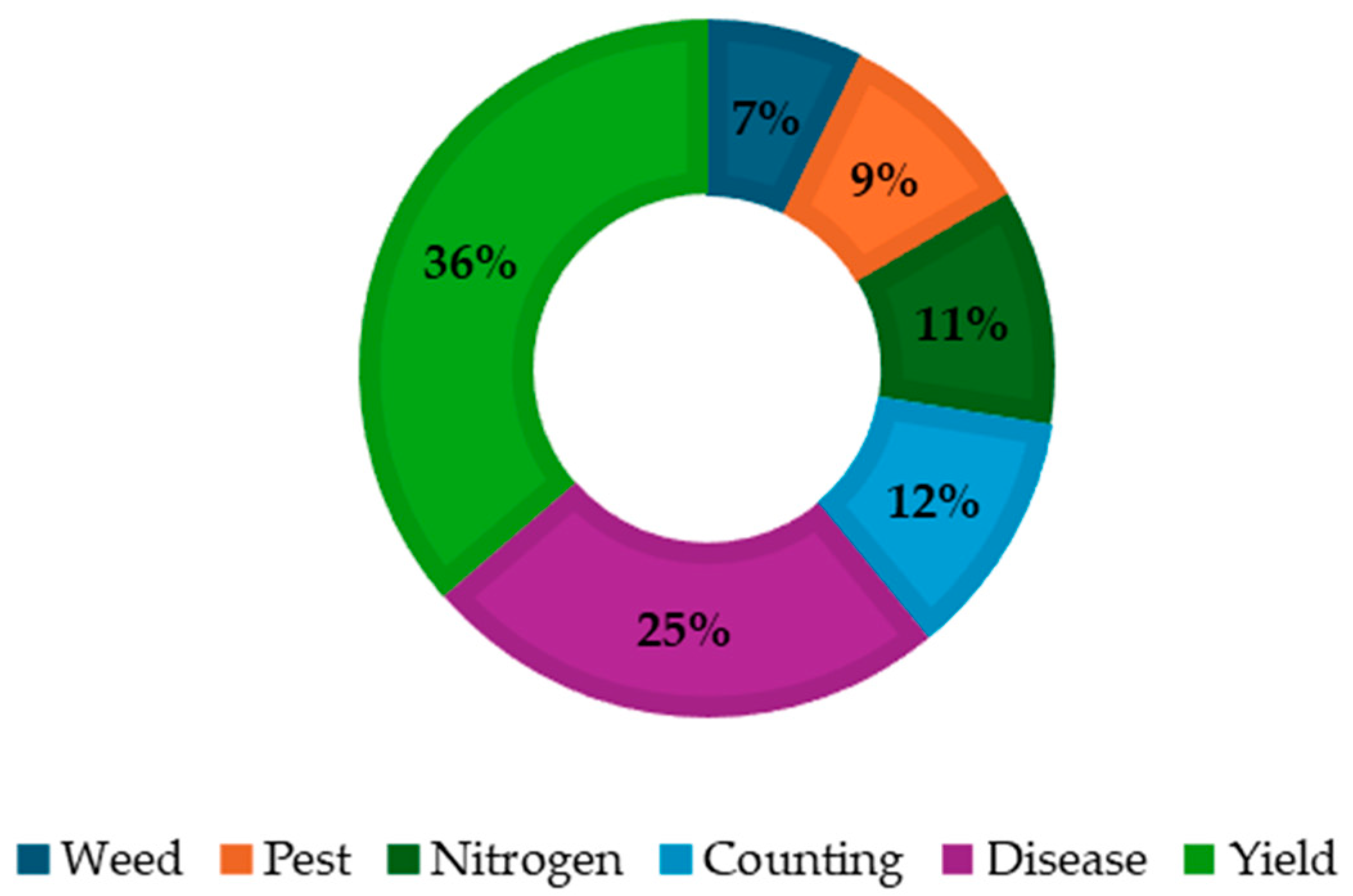

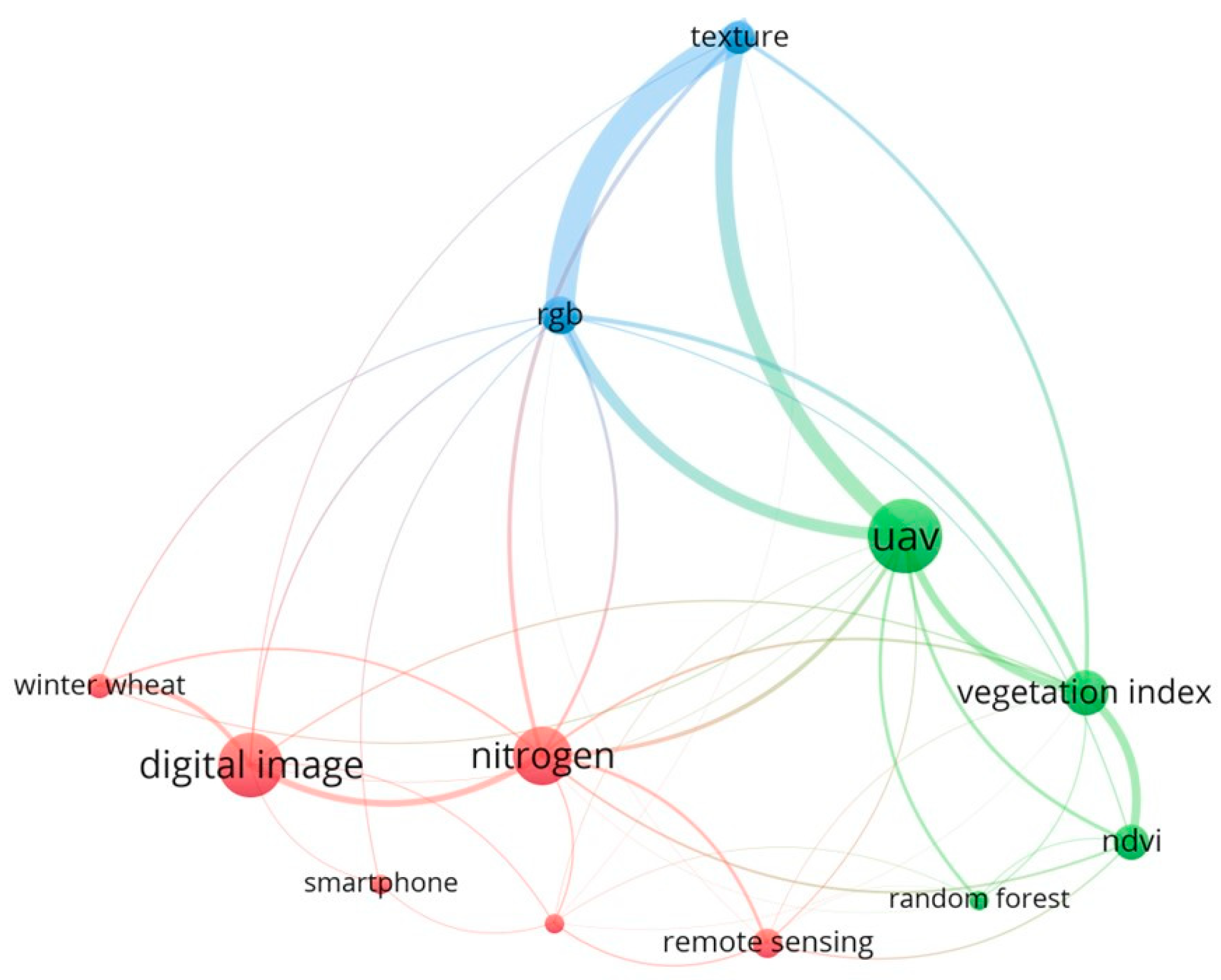

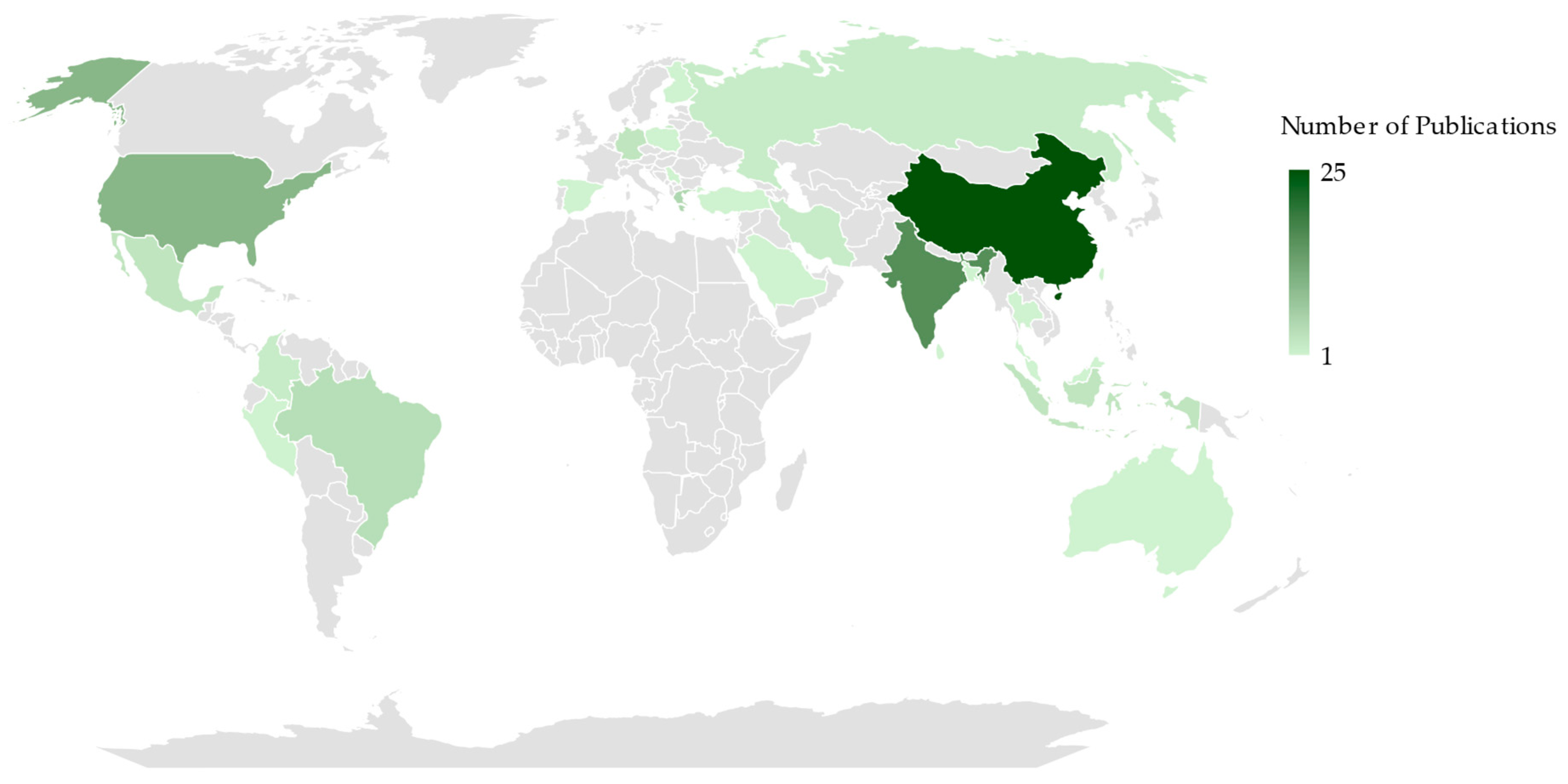

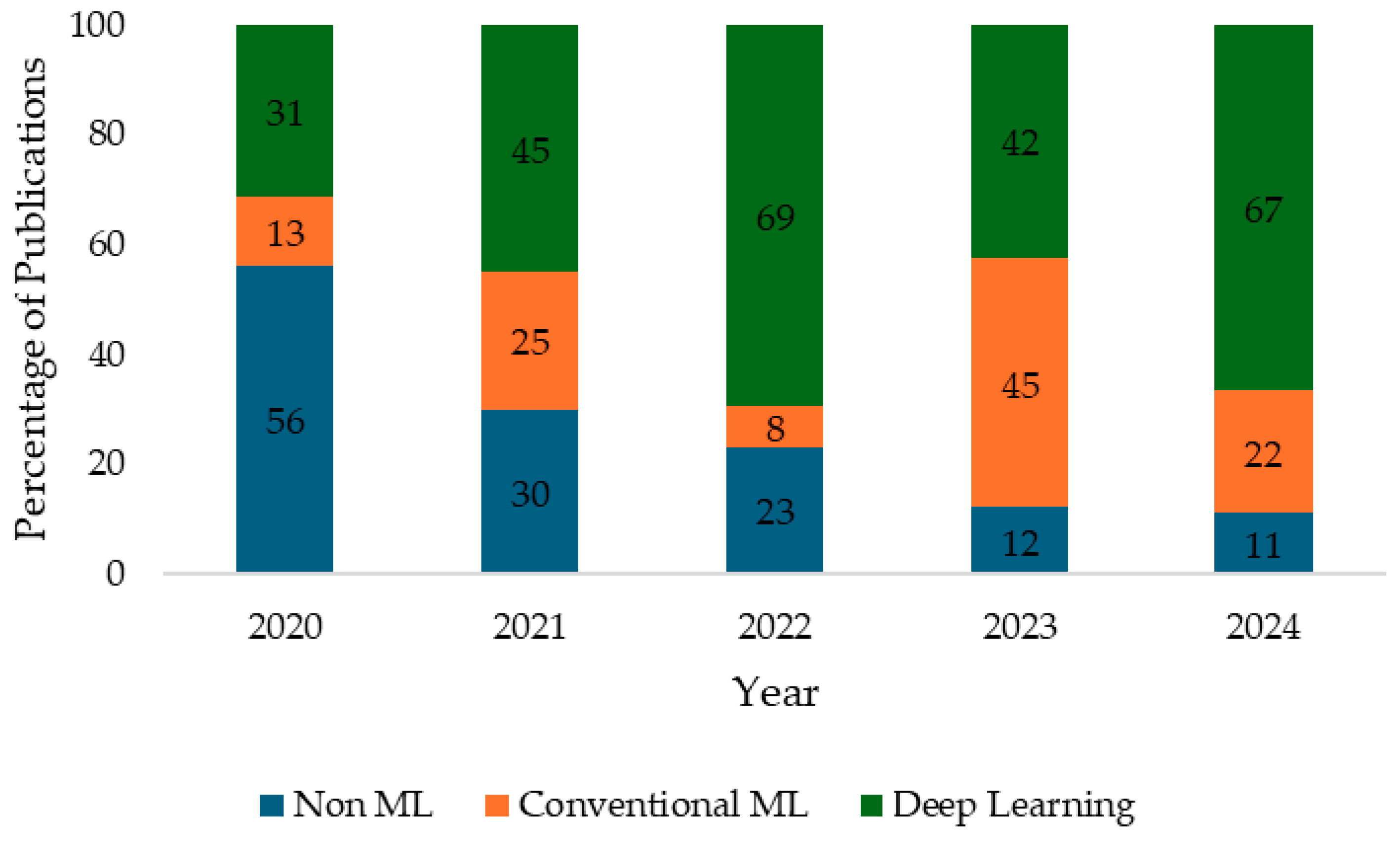

4. Digital Image Processing Trends in Crop Nitrogen Management

5. Challenges and Future Directions

5.1. Image Acquisition

5.2. Digital Image Processing

5.3. Socio-Economic Barriers

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Azimi, S.; Kaur, T.; Gandhi, T.K. A Deep Learning Approach to Measure Stress Level in Plants Due to Nitrogen Deficiency. Measurement 2021, 173, 108650. [Google Scholar] [CrossRef]

- Yi, J.; Lopez, G.; Hadir, S.; Weyler, J.; Klingbeil, L.; Deichmann, M.; Gall, J.; Seidel, S.J. Non-Invasive Diagnosis of Nutrient Deficiencies in Winter Wheat and Winter Rye Using UAV-Based RGB Images. SSRN 2023. [Google Scholar] [CrossRef]

- Wu, Y.; Al-Jumaili, S.J.; Al-Jumeily, D.; Bian, H. Prediction of the Nitrogen Content of Rice Leaf Using Multi-Spectral Images Based on Hybrid Radial Basis Function Neural Network and Partial Least-Squares Regression. Sensors 2022, 22, 8626. [Google Scholar] [CrossRef] [PubMed]

- Banerjee, B.P.; Joshi, S.; Thoday-Kennedy, E.; Pasam, R.K.; Tibbits, J.; Hayden, M.; Spangenberg, G.; Kant, S. High-Throughput Phenotyping Using Digital and Hyperspectral Imaging-Derived Biomarkers for Genotypic Nitrogen Response. J. Exp. Bot. 2020, 71, 4604–4615. [Google Scholar] [CrossRef] [PubMed]

- Fuentes-Pacheco, J.; Roman-Rangel, E.; Reyes-Rosas, A.; Magadan-Salazar, A.; Juarez-Lopez, P.; Ontiveros-Capurata, R.E.; Rendón-Mancha, J.M. A Curriculum Learning Approach to Classify Nitrogen Concentration in Greenhouse Basil Plants Using a Very Small Dataset and Low-Cost RGB Images. IEEE Access 2024, 12, 27411–27425. [Google Scholar] [CrossRef]

- Zhang, X.; Zou, T.; Lassaletta, L.; Mueller, N.D.; Tubiello, F.N.; Lisk, M.D.; Lu, C.; Conant, R.T.; Dorich, C.D.; Gerber, J.; et al. Quantification of Global and National Nitrogen Budgets for Crop Production. Nat. Food 2021, 2, 529–540. [Google Scholar] [CrossRef] [PubMed]

- Anas, M.; Liao, F.; Verma, K.K.; Sarwar, M.A.; Mahmood, A.; Chen, Z.L.; Li, Q.; Zeng, X.P.; Liu, Y.; Li, Y.R. Fate of Nitrogen in Agriculture and Environment: Agronomic, Eco-Physiological, and Molecular Approaches to Improve Nitrogen Use Efficiency. Biol. Res. 2020, 53, 47. [Google Scholar] [CrossRef]

- Li, H.; Zhang, J.; Xu, K.; Jiang, X.; Zhu, Y.; Cao, W.; Ni, J. Spectral Monitoring of Wheat Leaf Nitrogen Content Based on Canopy Structure Information Compensation. Comput. Electron. Agric. 2021, 190, 106434. [Google Scholar] [CrossRef]

- Zhao, B.; Zhang, Y.; Duan, A.; Liu, Z.; Xiao, J.; Liu, Z.; Qin, A.; Ning, D.; Li, S.; Ata-Ul-Karim, S.T. Estimating the Growth Indices and Nitrogen Status Based on Color Digital Image Analysis During Early Growth Period of Winter Wheat. Front. Plant Sci. 2021, 12, 619522. [Google Scholar] [CrossRef] [PubMed]

- Thompson, L.J.; Puntel, L.A. Transforming Unmanned Aerial Vehicle (UAV) and Multispectral Sensor into a Practical Decision Support System for Precision Nitrogen Management in Corn. Remote Sens. 2020, 12, 1597. [Google Scholar] [CrossRef]

- Ahsan, M.; Eshkabilov, S.; Cemek, B.; Küçüktopcu, E.; Lee, C.W.; Simsek, H. Deep Learning Models to Determine Nutrient Concentration in Hydroponically Grown Lettuce Cultivars (Lactuca sativa L.). Sustainability 2022, 14, 416. [Google Scholar] [CrossRef]

- Montgomery, K.; Henry, J.; Vann, M.; Whipker, B.E.; Huseth, A.; Mitasova, H. Measures of Canopy Structure from Low-Cost UAS for Monitoring Crop Nutrient Status. Drones 2020, 4, 36. [Google Scholar] [CrossRef]

- Fan, Y.; Feng, H.; Jin, X.; Yue, J.; Liu, Y.; Li, Z.; Feng, Z.; Song, X.; Yang, G. Estimation of the Nitrogen Content of Potato Plants Based on Morphological Parameters and Visible Light Vegetation Indices. Front. Plant Sci. 2022, 13, 1012070. [Google Scholar] [CrossRef] [PubMed]

- Mendoza-Tafolla, R.O.; Ontiveros-Capurata, R.-E.; Juarez-Lopez, P.; Alia-Tejacal, I.; Lopez-Martinez, V.; Ruiz-Alvarez, O. Nitrogen and Chlorophyll Status in Romaine Lettuce Using Spectral Indices from RGB Digital Images. Zemdirbyste-Agriculture 2021, 108, 79–86. [Google Scholar] [CrossRef]

- Yi, J.; Krusenbaum, L.; Unger, P.; Hüging, H.; Seidel, S.J.; Schaaf, G.; Gall, J. Deep Learning for Non-Invasive Diagnosis of Nutrient Deficiencies in Sugar Beet Using RGB Images. Sensors 2020, 20, 5893. [Google Scholar] [CrossRef]

- Paudel, A.; Brown, J.; Upadhyaya, P.; Asad, A.B.; Kshetri, S.; Karkee, M.; Davidson, J.R.; Grimm, C.; Thompson, A. Machine Vision Based Assessment of Fall Color Changes in Apple Trees: Exploring Relationship with Leaf Nitrogen Concentration. arXiv 2024. [Google Scholar] [CrossRef]

- Ramírez-Pedraza, A.; Salazar-Colores, S.; Terven, J.; Romero-González, J.-A.; González-Barbosa, J.-J.; Córdova-Esparza, D.-M. Nutritional Monitoring of Rhodena Lettuce via Neural Networks and Point Cloud Analysis. AgriEngineering 2024, 6, 3474–3493. [Google Scholar] [CrossRef]

- Lin, R.; Chen, H.; Wei, Z.; Li, Y.; Han, N. Diagnosis of Nitrogen Concentration of Maize Based on Sentinel-2 Images: A Case Study of the Hetao Irrigation District. In Proceedings of the 2022 International Conference on Computer Engineering and Artificial Intelligence (ICCEAI), Shijiazhuang, China, 22–24 July 2022; pp. 163–167. [Google Scholar] [CrossRef]

- Ampatzidis, L.; Costa, L.; Albrecht, U. Precision Nutrient Management Utilizing UAV Multispectral Imaging and Artificial Intelligence. In Proceedings of the XXXI International Horticultural Congress (IHC2022): III International Symposium on Mechanization, Precision Horticulture and Robotics: Precision and Digital Horticulture in Field Environments, Angers, France, 14 August 2022; Acta Horticulturae. pp. 321–330. [Google Scholar] [CrossRef]

- Kou, J.; Duan, L.; Yin, C.; Ma, L.; Chen, X.; Gao, P.; Lv, X. Predicting Leaf Nitrogen Content in Cotton with UAV RGB Images. Sustainability 2022, 14, 9259. [Google Scholar] [CrossRef]

- Patil, S.M.; Choudhary, S.; Kholová, J.; Anbazhagan, K.; Parnandi, Y.; Gattu, P.; Mallayee, S.; Prasad, K.S.V.V.; Kumar, V.P.; Rajalakshmi, P.; et al. UAV-Based Digital Field Phenotyping for Crop Nitrogen Estimation Using RGB Imagery. In Proceedings of the 2023 IEEE IAS Global Conference on Emerging Technologies (GlobConET), London, UK, 19–21 May 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Alkhaled, A.; Townsend, P.A.; Wang, Y. Remote Sensing for Monitoring Potato Nitrogen Status. Am. J. Potato Res. 2023, 100, 1–14. [Google Scholar] [CrossRef]

- Shinde, B.S.; Dani, A.R. The Origins of Digital Image Processing & Application Areas in Digital Image Processing Medical Images. Int. J. Eng. Res. Technol. 2018, 1, 66–71. [Google Scholar] [CrossRef]

- Allen, G. A Pixel is Not a Little Square! [Except When It Is]. Available online: https://greg.org/archive/2010/07/01/a-pixel-is-not-a-little-square-except-when-it-is.html (accessed on 20 April 2024).

- Jensen, J.R. Introductory Digital Image Processing, 3rd ed.; Prentice Hall: Saddle River, NJ, USA, 2005. [Google Scholar]

- Shajahan, S. Agricultural Field Applications of Digital Image Processing Using an Open-Source ImageJ Platform. Ph.D. Thesis, North Dakota State University of Agriculture and Applied Science, Fargo, ND, USA, 2019. [Google Scholar]

- Annadurai, S.; Shanmugalakshmi, R. Fundamentals of Digital Image Processing; Pearson Education India: Tamil Nadu, India, 2007; ISBN 81-7758-479-0. [Google Scholar]

- Jähne, B. Digital Image Processing, 6th ed.; Springer: Berlin, Germany, 2005; ISBN 9783540275633. [Google Scholar]

- Vibhute, A.; Bodhe, S.K. Applications of image processing in agriculture: A survey. IJCA 2012, 52, 34–40. [Google Scholar] [CrossRef]

- Ghosh, S.K. Digital Image Processing; Alpha Science International Ltd: Oxford, UK, 2013; ISBN 9781842659960. [Google Scholar]

- Yadav, A.; Yadav, P. Digital Image Processing, 1st ed.; Lakshmi Publications Pvt Ltd: New Delhi, India, 2009; ISBN 1-944131-46-9. [Google Scholar]

- Verma, R.; Ali, J. A comparative study of various types of image noise and efficient noise removal techniques. IJARCSSE 2013, 3, 617–622. [Google Scholar]

- Fan, L.; Zhang, F.; Fan, H.; Zhang, C. Brief review of image denoising techniques. Vis. Comput. Ind. Biomed. Art 2019, 2, 7. [Google Scholar] [CrossRef]

- You, H.; Zhou, M.; Zhang, J.; Peng, W.; Sun, C. Sugarcane Nitrogen Nutrition Estimation with Digital Images and Machine Learning Methods. Remote Sens. 2023, 13, 14939. [CrossRef]

- Wang, L.; Duan, Y.; Zhang, L.; Rehman, T.U.; Ma, D.; Jin, J. Precise Estimation of NDVI with a Simple NIR Sensitive RGB Camera and Machine Learning Methods for Corn Plants. Sensors 2020, 20, 3208. [Google Scholar] [CrossRef] [PubMed]

- Rover, D.P.B.; Mesquita, R.N.d.; Andrade, D.A.d.; Menezes, M.O.d. Nutritional Evaluation of Brachiaria brizantha cv. marandu using Convolutional Neural Networks. Int. Artif. 2020, 23, 85–96. [Google Scholar] [CrossRef]

- Meiyan, S.; Jinyu, Z.; Xiaohong, Y.; Xiaohe, G.; Baoguo, L.; Yuntao, M. A Spectral Decomposition Method for Estimating the Leaf Nitrogen Status of Maize by UAV-Based Hyperspectral Imaging. Comput. Electron. Agric. 2023, 212, 108100. [Google Scholar] [CrossRef]

- Oliveira, R.A.; Marcato Junior, J.; Soares Costa, C.; Näsi, R.; Koivumäki, N.; Niemeläinen, O.; Kaivosoja, J.; Nyholm, L.; Pistori, H.; Honkavaara, E. Silage Grass Sward Nitrogen Concentration and Dry Matter Yield Estimation Using Deep Regression and RGB Images Captured by UAV. Agronomy 2022, 12, 1352. [Google Scholar] [CrossRef]

- Kolhar, S.; Jagtap, J.; Shastri, R. Deep Neural Networks for Classifying Nutrient Deficiencies in Rice Plants Using Leaf Images. IJCDS 2024, 15, 305–314. [Google Scholar] [CrossRef] [PubMed]

- Li, Z.; Zhou, X.; Cheng, Q.; Fei, S.; Chen, Z. A Machine-Learning Model Based on the Fusion of Spectral and Textural Features from UAV Multi-Sensors to Analyse the Total Nitrogen Content in Winter Wheat. Remote Sens. 2023, 15, 2152. [Google Scholar] [CrossRef]

- Wang, Y.; Feng, C.; Ma, Y.; Chen, X.; Lu, B.; Song, Y.; Zhang, Z.; Zhang, R. Estimation of Nitrogen Concentration in Walnut Canopies in Southern Xinjiang Based on UAV Multispectral Images. Agronomy 2023, 13, 1604. [Google Scholar] [CrossRef]

- Munir, S.; Seminar, K.B.; Sudradjat; Sukoco, H. The Application of Smart and Precision Agriculture (SPA) for Measuring Leaf Nitrogen Content of Oil Palm in Peat Soil Areas. In Proceedings of the 2023 International Conference on Computer Science, Information Technology and Engineering (ICCoSITE), Jakarta, Indonesia, 16 February 2023; pp. 650–655. [Google Scholar] [CrossRef]

- Budiman, R.; Seminar, K.B.; Sudredjat, É. Development of Soil Nitrogen Estimation System in Oil Palm Land with Sentinel-1 Image Analysis Approach. In Smart and Sustainable Agriculture, Communications in Computer and Information Science; Springer: Berlin/Heidelberg, Germany, 2021; pp. 153–165. [Google Scholar] [CrossRef]

- Jiang, J.; Wu, Y.; Liu, Q.; Liu, Y.; Cao, Q.; Tian, Y.; Zhu, Y.; Cao, W.; Liu, X. Developing an Efficiency and Energy-Saving Nitrogen Management Strategy for Winter Wheat Based on the UAV Multispectral Imagery and Machine Learning Algorithm. Precis. Agric. 2023, 24, 2019–2043. [Google Scholar] [CrossRef]

- Yang, Y.; Wei, X.; Wang, J.; Zhou, G.; Wang, J.; Jiang, Z.; Zhao, J.; Ren, Y. Prediction of Seedling Oilseed Rape Crop Phenotype by Drone-Derived Multimodal Data. Remote Sens. 2023, 15, 3951. [Google Scholar] [CrossRef]

- Nath, D.; Dutta, P.K.; Bhattacharya, A.K. Detection of Plant Diseases and Nutritional Deficiencies from Unhealthy Plant Leaves Using Machine Learning Techniques. In Proceedings of the 4th Smart Cities Symposium (SCS 2021), Online Conference, Bahrain, 21–23 November 2021; pp. 351–356. [Google Scholar] [CrossRef]

- Sari, Y.; Maulida, M.; Maulana, R.; Wahyudi, J.; Shalludin, A. Detection of Corn Leaves Nutrient Deficiency Using Support Vector Machine (SVM). In Proceedings of the 2021 4th International Conference of Computer and Informatics Engineering (IC2IE), Depok, Indonesia, 14–15 September 2021; pp. 396–400. [Google Scholar] [CrossRef]

- Li, W.; Wang, K.; Han, G.; Wang, H.; Tan, N.; Yan, Z. Integrated Diagnosis and Time-Series Sensitivity Evaluation of Nutrient Deficiencies in Medicinal Plant (Ligusticum chuanxiong Hort.) Based on UAV Multispectral Sensors. Front. Plant Sci. 2023, 13, 1092610. [Google Scholar] [CrossRef]

- Song, Y.; Li, S.; Liu, Z.; Zhang, Y.; Shen, N. Analysis on Chlorophyll Diagnosis of Wheat Leaves Based on Digital Image Processing and Feature Selection. TS 2022, 39, 381–387. [Google Scholar] [CrossRef]

- Chaparro, J.E.; Aedo, J.E.; Lumbreras Ruiz, F. Machine Learning for the Estimation of Foliar Nitrogen Content in Pineapple Crops Using Multispectral Images and Internet of Things (IoT) Platforms. J. Agric. Food Res. 2024, 18, 101208. [Google Scholar] [CrossRef]

- Yin, H.; Li, F.; Yang, H.; Di, Y.; Hu, Y.; Yu, K. Mapping Plant Nitrogen Concentration and Aboveground Biomass of Potato Crops from Sentinel-2 Data Using Ensemble Learning Models. Remote Sens. 2024, 16, 349. [Google Scholar] [CrossRef]

- Song, Y.; Meng, X.; Li, Y.; Liu, Z.; Zhang, H. An Integrative Approach for Mineral Nutrient Quantification in Dioscorea Leaves: Uniting Image Processing and Machine Learning. EBSCO 2023, 40, 1153. [Google Scholar] [CrossRef]

- Wu, T.; Li, Y.; Ge, Y.; Xi, S.; Ren, M.; Yuan, X.; Zhuang, C. Estimation of Nitrogen Content in Citrus Leaves Using Stacking Ensemble Learning. J. Phys. Conf. Ser. 2021, 2025, 012072. [Google Scholar] [CrossRef]

- Zhang, L.; Song, X.; Niu, Y.; Zhang, H.; Wang, A.; Zhu, Y.; Zhu, X.; Chen, L.; Zhu, Q. Estimating Winter Wheat Plant Nitrogen Content by Combining Spectral and Texture Features Based on a Low-Cost UAV RGB System throughout the Growing Season. Agriculture 2024, 14, 456. [Google Scholar] [CrossRef]

- Shikhar, S.; Ranjan, R.; Sa, A.; Srivastava, A.; Srivastava, Y.; Kumar, D.; Tamaskar, S.; Sobti, A. Evaluation of Computer Vision Pipeline for Farm-Level Analytics: A Case Study in Sugarcane. In Proceedings of the 7th ACM SIGCAS/SIGCHI Conference on Computing and Sustainable Societies, New Delhi, India, 8 July 2024; pp. 238–247. [Google Scholar] [CrossRef]

- Jaihuni, M.; Khan, F.; Lee, D.; Basak, J.K.; Bhujel, A.; Moon, B.E.; Park, J.; Kim, H.T. Determining Spatiotemporal Distribution of Macronutrients in a Cornfield Using Remote Sensing and a Deep Learning Model. IEEE Access 2021, 9, 30256–30266. [Google Scholar] [CrossRef]

- Begum, S.S.; Chitrasimha Chowdary, M.; Rishika Devi, T.; Nallamothu, V.P.; Jahnavi, Y.; Vijayender, R. Deep Learning-Based Nutrient Deficiency Symptoms in Plant Leaves Using Digital Images. In Proceedings of the 2023 Second International Conference on Advances in Computational Intelligence and Communication (ICACIC), Puducherry, India, 7–8 December 2023; pp. 1–5. [Google Scholar] [CrossRef]

- Hani, S.U.; Mallapur, S.V. Identification of NPK Deficiency in Toor Dal Leaf Using CNN Technique. In Proceedings of the 2023 International Conference on Integrated Intelligence and Communication Systems (ICIICS), Kalaburagi, India, 24–25 November 2023; pp. 1–5. [Google Scholar] [CrossRef]

- Mishra, A.K.; Tripathi, N.; Gupta, A.; Upadhyay, D.; Pandey, N.K. Prediction and Detection of Nutrition Deficiency Using Machine Learning. In Proceedings of the 2023 International Conference on Device Intelligence, Computing and Communication Technologies (DICCT), Dehradun, India, 17–18 March 2023; pp. 1–5. [Google Scholar] [CrossRef]

- Jia, W.B.; Wei, H.R.; Wei, Z.Y. Tomato Fertilizer Deficiency Classification and Fertilization Decision Model Based on Leaf Images and Deep Learning. In Proceedings of the International Conference on Computer, Artificial Intelligence, and Control Engineering (CAICE 2022), Zhuhai, China, 2 December 2022. [Google Scholar] [CrossRef]

- Pourdarbani, R.; Sabzi, S.; Rohban, M.H.; García-Mateos, G.; Arribas, J.I. Nondestructive Nitrogen Content Estimation in Tomato Plant Leaves by Vis-NIR Hyperspectral Imaging and Regression Data Models. Appl. Opt. 2021, 60, 9560–9569. [Google Scholar] [CrossRef] [PubMed]

- Du, L.; Jin, Z.; Chen, B.; Chen, B.; Gao, W.; Yang, J.; Shi, S.; Song, S.; Wang, M.; Gong, W.; et al. Application of Hyperspectral LiDAR on 3-D Chlorophyll-Nitrogen Mapping of Rohdea japonica in Laboratory. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 9667–9679. [Google Scholar] [CrossRef]

- Sharma, A.; Georgi, M.; Tregubenko, M.; Tselykh, A.; Tselykh, A. Enabling Smart Agriculture by Implementing Artificial Intelligence and Embedded Sensing. Comput. Ind. Eng. 2022, 165, 107936. [Google Scholar] [CrossRef]

- Hosseini, S.A.; Masoudi, H.; Sajadiye, S.M.; Mehdizadeh, S.A. Nitrogen Estimation in Sugarcane Fields from Aerial Digital Images Using Artificial Neural Network. Environ. Eng. Manag. J. 2021, 20, 713–723. [Google Scholar] [CrossRef]

- Wang, S.M.; Ma, J.H.; Zhao, Z.M.; Xuan, Y.M.; Ouyang, J.X.; Fan, D.M.; Yu, J.F.; Wang, X.C. Pixel-Class Prediction for Nitrogen Content of Tea Plants Based on Unmanned Aerial Vehicle Images Using Machine Learning and Deep Learning. Expert Syst. Appl. 2023, 227, 120351. [Google Scholar] [CrossRef]

- Gul, Z.; Bora, S. Exploiting Pre-Trained Convolutional Neural Networks for the Detection of Nutrient Deficiencies in Hydroponic Basil. Sensors 2023, 23, 5407. [Google Scholar] [CrossRef]

- Siva, K.P.M.E.; Vibin, K.C.; Kaarnika, A.; Ramkumar, T.R.; Priyanka, D. Revitalizing Paddy Yields with Computer Vision. In Proceedings of the 2024 2nd International Conference on Intelligent Data Communication Technologies and Internet of Things (IDCIoT), Bengaluru, India, 4–6 January 2024; pp. 745–749. [Google Scholar] [CrossRef]

- Priya, G.L.; Baskar, C.; Deshmane, S.S.; Adithya, C.; Das, S. Revolutionizing Holy-Basil Cultivation With AI-Enabled Hydroponics System. IEEE Access 2023, 11, 82624–82639. [Google Scholar] [CrossRef]

- Hui Chen, O.C.; Bong, C.H.; Lee, N.K. Benchmarking CNN Models for Black Pepper Diseases and Malnutrition Prediction. In Proceedings of the 2023 IEEE International Conference on Artificial Intelligence in Engineering and Technology (IICAIET), Kota Kinabalu, Malaysia, 12–14 September 2023; pp. 146–151. [Google Scholar] [CrossRef]

- Orka, N.A.; Haque, E.; Uddin, M.N.; Ahamed, T. Nutrispace: A Novel Color Space to Enhance Deep Learning Based Early Detection of Cucurbits Nutritional Deficiency. Comput. Electron. Agric. 2024, 225, 109296. [Google Scholar] [CrossRef]

- Bhavya, T.; Seggam, R.; Jatoth, R.K. Fertilizer Recommendation for Rice Crop Based on NPK Nutrient Deficiency Using Deep Neural Networks and Random Forest Algorithm. In Proceedings of the 2023 3rd International Conference on Artificial Intelligence and Signal Processing (AISP), Vijayawada, India, 18–20 March 2023; pp. 1–5. [Google Scholar] [CrossRef]

- Lee, C.J.; Yang, M.-D.; Tseng, H.-H.; Hsu, Y.-C.; Sung, Y.; Chen, W.-L. Single-Plant Broccoli Growth Monitoring Using Deep Learning with UAV Imagery. Comput. Electron. Agric. 2023, 207, 107739. [Google Scholar] [CrossRef]

- Han, M.K.A.; Watchareeruetai, U. Black Gram Plant Nutrient Deficiency Classification in Combined Images Using Convolutional Neural Network. In Proceedings of the 2020 8th International Electrical Engineering Congress (iEECON), Chiang Mai, Thailand, 4–6 March 2020; pp. 1–4. [Google Scholar] [CrossRef]

- Wang, C.; Ye, Y.; Tian, Y.; Yu, Z. Classification of Nutrient Deficiency in Rice Based on CNN Model with Reinforcement Learning Augmentation. In Proceedings of the 2021 International Symposium on Artificial Intelligence and its Application on Media (ISAIAM), Xi’an, China, 21–23 May 2021; pp. 107–111. [Google Scholar] [CrossRef]

- Hammouch, H.; Patil, S.; El Yacoubi, M.A.; Masner, J.; Kholová, J.; Choudhary, S.; Anbazhagan, K.; Vaněk, J.; Qin, H.; Stočes, M.; et al. Exploring Novel AI-Based Approaches for Plant Features Extraction in Image Datasets with Small Size: The Case Study of Nitrogen Estimation in Sorghum Using UAV-Based RGB Sensing. SSRN 2023. [Google Scholar] [CrossRef]

- Malounas, I.; Lentzou, D.; Xanthopoulos, G.; Fountas, S. Testing the Suitability of Automated Machine Learning, Hyperspectral Imaging and CIELAB Color Space for Proximal In Situ Fertilization Level Classification. Smart Agric. Technol. 2024, 8, 100437. [Google Scholar] [CrossRef]

- Radočaj, D.; Jurišić, M.; Gašparović, M. The Role of Remote Sensing Data and Methods in a Modern Approach to Fertilization in Precision Agriculture. Remote Sens. 2022, 14, 778. [Google Scholar] [CrossRef]

- Ghazal, S.; Kommineni, N.; Munir, A. Comparative Analysis of Machine Learning Techniques Using RGB Imaging for Nitrogen Stress Detection in Maize. AI 2024, 5, 1286–1300. [Google Scholar] [CrossRef]

- Sakthipriya, S.; Naresh, R. Precision Agriculture Based on Convolutional Neural Network in Rice Production Nutrient Management Using Machine Learning Genetic Algorithm. Eng. Appl. Artif. Intell. 2024, 130, 107682. [Google Scholar] [CrossRef]

- Abidi, M.H.; Chintakindi, S.; Rehman, A.U.; Mohammed, M.K. Elucidation of Intelligent Classification Framework for Hydroponic Lettuce Deficiency Using Enhanced Optimization Strategy and Ensemble Multi-Dilated Adaptive Networks. IEEE Access 2024, 12, 58406–58426. [Google Scholar] [CrossRef]

- Supreetha, S.; Premalathamma, R.; Manjula, S.H. Deep Learning Techniques to Detect Nutrient Deficiency in Rice Plants. In Proceedings of the 2024 International Conference on Inventive Computation Technologies (ICICT), Lalitpur, Nepal, 24–26 April 2024; pp. 699–705. [Google Scholar] [CrossRef]

- Islam, S.; Reza, M.N.; Ahmed, S.; Samsuzzaman; Lee, K.H.; Cho, Y.J.; Noh, D.H.; Chung, S.O. Nutrient Stress Symptom Detection in Cucumber Seedlings Using Segmented Regression and a Mask Region-Based Convolutional Neural Network Model. Agriculture 2024, 14, 1390. [Google Scholar] [CrossRef]

- Sunoj, S.; McRoberts, K.C.; Benson, M.; Ketterings, Q.M. Digital Image Analysis Estimates of Biomass, Carbon, and Nitrogen Uptake of Winter Cereal Cover Crops. Comput. Electron. Agric. 2021, 184, 106093. [Google Scholar] [CrossRef]

- Alibabaei, K.; Gaspar, P.D.; Lima, T.M.; Campos, R.M.; Girão, I.; Monteiro, J.; Lopes, C.M. A Review of the Challenges of Using Deep Learning Algorithms to Support Decision-Making in Agricultural Activities. Remote Sens. 2022, 14, 638. [Google Scholar] [CrossRef]

- Ranjbar, A.; Rahimikhoob, A.; Ebrahimian, H.; Varavipour, M. Determination of Critical Nitrogen Dilution Curve Based on Canopy Cover Data for Summer Maize. Commun. Soil Sci. Plant Anal. 2020, 51, 2244–2256. [Google Scholar] [CrossRef]

- Fu, Y.; Yang, G.; Li, Z.; Song, X.; Li, Z.; Xu, X.; Wang, P.; Zhao, C. Winter Wheat Nitrogen Status Estimation Using UAV-Based RGB Imagery and Gaussian Processes Regression. Remote Sens. 2020, 12, 3778. [Google Scholar] [CrossRef]

- Chang, L.; Li, D.; Hameed, M.K.; Yin, Y.; Huang, D.; Niu, Q. Using a Hybrid Neural Network Model DCNN–LSTM for Image-Based Nitrogen Nutrition Diagnosis in Muskmelon. Horticulturae 2021, 7, 489. [Google Scholar] [CrossRef]

- Sabzi, S.; Pourdarbani, R.; Rohban, M.H.; García-Mateos, G.; Arribas, J.I. Estimation of Nitrogen Content in Cucumber Plant (Cucumis Sativus L.) Leaves Using Hyperspectral Imaging Data with Neural Network and Partial Least Squares Regressions. Chemom. Intell. Lab. Syst. 2021, 217, 104404. [Google Scholar] [CrossRef]

- Baesso, M.M.; Leveghin, L.; Sardinha, E.J.D.S.; Oliveira, G.P.D.C.N.; Sousa, R.V.D. Deep Learning-Based Model for Classification of Bean Nitrogen Status Using Digital Canopy Imaging. Eng. Agríc. 2023, 43, e20230068. [Google Scholar] [CrossRef]

- Xu, S.; Xu, X.; Zhu, Q.; Meng, Y.; Yang, G.; Feng, H.; Yang, M.; Zhu, Q.; Xue, H.; Wang, B. Monitoring Leaf Nitrogen Content in Rice Based on Information Fusion of Multi-Sensor Imagery from UAV. Precis. Agric. 2023, 24, 2327–2349. [Google Scholar] [CrossRef]

- Zhang, J.; Xie, T.; Yang, C.; Song, H.; Jiang, Z.; Zhou, G.; Zhang, D.; Feng, H.; Xie, J. Segmenting Purple Rapeseed Leaves in the Field from UAV RGB Imagery Using Deep Learning as an Auxiliary Means for Nitrogen Stress Detection. Remote Sens. 2020, 12, 1403. [Google Scholar] [CrossRef]

- Iatrou, M.; Karydas, C.; Tseni, X.; Mourelatos, S. Representation Learning with a Variational Autoencoder for Predicting Nitrogen Requirement in Rice. Remote Sens. 2022, 14, 5978. [Google Scholar] [CrossRef]

- Rokhafrouz, M.; Latifi, H.; Abkar, A.A.; Wojciechowski, T.; Czechlowski, M.; Naieni, A.S.; Maghsoudi, Y.; Niedbała, G. Simplified and Hybrid Remote Sensing-Based Delineation of Management Zones for Nitrogen Variable Rate Application in Wheat. Agriculture 2021, 11, 1104. [Google Scholar] [CrossRef]

- Lekakis, E.; Perperidou, D.; Kotsopoulos, S.; Simeonidou, P. Producing Mid-Season Nitrogen Application Maps for Arable Crops, by Combining Sentinel-2 Satellite Images and Agrometeorological Data in a Decision Support System for Farmers. The Case of NITREOS. In Environmental Software Systems. Data Science in Action, Proceedings of the IFIP Advances in Information and Communication Technology, Cham, Switzerland, 29 January 2020; Athanasiadis, I.N., Frysinger, S.P., Schimak, G., Knibbe, W.J., Eds.; Springer: Berlin/Heidelberg, Germany; pp. 102–114. [Google Scholar] [CrossRef]

- Anitei, M.; Veres, C.; Pisla, A. Research on Challenges and Prospects of Digital Agriculture. In Proceedings of the 14th International Conference on Interdisciplinarity in Engineering—INTER-ENG 2020, Târgu Mureș, Romania, 19 January 2021; p. 67. [Google Scholar] [CrossRef]

- Janani, M.; Jebakumar, R. Detection and Classification of Groundnut Leaf Nutrient Level Extraction in RGB Images. Adv. Eng. Softw. 2023, 175, 103320. [Google Scholar] [CrossRef]

- Bahtiar, A.R.; Pranowo; Santoso, A.J.; Juhariah, J. Deep Learning Detected Nutrient Deficiency in Chili Plant. In Proceedings of the 2020 8th International Conference on Information and Communication Technology (ICoICT), Yogyakarta, Indonesia, 24–26 June 2020; pp. 1–4. [Google Scholar] [CrossRef]

- Guerrero, R.; Renteros, B.; Castaneda, R.; Villanueva, A.; Belupu, I. Detection of Nutrient Deficiencies in Banana Plants Using Deep Learning. In Proceedings of the 2021 IEEE International Conference on Automation/XXIV Congress of the Chilean Association of Automatic Control (ICA-ACCA), Valparaíso, Chile, 22–26 March 2021; pp. 1–7. [Google Scholar] [CrossRef]

- Manju, M.; Indira, P.P.; Shivaraj, S.; Kumar, P.R.; Shivesh, P.R. Smart Fields: Enhancing Agriculture with Machine Learning. In Proceedings of the 2024 2nd International Conference on Artificial Intelligence and Machine Learning Applications Theme: Healthcare and Internet of Things (AIMLA), Namakkal, India, 15–16 March 2024. [Google Scholar] [CrossRef]

- Alshihabi, O.; Piikki, K.; Söderström, M. CropSAT—A Decision Support System for Practical Use of Satellite Images in Precision Agriculture. In Advances in Smart Technologies Applications and Case Studies; El Moussati, A., Kpalma, K., Ghaouth Belkasmi, M., Saber, M., Guégan, S., Eds.; Lecture Notes in Electrical Engineering; Springer International Publishing: Cham, Switzerland, 2020; Volume 684, pp. 415–421. [Google Scholar] [CrossRef]

- Stansell, J.S.; Luck, J.D.; Smith, T.G.; Yu, H.; Rudnick, D.R.; Krienke, B.T. Leveraging Multispectral Imagery for Fertigation Timing Recommendations Through the N-time Automated Decision Support System. In Proceedings of the Autonomous Air and Ground Sensing Systems for Agricultural Optimization and Phenotyping VII, Orlando, FL, USA, 3 June 2022. [Google Scholar] [CrossRef]

- Sandra; Damayanti, R.; Inayah, Z. Nitrogen Fertilizer Prediction of Maize Plant with TCS3200 Sensor Based on Digital Image Processing. IOP Conf. Ser. Earth Environ. Sci. 2020, 515, 012014. [Google Scholar] [CrossRef]

| Keywords | Document Type | Database | Number of Results | After Screening and Selection |
|---|---|---|---|---|
| ‘Nitrogen’, ‘Machine Learning’, and ‘Computer Vision’ | Articles, proceedings papers, and early access | WoS | 26 | 9 |
| | | SCP | 26 | 9 |
| | | IEX | 24 | 5 |
| | | EV | 27 | 5 |
| ‘Nitrogen’, ‘Deep Learning’, and ‘RGB images’ | Articles, conference papers, and early access | WoS | 30 | 12 |
| | | SCP | 25 | 12 |
| | | IEX | 7 | 2 |
| | | EV | 14 | 8 |
| ‘Nitrogen’, ‘Agriculture’, and ‘Digital Image Processing’ | Conference articles, proceedings, dissertations, preprints, and journals | WoS | 61 | 24 |
| | | SCP | 7 | 3 |
| | | IEX | 9 | 1 |
| | | EV | 40 | 13 |
| ‘Nitrogen’, ‘Artificial Intelligence’, and ‘Images’ | Journal articles, conference articles, proceedings, preprints, and dissertations | WoS | 94 | 14 |
| | | SCP | 48 | 7 |
| | | IEX | 42 | 19 |
| | | EV | 91 | 36 |
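
As a rough illustration of how the screening figures above can be summarized, the following sketch totals the raw hits and the retained records for each keyword set. The counts are copied from the table; the dictionary layout and the `summarize` helper are illustrative assumptions. The totals do not account for records returned by more than one database, so they overstate the number of unique studies.

```python
# (database, number of results, retained after screening), copied from the table above.
screening = {
    "Nitrogen + Machine Learning + Computer Vision": [
        ("WoS", 26, 9), ("SCP", 26, 9), ("IEX", 24, 5), ("EV", 27, 5)],
    "Nitrogen + Deep Learning + RGB images": [
        ("WoS", 30, 12), ("SCP", 25, 12), ("IEX", 7, 2), ("EV", 14, 8)],
    "Nitrogen + Agriculture + Digital Image Processing": [
        ("WoS", 61, 24), ("SCP", 7, 3), ("IEX", 9, 1), ("EV", 40, 13)],
    "Nitrogen + Artificial Intelligence + Images": [
        ("WoS", 94, 14), ("SCP", 48, 7), ("IEX", 42, 19), ("EV", 91, 36)],
}


def summarize(rows):
    """Return (total results, total retained, retention rate) for one keyword set."""
    total = sum(results for _, results, _ in rows)
    kept = sum(retained for _, _, retained in rows)
    return total, kept, kept / total


for keywords, rows in screening.items():
    total, kept, rate = summarize(rows)
    print(f"{keywords}: {kept} of {total} retained ({rate:.0%})")
```

The retention rate is a simple ratio of retained to returned records per keyword set and says nothing about overlap between databases.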

| Crop | Image Type | Algorithm(s) | Best Performance | Reference |
|---|---|---|---|---|
| Winter wheat | RGB | SVM, Classification and Regression Trees, ANN, KNN, RF | R² = 0.62 (SVM) | [54] |
| Paddy | MS and RGB | Genetic CNN | Accuracy = 99% | [79] |
| Sugarcane | MS | CNN | Accuracy = 0.47 (N stress classifier) | [55] |
| Lettuce | RGB | CNN, RAN, and VGG-16 | Accuracy = 96% | [80] |
| Paddy | RGB | Pre-trained CNN models (InceptionV3, VGG16, VGG19, ResNet50, and ResNet152), SVM | Accuracy = 0.9952 (ResNet152 + SVM) | [81] |
| Basil | RGB | Curriculum by Smoothing technique with CNN (CS) and ResNet50V2 | Accuracy = 91.17% (CS) | [5] |
| Maize | RGB | ResNet50, EfficientNetB0, InceptionV3, DenseNet121, Vision Transformer model | Accuracy = 97% (EfficientNetB0) | [78] |
| Pineapple | MS | LR, SVM, decision tree, RF, XGBoost, AdaBoost, Lasso regressor, Ridge regressor, and MLP regressor | R² = 68% (XGBoost) | [50] |
| Broccoli | HS and CIELAB | PyCaret, PLS-DA | Accuracy = 1.00 (PyCaret) | [76] |
| Cucumber seedling | RGB | Mask R-CNN, ResNet50, FPN, RPN, GLCM | Precision = 93% (Mask R-CNN) | [82] |
| Lettuce | RGBD and RGB | SVM, NCA, DTC, and linear model with SGD | Accuracy = 90.87% (DTC) | [17] |
| Ash gourd, bitter gourd, and snake gourd | RGB | EfficientNetB0, MobileNetV2, and DenseNet121 | Accuracy = 90.62% (Nutrispace; a new colour space) | [70] |
| Paddy | RGB | ResNet50 | Accuracy = 97.22% | [67] |
| Paddy | RGB | Xception, Vision Transformer, and MLP-Mixer | Accuracy = 95.14% (Xception) | [39] |
| Apple | RGBD | K-means clustering, gradient boost | R² = 0.72 (gradient boost) | [16] |
| Potato | MS | RF, XGBoost, and stacking ensemble (KNN, PLSR, SVR, RF, GPR) | R² = 0.74 (stacking ensemble model) | [51] |
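
The table above reports two kinds of scores: the coefficient of determination (R²) for regression models that estimate nitrogen content, and accuracy for classifiers that assign a nitrogen status class. As a minimal sketch of how these two metrics are conventionally computed, the Python below uses made-up values. The function names and the sample data are illustrative assumptions and are not taken from any of the reviewed studies.

```python
from typing import Sequence


def r_squared(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(observed) != len(predicted) or len(observed) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    if ss_tot == 0:
        raise ValueError("observed values have zero variance")
    return 1.0 - ss_res / ss_tot


def accuracy(true_labels: Sequence[str], predicted_labels: Sequence[str]) -> float:
    """Fraction of samples whose predicted class matches the true class."""
    if len(true_labels) != len(predicted_labels) or len(true_labels) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


# Hypothetical leaf nitrogen concentrations (%), for illustration only.
observed_n = [2.0, 3.0, 4.0, 5.0]
predicted_n = [2.5, 2.5, 4.5, 4.5]
print(f"R^2 = {r_squared(observed_n, predicted_n):.2f}")

# Hypothetical nitrogen status labels, for illustration only.
true_status = ["deficient", "sufficient", "sufficient", "deficient"]
pred_status = ["deficient", "sufficient", "deficient", "deficient"]
print(f"Accuracy = {accuracy(true_status, pred_status):.2%}")
```

Because the table mixes fractional and percentage notation, an accuracy on the 0 to 1 scale can be multiplied by 100 before comparing it with the percentage entries. R² and accuracy measure different things, so scores for regression and classification studies are not directly comparable.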


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Konara, B.; Krishnapillai, M.; Galagedara, L. Recent Trends and Advances in Utilizing Digital Image Processing for Crop Nitrogen Management. Remote Sens. 2024, 16, 4514. https://doi.org/10.3390/rs16234514
