LPST-Det: Local-Perception-Enhanced Swin Transformer for SAR Ship Detection

Abstract

1. Introduction

- A strong backbone is necessary to extract object features more effectively.

- After feature extraction by the backbone, the integration and propagation of both semantic and location information in the feature pyramid network (FPN) need to be strengthened for more effective feature representation.

- Oriented bounding boxes are preferable to horizontal ones because they locate arbitrary-oriented objects more precisely and enclose less background.
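To make the last point concrete, the short Python sketch below measures how much background a horizontal box adds around an elongated, rotated target. It assumes an oriented box parameterized as (cx, cy, w, h, θ) with θ in radians, a common convention that is not necessarily the one LPST-Det uses; all function names are hypothetical.

```python
import math

def obb_corners(cx, cy, w, h, theta):
    """Return the four corners of an oriented box (cx, cy, w, h, theta in radians)."""
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    corners = []
    for dx, dy in ((-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)):
        # Rotate the corner offset about the box centre, then translate it.
        corners.append((cx + dx * cos_t - dy * sin_t, cy + dx * sin_t + dy * cos_t))
    return corners

def enclosing_hbb(corners):
    """Tightest axis-aligned box (xmin, ymin, xmax, ymax) containing all corners."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return min(xs), min(ys), max(xs), max(ys)

def background_fraction(w, h, theta):
    """Fraction of the enclosing horizontal box that the oriented box does not cover."""
    xmin, ymin, xmax, ymax = enclosing_hbb(obb_corners(0.0, 0.0, w, h, theta))
    hbb_area = (xmax - xmin) * (ymax - ymin)
    return 1.0 - (w * h) / hbb_area

if __name__ == "__main__":
    # A slender, ship-like box (length 100, width 10) at several headings.
    for deg in (0, 15, 30, 45):
        print(deg, round(background_fraction(100.0, 10.0, math.radians(deg)), 3))
```

At 0° the two boxes coincide, but for a 100 × 10 box rotated by 45° the enclosing horizontal box measures about 77.8 × 77.8, so roughly 83% of it (1 − 1000/6050 ≈ 0.835) is background, which is the clutter an oriented box avoids.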

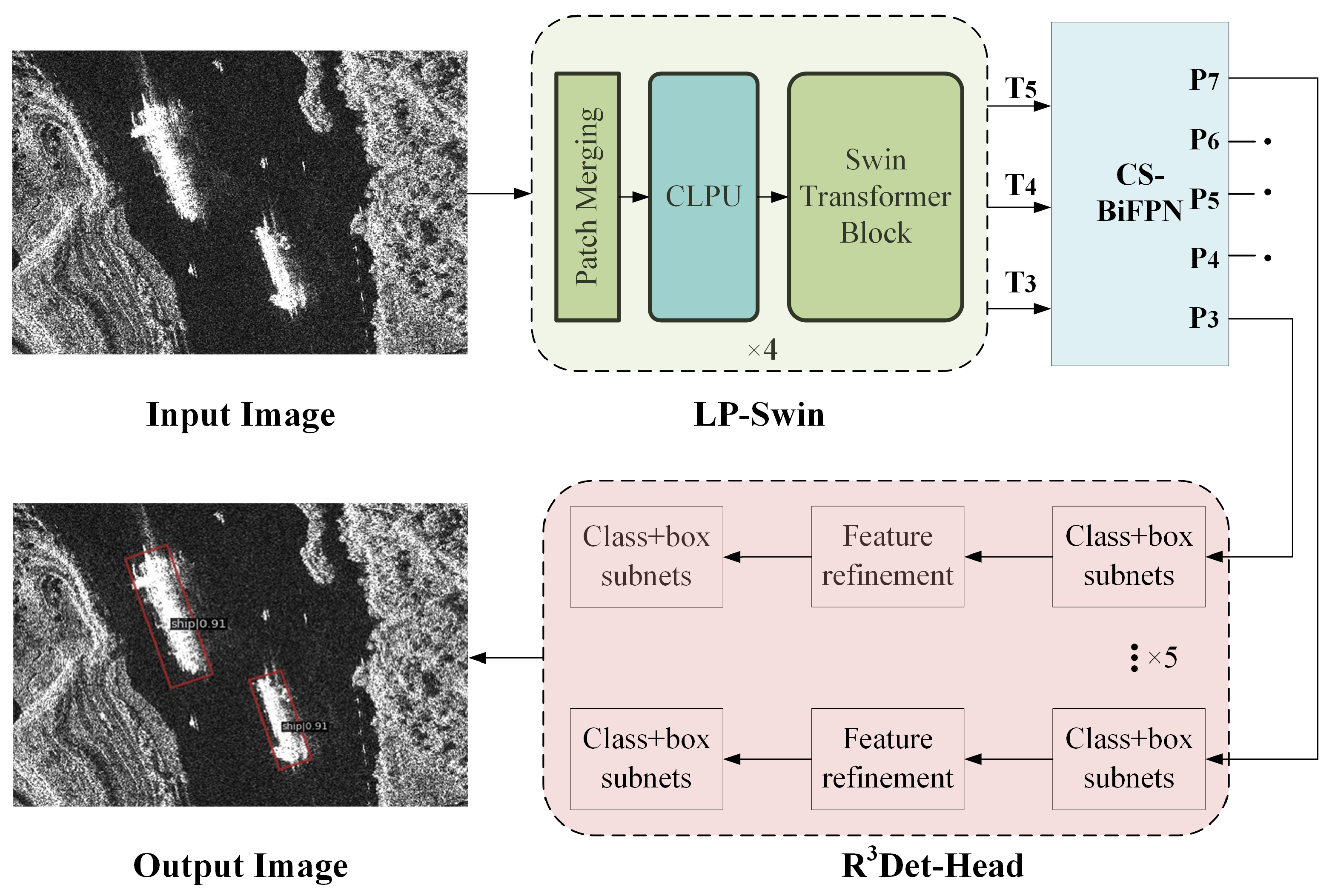

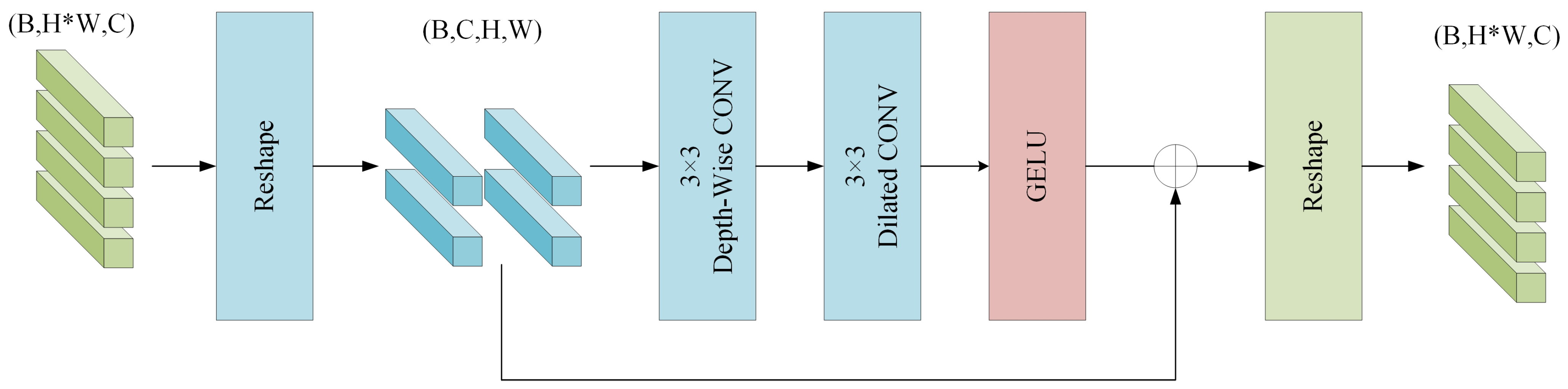

- We propose a local-perception-enhanced Swin Transformer backbone, called LP-Swin, which combines the strength of CNNs in capturing local information with the ability of the Swin Transformer to model long-range dependencies, so that more powerful object features can be extracted from SAR images. Specifically, we introduce a convolution-based local perception unit, termed CLPU, built from dilated and depth-wise convolutions, to strengthen the extraction of local information within the vision transformer structure and thereby improve overall detection performance.

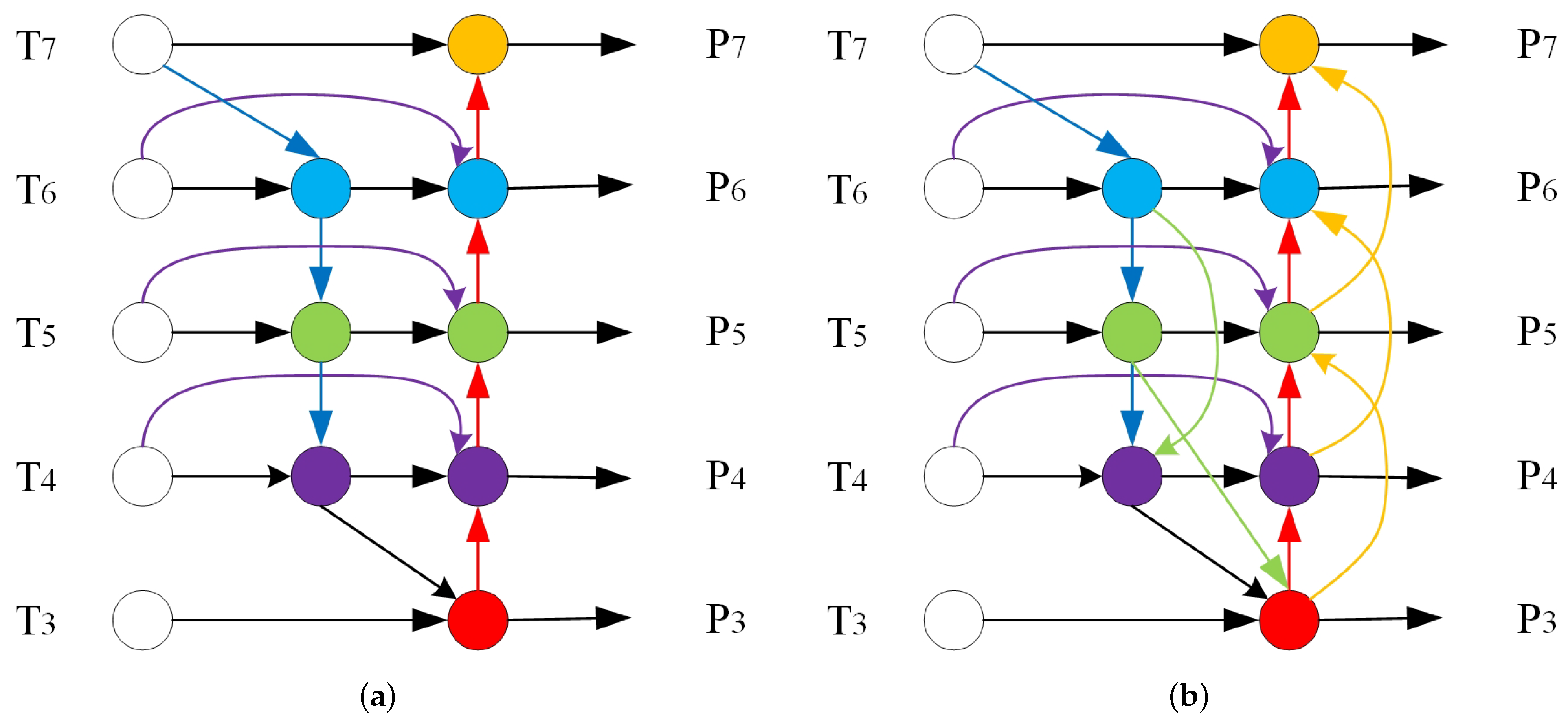

- We introduce a cross-scale bidirectional feature pyramid network, termed CS-BiFPN, to make better use of the features extracted by the backbone and to mitigate the challenges posed by ships of widely varying scales. Its longitudinal cross-scale connections strengthen the integration and propagation of both semantic and location information, which benefits both the classification and localization tasks.

- We construct a one-stage framework that integrates LP-Swin and CS-BiFPN for arbitrary-oriented SAR ship detection. Ablation experiments are conducted to assess the effect of each proposed component. Experiments on the public SSDD dataset show that our approach achieves detection results comparable to those of other advanced methods.

2. Literature Review

2.1. CNN-Based SAR Object Detection

2.2. Transformer in Object Detection

2.3. Multi-Scale Feature Representations

3. Materials and Methods

3.1. Overview of the Proposed Method

3.2. Local-Perception-Enhanced Swin Transformer Backbone

3.2.1. Structure of Local-Perception-Enhanced Swin Transformer

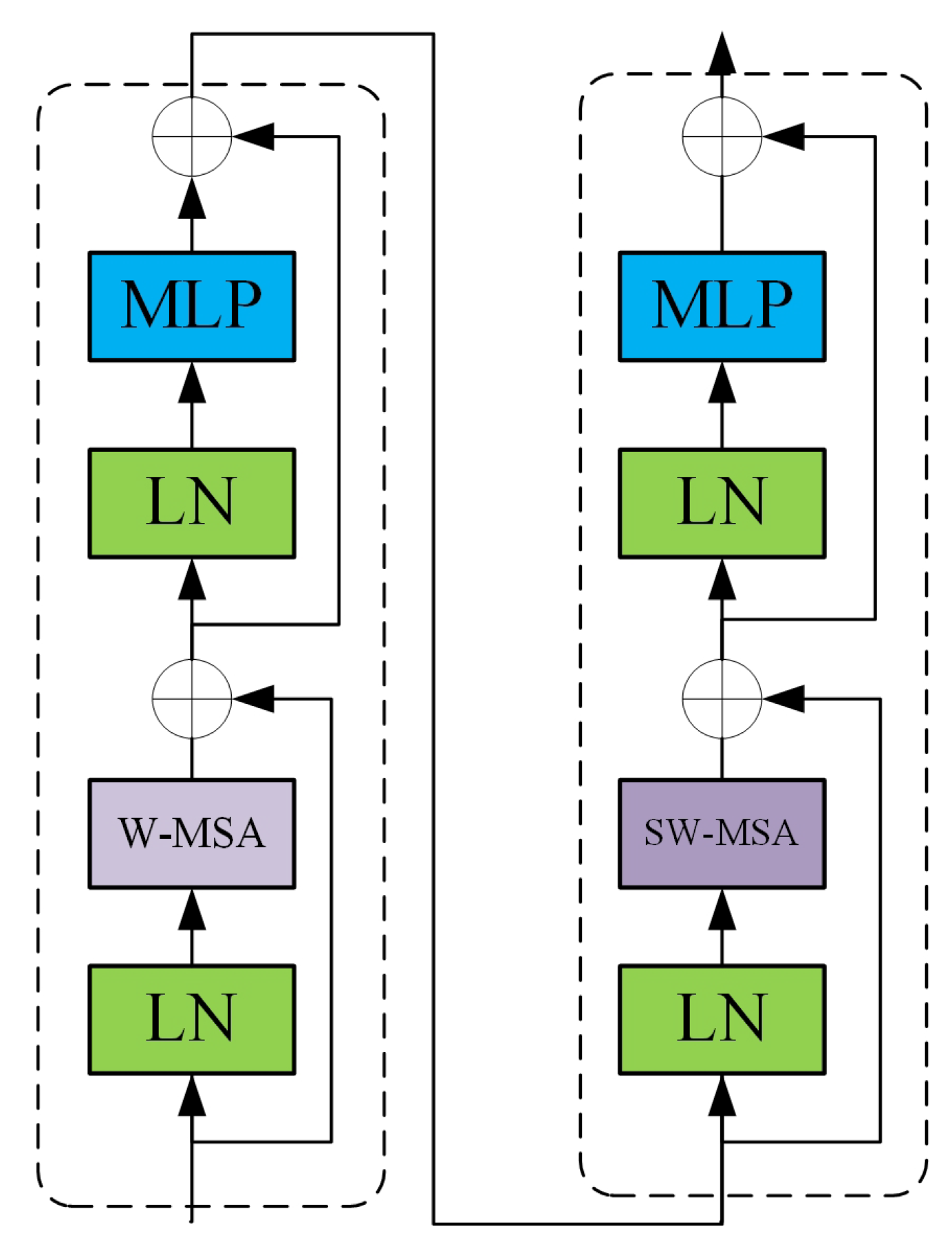

3.2.2. Swin Transformer Block

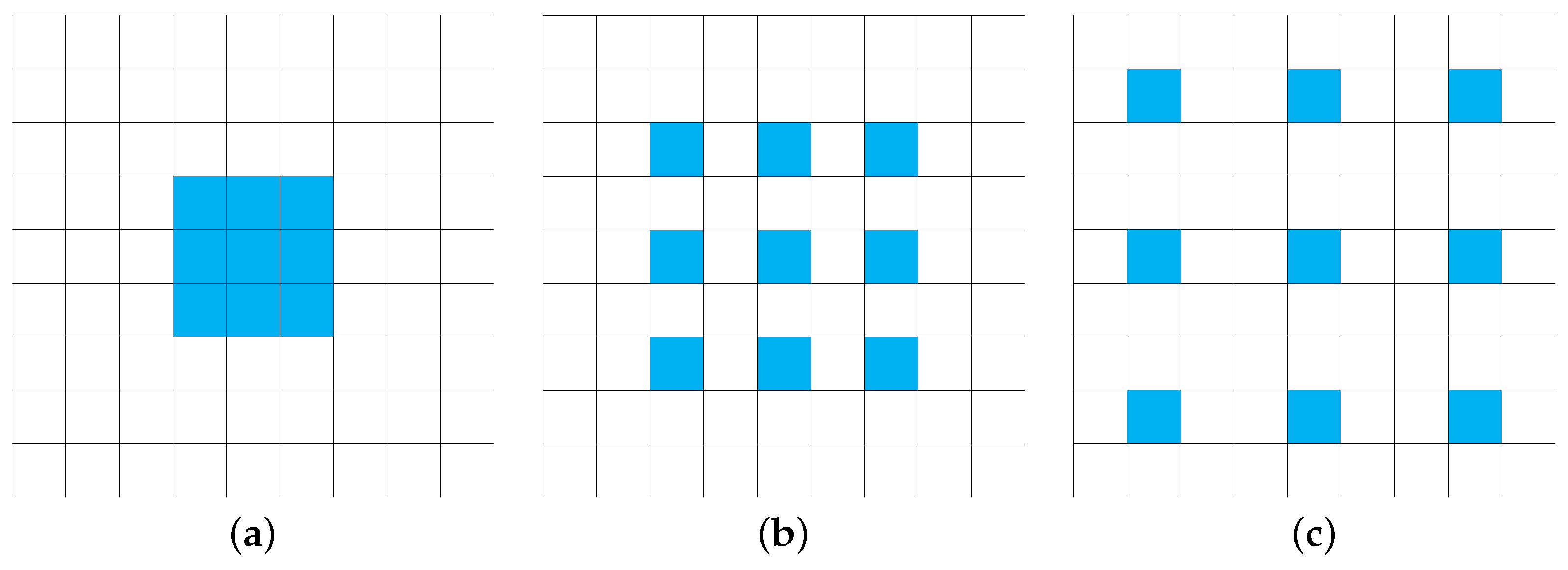
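For reference, the Swin Transformer [19] computes self-attention inside non-overlapping M × M windows and, in every second block, cyclically shifts the feature map by ⌊M/2⌋ pixels before partitioning, so that neighbouring windows exchange information. The NumPy sketch below covers only this partition and shift bookkeeping for a single (H, W, C) map; the attention itself, the relative position bias, and the mask that blocks attention between wrapped-around regions are omitted. It illustrates the generic Swin mechanism, not the LP-Swin block, and the function names are hypothetical. Swin-T uses M = 7; the example uses M = 4 to keep the arrays small.

```python
import numpy as np

def window_partition(x, m):
    """Split an (H, W, C) map into non-overlapping m x m windows -> (num_windows, m, m, C)."""
    h, w, c = x.shape
    assert h % m == 0 and w % m == 0, "H and W must be multiples of the window size"
    x = x.reshape(h // m, m, w // m, m, c)
    # Group the two window-grid axes together, then flatten them into one window axis.
    return x.transpose(0, 2, 1, 3, 4).reshape(-1, m, m, c)

def window_reverse(windows, m, h, w):
    """Inverse of window_partition: reassemble m x m windows into an (H, W, C) map."""
    c = windows.shape[-1]
    x = windows.reshape(h // m, w // m, m, m, c)
    return x.transpose(0, 2, 1, 3, 4).reshape(h, w, c)

def cyclic_shift(x, m):
    """Roll the map up and left by floor(m / 2) pixels, as done before shifted-window attention."""
    s = m // 2
    return np.roll(x, shift=(-s, -s), axis=(0, 1))

if __name__ == "__main__":
    feat = np.arange(8 * 8 * 2, dtype=np.float32).reshape(8, 8, 2)
    wins = window_partition(feat, 4)
    print(wins.shape)  # (4, 4, 4, 2)
    # Round trip: partitioning and reversing recovers the original map.
    print(np.array_equal(window_reverse(wins, 4, 8, 8), feat))  # True
    # Shifted block: roll, partition, (attention omitted), reverse, roll back.
    shifted = window_reverse(window_partition(cyclic_shift(feat, 4), 4), 4, 8, 8)
    print(np.array_equal(np.roll(shifted, shift=(2, 2), axis=(0, 1)), feat))  # True
```

Because attention never crosses a window boundary, the cost grows linearly with image size; the alternating shift is what lets information propagate across windows over successive blocks.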

3.2.3. Convolution-based Local Perception Unit
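The Introduction describes CLPU as a combination of dilated and depth-wise convolution. The NumPy sketch below shows what such a combination computes: a depth-wise convolution (one filter per channel, no mixing across channels) whose taps are spaced `dilation` pixels apart, with "same" zero padding and a residual connection. Fusing both properties into a single 3 × 3 operator, the residual path, and the default d = 3 (the best-performing rate in the dilation-rate ablation) are assumptions made for illustration; the function names are hypothetical and this is not the authors' CLPU.

```python
import numpy as np

def depthwise_dilated_conv2d(x, kernels, dilation=1):
    """Depth-wise 2D cross-correlation with dilation and 'same' zero padding.

    x:        (C, H, W) feature map
    kernels:  (C, k, k) one k x k filter per channel (k odd)
    dilation: spacing between kernel taps
    """
    c, h, w = x.shape
    k = kernels.shape[-1]
    pad = dilation * (k - 1) // 2  # keeps the output at H x W
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros_like(x, dtype=np.float64)
    for i in range(k):
        for j in range(k):
            # Each tap reads a shifted view of the padded input, `dilation` pixels apart.
            di, dj = i * dilation, j * dilation
            out += kernels[:, i, j][:, None, None] * xp[:, di:di + h, dj:dj + w]
    return out

def clpu_sketch(x, kernels, dilation=3):
    """Hypothetical local perception unit: dilated depth-wise conv plus a residual path."""
    return x + depthwise_dilated_conv2d(x, kernels, dilation)

if __name__ == "__main__":
    x = np.zeros((1, 9, 9))
    x[0, 4, 4] = 1.0  # a single bright pixel
    y = depthwise_dilated_conv2d(x, np.ones((1, 3, 3)), dilation=3)
    print(np.argwhere(y[0] > 0).tolist())
    # [[1, 1], [1, 4], [1, 7], [4, 1], [4, 4], [4, 7], [7, 1], [7, 4], [7, 7]]
```

The single bright pixel spreads to a 3 × 3 grid of positions spaced 3 pixels apart, so the nine weights cover a 7 × 7 neighbourhood. In PyTorch the same operator would be `nn.Conv2d(C, C, kernel_size=3, padding=d, dilation=d, groups=C)`, where `groups=C` makes it depth-wise.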

3.3. Cross-Scale Bi-Directional Feature Pyramid Network
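CS-BiFPN builds on BiFPN [22], in which every fusion node combines its inputs with learnable non-negative weights using "fast normalized fusion", O = Σᵢ wᵢ·Iᵢ / (ε + Σⱼ wⱼ), where a ReLU keeps each wᵢ ≥ 0 and ε = 10⁻⁴. The NumPy sketch below shows that fusion rule on one top-down and one bottom-up node, with nearest-neighbour upsampling and 2 × 2 max pooling (assumed here) to bring neighbouring pyramid levels to a common size. The convolution that follows each fusion, and the extra longitudinal cross-scale connections that distinguish CS-BiFPN, are not reproduced; the function names are hypothetical.

```python
import numpy as np

EPS = 1e-4  # epsilon of fast normalized fusion in EfficientDet

def fast_normalized_fusion(inputs, raw_weights, eps=EPS):
    """Weighted sum of same-shaped feature maps with ReLU-clipped, normalized weights."""
    w = np.maximum(np.asarray(raw_weights, dtype=np.float64), 0.0)  # ReLU keeps weights >= 0
    w = w / (eps + w.sum())
    return sum(wi * x for wi, x in zip(w, inputs))

def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def downsample2x(x):
    """2 x 2 max pooling with stride 2 on a (C, H, W) map with even H and W."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    p3, p4, p5 = (rng.standard_normal((8, s, s)) for s in (32, 16, 8))
    # Top-down path: coarse levels pass semantic context to finer ones.
    p4_td = fast_normalized_fusion([p4, upsample2x(p5)], [1.0, 1.0])
    p3_out = fast_normalized_fusion([p3, upsample2x(p4_td)], [1.0, 1.0])
    # Bottom-up path: fine levels pass location detail back to coarser ones.
    p4_out = fast_normalized_fusion([p4, p4_td, downsample2x(p3_out)], [1.0, 1.0, 1.0])
    print(p4_td.shape, p3_out.shape, p4_out.shape)  # (8, 16, 16) (8, 32, 32) (8, 16, 16)
```

Normalizing by the weight sum rather than a softmax keeps each fused map a bounded convex-style combination of its inputs while avoiding the cost of exponentials, which is the reason EfficientDet gives for this choice.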

3.4. Evaluation Metrics
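The results tables report AP together with precision and recall. As a reference point, the sketch below computes cumulative precision and recall and an all-point interpolated AP (the area under the monotone precision envelope, as in the PASCAL VOC protocol from 2010 onward) from detections sorted by descending confidence. The AP variant and IoU threshold used for LPST-Det are not stated in this section, so both are assumptions; matching detections to ground truth (for example with a rotated-box IoU) is taken as already done and represented by the boolean list `is_tp`. Function names are hypothetical.

```python
def precision_recall(is_tp, num_gt):
    """Cumulative precision and recall for detections sorted by descending score."""
    tp = fp = 0
    precisions, recalls = [], []
    for hit in is_tp:
        if hit:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_gt)
    return precisions, recalls

def average_precision(is_tp, num_gt):
    """All-point interpolated AP: area under the precision envelope over recall."""
    precisions, recalls = precision_recall(is_tp, num_gt)
    # Prepend a point at recall 0 so the first rectangle starts from the origin.
    mrec = [0.0] + recalls
    mpre = [0.0] + precisions
    # Envelope: precision at each recall becomes the best precision at any higher recall.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas; steps where recall does not change contribute zero.
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))

if __name__ == "__main__":
    ranked_hits = [True, True, False, True, False]  # 5 detections, 4 ground-truth ships
    p, r = precision_recall(ranked_hits, 4)
    print(p[-1], r[-1])                          # 0.6 0.75
    print(average_precision(ranked_hits, 4))     # 0.6875
```

Here the final precision (3/5) and recall (3/4) describe a single operating point, whereas AP summarizes the whole ranked list: 0.25 · 1 + 0.25 · 1 + 0.25 · 0.75 = 0.6875.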

4. Results and Discussion

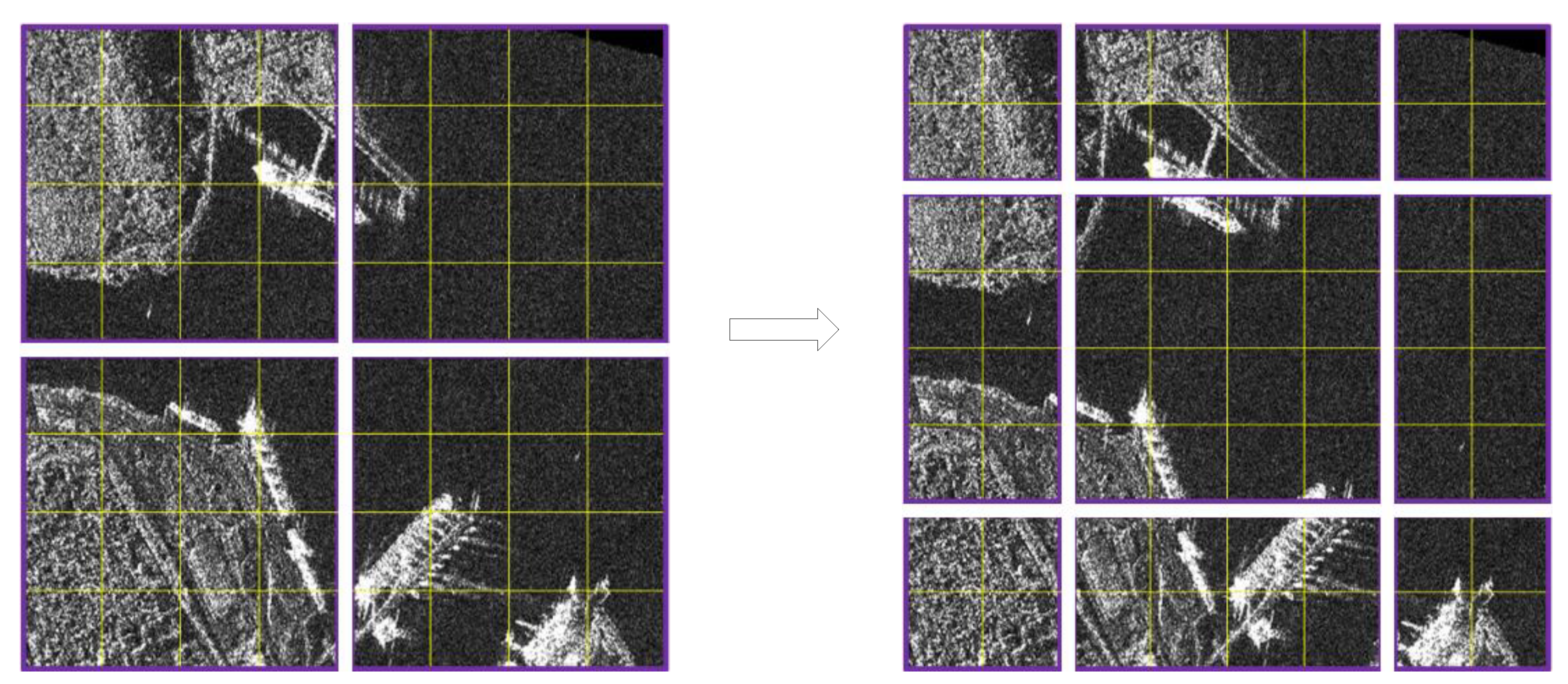

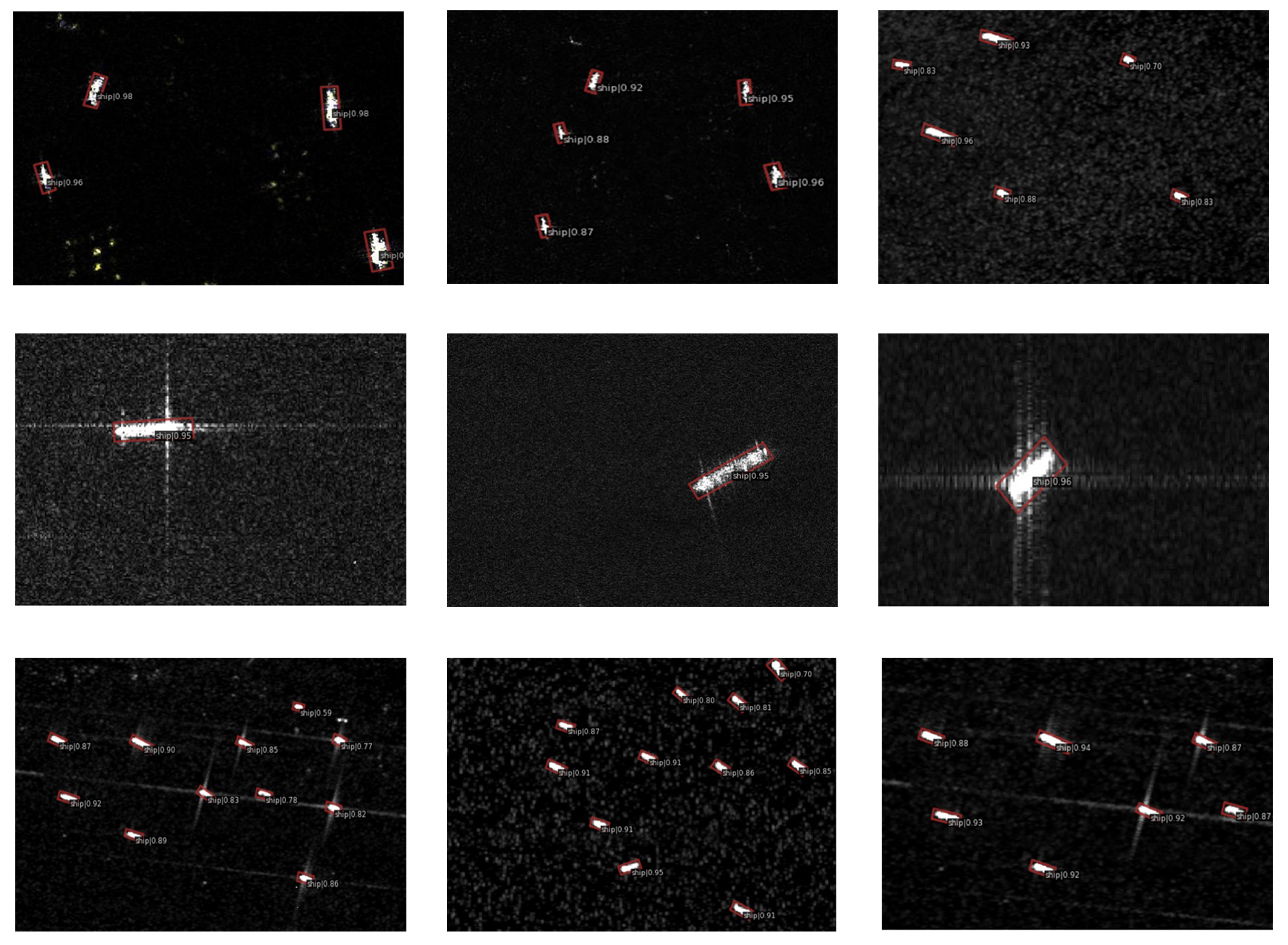

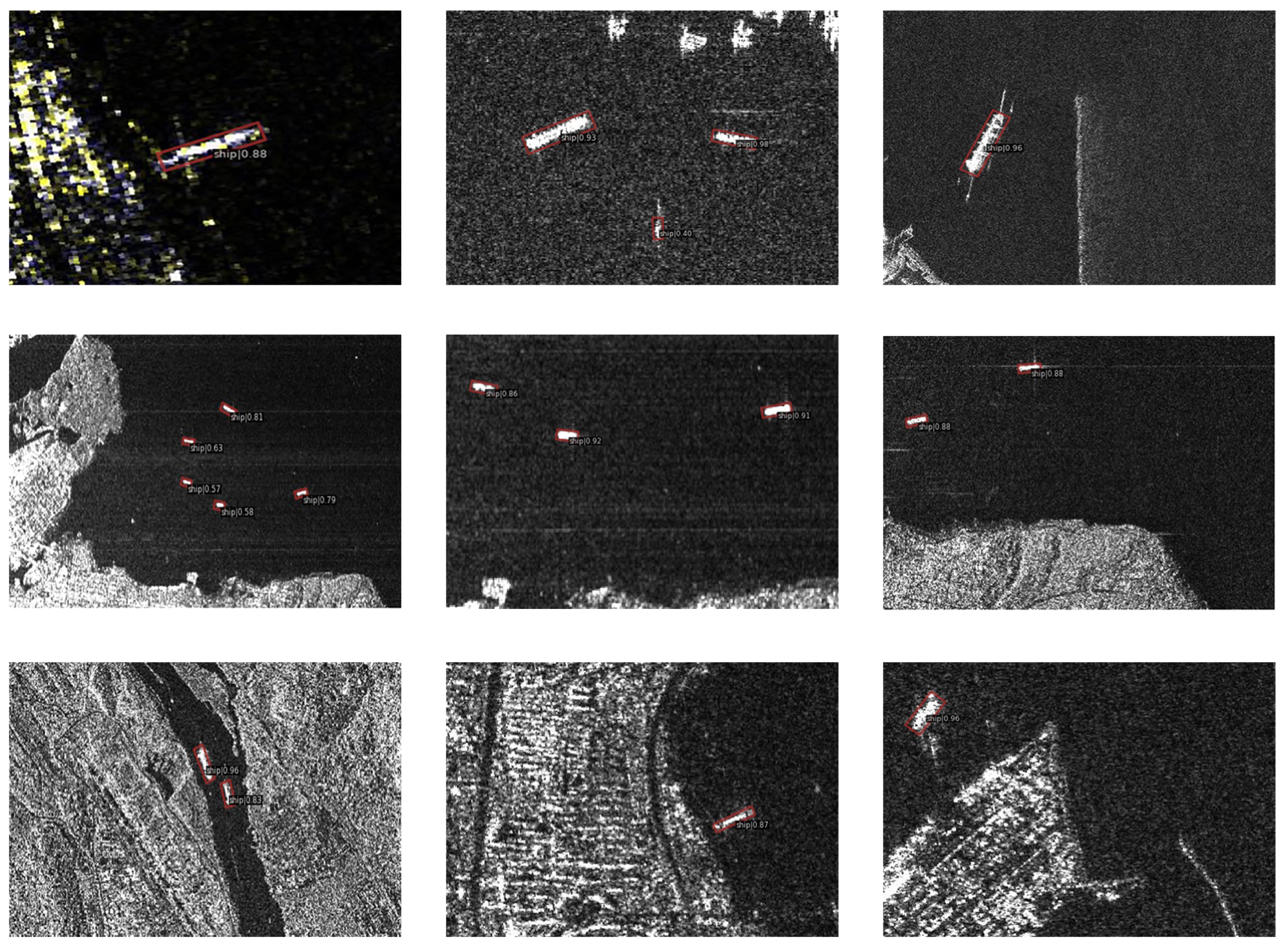

4.1. Dataset and Implementation Details

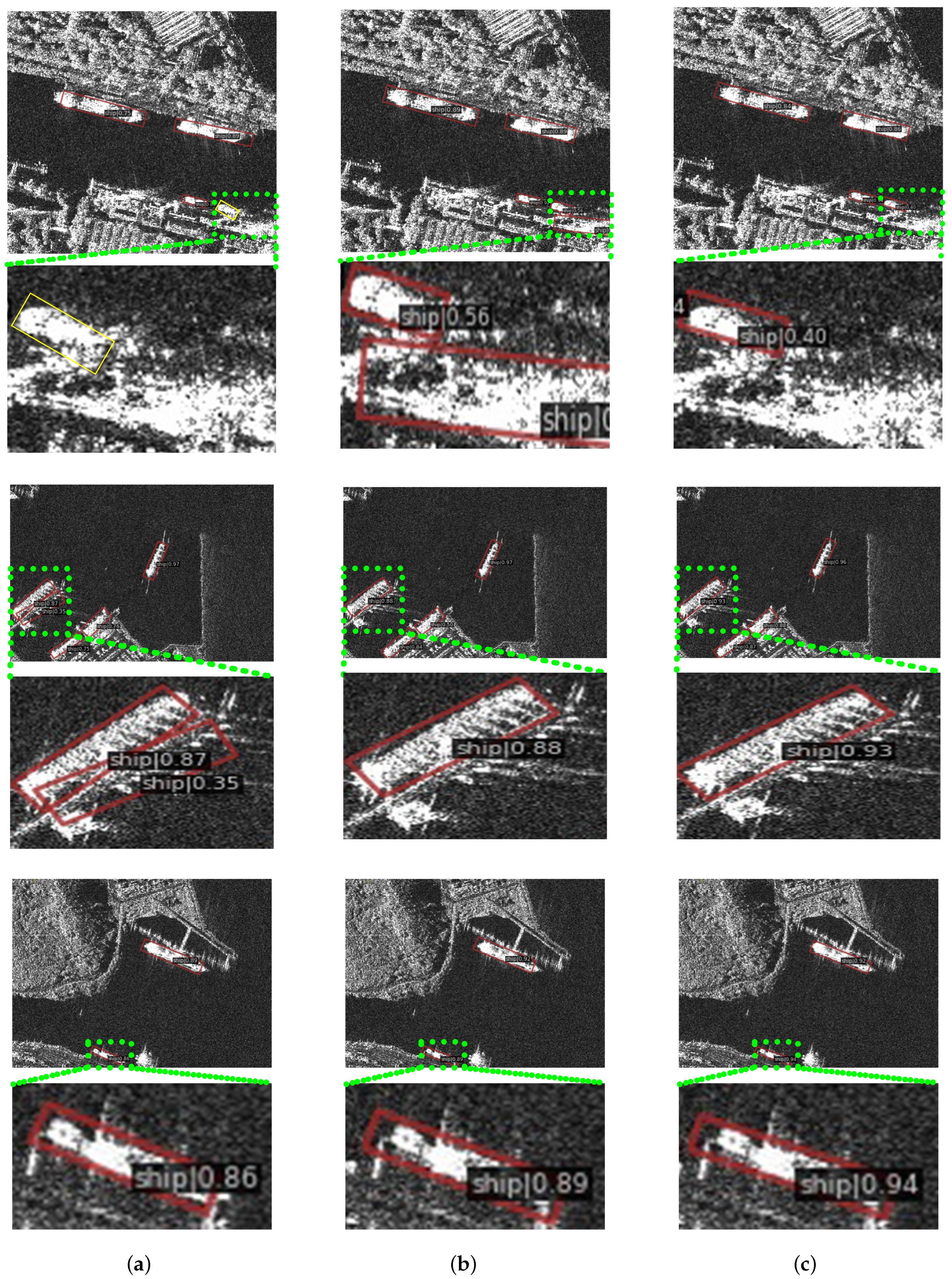

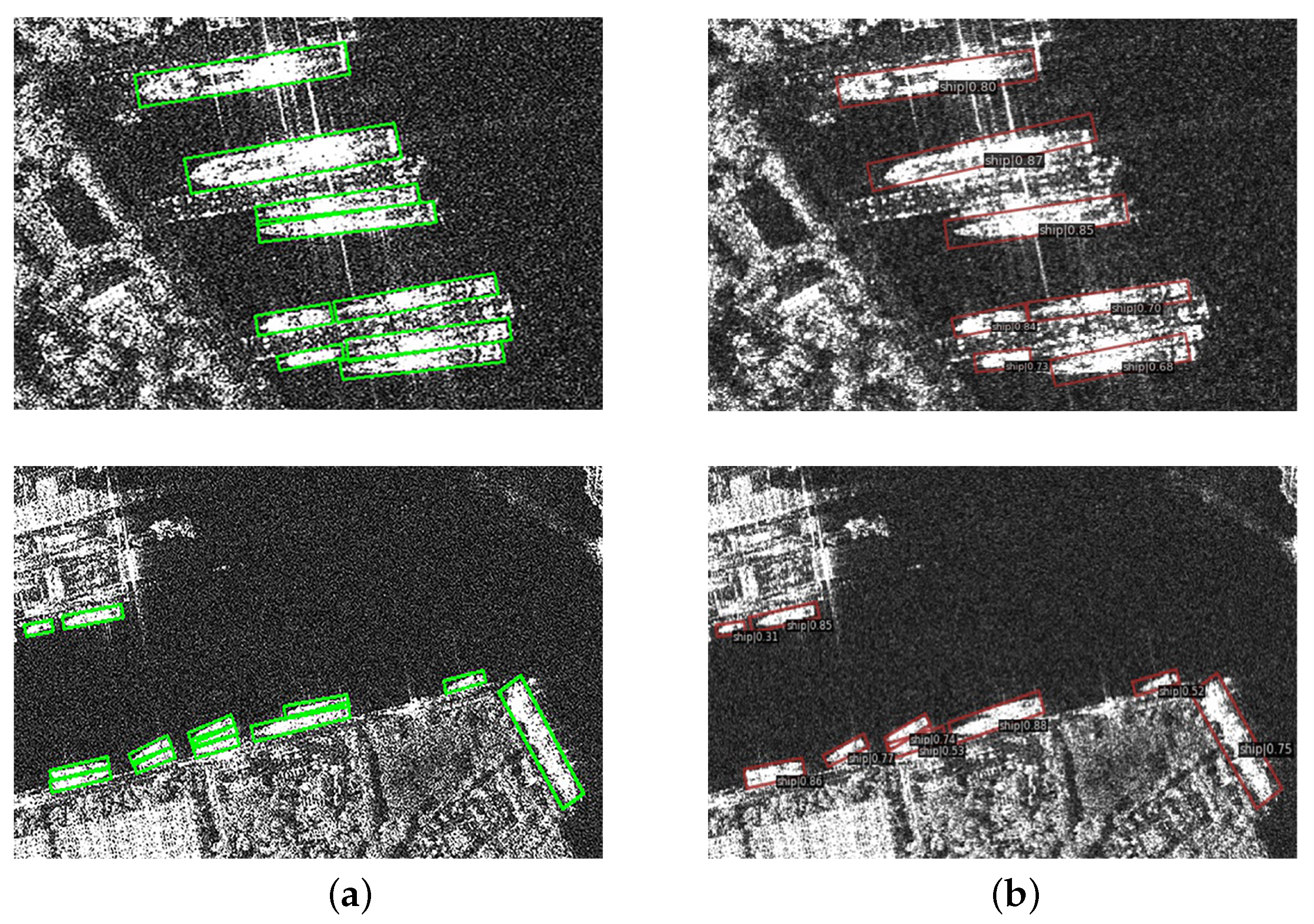

4.2. Experimental Results on the SSDD

4.3. Ablation Experiments

4.3.1. Experiments for the Dilation Rate in CLPU
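The dilation rate d sets the spacing between kernel taps: a k × k kernel with dilation d spans k + (k − 1)(d − 1) pixels per side while keeping only k² weights. Assuming a 3 × 3 kernel, the usual size for depth-wise convolutions (the CLPU kernel size is not given in this section), the four rates tested here correspond to:

$$
k_{\mathrm{eff}} = k + (k-1)(d-1), \qquad k = 3:\quad d = 1, 2, 3, 4 \;\Rightarrow\; k_{\mathrm{eff}} = 3, 5, 7, 9.
$$

Under that assumption, the best-performing setting in the dilation-rate ablation, d = 3, lets each nine-weight kernel cover a 7 × 7 neighbourhood, while d = 4 spreads the same nine taps over 9 × 9 and samples the local neighbourhood more sparsely.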

4.3.2. Experiments for LP-Swin Backbone

4.3.3. Experiments for CS-BiFPN Network

4.4. Limitation and Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Liu, T.; Zhang, J.; Gao, G.; Yang, J.; Marino, A. CFAR ship detection in polarimetric synthetic aperture radar images based on whitening filter. IEEE Trans. Geosci. Remote Sens. 2019, 58, 58–81.

2. Zhang, X.; Wang, H.; Xu, C.; Lv, Y.; Fu, C.; Xiao, H.; He, Y. A lightweight feature optimizing network for ship detection in SAR image. IEEE Access 2019, 7, 141662–141678.

3. Schwegmann, C.P.; Kleynhans, W.; Salmon, B.P.; Mdakane, L.W.; Meyer, R.G.V. Very deep learning for ship discrimination in synthetic aperture radar imagery. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 104–107.

4. Shao, Z.; Zhang, X.; Zhang, T.; Xu, X.; Zeng, T. RBFA-Net: A Rotated Balanced Feature-Aligned Network for Rotated SAR Ship Detection and Classification. Remote Sens. 2022, 14, 3345.

5. Gao, G.; Liu, L.; Zhao, L.; Shi, G.; Kuang, G. An adaptive and fast CFAR algorithm based on automatic censoring for target detection in high-resolution SAR images. IEEE Trans. Geosci. Remote Sens. 2008, 47, 1685–1697.

6. Cao, X.; Wu, C.; Yan, P.; Li, X. Linear SVM classification using boosting HOG features for vehicle detection in low-altitude airborne videos. In Proceedings of the 2011 IEEE International Conference on Image Processing (ICIP), Brussels, Belgium, 11–14 September 2011; pp. 2421–2424.

7. Zhou, G.; Tang, Y.; Zhang, W.; Liu, W.; Jiang, Y.; Gao, E. Shadow Detection on High-Resolution Digital Orthophoto Map (DOM) using Semantic Matching. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–20.

8. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

9. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91–99.

10. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE ICCV, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

11. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

12. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2999–3007.

13. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

14. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–27 July 2017; pp. 6517–6525.

15. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

16. Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO series in 2021. arXiv 2021, arXiv:2107.08430.

17. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

18. Chen, S.-Q.; Zhan, R.-H.; Zhang, J. Robust single stage detector based on two-stage regression for SAR ship detection. In Proceedings of the International Conference on Innovation in Artificial Intelligence (ICIAI), Shanghai, China, 9–12 March 2018; pp. 169–174.

19. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. arXiv 2021, arXiv:2103.14030.

20. Yu, F.; Koltun, V. Multi-Scale Context Aggregation by Dilated Convolutions. arXiv 2015, arXiv:1511.07122.

21. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

22. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10778–10787.

23. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

24. Yang, X.; Liu, Q.; Yan, J.; Li, A.; Zhang, Z.; Yu, G. R3Det: Refined single-stage detector with feature refinement for rotating object. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; pp. 3163–3171.

25. Li, J.; Qu, C.; Shao, J. Ship detection in SAR images based on an improved faster R-CNN. In Proceedings of the SAR in Big Data Era: Models, Methods and Applications, Beijing, China, 13–14 November 2017; pp. 1–6.

26. Zhang, T.; Zhang, X. High-speed ship detection in SAR images based on a grid convolutional neural network. Remote Sens. 2019, 11, 1206.

27. Jiao, J.; Zhang, Y.; Sun, H.; Yang, X.; Gao, X.; Hong, W.; Fu, K.; Sun, X. A densely connected end-to-end neural network for multi-scale and multiscene SAR ship detection. IEEE Access 2018, 6, 20881–20892.

28. Xu, X.; Zhang, X.; Zhang, T. Lite-YOLOv5: A Lightweight Deep Learning Detector for On-Board Ship Detection in Large-Scene Sentinel-1 SAR Images. Remote Sens. 2022, 14, 1018.

29. Xu, X.; Zhang, X.; Shao, Z.; Shi, J.; Wei, S.; Zhang, T.; Zeng, T. A Group-Wise Feature Enhancement-and-Fusion Network with Dual-Polarization Feature Enrichment for SAR Ship Detection. Remote Sens. 2022, 14, 5276.

30. Yasir, M.; Zhan, L.; Liu, S.; Wan, J.; Hossain, M.S.; Isiacik Colak, A.T.; Liu, M.; Islam, Q.U.; Raza Mehdi, S.; Yang, Q. Instance segmentation ship detection based on improved Yolov7 using complex background SAR images. Front. Mar. Sci. 2023, 10, 1113669.

31. Zheng, Y.; Liu, P.; Qian, L.; Qin, S.; Liu, X.; Ma, Y.; Cheng, G. Recognition and Depth Estimation of Ships Based on Binocular Stereo Vision. J. Mar. Sci. Eng. 2022, 10, 1153.

32. Pan, Z.; Yang, R.; Zhang, Z. MSR2N: Multi-stage rotational region based network for arbitrary-oriented ship detection in SAR images. Sensors 2020, 20, 2340.

33. Wang, J.; Lu, C.; Jiang, W. Simultaneous ship detection and orientation estimation in SAR images based on attention module and angle regression. Sensors 2018, 18, 2851.

34. An, Q.; Pan, Z.; Liu, L.; You, H. DRBox-v2: An Improved Detector with Rotatable Boxes for Target Detection in SAR Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8333–8349.

35. Chen, S.; Zhang, J.; Zhan, R. R2FA-Det: Delving into High-Quality Rotatable Boxes for Ship Detection in SAR Images. Remote Sens. 2020, 12, 2031.

36. Yang, M.; Wang, H.; Hu, K.; Yin, G.; Wei, Z. IA-Net: An Inception–Attention-Module-Based Network for Classifying Underwater Images From Others. IEEE J. Ocean. Eng. 2022, 47, 704–717.

37. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229.

38. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. In Proceedings of the 9th International Conference on Learning Representations (ICLR), Virtual Event, Austria, 3–7 May 2021.

39. Peng, Z.; Huang, W.; Gu, S.; Xie, L.; Wang, Y.; Jiao, J.; Ye, Q. Conformer: Local features coupling global representations for visual recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 367–376.

40. Guo, J.; Han, K.; Wu, H.; Tang, Y.; Chen, X.; Wang, Y.; Xu, C. CMT: Convolutional neural networks meet vision transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12175–12185.

41. Xia, R.; Chen, J.; Huang, Z.; Wan, H.; Wu, B.; Sun, L.; Yao, B.; Xiang, H.; Xing, M. CRTransSar: A Visual Transformer Based on Contextual Joint Representation Learning for SAR Ship Detection. Remote Sens. 2022, 14, 1488.

42. Shi, H.; Chai, B.; Wang, Y.; Chen, L. A Local-Sparse-Information-Aggregation Transformer with Explicit Contour Guidance for SAR Ship Detection. Remote Sens. 2022, 14, 5247.

43. Li, K.; Zhang, M.; Xu, M.; Tang, R.; Wang, L.; Wang, H. Ship Detection in SAR Images Based on Feature Enhancement Swin Transformer and Adjacent Feature Fusion. Remote Sens. 2022, 14, 3186.

44. Ke, X.; Zhang, X.; Zhang, T.; Shi, J.; Wei, S. SAR ship detection based on an improved Faster R-CNN using deformable convolution. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium, Brussels, Belgium, 11–16 July 2021; pp. 3565–3568.

45. Xu, X.; Feng, Z.; Cao, C.; Li, M.; Wu, J.; Wu, Z.; Shang, Y.; Ye, S. An Improved Swin Transformer-Based Model for Remote Sensing Object Detection and Instance Segmentation. Remote Sens. 2021, 13, 4779.

46. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

47. Ghiasi, G.; Lin, T.-Y.; Le, Q.V. NAS-FPN: Learning Scalable Feature Pyramid Architecture for Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7029–7038.

48. Liu, N.; Cui, Z.; Cao, Z.; Pi, Y.; Lan, H. Scale-Transferrable Pyramid Network for Multi-Scale Ship Detection in SAR Images. In Proceedings of the IGARSS 2019–2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 1–4.

49. Hu, W.; Tian, Z.; Chen, S.; Zhan, R.; Zhang, J. Dense feature pyramid network for ship detection in SAR images. In Proceedings of the Third International Conference on Image, Video Processing and Artificial Intelligence, Shanghai, China, 23–24 October 2020.

50. Zhang, T.; Zhang, X.; Ke, X. Quad-FPN: A novel quad feature pyramid network for SAR ship detection. Remote Sens. 2021, 13, 2771.

51. Chen, J.; Wang, Q.; Peng, W.; Xu, H.; Li, X.; Xu, W. Disparity-Based Multiscale Fusion Network for Transportation Detection. IEEE Trans. Intell. Transp. Syst. 2022, 23, 18855–18863.

52. Zhang, R.; Li, L.; Zhang, Q.; Zhang, J.; Xu, L.; Zhang, B.; Wang, B. Differential Feature Awareness Network within Antagonistic Learning for Infrared-Visible Object Detection. IEEE Trans. Circuits Syst. Video Technol. 2023.

53. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

54. Zhang, T.; Zhang, X.; Li, J.; Xu, X.; Wang, B.; Zhan, X.; Xu, Y.; Ke, X.; Zeng, T.; Su, H.; et al. SAR Ship Detection Dataset (SSDD): Official Release and Comprehensive Data Analysis. Remote Sens. 2021, 13, 3690.

55. Ge, J.; Tang, Y.; Guo, K.; Zheng, Y.; Hu, H.; Liang, J. KeyShip: Towards High-Precision Oriented SAR Ship Detection Using Key Points. Remote Sens. 2023, 15, 2035.

56. Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 18–23 June 2018; pp. 6154–6162.

57. Ding, J.; Xue, N.; Long, Y.; Xia, G.; Lu, Q. Learning RoI Transformer for Oriented Object Detection in Aerial Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 2849–2858.

58. Xu, Y.; Fu, M.; Wang, Q.; Wang, Y.; Chen, K.; Xia, G.-S.; Bai, X. Gliding vertex on the horizontal bounding box for multi-oriented object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 1452–1459.

59. Yang, X.; Yan, J. Arbitrary-Oriented Object Detection with Circular Smooth Label. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 677–694.

60. Yang, X.; Yan, J.; Liao, W.; Yang, X.; Tang, J.; He, T. SCRDet++: Detecting Small, Cluttered and Rotated Objects via Instance-Level Feature Denoising and Rotation Loss Smoothing. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 2384–2399.

61. Han, J.; Ding, J.; Li, J.; Xia, G.-S. Align Deep Features for Oriented Object Detection. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5602511.

62. Jiang, X.; Xie, H.; Chen, J.; Zhang, J.; Wang, G.; Xie, K. Arbitrary-Oriented Ship Detection Method Based on Long-Edge Decomposition Rotated Bounding Box Encoding in SAR Images. Remote Sens. 2023, 14, 3599.

| Method | Stage | AP (%) | Inshore AP (%) | Offshore AP (%) | FPS |
|---|---|---|---|---|---|
| KeyShip * [55] | - | 90.72 | - | - | 13.3 |
| Cascade RCNN * [56] | Multiple | 88.45 | - | - | 2.8 |
| ROI Transformer [57] | Multiple | 90.51 | 75.11 | 96.40 | - |
| MSR2N * [32] | Two | 90.11 | - | - | 9.7 |
| Gliding Vertex [58] | Two | 91.88 | 75.23 | 98.35 | - |
| CSL [59] | Two | 92.16 | 76.15 | 98.87 | 7.0 |
| SCRDet++ [60] | Two | 92.56 | 77.17 | 99.16 | 8.8 |
| RetinaNet-R [12] | One | 86.14 | 59.35 | 97.10 | 30.6 |
| R3Det [24] | One | 90.45 | 76.36 | 97.21 | 24.5 |
| DRBox-v2 * [34] | One | 92.81 | - | - | 18.1 |
| S2A-Net [61] | One | 92.08 | 75.79 | 98.79 | 29.0 |
| Jiang’s * [62] | One | 92.50 | - | - | - |
| LPST-Det | One | 93.85 | 81.28 | 99.20 | 16.4 |

| Dilation Rate | AP (%) | Inshore AP (%) | Offshore AP (%) | Precision (%) | Recall (%) |
|---|---|---|---|---|---|
| d = 1 | 92.95 | 79.96 | 97.98 | 95.5 | 94.2 |
| d = 2 | 93.15 | 80.90 | 98.05 | 96.1 | 94.2 |
| d = 3 | 93.34 | 80.95 | 98.26 | 96.3 | 94.4 |
| d = 4 | 93.03 | 80.56 | 98.00 | 95.7 | 94.2 |

| Method | AP (%) | Inshore AP (%) | Offshore AP (%) | Precision (%) | Recall (%) |
|---|---|---|---|---|---|
| ResNet-50 | 90.45 | 76.36 | 97.21 | 93.5 | 91.7 |
| Swin-T | 92.83 | 79.57 | 97.80 | 95.2 | 93.8 |
| LP-Swin | 93.34 | 80.95 | 98.26 | 96.3 | 94.4 |

| Method | AP (%) | Inshore AP (%) | Offshore AP (%) | Precision (%) | Recall (%) |
|---|---|---|---|---|---|
| FPN | 93.34 | 80.95 | 98.26 | 96.3 | 94.4 |
| BiFPN | 93.64 | 81.17 | 98.60 | 96.5 | 94.5 |
| CS-BiFPN | 93.85 | 81.28 | 99.20 | 97.1 | 94.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, Z.; Xia, X.; Liu, Y.; Wen, G.; Zhang, W.E.; Guo, L. LPST-Det: Local-Perception-Enhanced Swin Transformer for SAR Ship Detection. Remote Sens. 2024, 16, 483. https://doi.org/10.3390/rs16030483
