1. Introduction

Space dim target recognition using infrared sensors is a challenging task for space situational awareness (SSA) systems [1]. The detection and recognition of space targets directly affect the security of the space environment, and early recognition of targets enables the decision center to take appropriate action quickly and efficiently to protect space stations and satellites [2,3]. Extensive research has been conducted on the target detection stage [4,5,6,7], but the recognition stage has received far less attention. Because of the long distance between the infrared sensors on the satellite platform and the target, and because the target is smaller than the instantaneous field of view of the detector, a space target is imaged as a small dot on the infrared image plane. This dot carries no shape or attitude information, so few features are available to exploit [8]. Additionally, because the imaging signal of the target is weak, its feature information is strongly affected by interference such as noise, and recognition that relies on a single feature performs poorly.

In recent years, space infrared dim target recognition has received initial study, and some effective results have been reported. For example, Silberman et al. [9] extracted the mean and variance of the target's radiation sequence as statistical features, built a classifier model with a parametric method, and used it to classify and recognize space targets. Gu et al. [10] constructed features such as the length–width ratio, centroid brightness, pixel area, and invariant moments from the imaging area of the target and combined them with DS fusion theory to discriminate the targets. Dai et al. selected radiation intensity as the initial recognition feature and used an AdaBoost classifier for initial recognition, after which the temperature feature was used to discriminate the remaining unrecognized targets [11]. Zhang et al. simulated the irradiance signal of the target based on the Bhattacharyya optical decoy evaluation (BODE) model, extracted the temperature and effective radiation area of the target, and input them into a Gaussian particle swarm optimization probabilistic neural network (GPSO-PNN) to recognize four types of targets [12]. Ma extracted only the radiation intensity of each target as the feature and combined it with a random projection recurrent neural network (R-RNN) architecture to recognize the target [13]. However, because the target's gray value can be considered linear in its radiation within the normal operating range of the infrared detector, such methods still essentially rely on the target's infrared radiation for recognition. The studies in [14,15] likewise used only infrared radiation as input data for target recognition and differ only in their recognition algorithms.

From the above, the features used for space target recognition fall mainly into two categories: (1) Image feature parameters of the target, such as the length–width ratio and energy concentration. These features can be extracted quickly with few computational resources, but they do not reflect the intrinsic physical information of the target. Once the parameters of the infrared detector's imaging system change, the feature parameters of the target also change, invalidating the training data set of the previously built classifier. (2) Physical features of the target, such as temperature and radiation intensity, which reflect changes in the target's properties and constitute its core data. However, some of these features must be computed from a model, so they are extracted more slowly than image feature parameters. Moreover, most existing studies use only one or two features of the target for recognition, and comprehensive use of multiple features is rare. When a single feature describes the state of the whole target, discrimination is not robust, and if that feature becomes unstable during motion, recognition performance is often unsatisfactory. Therefore, it is valuable to extract comprehensive, highly discriminative feature information from infrared detector images and to use these features jointly to improve space target recognition performance.

To obtain effective multidimensional features, the radiation and motion states of the space target during flight must first be analyzed. Because the target's position changes relative to the Sun, the Earth, and other radiation sources, the solar and Earth radiation absorbed by the target also changes dynamically. Additionally, targets differ in material, infrared emissivity, shape, and size, and these factors lead to different temperature trends, making temperature a potential feature for target recognition. Besides temperature, the target also undergoes micromotion during flight due to external disturbances [16]. Depending on whether it has a regular shape and an attitude controller, the target either precesses or tumbles until it enters the atmosphere. Micromotion changes the emissivity–area product along the line of sight of the infrared detector, and the micromotion period itself varies among targets, giving both features recognition potential. According to Planck's law, the temperature, emissivity–area product, and micromotion period together determine how the target's radiation intensity varies, which makes radiation intensity the most critical reference feature. In addition, when a space target splits, the newly generated targets are lighter, and by conservation of momentum their velocities also differ. Therefore, velocity may also serve as a reference feature for target recognition [17]. Conveniently, the irradiance of the target can be inverted from the image gray values through the detector's calibration equation; combined with the detector-to-target distance, this yields the target's radiation intensity, so that multi-frame images of the space target are converted into an infrared radiation intensity time series. Additionally, the relationship between the coordinates of the target's image points and its spatial location can be obtained through coordinate transformation matrices, which provides the theoretical basis for extracting velocity from the target's pixel coordinates. Therefore, combined with the relevant physical laws, multidimensional features of the target can be extracted from infrared images.

Data fusion is the integrated processing of heterogeneous information from multiple sources to make a complete and accurate assessment of the state of the observed object, which helps make full use of the multidimensional feature information of the space target [18]. It has been widely applied in fields such as machine fault diagnosis [19], health discrimination [20], emotion understanding [21], and target intention judgment [22]. However, in practical scenarios, feature acquisition is easily disturbed by noise and other factors, which makes the target feature data uncertain, and how to handle this uncertainty remains an open problem. Various theories have been proposed to address it, such as fuzzy set theory [23,24], rough set theory [25], Z-number theory [26], D-number theory [27], Bayesian theory [28,29], and Dempster–Shafer theory [30,31].

Fuzzy sets can describe the fuzziness hidden in uncertain real-world scenarios [23]. Rough set theory plays an important role in simplifying information processing, studying expression learning, and discovering imprecise information [25]. However, quantifying uncertainty remains a major challenge for fuzzy set and rough set theories. Bayesian theory is a mathematical model based on probabilistic inference that can effectively handle uncertainty and incompleteness, but it requires prior knowledge of the events [28]. Dempster–Shafer (DS) theory, an efficient data fusion method, requires weaker conditions than Bayesian theory and can operate without any prior information about event probabilities. It also provides evidence combination rules that help quantify and handle the uncertainty of events. However, when the fused evidence is highly conflicting, DS theory may produce counterintuitive results, leading to erroneous inferences and conclusions. The many methods proposed to address this problem fall into two categories. The first modifies Dempster's combination rule. For example, Yager [32] argued that conflicting evidence cannot provide useful support for the final decision and proposed a new combination rule that reassigns the conflicting mass to the frame of discernment. Dubois and Prade proposed a disjunctive combination rule that reassigns the conflicting part of each piece of evidence to the union of the conflicting focal elements [33]. However, modifying the combination rule often sacrifices the associativity and commutativity of Dempster's rule. The second category modifies the body of evidence. These methods do not change the combination rule, so its desirable properties are retained; examples include Murphy's method [34], Deng et al.'s method [35], and Sun et al.'s method [36]. Murphy proposed modifying and averaging the initial bodies of evidence before combining them. Deng et al. used an improved averaging method based on the distance between pieces of evidence to combine mass functions. Sun et al. used a weighting and averaging method to reassign the conflicting mass, introduced an evidence credibility metric, and thereby addressed the conflict between pieces of evidence.
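To make the conflict problem concrete, the following sketch implements Dempster's classical combination rule on BPAs represented as Python dicts that map frozensets of hypotheses to masses, and applies it to Zadeh's well-known counterexample. It also includes a simple average-then-combine fusion in the spirit of Murphy's method (average the n BPAs, then combine the average with itself n − 1 times). The function names are illustrative, and this is not the implementation of any of the cited methods.

```python
from itertools import product

def dempster_combine(m1, m2):
    """Combine two BPAs (dict: frozenset -> mass) with Dempster's rule."""
    combined, conflict = {}, 0.0
    for (a, ma), (b, mb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + ma * mb
        else:
            conflict += ma * mb  # mass that would fall on the empty set (K)
    if conflict >= 1.0:
        raise ValueError("total conflict: Dempster's rule is undefined")
    # Normalise by 1 - K so the remaining masses sum to one.
    return {s: v / (1.0 - conflict) for s, v in combined.items()}

def averaged_combine(bpas):
    """Murphy-style fusion: average the BPAs, then combine the average n - 1 times."""
    n = len(bpas)
    focal = set().union(*bpas)
    avg = {s: sum(m.get(s, 0.0) for m in bpas) / n for s in focal}
    fused = avg
    for _ in range(n - 1):
        fused = dempster_combine(fused, avg)
    return fused

A, B, C = frozenset({"A"}), frozenset({"B"}), frozenset({"C"})
m1 = {A: 0.99, B: 0.01}
m2 = {C: 0.99, B: 0.01}

print(dempster_combine(m1, m2))    # B receives essentially all of the mass
print(averaged_combine([m1, m2]))  # mass stays mostly on A and C
```

Although both sources give B only 0.01, Dempster's rule assigns all belief to B because the conflict coefficient K is 0.9999; after averaging, B keeps only about 0.0002 of the mass.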

Classifier fusion is an effective strategy for improving classification performance on complex pattern recognition problems. In practice, the classifiers to be combined may have different reliabilities, and an appropriate ensemble classifier plays a crucial role in achieving optimal classification performance during fusion [37]. Ensemble classifiers are widely used in practical scenarios. For instance, Mai et al. combined classifiers learned from three features using convolutional layers to achieve fruit detection [38]. Kaur et al. proposed a classifier fusion strategy that aggregates the predictions of three classifiers (SVM, logistic regression, and K-nearest neighbor) through majority voting [39]. Bhowal et al. introduced a low-complexity method for computing fuzzy measures and applied it to Choquet integrals to fuse deep learning classifiers in different application domains [40]. Furthermore, the methods in [32,33,34,35,36] can also be regarded as classifier fusion, since they modify DS evidence theory by formulating different fusion rules to fuse the evidence produced by different classifiers.

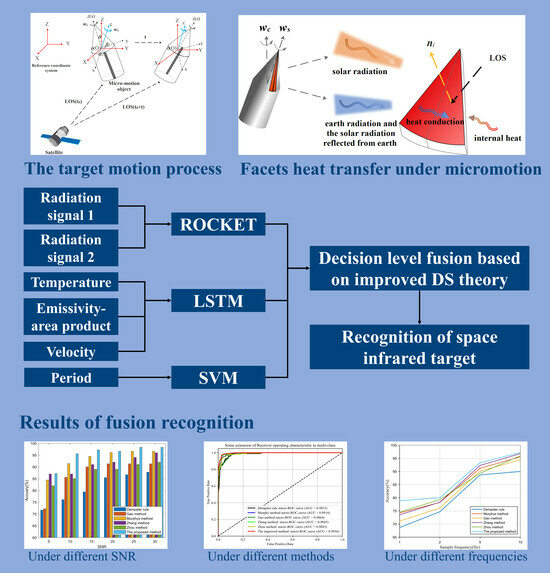

To make comprehensive use of the multidimensional features and solve the space infrared dim target recognition problem, this paper combines multiple classifiers with DS theory and proposes a recognition model based on an ensemble classifier and an improved DS fusion rule. Specifically, the ensemble classifier performs preliminary classification on the extracted multidimensional features to obtain the basic probability assignment (BPA) of each feature's evidence, and the improved DS fusion rule modifies the current body of evidence to reduce the conflict among the feature evidence.

The main contributions of this paper are as follows:

- (1)

An infrared radiation intensity model and an imaging model of space targets are developed. First, the external radiation, micromotion, temperature, and projected-area variation are analyzed to derive the radiation model of the space target. The imaging model is then established from the relationship between the target position and its image point on the infrared detector. With these models, multi-frame infrared images of space targets can be readily simulated.

- (2)

An ensemble classifier based on ROCKET, LSTM, and SVM is constructed, and the corresponding conversion methods are designed to convert the probability outputs and the classification accuracies of the three classifiers into the corresponding BPAs and weight values, respectively.

- (3)

A contraction–expansion function for the BPA is proposed, which scales the current BPA value up or down depending on whether it satisfies a threshold, thereby modifying the evidence.

- (4)

Tests on space target data from multiple scenes verify that the proposed method improves recognition accuracy to some extent compared with relying on a single feature dimension and significantly outperforms other DST-based benchmark algorithms in strong-noise scenes.

The rest of this paper is organized as follows. Section 2 briefly describes the infrared radiation intensity model and the imaging model of the space target. Section 3 briefly introduces ROCKET, LSTM, SVM, and DST. The main framework of the proposed algorithm is presented in Section 4. Section 5 presents the experimental results and performance comparison. Section 6 concludes the paper and outlines future work.

2. Infrared Radiation Intensity and Target Imaging Modeling

Because the targets fly outside the atmosphere and there is no prior information about their attributes, real infrared radiation data for these objects are difficult to collect. Therefore, this paper comprehensively considers the factors affecting infrared radiation, such as temperature, material emissivity, shape, and micromotion, to establish a simulated infrared radiation model. Additionally, because the positions of both the targets and the infrared detector change, the image plane coordinates of the targets on the infrared detector vary dynamically, which affects velocity extraction. This section therefore establishes the infrared radiation intensity model and the imaging model of the targets.

2.1. Micromotion Model

Some targets split during flight, producing objects of multiple shapes that threaten satellites and space stations. The most common shapes are the ball-base cone, flat-base cone, cone–cylinder, cylinder, sphere, and arc debris. Since axisymmetric shapes are representative and the variation of a sphere is simple, this paper focuses on four axisymmetric target shapes. In addition, during splitting, the targets are subject to disturbance torques as well as gravity, which produce micromotion [41]. The conventional forms of micromotion are spinning, coning, and tumbling. If a target has a regular shape and is equipped with an attitude control device, it precesses, and the precession can be decomposed into a spinning component and a coning component.

In contrast, a target lacking a control device tumbles about the tumble axis at a certain angular velocity. Micromotion not only changes the projected area of the target along the line of sight (LOS) of the infrared detector over short periods but also changes the temperature of the target's surface elements. Additionally, targets of different shapes have different micromotion states. It is therefore important to model the micromotion of space targets and analyze its influence on infrared radiation in order to capture the multidimensional characteristics of the targets.

Assuming the cone–cylinder is coning and spinning during the flight, the local coordinate system is

, the reference coordinate system is

, and the point

is the origin of the two systems, as shown in

Figure 1, which depicts the motion process of the target from

to

. The azimuth and elevation angles of the precession axis are

and

at the initial time

, LOS is the sight vector from the detector to the target centroid in the reference coordinate system, and

and

are the angular velocities of the spinning and the coning, respectively.

The first step is to obtain the Euler rotation matrix for vector conversion in different coordinate systems. We assume that the matrix rotation order is

, and the Euler angle is

. The Euler rotation matrix

from the local coordinate system to the reference coordinate system is:

Then, the micromotion rotation matrices are calculated. For the precession target, the spinning axis of the target is considered to coincide with the

-axis in the local coordinate system. The spinning angular velocity vector in

is

According to Rodrigues' rotation formula, the rotation matrices of spinning and coning can be calculated as:

where

and

are skew-symmetric matrices. Multiplying the three matrices gives the global rotation matrix:

Similarly, let

,

be the azimuth and elevation angles of the tumbling axis, respectively. The tumbling rotation matrix is:

where

is the skew-symmetric matrix. Based on the above, the normal vector

of any target surface facet in

can be transformed to the normal vector

in

by

This equation defines the micromotion transformation, which relates the facet vectors in the two coordinate systems. It gives the normal vectors of the surface facets in the reference coordinate system at any moment and thus provides the basis for calculating the projected area of the target in the LOS of the infrared detector.
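The rotation matrices above can be sketched numerically as follows. Rodrigues' formula gives the rotation by angle θ about a unit axis k as R = I + sin θ K + (1 − cos θ)K², where K is the skew-symmetric matrix of k. The composition order used here (spin about the local z-axis, then the Euler transform into the reference frame, then coning about the precession axis), the azimuth/elevation convention, and all function names are assumptions for illustration, not the paper's exact equations.

```python
import math

def skew(k):
    """Skew-symmetric matrix K of a 3-vector k, so that K @ v equals cross(k, v)."""
    x, y, z = k
    return [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]

def matmul(a, b):
    return [[sum(a[i][m] * b[m][j] for m in range(3)) for j in range(3)] for i in range(3)]

def matvec(a, v):
    return [sum(a[i][m] * v[m] for m in range(3)) for i in range(3)]

def rodrigues(axis, angle):
    """Rotation by `angle` (rad) about `axis`: R = I + sin(a) K + (1 - cos(a)) K^2."""
    norm = math.sqrt(sum(c * c for c in axis))
    k = skew([c / norm for c in axis])
    k2 = matmul(k, k)
    s, c = math.sin(angle), 1.0 - math.cos(angle)
    return [[(1.0 if i == j else 0.0) + s * k[i][j] + c * k2[i][j]
             for j in range(3)] for i in range(3)]

def unit_axis(azimuth, elevation):
    """Unit axis from azimuth/elevation (assumed: elevation measured from the XY plane)."""
    return [math.cos(elevation) * math.cos(azimuth),
            math.cos(elevation) * math.sin(azimuth),
            math.sin(elevation)]

def precession_matrix(t, w_spin, w_cone, cone_axis, euler):
    """Assumed composition: spin about local z, rotate into the reference
    frame with the Euler matrix, then cone about the precession axis."""
    r_spin = rodrigues([0.0, 0.0, 1.0], w_spin * t)
    r_cone = rodrigues(cone_axis, w_cone * t)
    return matmul(r_cone, matmul(euler, r_spin))

def tumbling_matrix(t, w_tumble, tumble_axis, euler):
    """Tumbling about a fixed axis in the reference frame."""
    return matmul(rodrigues(tumble_axis, w_tumble * t), euler)

# Transform a local-frame facet normal to the reference frame at t = 0.5 s.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
m = precession_matrix(0.5, w_spin=2 * math.pi, w_cone=math.pi,
                      cone_axis=unit_axis(0.3, 1.2), euler=identity)
print(matvec(m, [1.0, 0.0, 0.0]))
```

Because every factor is a proper rotation, the composed matrix stays orthonormal, so transformed facet normals keep unit length at every time step.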

2.2. Infrared Radiation Intensity Model

Based on Planck's law, the infrared radiation intensity of a target is mainly determined by its temperature, material emissivity, and projected area. Therefore, it is essential to analyze the temperature state of the target. The temperature of a space target is mainly influenced by external radiation, heat conduction, and internal heat sources, as shown in Figure 2.

To analyze the temperature distribution of the target in more detail, the target surface is divided into facets and the effect of micromotion on facet temperature is considered. The external radiation absorbed by each facet is given by the following equations:

where

,

,

are the solar radiation, Earth radiation, and Earth-reflected solar radiation absorbed by the facet, respectively;

is the absorption coefficient;

is the solar constant;

is the area of facet;

is the micromotion matrix;

is the facet normal vector; and

is the solar radiation vector.

,

are the radiation view factors, and

is the average albedo of the Earth. According to the heat balance equation, the temperatures of all facets satisfy the following:

where

is the number of facets. After this relation is linearized, the temperature

of the target can be calculated using the Gauss–Seidel method. At any time, the area observed by the infrared detector is smaller than the total surface area of the target. Therefore, the projected area of the target in the LOS must be calculated using the micromotion model, as follows:
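A minimal sketch of the projected-area idea, assuming the facet normals have already been rotated into the reference frame with the micromotion matrix: each facet contributes its area times the cosine between its normal and the direction toward the detector, and facets facing away contribute nothing. The same cosine factor gives the direct solar power absorbed by a facet. Self-shadowing is ignored (exact only for convex shapes), the 1367 W/m² solar constant is an assumed value, and the function names are hypothetical.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def projected_area(facets, los):
    """Projected area seen along the line of sight.

    facets: list of (area, unit_normal) with normals in the reference frame.
    los: unit vector from the detector to the target (as defined in the text).
    """
    to_detector = [-c for c in los]
    return sum(area * max(0.0, dot(n, to_detector)) for area, n in facets)

def absorbed_solar(facets, sun_dir, absorptivity, solar_constant=1367.0):
    """Direct solar power (W) absorbed per facet: alpha * S * A * max(0, n . s).

    sun_dir: unit vector from the target toward the Sun.
    solar_constant: assumed value in W/m^2.
    """
    return [absorptivity * solar_constant * area * max(0.0, dot(n, sun_dir))
            for area, n in facets]

# Unit cube with outward facet normals, each facet 1 m^2.
cube = [(1.0, n) for n in ([1, 0, 0], [-1, 0, 0], [0, 1, 0],
                           [0, -1, 0], [0, 0, 1], [0, 0, -1])]
diag = [-1 / math.sqrt(3)] * 3        # detector looking along -(1, 1, 1)
print(projected_area(cube, diag))     # three faces visible: sqrt(3) m^2
```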

The temperature and projected-area models are given above. One further factor must be considered: radiation reflected by the target, which changes the radiation intensity at the detector pupil. It affects the accuracy of the extracted temperature and emissivity–area product, especially for low-emissivity targets. This paper considers only three types of reflected radiation: surface-reflected solar radiation

, surface-reflected Earth radiation

, and surface-reflected solar radiation reflected from Earth

. The corresponding radiation irradiances are:

where

is the bidirectional reflectance distribution coefficient. The self-radiance of each facet can be calculated as follows:

where

,

are the first and second radiation constants, respectively, and B is the set of facets within the detector's field of view. Based on the above, the infrared radiation intensity in the detector pupil, for the spectral band

, is modeled as:
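For a gray-body facet, the band self-radiance follows from integrating Planck's law over the detector band, and the pupil intensity is the sum over visible facets of radiance × area × cosine of the viewing angle. The sketch below assumes Lambertian facets (radiance = exitance / π), uses the standard constants c1 ≈ 3.7418 × 10⁻¹⁶ W·m² and c2 ≈ 1.4388 × 10⁻² m·K, integrates with the trapezoidal rule, and omits the reflected terms; the facet tuple layout and function names are hypothetical.

```python
import math

C1 = 3.7418e-16   # first radiation constant, W*m^2
C2 = 1.4388e-2    # second radiation constant, m*K

def planck_exitance(lam_m, temp_k):
    """Blackbody spectral exitance M(lambda, T) in W/m^2 per metre of wavelength."""
    return C1 / (lam_m ** 5 * (math.exp(C2 / (lam_m * temp_k)) - 1.0))

def band_radiance(temp_k, lam1_um, lam2_um, emissivity=1.0, steps=2000):
    """Gray-body band radiance in W/(m^2*sr) over [lam1, lam2] micrometres.

    Assumes a Lambertian emitter (radiance = exitance / pi) and uses the
    trapezoidal rule with `steps` intervals.
    """
    a, b = lam1_um * 1e-6, lam2_um * 1e-6
    h = (b - a) / steps
    total = 0.5 * (planck_exitance(a, temp_k) + planck_exitance(b, temp_k))
    total += sum(planck_exitance(a + i * h, temp_k) for i in range(1, steps))
    return emissivity * total * h / math.pi

def self_emission_intensity(facets, lam1_um, lam2_um):
    """Radiant intensity (W/sr) toward the detector from self-emission only.

    facets: hypothetical tuples (area_m2, emissivity, temp_k, cos_view), where
    cos_view is the cosine between the facet normal and the direction to the
    detector; facets with cos_view <= 0 are not visible and contribute nothing.
    """
    return sum(band_radiance(t, lam1_um, lam2_um, eps) * area * cos_v
               for area, eps, t, cos_v in facets if cos_v > 0.0)

facets = [(0.8, 0.85, 290.0, 0.6), (0.5, 0.85, 270.0, 0.3), (0.5, 0.85, 250.0, -0.4)]
print(self_emission_intensity(facets, 8.0, 12.0))
```

Integrating over a very wide band and multiplying by π recovers the Stefan–Boltzmann exitance σT⁴, which is a convenient sanity check on the constants.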

2.3. Simulating Imaging Model of the Target

The angle subtended by the space target at the detector is usually smaller than the instantaneous field of view of the system, so the target is treated as a point source [42]. The image point of the target is determined by its projected position and irradiance. Mapping the target's space coordinates to image plane coordinates requires successive conversions from the Earth-centered inertial (ECI) coordinate system to the orbital coordinate system, then to the sensor coordinate system, and finally to the image plane coordinate system. The transformation matrix is:

where

is the rotation matrix from the ECI coordinate system to the orbital coordinate system and

is the rotation matrix from the orbital coordinate system to the sensor coordinate system. Let the image plane coordinates of the target be

; from the imaging principle, the image plane coordinates are related to the sensor coordinates as follows:

The image plane coordinates are then converted to the pixel coordinates of the target using the following equation:

where

is the focal length,

is the pixel size, and

are the numbers of pixels in each row and column of the image plane, respectively. When a point source is imaged, diffraction forms a pattern on the imaging surface consisting of a bright central spot surrounded by alternating bright and dark rings. The central bright spot, called the Airy disk, contains about 84% of the total energy of the image spot. The irradiance response of the diffracted target at any image position is calculated with a two-dimensional Gaussian point spread function:

where

is the target position on the image plane and

is the energy spread parameter. When

, about 98% of the target energy falls within the 3 × 3 pixel region centered on the target pixel.
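The imaging chain can be sketched as a pinhole projection followed by a Gaussian spread of the target's energy over the pixel grid. The sketch assumes the target is already expressed in sensor coordinates with z along the optical axis, places the image-plane origin at the centre of the array, and treats the spread parameter σ (in pixels) as a free input; the ECI-to-sensor rotations are omitted, and the function names are hypothetical. Integrating the Gaussian over each pixel with the error function gives the fraction of the target's pupil irradiance received by that pixel.

```python
import math

def project_to_pixel(p_sensor, focal_length, pixel_size, n_rows, n_cols):
    """Pinhole projection of a sensor-frame point (z = optical axis) to
    fractional (row, col) pixel coordinates. Assumes the image-plane origin
    is the array centre and focal_length, pixel_size share the same unit."""
    x, y, z = p_sensor
    if z <= 0:
        raise ValueError("point is behind the sensor")
    u = focal_length * x / z          # image-plane coordinates
    v = focal_length * y / z
    return v / pixel_size + n_rows / 2.0, u / pixel_size + n_cols / 2.0

def psf_pixel_fractions(row0, col0, sigma, n_rows, n_cols):
    """Fraction of a point target's energy on each pixel for a 2-D Gaussian PSF
    centred at (row0, col0); pixel (i, j) spans [i, i+1) x [j, j+1)."""
    def cdf(d):
        return 0.5 * (1.0 + math.erf(d / (sigma * math.sqrt(2.0))))
    rows = [cdf(i + 1 - row0) - cdf(i - row0) for i in range(n_rows)]
    cols = [cdf(j + 1 - col0) - cdf(j - col0) for j in range(n_cols)]
    return [[r * c for c in cols] for r in rows]

# A target on the optical axis lands at the array centre; spread its energy.
r0, c0 = project_to_pixel([0.0, 0.0, 1.0e6], 0.5, 30e-6, 11, 11)
frame = psf_pixel_fractions(r0, c0, 0.5, 11, 11)
```

Multiplying these fractions by the target irradiance at the pupil (and the detector response) yields a synthetic point-target frame; with σ = 0.5 pixel and the target at a pixel centre, just over 99% of the energy lands in the central 3 × 3 block.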

Using the above radiation intensity model and imaging model, the position and irradiance of the target at any moment during flight can be simulated. Combined with the dual-band temperature measurement algorithm, the CAMDF algorithm, dual-satellite positioning, and velocity extraction algorithms, six features of the target (long-wave radiation intensity (8–12 μm), long-medium-wave radiation intensity (6–7 μm), temperature, emissivity–area product, micromotion period, and velocity) can be extracted from multi-frame infrared images. These features provide the data for the multi-dimensional feature decision-level fusion and recognition described below.

4. The Proposed Method of Target Recognition

Processing uncertain feature data is an indispensable step in space target recognition. When judging the type of a space target, it is often necessary to fuse different feature data from the observed target for a comprehensive judgment and evaluation. In addition, in the ensemble classifier architecture, differences in how well each classifier learns from its feature data source lead to divergent recognition results for space targets, which is called information source conflict. To make feature data fusion more rational, attenuate the conflicts among feature data, and further improve recognition accuracy, this paper proposes a new space target recognition method that combines an ensemble classifier with an improved DS rule. First, three kinds of trained classifiers (ROCKET + Ridge, LSTM, and SVM) are applied to the six-dimensional target features extracted from multi-frame infrared images, namely long-wave radiation intensity (8–12 μm), long-medium-wave radiation intensity (6–7 μm), temperature, emissivity–area product, velocity, and micromotion period, to construct the basic BPA.

The proposed method consists of three main modules: an ensemble-classifier-based information processing module for multidimensional feature data, an improved DS information fusion module, and a decision module. The information processing module uses the three types of classifiers to perform initial classification on the six-dimensional space target features and outputs the posterior probability of each category to construct the BPA matrix used by the next module. In the information fusion module, the credibility of each BPA value is judged based on the number of space target categories, and the contraction–expansion function is introduced to scale the evidence. The accuracy of each classifier is used as a discount coefficient to improve the reasonableness of the evidence. Finally, the decision module determines the target category from the fused result using decision thresholds. The flowchart of the proposed algorithm is shown in Figure 4.

In the information processing stage, the main task is to preprocess the dual-band multi-frame infrared images generated by the infrared detector, including blind pixel removal, infrared dim target detection, target signal enhancement, and target tracking. A series of pixel coordinates and gray values of the target can then be extracted. This paper assumes that these two types of information have been accurately obtained and focuses on feature extraction and decision-level recognition of the target category. The infrared radiation intensity, temperature, emissivity–area product, and micromotion period of the target are extracted from its gray values in the two infrared bands, and its velocity is extracted from its pixel coordinates in the image combined with the position and observation vector of the infrared detector. The main contents of this section are as follows:

4.1. Multi-Dimensional Feature Extraction

Features are the intrinsic information describing the properties of objects, and different categories of targets are distributed differently in the feature space. Space targets differ in the six features above because of differences in materials, emissivity, mass distribution, and so on, which provides a reference for recognizing them. The magnitude of the infrared radiation intensity is reflected in the target's pixel gray values. The functional relationship between the target's radiation and its gray value must be obtained through blackbody radiation calibration; once it is known, the inverse relationship converts gray values to radiation. This relationship is generally linear, as shown below:

where

is the infrared radiation of the target;

is the gray values; and

are the coefficients obtained by fitting.
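A minimal sketch of the calibration step, assuming a linear model gray = gain · radiation + offset fitted by ordinary least squares to blackbody calibration points and then inverted. The model direction, the names `gain` and `offset`, and the helper functions are illustrative assumptions; the paper's own coefficient symbols are not reproduced here.

```python
def fit_linear_calibration(radiation, gray):
    """Ordinary least-squares fit of gray = gain * radiation + offset
    from blackbody calibration points; returns (gain, offset)."""
    n = len(radiation)
    mx = sum(radiation) / n
    my = sum(gray) / n
    sxx = sum((x - mx) ** 2 for x in radiation)
    sxy = sum((x - mx) * (y - my) for x, y in zip(radiation, gray))
    gain = sxy / sxx
    return gain, my - gain * mx

def gray_to_radiation(gray_values, gain, offset):
    """Invert the linear calibration to recover radiation from gray values."""
    return [(g - offset) / gain for g in gray_values]

# Synthetic calibration points (arbitrary units) lying on gray = 50 * x + 100.
gain, offset = fit_linear_calibration([1.0, 2.0, 3.0, 4.0], [150.0, 200.0, 250.0, 300.0])
print(gray_to_radiation([350.0], gain, offset))
```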

By performing the above calculation, the continuous radiation signals of the target in the two infrared bands can be extracted. Because of the long distance between the target and the detector, the irradiance reaching the pupil is weak, so the effect of detector noise cannot be ignored. Considering that the infrared signal is regular, the DA-VMD algorithm [53] is used to denoise the radiation signal. This approach avoids subjectively setting the mode number

and the quadratic penalty term

in VMD; instead, it finds the optimal parameter values by iteratively optimizing an objective function. The infrared radiation intensity features (8–12 μm and 6–7 μm) are then obtained. Temperature is extracted with dual-band thermometry. If the external radiation reflected by the target were known, this method could accurately derive the target's true temperature and emissivity. In practice, however, the radiation in the detector pupil contains not only the target's self-radiation but also external radiation reflected by the target, and because this external radiation changes dynamically during flight and is difficult to know, the extracted temperature deviates from the true temperature. Previous studies have shown that using 8–12 μm and 6–7 μm as the detector bands can reduce this bias to some extent. The principle of dual-band thermometry is shown in the following equation:

where

is the emissivity of the target;

,

are the lower edges of the two bands;

,

are the upper edges of the two bands;

is the temperature; and

is Planck's law.

is the relation between the temperature and the ratio of the radiation in the two infrared bands, which can be obtained through blackbody calibration. In general,

is a monotonic function, so the

of the target can be solved using the bisection method.
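A sketch of ratio thermometry under the ideal gray-body assumption (equal emissivity in both bands, no reflected radiation): the ratio of the band-integrated Planck exitances in 8–12 μm and 6–7 μm depends only on temperature and decreases monotonically with it, so the temperature can be recovered by bisection. The search interval, tolerance, step count, and function names are assumed values for illustration; with reflected radiation present, the measured ratio is biased, as the text notes.

```python
import math

C1, C2 = 3.7418e-16, 1.4388e-2   # radiation constants (W*m^2, m*K)

def band_exitance(temp_k, lam1_um, lam2_um, steps=400):
    """Blackbody exitance (W/m^2) in [lam1, lam2] micrometres, trapezoidal rule."""
    def planck(lam):
        return C1 / (lam ** 5 * (math.exp(C2 / (lam * temp_k)) - 1.0))
    a, b = lam1_um * 1e-6, lam2_um * 1e-6
    h = (b - a) / steps
    return h * (0.5 * (planck(a) + planck(b)) + sum(planck(a + i * h) for i in range(1, steps)))

def band_ratio(temp_k, band_a=(8.0, 12.0), band_b=(6.0, 7.0)):
    """Ratio of the two band signals; the emissivity cancels under the gray-body assumption."""
    return band_exitance(temp_k, *band_a) / band_exitance(temp_k, *band_b)

def temperature_from_ratio(measured_ratio, t_low=150.0, t_high=1000.0, tol=1e-4):
    """Solve band_ratio(T) = measured_ratio by bisection on [t_low, t_high]."""
    def g(t):
        return band_ratio(t) - measured_ratio
    g_low = g(t_low)
    if g_low * g(t_high) > 0:
        raise ValueError("measured ratio is not bracketed by [t_low, t_high]")
    while t_high - t_low > tol:
        mid = 0.5 * (t_low + t_high)
        g_mid = g(mid)
        if g_low * g_mid <= 0:
            t_high = mid               # root lies in the lower half
        else:
            t_low, g_low = mid, g_mid  # root lies in the upper half
    return 0.5 * (t_low + t_high)

print(temperature_from_ratio(band_ratio(300.0)))
```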

Then, given the distance between the infrared detector and the target, the emissivity–area product can be calculated as follows:

where

is the emissivity–area product of the target,

is the distance,

is the irradiance in the pupil, and

is the radiant intensity.
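As a hedged illustration of this step, the sketch below uses the standard point-source relations I = E·R² and I = εA·L_bb(T), where L_bb(T) is the blackbody band radiance at the extracted temperature, so εA = E·R² / L_bb(T). Reflected radiation is neglected, and the exact form of the paper's equation, the band choice, and the function names are assumptions.

```python
import math

C1, C2 = 3.7418e-16, 1.4388e-2   # radiation constants (W*m^2, m*K)

def blackbody_band_radiance(temp_k, lam1_um, lam2_um, steps=400):
    """Blackbody band radiance in W/(m^2*sr): band exitance / pi (trapezoidal rule)."""
    def planck(lam):
        return C1 / (lam ** 5 * (math.exp(C2 / (lam * temp_k)) - 1.0))
    a, b = lam1_um * 1e-6, lam2_um * 1e-6
    h = (b - a) / steps
    s = 0.5 * (planck(a) + planck(b)) + sum(planck(a + i * h) for i in range(1, steps))
    return s * h / math.pi

def emissivity_area_product(irradiance, distance_m, temp_k, band=(8.0, 12.0)):
    """eps*A (m^2) from pupil irradiance E (W/m^2), range R (m), and temperature T.

    Point-source assumption: intensity I = E * R^2 and I = eps*A * L_bb(T),
    with reflected radiation neglected.
    """
    intensity = irradiance * distance_m ** 2
    return intensity / blackbody_band_radiance(temp_k, *band)

# Synthetic round trip: a target with eps*A = 1.6 m^2 at 280 K observed from 1000 km.
L = blackbody_band_radiance(280.0, 8.0, 12.0)
E = 1.6 * L / (1.0e6) ** 2
print(emissivity_area_product(E, 1.0e6, 280.0))
```

Because εA scales with the square of the range and inversely with a steep function of temperature, errors in distance and temperature propagate directly into this feature.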

Because micromotion causes periodic fluctuations in the target's radiation signal over time, the circular average magnitude difference function (CAMDF) is used to extract the micromotion period of the target [54]. To some extent, it overcomes a drawback of related algorithms, in which false valley points lead to incorrect period estimates. The algorithm is as follows:

where

is the radiation signal,

is the length of the signal,

is the delay length, and

is the remainder operator.
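The CAMDF can be sketched directly from these definitions: for each delay τ, the signal is compared with a circularly shifted copy of itself using the remainder operator, and the period appears as a deep valley in D(τ). The sketch returns the first local minimum below a fraction of the maximum; that `valley_ratio` threshold is an assumed heuristic, not the paper's rule, and the 1/N normalization does not change where the valleys lie.

```python
import math

def camdf(x, tau):
    """Circular average magnitude difference at delay tau:
    D(tau) = (1/N) * sum_n |x[(n + tau) mod N] - x[n]|."""
    n = len(x)
    return sum(abs(x[(i + tau) % n] - x[i]) for i in range(n)) / n

def estimate_period(x, max_lag=None, valley_ratio=0.3):
    """First delay that is a local minimum of D and lies below valley_ratio * max(D).

    The valley_ratio threshold is an assumed heuristic for skipping shallow,
    spurious valleys; it is not taken from the paper.
    """
    max_lag = max_lag or len(x) // 2
    d = [camdf(x, tau) for tau in range(max_lag + 2)]
    threshold = valley_ratio * max(d)
    for tau in range(1, max_lag + 1):
        if d[tau] <= d[tau - 1] and d[tau] <= d[tau + 1] and d[tau] < threshold:
            return tau
    return None   # no clear period within max_lag samples

# Synthetic radiation signal with a 50-sample micromotion period.
signal = [10.0 + 2.0 * math.sin(2 * math.pi * i / 50) for i in range(500)]
print(estimate_period(signal))
```

The result is in samples; multiplying by the frame interval converts it to a micromotion period in seconds.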

When a single-satellite infrared detector observes the target, if the target's motion is assumed to follow the two-body model and its position and velocity at the initial moment of observation are known, its spatial position and velocity throughout the flight can be deduced by combining the Kalman filter with related algorithms. However, this approach has two drawbacks: (1) in an actual observation scenario, when the LOS of the infrared detector captures the flying target and begins stable tracking, the target's initial position and velocity are unknown; (2) when the target maneuvers between orbits, its motion no longer follows the two-body model, so single-satellite velocity extraction fails. Therefore, this paper combines dual infrared detectors with the least-squares method to extract the target velocity [55].

We first establish the mathematical model for solving the spatial position of the target and extracting its velocity from that position. According to the geometric positioning model of the dual infrared detectors, the equation for the target's spatial position is:

where

is the position coordinate of the space target and

,

denote the unit observation vector and the position of the detector in the J2000 coordinate system, respectively. In these equations, the unknowns are the position coordinates of the target, and there are six independent equations, so the target position

can be solved by the least-squares method. Then, the velocity

of the target can be calculated using the following equation:

Where is the time difference and , , are the variation of displacement.
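The position-then-velocity procedure can be illustrated with a short NumPy sketch. As an assumption, it uses one common least-squares formulation of two-LOS positioning, namely the point that minimizes the summed squared distances to the two observation lines, which may differ in form from the equations of [55]; the function names and the example coordinates are hypothetical, and all vectors are taken to be in the J2000 frame.

```python
import numpy as np


def triangulate(detector_positions, los_vectors):
    """Least-squares point closest to the lines P_i + s * l_i.

    Each line contributes (I - l l^T)(r - P) = 0; summing the normal equations
    gives a 3x3 system that is solvable when the LOS vectors are not parallel.
    """
    a = np.zeros((3, 3))
    b = np.zeros(3)
    for p, l in zip(detector_positions, los_vectors):
        p = np.asarray(p, dtype=float)
        l = np.asarray(l, dtype=float)
        l = l / np.linalg.norm(l)          # unit observation vector
        proj = np.eye(3) - np.outer(l, l)  # projector orthogonal to the LOS
        a += proj
        b += proj @ p
    return np.linalg.solve(a, b)


def speed(r_prev, r_next, dt):
    """v = sqrt(dx^2 + dy^2 + dz^2) / dt between two solved positions."""
    dx, dy, dz = np.asarray(r_next, dtype=float) - np.asarray(r_prev, dtype=float)
    return np.sqrt(dx**2 + dy**2 + dz**2) / dt


if __name__ == "__main__":
    p1 = np.array([7000.0, 0.0, 0.0])  # hypothetical detector 1 position (km)
    p2 = np.array([0.0, 7000.0, 0.0])  # hypothetical detector 2 position (km)
    truth = [np.array([1000.0, 2000.0, 3000.0]), np.array([1003.0, 2004.0, 3000.0])]
    # Noise-free LOS vectors from each detector to the true target position
    solved = [triangulate([p1, p2], [r - p1, r - p2]) for r in truth]
    print(speed(solved[0], solved[1], dt=1.0))  # approximately 5.0 km/s
```

With real data, the LOS vectors carry centroid and attitude errors, so the two lines do not intersect exactly and the least-squares point absorbs the inconsistency.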

Based on the above, six-dimensional target features are extracted from the multi-frame images. The extraction accuracy of the velocity feature is independent of the error in the target's radiation magnitude; instead, it depends on error sources such as the centroid location of the target imaging point and the spatial coordinates, velocity, and attitude of the infrared detector. The remaining features are not independent, because they are all derived from the infrared radiation signal, so the accuracy of the infrared radiation affects their extraction. In particular, the emissivity–area product feature is affected not only by the infrared radiation but also by the accuracy of the temperature and of the distance between the target and the detector.

4.2. Construction of the BPA and Decision Making

As mentioned above, building an ensemble classifier usually involves two main tasks: (1) selecting several independent classifiers; (2) combining the classification probability values to construct the BPA. Three classification algorithms, namely ROCKET + Ridge, LSTM, and SVM + sigmoid fitting, are selected as the individual classifiers in this paper. ROCKET + Ridge is used to recognize the radiation intensity sequences of the two infrared bands, and LSTM is used to recognize the temperature, emissivity–area product, and velocity sequences separately. In general, the micromotion period of a space target flying outside the atmosphere remains constant rather than forming a time series, so the micromotion period is classified with SVM instead of LSTM or ROCKET, which increases the computational speed and reduces the time needed to build the BPA. LSTM could also classify the radiation intensity sequences; we nonetheless use additional classification algorithms because their different classification mechanisms complement each other and capture as much information as possible, providing more accurate classification results. In addition, we found that ROCKET + Ridge gives better classification results than LSTM for radiation intensity.
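To make the ROCKET part of the ensemble concrete, the following NumPy sketch implements a simplified version of the ROCKET transform as described in [43]: random kernels of length 7, 9, or 11 with normally distributed, mean-centred weights, a uniform bias in [-1, 1], exponentially sampled dilation, padding applied at random, and two pooled features per kernel (the proportion of positive values, PPV, and the maximum). It is a slow, loop-based illustration rather than the reference implementation, the function names are hypothetical, and it assumes series longer than 11 samples. The resulting feature matrix would then be passed to a ridge classifier.

```python
import numpy as np


def generate_kernels(series_length, num_kernels, rng):
    """Sample random ROCKET-style kernels (simplified)."""
    kernels = []
    for _ in range(num_kernels):
        length = int(rng.choice([7, 9, 11]))
        weights = rng.normal(0.0, 1.0, length)
        weights -= weights.mean()  # mean-centred weights
        bias = rng.uniform(-1.0, 1.0)
        # Dilation sampled on an exponential scale so the kernel fits the series
        max_exp = np.log2((series_length - 1) / (length - 1))
        dilation = int(2 ** rng.uniform(0.0, max_exp))
        # Zero padding is applied to roughly half of the kernels
        padding = ((length - 1) * dilation) // 2 if rng.integers(2) else 0
        kernels.append((weights, bias, dilation, padding))
    return kernels


def apply_kernel(x, weights, bias, dilation, padding):
    """Dilated convolution of one series with one kernel -> (PPV, max)."""
    x = np.pad(np.asarray(x, dtype=float), padding)  # zero padding
    span = (len(weights) - 1) * dilation
    out = np.array([
        bias + np.dot(weights, x[i : i + span + 1 : dilation])
        for i in range(len(x) - span)
    ])
    return (out > 0).mean(), out.max()


def rocket_transform(series_batch, kernels):
    """Map each series to 2 * num_kernels features (PPV and max per kernel)."""
    feats = np.empty((len(series_batch), 2 * len(kernels)))
    for r, x in enumerate(series_batch):
        for k, (w, b, d, p) in enumerate(kernels):
            feats[r, 2 * k], feats[r, 2 * k + 1] = apply_kernel(x, w, b, d, p)
    return feats


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    kernels = generate_kernels(150, 100, rng)  # 15 s at 10 Hz; 100 kernels for speed
    X = rng.normal(size=(4, 150))  # placeholder radiation intensity sequences
    print(rocket_transform(X, kernels).shape)  # (4, 200)
```

With the 10,000 kernels used in the experiments, each sequence maps to 20,000 features, which is why practical implementations compile the convolution loop.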

For a test sample, the process of calculating the basic BPA corresponding to the radiation intensity sequence based on ROCKET is as follows:

4.2.1. Determine the initial BPA

The first step is to establish a basic recognition framework, use the classifiers' recognition results for the six-dimensional features to construct an initial BPA, and assign evidence to each target category.

Assuming that there are $N$ categories of space targets, the initial recognition framework can be constructed as $\Theta=\{\theta_1,\theta_2,\dots,\theta_N\}$. The radiation intensity signals of all space targets are divided into a training set and a test set. ROCKET + Ridge is trained on the training set, after which the denoised test set is input to the algorithm to obtain its accuracy $\alpha_i$. When the infrared radiation intensity signal of a test sample is input to the algorithm, the probability value for each category is $m_i(\theta_j)$, $j=1,2,\dots,N$, which is the BPA value of this feature.

Therefore, the overall initial BPA matrix $\mathbf{M}$ for all features is as follows:

$$\mathbf{M}=\begin{bmatrix} m_1(\theta_1) & m_1(\theta_2) & \cdots & m_1(\theta_N)\\ m_2(\theta_1) & m_2(\theta_2) & \cdots & m_2(\theta_N)\\ \vdots & \vdots & \ddots & \vdots\\ m_6(\theta_1) & m_6(\theta_2) & \cdots & m_6(\theta_N) \end{bmatrix}$$

4.2.2. Information fusion

This step modifies the initial BPA, applies discounting, and fuses the evidence, so that the differences between the probability values are amplified, which helps make a category judgment.

As described above, the pieces of evidence can conflict with one another, which can lead to fusion results that contradict the facts. Since the BPA values of a feature sum to 1, the average BPA of each of the $N$ categories is $1/N$. In the extreme case where a feature assigns a BPA of $1/N$ to every category, the feature is not credible and fails to recognize the target. Therefore, $1/N$ can be used as the threshold for scaling the BPA values. Here, we introduce a contraction-expansion function that improves the DS algorithm by modifying the BPA values.

When the BPA value of a feature for a certain category is less than $1/N$, the probability that the test sample belongs to this category is considered small, so the BPA value is compressed. When the BPA value is greater than $1/N$, this probability is considered large, so the BPA value is enlarged. The modified BPA values $m'_i(\theta_j)$ are then normalized as follows:

$$\hat{m}_i(\theta_j)=\frac{m'_i(\theta_j)}{\sum_{k=1}^{N} m'_i(\theta_k)},\quad j=1,2,\dots,N$$

After scaling, the accuracy of each recognition algorithm is used to weight the BPA of each category, and the algorithm uncertainty $1-\alpha_i$ is assigned to the whole recognition framework $\Theta$ for that feature source. For each feature, the calculation is:

$$m_i^{*}(\theta_j)=\alpha_i\,\hat{m}_i(\theta_j),\quad j=1,2,\dots,N;\qquad m_i^{*}(\Theta)=1-\alpha_i$$

where $\hat{m}_i(\theta_j)$ is the normalized BPA of the $i$th feature, $\alpha_i$ denotes the accuracy of the $i$th classifier, and $m_i^{*}(\Theta)$ denotes the probability that the recognition result belongs to all categories, i.e., the category to which the test sample belongs cannot be distinguished.
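The modification and discounting steps can be sketched as follows. The contraction-expansion function itself (Formula (41)) is not reproduced in this section, so the sketch substitutes a hypothetical power-law function $m' = m\,(m/\tau)^{\gamma}$ with $\tau = 1/N$, which shrinks values below $\tau$ and enlarges values above it; only the normalization and the accuracy-based discounting follow the text directly. The function names, `gamma`, and the example accuracies are illustrative.

```python
import numpy as np


def contract_expand(m, gamma=1.0):
    """Hypothetical contraction-expansion: m' = m * (m / tau) ** gamma, tau = 1/N.

    Values below tau are shrunk and values above tau are enlarged. This is an
    illustrative stand-in, NOT the paper's Formula (41).
    """
    m = np.asarray(m, dtype=float)
    tau = 1.0 / m.shape[-1]  # N = number of categories (last axis)
    return m * (m / tau) ** gamma


def normalize(m):
    """Rescale each feature's modified BPA so that it sums to 1 over categories."""
    return m / m.sum(axis=-1, keepdims=True)


def discount(m_hat, accuracy):
    """Weight feature i's BPA by its classifier accuracy alpha_i.

    Returns (singletons, theta): singletons[i, j] = alpha_i * m_hat[i, j] and
    theta[i] = 1 - alpha_i, the mass assigned to the whole frame Theta.
    """
    alpha = np.asarray(accuracy, dtype=float)[:, None]
    return alpha * m_hat, 1.0 - alpha[:, 0]


if __name__ == "__main__":
    m = np.array([[0.5, 0.2, 0.1, 0.1, 0.1],   # a confident feature
                  [0.2, 0.2, 0.2, 0.2, 0.2]])  # an uninformative feature
    m_hat = normalize(contract_expand(m))  # first row: 0.5 grows to 0.78125
    singletons, theta = discount(m_hat, [0.9, 0.6])  # hypothetical accuracies
    print(singletons.sum(axis=1) + theta)  # each row still sums to 1
```

Note that a uniform BPA (every value equal to $1/N$) is left unchanged by any function of this kind, which matches the text's view of such a feature as uninformative.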

4.2.3. Category result output

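This step makes the target category judgment based on the results of evidence fusion. The modified BPA values obtained above are fused using Equation (32), giving the fused probabilities $m(\theta_j)$, and the decision method based on basic probability assignment is applied as follows. Suppose that $A_1, A_2 \in \Theta$ satisfy

$$m(A_1)=\max\{m(\theta_j),\ \theta_j\in\Theta\},\qquad m(A_2)=\max\{m(\theta_j),\ \theta_j\in\Theta,\ \theta_j\neq A_1\}.$$

If $A_1$ and $A_2$ satisfy the following conditions:

$$\begin{cases} m(A_1)-m(A_2)>\varepsilon_1\\ m(\Theta)<\varepsilon_2\\ m(A_1)>m(\Theta) \end{cases}$$

where $\varepsilon_1$ and $\varepsilon_2$ are the thresholds, then the recognition result of the target is type $A_1$.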
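Equation (32) is not reproduced in this section; assuming it is the standard Dempster combination rule, it can be specialized to BPAs whose focal elements are the single categories plus the whole frame Θ, which is exactly the structure produced by the discounting step. The sketch below implements that special case and the decision rule with the thresholds 0 and 0.10 used in the experiments; the function names are hypothetical.

```python
import numpy as np


def dempster_combine(s1, t1, s2, t2):
    """Dempster's rule for BPAs with focal elements {theta_j} and Theta.

    s1, s2: singleton masses (length N); t1, t2: masses on the whole frame Theta.
    """
    s1, s2 = np.asarray(s1, dtype=float), np.asarray(s2, dtype=float)
    # Products whose intersection is {theta_j}: {j}&{j}, {j}&Theta, Theta&{j}
    agree = s1 * s2 + s1 * t2 + t1 * s2
    # Conflict K: products {theta_j}&{theta_k} with j != k (empty intersection)
    conflict = s1.sum() * s2.sum() - (s1 * s2).sum()
    if np.isclose(conflict, 1.0):
        raise ValueError("total conflict: Dempster's rule is undefined")
    return agree / (1.0 - conflict), t1 * t2 / (1.0 - conflict)


def fuse_all(singletons, thetas):
    """Fuse the discounted BPAs of all features one after another."""
    s, t = singletons[0], thetas[0]
    for s_next, t_next in zip(singletons[1:], thetas[1:]):
        s, t = dempster_combine(s, t, s_next, t_next)
    return s, t


def decide(s, t, eps1=0.0, eps2=0.10):
    """BPA-based decision rule; returns the category index or None if undecided."""
    order = np.argsort(s)[::-1]
    a1, a2 = order[0], order[1]  # largest and second-largest singleton masses
    if s[a1] - s[a2] > eps1 and t < eps2 and s[a1] > t:
        return int(a1)
    return None


if __name__ == "__main__":
    s, t = fuse_all([np.array([0.6, 0.2, 0.1]), np.array([0.5, 0.3, 0.1])], [0.1, 0.1])
    print(s, t, decide(s, t))  # category 0 is selected
```

Because Dempster's rule is commutative and associative, the order in which the six feature BPAs are fused does not change the result.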

5. Experimental Results

The purpose of this section is to verify, through multiple experiments, the necessity of multidimensional feature decision-level fusion and the performance of the proposed algorithm. First, the ranges of the space target attribute values and the flight scene parameters used in the experiments are described in detail, and the space target dataset is built from the infrared radiation model and the target imaging model presented earlier. Subsequently, the recognition performance of multidimensional feature decision-level fusion is compared with that of single features. Then, the performance of the proposed algorithm and the comparison algorithms under different SNRs and observation time lengths is discussed.

5.1. Experimental Parameter Setting

For ROCKET, we use the default parameters from [43]: the number of kernels is 10,000, which produces 20,000 features for each infrared radiation sequence. The kernel function of the SVM is RBF, the kernel sigma is 0.5, and the penalty factor is 1. The LSTM network has five layers: an input layer, an LSTM layer with 200 hidden units, a fully connected layer, a softmax layer, and a classification layer, which outputs the probability of each category. In the training stage, the Adam optimizer is used with a learning rate of 0.001, the maximum number of epochs is 120, and the loss function is cross-entropy. In the decision rule, the thresholds $\varepsilon_1$ and $\varepsilon_2$ are set to 0 and 0.10, respectively.

5.2. Flight Scene and Space Target Property Setting

In Section 2, the infrared radiation intensity model and the imaging model of the space target were established in detail, and the main factors affecting the variation of infrared radiation intensity were analyzed. In this subsection, the corresponding parameters of the two models are set. For realism, we simulate the infrared radiation image data of five types of space targets in six flight scenarios. The flight scenario environment, the flight time, and the starting and landing positions of the targets are listed in Table 1. Although the targets in Scenes 1–3 have the same starting and landing positions, their flight paths differ from scene to scene. The target flight path and the motion state of the observation satellite are the same in Scenes 5 and 6; the difference is the lighting environment. Three lighting conditions are set for the targets in flight: full sunlight, full shadow, and sunlight for the first half of the flight time followed by shadow for the remainder.

The bands of the two infrared detectors are 8–12 μm and 6–7 μm, respectively, the frame frequency is 10 Hz, and the LOS of the detectors always points to the centroid of the real (destructive) target. The properties of each target are listed in Table 2. The targets have four shapes: flat-base cone, ball-base cone, cone–cylinder, and cylinder. The target categories are the real target (flat-base cone), master cabin (cone–cylinder), light decoys (ball-base cone and cylinder), and heavy decoy (cone–cylinder). Each flight scene contains 100 samples, giving 600 samples over all scenes, with the five targets in a 1:1:1:1:1 ratio. The infrared images observed from 85 s to 100 s are used for recognition.

It should be noted that the infrared radiation intensity extracted from the images can be disturbed by various factors, such as non-uniformity of the sensor image, residual error in extracting the target point coordinates, and measurement distance error. To improve the realism of the data, these factors are usually modeled as Gaussian white noise.

Figure 5a shows the normalized infrared radiation intensity of the five targets under ideal conditions. The radiation intensity exhibits long-term variation together with local fluctuations, which result from micromotion. The master cabin and light decoy 1 show relatively small periodic variations, while the real target, light decoy 2, and heavy decoy show large periodic variations.

Figure 5b shows the normalized radiation intensity signal extracted under noise interference (SNR = 10). The detailed information in the target radiation signal is seriously disturbed by noise, which may sharply degrade target recognition. To verify the performance of the proposed algorithm under different SNRs, 70% of the original data are randomly assigned to the training set and 30% to the test set, and noise of different levels is added.
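The noise-injection and data-split step can be sketched as follows. The text does not state how the SNR is defined, so the sketch assumes the common power-ratio definition in decibels, SNR = 10 log10(P_signal / P_noise), with zero-mean Gaussian white noise; if a linear or amplitude ratio is intended instead, `snr_db` must be converted accordingly. The function names are hypothetical.

```python
import numpy as np


def add_white_noise(signal, snr_db, rng):
    """Add zero-mean Gaussian white noise at the requested SNR.

    Assumption: SNR = 10 * log10(signal power / noise power), in dB.
    """
    signal = np.asarray(signal, dtype=float)
    p_signal = np.mean(signal ** 2)
    p_noise = p_signal / 10 ** (snr_db / 10)
    return signal + rng.normal(0.0, np.sqrt(p_noise), signal.shape)


def split_70_30(samples, labels, rng):
    """Randomly assign 70% of the samples to training and 30% to testing.

    samples, labels: NumPy arrays indexed along their first axis.
    """
    idx = rng.permutation(len(samples))
    n_train = int(round(0.7 * len(samples)))
    tr, te = idx[:n_train], idx[n_train:]
    return samples[tr], labels[tr], samples[te], labels[te]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.random((600, 150))  # placeholder: 600 sequences of 15 s at 10 Hz
    y = np.repeat(np.arange(5), 120)  # five target classes in a 1:1:1:1:1 ratio
    X_tr, y_tr, X_te, y_te = split_70_30(X, y, rng)
    X_te_noisy = np.array([add_white_noise(x, 10.0, rng) for x in X_te])
    print(X_tr.shape, X_te_noisy.shape)  # (420, 150) (180, 150)
```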

Figure 6 shows the images of the five targets in the six flight scenarios. The image size is 1024 × 1024 pixels, and the line of sight of the detector is assumed to always point to the real target, which therefore stays at the center pixel of the image. The other targets follow their set trajectories with a separation velocity relative to the real target, so each of them moves outward from the image center; the velocities of the individual targets clearly differ.

5.3. Performance Comparison with the Single Feature

Since each feature dimension can be used for recognition on its own, we first calculate the recognition accuracy of each single feature under different noise levels, taking an observation time of 15 s as an example. In addition, to compare the effect of fusing different feature dimensions, the accuracies of the proposed algorithm when fusing four features (temperature, emissivity–area product, period, and velocity) and when fusing all features are also calculated, as shown in Table 3.

For single-feature recognition, the accuracies of the long-wave and medium–long-wave radiation intensities are higher than those of the other four features at every noise level; in particular, when SNR > 10, the accuracy of radiation intensity exceeds 85%. The accuracies of temperature, emissivity–area product, and period differ only slightly from one another. Surprisingly, the accuracy of the velocity feature is poor. We speculate that this is because the velocities of the space flight targets differ only slightly, which degrades the recognition algorithm during this observation process. The table also shows that fusing six-dimensional features yields higher accuracy than fusing four-dimensional features. For example, for SNR = 5, the accuracy of the proposed algorithm with six fused features is 93.33%, compared with 83.89% for the four-feature fusion. In terms of the contribution of individual features to recognition accuracy, radiation intensity contributes the most, velocity contributes the least, and the remaining features contribute similarly. Although feature fusion is only slightly more accurate than radiation intensity alone at high SNR, it has a clear advantage at low SNR. Moreover, although each of the four features (temperature, emissivity–area product, period, and velocity) alone yields lower accuracy, fusing them still outperforms radiation intensity alone at low SNR. This shows that decision-level fusion of multidimensional features is necessary for space target recognition.

5.4. Comparison with Other Baseline Methods

We compare the proposed method with five classical baseline algorithms: the traditional DST [31], the Murphy method [34], the Gao method [56], the Zhang method [24], and the Zhou method [29]. The Murphy method averages the BPAs generated by multiple features and fuses the averaged BPA several times to obtain the final recognition result. The Gao method introduces a new cross-entropy-based similarity criterion to modify the BPAs of multiple features and fuses the modified values for decision recognition. The Zhang and Zhou methods fuse multidimensional features based on fuzzy sets and Bayesian theory, respectively; because they differ from the DS evidence theory used in this paper, they provide broader reference value as comparison methods. Note that the initial BPAs of the first three baseline algorithms are also obtained from the ensemble classifier of this paper. To illustrate the recognition process of the proposed method, a test sample is used as an example, and Table 4 shows the initial BPA values obtained from the ensemble classifier.

Since there are 5 target categories, the threshold of the contraction-expansion function is 1/5 = 0.2. The modified BPA is calculated according to Formula (41) and then weighted by the classifier accuracies to obtain the final BPA values. Finally, the fusion results for this sample are obtained according to the DS fusion and decision rules, as shown in Table 5, which also lists the fusion results of the comparison algorithms. In this case, both the traditional Dempster's rule and Murphy's method make wrong decisions. Gao's method improves the decision to some degree and makes the correct judgment. The result of the proposed method is more discriminative, which makes it easier for the control center to judge the target category reasonably. Zhang's and Zhou's methods also make correct judgments, but their fusion results are still lower than those of the proposed method.

We compare the recognition performance of these algorithms under different observation times (L = 5 s, 10 s, 15 s) and different noise levels (SNR = 5, 10, 15, 20, 25, 30). The results are shown in Figure 7.

These results show that, for a fixed observation time, the recognition accuracy of each algorithm improves to varying degrees as the SNR increases and then levels off. Once this plateau is reached, further increasing the SNR has no obvious effect on the final recognition performance, which provides a reference for designing infrared detection systems for space targets. Additionally, although the signal of the space target is preprocessed, residual noise still distorts the extracted features and thus degrades the accuracy of space target recognition. The proposed algorithm outperforms the other five baseline algorithms in most cases. For example, when the observation time is 15 s and the SNR is 5, the recognition accuracy of the proposed algorithm is 93.33%, compared with 81.11% for the traditional DST algorithm. In summary, the proposed algorithm achieves higher recognition accuracy than the other algorithms and a degree of robustness, especially at low SNR.

The observation of a space target by an infrared detector is dynamic, and the number of collected frames increases with time, so the effect of observation time on recognition accuracy must be analyzed. Comparing the three subfigures of Figure 7, the recognition accuracy of all six algorithms improves as the observation time increases, and the proposed method outperforms the others. This is because a longer observation time yields more information about the target, which improves recognition performance. Note that when the observation time (L = 5 s) is shorter than the micromotion period of some targets, a complete cycle of the target signal cannot be collected, which leads to a higher recognition error rate. In this case, the observation time must be increased so that the detector can acquire complete information about the target.

5.5. Comparison of ROC Curves

To assess the recognition performance of the proposed method more comprehensively, Figure 8 illustrates the receiver operating characteristic (ROC) curves and the area under the ROC curve (AUC) of the six methods for an observation time of 15 s and an SNR of 5. A conventional ROC curve shows how the performance of a binary classifier varies with its decision threshold; it is created by plotting the true positive rate (TPR) against the false positive rate (FPR) at different thresholds.

Since space target recognition is a multi-class problem, the micro-averaged ROC (micro-ROC) is used to evaluate the algorithms in this paper. AUC is defined as the area under the micro-ROC curve and can be interpreted as the probability that, for a randomly selected positive sample and negative sample, the classifier assigns a higher confidence to the positive sample. The AUC value generally lies between 0.5 and 1, and a larger AUC indicates a better recognition model. The results show that the proposed algorithm has the highest AUC, which confirms its superior performance.
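The micro-averaged AUC described above can be computed directly from its probabilistic interpretation. The sketch below (hypothetical function name; NumPy only) pools all one-vs-rest (sample, category) pairs and counts how often a positive pair receives a higher confidence than a negative one, counting ties as one half; this equals the area under the trapezoidal micro-ROC curve.

```python
import numpy as np


def micro_auc(y_true, scores):
    """Micro-averaged one-vs-rest AUC via the rank (Mann-Whitney) formulation.

    y_true: integer class labels, shape (n,); scores: fused confidences, shape (n, K).
    """
    scores = np.asarray(scores, dtype=float)
    n, k = scores.shape
    onehot = np.zeros((n, k), dtype=bool)
    onehot[np.arange(n), np.asarray(y_true)] = True
    pos = scores[onehot]   # confidence of each sample for its true category
    neg = scores[~onehot]  # confidences for all wrong categories
    # Probability that a random positive outranks a random negative (ties = 0.5)
    greater = (pos[:, None] > neg[None, :]).mean()
    ties = (pos[:, None] == neg[None, :]).mean()
    return greater + 0.5 * ties


if __name__ == "__main__":
    y = [0, 1]
    fused = [[0.8, 0.2], [0.6, 0.4]]  # toy fused probabilities
    print(micro_auc(y, fused))  # 0.75
```

The pairwise comparison uses memory proportional to the number of positive times negative pairs, which is modest for a test set of a few hundred samples and five categories.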

5.6. Comparison of Different Frequencies

Since the signals sampled by the infrared detector are discrete and the infrared radiation intensity of the target is periodic, the sampling frequency of the detector affects the sampled radiation intensity waveform.

Figure 9 illustrates the recognition accuracy of space targets at different sampling frequencies when the SNR is 10. Recognition performance is lowest at 1 Hz and second-lowest at 2 Hz. This is because some targets have fast micromotion, so at these sampling frequencies the Nyquist sampling criterion is not met: the sampled signal loses important local information about the target and differs from its real radiation intensity. In addition, this leads to a larger error in the period extracted from the sampled signal. Together, these factors cause the poor recognition accuracy at low sampling frequencies. As the sampling frequency increases, the sampled signal carries more information and the recognition accuracy rises gradually. At 10 Hz, the target recognition accuracy is the highest for the same noise level. Among the methods, at low frequencies (1 Hz and 2 Hz) the proposed method is the most accurate, followed by the Zhou method; at high frequencies (5 Hz and 10 Hz) the proposed method is still the most accurate, followed by the Zhang method. This verifies the effectiveness of the proposed method at different frequencies.

6. Conclusions

To address space infrared dim target recognition, a novel intelligent method that combines an ensemble classifier with an improved Dempster–Shafer evidence theory for multi-feature decision-level fusion is proposed. The method combines ROCKET, LSTM, and SVM classifiers with information fusion theory, taking full advantage of the different classifiers in information processing to generate the BPA required for the fusion decision stage. A contraction-expansion function is then defined to attenuate the conflict between the BPAs of different features: each BPA value is scaled by comparing it with a threshold determined by the number of target categories. Next, a discount operation based on classifier accuracy is applied to improve the rationality of the evidence from different features. Finally, the target categories are determined according to the decision rules. The experimental results show that the recognition accuracy of the method is significantly higher than that with a single feature, especially when the SNR of the data is low (for an observation duration of 15 s, the accuracy still exceeds 90%). This demonstrates the advantage of data fusion in improving recognition performance. For different observation times, the method also identifies target categories more accurately than existing fusion decision methods: for an observation time of 5 s and an SNR of 5, its accuracy reaches 87%, compared with 71.67%, 72.22%, and 84.44% for the comparison methods. In addition, the proposed method can be applied to space situational awareness and multi-source data fusion to provide technical support for decision-making.

In addition to space infrared technology, this method can also be extended to security monitoring, industrial automation, medical diagnosis, and other fields. However, applying it to other domains may raise certain challenges and limitations. Variations in target characteristics and background environments across fields may require adjustments and optimizations of the method, and practical considerations such as real-time performance, robustness, and computational efficiency must also be taken into account. Enhancing the algorithm's usability and applicability will be a focus of our future research.

In future work, we will further optimize the algorithm using datasets from more scenes, better exploit the velocity feature for recognition, and continue to study correction rules for the BPA function to generate more appropriate weights.