A Multi-Feature Fusion-Based Method for Crater Extraction of Airport Runways in Remote-Sensing Images

Abstract

1. Introduction

2. Methodology

2.1. Runway Extraction

2.1.1. Region of Interest Extraction

2.1.2. Feature Extraction

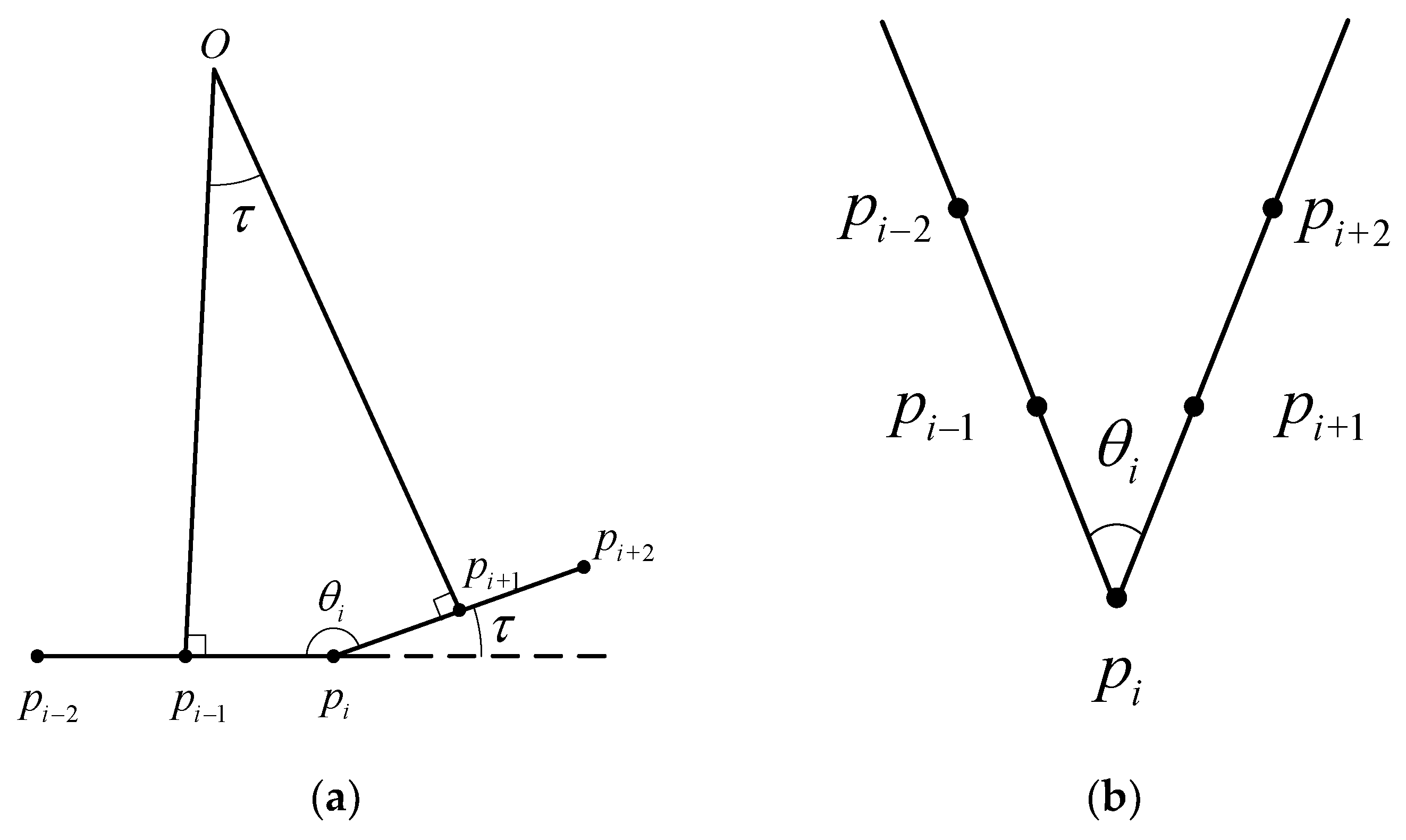

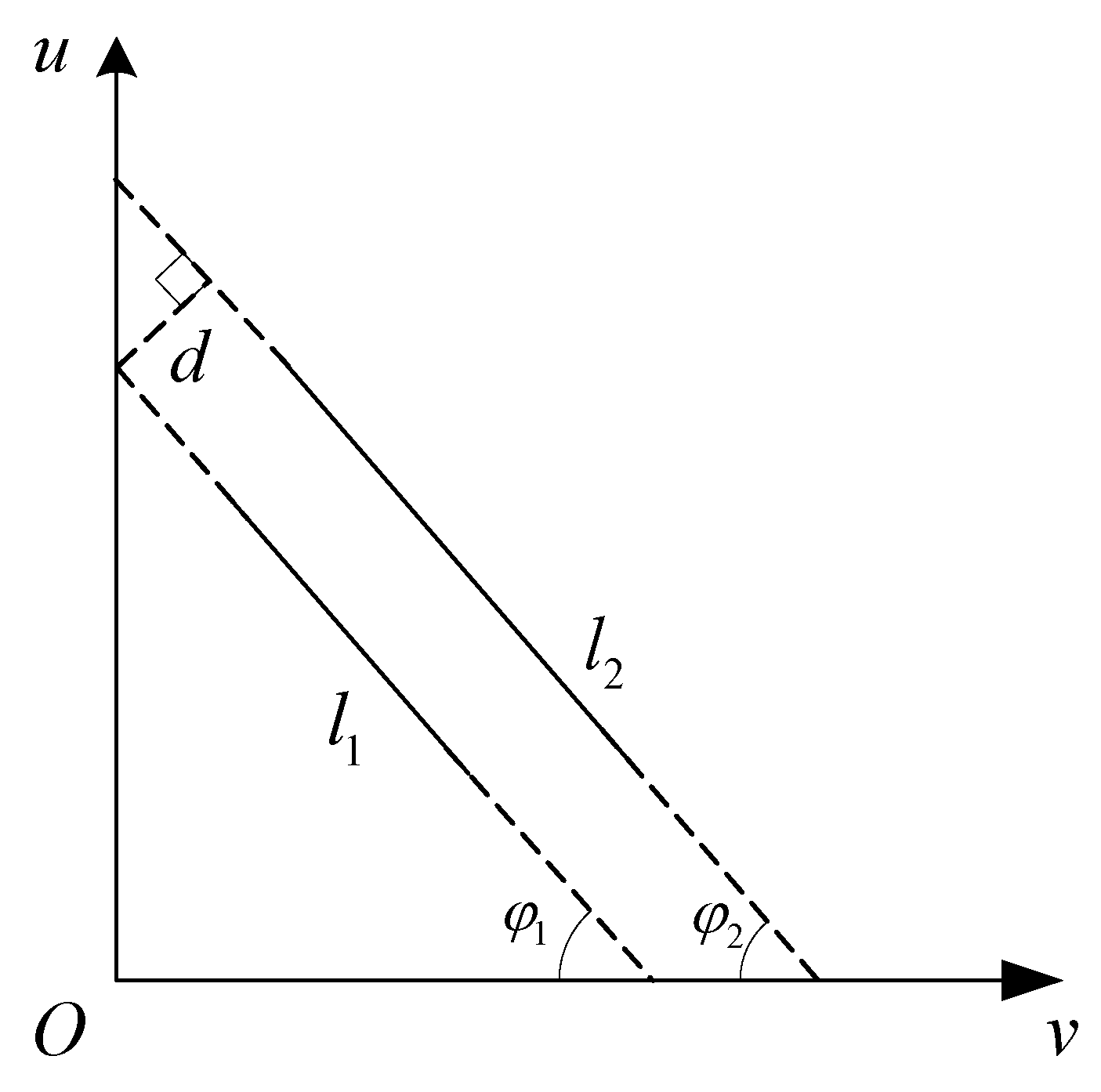

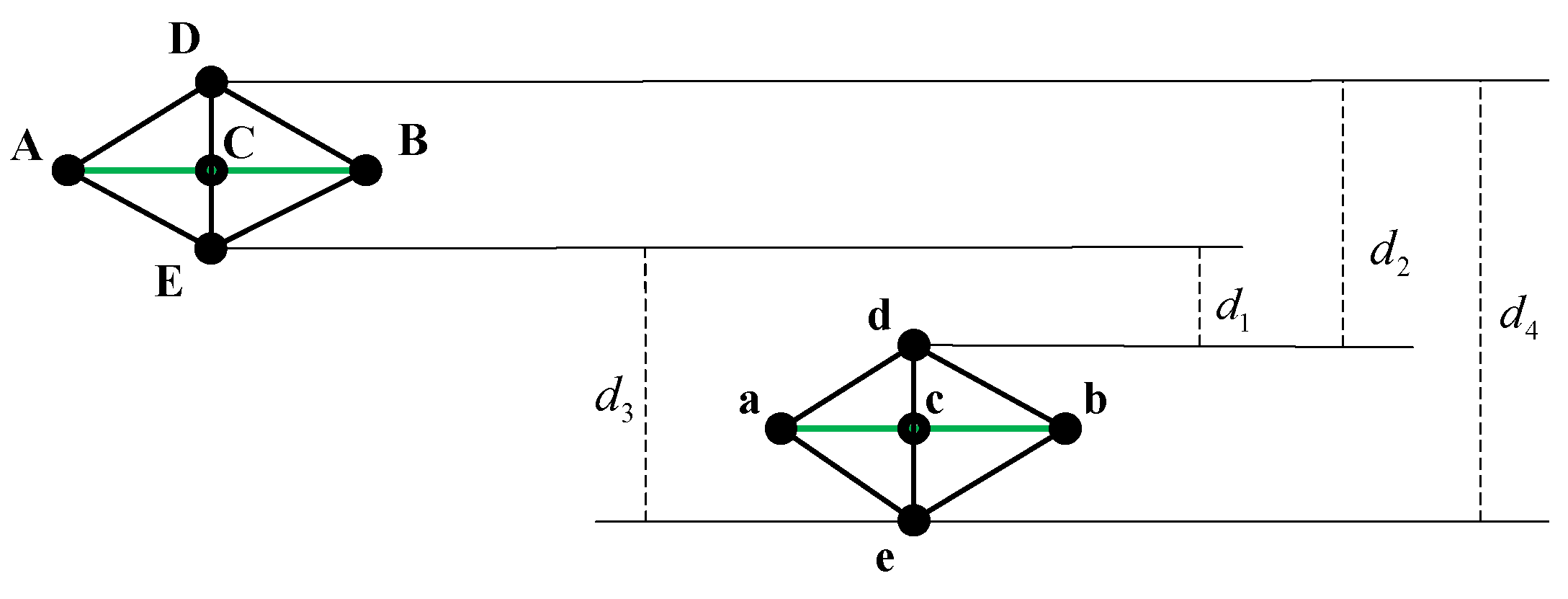

1. Model of the feature extraction

2. Extraction of runway axis

3. Extraction of runway endpoints

2.1.3. Generation and Recognition of Candidate Runway Extraction Results

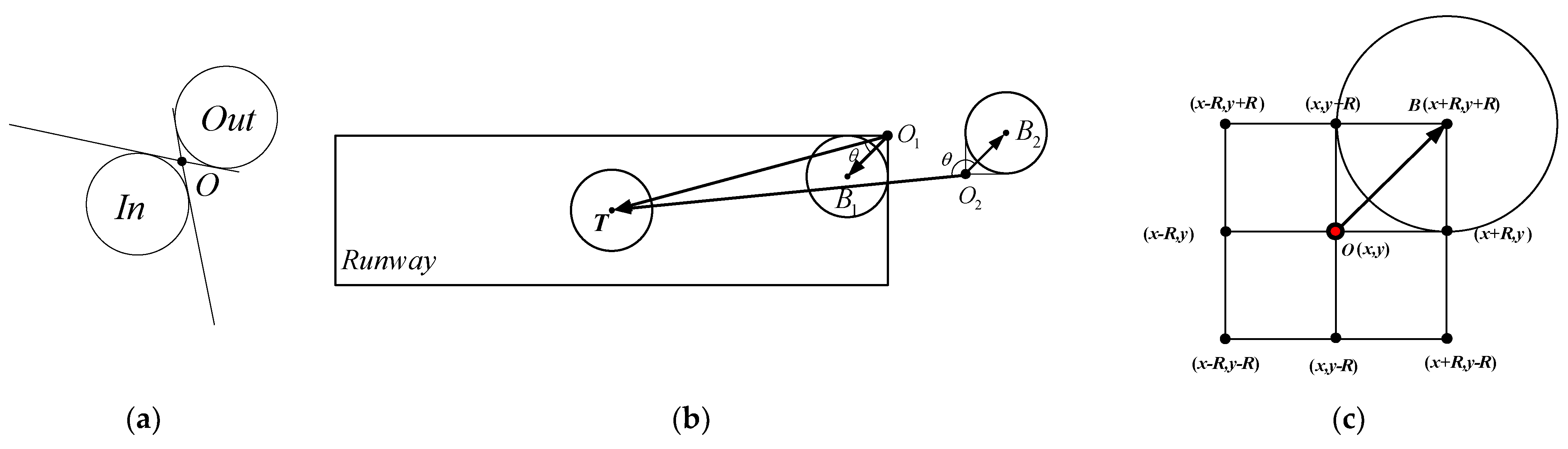

2.2. Crater Extraction

3. Results

3.1. Datasets and Parameters

3.2. Evaluation Metrics

3.3. Experimental Results and Comparison with State-of-the-Art Methods

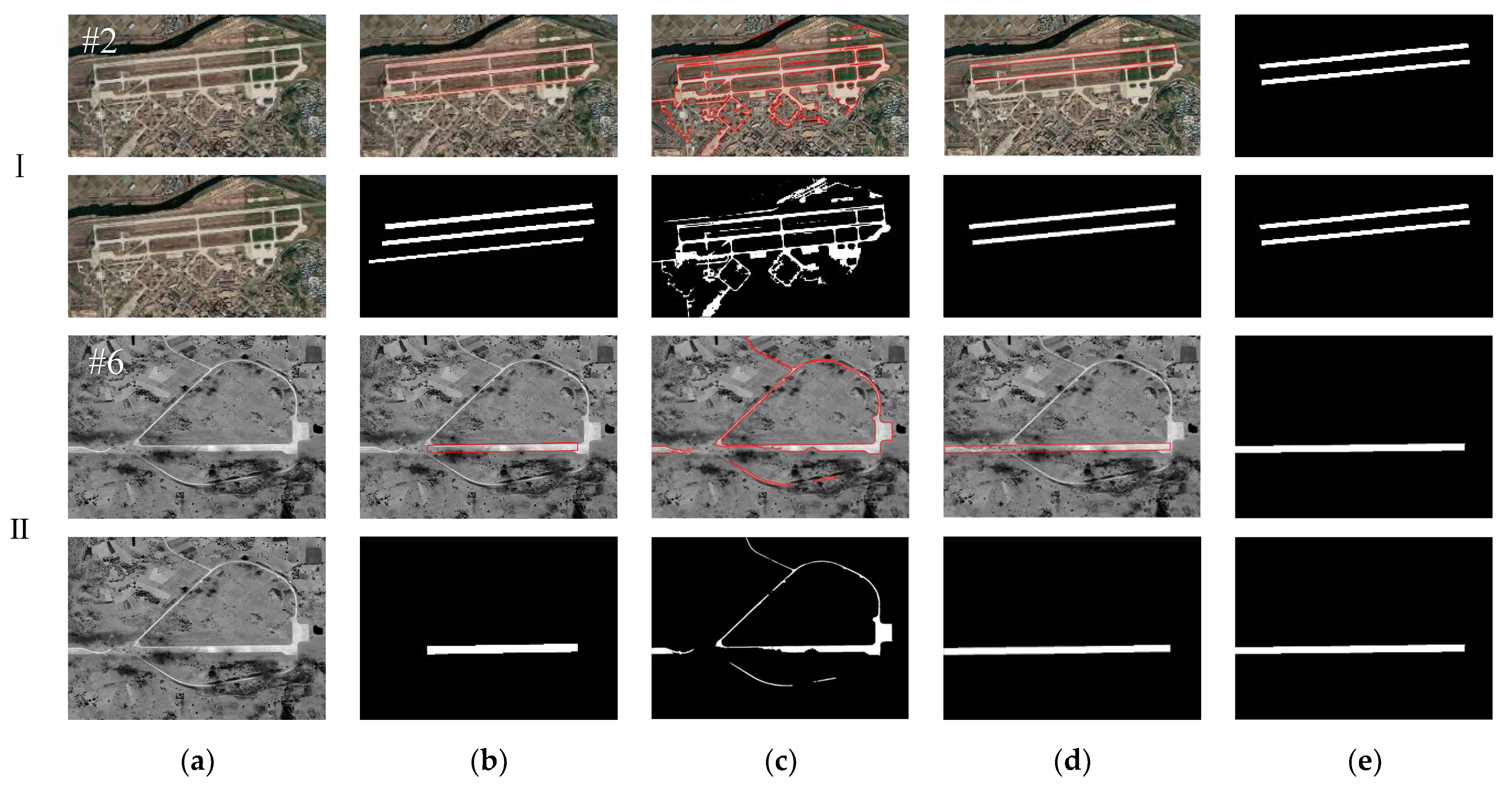

3.3.1. Experiment I

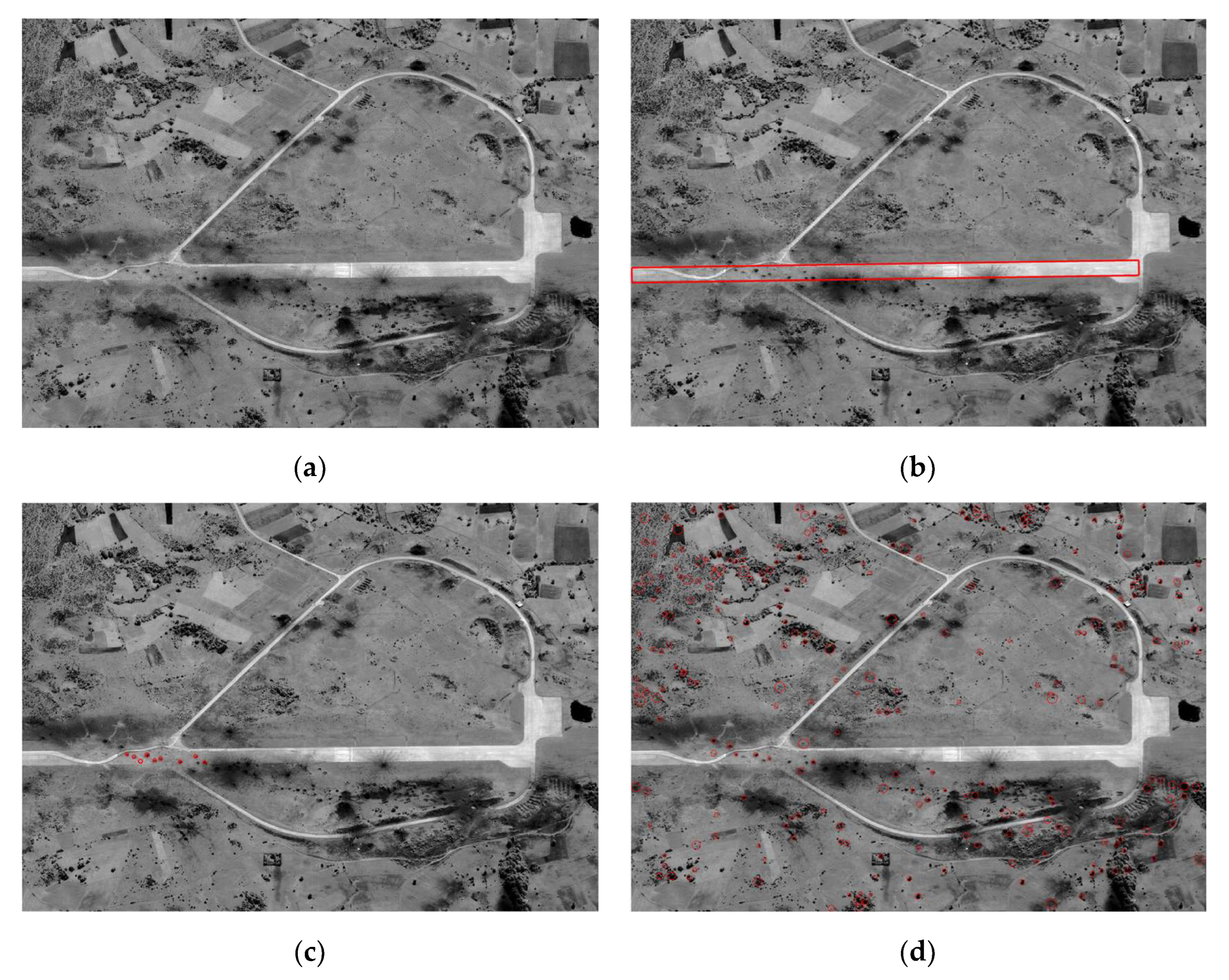

3.3.2. Experiment II

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Wei, W.; Chen, Y.; Wang, Z.; Li, S.; Chen, L.; Huang, C. Comparative study on damage effects of penetration and explosion modes on airport runway. Constr. Build. Mater. 2023, 411, 134169.

2. Al-Muhammed, M.J.; Zitar, R.A. Probability-directed random search algorithm for unconstrained optimization problem. Appl. Soft Comput. 2018, 71, 165–182.

3. Zhai, C.; Chen, X. Damage assessment of the target area of the island/reef under the attack of missile warhead. Def. Technol. 2020, 16, 18–28.

4. Liu, Z.; Xue, J.; Wang, N.; Bai, W.; Mo, Y. Intelligent Damage Assessment for Post-Earthquake Buildings Using Computer Vision and Augmented Reality. Sustainability 2023, 15, 5591.

5. Shen, Y.; Zhu, S.; Yang, T.; Chen, C.; Pan, D.; Chen, J.; Xiao, L.; Du, Q. BDANet: Multiscale Convolutional Neural Network with Cross-Directional Attention for Building Damage Assessment from Satellite Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5402114.

6. Tu, J.; Gao, F.; Sun, J.; Hussain, A.; Zhou, H. Airport Detection in SAR Images Via Salient Line Segment Detector and Edge-Oriented Region Growing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 314–326.

7. Li, N.; Cheng, L.; Ji, C.; Dongye, S.; Li, M. An Improved Framework for Airport Detection Under the Complex and Wide Background. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 9545–9555.

8. Boucek, T.; Stará, L.; Pavelka, K.; Pavelka, K.J. Monitoring of the Rehabilitation of the Historic World War II US Air Force Base in Greenland. Remote Sens. 2023, 15, 4323.

9. Suau-Sanchez, P.; Burghouwt, G.; Pallares-Barbera, M. An appraisal of the CORINE land cover database in airport catchment area analysis using a GIS approach. J. Air Transp. Manag. 2014, 34, 12–16.

10. De Luca, M.; Dell’Acqua, G. Runway surface friction characteristics assessment for Lamezia Terme airfield pavement management system. J. Air Transp. Manag. 2014, 34, 1–5.

11. He, C.; Zhang, Y.; Shi, B.; Su, X.; Xu, X.; Liao, M. Weakly supervised object extraction with iterative contour prior for remote sensing images. EURASIP J. Adv. Sig. Process. 2013, 2013, 19.

12. Wang, Y.; Song, Q.; Wang, J.; Yu, H. Airport Runway Foreign Object Debris Detection System Based on Arc-Scanning SAR Technology. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5221416.

13. Jing, Y.; Zheng, H.; Lin, C.; Zheng, W.; Dong, K.; Li, X. Foreign Object Debris Detection for Optical Imaging Sensors Based on Random Forest. Sensors 2022, 22, 2463.

14. Aytekin, Ö.; Zöngür, U.; Halici, U. Texture-Based Airport Runway Detection. IEEE Geosci. Remote Sens. Lett. 2012, 10, 471–475.

15. Ding, W.; Wu, J. An Airport Knowledge-Based Method for Accurate Change Analysis of Airport Runways in VHR Remote Sensing Images. Remote Sens. 2020, 12, 3163.

16. Wang, X.; Lv, Q.; Wang, B.; Zhang, L. Airport Detection in Remote Sensing Images: A Method Based on Saliency Map. Cogn. Neurodyn. 2013, 7, 143–154.

17. Li, Z.; Liu, Z.; Shi, W. Semiautomatic Airport Runway Extraction Using a Line-Finder-Aided Level Set Evolution. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4738–4749.

18. Zhang, L.; Wang, J.; An, Z.; Shang, Y. Runway Image Recognition Technology based on Line Feature. In Proceedings of the 2022 IEEE 2nd International Conference on Electronic Technology, Communication and Information (ICETCI), Changchun, China, 27–29 May 2022.

19. Wu, W.; Xia, R.; Xiang, W.; Hui, B.; Chang, Z.; Liu, Y.; Zhang, Y. Recognition of airport runways in FLIR images based on knowledge. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1534–1538.

20. Liu, C.; Cheng, I.; Basu, A. Real-Time Runway Detection for Infrared Aerial Image Using Synthetic Vision and an ROI Based Level Set Method. Remote Sens. 2018, 10, 1544.

21. Zhang, Q.; Zhang, L.; Shi, W.; Liu, Y. Airport Extraction via Complementary Saliency Analysis and Saliency-Oriented Active Contour Model. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1085–1089.

22. Jing, W.; Yuan, Y.; Wang, Q. Dual-Field-of-View Context Aggregation and Boundary Perception for Airport Runway Extraction. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4702412.

23. Liu, L.; Cheng, L.; Ji, C.; Jing, M.; Li, N.; Chen, H. Framework for Runway’s True Heading Extraction in Remote Sensing Images Based on Deep Learning and Semantic Constraints. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 6659–6670.

24. Amit, R.A.; Mohan, C.K. A Robust Airport Runway Detection Network Based on R-CNN Using Remote Sensing Images. IEEE Aerosp. Electron. Syst. Mag. 2021, 36, 4–20.

25. Men, Z.; Jiang, J.; Guo, X.; Chen, L.; Liu, D. Airport Runway Semantic Segmentation Based on DCNN in High Spatial Resolution Remote Sensing Images. In Proceedings of the International Conference on Geomatics in the Big Data Era (ICGBD), Guilin, China, 15–17 November 2020.

26. Chen, L.; Tan, S.; Pan, Z.; Xing, J.; Yuan, Z.; Xing, X.; Zhang, P. A New Framework for Automatic Airports Extraction from SAR Images Using Multi-Level Dual Attention Mechanism. Remote Sens. 2020, 12, 560.

27. Lin, E.; Qin, R.; Edgerton, J.; Kong, D. Crater detection from commercial satellite imagery to estimate unexploded ordnance in Cambodian agricultural land. PLoS ONE 2020, 15, e0229826.

28. Geiger, M.; Martin, D.; Kühl, N. Deep Domain Adaptation for Detecting Bomb Craters in Aerial Images. In Proceedings of the 56th Annual Hawaii International Conference on System Sciences (HICSS), Waikiki Beach, HI, USA, 3–6 January 2022.

29. Dolejš, M.; Pacina, J.; Veselý, M.; Brétt, D. Aerial Bombing Crater Identification: Exploitation of Precise Digital Terrain Models. ISPRS Int. J. Geo-Inf. 2020, 7, 713.

30. Merler, S.; Furlanello, C.; Jurman, G. Machine Learning on Historic Air Photographs for Mapping Risk of Unexploded Bombs. In Proceedings of the 2005 13th International Conference on Image Analysis and Processing (ICIAP), Cagliari, Italy, 6–8 September 2005.

31. Dolejš, M.; Samek, V.; Veselý, M.; Elznicová, J. Detecting World War II bombing relics in markedly transformed landscapes (city of Most, Czechia). Appl. Geogr. 2020, 119, 102225.

32. Kruse, C.; Wittich, D.; Rottensteiner, F.; Heipke, C. Generating impact maps from bomb craters automatically detected in aerial wartime images using marked point processes. ISPRS Open J. Photogramm. Remote Sens. 2022, 5, 100017.

33. Descombes, X.; Zerubia, J. Marked point process in image analysis. IEEE Signal Process. Mag. 2002, 19, 77–84.

34. Lacroix, V.; Vanhuysse, S. Crater Detection using CGC: A New Circle Detection Method. In Proceedings of the 4th International Conference on Pattern Recognition Applications and Methods (ICPRAM), Lisbon, Portugal, 10–12 January 2015.

35. Ding, M.; Cao, Y.; Wu, Q. Method of passive image based crater autonomous detection. Chin. J. Aeronaut. 2009, 22, 301–306.

36. Bandeira, L.; Saraiva, J.; Pina, P. Impact Crater Recognition on Mars Based on a Probability Volume Created by Template Matching. IEEE Trans. Geosci. Remote Sens. 2007, 45, 4008–4015.

37. Pedrosa, M.M.; De Azevedo, S.C.; Da Silva, E.A.; Dias, M.A. Improved automatic impact crater detection on Mars based on morphological image processing and template matching. Geomat. Nat. Hazards Risk 2017, 8, 1306–1319.

38. Yu, M.; Cui, H.; Tian, Y. A new approach based on crater detection and matching for visual navigation in planetary landing. Adv. Space Res. 2014, 53, 1810–1821.

39. Sawabe, Y.; Matsunaga, T.; Rokugawa, S. Automated detection and classification of lunar craters using multiple approaches. Adv. Space Res. 2006, 37, 21–27.

40. Yang, S.; Cai, Z. High-Resolution Feature Pyramid Network for Automatic Crater Detection on Mars. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–12.

41. Wang, S.; Fan, Z.; Li, Z.; Zhang, H.; Wei, C. An Effective Lunar Crater Recognition Algorithm Based on Convolutional Neural Network. Remote Sens. 2020, 12, 2694.

42. Tawari, A.; Verma, V.; Srivastava, P.; Jain, V.; Khanna, N. Automated Crater detection from Co-registered optical images, elevation maps and slope maps using deep learning. Planet. Space Sci. 2022, 218, 105500.

43. Zhao, Y.; Ye, H. SqUNet: An High-Performance Network for Crater Detection With DEM Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 8577–8585.

44. Jia, Y.; Liu, L.; Zhang, C. Moon Impact Crater Detection Using Nested Attention Mechanism Based UNet plus. IEEE Access 2021, 9, 44107–44116.

45. Zhao, Y.; Cong, Y.; Wang, Z.; Gong, J.; Chen, D. Damaged Airport Runway Extraction Based on Line and Corner Constraints. In Proceedings of the 2022 IEEE International Conference on Unmanned Systems (ICUS), Guangzhou, China, 28–30 October 2022.

46. Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 679–698.

47. Wang, Z.; Chen, D.; Gong, J.; Wang, C. Fast high-precision ellipse detection method. Pattern Recognit. 2021, 111, 107741.

48. Von Gioi, R.G.; Jakubowicz, J.; Morel, J.M.; Randall, G. LSD: A fast line segment detector with a false detection control. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 32, 722–732.

49. Cote, M.; Saeedi, P. Automatic rooftop extraction in nadir aerial imagery of suburban regions using corners and variational level set evolution. IEEE Trans. Geosci. Remote Sens. 2012, 51, 313–328.

50. Blob Detection Using OpenCV (Python, C++). Available online: https://www.learnopencv.com/blob-detection-using-opencv-python-c/ (accessed on 30 October 2023).

51. Chan, T.F.; Vese, L.A. Active contours without edges. IEEE Trans. Image Process. 2001, 10, 266–277.

| Test Images | Image Size (Pixels) | Similarity Threshold (Th1) | Gray Average Threshold (Th2) | Gray Difference Threshold (Th3) |
|---|---|---|---|---|
| #1 | 1024 × 768 | 0.3 | 0.6 | 1.3 |
| #2 | 1317 × 727 | 0.3 | 0.6 | 1.3 |
| #3 | 1920 × 1080 | 0.2 | 0.6 | 1.3 |
| #4 | 1920 × 1080 | 0.3 | 0.6 | 1.2 |
| #5 | 1101 × 781 | 0.2 | 0.5 | 1.3 |
| #6 | 1051 × 801 | 0.3 | 0.5 | 1.3 |
| #7 | 1122 × 840 | 0.3 | 0.5 | 1.3 |
| #8 | 1130 × 806 | 0.4 | 0.5 | 1.3 |

| Test Images | Completeness | Correctness | Quality |
|---|---|---|---|
| #1 | 0.926 | 0.790 | 0.743 |
| #2 | 0.907 | 0.931 | 0.850 |
| #3 | 0.922 | 0.817 | 0.764 |
| #4 | 0.967 | 0.829 | 0.806 |
| #5 | 0.948 | 0.930 | 0.885 |
| #6 | 0.905 | 0.920 | 0.838 |
| #7 | 0.778 | 0.782 | 0.639 |
| #8 | 0.964 | 0.786 | 0.764 |
| Average | 0.915 | 0.848 | 0.786 |
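The Quality column appears consistent with the quality measure commonly used for road and runway extraction, Quality = TP/(TP + FP + FN), with Completeness = TP/(TP + FN) and Correctness = TP/(TP + FP). These definitions are an assumption here, since the text of the Evaluation Metrics section is not reproduced. Under them, 1/Quality = 1/Completeness + 1/Correctness − 1, so Quality can be recomputed from the other two columns. The sketch below does this for test images #1 to #8; the helper `quality` and the dictionary `rows` are illustrative names, not taken from the article.

```python
# Minimal sketch. Assumed (standard) definitions, not confirmed by the text:
#   Completeness = TP / (TP + FN), Correctness = TP / (TP + FP),
#   Quality = TP / (TP + FP + FN)  =>  1/Q = 1/C + 1/Cr - 1


def quality(completeness: float, correctness: float) -> float:
    """Recompute Quality from Completeness (C) and Correctness (Cr)."""
    c, cr = completeness, correctness
    return c * cr / (c + cr - c * cr)


# (Completeness, Correctness, reported Quality) per test image, from the table
rows = {
    "#1": (0.926, 0.790, 0.743),
    "#2": (0.907, 0.931, 0.850),
    "#3": (0.922, 0.817, 0.764),
    "#4": (0.967, 0.829, 0.806),
    "#5": (0.948, 0.930, 0.885),
    "#6": (0.905, 0.920, 0.838),
    "#7": (0.778, 0.782, 0.639),
    "#8": (0.964, 0.786, 0.764),
}

for image, (c, cr, reported) in rows.items():
    print(f"{image}: recomputed {quality(c, cr):.3f}, reported {reported:.3f}")

# Per-column means over the eight images, to compare with the "Average" row
mean_c, mean_cr, mean_q = (sum(col) / len(rows) for col in zip(*rows.values()))
print(f"Average: C={mean_c:.4f}, Cr={mean_cr:.4f}, Q={mean_q:.4f}")
```

The recomputed values agree with the reported ones to within 0.001; image #6 comes out at 0.839 against the reported 0.838, which is expected because the inputs are already rounded to three decimals. The same relation also reproduces the Quality rows reported for Methods [15] and [17] in the comparison table below.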

| Indices | Images | Method [15] | Method [17] | Ours |
|---|---|---|---|---|
| Completeness | #2 | 0.916 | 0.793 | 0.907 |
| | #6 | 0.638 | 0.594 | 0.905 |
| Correctness | #2 | 0.645 | 0.280 | 0.931 |
| | #6 | 0.886 | 0.424 | 0.920 |
| Quality | #2 | 0.609 | 0.261 | 0.850 |
| | #6 | 0.590 | 0.329 | 0.838 |
| Running time (s) | #2 | 13.99 | 25.81 | 2.11 |
| | #6 | 5.28 | 22.98 | 1.23 |
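The running-time figures can be expressed as speed-up factors by dividing each compared method's time by that of the proposed method. This is plain arithmetic on the reported seconds; the table does not state the hardware or implementation used for each method, so the ratios should be read with that caveat. The names `times` and `speedups` are illustrative.

```python
# Running times in seconds, copied from the "Running time (s)" rows of the table
times = {
    "#2": {"Method [15]": 13.99, "Method [17]": 25.81, "Ours": 2.11},
    "#6": {"Method [15]": 5.28, "Method [17]": 22.98, "Ours": 1.23},
}


def speedups(row: dict, baseline: str = "Ours") -> dict:
    """Ratio of each method's running time to the baseline method's time."""
    base = row[baseline]
    return {method: t / base for method, t in row.items() if method != baseline}


for image, row in times.items():
    ratios = speedups(row)
    summary = ", ".join(f"{m}: {r:.1f}x" for m, r in ratios.items())
    print(f"{image}: {summary}")
```

On image #2 the ratios are about 6.6 (Method [15]) and 12.2 (Method [17]); on image #6 they are about 4.3 and 18.7.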

| Test Images | Recall (R) | Precision (P) | F1-Score |
|---|---|---|---|
| #5 | 0.857 | 0.960 | 0.906 |
| #6 | 0.875 | 0.778 | 0.824 |
| Average | 0.866 | 0.869 | 0.865 |

| Test Images | Recall (R) | Precision (P) | F1-Score |
|---|---|---|---|
| #5 | 0.357 | 0.042 | 0.075 |
| #6 | 0.444 | 0.015 | 0.029 |
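The F1-Score columns in the two Recall/Precision tables above are consistent with the usual harmonic mean of precision and recall, F1 = 2PR/(P + R), and the Average row of the first table matches a plain mean over images #5 and #6. The sketch below recomputes both under that assumed definition; `f1_score` here is a local helper written for illustration, not a library function.

```python
# Minimal sketch, assuming F1 = 2PR / (P + R) (definition not shown in the text)


def f1_score(recall: float, precision: float) -> float:
    """Harmonic mean of precision and recall; 0 when both are 0."""
    if recall + precision == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (Recall, Precision, reported F1-Score), copied from the two tables above
first_table = {"#5": (0.857, 0.960, 0.906), "#6": (0.875, 0.778, 0.824)}
second_table = {"#5": (0.357, 0.042, 0.075), "#6": (0.444, 0.015, 0.029)}

for label, table in (("first", first_table), ("second", second_table)):
    for image, (r, p, reported) in table.items():
        print(f"{label} table {image}: F1 recomputed {f1_score(r, p):.3f}, reported {reported:.3f}")

# Plain per-column mean over the two images, for the first table's "Average" row
avg_r, avg_p, avg_f1 = (sum(col) / len(first_table) for col in zip(*first_table.values()))
print(f"Average: R={avg_r:.3f}, P={avg_p:.3f}, F1={avg_f1:.3f}")
```

All four recomputed F1 values round to the reported ones, and the column means give R = 0.866, P = 0.869 and F1 = 0.865, matching the Average row.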

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhao, Y.; Chen, D.; Gong, J. A Multi-Feature Fusion-Based Method for Crater Extraction of Airport Runways in Remote-Sensing Images. Remote Sens. 2024, 16, 573. https://doi.org/10.3390/rs16030573

Zhao Y, Chen D, Gong J. A Multi-Feature Fusion-Based Method for Crater Extraction of Airport Runways in Remote-Sensing Images. Remote Sensing. 2024; 16(3):573. https://doi.org/10.3390/rs16030573

Chicago/Turabian Style: Zhao, Yalun, Derong Chen, and Jiulu Gong. 2024. "A Multi-Feature Fusion-Based Method for Crater Extraction of Airport Runways in Remote-Sensing Images" Remote Sensing 16, no. 3: 573. https://doi.org/10.3390/rs16030573

APA Style: Zhao, Y., Chen, D., & Gong, J. (2024). A Multi-Feature Fusion-Based Method for Crater Extraction of Airport Runways in Remote-Sensing Images. Remote Sensing, 16(3), 573. https://doi.org/10.3390/rs16030573