Improving Unsupervised Object-Based Change Detection via Hierarchical Multi-Scale Binary Partition Tree Segmentation: A Case Study in the Yellow River Source Region

Abstract

1. Introduction

- (1) Scale issue of analysis units: Analysis units that are too small may introduce more noise, whereas units that are too large may overlook small-scale changes in ecosystems. Although deep learning excels at semantic segmentation, it may misinterpret subtle changes during natural ecosystem succession as noise, which hinders accurate detection of change boundaries.

- (2) Complexity and underutilization of change features: Non-feature-level CD methods based on single-temporal segmentation do not exploit the potential of object-level features for describing change. Moreover, in scenes with diverse types of change, complex features introduce accuracy-assessment biases and the curse of dimensionality, and both limit the capability of existing unsupervised CD methods.

- (1) Hierarchical multi-scale segmentation with a BPT (Binary Partition Tree) bi-directional strategy: This segmentation method bridges PBCD and OBCD. It generates image objects iteratively within a hierarchical framework. In doing so, it combines the precise small-change detection of PBCD with the object completeness of OBCD when extracting changes from bi-temporal images. In addition, the bi-directional BPT strategy adapts to changed objects of diverse sizes, which overcomes the limitations of single-scale approaches.

- (2) Comprehensive feature space and improved OBCD-IRMAD integration: We construct a richer feature space that includes spectral statistics, spectral indices and texture information. The approach couples a feature selection method with an improved OBCD-IRMAD algorithm suited to high-dimensional data. This integrated framework mitigates two typical problems of binary change detection, feature underutilization and high dimensionality, with the aim of improving both accuracy and efficiency.

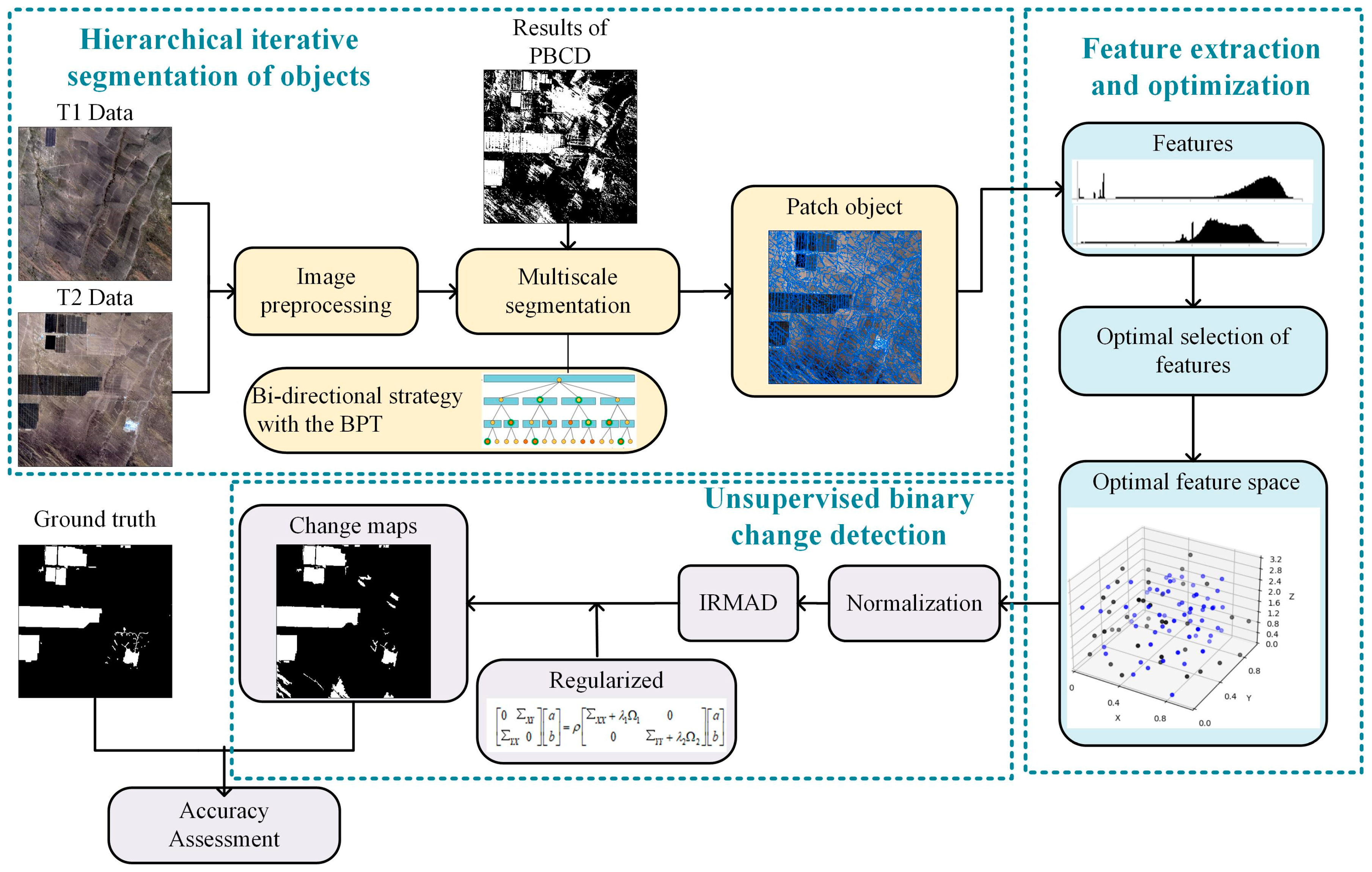

2. Methods

- (1) Hierarchical iterative segmentation of objects (Section 2.1): To overcome the limitations of single-scale segmentation, the method uses a bi-directional segmentation strategy based on the BPT model and guided by pixel-based difference maps. This iterative approach yields a hierarchical multi-scale segmentation that combines the strengths of pixel-level and object-level analysis.

- (2) Feature extraction and optimization (Section 2.2): Spectral and texture features are extracted from the objects, and the most informative features are selected by ranking their importance.

- (3) Unsupervised binary change detection based on object-oriented regularized IRMAD (Section 2.3): The IRMAD algorithm, originally a PBCD method, is adapted to OBCD so that noise has less influence on the detection results. To accommodate multi-dimensional object features, the method also applies a regularization strategy to the object-based IRMAD.
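Item (2) extracts spectral features per object. The sketch below illustrates only the spectral part. It computes per-object band means and standard deviations plus mean NDVI and NDWI, both of which are listed among the abbreviations. It assumes a `(bands, rows, cols)` array in GF-1 WFV band order B1–B4 (blue, green, red, near-infrared) and the McFeeters form of NDWI. The function name and default band indices are hypothetical and are not taken from the authors' implementation.

```python
import numpy as np


def object_spectral_features(img, labels, green=1, red=2, nir=3):
    """Per-object band means, band standard deviations, and mean NDVI/NDWI.

    img:    (bands, rows, cols) reflectance array, assumed GF-1 WFV order B1-B4.
    labels: (rows, cols) integer object IDs from the segmentation.
    """
    img = img.astype(float)
    eps = 1e-12                                   # avoids division by zero
    ndvi = (img[nir] - img[red]) / (img[nir] + img[red] + eps)
    ndwi = (img[green] - img[nir]) / (img[green] + img[nir] + eps)  # McFeeters NDWI
    features = {}
    for obj in np.unique(labels):
        mask = labels == obj
        pix = img[:, mask]                        # (bands, pixels in this object)
        features[int(obj)] = np.concatenate(
            [pix.mean(axis=1), pix.std(axis=1), [ndvi[mask].mean(), ndwi[mask].mean()]]
        )
    return features
```

With four bands, each object gets a 10-element vector: 4 means, 4 standard deviations and 2 index means. Computing the indices per pixel and then averaging, rather than computing them from the object's mean reflectances, is itself a design choice. The paper may differ on this point.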

2.1. Hierarchical Iterative Segmentation of Objects

2.1.1. Pixel-Based Difference Maps

2.1.2. Image Object Generation

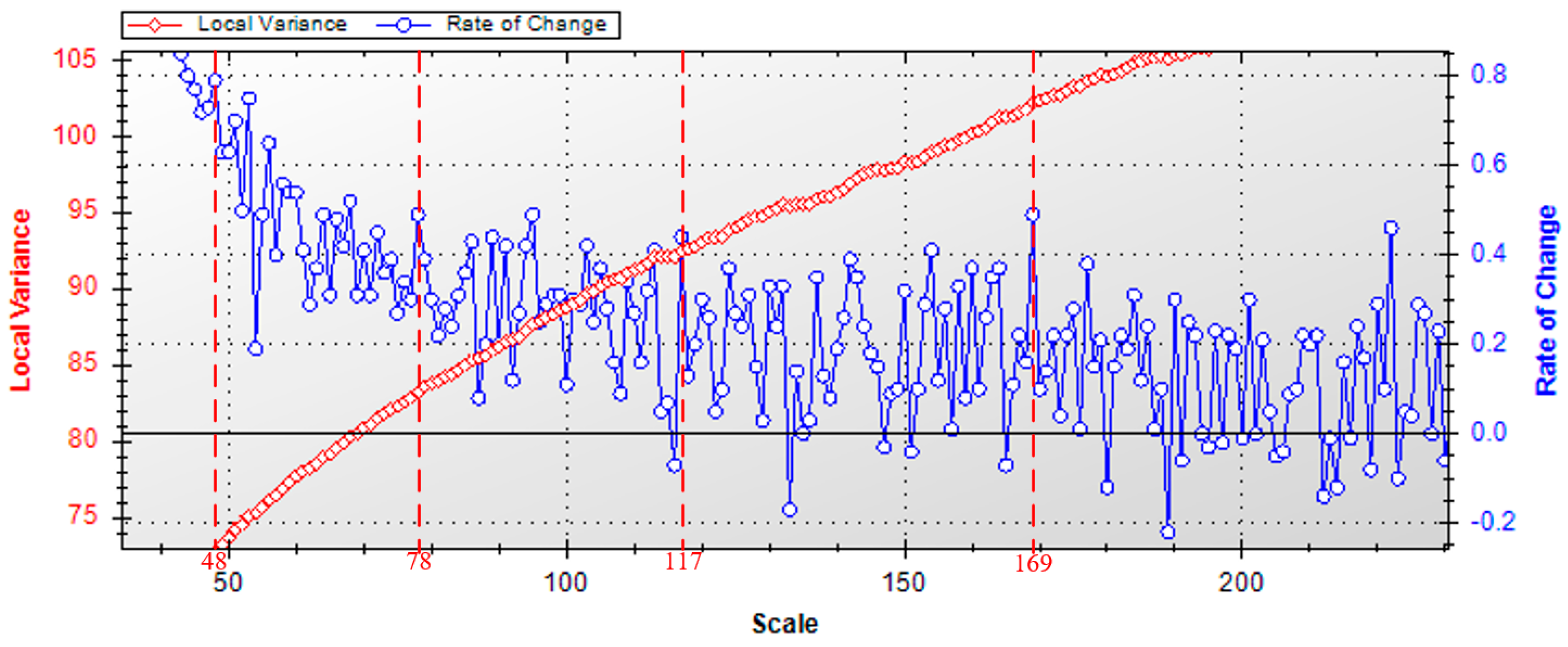

2.1.3. Multi-Scale Iterative Segmentation

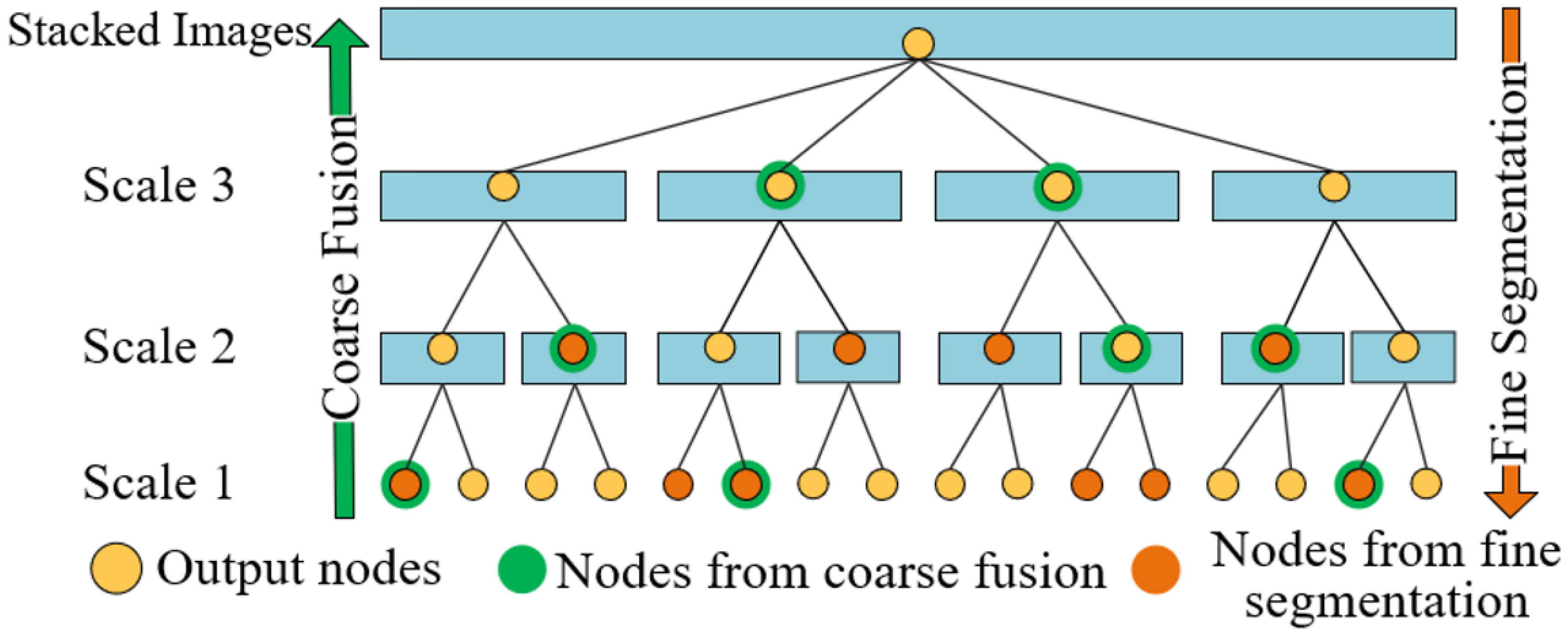

- (1) Bottom-up coarse fusion: Adjacent segments are progressively merged, guided by tracking the nodes along each path. A homogeneity analysis stops the merging once the regions belong to the same geographic element, which prevents excessively small objects with high internal variability. However, an abrupt drop in local variance (LV) that accompanies object homogeneity indicates a potential small changed object within the region. In that case, the corresponding node is marked as optimal for that path.

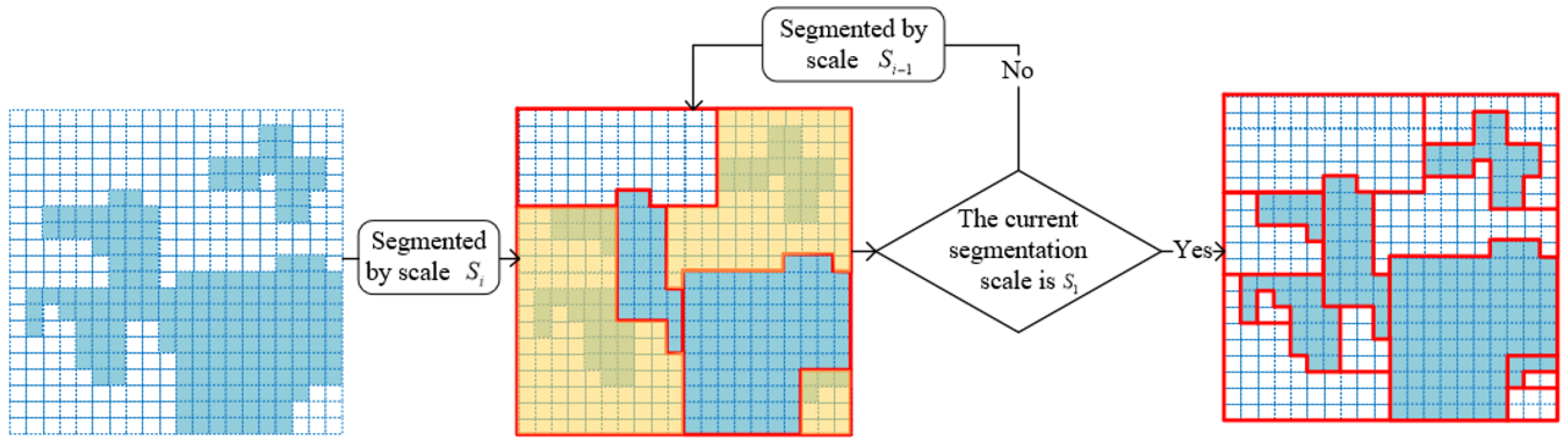

- (2) Top-down fine splitting: The aim of this step is to generate objects that conform to the boundaries of actual changed ground objects while minimizing segmentation omissions. To this end, the stacked bi-temporal images are iteratively segmented based on the PBCD binary map, with CVA as the representative PBCD method, as shown in Figure 3. Segmentation starts from the coarsest optimal node along each path. A top-down assessment then decides whether each object takes part in the next, finer scale of segmentation, and this continues until the finest scale is reached. In each iteration $i$ with scale $S_i$, the objects derived from the previous iteration (scale $S_{i-1}$) are re-segmented if proposition (1) is satisfied (yellow regions in Figure 3).
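Item (2) builds on a PBCD binary map, with CVA as the representative method. The sketch below proceeds in three steps:

- it computes the CVA magnitude, the Euclidean norm of each pixel's spectral difference vector;
- it thresholds that magnitude with Otsu's method;
- it flags objects for re-segmentation.

Otsu thresholding, the `tau` parameter and the mixed-object rule in `needs_resegmentation` are all assumptions. They stand in for proposition (1), which is not reproduced here, and should not be read as the authors' exact criterion.

```python
import numpy as np


def cva_magnitude(img_t1, img_t2):
    """Per-pixel CVA change magnitude for (bands, rows, cols) arrays."""
    diff = img_t2.astype(float) - img_t1.astype(float)
    return np.sqrt((diff ** 2).sum(axis=0))


def otsu_threshold(values, bins=256):
    """Otsu threshold: the histogram split that maximizes between-class variance."""
    hist, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist)                      # pixels in bins 0..k
    w1 = w0[-1] - w0                          # pixels in bins k+1..end
    s0 = np.cumsum(hist * centers)
    mu0 = s0 / np.maximum(w0, 1)
    mu1 = (s0[-1] - s0) / np.maximum(w1, 1)
    between = w0 * w1 * (mu0 - mu1) ** 2
    return edges[np.argmax(between) + 1]      # upper edge of the "unchanged" class


def needs_resegmentation(labels, change_map, tau=0.05):
    """Hypothetical rule: re-segment objects that mix changed and unchanged pixels."""
    result = {}
    for obj in np.unique(labels):
        frac = change_map[labels == obj].mean()   # share of changed pixels
        result[int(obj)] = bool(tau < frac < 1 - tau)
    return result
```

Typical use is `change = mag > otsu_threshold(mag)`, followed by `needs_resegmentation(labels, change)` at each scale $S_i$. In this sketch, objects that are almost entirely changed or almost entirely unchanged are kept as they are.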

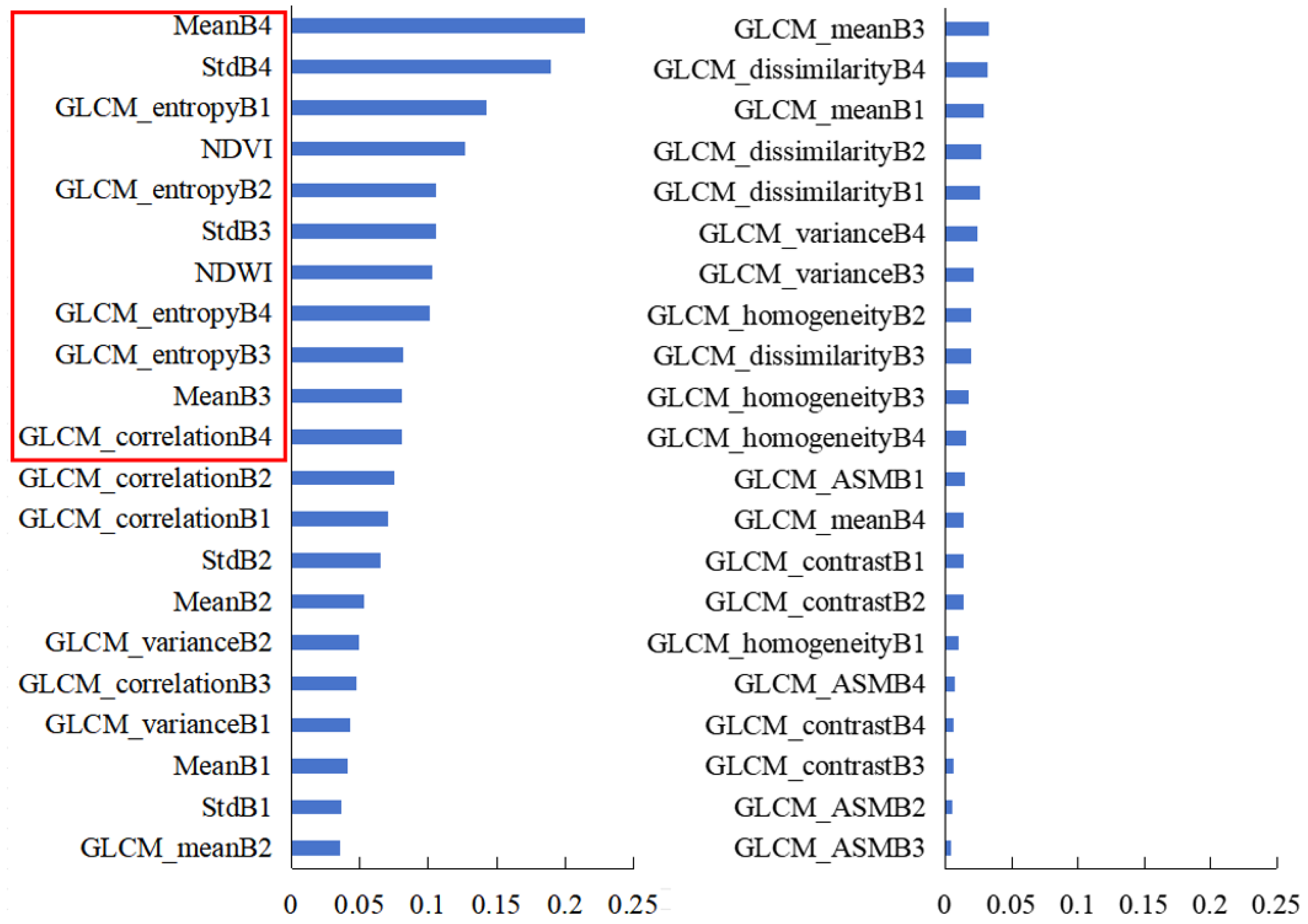
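The bottom-up coarse fusion step in item (1) relies on local variance (LV) and its rate of change (ROC-LV). The minimal sketch below makes two assumptions:

- ROC-LV follows the ESP definition [36], $\text{ROC-LV}_L = (LV_L - LV_{L-1}) / LV_{L-1} \times 100$, computed here between consecutive nodes on one leaf-to-root path.
- The selection rule is hypothetical: an LV drop larger than `drop_pct` percent marks the node, and otherwise the largest ROC-LV peak is taken.

It illustrates the idea and does not reproduce the authors' criterion.

```python
import numpy as np


def roc_lv(lv):
    """ROC-LV in percent between consecutive nodes (ESP-style definition)."""
    lv = np.asarray(lv, dtype=float)
    return (lv[1:] - lv[:-1]) / lv[:-1] * 100.0


def select_optimal_node(lv, drop_pct=30.0):
    """Pick the optimal node on one leaf-to-root path (hypothetical rule).

    lv: LV values of the nodes ordered from the finest (leaf) to the coarsest.
    """
    roc = roc_lv(lv)
    drops = np.flatnonzero(roc <= -drop_pct)
    if drops.size:
        # an abrupt LV drop hints at a small changed object inside the region
        return int(drops[0] + 1)
    # otherwise take the node at the largest ROC-LV peak, as ESP does for scales
    return int(np.argmax(roc) + 1)
```

For example, LV values `[10, 12, 15, 9, 11]` give ROC-LV values of about `[20, 25, -40, 22.2]`, and the 40% drop selects node 3.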

2.2. Feature Extraction and Optimization

2.2.1. Construction of Feature Space

2.2.2. Optimal Selection of Features
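Item (2) of the method overview selects features by ranking their importance. The reference list cites ReliefF [40,41], so the sketch below assumes a ReliefF-style ranking computed on labeled changed/unchanged sample objects. It is a simplified binary ReliefF, not the authors' code. The sample labels `y`, the neighbour count `k` and the Manhattan distance are assumptions.

```python
import numpy as np


def relieff_binary(X, y, k=5):
    """Simplified ReliefF feature weights for two classes (e.g. changed vs unchanged)."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    n, p = X.shape
    span = X.max(axis=0) - X.min(axis=0)
    span[span == 0] = 1.0
    Xn = (X - X.min(axis=0)) / span               # scale each feature to [0, 1]
    idx = np.arange(n)
    w = np.zeros(p)
    for r in range(n):
        diff = np.abs(Xn - Xn[r])                 # per-feature distance to sample r
        dist = diff.sum(axis=1)                   # Manhattan distance
        same = idx[(y == y[r]) & (idx != r)]
        other = idx[y != y[r]]
        hits = same[np.argsort(dist[same])[:k]]       # k nearest same-class samples
        misses = other[np.argsort(dist[other])[:k]]   # k nearest other-class samples
        # reward features that separate classes, penalize those that vary within a class
        w += (diff[misses].mean(axis=0) - diff[hits].mean(axis=0)) / n
    return w
```

`np.argsort(-w)` then gives the features ranked from most to least informative. A cut-off on this ranking, which the paper would have to specify, defines the optimal subset.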

2.3. Object-Oriented Regularized IRMAD

2.3.1. Object-Based IRMAD

2.3.2. Regularized Canonical Correlation

2.4. Accuracy Assessment

3. Results

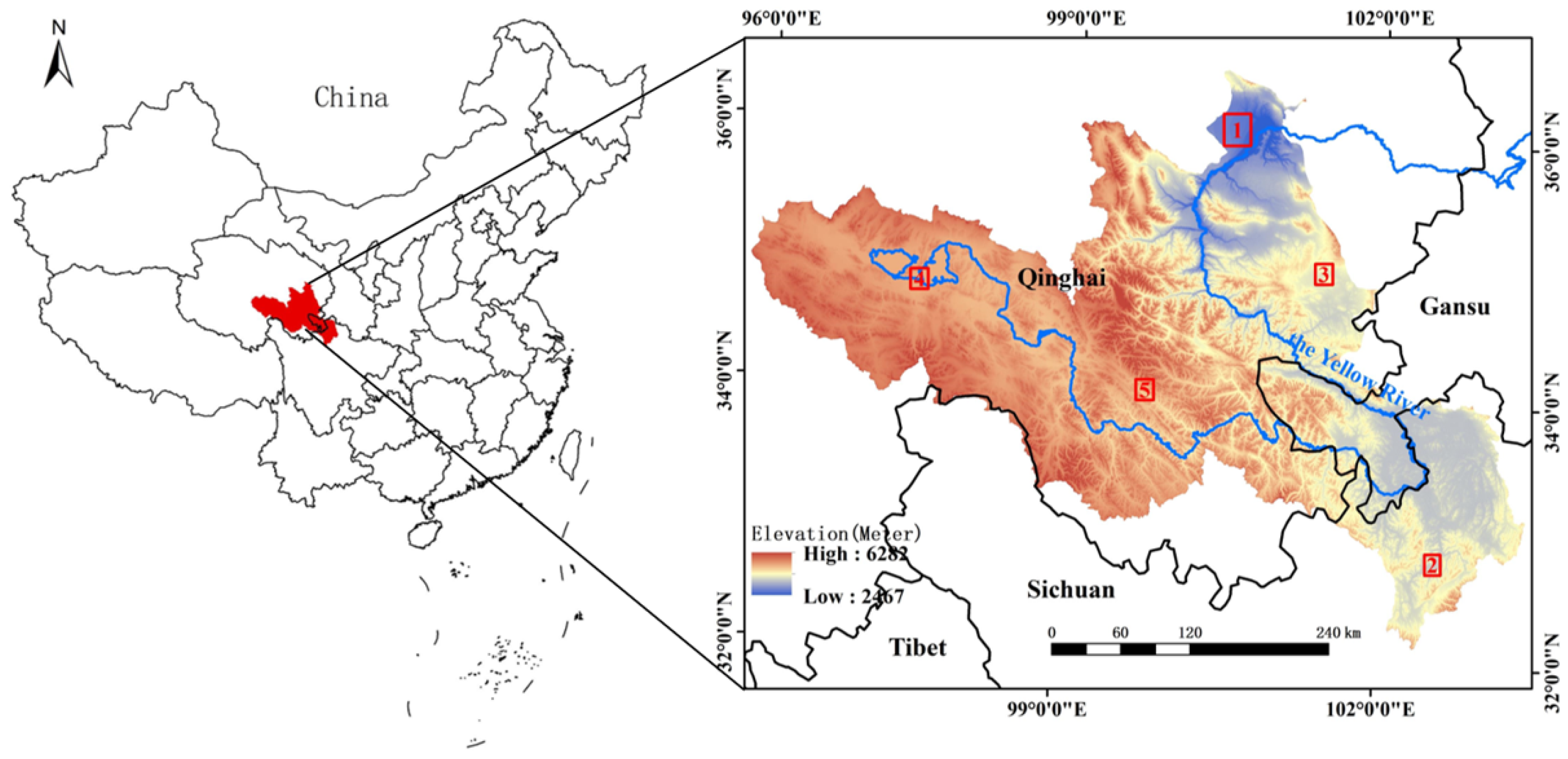

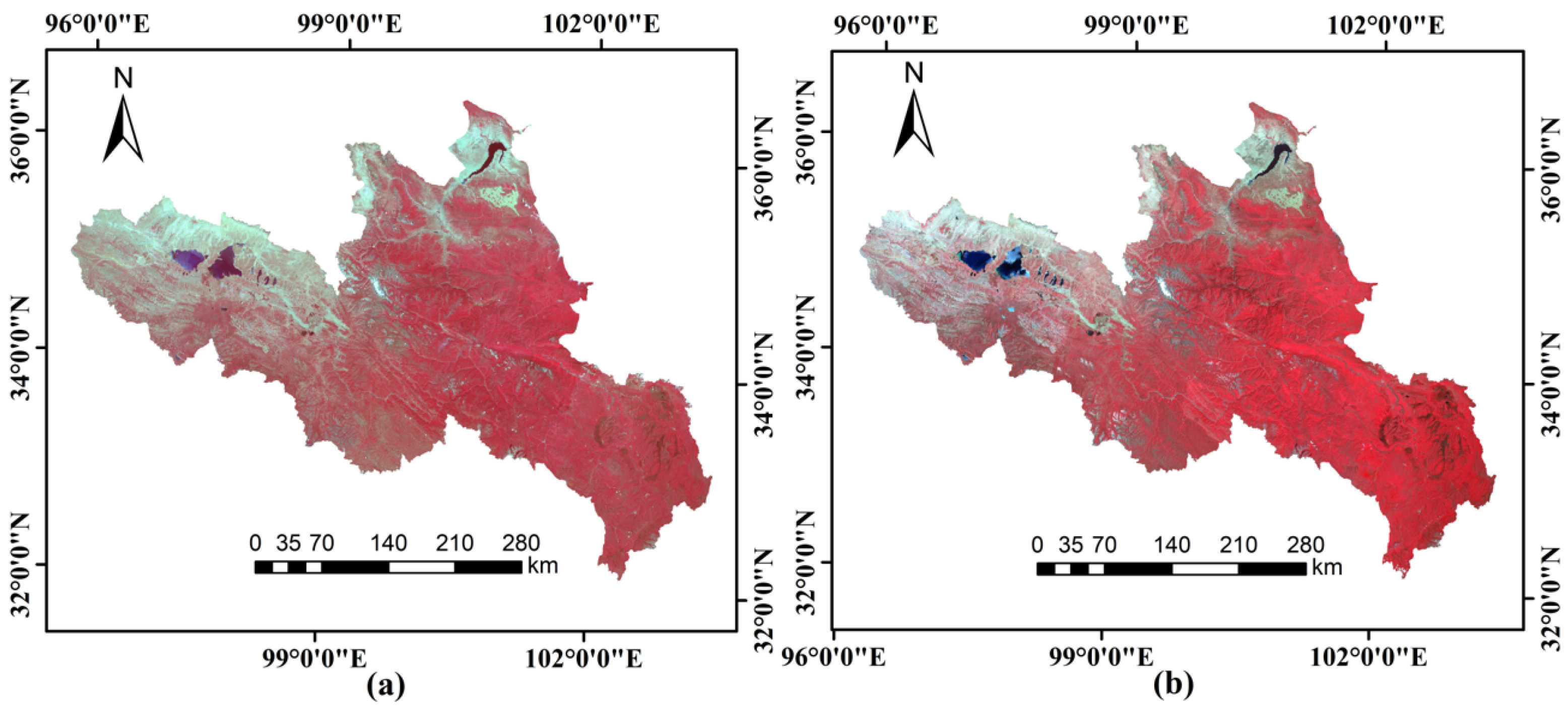

3.1. Study Area and Data Preprocessing

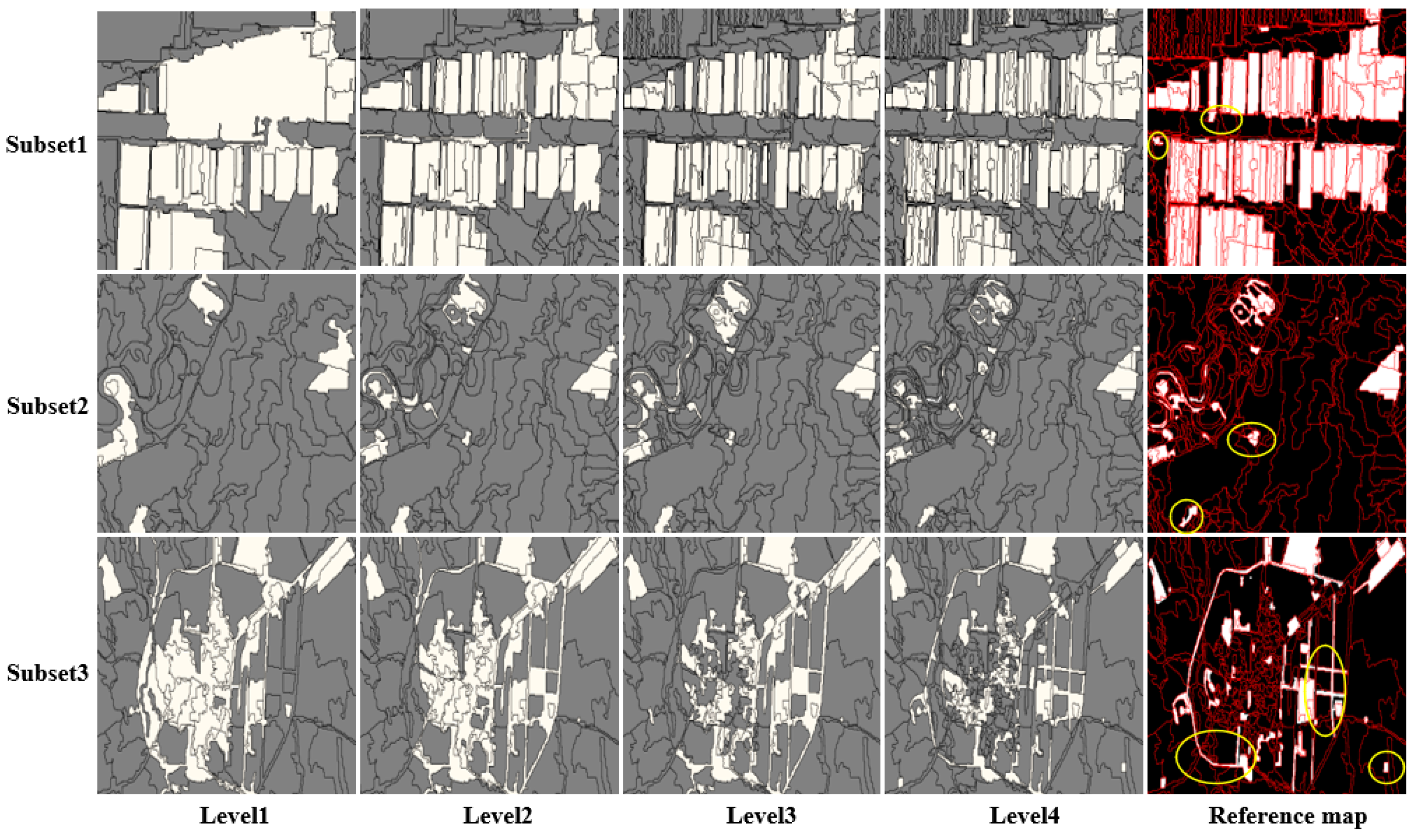

3.2. Results of Hierarchical Multi-Scale Segmentation

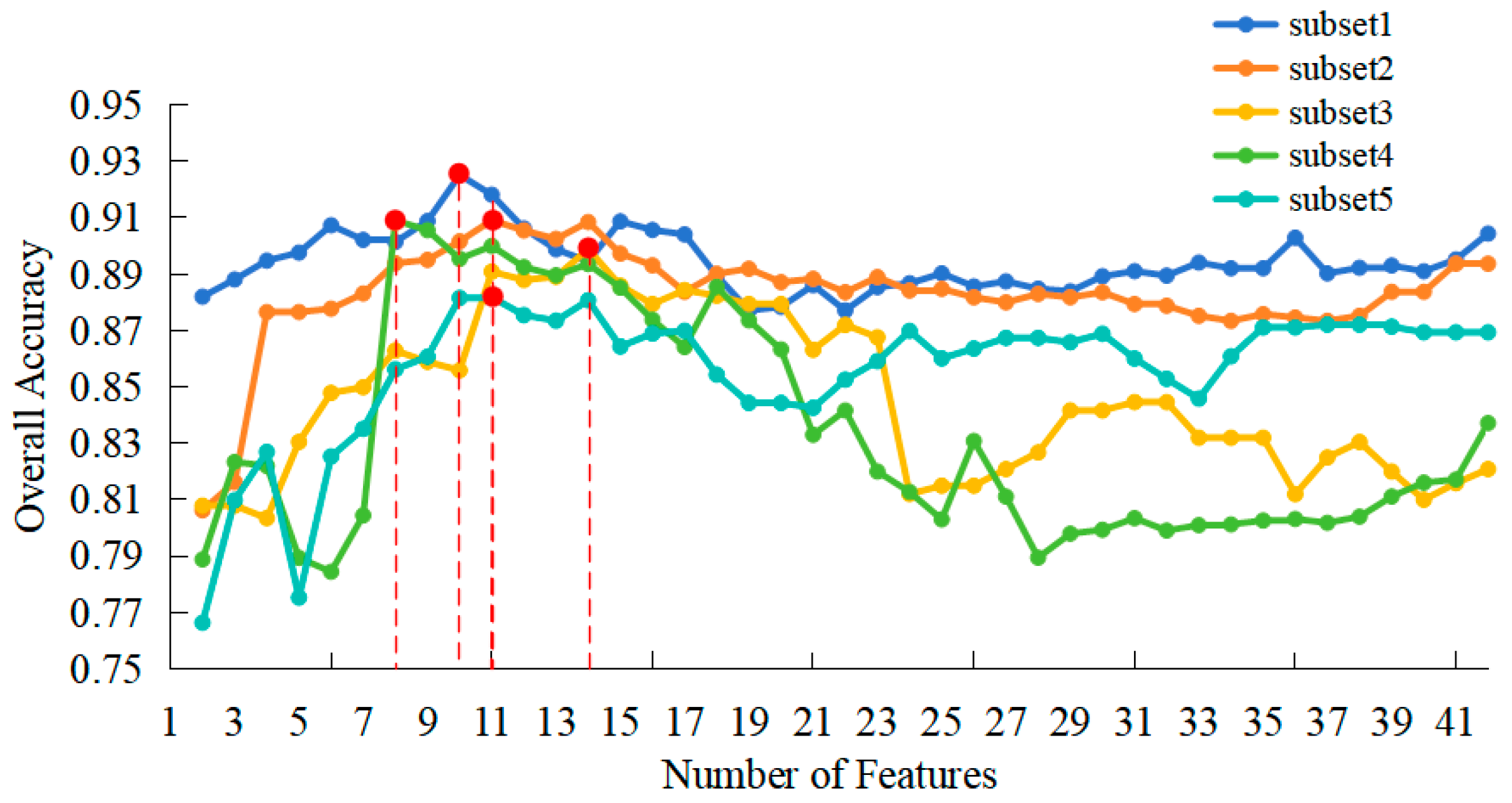

3.3. Feature Analysis and Optimization

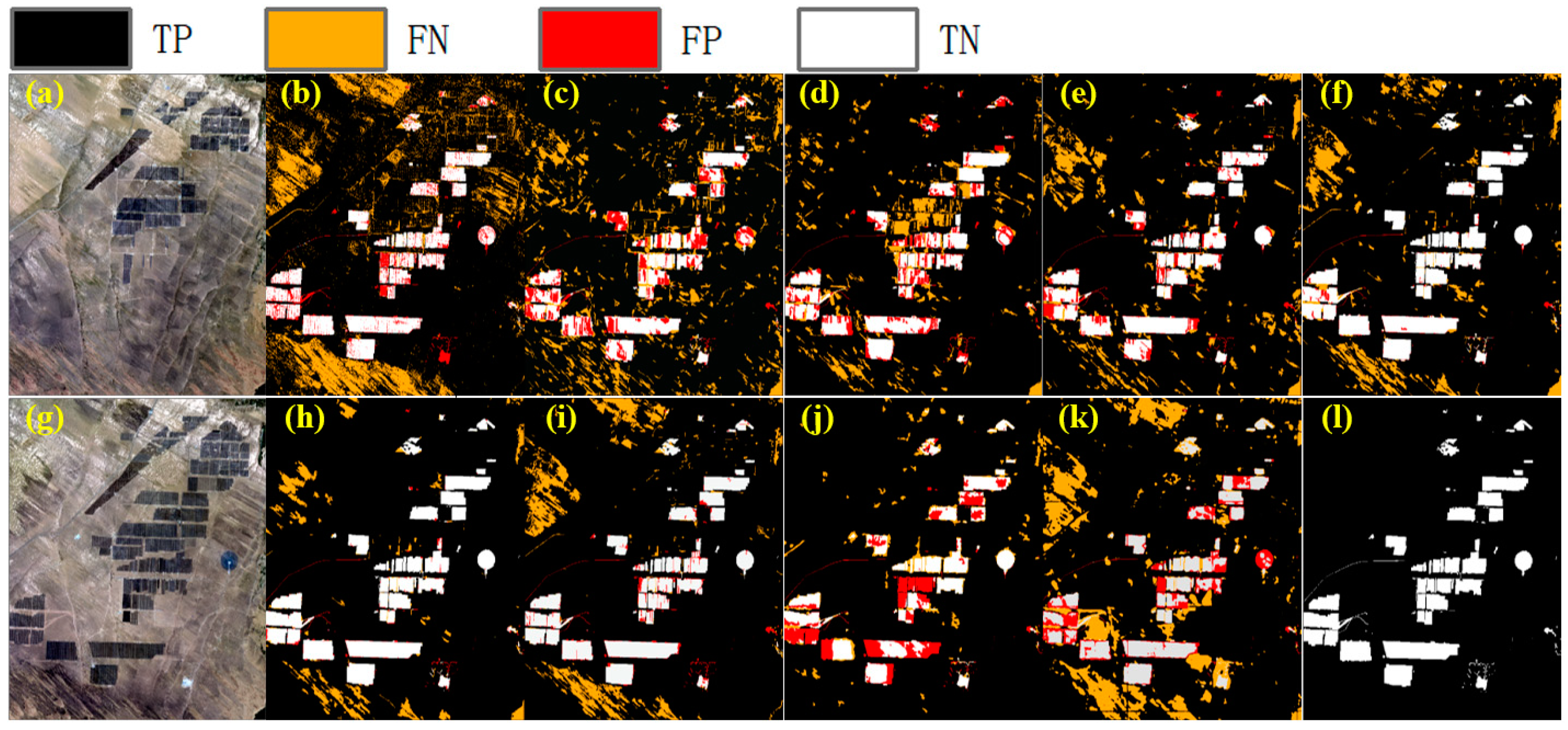

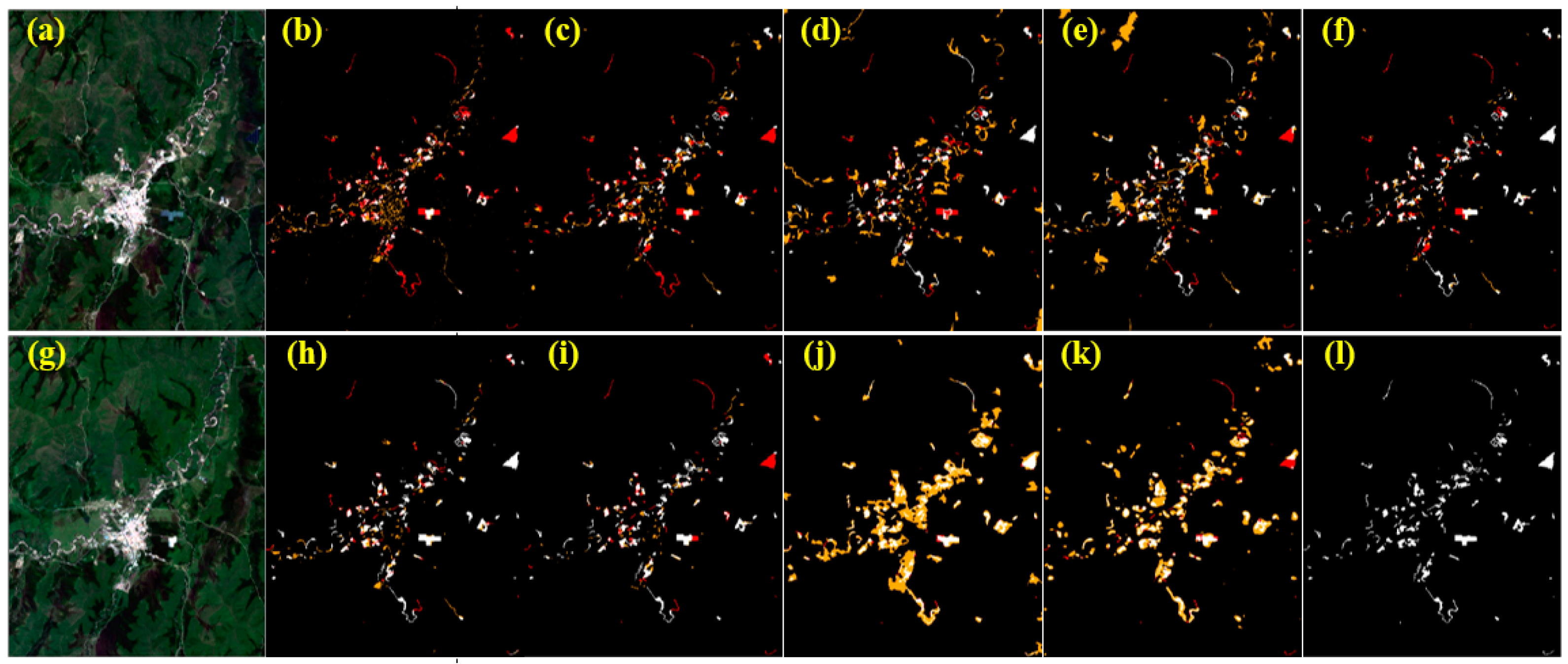

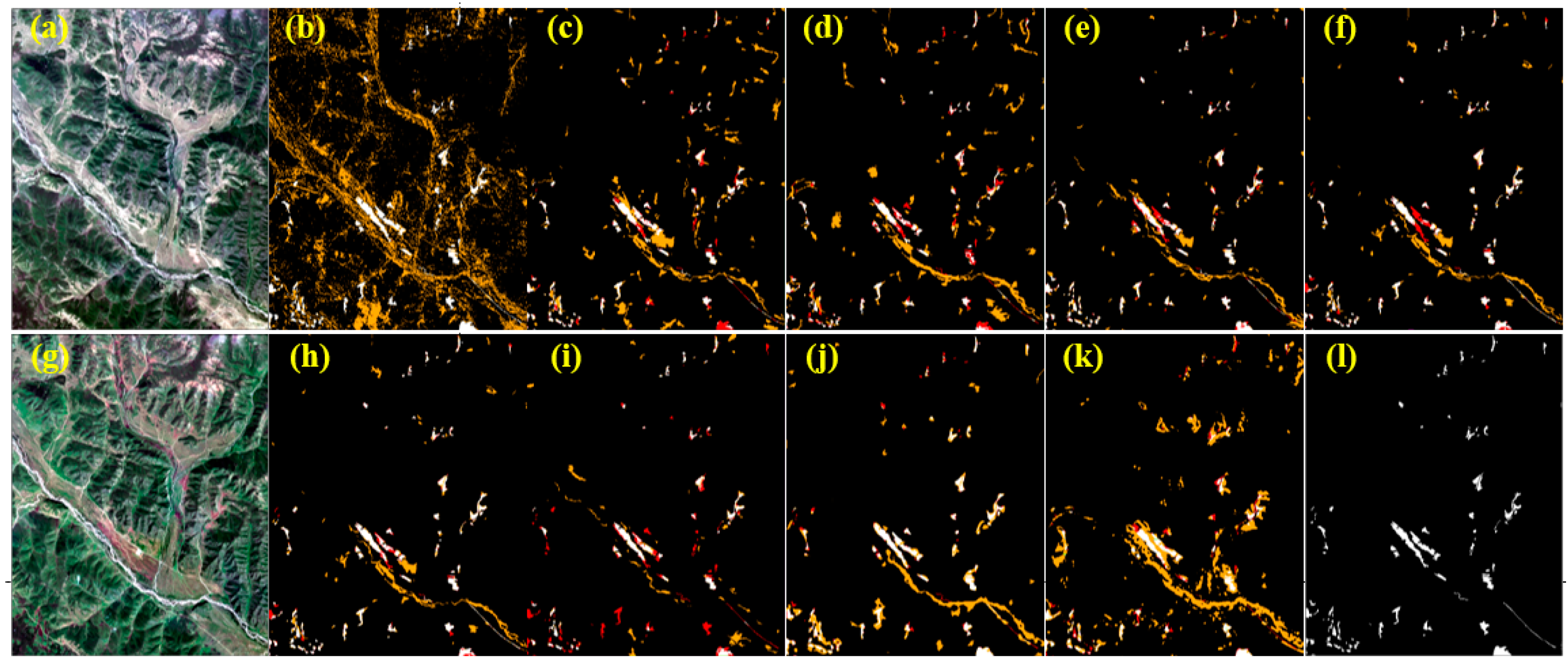

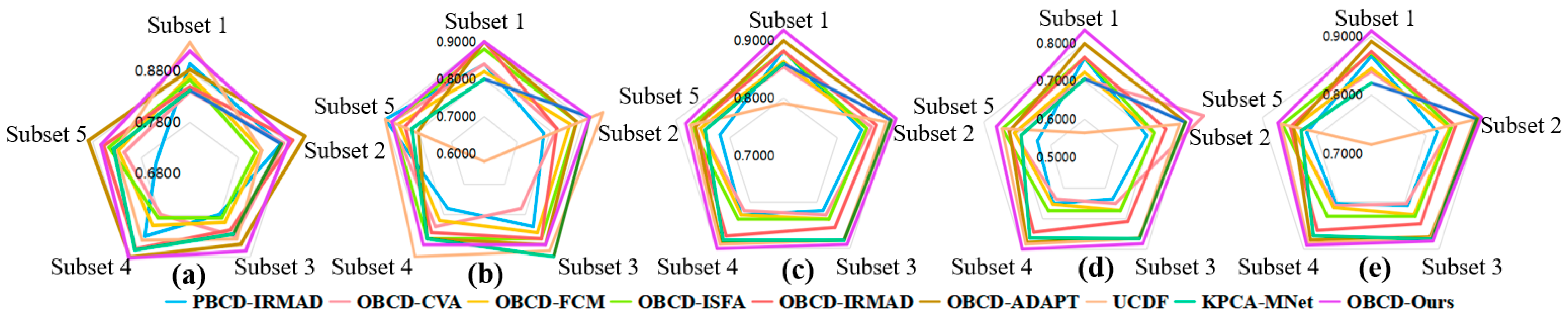

3.4. OBCD Experiments

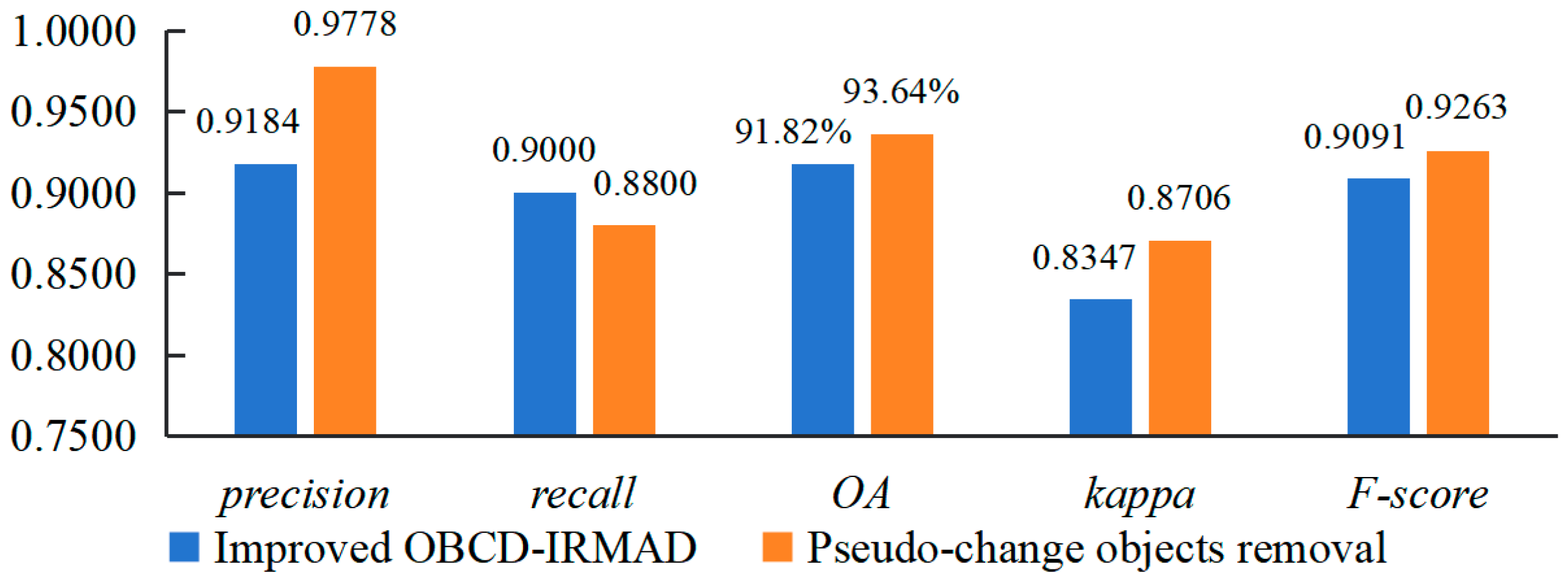

3.5. Ablation Experiments

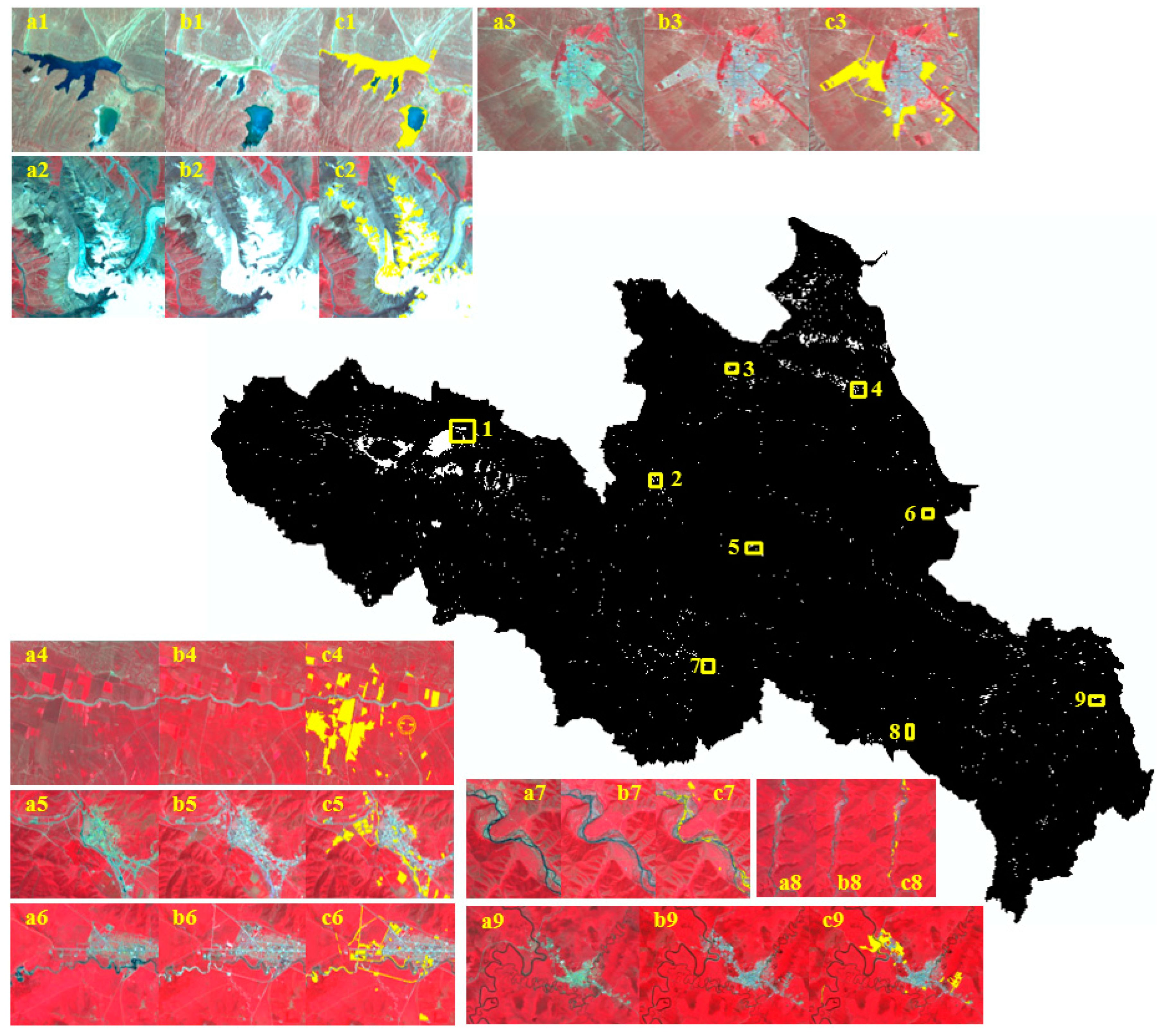

3.6. A Case Study in the Yellow River Source Region

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Full Name |
|---|---|
| CD | Change Detection |
| PBCD | Pixel-Based Change Detection |
| OBCD | Object-Based Change Detection |
| SAM | Segment Anything Model |
| MAD | Multivariate Alteration Detection |
| CVA | Change Vector Analysis |
| IRMAD | Iteratively Reweighted Multivariate Alteration Detection |
| DST | Dempster–Shafer Theory |
| BPT | Binary Partition Tree |
| ESP2 | Estimation of Scale Parameter 2 |
| CCA | Canonical Correlation Analysis |
| ROC-LV | Rate of Change of Local Variance |
| MIoU | Mean Intersection over Union |
| NDVI | Normalized Difference Vegetation Index |
| NDWI | Normalized Difference Water Index |
| GLCM | Gray-Level Co-occurrence Matrix |
| CRESDA | China Center for Resource Satellite Data and Application |
| HIS | Hierarchical Iterative Segmentation of Objects |
| FO | Feature Optimization |
| OA | Overall Accuracy |
| ISODATA | Iterative Self-Organizing Data Analysis Techniques Algorithm |
| SAR | Synthetic Aperture Radar |

References

- [1] Hussain, M.; Chen, D.; Cheng, A.; Wei, H.; Stanley, D. Change detection from remotely sensed images: From pixel-based to object-based approaches. ISPRS J. Photogramm. Remote Sens. 2013, 80, 91–106.

- [2] Diaz-Delgado, R.; Cazacu, C.; Adamescu, M. Rapid Assessment of Ecological Integrity for LTER Wetland Sites by Using UAV Multispectral Mapping. Drones 2019, 3, 3.

- [3] Sajjad, A.; Lu, J.Z.; Chen, X.L.; Chisenga, C.; Saleem, N.; Hassan, H. Operational Monitoring and Damage Assessment of Riverine Flood-2014 in the Lower Chenab Plain, Punjab, Pakistan, Using Remote Sensing and GIS Techniques. Remote Sens. 2020, 12, 714.

- [4] Wang, H.B.; Gong, X.S.; Wang, B.B.; Deng, C.; Cao, Q. Urban development analysis using built-up area maps based on multiple high-resolution satellite data. Int. J. Appl. Earth Obs. Geoinf. 2021, 103, 17.

- [5] Panuju, D.R.; Paull, D.J.; Trisasongko, B.H. Combining Binary and Post-Classification Change Analysis of Augmented ALOS Backscatter for Identifying Subtle Land Cover Changes. Remote Sens. 2019, 11, 100.

- [6] Niemeyer, I.; Marpu, P.R.; Nussbaum, S. Change Detection Using the Object Features. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Barcelona, Spain, 23–27 July 2007; IEEE: Barcelona, Spain, 2007; p. 2374.

- [7] Hirayama, H.; Sharma, R.C.; Tomita, M.; Hara, K. Evaluating multiple classifier system for the reduction of salt-and-pepper noise in the classification of very-high-resolution satellite images. Int. J. Remote Sens. 2019, 40, 2542–2557.

- [8] Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

- [9] Wang, Z.; Wei, C.; Liu, X.; Zhu, L.; Yang, Q.; Wang, Q.; Zhang, Q.; Meng, Y. Object-based change detection for vegetation disturbance and recovery using Landsat time series. GIScience Remote Sens. 2022, 59, 1706–1721.

- [10] Wang, J.; Jiang, L.L.; Qi, Q.W.; Wang, Y.J. Exploration of Semantic Geo-Object Recognition Based on the Scale Parameter Optimization Method for Remote Sensing Images. ISPRS Int. J. Geo-Inf. 2021, 10, 420.

- [11] Zhang, X.L.; Xiao, P.F.; Feng, X.Z.; Yuan, M. Separate segmentation of multi-temporal high-resolution remote sensing images for object-based change detection in urban area. Remote Sens. Environ. 2017, 201, 243–255.

- [12] Xu, L.; Ming, D.; Zhou, W.; Bao, H.; Chen, Y.; Ling, X. Farmland Extraction from High Spatial Resolution Remote Sensing Images Based on Stratified Scale Pre-Estimation. Remote Sens. 2019, 11, 108.

- [13] Xiao, T.; Wan, Y.; Chen, J.; Shi, W.; Qin, J.; Li, D. Multiresolution-Based Rough Fuzzy Possibilistic C-Means Clustering Method for Land Cover Change Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 570–580.

- [14] Su, T.F. Scale-variable region-merging for high resolution remote sensing image segmentation. ISPRS J. Photogramm. Remote Sens. 2019, 147, 319–334.

- [15] Zhang, X.L.; Xiao, P.F.; Feng, X.Z. Object-specific optimization of hierarchical multiscale segmentations for high-spatial resolution remote sensing images. ISPRS J. Photogramm. Remote Sens. 2020, 159, 308–321.

- [16] Wang, Z.; Zhang, S.W.; Zhang, C.L.; Wang, B.H. Hidden Feature-Guided Semantic Segmentation Network for Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 17.

- [17] Chen, K.; Liu, C.; Chen, H.; Zhang, H.; Li, W.; Zou, Z.; Shi, Z. RSPrompter: Learning to Prompt for Remote Sensing Instance Segmentation based on Visual Foundation Model. arXiv 2023, arXiv:2306.16269.

- [18] Chen, F.; Chen, L.Y.; Han, H.J.; Zhang, S.N.; Zhang, D.Q.; Liao, H.E. The ability of Segmenting Anything Model (SAM) to segment ultrasound images. BioSci. Trends 2023, 17, 211–218.

- [19] Bai, T.; Sun, K.; Li, W.; Li, D.; Chen, Y.; Sui, H. A Novel Class-Specific Object-Based Method for Urban Change Detection Using High-Resolution Remote Sensing Imagery. Photogramm. Eng. Remote Sens. 2021, 87, 249–262.

- [20] Serban, R.D.; Serban, M.; He, R.X.; Jin, H.J.; Li, Y.; Li, X.Y.; Wang, X.B.; Li, G.Y. 46-Year (1973–2019) Permafrost Landscape Changes in the Hola Basin, Northeast China Using Machine Learning and Object-Oriented Classification. Remote Sens. 2021, 13, 1910.

- [21] Zhang, Q.; Li, Z.; Daoli, P. Land use change detection based on object-oriented change vector analysis (OCVA). J. China Agric. Univ. 2019, 24, 166–174.

- [22] Xu, Q.Q.; Liu, Z.J.; Li, F.F.; Yang, M.Z.; Ren, H.C. The Regularized Iteratively Reweighted Object-Based MAD Method for Change Detection in Bi-Temporal, Multispectral Data. In Proceedings of the International Symposium on Hyperspectral Remote Sensing Applications/International Symposium on Environmental Monitoring and Safety Testing Technology, Beijing, China, 9–11 May 2016; SPIE: Beijing, China, 2016.

- [23] Yu, J.X.; Liu, Y.L.; Ren, Y.H.; Ma, H.J.; Wang, D.C.; Jing, Y.F.; Yu, L.J. Application Study on Double-Constrained Change Detection for Land Use/Land Cover Based on GF-6 WFV Imageries. Remote Sens. 2020, 12, 2943.

- [24] Lv, Z.Y.; Liu, T.F.; Wan, Y.L.; Benediktsson, J.A.; Zhang, X.K. Post-Processing Approach for Refining Raw Land Cover Change Detection of Very High-Resolution Remote Sensing Images. Remote Sens. 2018, 10, 472.

- [25] Cui, G.Q.; Lv, Z.Y.; Li, G.F.; Benediktsson, J.A.; Lu, Y.D. Refining Land Cover Classification Maps Based on Dual-Adaptive Majority Voting Strategy for Very High Resolution Remote Sensing Images. Remote Sens. 2018, 10, 238.

- [26] Tan, K.; Zhang, Y.S.; Wang, X.; Chen, Y. Object-Based Change Detection Using Multiple Classifiers and Multi-Scale Uncertainty Analysis. Remote Sens. 2019, 11, 359.

- [27] Han, Y.; Javed, A.; Jung, S.; Liu, S. Object-Based Change Detection of Very High Resolution Images by Fusing Pixel-Based Change Detection Results Using Weighted Dempster–Shafer Theory. Remote Sens. 2020, 12, 983.

- [28] Wang, Q.; Yuan, Z.H.; Du, Q.; Li, X.L. GETNET: A General End-to-End 2-D CNN Framework for Hyperspectral Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2019, 57, 3–13.

- [29] Chen, K.; Liu, C.; Li, W.; Liu, Z.; Chen, H.; Zhang, H.; Zou, Z.; Shi, Z. Time Travelling Pixels: Bitemporal Features Integration with Foundation Model for Remote Sensing Image Change Detection. arXiv 2023, arXiv:2312.16202.

- [30] Xu, Q.; Shi, Y.; Guo, J.; Ouyang, C.; Zhu, X.X. UCDFormer: Unsupervised Change Detection Using a Transformer-Driven Image Translation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–17.

- [31] Luppino, L.T.; Kampffmeyer, M.; Bianchi, F.M.; Moser, G.; Serpico, S.B.; Jenssen, R.; Anfinsen, S.N. Deep Image Translation With an Affinity-Based Change Prior for Unsupervised Multimodal Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 22.

- [32] Bai, T.; Wang, L.; Yin, D.M.; Sun, K.M.; Chen, Y.P.; Li, W.Z.; Li, D.R. Deep learning for change detection in remote sensing: A review. Geo-Spat. Inf. Sci. 2022, 27, 262–288.

- [33] Du, P.J.; Liu, S.C.; Liu, P.; Tan, K.; Cheng, L. Sub-pixel change detection for urban land-cover analysis via multi-temporal remote sensing images. Geo-Spat. Inf. Sci. 2014, 17, 26–38.

- [34] Carvalho, O.A.; Guimaraes, R.F.; Gillespie, A.R.; Silva, N.C.; Gomes, R.A.T. A New Approach to Change Vector Analysis Using Distance and Similarity Measures. Remote Sens. 2011, 3, 2473–2493.

- [35] Yu, Q.; Gong, P.; Clinton, N.; Biging, G.; Kelly, M.; Schirokauer, D. Object-based detailed vegetation classification with airborne high spatial resolution remote sensing imagery. Photogramm. Eng. Remote Sens. 2006, 72, 799–811.

- [36] Dragut, L.; Tiede, D.; Levick, S.R. ESP: A tool to estimate scale parameter for multiresolution image segmentation of remotely sensed data. Int. J. Geogr. Inf. Sci. 2010, 24, 859–871.

- [37] Li, X.N.; Wang, X.P.; Wei, T.Y. Multiscale and Adaptive Morphology for Remote Sensing Image Segmentation of Vegetation Areas. Laser Optoelectron. Prog. 2022, 59, 7.

- [38] Yang, Y.J.; Huang, Y.; Tian, Q.J.; Wang, L.; Geng, J.; Yang, R.R. The Extraction Model of Paddy Rice Information Based on GF-1 Satellite WFV Images. Spectrosc. Spectr. Anal. 2015, 35, 3255–3261.

- [39] Wang, H.; Zhao, Y.; Pu, R.L.; Zhang, Z.Z. Mapping Robinia Pseudoacacia Forest Health Conditions by Using Combined Spectral, Spatial, and Textural Information Extracted from IKONOS Imagery and Random Forest Classifier. Remote Sens. 2015, 7, 9020–9044.

- [40] Wang, Z.; Zhang, Y.; Chen, Z.C.; Yang, H.; Sun, Y.X.; Kang, J.M.; Yang, Y.; Liang, X.J. Application of ReliefF Algorithm to Selecting Feature Sets for Classification of High Resolution Remote Sensing Image. In Proceedings of the 36th IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; IEEE: Beijing, China, 2016; pp. 755–758.

- [41] Kononenko, I.; Robnik-Sikonja, M.; Pompe, U. ReliefF for Estimation and Discretization of Attributes in Classification, Regression, and ILP Problems. In Proceedings of the 7th International Conference on Artificial Intelligence—Methodology, Systems, Applications (AIMSA 96), Sozopol, Bulgaria, 18–20 September 1996; IOS Press: Sozopol, Bulgaria, 1996; pp. 31–40.

- [42] Nielsen, A.A.; Conradsen, K.; Simpson, J.J. Multivariate alteration detection (MAD) and MAF postprocessing in multispectral, bitemporal image data: New approaches to change detection studies. Remote Sens. Environ. 1998, 64, 1–19.

- [43] Lv, Z.Y.; Wang, F.J.; Cui, G.Q.; Benediktsson, J.A.; Lei, T.; Sun, W.W. Spatial-Spectral Attention Network Guided With Change Magnitude Image for Land Cover Change Detection Using Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 12.

- [44] Guo, B.; Wei, C.; Yu, Y.; Liu, Y.; Li, J.; Meng, C.; Cai, Y. The dominant influencing factors of desertification changes in the source region of Yellow River: Climate change or human activity? Sci. Total Environ. 2022, 813, 152512.

- [45] Wu, G.L.; Cheng, Z.; Alatalo, J.M.; Zhao, J.X.; Liu, Y. Climate Warming Consistently Reduces Grassland Ecosystem Productivity. Earth Future 2021, 9, 14.

- [46] Ji, C.C.; Li, X.S.; Wei, H.D.; Li, S.K. Comparison of Different Multispectral Sensors for Photosynthetic and Non-Photosynthetic Vegetation-Fraction Retrieval. Remote Sens. 2020, 12, 115.

- [47] Nielsen, A.A. The regularized iteratively reweighted MAD method for change detection in multi- and hyperspectral data. IEEE Trans. Image Process. 2007, 16, 463–478.

- [48] Sun, H.; Zhou, W.; Zhang, Y.X.; Cai, C.C.; Chen, Q. Integrating spectral and textural attributes to measure magnitude in object-based change vector analysis. Int. J. Remote Sens. 2019, 40, 5749–5767.

- [49] Wang, X.; Huang, J.; Chu, Y.L.; Shi, A.Y.; Xu, L.Z. Change Detection in Bitemporal Remote Sensing Images by using Feature Fusion and Fuzzy C-Means. KSII Trans. Internet Inf. Syst. 2018, 12, 1714–1729.

- [50] Xu, J.F.; Zhao, C.; Zhang, B.M.; Lin, Y.Z.; Yu, D.H. Hybrid Change Detection Based on ISFA for High-Resolution Imagery. In Proceedings of the 3rd IEEE International Conference on Image, Vision and Computing (ICIVC), Chongqing, China, 27–29 June 2018; IEEE: Chongqing, China, 2018; pp. 76–80.

- [51] Wu, C.; Chen, H.R.X.; Du, B.; Zhang, L.P. Unsupervised Change Detection in Multitemporal VHR Images Based on Deep Kernel PCA Convolutional Mapping Network. IEEE Trans. Cybern. 2022, 52, 12084–12098.

- [52] Pan, Y.L.; Xu, X.D.; Long, J.P.; Lin, H. Change detection of wetland restoration in China’s Sanjiang National Nature Reserve using STANet method based on GF-1 and GF-6 images. Ecol. Indic. 2022, 145, 12.

- [53] Lv, Z.; Liu, T.; Benediktsson, J.A.; Falco, N. Land Cover Change Detection Techniques: Very-high-resolution optical images: A review. IEEE Geosci. Remote Sens. Mag. 2022, 10, 44–63.

- [54] Hao, M.; Zhou, M.C.; Jin, J.; Shi, W.Z. An Advanced Superpixel-Based Markov Random Field Model for Unsupervised Change Detection. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1401–1405.

- [55] Zhu, L.; Gao, D.J.; Jia, T.; Zhang, J.Y. Using Eco-Geographical Zoning Data and Crowdsourcing to Improve the Detection of Spurious Land Cover Changes. Remote Sens. 2021, 13, 3244.

- [56] Sood, V.; Gusain, H.S.; Gupta, S.; Singh, S. Topographically derived subpixel-based change detection for monitoring changes over rugged terrain Himalayas using AWiFS data. J. Mt. Sci. 2021, 18, 126–140.

- [57] Chen, L.; Wang, H.Y.; Wang, T.S.; Kou, C.H. Remote Sensing for Detecting Changes of Land Use in Taipei City, Taiwan. J. Indian Soc. Remote Sens. 2019, 47, 1847–1856.

| Texture Feature | Formula | Texture Feature | Formula |
|---|---|---|---|
| GLCM_mean | | GLCM_dissimilarity | |
| GLCM_variance | | GLCM_entropy | |
| GLCM_homogeneity | | GLCM_ASM | |
| GLCM_contrast | | GLCM_correlation | |
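The formulas for the eight texture measures above did not survive extraction. As a stand-in, the sketch below uses common textbook GLCM definitions; the paper's exact formulas may differ. The GLCM is built for a single pixel offset and is made symmetric and normalized. The input must already be quantized to integer gray levels in `[0, levels)`. The offset, the symmetrization and the convention of correlation = 1 for a constant patch are assumptions.

```python
import numpy as np


def glcm(img, levels, dx=1, dy=0):
    """Symmetric, normalized GLCM for one non-negative pixel offset (dx, dy)."""
    h, w = img.shape
    ref = img[: h - dy, : w - dx]                # reference pixels
    nbr = img[dy:, dx:]                          # neighbours at the given offset
    P = np.zeros((levels, levels))
    np.add.at(P, (ref.ravel(), nbr.ravel()), 1)  # count co-occurring gray-level pairs
    P = P + P.T                                  # make the matrix symmetric
    return P / P.sum()


def glcm_features(P):
    """The eight texture measures listed in the table (textbook formulas)."""
    i, j = np.indices(P.shape)
    mean = (i * P).sum()                         # equals the column mean for symmetric P
    var = (P * (i - mean) ** 2).sum()
    nz = P[P > 0]
    corr = (P * (i - mean) * (j - mean)).sum() / var if var > 0 else 1.0
    return {
        "mean": mean,
        "variance": var,
        "homogeneity": (P / (1 + (i - j) ** 2)).sum(),
        "contrast": (P * (i - j) ** 2).sum(),
        "dissimilarity": (P * np.abs(i - j)).sum(),
        "entropy": -(nz * np.log(nz)).sum(),
        "ASM": (P ** 2).sum(),
        "correlation": corr,
    }
```

Object-level texture features would then be obtained by computing these measures over each object's pixels, or by averaging per-pixel texture images within each object. The table does not say which of the two options is used.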

| Ground Truth (rows) / Detection Result (columns) | Unchanged | Changed | Total |
|---|---|---|---|
| Unchanged | TP | FN | NT |
| Changed | FP | TN | NF |
| Total | NP | NN | N |

| Sensor | Spatial Resolution (m) | Swath Width (km) | Band Name | Wavelength (μm) | Band Name | Wavelength (μm) |
|---|---|---|---|---|---|---|
| GF-1 WFV | 16 | 800 | B1 (blue) | 0.45–0.52 | | |
| | | | B2 (green) | 0.52–0.59 | | |
| | | | B3 (red) | 0.63–0.69 | | |
| | | | B4 (near-infrared) | 0.77–0.89 | | |
| GF-6 WFV | 16 | 800 | B7 (purple) | 0.40–0.45 | B3 (red) | 0.63–0.69 |
| | | | B1 (blue) | 0.45–0.52 | B5 (red-edge1) | 0.69–0.73 |
| | | | B2 (green) | 0.52–0.59 | B6 (red-edge2) | 0.73–0.77 |
| | | | B8 (yellow) | 0.59–0.63 | B4 (near-infrared) | 0.77–0.79 |

| Data | Imaging Time | Data | Imaging Time | Data | Imaging Time |
|---|---|---|---|---|---|
| GF1-WFV1 | 28 July 2015 | GF1-WFV2 | 14 August 2015 | GF1-WFV4 | 9 July 2015 |
| | 1 August 2015 | | 6 July 2022 | | 23 August 2015 |
| | 13 August 2015 | GF1-WFV3 | 5 June 2015 | | 29 July 2015 |
| | 23 August 2022 | | 23 August 2015 | | 22 July 2022 |
| | 29 July 2022 | | 27 August 2015 | | 6 July 2022 |
| GF1-WFV2 | 28 July 2015 | | 6 July 2022 | GF6-WFV | 25 July 2022 |
| | 1 August 2015 | | 22 July 2022 | | 8 July 2022 |
| | 5 August 2015 | GF1-WFV4 | 25 July 2015 | | 21 August 2022 |
| | 7 August 2022 | | 29 July 2015 | | |

| Level | Metric | Subset 1 | Subset 2 | Subset 3 |
|---|---|---|---|---|
| Level 1 | MIoU | 0.7757 | 0.6828 | 0.5259 |
| | F-score | 0.8717 | 0.9729 | 0.8619 |
| Level 2 | MIoU | 0.9097 | 0.8401 | 0.5405 |
| | F-score | 0.9562 | 0.9892 | 0.8688 |
| Level 3 | MIoU | 0.9273 | 0.8244 | 0.5847 |
| | F-score | 0.9662 | 0.9874 | 0.8984 |
| Level 4 | MIoU | 0.9456 | 0.9316 | 0.7154 |
| | F-score | 0.9739 | 0.9962 | 0.9482 |

| Metric | PBCD-IRMAD [47] | OBCD-CVA [48] | OBCD-FCM [49] | OBCD-ISFA [50] | OBCD-IRMAD [22] | OBCD-DST [27] | UCDF [30] | KPCA-MNet [51] | Improved OBCD-IRMAD |
|---|---|---|---|---|---|---|---|---|---|
| Precision | 0.8236 | 0.8269 | 0.8253 | 0.8190 | 0.8545 | 0.8834 | 0.8544 | 0.8468 | 0.8827 |
| Recall | 0.8280 | 0.8320 | 0.8440 | 0.8800 | 0.8600 | 0.8720 | 0.8600 | 0.8720 | 0.9000 |
| OA | 83.82% | 84.36% | 84.73% | 85.64% | 86.91% | 88.69% | 86.55% | 87.38% | 90.00% |
| Kappa | 0.6742 | 0.7111 | 0.6927 | 0.7123 | 0.7362 | 0.7722 | 0.7277 | 0.7439 | 0.7987 |
| F-score | 0.8234 | 0.8287 | 0.8341 | 0.8481 | 0.8565 | 0.8770 | 0.8467 | 0.8584 | 0.8912 |

| Method | Improved-IRMAD | HIS | FO | Precision | Recall | F-Score |
|---|---|---|---|---|---|---|
| OBCD-IRMAD | | | | 0.8148 | 0.8800 | 0.8462 |
| Improved OBCD-IRMAD | √ | | | 0.8776 | 0.8600 | 0.8687 |
| Improved OBCD-IRMAD+HIS | √ | √ | | 0.8627 | 0.8800 | 0.8713 |
| Improved OBCD-IRMAD+FO | √ | | √ | 0.8491 | 0.9000 | 0.8738 |
| Improved OBCD-IRMAD+HIS+FO | √ | √ | √ | 0.8654 | 0.9000 | 0.8824 |

| Primary Ecosystem | Forest | Scrub | Grassland | Wetland | Artificial | Desert | Glacier |
|---|---|---|---|---|---|---|---|
| Changed samples | 110 | 126 | 160 | 160 | 160 | 144 | 40 |
| Unchanged samples | 200 | 200 | 200 | 200 | 200 | 176 | 44 |
| Omissions | 3 | 2 | 27 | 23 | 22 | 17 | 3 |
| False detections | 5 | 3 | 24 | 27 | 32 | 19 | 3 |
| Omission rate (% of changed samples) | 2.73% | 1.59% | 16.88% | 14.38% | 13.75% | 11.81% | 7.50% |
| False detection rate (% of unchanged samples) | 2.50% | 1.50% | 12.00% | 13.50% | 16.00% | 10.80% | 6.82% |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Du, Y.; He, X.; Chen, L.; Wang, D.; Jiao, W.; Liu, Y.; He, G.; Long, T. Improving Unsupervised Object-Based Change Detection via Hierarchical Multi-Scale Binary Partition Tree Segmentation: A Case Study in the Yellow River Source Region. Remote Sens. 2024, 16, 629. https://doi.org/10.3390/rs16040629
