Deep Learning Approach to Improve Spatial Resolution of GOES-17 Wildfire Boundaries Using VIIRS Satellite Data

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Source

2.1.1. Geostationary Operational Environmental Satellite (GOES)

2.1.2. Visible Infrared Imaging Radiometer Suite (VIIRS)

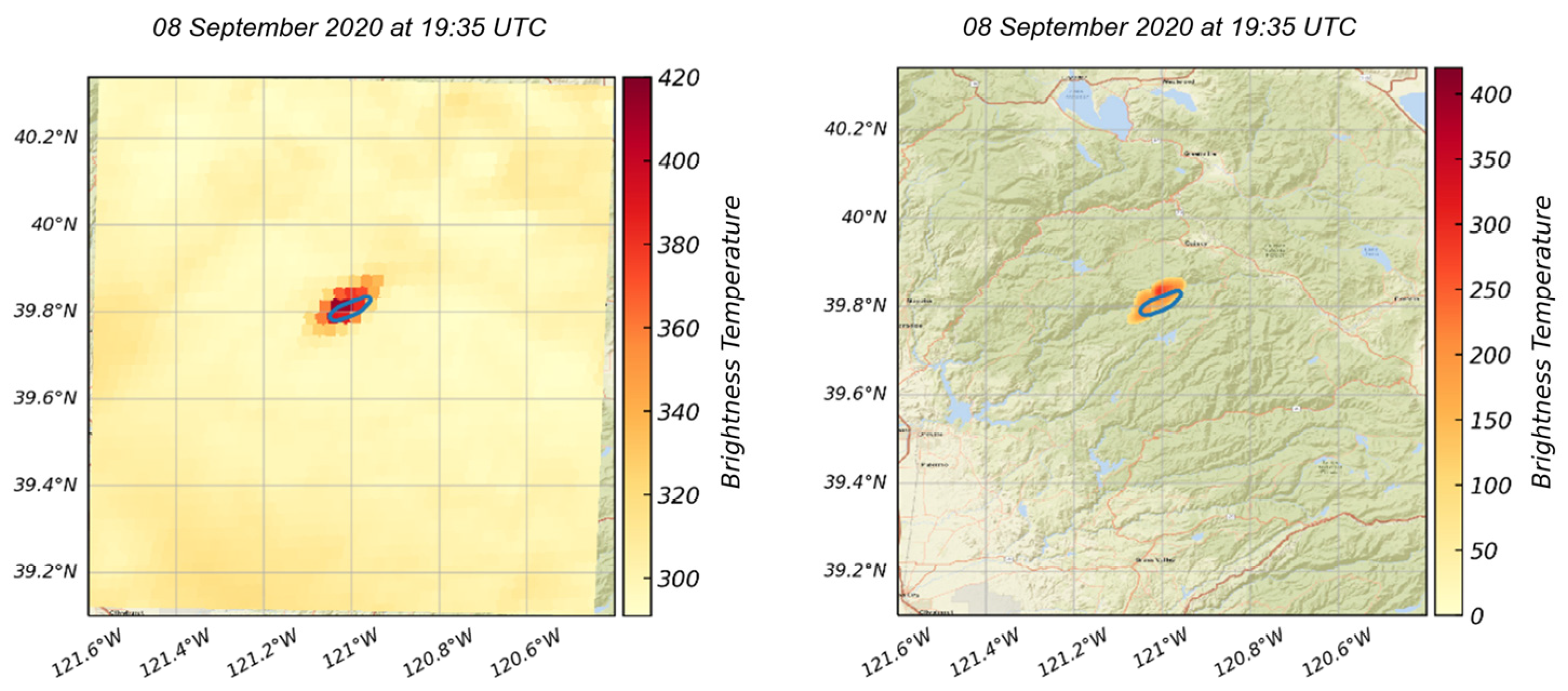

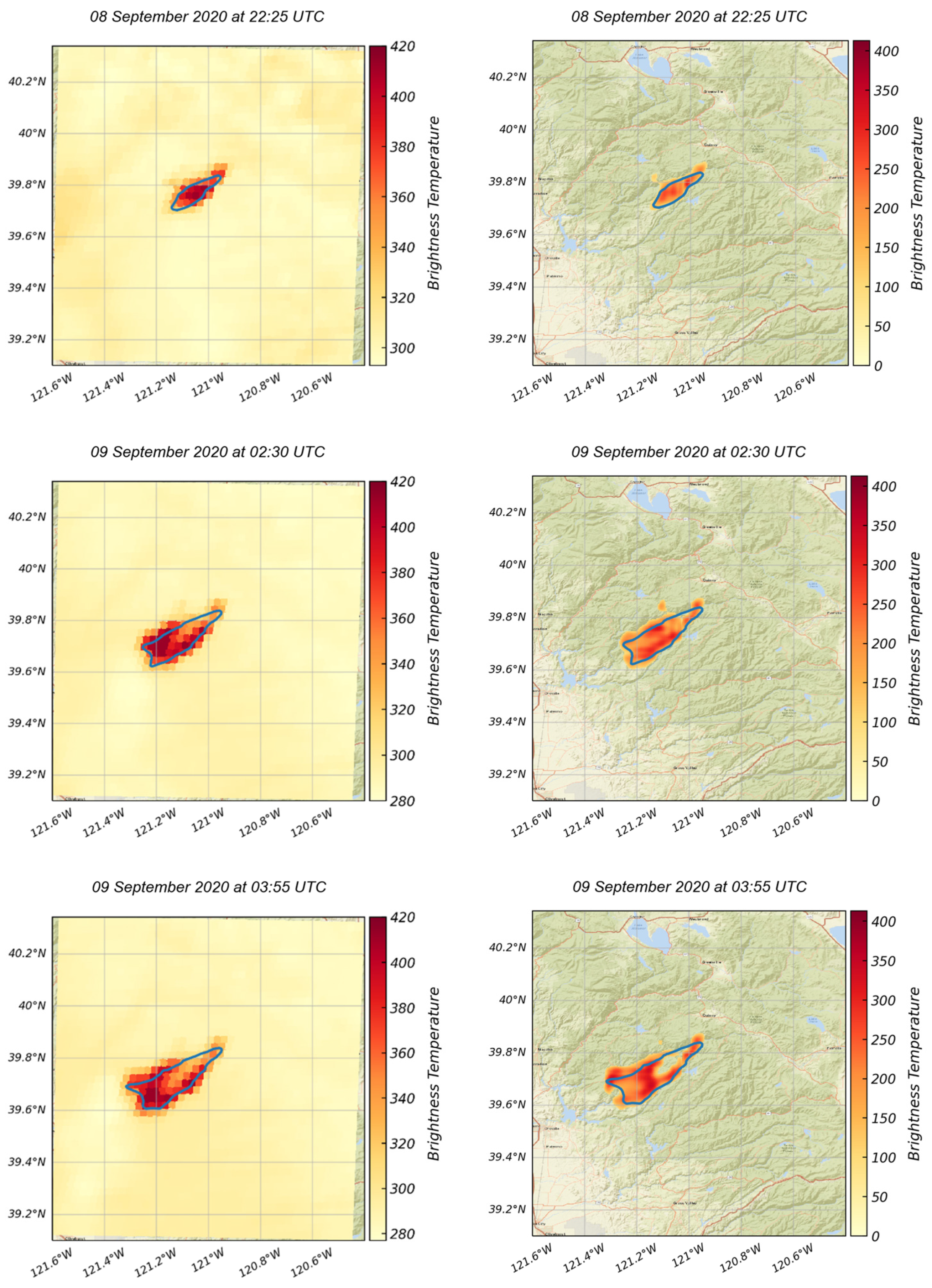

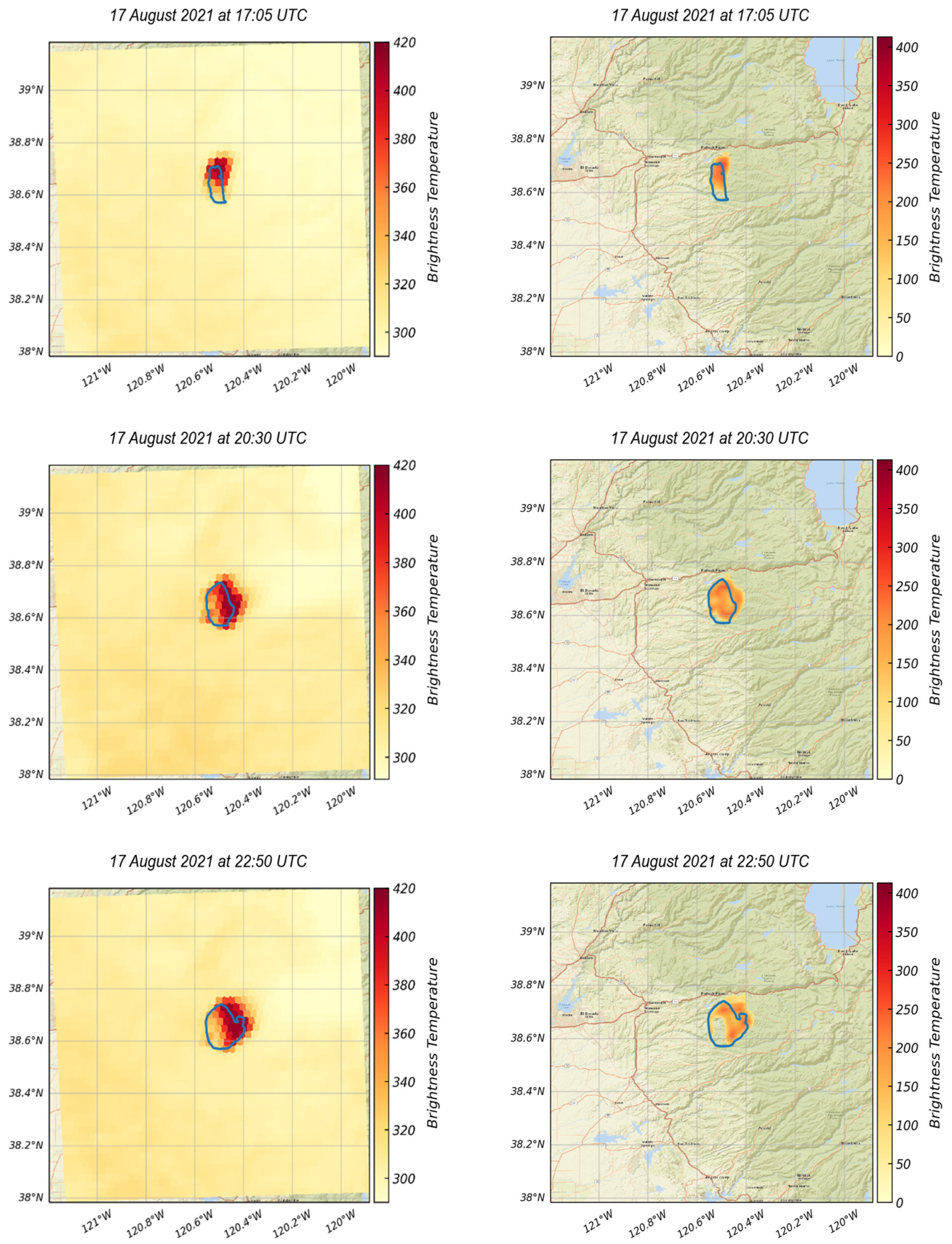

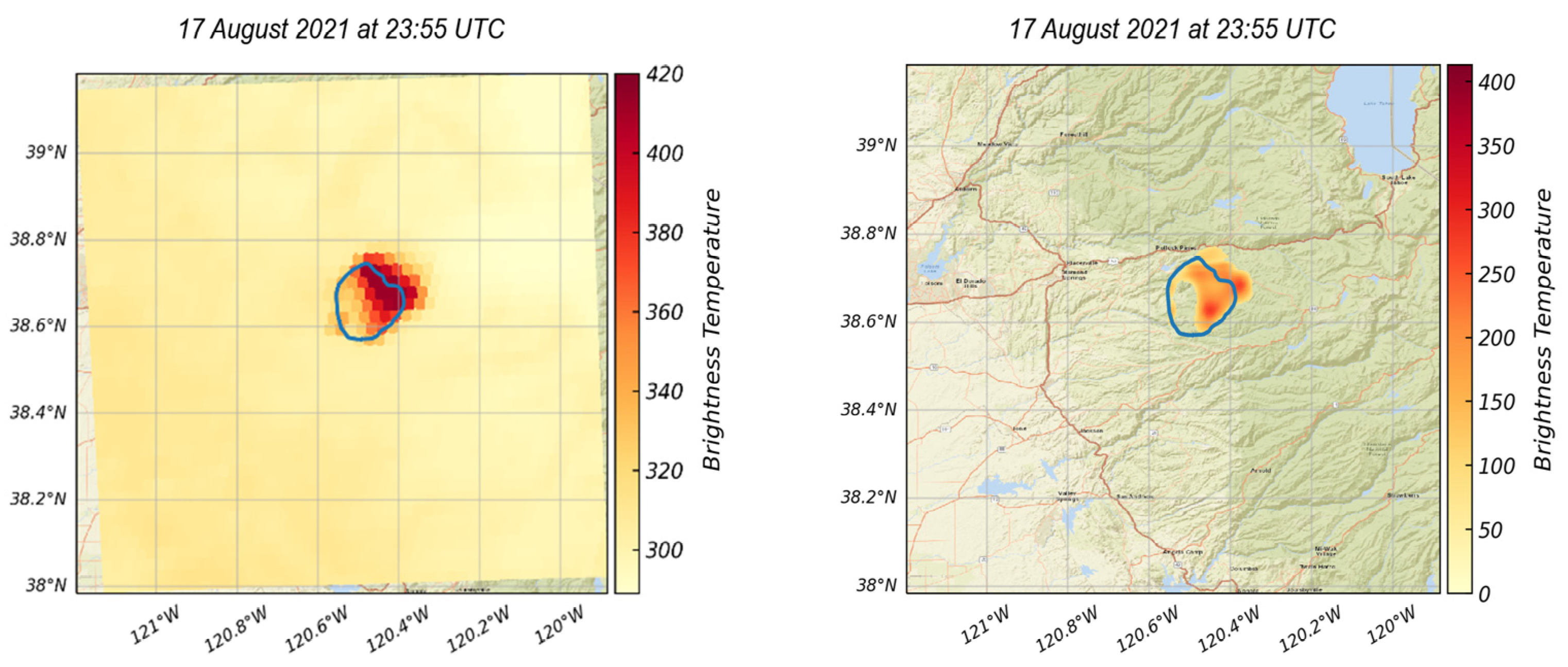

2.2. Data Pre-Processing

- Step 1: Extracting wildfire event data from VIIRS and identifying timestamps. The pipeline first extracts the records from the VIIRS CSV file that correspond to fire hotspots detected within the ROI and the duration of the wildfire event. It then identifies the unique timestamps among the extracted records.

- Step 2: Downloading GOES images for each identified timestamp. To ensure a contemporaneous dataset, the pre-processing pipeline downloads the GOES image whose capture time is nearest to each VIIRS timestamp identified in Step 1. GOES has a temporal resolution of 5 min, so there will always be a GOES image within 2.5 min of the VIIRS capture time, except when the GOES file is corrupted due to a cooling system issue [33]. If the GOES data are corrupted, Steps 3 and 4 are skipped and the pipeline proceeds to the next timestamp. It is important to note that the instrument delivers 94% of the intended data [33].

- Step 3: Creating processed GOES images. The GOES images obtained in Step 2 have a different projection from the corresponding VIIRS data. In this step, the GOES images are cropped to match the site’s ROI and reprojected into a standard coordinate reference system (CRS).

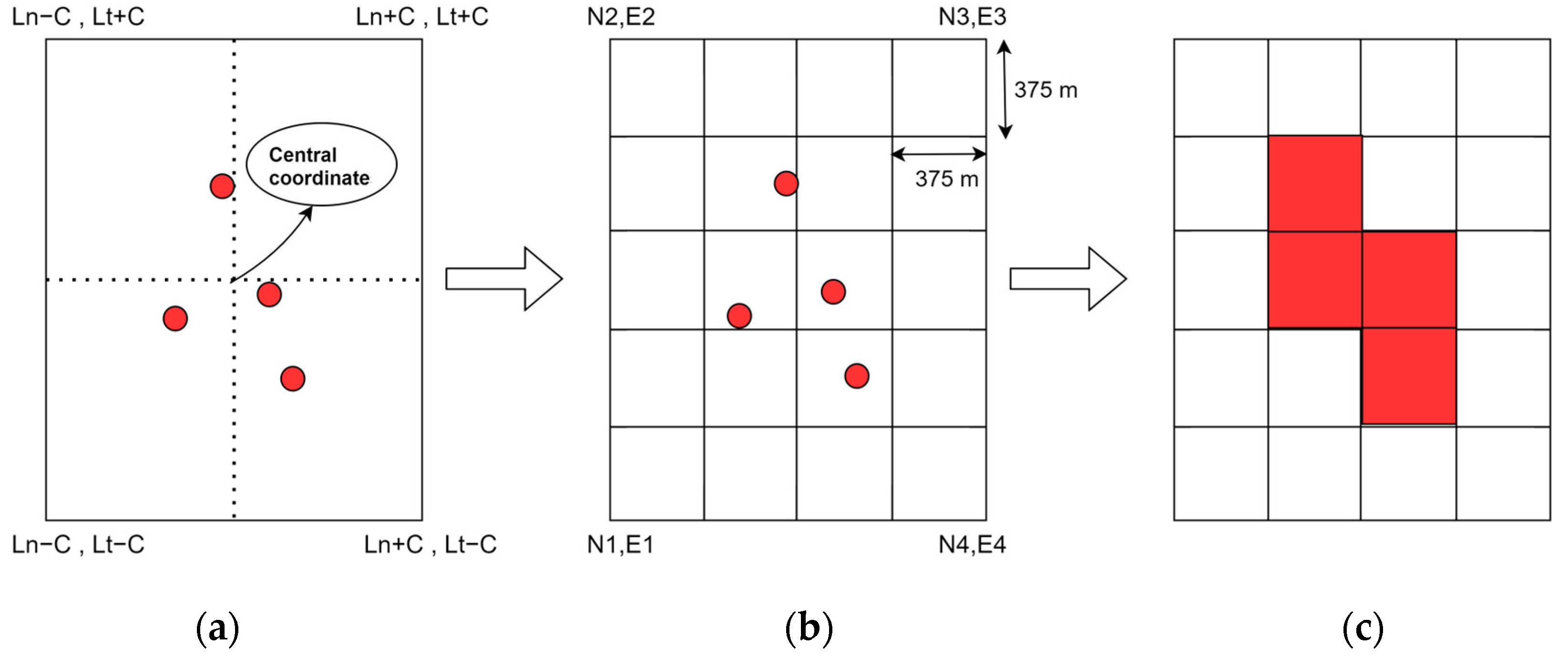

- Step 4: Creating processed VIIRS images. The VIIRS records obtained in Step 1 are grouped by timestamp, rasterized, interpolated, and saved as GeoTIFF images using the same projection that is used to reproject the GOES images in Step 3.
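The record selection in Step 1 and the timestamp matching in Step 2 can be illustrated with a minimal sketch. It is not the authors' implementation: the record layout `(longitude, latitude, datetime)`, the function names, the ROI bounds, and the sample values are all hypothetical, and only the 5 min scan interval and the 2.5 min tolerance come from the text.

```python
from bisect import bisect_left
from datetime import datetime, timedelta


def select_viirs_timestamps(records, roi, start, end):
    """Return the sorted unique timestamps of detections inside the ROI and time window.

    records: iterable of (longitude, latitude, datetime) tuples (hypothetical layout).
    roi: (min_lon, min_lat, max_lon, max_lat).
    """
    min_lon, min_lat, max_lon, max_lat = roi
    stamps = {
        t
        for lon, lat, t in records
        if min_lon <= lon <= max_lon and min_lat <= lat <= max_lat and start <= t <= end
    }
    return sorted(stamps)


def nearest_goes_scan(viirs_time, goes_times, tolerance=timedelta(minutes=2.5)):
    """Return the GOES scan time closest to viirs_time, or None if none is within tolerance.

    goes_times must be sorted in ascending order. A missing (e.g., corrupted) scan
    is modelled simply by its absence from the list.
    """
    i = bisect_left(goes_times, viirs_time)
    candidates = goes_times[max(i - 1, 0):i + 1]  # neighbours on either side
    if not candidates:
        return None
    best = min(candidates, key=lambda g: abs(g - viirs_time))
    return best if abs(best - viirs_time) <= tolerance else None


if __name__ == "__main__":
    # Illustrative values only; they are not taken from the study's data.
    roi = (-123.3, 38.3, -122.3, 39.3)
    records = [
        (-122.78, 38.79, datetime(2019, 10, 24, 9, 42)),
        (-122.80, 38.80, datetime(2019, 10, 24, 9, 42)),  # same overpass
        (-100.00, 38.79, datetime(2019, 10, 24, 9, 42)),  # outside the ROI
    ]
    stamps = select_viirs_timestamps(
        records, roi, datetime(2019, 10, 23), datetime(2019, 11, 6)
    )
    scans = [datetime(2019, 10, 24, 9, 0) + timedelta(minutes=5 * k) for k in range(12)]
    for stamp in stamps:
        print(stamp, "->", nearest_goes_scan(stamp, scans))
```

Returning `None` when no scan lies within the tolerance mirrors the behaviour described in Step 2, where a timestamp without usable GOES data is skipped.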

2.2.1. GOES Pre-Processing

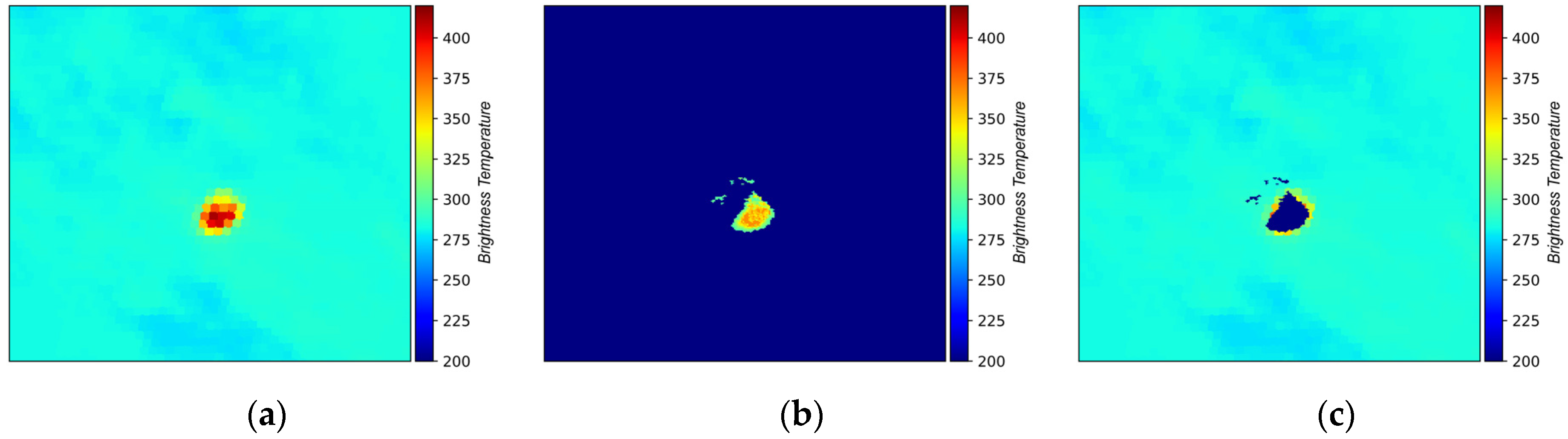

2.2.2. VIIRS Pre-Processing
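As a rough illustration of the rasterization mentioned in Step 4 of Section 2.2, the sketch below bins point detections into a regular grid. It makes several assumptions that are not taken from the paper: the coordinates are already in the target CRS, cells are square, each cell keeps the maximum value of the points falling in it, and empty cells are set to 0. The interpolation and GeoTIFF export described in Step 4 are not covered, and the function name `rasterize_points` is hypothetical.

```python
def rasterize_points(points, roi, cell_size):
    """Bin (x, y, value) points into a north-up grid, keeping the max value per cell.

    roi: (min_x, min_y, max_x, max_y) in the same CRS as the points.
    Returns a list of rows; row 0 corresponds to the northern edge of the ROI.
    """
    min_x, min_y, max_x, max_y = roi
    n_cols = max(1, round((max_x - min_x) / cell_size))
    n_rows = max(1, round((max_y - min_y) / cell_size))
    grid = [[0.0] * n_cols for _ in range(n_rows)]
    for x, y, value in points:
        if not (min_x <= x <= max_x and min_y <= y <= max_y):
            continue  # ignore detections outside the ROI
        # Clamp so that points exactly on the far edge fall in the last cell.
        col = min(int((x - min_x) / cell_size), n_cols - 1)
        row = min(int((max_y - y) / cell_size), n_rows - 1)
        grid[row][col] = max(grid[row][col], value)
    return grid


if __name__ == "__main__":
    # Illustrative values only.
    demo = rasterize_points(
        [(0.5, 1.5, 300.0), (0.5, 1.5, 320.0), (3.5, 0.5, 310.0)],
        roi=(0.0, 0.0, 4.0, 2.0),
        cell_size=1.0,
    )
    for row in demo:
        print(row)
```

Keeping the maximum per cell is only one possible aggregation rule; a mean or a count would fit the same loop.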

3. Proposed Approach

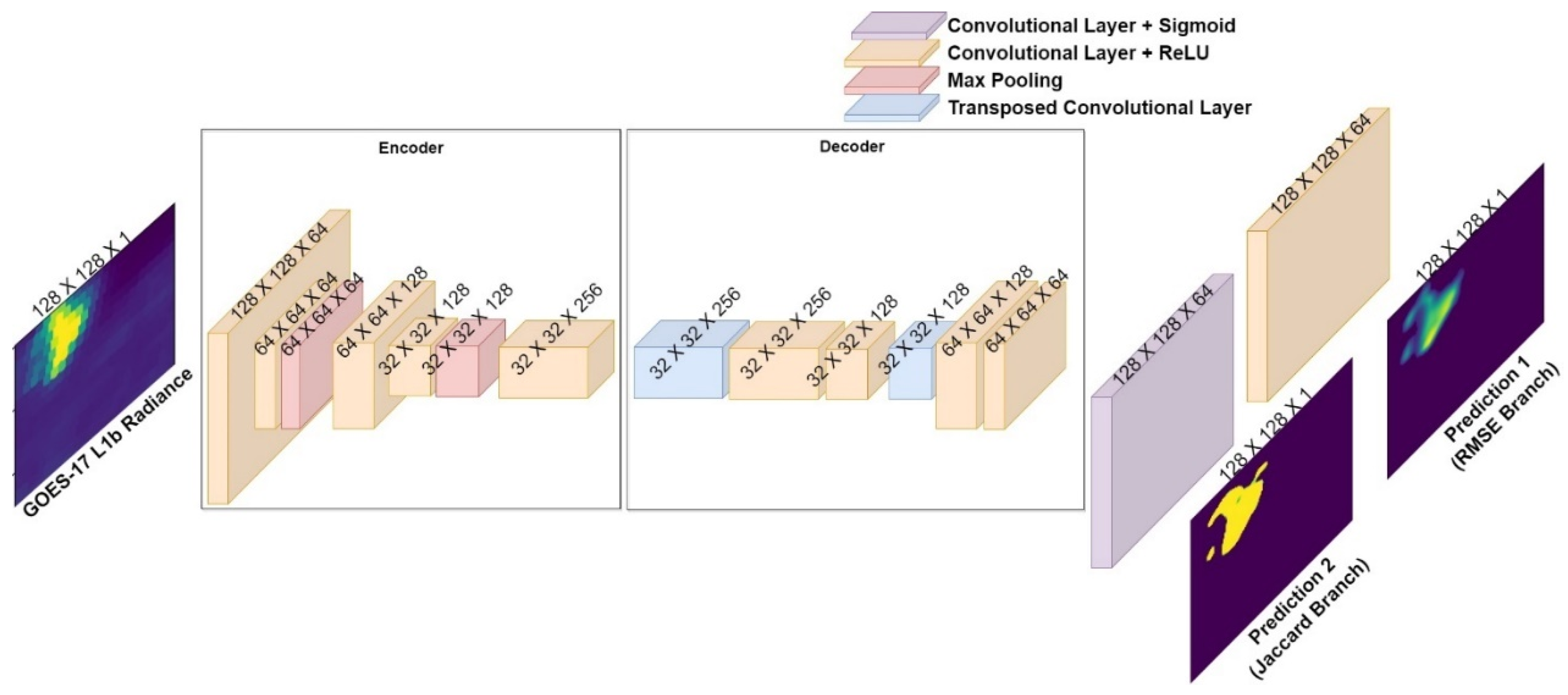

3.1. Autoencoder

3.2. Loss Functions and Architectural Tweaking

3.2.1. Global Root Mean Square Error (GRMSE)

3.2.2. Global Plus Local RMSE (GLRMSE)

3.2.3. Jaccard Loss (JL)

3.2.4. RMSE Plus Jaccard Loss Using Two-Branch Architecture

3.3. Evaluation

3.3.1. Pre-Processing: Removing Background Noise

3.3.2. Evaluation Metrics

3.3.3. Dataset Categorization

3.3.4. Post-Processing: Normalization of Prediction Values

4. Results

4.1. Training

4.2. Testing

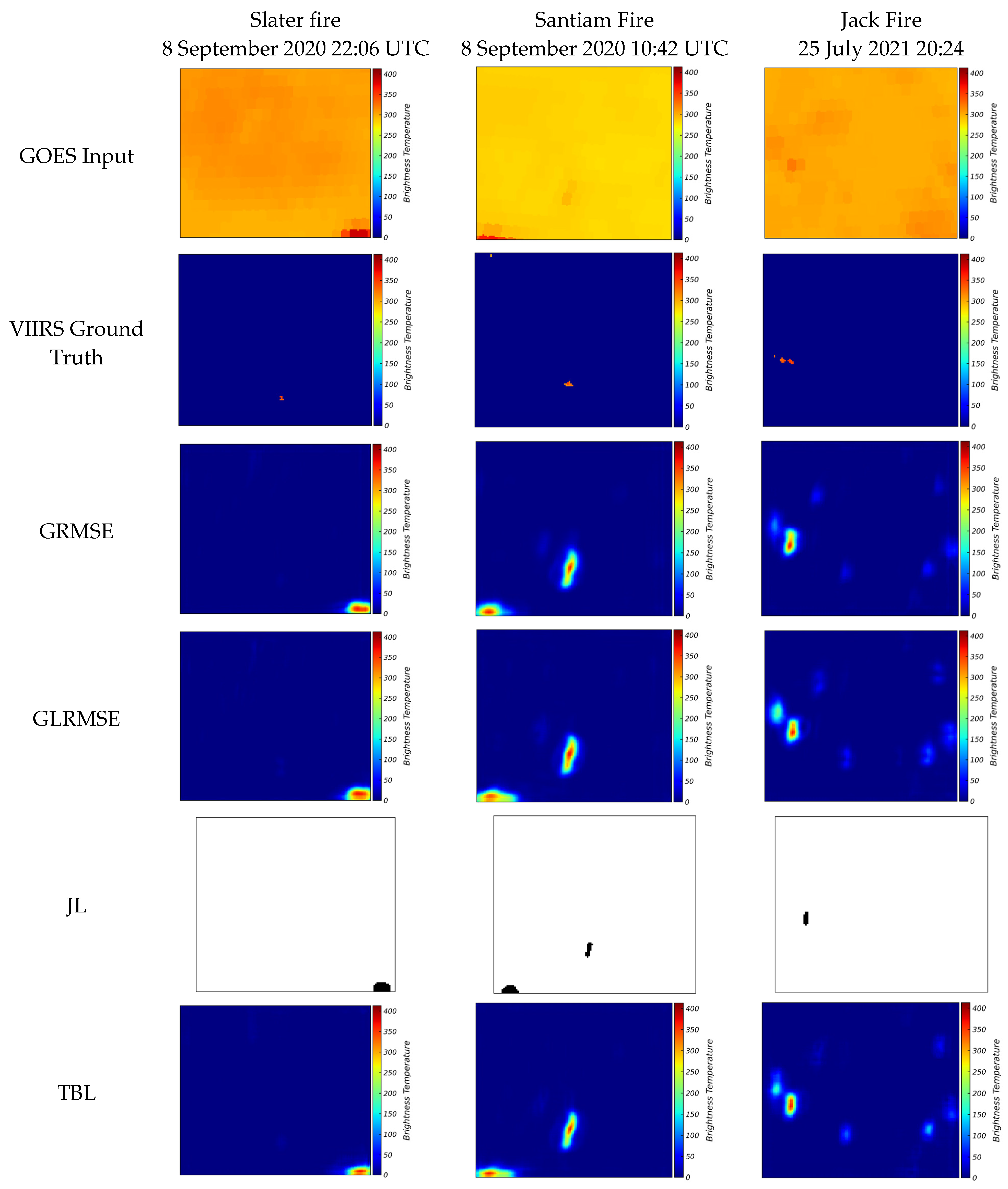

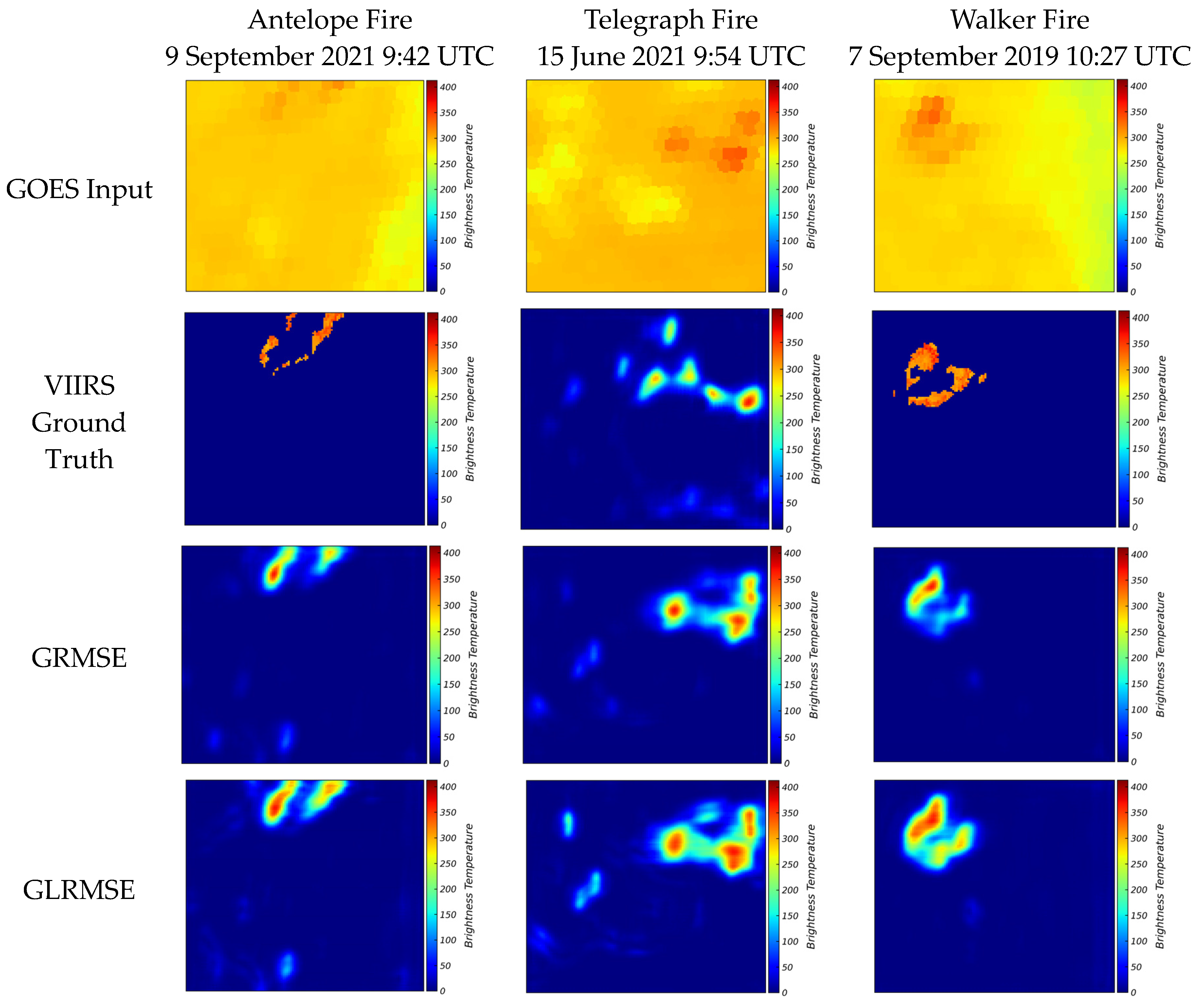

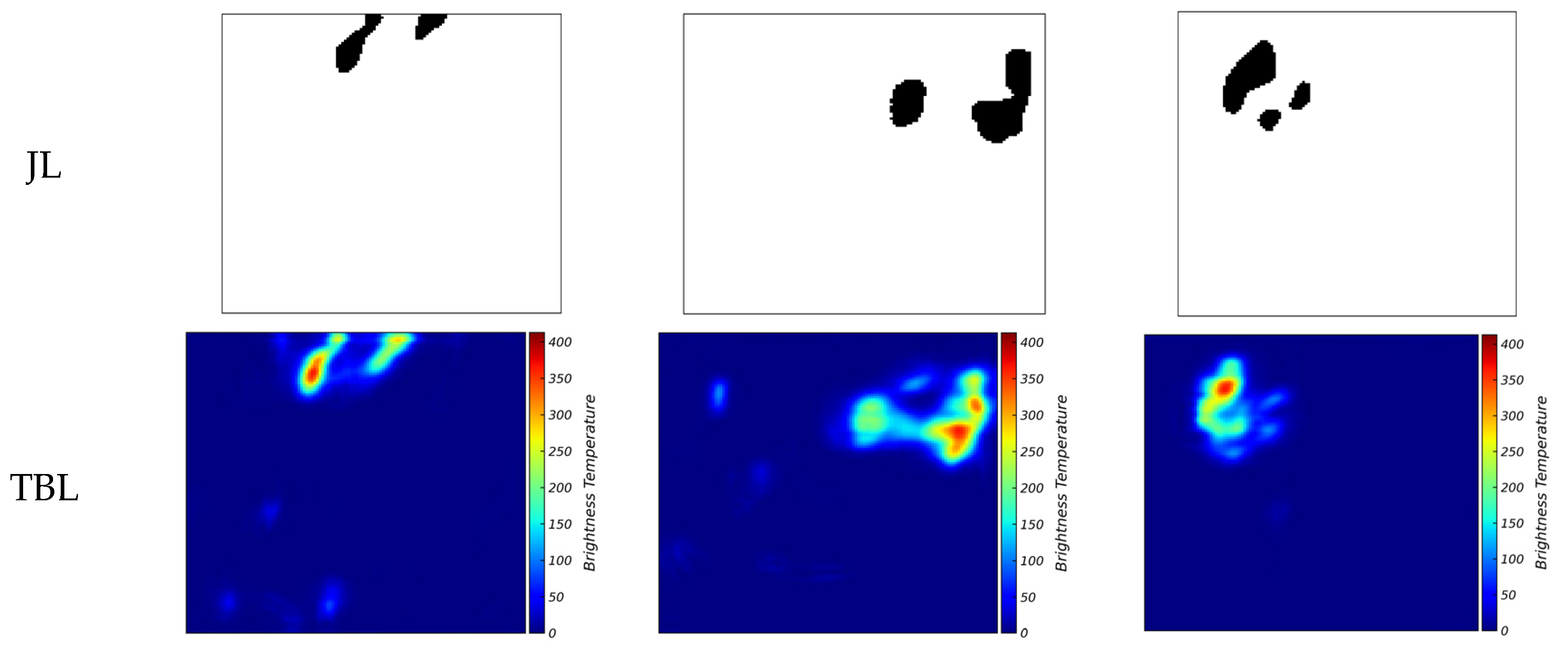

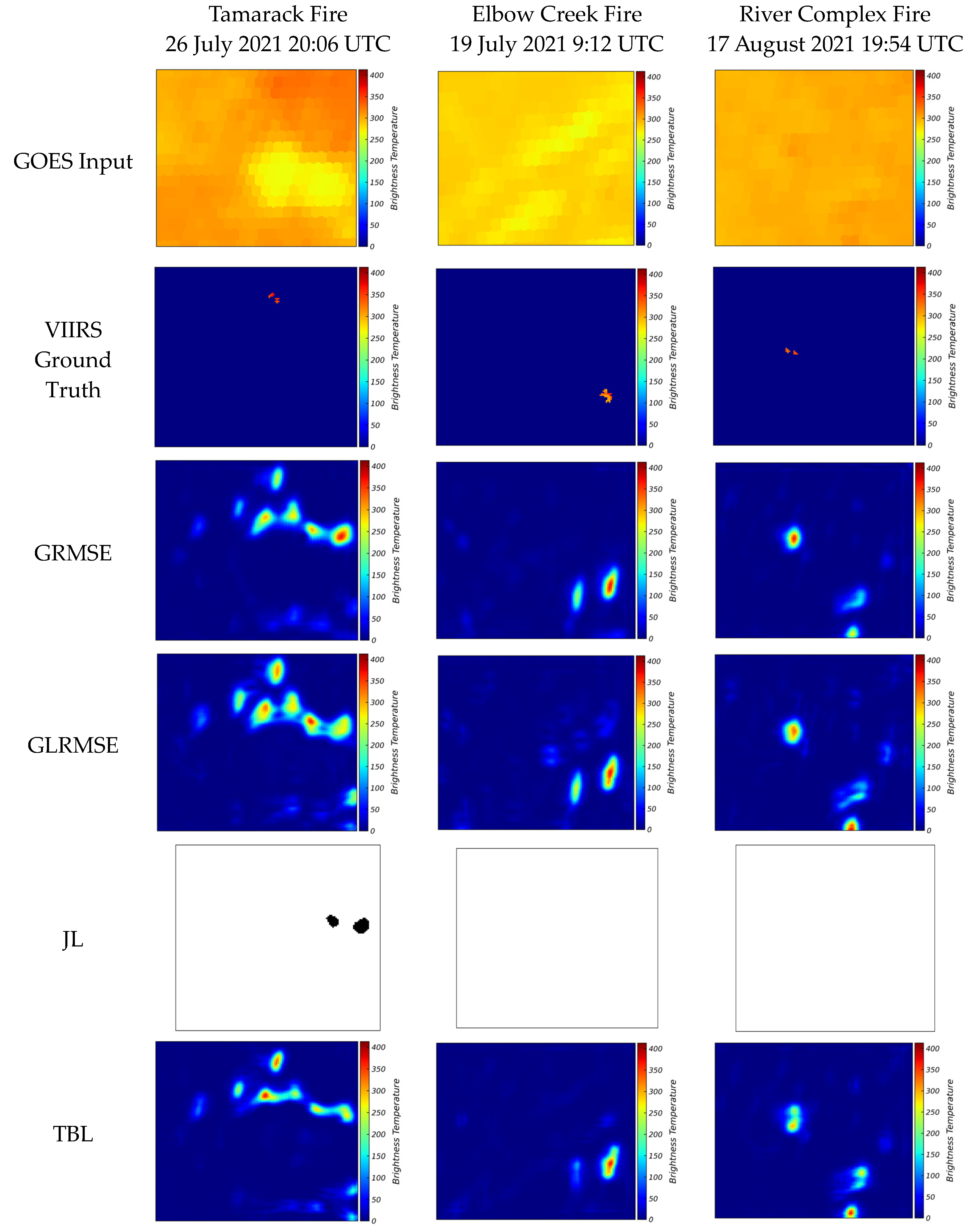

4.2.1. LCHI: Low Coverage with High IOU

4.2.2. LCLI: Low Coverage with Low IOU

4.2.3. HCHI: High Coverage with High IOU

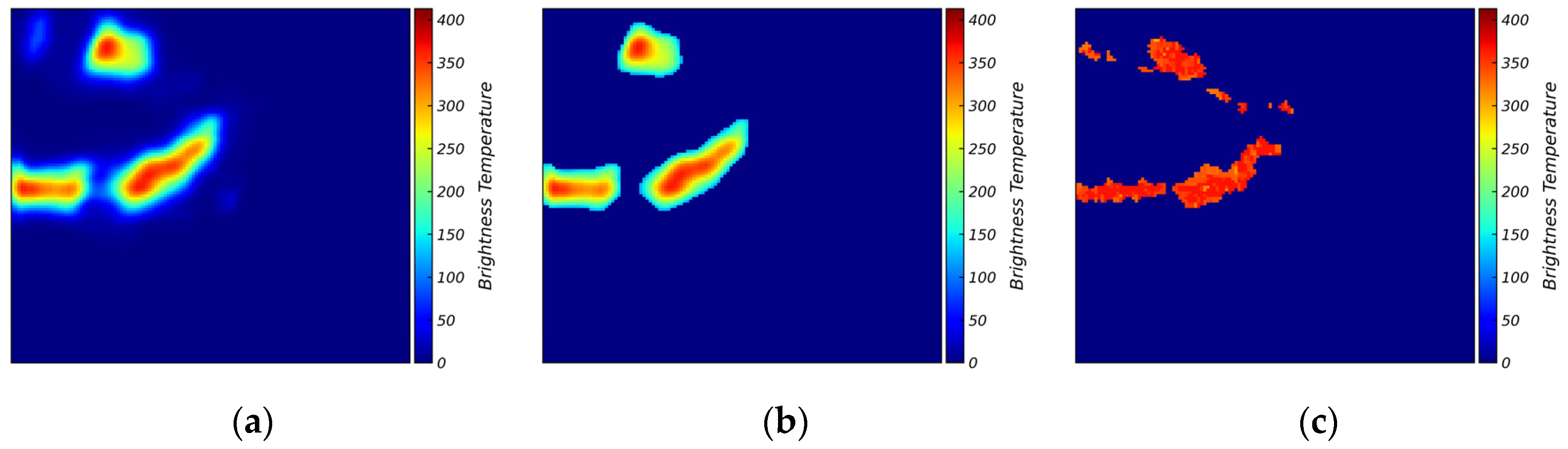

4.2.4. HCLI: High Coverage with Low IOU

4.3. Blind Testing

4.4. Opportunities and Limitations

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. List of Wildfire Events Used in This Study

| Site | Central Longitude | Central Latitude | Fire Start Date | Fire End Date |
|---|---|---|---|---|
| Kincade | −122.780 | 38.792 | 23 October 2019 | 6 November 2019 |
| Walker | −120.669 | 40.053 | 4 September 2019 | 25 September 2019 |
| Tucker | −121.243 | 41.726 | 28 July 2019 | 15 August 2019 |
| Taboose | −118.345 | 37.034 | 4 September 2019 | 21 November 2019 |
| Maria | −118.997 | 34.302 | 31 October 2019 | 5 November 2019 |
| Redbank | −122.64 | 40.12 | 5 September 2019 | 13 September 2019 |
| Saddle ridge | −118.481 | 34.329 | 10 October 2019 | 31 October 2019 |
| Lone | −121.576 | 39.434 | 5 September 2019 | 13 September 2019 |
| Richter creek fire | −119.66 | 49.04 | 13 May 2019 | 20 May 2019 |
| LNU lightning complex | −122.237 | 38.593 | 18 August 2020 | 30 September 2020 |
| SCU lightning complex | −121.438 | 37.352 | 14 August 2020 | 1 October 2020 |
| CZU lightning complex | −122.280 | 37.097 | 16 August 2020 | 22 September 2020 |
| August complex | −122.97 | 39.868 | 17 August 2020 | 23 September 2020 |
| North complex fire | −120.12 | 39.69 | 14 August 2020 | 3 December 2020 |
| Glass fire | −122.496 | 38.565 | 27 September 2020 | 30 October 2020 |
| Beachie wildfire | −122.138 | 44.745 | 2 September 2020 | 14 September 2020 |
| Beachie wildfire 2 | −122.239 | 45.102 | 2 September 2020 | 14 September 2020 |
| Holiday farm wildfire | −122.49 | 44.15 | 7 September 2020 | 14 September 2020 |
| Cold spring fire | −119.572 | 48.850 | 6 September 2020 | 14 September 2020 |
| Creek fire | −119.3 | 37.2 | 5 September 2020 | 10 September 2020 |
| Blue ridge fire | −117.68 | 33.88 | 26 October 2020 | 30 October 2020 |
| Silverado fire | −117.66 | 33.74 | 26 October 2020 | 27 October 2020 |
| Bond fire | −117.67 | 33.74 | 2 December 2020 | 7 December 2020 |
| Washington fire | −119.556 | 48.825 | 18 August 2020 | 30 August 2020 |
| Oregon fire | −121.645 | 44.738 | 17 August 2020 | 30 August 2020 |
| Christie mountain | −119.54 | 49.364 | 18 August 2020 | 30 September 2020 |
| Bush fire | −111.564 | 33.629 | 13 June 2020 | 6 July 2020 |
| Magnum fire | −112.34 | 36.61 | 8 June 2020 | 6 July 2020 |
| Bighorn fire | −111.03 | 32.53 | 6 June 2020 | 23 July 2020 |
| Santiam fire | −122.19 | 44.82 | 31 August 2020 | 30 September 2020 |
| Holiday farm fire | −122.45 | 44.15 | 7 September 2020 | 30 September 2020 |
| Slater fire | −123.38 | 41.77 | 7 September 2020 | 30 September 2020 |
| Pinnacle fire | −110.201 | 32.865 | 10 June 2021 | 16 July 2021 |
| Backbone fire | −111.677 | 34.344 | 16 June 2021 | 19 July 2021 |
| Rafael fire | −112.162 | 34.942 | 18 June 2021 | 15 July 2021 |
| Telegraph fire | −111.092 | 33.209 | 4 June 2021 | 3 July 2021 |
| Dixie | −121 | 40 | 15 June 2021 | 15 August 2021 |
| Monument | −123.33 | 40.752 | 30 July 2021 | 25 October 2021 |
| River complex | −123.018 | 41.143 | 30 July 2021 | 25 October 2021 |
| Antelope | −121.919 | 41.521 | 1 August 2021 | 15 October 2021 |
| McFarland | −123.034 | 40.35 | 29 July 2021 | 16 September 2021 |
| Beckwourth complex | −118.811 | 36.567 | 3 July 2021 | 22 September 2021 |
| Windy | −118.631 | 36.047 | 9 September 2021 | 15 November 2021 |
| McCash | −123.404 | 41.564 | 31 July 2021 | 27 October 2021 |
| KNP complex | −118.811 | 36.567 | 10 September 2021 | 16 December 2021 |
| Tamarack | −119.857 | 38.628 | 4 July 2021 | 8 October 2021 |
| French | −118.55 | 35.687 | 18 August 2021 | 19 October 2021 |
| Lava | −122.329 | 41.459 | 25 June 2021 | 3 September 2021 |
| Alisal | −120.131 | 34.517 | 11 October 2021 | 16 November 2021 |
| Salt | −122.336 | 40.849 | 30 June 2021 | 19 July 2021 |
| Tennant | −122.039 | 41.665 | 28 June 2021 | 12 July 2021 |
| Bootleg | −121.421 | 42.616 | 6 July 2021 | 14 August 2021 |
| Cougar peak | −120.613 | 42.277 | 7 September 2021 | 21 October 2021 |
| Devil’s Knob Complex | −123.268 | 41.915 | 3 August 2021 | 19 October 2021 |
| Roughpatch complex | −122.676 | 43.511 | 29 July 2021 | 29 November 2021 |
| Middlefork complex | −122.409 | 43.869 | 29 July 2021 | 13 December 2021 |
| Bull complex | −122.009 | 44.879 | 2 August 2021 | 19 November 2021 |
| Jack | −122.686 | 43.322 | 5 July 2021 | 29 November 2021 |
| Elbow Creek | −117.619 | 45.867 | 15 July 2021 | 24 September 2021 |
| Black Butte | −118.326 | 44.093 | 3 August 2021 | 27 September 2021 |
| Fox complex | −120.599 | 42.21 | 13 August 2021 | 1 September 2021 |
| Joseph canyon | −117.081 | 45.989 | 4 June 2021 | 15 July 2021 |
| Wrentham market | −121.006 | 45.49 | 29 June 2021 | 3 July 2021 |
| S-503 | −121.476 | 45.087 | 18 June 2021 | 18 August 2021 |
| Grandview | −121.4 | 44.466 | 11 July 2021 | 25 July 2021 |
| Lick Creek fire | −117.416 | 46.262 | 7 July 2021 | 14 August 2021 |
| Richter mountain fire | −119.7 | 49.06 | 26 July 2019 | 30 July 2019 |

References

- NIFC Wildfires and Acres|National Interagency Fire Center. Available online: https://www.nifc.gov/fire-information/statistics/wildfires (accessed on 21 January 2024).

- Taylor, A.H.; Harris, L.B.; Skinner, C.N. Severity Patterns of the 2021 Dixie Fire Exemplify the Need to Increase Low-Severity Fire Treatments in California’s Forests. Environ. Res. Lett. 2022, 17, 071002.

- Liao, Y.; Kousky, C. The Fiscal Impacts of Wildfires on California Municipalities. J. Assoc. Environ. Resour. Econ. 2022, 9, 455–493.

- Westerling, A.L.; Hidalgo, H.G.; Cayan, D.R.; Swetnam, T.W. Warming and Earlier Spring Increase Western U.S. Forest Wildfire Activity. Science 2006, 313, 940–943.

- Szpakowski, D.M.; Jensen, J.L.R. A Review of the Applications of Remote Sensing in Fire Ecology. Remote Sens. 2019, 11, 2638.

- Barmpoutis, P.; Papaioannou, P.; Dimitropoulos, K.; Grammalidis, N. A Review on Early Forest Fire Detection Systems Using Optical Remote Sensing. Sensors 2020, 20, 6442.

- Radke, L.F.; Clark, T.L.; Coen, J.L.; Walther, C.A.; Lockwood, R.N.; Riggan, P.J.; Brass, J.A.; Higgins, R.G. The Wildfire Experiment (WIFE): Observations with Airborne Remote Sensors. Can. J. Remote Sens. 2000, 26, 406–417.

- Loew, A.; Bell, W.; Brocca, L.; Bulgin, C.E.; Burdanowitz, J.; Calbet, X.; Donner, R.V.; Ghent, D.; Gruber, A.; Kaminski, T. Validation Practices for Satellite-Based Earth Observation Data across Communities. Rev. Geophys. 2017, 55, 779–817.

- Kumar, S.S.; Roy, D.P. Global Operational Land Imager Landsat-8 Reflectance-Based Active Fire Detection Algorithm. Int. J. Digit. Earth 2018, 11, 154–178.

- Li, J.; Roy, D.P. A Global Analysis of Sentinel-2a, Sentinel-2b and Landsat-8 Data Revisit Intervals and Implications for Terrestrial Monitoring. Remote Sens. 2017, 9, 902.

- Lentile, L.B.; Holden, Z.A.; Smith, A.M.S.; Falkowski, M.J.; Hudak, A.T.; Morgan, P.; Lewis, S.A.; Gessler, P.E.; Benson, N.C. Remote Sensing Techniques to Assess Active Fire Characteristics and Post-Fire Effects. Int. J. Wildl. Fire 2006, 15, 319–345.

- Hu, X.; Ban, Y.; Nascetti, A. Sentinel-2 MSI Data for Active Fire Detection in Major Fire-Prone Biomes: A Multi-Criteria Approach. Int. J. Appl. Earth Obs. Geoinf. 2021, 101, 102347.

- Schroeder, W.; Oliva, P.; Giglio, L.; Csiszar, I.A. The New VIIRS 375 m Active Fire Detection Data Product: Algorithm Description and Initial Assessment. Remote Sens. Environ. 2014, 143, 85–96.

- Giglio, L.; Schroeder, W.; Justice, C.O. The Collection 6 MODIS Active Fire Detection Algorithm and Fire Products. Remote Sens. Environ. 2016, 178, 31–41.

- Xu, W.; Wooster, M.J.; He, J.; Zhang, T. First Study of Sentinel-3 SLSTR Active Fire Detection and FRP Retrieval: Night-Time Algorithm Enhancements and Global Intercomparison to MODIS and VIIRS AF Products. Remote Sens. Environ. 2020, 248, 111947.

- Oliva, P.; Schroeder, W. Assessment of VIIRS 375 m Active Fire Detection Product for Direct Burned Area Mapping. Remote Sens. Environ. 2015, 160, 144–155.

- Schroeder, W.; Prins, E.; Giglio, L.; Csiszar, I.; Schmidt, C.; Morisette, J.; Morton, D. Validation of GOES and MODIS Active Fire Detection Products Using ASTER and ETM+ Data. Remote Sens. Environ. 2008, 112, 2711–2726.

- Koltunov, A.; Ustin, S.L.; Prins, E.M. On Timeliness and Accuracy of Wildfire Detection by the GOES WF-ABBA Algorithm over California during the 2006 Fire Season. Remote Sens. Environ. 2012, 127, 194–209.

- Li, F.; Zhang, X.; Kondragunta, S.; Schmidt, C.C.; Holmes, C.D. A Preliminary Evaluation of GOES-16 Active Fire Product Using Landsat-8 and VIIRS Active Fire Data, and Ground-Based Prescribed Fire Records. Remote Sens. Environ. 2020, 237, 111600.

- Lindley, T.T.; Zwink, A.B.; Gravelle, C.M.; Schmidt, C.C.; Palmer, C.K.; Rowe, S.T.; Heffernan, R.; Driscoll, N.; Kent, G.M. Ground-Based Corroboration of Goes-17 Fire Detection Capabilities during Ignition of the Kincade Fire. J. Oper. Meteorol. 2020, 8, 105–110.

- Rashid, M.; Sulaiman, N.; Mustafa, M.; Khatun, S.; Bari, B.S. The Classification of EEG Signal Using Different Machine Learning Techniques for BCI Application. Commun. Comput. Inf. Sci. 2019, 1015, 207–221.

- Ren, S.; Zhou, Y.; He, L. Multi-Object Tracking with Pre-Classified Detection. In Proceedings of the Advances in Intelligent Systems and Computing; Springer: Berlin/Heidelberg, Germany, 2019; Volume 751, pp. 503–513.

- Nezhad, M.Z.; Sadati, N.; Yang, K.; Zhu, D. A Deep Active Survival Analysis Approach for Precision Treatment Recommendations: Application of Prostate Cancer. Expert Syst. Appl. 2019, 115, 16–26.

- Xu, Y.; Wu, L.; Xie, Z.; Chen, Z. Building Extraction in Very High Resolution Remote Sensing Imagery Using Deep Learning and Guided Filters. Remote Sens. 2018, 10, 144.

- Albert, A.; Kaur, J.; Gonzalez, M.C. Using Convolutional Networks and Satellite Imagery to Identify Patterns in Urban Environments at a Large Scale. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13 August 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 1357–1366.

- Oh, T.; Chung, M.J.; Myung, H. Accurate Localization in Urban Environments Using Fault Detection of GPS and Multi-Sensor Fusion. In Proceedings of the Advances in Intelligent Systems and Computing; Springer: Berlin/Heidelberg, Germany, 2017; Volume 447, pp. 43–53.

- Toan, N.T.; Thanh Cong, P.; Viet Hung, N.Q.; Jo, J. A Deep Learning Approach for Early Wildfire Detection from Hyperspectral Satellite Images. In Proceedings of the 2019 7th International Conference on Robot Intelligence Technology and Applications, RiTA 2019, Daejeon, Republic of Korea, 1 November 2019; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA; pp. 38–45.

- Phan, T.C.; Nguyen, T.T.; Hoang, T.D.; Nguyen, Q.V.H.; Jo, J. Multi-Scale Bushfire Detection from Multi-Modal Streams of Remote Sensing Data. IEEE Access 2020, 8, 228496–228513.

- Zhao, Y.; Ban, Y. GOES-R Time Series for Early Detection of Wildfires with Deep GRU-Network. Remote Sens. 2022, 14, 4347.

- McCarthy, N.F.; Tohidi, A.; Aziz, Y.; Dennie, M.; Valero, M.M.; Hu, N. A Deep Learning Approach to Downscale Geostationary Satellite Imagery for Decision Support in High Impact Wildfires. Forests 2021, 12, 294.

- Ghali, R.; Akhloufi, M.A. Deep Learning Approaches for Wildland Fires Using Satellite Remote Sensing Data: Detection, Mapping, and Prediction. Fire 2023, 6, 192.

- Chen, Z.; Pawar, K.; Ekanayake, M.; Pain, C.; Zhong, S.; Egan, G.F. Deep Learning for Image Enhancement and Correction in Magnetic Resonance Imaging-State-of-the-Art and Challenges. J. Digit. Imaging 2023, 36, 204–230.

- Valenti, J. Goes R Series Product Definition and Users’ Guide; NOAA: Silver Spring, MD, USA, 2018; Volume 4.

- Losos, D. Beginner’s Guide to GOES-R Series Data; NOAA: Silver Spring, MD, USA, 2021.

- Schroeder, W.; Giglio, L. NASA VIIRS Land Science Investigator Processing System (SIPS) Visible Infrared Imaging Radiometer Suite (VIIRS) 375 m & 750 m Active Fire Products: Product User’s Guide; NOAA: Silver Spring, MD, USA, 2018; Volumes 1–4.

- Cao, C.; Blonski, S.; Wang, W.; Uprety, S.; Shao, X.; Choi, J.; Lynch, E.; Kalluri, S. NOAA-20 VIIRS on-Orbit Performance, Data Quality, and Operational Cal/Val Support. In SPIE 10781, Earth Observing Missions and Sensors: Development, Implementation, and Characterization; SPIE: Bellingham, WA, USA, 2018; p. 21. ISBN 9781510621374.

- Visible Infrared Imaging Radiometer Suite (VIIRS)|NESDIS. Available online: https://www.nesdis.noaa.gov/current-satellite-missions/currently-flying/joint-polar-satellite-system/jpss-mission-and-2 (accessed on 18 October 2022).

- Visible Infrared Imaging Radiometer Suite (VIIRS)—LAADS DAAC. Available online: https://ladsweb.modaps.eosdis.nasa.gov/missions-and-measurements/viirs/ (accessed on 9 February 2023).

- VNP14IMGTDL_NRT|Earthdata. Available online: https://www.earthdata.nasa.gov/learn/find-data/near-real-time/firms/vnp14imgtdlnrt (accessed on 4 January 2023).

- Wythoff, B.J. Backpropagation Neural Networks. Chemom. Intell. Lab. Syst. 1993, 18, 115–155.

- NASA | LANCE | FIRMS. Available online: https://firms.modaps.eosdis.nasa.gov/country/ (accessed on 7 October 2022).

- Murphy, K.J.; Davies, D.K.; Michael, K.; Justice, C.O.; Schmaltz, J.E.; Boller, R.; McLemore, B.D.; Ding, F.; Wong, M.M. Lance, Nasa’s Land, Atmosphere near Real-Time Capability for Eos. In Time-Sensitive Remote Sensing; Springer: New York, NY, USA, 2015; pp. 113–127.

- Congalton, R.G. Exploring and Evaluating the Consequences of Vector-to-Raster and Raster-to-Vector Conversion. Photogramm. Eng. Remote Sens. 1997, 63, 425–434.

- Incidents|CAL FIRE. Available online: https://www.fire.ca.gov/incidents (accessed on 23 January 2024).

- 2020 Western United States Wildfires—Homeland Security Digital Library. Available online: https://www.hsdl.org/c/tl/2020-wildfires/ (accessed on 1 March 2023).

- Hoese, D. SatPy: A Python Library for Weather Satellite Processing. Ninth Symp. Adv. Model. Anal. Using Python 2019.

- Berk, A. Analytically Derived Conversion of Spectral Band Radiance to Brightness Temperature. J. Quant. Spectrosc. Radiat. Transf. 2008, 109, 1266–1276.

- Xing, Y.; Song, Q.; Cheng, G. Benefit of Interpolation in Nearest Neighbor Algorithms. SIAM J. Math. Data Sci. 2022, 4, 935–956.

- GDAL/OGR contributors GDAL/OGR Geospatial Data Abstraction Software Library. Open Source Geospat. Found. 2024.

- Park, S.; Gach, H.M.; Kim, S.; Lee, S.J.; Motai, Y. Autoencoder-Inspired Convolutional Network-Based Super-Resolution Method in MRI. IEEE J. Transl. Eng. Health Med. 2021, 9, 1–13.

- Wang, W.; Huang, Y.; Wang, Y.; Wang, L. Generalized Autoencoder: A Neural Network Framework for Dimensionality Reduction. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2016; pp. 496–503.

- Albawi, S.; Mohammed, T.A.; Al-Zawi, S. Understanding of a Convolutional Neural Network. In Proceedings of the International Conference on Engineering and Technology, ICET 2017, Antalya, Turkey, 7 March 2017; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2017; pp. 1–6.

- Shi, W.; Caballero, J.; Theis, L.; Huszar, F.; Aitken, A.; Ledig, C.; Wang, Z. Is the Deconvolution Layer the Same as a Convolutional Layer? arXiv 2016, arXiv:1609.07009.

- Chiang, H.T.; Hsieh, Y.Y.; Fu, S.W.; Hung, K.H.; Tsao, Y.; Chien, S.Y. Noise Reduction in ECG Signals Using Fully Convolutional Denoising Autoencoders. IEEE Access 2019, 7, 60806–60813.

- Barrowclough, O.J.D.; Muntingh, G.; Nainamalai, V.; Stangeby, I. Binary Segmentation of Medical Images Using Implicit Spline Representations and Deep Learning. Comput. Aided Geom. Des. 2021, 85, 101972.

- Duque-Arias, D.; Velasco-Forero, S.; Deschaud, J.E.; Goulette, F.; Serna, A.; Decencière, E.; Marcotegui, B. On Power Jaccard Losses for Semantic Segmentation. In Proceedings of the 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Prague, Czech Republic, 10 February 2021; SCITEPRESS—Science and Technology Publications: Lisboa, Portugal, 2021; Volume 5, pp. 561–568.

- Narayan, S. The Generalized Sigmoid Activation Function: Competitive Supervised Learning. Inf. Sci. 1997, 99, 69–82.

- Jun, Z.; Jinglu, H. Image Segmentation Based on 2D Otsu Method with Histogram Analysis. In Proceedings of the International Conference on Computer Science and Software Engineering, CSSE 2008, Wuhan, China, 12–14 December 2008; IEEE: Piscataway, NJ, USA, 2008; Volume 6, pp. 105–108.

- Kingma, D.P.; Ba, J.L. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015—Conference Track Proceedings, ICLR, San Diego, CA, USA, 22 December 2015.

- Lareau, N.P.; Donohoe, A.; Roberts, M.; Ebrahimian, H. Tracking Wildfires With Weather Radars. J. Geophys. Res. Atmos. 2022, 127, e2021JD036158.

- Finney, M.A.; Grenfell, I.C.; McHugh, C.W.; Seli, R.C.; Trethewey, D.; Stratton, R.D.; Brittain, S. A Method for Ensemble Wildland Fire Simulation. Environ. Model. Assess. 2011, 16, 153–167.

- Hong, Z.; Hamdan, E.; Zhao, Y.; Ye, T.; Pan, H.; Cetin, A.E. Wildfire Detection via Transfer Learning: A Survey. Signal, Image Video Process. 2023, 18, 207–214.

| Category | LCHI | LCLI | HCHI | HCLI |
|---|---|---|---|---|
| Condition | Coverage < 20%, IOU > 5% | Coverage < 20%, IOU < 5% | Coverage > 20%, IOU > 5% | Coverage > 20%, IOU < 5% |
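The category conditions in the table above can be expressed as a small helper. This is a sketch only: the function name `categorize_sample` is hypothetical, coverage and IOU are assumed to be supplied as fractions in [0, 1], and values lying exactly on a threshold are assigned to the "high" side because the table does not specify that case.

```python
def categorize_sample(coverage, iou, coverage_threshold=0.20, iou_threshold=0.05):
    """Return 'LCHI', 'LCLI', 'HCHI', or 'HCLI' for one sample.

    coverage and iou are fractions in [0, 1]. The thresholds (20% coverage,
    5% IOU) are taken from the category table; treating equality as 'high'
    is an assumption made here.
    """
    coverage_part = "HC" if coverage >= coverage_threshold else "LC"
    iou_part = "HI" if iou >= iou_threshold else "LI"
    return coverage_part + iou_part


if __name__ == "__main__":
    # Illustrative inputs only.
    for coverage, iou in [(0.10, 0.12), (0.10, 0.01), (0.50, 0.20), (0.50, 0.01)]:
        print(coverage, iou, categorize_sample(coverage, iou))
```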

| Evaluation Metrics | GRMSE | GLRMSE | JL | TBL |
|---|---|---|---|---|
| IOU | 0.1358 | 0.1275 | 0.1197 | 0.1389 |
| IPSNR | 46.6864 | 48.5989 | N/A | 46.0219 |

| Evaluation Metrics | GRMSE | GLRMSE | JL | TBL |
|---|---|---|---|---|
| IOU | 0.2372 | 0.2225 | 0.2294 | 0.2408 |
| IPSNR | 56.4517 | 58.6385 | N/A | 56.2793 |

| Evaluation Metrics | GRMSE | GLRMSE | JL | TBL |
|---|---|---|---|---|
| IOU | 0.0320 | 0.0304 | 0.0208 | 0.0334 |
| IPSNR | 32.4128 | 33.5530 | N/A | 31.5966 |

| Evaluation Metrics | GRMSE | GLRMSE | JL | TBL |
|---|---|---|---|---|
| IOU | 0.1820 | 0.1729 | 0.1400 | 0.1839 |
| IPSNR | 56.5220 | 57.1891 | N/A | 56.0820 |

| Evaluation Metrics | GRMSE | GLRMSE | JL | TBL |
|---|---|---|---|---|
| IOU | 0.0539 | 0.0499 | 0.0375 | 0.0575 |
| IPSNR | 37.8572 | 40.8997 | N/A | 37.0405 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Badhan, M.; Shamsaei, K.; Ebrahimian, H.; Bebis, G.; Lareau, N.P.; Rowell, E. Deep Learning Approach to Improve Spatial Resolution of GOES-17 Wildfire Boundaries Using VIIRS Satellite Data. Remote Sens. 2024, 16, 715. https://doi.org/10.3390/rs16040715
