Pansharpening Low-Altitude Multispectral Images of Potato Plants Using a Generative Adversarial Network

Abstract

1. Introduction

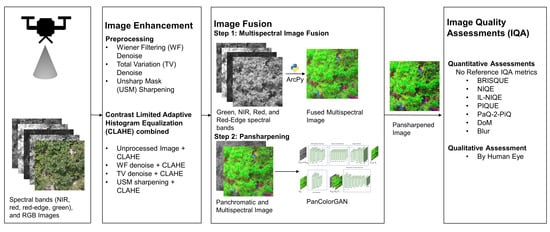

- How well does PanColorGAN pansharpen the input panchromatic and multispectral images in terms of preserving spatial attributes, as measured by no-reference image quality assessment (NR-IQA) metrics?

- What effect does image preprocessing with denoising, deblurring, and the CLAHE technique have on the spatial characteristics and color preservation of the fused images?

2. Theoretical Background

2.1. Challenges in Agricultural Image Datasets

2.2. Filter Algorithms

2.3. Deep Neural Networks and Generative Adversarial Networks

3. Materials and Methods

3.1. Dataset

3.2. Image Preprocessing

3.2.1. Wiener Filtering (WF) Denoise

3.2.2. Total Variation (TV) Denoise

3.2.3. Unsharp Mask (USM) Sharpening

3.2.4. Contrast Limited Adaptive Histogram Equalization (CLAHE)

3.3. Image Enhancement

3.3.1. Multispectral Image Fusion

3.3.2. Pansharpening

3.3.3. Architecture Details

3.3.4. Transfer Learning

3.4. Image Quality Assessments (IQA) Metrics

4. Results

4.1. Quantitative Assessment

4.2. Qualitative Assessment

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AHE | Adaptive Histogram Equalization |
| BRISQUE | Blind/Referenceless Image Spatial Quality Evaluator |
| CLAHE | Contrast Limited Adaptive Histogram Equalization |
| CNN | Convolutional Neural Network |
| CS | Component Substitution |
| DCNN | Deep Convolutional Neural Network |
| DL | Deep Learning |
| FR-IQA | Full-Reference Image Quality Assessment |
| GAN | Generative Adversarial Network |
| GIS | Geographic Information System |
| IL-NIQE | Integrated Local NIQE |
| ML | Machine Learning |
| MRA | Multi-Resolution Analysis |
| MS | Multispectral |
| NIQE | Natural Image Quality Evaluator |
| NIR | Near Infrared |
| NR-IQA | No-Reference Image Quality Assessment |
| NSS | Natural Scene Statistics |
| PAN | Panchromatic |
| PIQUE | Perception-Based Image Quality Evaluator |
| PSNR | Peak Signal-to-Noise Ratio |
| SISR | Single Image Super-Resolution |
| SSIM | Structural Similarity Index |
| SSM | Site-Specific Management |
| TV | Total Variation |
| UAV | Unmanned Aerial Vehicle |
| USM | Unsharp Mask |
| WF | Wiener Filtering |

References

- Lipiec, J.; Doussan, C.; Nosalewicz, A.; Kondracka, K. Effect of drought and heat stresses on plant growth and yield: A review. Int. Agrophys. 2013, 27, 463–477. [Google Scholar] [CrossRef]

- Oshunsanya, S.O.; Nwosu, N.J.; Li, Y. Abiotic stress in agricultural crops under climatic conditions. In Sustainable Agriculture, Forest and Environmental Management; Springer: Singapore, 2019; pp. 71–100. [Google Scholar] [CrossRef]

- Savci, S. Investigation of Effect of Chemical Fertilizers on Environment. APCBEE Procedia 2012, 1, 287–292. [Google Scholar] [CrossRef]

- Bongiovanni, R.; Lowenberg-Deboer, J. Precision agriculture and sustainability. Precis. Agric. 2004, 5, 359–387. [Google Scholar] [CrossRef]

- Maimaitiyiming, M.; Ghulam, A.; Bozzolo, A.; Wilkins, J.L.; Kwasniewski, M.T. Early Detection of Plant Physiological Responses to Different Levels of Water Stress Using Reflectance Spectroscopy. Remote Sens. 2017, 9, 745. [Google Scholar] [CrossRef]

- Walter, A.; Finger, R.; Huber, R.; Buchmann, N. Smart farming is key to developing sustainable agriculture. Proc. Natl. Acad. Sci. USA 2017, 114, 6148–6150. [Google Scholar] [CrossRef]

- Steven, M.D.; Clark, J.A. Applications of Remote Sensing in Agriculture; Elsevier: Amsterdam, The Netherlands, 2013. [Google Scholar]

- Chiu, M.T.; Xu, X.; Wei, Y.; Huang, Z.; Schwing, A.G.; Brunner, R.; Khachatrian, H.; Karapetyan, H.; Dozier, I.; Rose, G.; et al. Agriculture-Vision: A Large Aerial Image Database for Agricultural Pattern Analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 2828–2838. [Google Scholar]

- Sieberth, T.; Wackrow, R.; Chandler, J.H. Automatic detection of blurred images in UAV image sets. ISPRS J. Photogramm. Remote Sens. 2016, 122, 1–16. [Google Scholar] [CrossRef]

- Wang, R.; Xiao, X.; Guo, B.; Qin, Q.; Chen, R. An Effective Image Denoising Method for UAV Images via Improved Generative Adversarial Networks. Sensors 2018, 18, 1985. [Google Scholar] [CrossRef] [PubMed]

- Jeong, E.; Seo, J.; Wacker, J.P. UAV-aided bridge inspection protocol through machine learning with improved visibility images. Expert Syst. Appl. 2022, 197, 116791. [Google Scholar] [CrossRef]

- Kwak, G.H.; Park, N.W. Impact of Texture Information on Crop Classification with Machine Learning and UAV Images. Appl. Sci. 2019, 9, 643. [Google Scholar] [CrossRef]

- Maini, R.; Aggarwal, H. Study and comparison of various image edge detection techniques. Int. J. Image Process. (IJIP) 2009, 3, 1–11. [Google Scholar]

- Motayyeb, S.; Fakhri, S.A.; Varshosaz, M.; Pirasteh, S. Enhancing Contrast of Images to Improve Geometric Accuracy of a UAV Photogrammetry Project. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 43-B1, 389–398. [Google Scholar] [CrossRef]

- Hung, S.C.; Wu, H.C.; Tseng, M.H. Integrating image quality enhancement methods and deep learning techniques for remote sensing scene classification. Appl. Sci. 2021, 11, 11659. [Google Scholar] [CrossRef]

- Milanfar, P. A tour of modern image filtering: New insights and methods, both practical and theoretical. IEEE Signal Process. Mag. 2012, 30, 106–128. [Google Scholar] [CrossRef]

- Al-amri, S.S.; Kalyankar, N.V.; Khamitkar, S.D. Contrast Stretching Enhancement in Remote Sensing Image. BIOINFO Sens. Netw. 2011, 1, 6–9. [Google Scholar]

- Thomas, C.; Ranchin, T.; Wald, L.; Chanussot, J. Synthesis of multispectral images to high spatial resolution: A critical review of fusion methods based on remote sensing physics. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1301–1312. [Google Scholar] [CrossRef]

- Fonseca, L.; Namikawa, L.; Castejon, E.; Carvalho, L.; Pinho, C.; Pagamisse, A. Image Fusion for Remote Sensing Applications. In Image Fusion and Its Applications; IntechOpen: London, UK, 2011. [Google Scholar] [CrossRef]

- Kremezi, M.; Kristollari, V.; Karathanassi, V.; Topouzelis, K.; Kolokoussis, P.; Taggio, N.; Aiello, A.; Ceriola, G.; Barbone, E.; Corradi, P. Pansharpening PRISMA Data for Marine Plastic Litter Detection Using Plastic Indexes. IEEE Access 2021, 9, 61955–61971. [Google Scholar] [CrossRef]

- Karakus, P.; Karabork, H. Effect of Pansharpened Image on Some of Pixel Based and Object Based Classification Accuracy. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B7, 235–239. [Google Scholar] [CrossRef]

- Chen, F.; Lou, S.; Song, Y. Improving object detection of remotely sensed multispectral imagery via pan-sharpening. In Proceedings of the ICCPR 2020: 2020 9th International Conference on Computing and Pattern Recognition, Xiamen, China, 30 October–1 November 2020; ACM International Conference Proceeding Series. ACM: New York, NY, USA, 2020; pp. 136–140. [Google Scholar] [CrossRef]

- Xu, Q.; Zhang, Y.; Li, B. Recent advances in pansharpening and key problems in applications. Int. J. Image Data Fusion 2014, 5, 175–195. [Google Scholar] [CrossRef]

- Ozcelik, F.; Alganci, U.; Sertel, E.; Unal, G. Rethinking CNN-Based Pansharpening: Guided Colorization of Panchromatic Images via GANs. IEEE Trans. Geosci. Remote Sens. 2021, 59, 3486–3501. [Google Scholar] [CrossRef]

- ArcGIS API for Python—ArcGIS Pro|Documentation; Esri: Redlands, CA, USA, 2023.

- Haridasan, A.; Thomas, J.; Raj, E.D. Deep learning system for paddy plant disease detection and classification. Environ. Monit. Assess. 2023, 195, 120. [Google Scholar] [CrossRef]

- Bhujade, V.G.; Sambhe, V. Role of digital, hyper spectral, and SAR images in detection of plant disease with deep learning network. Multimed. Tools Appl. 2022, 81, 33645–33670. [Google Scholar] [CrossRef]

- Wang, A.; Zhang, W.; Wei, X. A review on weed detection using ground-based machine vision and image processing techniques. Comput. Electron. Agric. 2019, 158, 226–240. [Google Scholar] [CrossRef]

- Arsenovic, M.; Karanovic, M.; Sladojevic, S.; Anderla, A.; Stefanovic, D. Solving Current Limitations of Deep Learning Based Approaches for Plant Disease Detection. Symmetry 2019, 11, 939. [Google Scholar] [CrossRef]

- Barbedo, J.G.A. A review on the main challenges in automatic plant disease identification based on visible range images. Biosyst. Eng. 2016, 144, 52–60. [Google Scholar] [CrossRef]

- Di Cicco, M.; Potena, C.; Grisetti, G.; Pretto, A. Automatic model based dataset generation for fast and accurate crop and weeds detection. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 5188–5195. [Google Scholar] [CrossRef]

- Labhsetwar, S.R.; Haridas, S.; Panmand, R.; Deshpande, R.; Kolte, P.A.; Pati, S. Performance Analysis of Optimizers for Plant Disease Classification with Convolutional Neural Networks. In Proceedings of the 2021 4th Biennial International Conference on Nascent Technologies in Engineering (ICNTE), Navi Mumbai, India, 15–16 January 2021; pp. 1–6. [Google Scholar]

- Barbedo, J.G.A.; Koenigkan, L.V.; Santos, T.T.; Santos, P.M. A Study on the Detection of Cattle in UAV Images Using Deep Learning. Sensors 2019, 19, 5436. [Google Scholar] [CrossRef]

- Wen, D.; Ren, A.; Ji, T.; Flores-Parra, I.M.; Yang, X.; Li, M. Segmentation of thermal infrared images of cucumber leaves using K-means clustering for estimating leaf wetness duration. Int. J. Agric. Biol. Eng. 2020, 13, 161–167. [Google Scholar] [CrossRef]

- Ouhami, M.; Hafiane, A.; Es-Saady, Y.; Hajji, M.E.; Canals, R. Computer Vision, IoT and Data Fusion for Crop Disease Detection Using Machine Learning: A Survey and Ongoing Research. Remote Sens. 2021, 13, 2486. [Google Scholar] [CrossRef]

- Xu, J.X.; Ma, J.; Tang, Y.N.; Wu, W.X.; Shao, J.H.; Wu, W.B.; Wei, S.Y.; Liu, Y.F.; Wang, Y.C.; Guo, H.Q. Estimation of Sugarcane Yield Using a Machine Learning Approach Based on UAV-LiDAR Data. Remote Sens. 2020, 12, 2823. [Google Scholar] [CrossRef]

- Laliberte, A.S.; Goforth, M.A.; Steele, C.M.; Rango, A. Multispectral Remote Sensing from Unmanned Aircraft: Image Processing Workflows and Applications for Rangeland Environments. Remote Sens. 2011, 3, 2529–2551. [Google Scholar] [CrossRef]

- Choi, M.; Kim, R.Y.; Nam, M.R.; Kim, H.O. Fusion of multispectral and panchromatic satellite images using the curvelet transform. IEEE Geosci. Remote Sens. Lett. 2005, 2, 136–140. [Google Scholar] [CrossRef]

- Lu, Y.; Perez, D.; Dao, M.; Kwan, C.; Li, J. Deep Learning with Synthetic Hyperspectral Images for Improved Soil Detection in Multispectral Imagery. In Proceedings of the 2018 9th IEEE Annual Ubiquitous Computing, Electronics and Mobile Communication Conference (UEMCON 2018), New York, NY, USA, 8–10 November 2018; pp. 666–672. [Google Scholar] [CrossRef]

- Sekrecka, A.; Kedzierski, M.; Wierzbicki, D. Pre-Processing of Panchromatic Images to Improve Object Detection in Pansharpened Images. Sensors 2019, 19, 5146. [Google Scholar] [CrossRef]

- Lagendijk, R.L.; Biemond, J. Basic methods for image restoration and identification. In The Essential Guide to Image Processing; Elsevier: Amsterdam, The Netherlands, 2009; pp. 323–348. [Google Scholar]

- Diwakar, M.; Kumar, M. A review on CT image noise and its denoising. Biomed. Signal Process. Control 2018, 42, 73–88. [Google Scholar] [CrossRef]

- Saxena, C.; Kourav, D. Noises and image denoising techniques: A brief survey. Int. J. Emerg. Technol. Adv. Eng. 2014, 4, 878–885. [Google Scholar]

- Verma, R.; Ali, J. A comparative study of various types of image noise and efficient noise removal techniques. Int. J. Adv. Res. Comput. Sci. Softw. Eng. 2013, 3, 617–622. [Google Scholar]

- Vijaykumar, V.; Vanathi, P.; Kanagasabapathy, P. Fast and efficient algorithm to remove gaussian noise in digital images. IAENG Int. J. Comput. Sci. 2010, 37, 300–302. [Google Scholar]

- Kumain, S.C.; Singh, M.; Singh, N.; Kumar, K. An efficient Gaussian noise reduction technique for noisy images using optimized filter approach. In Proceedings of the 2018 First International Conference on Secure Cyber Computing and Communication (ICSCCC), Jalandhar, India, 15–17 December 2018; IEEE: New York, NY, USA, 2018; pp. 243–248. [Google Scholar]

- Ren, R.; Guo, Z.; Jia, Z.; Yang, J.; Kasabov, N.K.; Li, C. Speckle noise removal in image-based detection of refractive index changes in porous silicon microarrays. Sci. Rep. 2019, 9, 15001. [Google Scholar] [CrossRef]

- Aboshosha, A.; Hassan, M.; Ashour, M.; Mashade, M.E. Image denoising based on spatial filters, an analytical study. In Proceedings of the 2009 International Conference on Computer Engineering and Systems (ICCES’09), Cairo, Egypt, 14–16 December 2009; pp. 245–250. [Google Scholar] [CrossRef]

- Bera, T.; Das, A.; Sil, J.; Das, A.K. A survey on rice plant disease identification using image processing and data mining techniques. Adv. Intell. Syst. Comput. 2019, 814, 365–376. [Google Scholar]

- Paris, S.; Kornprobst, P.; Tumblin, J.; Durand, F. Bilateral filtering: Theory and applications. Found. Trends Comput. Graph. Vis. 2009, 4, 1–73. [Google Scholar] [CrossRef]

- Kumar, S.; Kumar, P.; Gupta, M.; Nagawat, A.K. Performance Comparison of Median and Wiener Filter in Image De-noising. Int. J. Comput. Appl. 2010, 12, 27–31. [Google Scholar] [CrossRef]

- Archana, K.S.; Sahayadhas, A. Comparison of various filters for noise removal in paddy leaf images. Int. J. Eng. Technol. 2018, 7, 372–374. [Google Scholar] [CrossRef]

- Gulat, N.; Kaushik, A. Remote sensing image restoration using various techniques: A review. Int. J. Sci. Eng. Res. 2012, 3, 1–6. [Google Scholar]

- Wang, R.; Tao, D. Recent progress in image deblurring. arXiv 2014, arXiv:1409.6838. [Google Scholar]

- Rahimi-Ajdadi, F.; Mollazade, K. Image deblurring to improve the grain monitoring in a rice combine harvester. Smart Agric. Technol. 2023, 4, 100219. [Google Scholar] [CrossRef]

- Al-qinani, I.H. Deblurring image and removing noise from medical images for cancerous diseases using a Wiener filter. Int. Res. J. Eng. Technol. 2017, 4, 2354–2365. [Google Scholar]

- Al-Ameen, Z.; Sulong, G.; Johar, M.G.M.; Verma, N.; Kumar, R.; Dachyar, M.; Alkhawlani, M.; Mohsen, A.; Singh, H.; Singh, S.; et al. A comprehensive study on fast image deblurring techniques. Int. J. Adv. Sci. Technol. 2012, 44, 1–10. [Google Scholar]

- Petrellis, N. A Review of Image Processing Techniques Common in Human and Plant Disease Diagnosis. Symmetry 2018, 10, 270. [Google Scholar] [CrossRef]

- Holmes, T.J.; Bhattacharyya, S.; Cooper, J.A.; Hanzel, D.; Krishnamurthi, V.; Lin, W.c.; Roysam, B.; Szarowski, D.H.; Turner, J.N. Light microscopic images reconstructed by maximum likelihood deconvolution. In Handbook of Biological Confocal Microscopy; Springer: Boston, MA, USA, 1995; pp. 389–402. [Google Scholar]

- Yi, C.; Shimamura, T. An Improved Maximum-Likelihood Estimation Algorithm for Blind Image Deconvolution Based on Noise Variance Estimation. J. Signal Process. 2012, 16, 629–635. [Google Scholar] [CrossRef]

- Liu, L.; Jia, Z.; Yang, J.; Kasabov, N. A medical image enhancement method using adaptive thresholding in NSCT domain combined unsharp masking. Int. J. Imaging Syst. Technol. 2015, 25, 199–205. [Google Scholar] [CrossRef]

- Chourasiya, A.; Khare, N. A Comprehensive Review of Image Enhancement Techniques. Int. J. Innov. Res. Growth 2019, 8, 60–71. [Google Scholar] [CrossRef]

- Bashir, S.; Sharma, N. Remote area plant disease detection using image processing. IOSR J. Electron. Commun. Eng. 2012, 2, 31–34. [Google Scholar] [CrossRef]

- Ansari, A.S.; Jawarneh, M.; Ritonga, M.; Jamwal, P.; Mohammadi, M.S.; Veluri, R.K.; Kumar, V.; Shah, M.A. Improved Support Vector Machine and Image Processing Enabled Methodology for Detection and Classification of Grape Leaf Disease. J. Food Qual. 2022, 2022, 9502475. [Google Scholar] [CrossRef]

- Rubini, C.; Pavithra, N. Contrast Enhancement of MRI Images using AHE and CLAHE Techniques. Int. J. Innov. Technol. Explor. Eng. 2019, 9, 2442–2445. [Google Scholar] [CrossRef]

- Lilhore, U.K.; Imoize, A.L.; Lee, C.C.; Simaiya, S.; Pani, S.K.; Goyal, N.; Kumar, A.; Li, C.T. Enhanced Convolutional Neural Network Model for Cassava Leaf Disease Identification and Classification. Mathematics 2022, 10, 580. [Google Scholar] [CrossRef]

- Dong, W.; Wang, P.; Yin, W.; Shi, G.; Wu, F.; Lu, X. Denoising Prior Driven Deep Neural Network for Image Restoration. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 2305–2318. [Google Scholar] [CrossRef] [PubMed]

- Tian, C.; Fei, L.; Zheng, W.; Xu, Y.; Zuo, W.; Lin, C.W. Deep learning on image denoising: An overview. Neural Netw. 2020, 131, 251–275. [Google Scholar] [CrossRef] [PubMed]

- Quan, Y.; Chen, M.; Pang, T.; Ji, H. Self2self with dropout: Learning self-supervised denoising from single image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020. [Google Scholar]

- Huang, T.; Li, S.; Jia, X.; Lu, H.; Liu, J. Neighbor2neighbor: Self-supervised denoising from single noisy images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021. [Google Scholar]

- Xu, J.; Adalsteinsson, E. Deformed2Self: Self-supervised Denoising for Dynamic Medical Imaging. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2021; Springer International Publishing: Cham, Switzerland, 2021; pp. 25–35. [Google Scholar]

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. arXiv 2014, arXiv:1406.2661. [Google Scholar] [CrossRef]

- Iglesias, G.; Talavera, E.; Díaz-Álvarez, A. A survey on GANs for computer vision: Recent research, analysis and taxonomy. Comput. Sci. Rev. 2023, 48, 100553. [Google Scholar] [CrossRef]

- Vo, D.M.; Nguyen, D.M.; Le, T.P.; Lee, S.W. HI-GAN: A hierarchical generative adversarial network for blind denoising of real photographs. Inf. Sci. 2021, 570, 225–240. [Google Scholar] [CrossRef]

- Zhang, K.; Ren, W.; Luo, W.; Lai, W.S.; Stenger, B.; Yang, M.H.; Li, H. Deep Image Deblurring: A Survey. Int. J. Comput. Vis. 2022, 130, 2103–2130. [Google Scholar] [CrossRef]

- Nimisha, T.M.; Sunil, K.; Rajagopalan, A.N. Unsupervised class-specific deblurring. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018. [Google Scholar]

- Liu, P.; Janai, J.; Pollefeys, M.; Sattler, T.; Geiger, A. Self-Supervised Linear Motion Deblurring. IEEE Robot. Autom. Lett. 2020, 5, 2475–2482. [Google Scholar] [CrossRef]

- Li, B.; Gou, Y.; Gu, S.; Liu, J.Z.; Zhou, J.T.; Peng, X. You Only Look Yourself: Unsupervised and Untrained Single Image Dehazing Neural Network. Int. J. Comput. Vis. 2021, 129, 1754–1767. [Google Scholar] [CrossRef]

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image Super-Resolution Using Deep Convolutional Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307. [Google Scholar] [CrossRef] [PubMed]

- Wang, Z.; Chen, J.; Hoi, S.C.H. Deep Learning for Image Super-Resolution: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 3365–3387. [Google Scholar] [CrossRef] [PubMed]

- Ehlers, M. Multi-image fusion in remote sensing: Spatial enhancement vs. spectral characteristics preservation. In Advances in Visual Computing—ISVC 2008; Lecture Notes in Computer Science—LNCS (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2008; Volume 5359, pp. 75–84. [Google Scholar] [CrossRef]

- Meng, X.; Shen, H.; Li, H.; Zhang, L.; Fu, R. Review of the pansharpening methods for remote sensing images based on the idea of meta-analysis: Practical discussion and challenges. Inf. Fusion 2019, 46, 102–113. [Google Scholar] [CrossRef]

- Javan, F.D.; Samadzadegan, F.; Mehravar, S.; Toosi, A.; Khatami, R.; Stein, A. A review of image fusion techniques for pan-sharpening of high-resolution satellite imagery. ISPRS J. Photogramm. Remote Sens. 2021, 171, 101–117. [Google Scholar] [CrossRef]

- Saxena, N.; Saxena, G.; Khare, N.; Rahman, M.H. Pansharpening scheme using spatial detail injection–based convolutional neural networks. IET Image Process. 2022, 16, 2297–2307. [Google Scholar] [CrossRef]

- Wang, P.; Alganci, U.; Sertel, E. Comparative analysis on deep learning based pan-sharpening of very high-resolution satellite images. Int. J. Environ. Geoinform. 2021, 8, 150–165. [Google Scholar] [CrossRef]

- Maqsood, M.H.; Mumtaz, R.; Haq, I.U.; Shafi, U.; Zaidi, S.M.H.; Hafeez, M. Super resolution generative adversarial network (Srgans) for wheat stripe rust classification. Sensors 2021, 21, 7903. [Google Scholar] [CrossRef]

- Salmi, A.; Benierbah, S.; Ghazi, M. Low complexity image enhancement GAN-based algorithm for improving low-resolution image crop disease recognition and diagnosis. Multimed. Tools Appl. 2022, 81, 8519–8538. [Google Scholar] [CrossRef]

- Yeswanth, P.; Deivalakshmi, S.; George, S.; Ko, S.B. Residual skip network-based super-resolution for leaf disease detection of grape plant. Circuits Syst. Signal Process. 2023, 42, 6871–6899. [Google Scholar] [CrossRef]

- Dai, Q.; Cheng, X.; Qiao, Y.; Zhang, Y. Crop leaf disease image super-resolution and identification with dual attention and topology fusion generative adversarial network. IEEE Access 2020, 8, 55724–55735. [Google Scholar] [CrossRef]

- Shah, M.; Kumar, P. Improved handling of motion blur for grape detection after deblurring. In Proceedings of the 2021 8th International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 26–27 August 2021; IEEE: New York, NY, USA, 2021; pp. 949–954. [Google Scholar]

- Kupyn, O.; Martyniuk, T.; Wu, J.; Wang, Z. Deblurgan-v2: Deblurring (orders-of-magnitude) faster and better. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8878–8887. [Google Scholar]

- Yun, C.; Kim, Y.H.; Lee, S.J.; Im, S.J.; Park, K.R. WRA-Net: Wide Receptive Field Attention Network for Motion Deblurring in Crop and Weed Image. Plant Phenomics 2023, 5, 0031. [Google Scholar] [CrossRef]

- Xiao, Y.; Zhang, J.; Chen, W.; Wang, Y.; You, J.; Wang, Q. SR-DeblurUGAN: An End-to-End Super-Resolution and Deblurring Model with High Performance. Drones 2022, 6, 162. [Google Scholar] [CrossRef]

- Butte, S.; Vakanski, A.; Duellman, K.; Wang, H.; Mirkouei, A. Potato crop stress identification in aerial images using deep learning-based object detection. Agron. J. 2021, 113, 3991–4002. [Google Scholar] [CrossRef]

- Veldhuizen, T.L. Grid Filters for Local Nonlinear Image Restoration. Master’s Thesis, University of Waterloo, Waterloo, ON, Canada, 1998. Available online: http://osl.iu.edu/~tveldhui/papers/MAScThesis/node18.html (accessed on 20 December 2005).

- 2-D Adaptive Noise-Removal Filtering—MATLAB Wiener2—MathWorks Deutschland—De.mathworks.com. Available online: https://de.mathworks.com/help/images/ref/wiener2.html (accessed on 22 November 2023).

- Scipy.signal.wiener—SciPy v1.11.4 Manual—Docs.scipy.org. Available online: https://docs.scipy.org/doc/scipy/reference/generated/scipy.signal.wiener.html (accessed on 22 November 2023).

- Fan, L.; Zhang, F.; Fan, H.; Zhang, C. Brief review of image denoising techniques. Vis. Comput. Ind. Biomed. Art 2019, 2, 7. [Google Scholar] [CrossRef]

- Chambolle, A. An algorithm for total variation minimization and applications. J. Math. Imaging Vis. 2004, 20, 89–97. [CrossRef]

- Duran, J.; Coll, B.; Sbert, C. Chambolle’s projection algorithm for total variation denoising. Image Process. Line 2013, 2013, 311–331. [Google Scholar] [CrossRef]

- Skimage.restoration—Skimage 0.22.0 Documentation—Scikit-image.org. Available online: https://scikit-image.org/docs/stable/api/skimage.restoration.html#skimage.restoration.denoise_tv_chambolle (accessed on 22 November 2023).

- Bhateja, V.; Misra, M.; Urooj, S. Unsharp masking approaches for HVS based enhancement of mammographic masses: A comparative evaluation. Future Gener. Comput. Syst. 2018, 82, 176–189. [Google Scholar] [CrossRef]

- Gonzalez, R.C. Digital Image Processing; Pearson Education India: Noida, India, 2009. [Google Scholar]

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134. [Google Scholar]

- Crete, F.; Dolmiere, T.; Ladret, P.; Nicolas, M. The blur effect: Perception and estimation with a new no-reference perceptual blur metric. In Proceedings of the Human Vision and Electronic Imaging XII—SPIE, San Jose, CA, USA, 29 January–1 February 2007; Volume 6492, pp. 196–206. [Google Scholar]

- Estimate Strength of Blur—Skimage 0.21.0 Documentation. Available online: https://scikit-image.org (accessed on 3 September 2023).

- Kumar, J.; Chen, F.; Doermann, D. Sharpness estimation for document and scene images. In Proceedings of the 21st International Conference on Pattern Recognition (ICPR2012), Tsukuba, Japan, 11–15 November 2012; IEEE: New York, NY, USA, 2012; pp. 3292–3295. [Google Scholar]

- GitHub—Umang-Singhal/Pydom: Sharpness Estimation for Document and Scene Images. Available online: https://github.com (accessed on 3 September 2023).

- Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012, 21, 4695–4708. [Google Scholar] [CrossRef]

- Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “Completely Blind” Image Quality Analyzer. IEEE Signal Process. Lett. 2013, 20, 209–212. [Google Scholar] [CrossRef]

- Zhang, L.; Zhang, L.; Bovik, A.C. A feature-enriched completely blind image quality evaluator. IEEE Trans. Image Process. 2015, 24, 2579–2591. [Google Scholar] [CrossRef]

- Venkatanath, N.; Praneeth, D.; Bh, M.C.; Channappayya, S.S.; Medasani, S.S. Blind image quality evaluation using perception based features. In Proceedings of the 2015 Twenty First National Conference on Communications (NCC), Mumbai, India, 27 February–1 March 2015; IEEE: New York, NY, USA, 2015; pp. 1–6. [Google Scholar]

- Zhuang, Y.; Zhai, H. Multi-focus image fusion method using energy of Laplacian and a deep neural network. Appl. Opt. 2020, 59, 1684–1694. [Google Scholar] [CrossRef]

- Ying, Z.; Niu, H.; Gupta, P.; Mahajan, D.; Ghadiyaram, D.; Bovik, A. From patches to pictures (PaQ-2-PiQ): Mapping the perceptual space of picture quality. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3575–3585. [Google Scholar]

- Pyiqa—Pypi.org. Available online: https://pypi.org/project/pyiqa/ (accessed on 3 September 2023).

- Shapiro, S.S.; Wilk, M.B. An Analysis of Variance Test for Normality (Complete Samples). Biometrika 1965, 52, 591. [Google Scholar] [CrossRef]

- Tukey, J.W. Comparing Individual Means in the Analysis of Variance. Biometrics 1949, 5, 99. [Google Scholar] [CrossRef]

- Zhang, S.; Wu, X.; You, Z.; Zhang, L. Leaf image based cucumber disease recognition using sparse representation classification. Comput. Electron. Agric. 2017, 134, 135–141. [Google Scholar] [CrossRef]

- Anam, C.; Fujibuchi, T.; Toyoda, T.; Sato, N.; Haryanto, F.; Widita, R.; Arif, I.; Dougherty, G. An investigation of a CT noise reduction using a modified of wiener filtering-edge detection. J. Phys. Conf. Ser. 2019, 1217, 12022. [Google Scholar] [CrossRef]

- Buades, A.; Coll, B.; Morel, J.M. A Review of Image Denoising Algorithms, with a New One. Multiscale Model. Simul. 2005, 4, 490–530. [Google Scholar] [CrossRef]

- Bhosale, N.P.; Manza, R.; Kale, K. Analysis of Effect of Gaussian, Salt and Pepper Noise Removal from Noisy Remote Sensing Images. Int. J. Sci. Eng. Res. 2014, 4, 1511–1514. [Google Scholar]

- Kumar, N.; Nachamai, M. Noise removal and filtering techniques used in medical images. Orient. J. Comp. Sci. Technol. 2017, 10, 103–113. [Google Scholar] [CrossRef]

- Liu, L.; Jia, Z.; Yang, J.; Kasabov, N. A remote sensing image enhancement method using mean filter and unsharp masking in non-subsampled contourlet transform domain. Trans. Inst. Meas. Control 2017, 39, 183–193. [Google Scholar] [CrossRef]

- Malik, R.; Dhir, R.; Mittal, S.K. Remote sensing and landsat image enhancement using multiobjective PSO based local detail enhancement. J. Ambient Intell. Humaniz. Comput. 2019, 10, 3563–3571. [Google Scholar] [CrossRef]

- Hu, L.; Qin, M.; Zhang, F.; Zhenhong, D.; Liu, R. RSCNN: A CNN-Based Method to Enhance Low-Light Remote-Sensing Images. Remote Sens. 2020, 13, 62. [Google Scholar] [CrossRef]

- Zheng, Y.; Li, J.; Li, Y.; Cao, K.; Wang, K. Deep Residual Learning for Boosting the Accuracy of Hyperspectral Pansharpening. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1435–1439. [Google Scholar] [CrossRef]

- Khan, S.S.; Ran, Q.; Khan, M. Image pan-sharpening using enhancement based approaches in remote sensing. Multimed. Tools Appl. 2020, 79, 32791–32805. [Google Scholar] [CrossRef]

- Masi, G.; Cozzolino, D.; Verdoliva, L.; Scarpa, G. Pansharpening by Convolutional Neural Networks. Remote Sens. 2016, 8, 594. [Google Scholar] [CrossRef]

- Teke, M.; San, E.; Koc, E. Unsharp masking based pansharpening of high resolution satellite imagery. In Proceedings of the 26th IEEE Signal Processing and Communications Applications Conference (SIU 2018), Izmir, Turkey, 2–5 May 2018; pp. 1–4. [Google Scholar] [CrossRef]

- Li, S.; Kang, X.; Fang, L.; Hu, J.; Yin, H. Pixel-level image fusion: A survey of the state of the art. Inf. Fusion 2017, 33, 100–112. [Google Scholar] [CrossRef]

- Guo, S.; Yan, Z.; Zhang, K.; Zuo, W.; Zhang, L. Toward convolutional blind denoising of real photographs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1712–1722. [Google Scholar]

- Zheng, D.; Tan, S.H.; Zhang, X.; Shi, Z.; Ma, K.; Bao, C. An unsupervised deep learning approach for real-world image denoising. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020. [Google Scholar]

| Technique | Operators |
|---|---|
| Preprocessing | WF denoise |
| | TV denoise |
| | USM sharpening |
| CLAHE | Unprocessed image + CLAHE |
| | WF denoise + CLAHE |
| | TV denoise + CLAHE |
| | USM sharpening + CLAHE |
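The operators listed in the table above can be sketched with the SciPy and scikit-image functions that the reference list points to (`scipy.signal.wiener`, `skimage.restoration.denoise_tv_chambolle`). This is a minimal illustration, not the authors' pipeline: the helper names `preprocess` and `preprocess_with_clahe` are hypothetical, and every parameter value (window size, TV weight, USM radius and amount, CLAHE clip limit) is an assumption chosen only to make the example run. The use of `skimage.filters.unsharp_mask` and `skimage.exposure.equalize_adapthist` for USM and CLAHE is likewise an assumption.

```python
import numpy as np
from scipy.signal import wiener
from skimage import exposure, filters, restoration


def preprocess(band, method="none"):
    """Apply one preprocessing operator to a single band scaled to [0, 1].

    All parameter values below are illustrative assumptions,
    not settings taken from the study.
    """
    band = np.asarray(band, dtype=np.float64)
    if method == "wf":      # Wiener filtering (WF) denoise
        out = wiener(band, mysize=5)
    elif method == "tv":    # total variation (TV) denoise, Chambolle's algorithm
        out = restoration.denoise_tv_chambolle(band, weight=0.1)
    elif method == "usm":   # unsharp mask (USM) sharpening
        out = filters.unsharp_mask(band, radius=2.0, amount=1.0)
    elif method == "none":  # unprocessed image
        out = band
    else:
        raise ValueError(f"unknown method: {method!r}")
    # Keep the result in [0, 1] so CLAHE can be applied afterwards.
    return np.clip(out, 0.0, 1.0)


def preprocess_with_clahe(band, method="none"):
    """Apply an operator, then CLAHE (the '+ CLAHE' rows of the table)."""
    return exposure.equalize_adapthist(preprocess(band, method), clip_limit=0.01)


# Synthetic stand-in for one multispectral band (random values, not real data).
rng = np.random.default_rng(0)
demo_band = rng.random((64, 64))
results = {m: preprocess_with_clahe(demo_band, m) for m in ("none", "wf", "tv", "usm")}
```

Each band is processed independently here; how the study handled multi-band inputs is not stated in this section, so that choice is also an assumption.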

| Metrics | Characteristics |
|---|---|
| BRISQUE [109] | Holistic, uses luminance coefficients and human opinion scores. |
| NIQE [110] | NSS algorithm, opinion-unaware, no human-modified images. |
| IL-NIQE [111] | Robust, uses five NSS features for opinion-unaware assessment. |
| PIQUE [112] | Blind evaluator, assesses distortion without training images. |
| PaQ-2-PiQ [114] | Deep learning-based, trained on subjective scores, effective overall quality quantification. |
| DoM [107] | Measures sharpness through grayscale luminance values of edges. |
| Blur [105] | Evaluates blurriness using a no-reference metric, considers human perception. |

| Group | Method | BRISQUE (± 1 SD) | NIQE (± 1 SD) | IL-NIQE (± 1 SD) | PIQUE (± 1 SD) | PaQ-2-PiQ (± 1 SD) | DoM (± 1 SD) | Blur (± 1 SD) |
|---|---|---|---|---|---|---|---|---|
| Unprocessed RGB and Multispectral | RGB | 43.40 ± 1.15 | 5.61 ± 0.57 | 24.06 ± 1.85 | 36.28 ± 4.91 | 67.74 ± 2.66 | 0.89 ± 0.02 | 0.37 ± 0.02 |
| | UN MS | 36.14 ± 2.55 | 4.69 ± 0.69 | 67.45 ± 7.58 | 11.34 ± 2.28 | 69.46 ± 2.20 | 0.97 ± 0.03 | 0.32 ± 0.02 |
| Preprocessed Multispectral | MS WF | 40.87 ± 2.20 | 5.51 ± 0.38 | 82.19 ± 7.31 | 32.20 ± 6.11 | 63.52 ± 1.18 | 0.84 ± 0.03 | 0.37 ± 0.02 |
| | MS TV | 40.70 ± 1.46 | 4.83 ± 0.36 | 61.24 ± 6.90 | 21.24 ± 6.00 | 69.50 ± 1.67 | 0.94 ± 0.03 | 0.34 ± 0.02 |
| | MS USM | 26.85 ± 3.53 | 4.13 ± 0.37 | 56.43 e ± 6.81 | 6.51 e ± 1.10 | 72.61 ± 1.68 | 1.08 e ± 0.02 | 0.25 ± 0.01 |
| | MS CL | 32.64 e ± 3.17 | 5.11 ± 1.40 | 86.21 ± 12.30 | 9.71 ± 2.44 | 73.25 ± 1.26 | 0.98 ± 0.03 | 0.30 e ± 0.02 |
| | MS CL+WF | 39.86 ± 1.73 | 5.78 ± 1.78 | 95.31 ± 9.61 | 25.96 ± 5.35 | 67.57 ± 1.18 | 0.85 ± 0.02 | 0.33 ± 0.02 |
| | MS CL+USM | 32.20 e ± 5.13 | 5.23 ± 1.04 | 88.71 ± 12.31 | 8.49 ± 2.32 | 76.25 ± 0.97 | 1.05 ± 0.03 | 0.26 ± 0.01 |
| | MS CL+TV | 37.31 ± 2.68 | 5.56 ± 0.35 | 75.31 ± 9.79 | 16.57 ± 4.53 | 72.28 ± 1.27 | 0.96 ± 0.03 | 0.33 ± 0.02 |
| Unprocessed and Preprocessed Pansharpened | UN PS | 36.13 ± 1.68 | 5.06 ± 0.58 | 58.64 ± 8.13 | 25.86 ± 2.86 | 72.99 ± 0.68 | 0.95 ± 0.02 | 0.30 e ± 0.01 |
| | PS WF | 38.6 ± 1.92 | 5.32 ± 0.57 | 60.29 ± 7.00 | 36.45 ± 2.39 | 69.92 ± 1.66 | 0.86 ± 0.03 | 0.33 ± 0.02 |
| | PS TV | 37.97 ± 1.80 | 5.18 ± 0.59 | 55.71 e ± 7.362 | 40.57 ± 3.52 | 72.00 ± 0.87 | 0.91 ± 0.03 | 0.32 ± 0.02 |
| | PS USM | 27.58 ± 1.97 | 4.57 e ± 0.57 | 49.67 ± 8.09 | 10.94 ± 1.05 | 77.56 ± 0.51 | 1.03 ± 0.01 | 0.26 ± 0.01 |
| | PS CL | 42.88 ± 1.96 | 6.19 ± 0.69 | 70.42 ± 9.89 | 29.68 ± 4.17 | 73.50 e ± 0.71 | 0.90 ± 0.02 | 0.30 e ± 0.01 |
| | PS CL+WF | 42.17 ± 2.14 | 6.07 ± 0.63 | 74.13 ± 9.45 | 40.58 ± 3.57 | 71.16 ± 1.02 | 0.96 ± 0.01 | 0.32 e ± 0.01 |
| | PS CL+TV | 43.02 ± 1.52 | 6.09 ± 0.67 | 66.31 ± 10.08 | 36.94 ± 3.97 | 73.10 ± 0.77 | 1.03 ± 0.01 | 0.32 ± 0.01 |
| | PS CL+USM | 34.16 ± 2.98 | 4.57 ± 0.57 | 72.83 ± 10.80 | 16.60 ± 2.24 | 75.16 ± 0.53 | 0.98 ± 0.01 | 0.26 ± 0.01 |
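Each cell in the table above has the form mean ± 1 SD over a set of per-image scores. The short sketch below shows how such a cell could be produced with NumPy. The helper name `summarize` is hypothetical, the three scores are made-up numbers rather than values from the study, and the use of the sample standard deviation (`ddof=1`) is an assumption, since this section does not say which estimator was used.

```python
import numpy as np


def summarize(scores, decimals=2):
    """Format per-image metric scores as 'mean ± SD'.

    ddof=1 (sample standard deviation) is an assumption; the source
    does not state which estimator was used.
    """
    scores = np.asarray(scores, dtype=np.float64)
    mean = scores.mean()
    sd = scores.std(ddof=1)
    return f"{mean:.{decimals}f} ± {sd:.{decimals}f}"


# Made-up per-image scores, for illustration only.
example_scores = [0.30, 0.32, 0.34]
print(summarize(example_scores))  # prints "0.32 ± 0.02"
```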

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Modak, S.; Heil, J.; Stein, A. Pansharpening Low-Altitude Multispectral Images of Potato Plants Using a Generative Adversarial Network. Remote Sens. 2024, 16, 874. https://doi.org/10.3390/rs16050874