Spatio-Temporal Residual Attention Network for Satellite-Based Infrared Small Target Detection

Abstract

Highlights

- A spatio-temporal detection framework is proposed for infrared small target detection in satellite video, which combines inter-frame residuals with spatial and temporal feature learning.

- The proposed method outperforms SpecDETR, RISTDnet, MSAFFNet, and OSCAR on all six reported metrics (precision, recall, F1-score, AP, FAR, and LE) on both the IRAir and IRSatVideo datasets, particularly for tiny and dim targets in complex backgrounds.

- The framework provides an effective solution for detecting small moving aerial targets from satellite infrared video, supporting reliable long-range monitoring.

- This study demonstrates the potential of integrating temporal consistency and multi-scale spatial features to advance real-world remote sensing applications.

1. Introduction

- We introduce an inter-frame residual module to explicitly highlight motion-related features and enhance the sensitivity of the network to subtle target motion in remote infrared satellite images.

- We design a dual-branch structure to encode spatial semantics and temporal evolution separately, which achieves more effective spatio-temporal feature fusion and reduces information redundancy.

- A multi-scale fusion strategy combined with a custom detection head is proposed to improve the detection performance of small low-contrast targets in complex backgrounds.
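As a rough illustration of the inter-frame residual idea in the first contribution, the sketch below takes the absolute per-pixel difference between two consecutive frames and adds a weighted copy of it back to the current frame. It is a toy under stated assumptions, not the module used in this work: the names `inter_frame_residual` and `enhance`, the weight `alpha`, and the choice of a plain absolute difference are all hypothetical and chosen only for illustration.

```python
from typing import List

# One grayscale infrared frame, stored as rows of pixel intensities.
Frame = List[List[float]]


def inter_frame_residual(prev: Frame, curr: Frame) -> Frame:
    """Absolute per-pixel difference between two consecutive frames (assumed form)."""
    same_rows = len(prev) == len(curr)
    if not same_rows or any(len(p) != len(c) for p, c in zip(prev, curr)):
        raise ValueError("frames must have the same shape")
    return [
        [abs(c - p) for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def enhance(curr: Frame, residual: Frame, alpha: float = 1.0) -> Frame:
    """Add the weighted residual to the current frame to emphasise changed pixels.

    `alpha` is a hypothetical weight, not a parameter taken from the paper.
    """
    return [
        [c + alpha * r for c, r in zip(curr_row, res_row)]
        for curr_row, res_row in zip(curr, residual)
    ]


if __name__ == "__main__":
    background = [[10.0, 10.0, 10.0], [10.0, 10.0, 10.0]]
    with_target = [[10.0, 10.0, 10.0], [10.0, 14.0, 10.0]]
    residual = inter_frame_residual(background, with_target)
    print(residual)
    print(enhance(with_target, residual))
```

In this toy case a static background cancels out and only the pixel that changed between frames keeps a non-zero residual, which is the intuition behind using residuals to highlight small moving targets.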

2. Related Work

2.1. Infrared Small Target Detection

2.2. Video-Based Object Tracking

3. Proposed Algorithm

3.1. Problem Definition

3.2. Overall Framework

Algorithm 1. Spatio-Temporal Infrared Small Target Detection Framework

Input: Infrared video sequence

Output: Detected target bounding boxes

1: Initialize empty detection results:

2: for to T do

3: Compute inter-frame motion enhancement:

4: Extract spatial features from and :

5:

6:

7: Fuse spatial features:

8:

9: Aggregate temporal features over window :

10:

11: Perform multi-scale feature fusion:

12:

13: Detect targets from fused features:

14:

15: Append to results:

16: end for
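The control flow of Algorithm 1 can be sketched as a plain loop in which every stage is a callable supplied by the caller. This is a minimal skeleton under stated assumptions rather than the authors' implementation: the function `run_detection` and all parameter names are hypothetical, the two inputs to spatial feature extraction are assumed to be the current frame and its motion-enhanced counterpart, the temporal window is assumed to be a sliding window over the most recent fused features, and the first frame is assumed to be compared with itself because it has no predecessor.

```python
from collections import deque
from typing import Any, Callable, Deque, Iterable, List


def run_detection(
    frames: Iterable[Any],
    window: int,
    motion_enhance: Callable[[Any, Any], Any],
    spatial_encode: Callable[[Any], Any],
    fuse_spatial: Callable[[Any, Any], Any],
    aggregate_temporal: Callable[[List[Any]], Any],
    fuse_multiscale: Callable[[Any], Any],
    detect: Callable[[Any], List[Any]],
) -> List[List[Any]]:
    """Run the per-frame loop of Algorithm 1 with caller-supplied stages."""
    results: List[List[Any]] = []                 # step 1: empty detection results
    history: Deque[Any] = deque(maxlen=window)    # sliding temporal window (assumed)
    prev = None
    for frame in frames:                          # step 2: loop over frames
        if prev is None:
            prev = frame                          # assumption: first frame has no motion
        enhanced = motion_enhance(prev, frame)    # step 3: inter-frame motion enhancement
        feat_frame = spatial_encode(frame)        # steps 4-6: spatial features of both inputs
        feat_motion = spatial_encode(enhanced)
        fused = fuse_spatial(feat_frame, feat_motion)   # steps 7-8: spatial fusion
        history.append(fused)
        temporal = aggregate_temporal(list(history))    # steps 9-10: temporal aggregation
        multiscale = fuse_multiscale(temporal)          # steps 11-12: multi-scale fusion
        results.append(detect(multiscale))              # steps 13-15: detect and append
        prev = frame
    return results


if __name__ == "__main__":
    # Toy scalar stand-ins for each stage, only to exercise the control flow.
    out = run_detection(
        frames=[1.0, 3.0, 6.0],
        window=2,
        motion_enhance=lambda p, c: abs(c - p),
        spatial_encode=lambda x: x,
        fuse_spatial=lambda a, b: a + b,
        aggregate_temporal=lambda h: sum(h) / len(h),
        fuse_multiscale=lambda x: x,
        detect=lambda x: [x] if x > 3 else [],
    )
    print(out)
```

Passing the stages in as callables keeps the skeleton independent of any particular network; in practice each one would be replaced by the corresponding learned module.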

3.3. Inter-Frame Motion Enhancement

3.4. Spatial and Temporal Feature Encoding

3.5. Multi-Scale Feature Fusion

4. Experiments

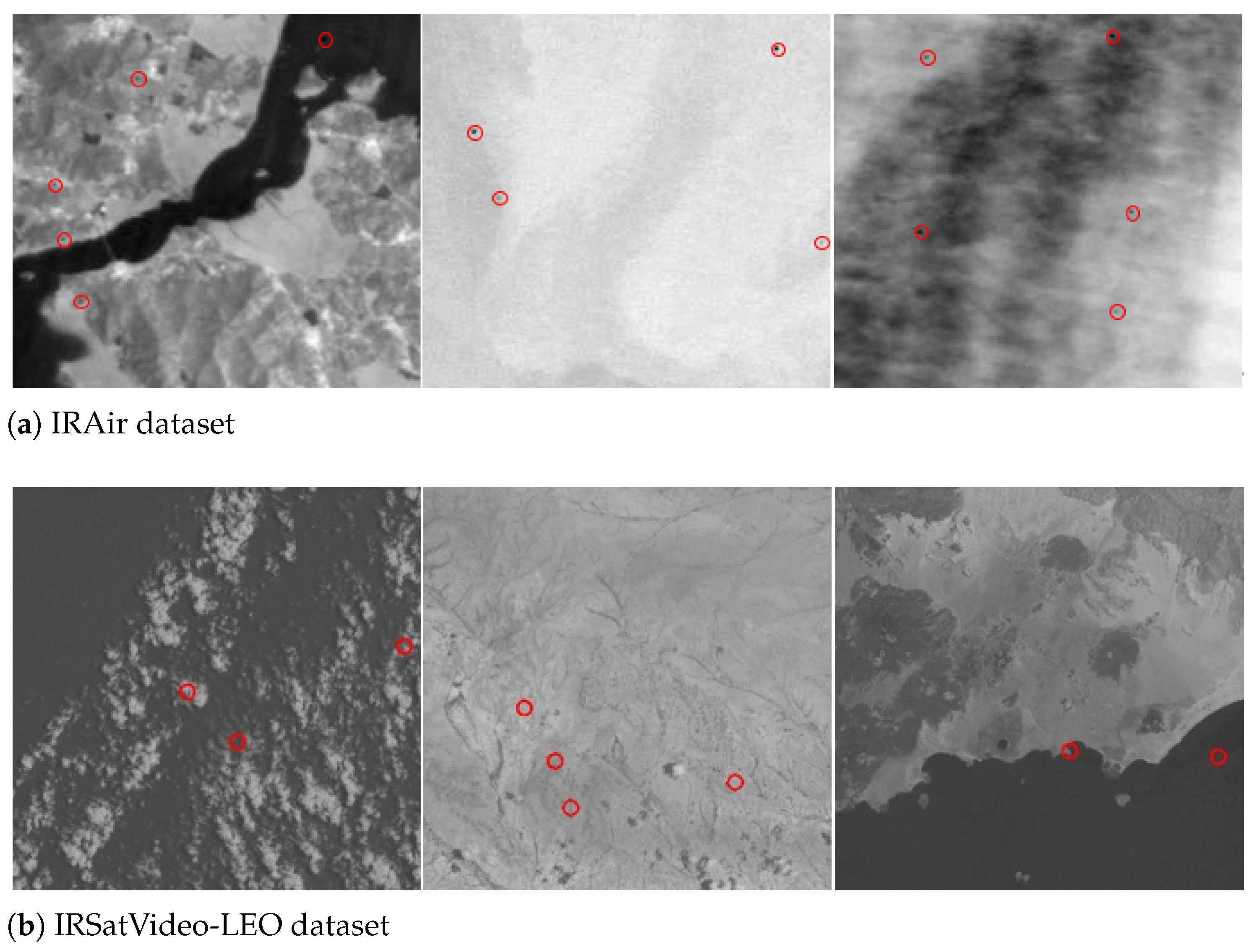

4.1. Experimental Setup

4.2. Evaluation Metrics

4.3. Results

4.3.1. Comprehensive Comparison

4.3.2. Ablation Study

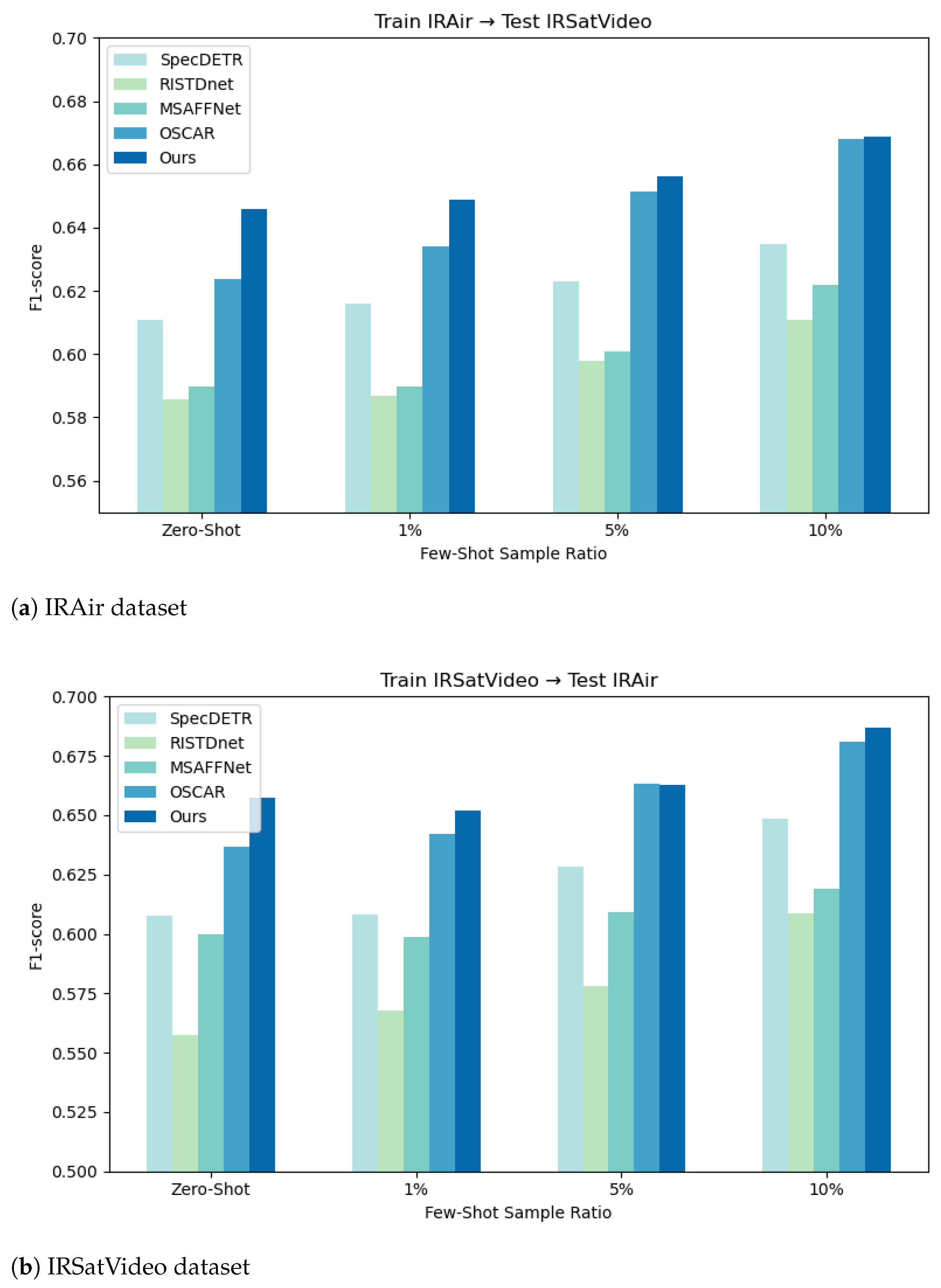

4.3.3. Cross-Dataset Comparison

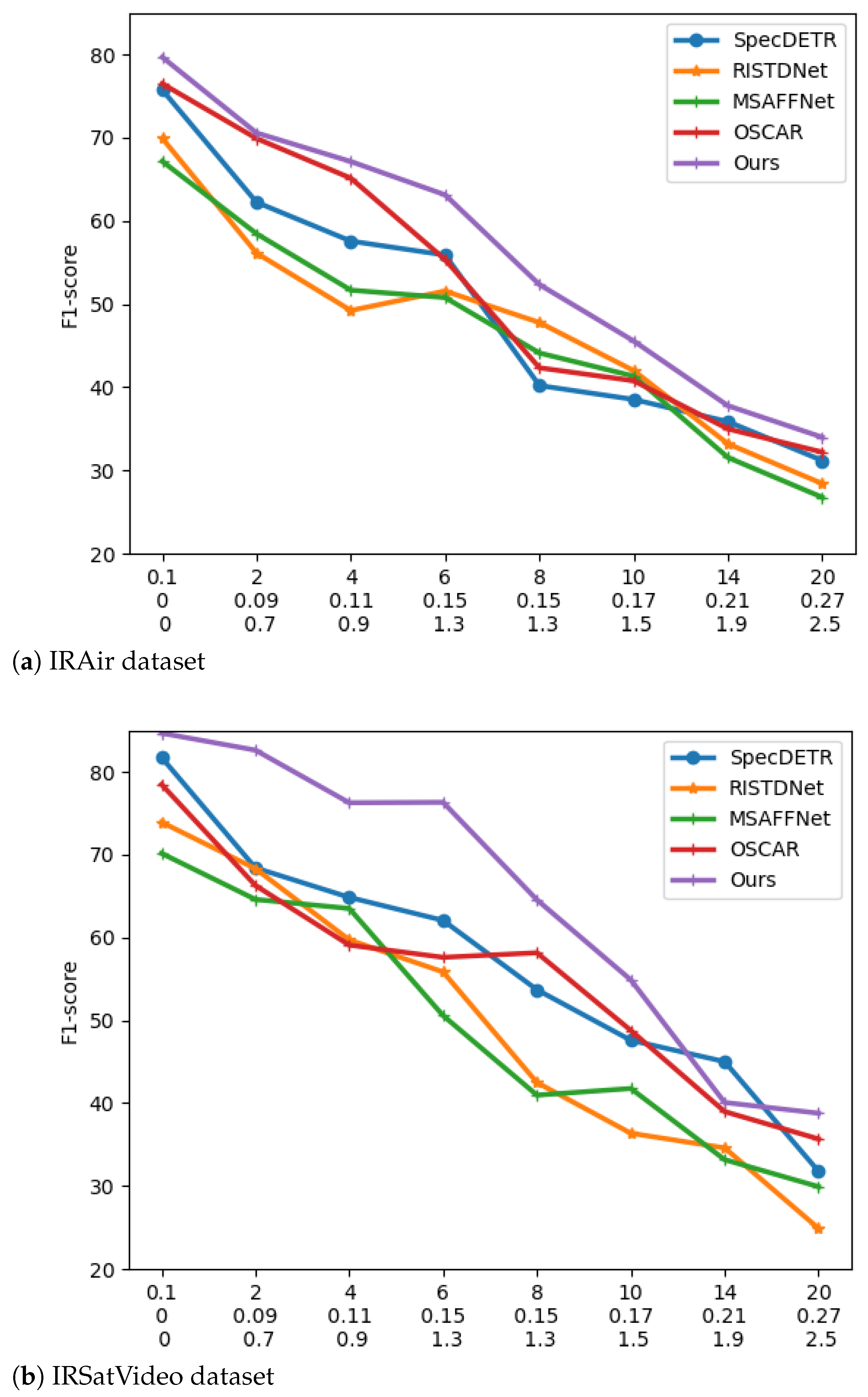

4.3.4. Sensitivity Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Dataset | Method | Precision↑ | Recall↑ | F1-Score↑ | AP↑ | FAR↓ | LE (px)↓ |
|---|---|---|---|---|---|---|---|
| IRAir | SpecDETR | 72.53 | 67.99 | 78.06 | 68.48 | 17.22 | 6.60 |
| IRAir | RISTDnet | 65.78 | 61.89 | 67.78 | 63.56 | 28.56 | 10.90 |
| IRAir | MSAFFNet | 69.25 | 66.43 | 75.93 | 67.17 | 22.31 | 8.86 |
| IRAir | OSCAR | 75.86 | 77.02 | 78.55 | 71.86 | 15.98 | 5.53 |
| IRAir | Ours | 82.12 | 78.34 | 80.23 | 80.45 | 14.23 | 4.12 |
| IRSatVideo | SpecDETR | 78.15 | 71.47 | 75.12 | 72.71 | 18.24 | 15.94 |
| IRSatVideo | RISTDnet | 69.25 | 66.43 | 75.93 | 67.17 | 22.31 | 26.86 |
| IRSatVideo | MSAFFNet | 72.79 | 68.66 | 73.14 | 66.76 | 26.75 | 23.30 |
| IRSatVideo | OSCAR | 83.14 | 77.56 | 76.28 | 73.60 | 13.98 | 13.36 |
| IRSatVideo | Ours | 84.12 | 79.34 | 83.23 | 79.45 | 13.34 | 12.12 |

| Resolution | Method | Precision↑ | Recall↑ | F1-Score↑ | AP↑ | FAR↓ | FPS↑ |
|---|---|---|---|---|---|---|---|
| 512 × 512 | SpecDETR | 73.11 | 67.73 | 73.30 | 66.87 | 20.58 | 58.3 |
| 512 × 512 | RISTDnet | 67.32 | 64.26 | 63.91 | 61.76 | 34.06 | 81.0 |
| 512 × 512 | MSAFFNet | 70.37 | 64.92 | 65.64 | 64.12 | 30.59 | 72.9 |
| 512 × 512 | OSCAR | 76.47 | 71.01 | 77.86 | 72.75 | 16.30 | 43.4 |
| 512 × 512 | Ours | 83.02 | 77.58 | 80.53 | 77.25 | 15.57 | 31.4 |
| 256 × 256 | SpecDETR | 67.85 | 72.23 | 65.54 | 64.15 | 28.92 | 34.7 |
| 256 × 256 | RISTDnet | 65.80 | 61.94 | 59.25 | 57.54 | 39.49 | 45.2 |
| 256 × 256 | MSAFFNet | 66.34 | 62.53 | 64.35 | 61.31 | 28.55 | 41.6 |
| 256 × 256 | OSCAR | 72.51 | 74.57 | 72.32 | 72.47 | 31.18 | 27.4 |
| 256 × 256 | Ours | 80.17 | 75.60 | 76.06 | 73.21 | 21.36 | 21.4 |

| Modules enabled (Residual / Spatial / Temporal) | Precision↑ | Recall↑ | F1-Score↑ | AP↑ | FAR↓ | LE (px)↓ |
|---|---|---|---|---|---|---|
| ✓ | 68.23 | 62.15 | 65.12 | 63.45 | 28.34 | 12.45 |
| ✓ | 65.67 | 60.34 | 62.89 | 60.12 | 30.12 | 13.67 |
| ✓ ✓ | 76.34 | 72.67 | 74.90 | 74.23 | 17.78 | 4.23 |
| ✓ ✓ | 81.12 | 79.56 | 78.84 | 79.32 | 15.65 | 5.01 |
| ✓ ✓ ✓ | 82.12 | 78.34 | 80.23 | 80.45 | 14.23 | 4.12 |

| Hyperparameters | Values / F1-Score | | |
|---|---|---|---|
| Loss weight of residuals | 1.0 (original) | 0.5 | 2.0 |
| | 80.23 | 80.86 | 80.10 |
| ConvLSTM kernel size | (original) | | |
| | 80.23 | 79.02 | 78.75 |
| Number of ConvLSTM layers | 1 (original) | 2 | 3 |
| | 80.23 | 79.65 | 80.73 |
| Learning rate | (original) | | |
| | 80.23 | 80.59 | 80.30 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chang, Y.; Ma, D.; Yang, Q.; Li, S.; Zhang, D. Spatio-Temporal Residual Attention Network for Satellite-Based Infrared Small Target Detection. Remote Sens. 2025, 17, 3457. https://doi.org/10.3390/rs17203457
