RSAM-Seg: A SAM-Based Model with Prior Knowledge Integration for Remote Sensing Image Semantic Segmentation

Abstract

1. Introduction

- 1.

- Domain Mismatch: The pretraining of SAM primarily focuses on natural images, leading to suboptimal performance on remote sensing imagery. Remote sensing data presents unique challenges, such as varied spatial resolutions, complex atmospheric effects, and distinct object characteristics that differ significantly from natural images.

- 2.

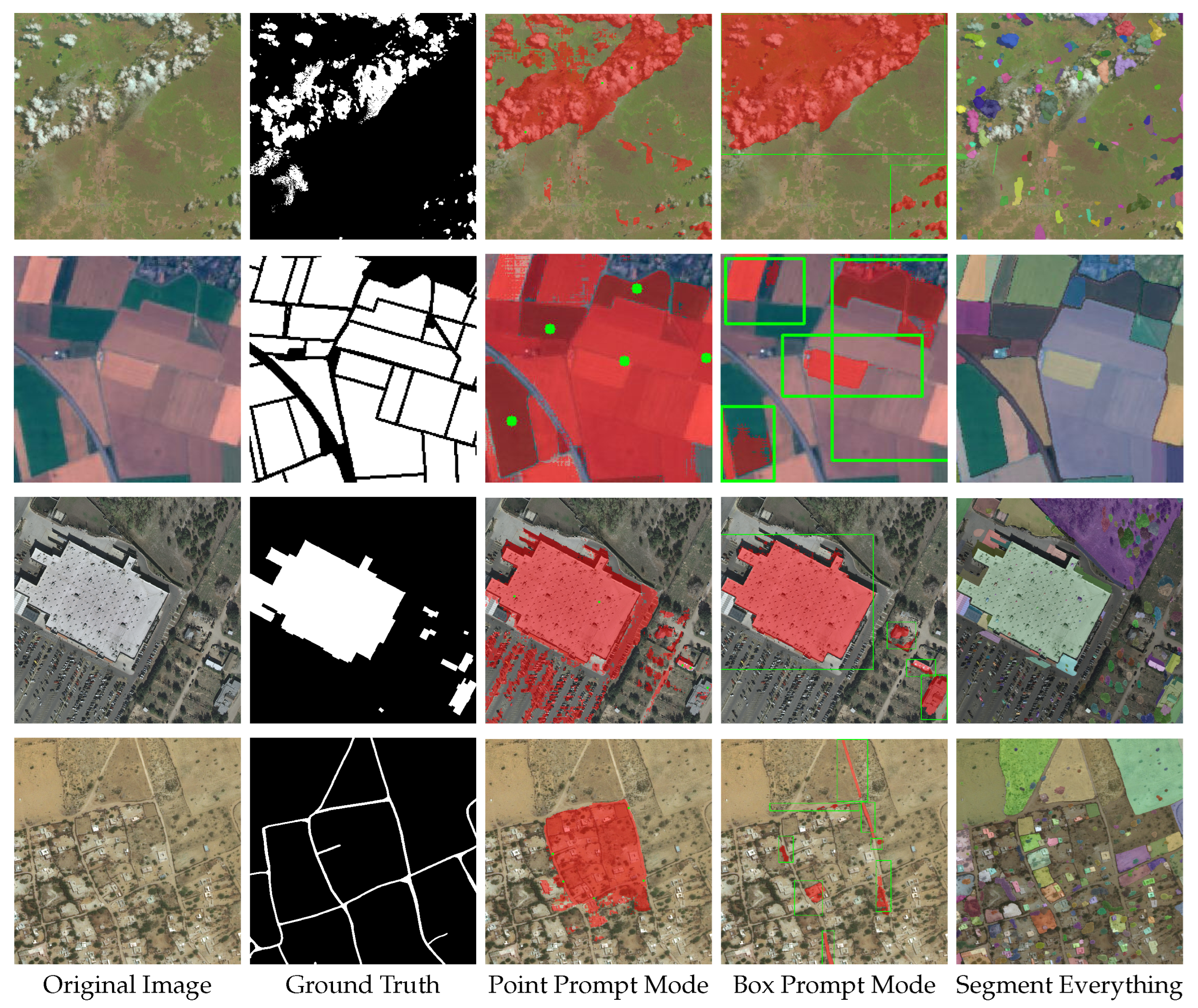

- Manual Prompt Dependency: SAM requires manual prompt inputs for optimal performance, which becomes impractical in large-scale remote sensing applications. The quality and placement of prompts significantly influence segmentation results, introducing variability and reducing efficiency in automated processing pipelines.

1. We propose RSAM-Seg, a novel deep learning model for object segmentation tasks in remote sensing images, demonstrating better adaptability and eliminating the need for manual intervention to provide prompts, thereby streamlining the workflow;

2. RSAM-Seg can incorporate custom, task-specific prior information, making it adaptable to diverse tasks in the remote sensing field;

3. RSAM-Seg outperforms the original SAM, DeepLabV3+, Segformer, and U-Net models across diverse scenarios, including binary segmentation tasks such as cloud detection, building identification, field monitoring, and road mapping. It also excels in multi-class segmentation challenges, particularly in cloud-like scenarios and land-cover segmentation tasks;

4. RSAM-Seg effectively identifies missing areas within the ground truths of certain datasets and demonstrates robust few-shot learning capabilities, enhancing its reliability and versatility in practical applications.

2. Related Work

2.1. Supervised Learning

2.2. Weakly Supervised Learning

2.3. Unsupervised Learning

2.4. Few-Shot and Zero-Shot Learning

3. Method

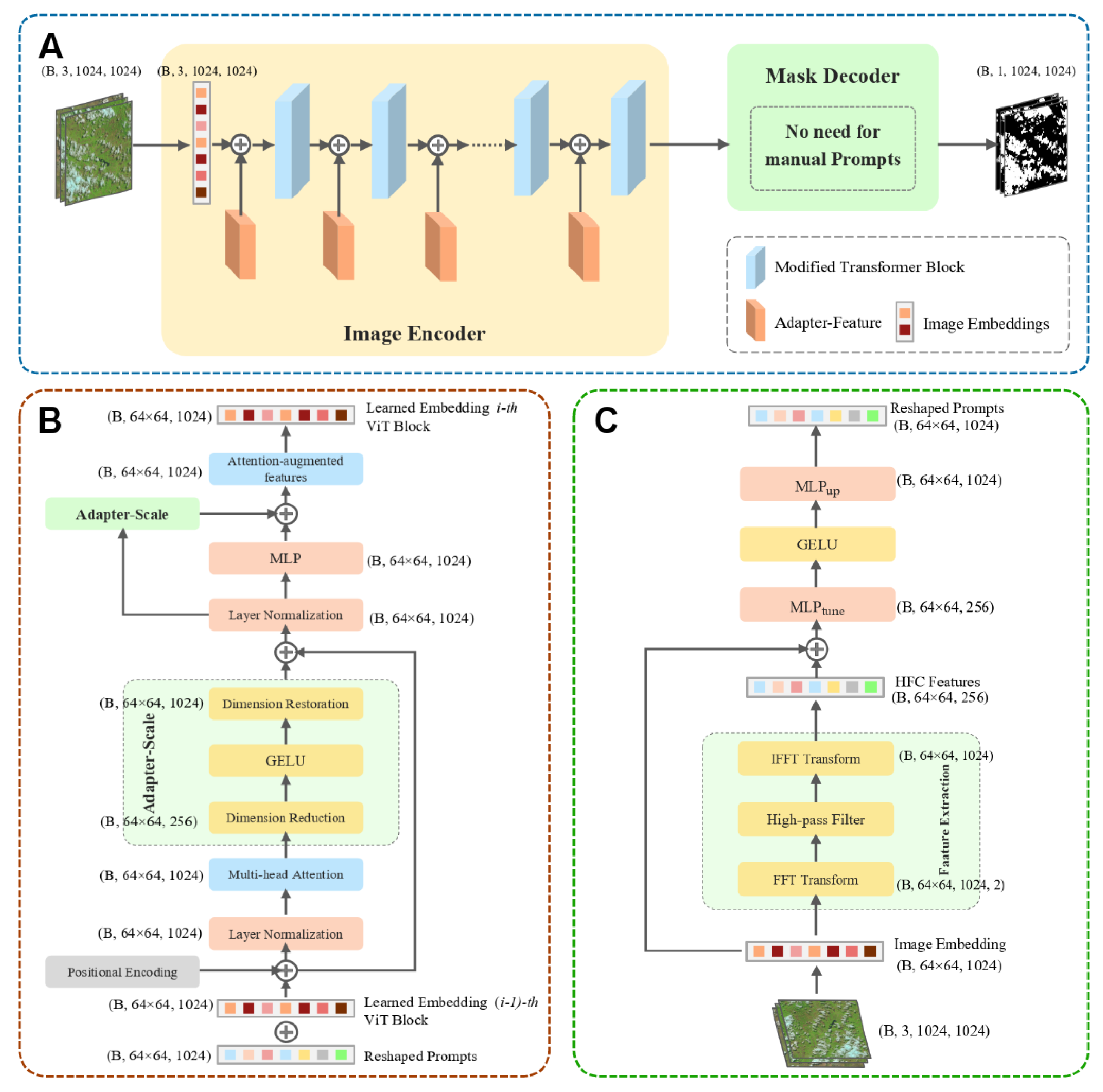

3.1. RSAM-Seg Architecture

3.2. Adapter Details

3.2.1. Adapter-Scale

3.2.2. Adapter-Feature

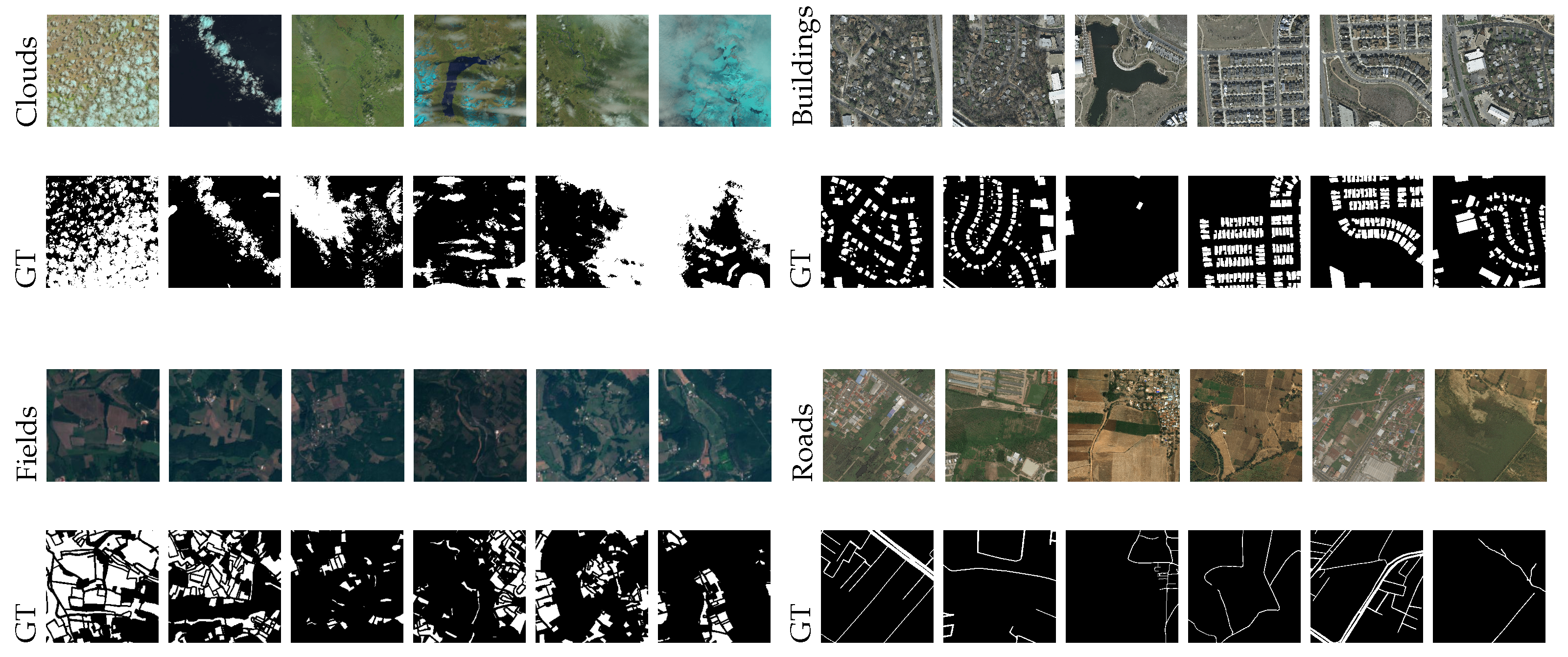

4. Datasets

4.1. Binary Segmentation Datasets

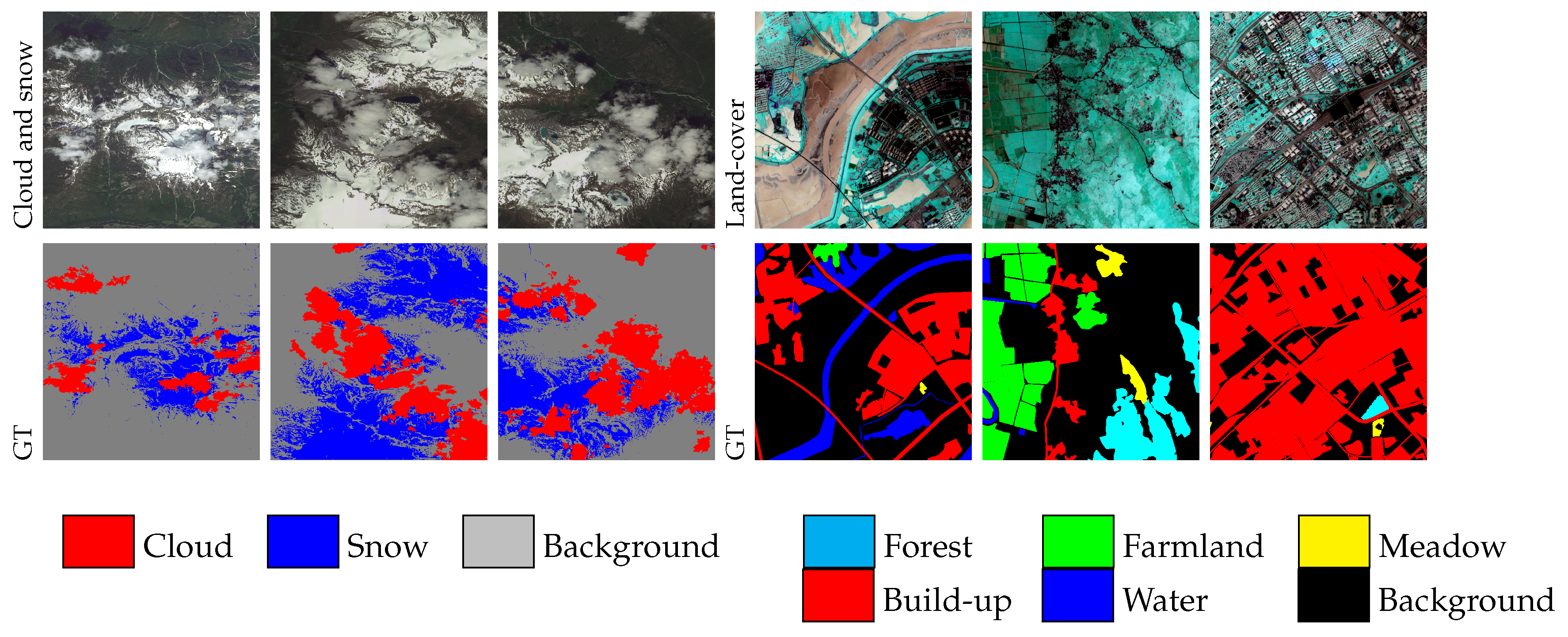

4.2. Multi-Class Segmentation Dataset

4.2.1. Cloud-like Scenes Segmentation Dataset

4.2.2. Land-Cover Scenes Segmentation Dataset

5. Experiment Settings

5.1. Implementation Details

5.2. Performance Metrics

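1. Jaccard index (IoU):

   $$\mathrm{Jaccard} = \frac{TP}{TP + FP + FN}$$

2. Precision:

   $$\mathrm{Precision} = \frac{TP}{TP + FP}$$

3. Recall:

   $$\mathrm{Recall} = \frac{TP}{TP + FN}$$

4. F1 score:

   $$F_1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

5. Overall accuracy:

   $$\mathrm{OA} = \frac{TP + TN}{TP + TN + FP + FN}$$

6. mIoU (mean intersection over union):

   $$\mathrm{mIoU} = \frac{1}{n} \sum_{i=1}^{n} \frac{TP_i}{TP_i + FP_i + FN_i}$$

where $TP$, $TN$, $FP$, and $FN$ denote true positives, true negatives, false positives, and false negatives, respectively, and $n$ denotes the number of classes. These metrics provide a comprehensive evaluation of the segmentation performance, capturing various aspects such as precision, recall, and overall accuracy. Additionally, metrics like the Jaccard index and mean intersection over union (mIoU) are crucial for evaluating segmentation performance, with higher values indicating better quality.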
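The six metrics can be illustrated with a short, dependency-free sketch. It assumes the standard textbook definitions in terms of TP, TN, FP, and FN; the names `Counts`, `confusion_counts`, and the convention of returning 0.0 for a zero denominator are choices made for this illustration and are not taken from the authors' implementation.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Counts:
    """Pixel-level confusion counts for one class (hypothetical helper type)."""
    tp: int
    tn: int
    fp: int
    fn: int


def _ratio(num: float, den: float) -> float:
    # Assumed convention: an undefined ratio (zero denominator) is reported as 0.0.
    return num / den if den else 0.0


def confusion_counts(pred: Sequence[int], truth: Sequence[int], cls: int = 1) -> Counts:
    """Count TP, TN, FP, FN for class `cls` over two flattened label maps."""
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same length")
    tp = sum(p == cls and t == cls for p, t in zip(pred, truth))
    fp = sum(p == cls and t != cls for p, t in zip(pred, truth))
    fn = sum(p != cls and t == cls for p, t in zip(pred, truth))
    tn = len(pred) - tp - fp - fn
    return Counts(tp, tn, fp, fn)


def jaccard(c: Counts) -> float:
    return _ratio(c.tp, c.tp + c.fp + c.fn)


def precision(c: Counts) -> float:
    return _ratio(c.tp, c.tp + c.fp)


def recall(c: Counts) -> float:
    return _ratio(c.tp, c.tp + c.fn)


def f1_score(c: Counts) -> float:
    p, r = precision(c), recall(c)
    return _ratio(2 * p * r, p + r)


def overall_accuracy(c: Counts) -> float:
    return _ratio(c.tp + c.tn, c.tp + c.tn + c.fp + c.fn)


def mean_iou(pred: Sequence[int], truth: Sequence[int], classes: Sequence[int]) -> float:
    """Average the per-class IoU over the n given classes."""
    ious = [jaccard(confusion_counts(pred, truth, k)) for k in classes]
    return _ratio(sum(ious), len(ious))


# Toy example: six pixels, class 1 is the foreground.
pred = [1, 1, 0, 0, 1, 0]
truth = [1, 0, 0, 0, 1, 1]
c = confusion_counts(pred, truth)
print(c)
print(jaccard(c), precision(c), recall(c), f1_score(c), overall_accuracy(c))
print(mean_iou(pred, truth, classes=[0, 1]))
```

In the toy example the foreground has two true positives, one false positive, one false negative, and two true negatives, so the Jaccard index is 2/4 and the overall accuracy is 4/6.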

6. Results

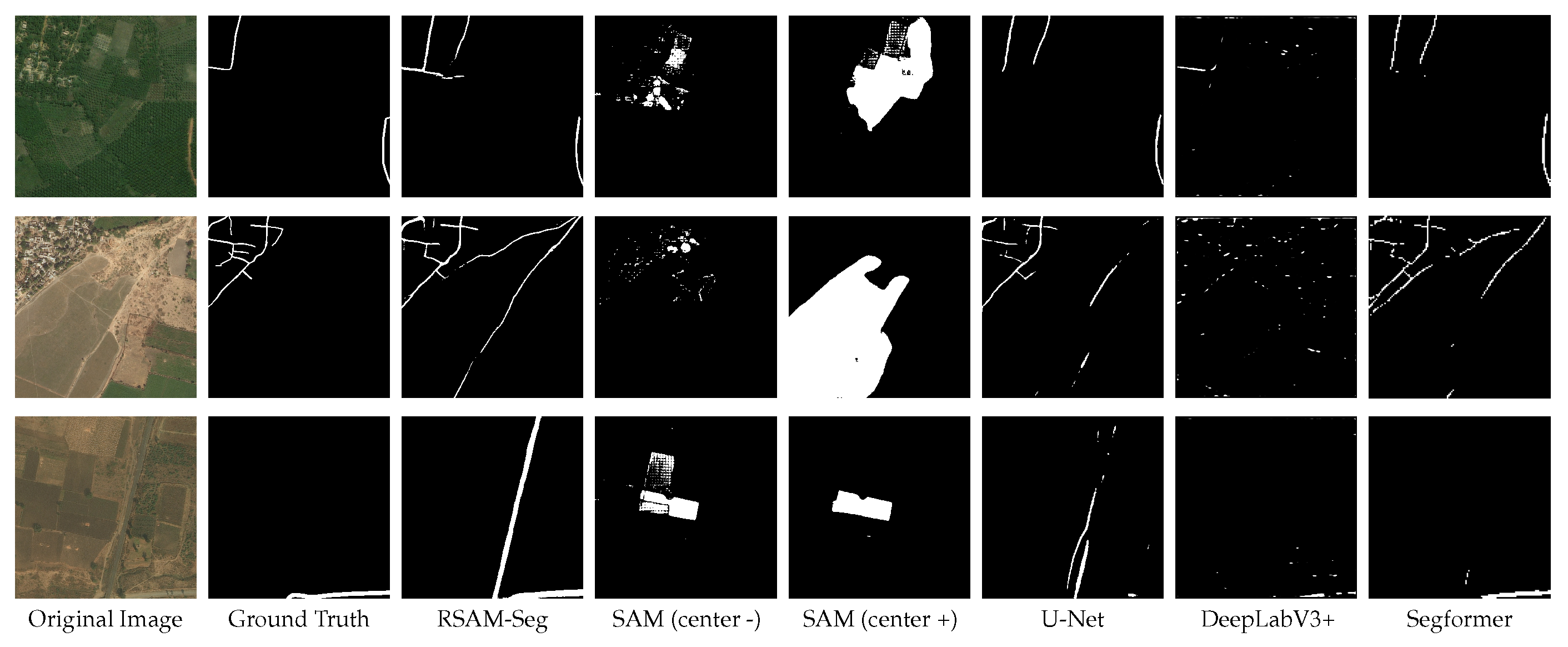

6.1. Results for Binary Segmentation Scenarios

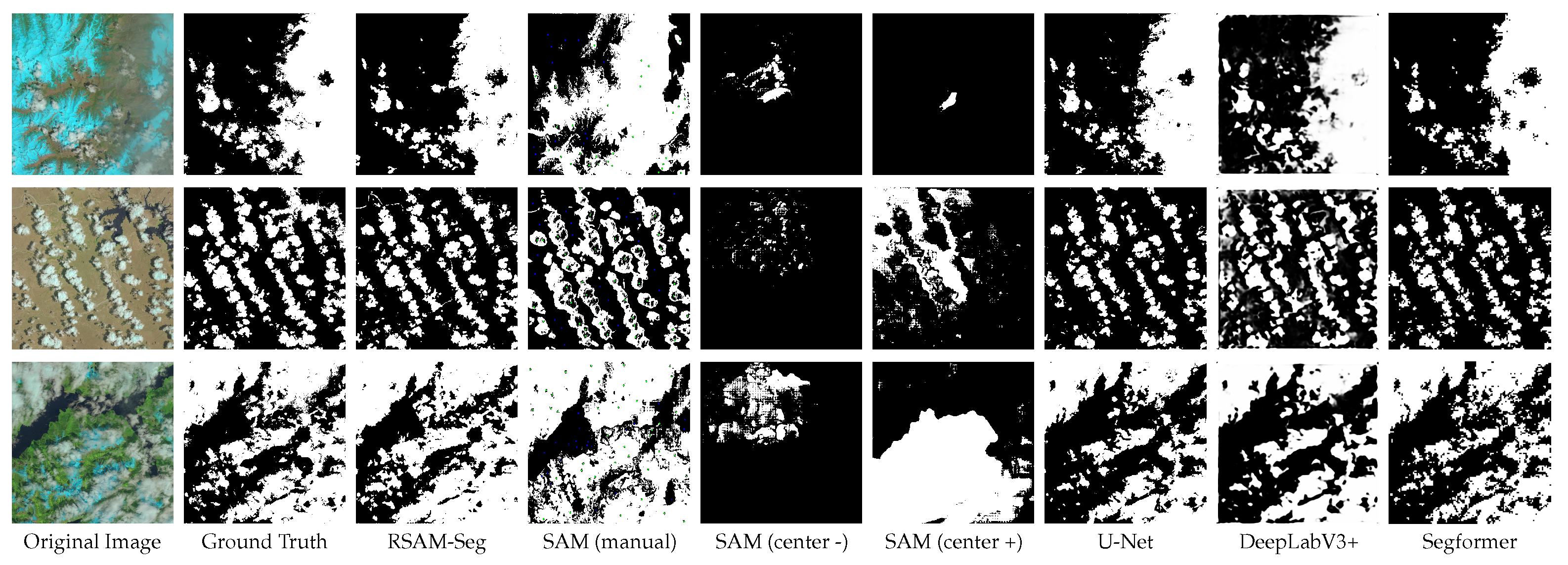

6.1.1. Results for the Cloud Scenario

6.1.2. Results for the Field Scenario

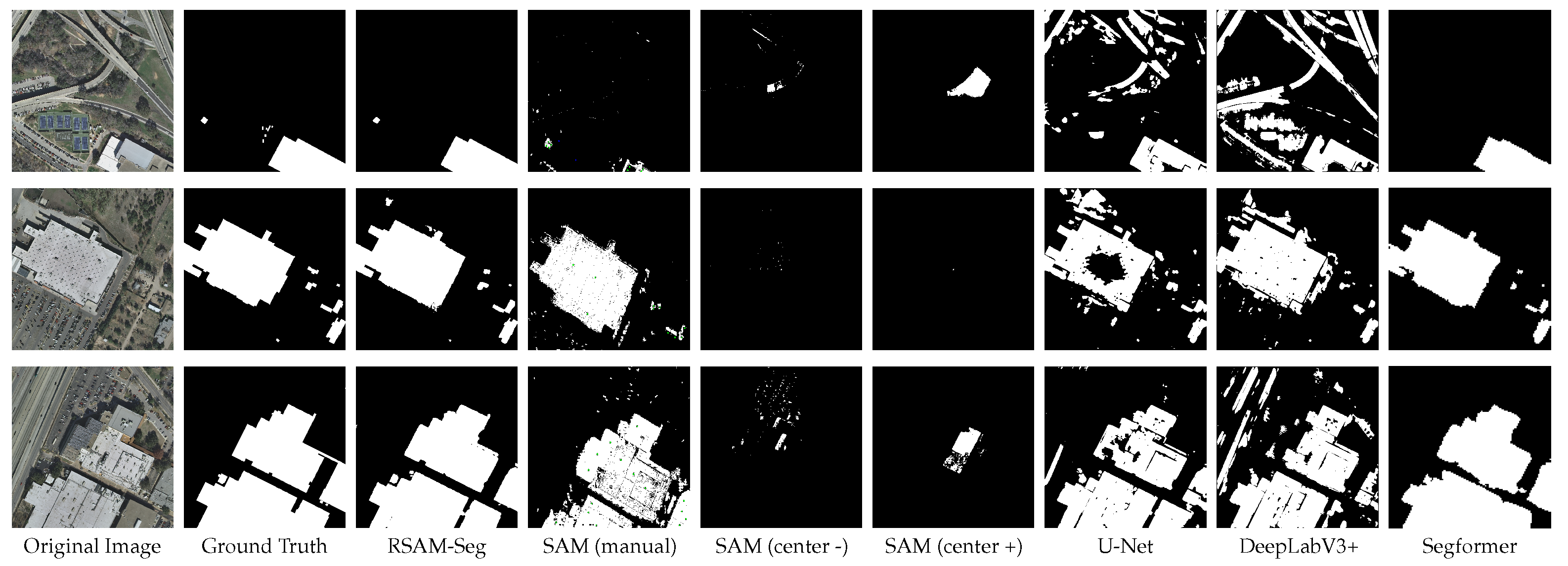

6.1.3. Results for the Building Scenario

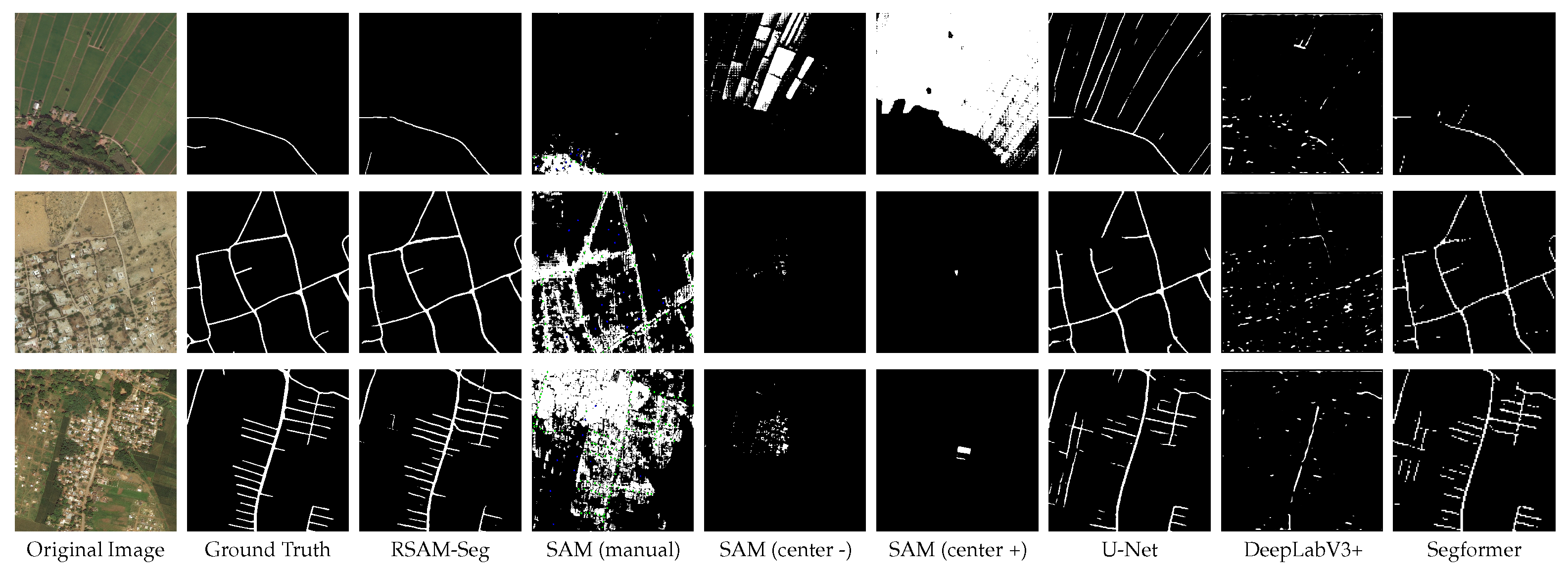

6.1.4. Results for the Road Scenario

6.2. Results for Multi-Class Scenario

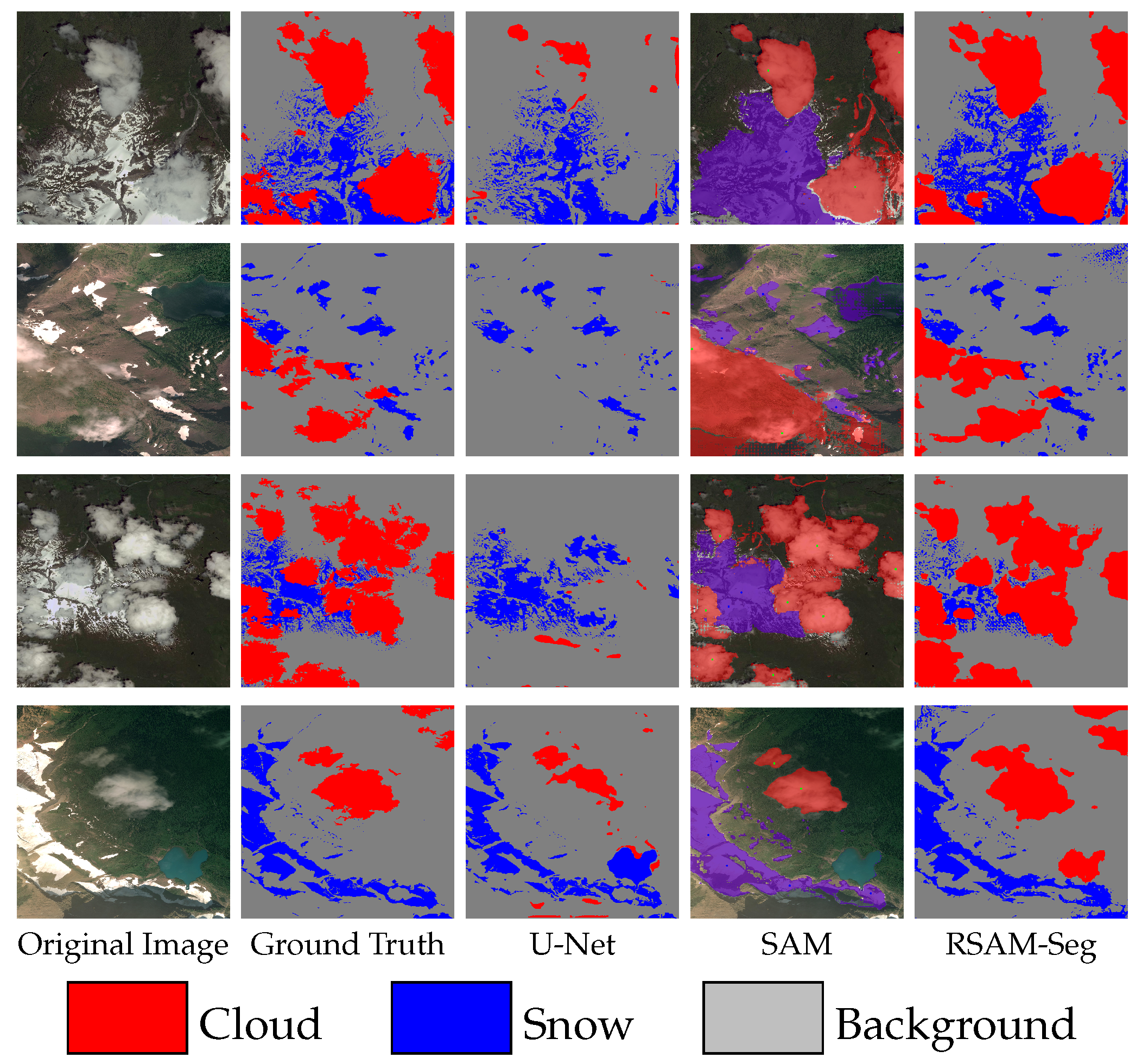

6.2.1. Results for the Cloud-like Scenario

6.2.2. Results for the Land-Cover Scene Scenario

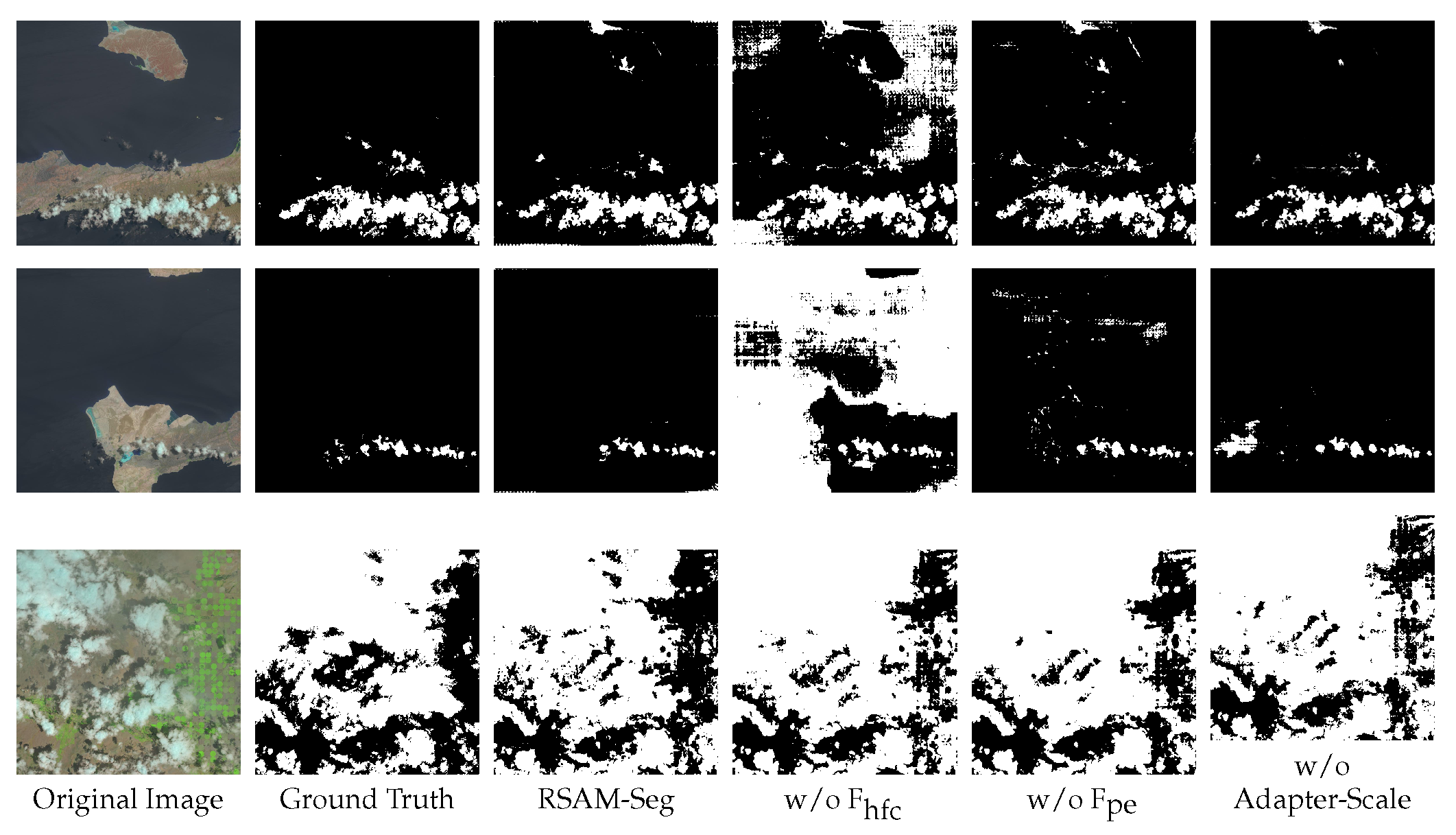

6.3. Ablation Study

7. Discussion

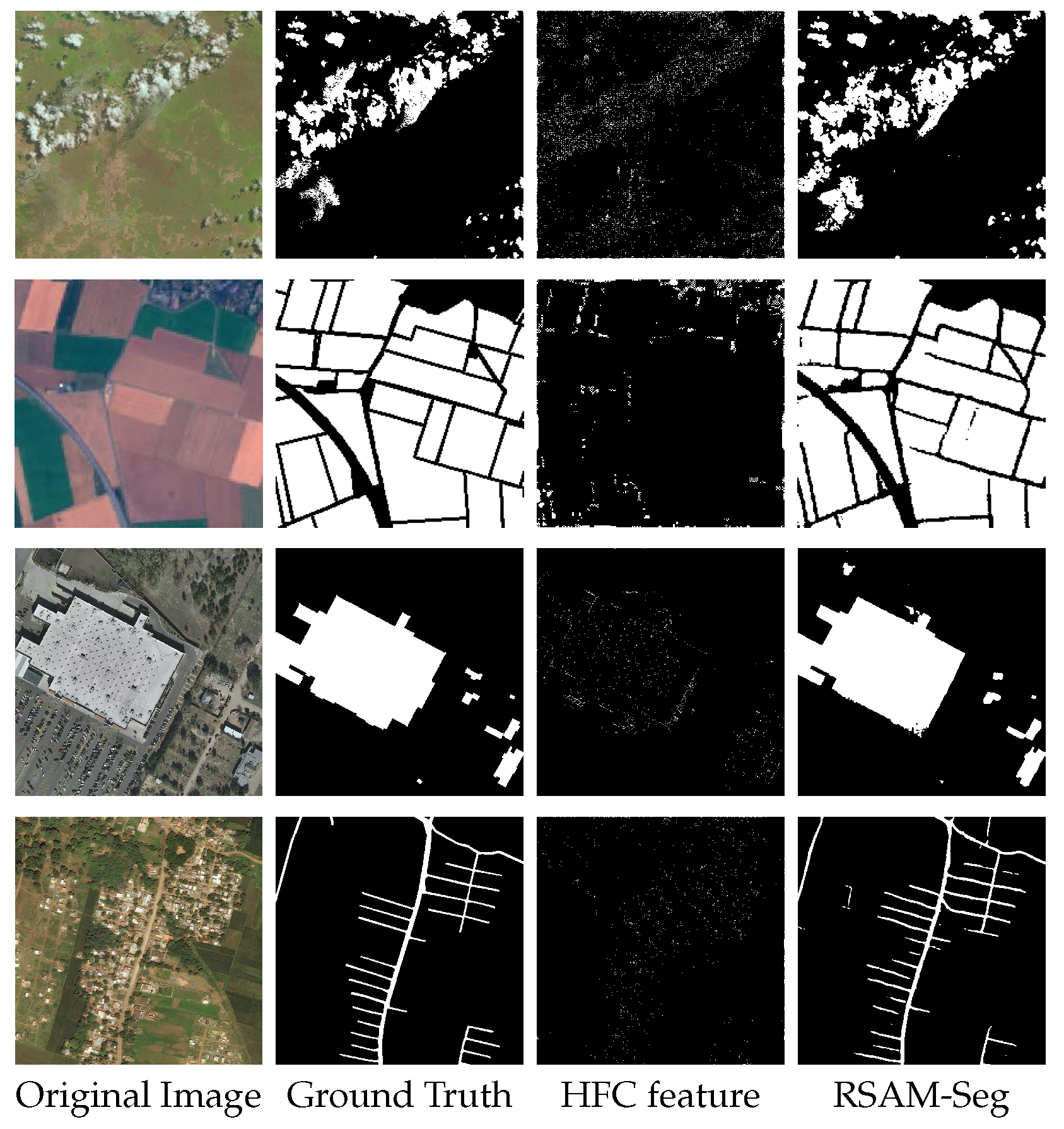

7.1. Leveraging Task-Specific Prior Knowledge for Prompt

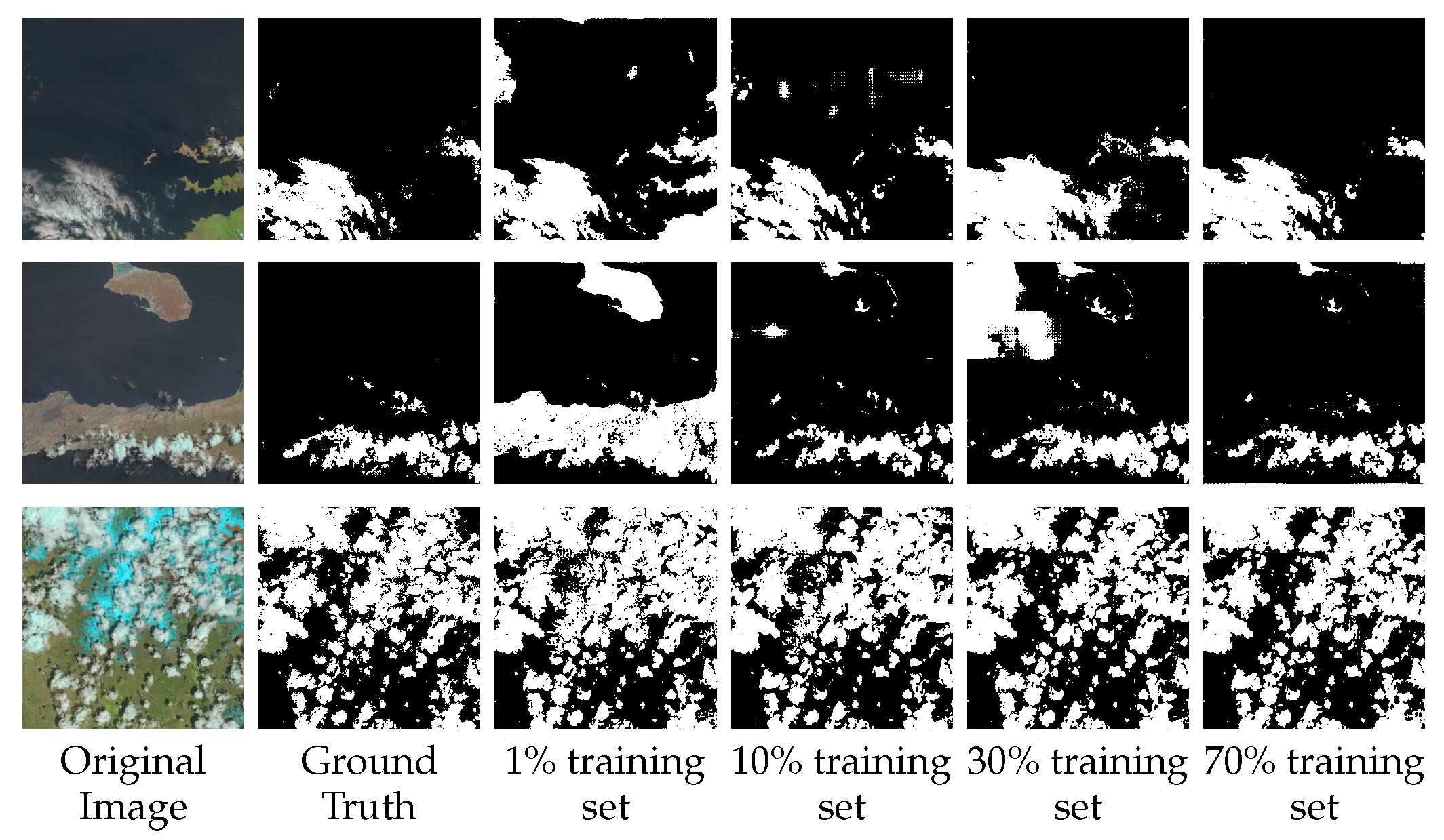

7.2. Few-Shot Scenario

7.3. Beyond Ground Truth

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Scenario | Region | Dataset | Total Area (km²) | Resolution (m) | Image Size (pixels) |
|---|---|---|---|---|---|
| Building | Austin, Chicago, Kitsap County | Inria | 81 | 0.3 | 1024 × 1024 |
| Cloud | - | 38-Cloud | 2,188,800 | 30 | 1024 × 1024 |
| Field | France | Sentinel-2 | 785 | 6 0 | 224 × 224 |
| Road | Thailand, Indonesia, India | DeepGlobe | 2220 | 0.50 | 1024 × 1024 |

| Scenario | Method | Jaccard | Precision | Recall | F1 Score | Overall Accuracy | mIoU |
|---|---|---|---|---|---|---|---|
| Cloud | U-Net | 0.6461 | 0.8994 | 0.6740 | 0.7467 | 0.9021 | 0.7262 |
| Cloud | DeepLabV3+ | 0.6440 | 0.7826 | 0.8058 | 0.7804 | 0.9227 | 0.7474 |
| Cloud | Segformer | 0.6553 | 0.8273 | 0.7087 | 0.7478 | 0.8360 | 0.7245 |
| Cloud | SAM(center +) | 0.1940 | 0.4107 | 0.3929 | 0.2502 | 0.4838 | 0.2666 |
| Cloud | SAM(center −) | 0.0637 | 0.3652 | 0.0801 | 0.0956 | 0.6221 | 0.3246 |
| Cloud | SAM(manual) | 0.5837 | 0.6245 | 0.8926 | 0.7292 | 0.7693 | 0.5837 |
| Cloud | RSAM-Seg | 0.7310 | 0.8301 | 0.8396 | 0.8152 | 0.9197 | 0.7646 |
| Field | U-Net | 0.5011 | 0.6963 | 0.6484 | 0.6391 | 0.7392 | 0.5545 |
| Field | DeepLabV3+ | 0.2998 | 0.6705 | 0.3089 | 0.3410 | 0.9743 | 0.6527 |
| Field | Segformer | 0.5535 | 0.7537 | 0.6614 | 0.6826 | 0.7914 | 0.6155 |
| Field | SAM(center +) | 0.1798 | 0.5323 | 0.3399 | 0.2627 | 0.5130 | 0.2866 |
| Field | SAM(center −) | 0.0650 | 0.5442 | 0.0751 | 0.1176 | 0.5502 | 0.2989 |
| Field | SAM(manual) | 0.6745 | 0.8021 | 0.8018 | 0.8011 | 0.7727 | 0.6745 |
| Field | RSAM-Seg | 0.6346 | 0.7600 | 0.7818 | 0.7592 | 0.8201 | 0.6634 |
| Building | U-Net | 0.5047 | 0.6318 | 0.7151 | 0.6496 | 0.9125 | 0.7017 |
| Building | DeepLabV3+ | 0.6081 | 0.6767 | 0.8560 | 0.7277 | 0.8714 | 0.7246 |
| Building | Segformer | 0.7967 | 0.8644 | 0.8482 | 0.8539 | 0.9191 | 0.8229 |
| Building | SAM(center +) | 0.0046 | 0.1522 | 0.0062 | 0.0083 | 0.8070 | 0.4057 |
| Building | SAM(center −) | 0.0067 | 0.2146 | 0.0072 | 0.0132 | 0.8433 | 0.4249 |
| Building | SAM(manual) | 0.6766 | 0.7615 | 0.8491 | 0.7965 | 0.8485 | 0.6766 |
| Building | RSAM-Seg | 0.7353 | 0.8390 | 0.8360 | 0.8337 | 0.9583 | 0.8424 |
| Road | U-Net | 0.5286 | 0.6673 | 0.7276 | 0.6774 | 0.9740 | 0.7506 |
| Road | DeepLabV3+ | 0.2998 | 0.6705 | 0.3089 | 0.3410 | 0.9743 | 0.6527 |
| Road | Segformer | 0.4810 | 0.5130 | 0.7631 | 0.6008 | 0.9948 | 0.7744 |
| Road | SAM(center +) | 0.0068 | 0.0244 | 0.0354 | 0.0112 | 0.8706 | 0.4383 |
| Road | SAM(center −) | 0.0031 | 0.0216 | 0.0050 | 0.0061 | 0.9257 | 0.4644 |
| Road | SAM(manual) | 0.1965 | 0.2445 | 0.5747 | 0.3057 | 0.8588 | 0.1965 |
| Road | RSAM-Seg | 0.6195 | 0.7332 | 0.8104 | 0.7548 | 0.9785 | 0.7982 |
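The binary-segmentation results can be compared programmatically. The sketch below copies the first metric column of the table above for each method and reports, per scenario, the top-scoring method and the margin between RSAM-Seg and the best other method. Treating that first column as the Jaccard index is an assumption based on the metric order in Section 5.2, and the helper names `best_method` and `margin_over_others` are made up for this illustration.

```python
from typing import Dict

# First metric column of the binary-segmentation results table, copied verbatim.
# Assumption: this column is the Jaccard index.
JACCARD: Dict[str, Dict[str, float]] = {
    "Cloud": {"U-Net": 0.6461, "DeepLabV3+": 0.6440, "Segformer": 0.6553,
              "SAM(center +)": 0.1940, "SAM(center −)": 0.0637,
              "SAM(manual)": 0.5837, "RSAM-Seg": 0.7310},
    "Field": {"U-Net": 0.5011, "DeepLabV3+": 0.2998, "Segformer": 0.5535,
              "SAM(center +)": 0.1798, "SAM(center −)": 0.0650,
              "SAM(manual)": 0.6745, "RSAM-Seg": 0.6346},
    "Building": {"U-Net": 0.5047, "DeepLabV3+": 0.6081, "Segformer": 0.7967,
                 "SAM(center +)": 0.0046, "SAM(center −)": 0.0067,
                 "SAM(manual)": 0.6766, "RSAM-Seg": 0.7353},
    "Road": {"U-Net": 0.5286, "DeepLabV3+": 0.2998, "Segformer": 0.4810,
             "SAM(center +)": 0.0068, "SAM(center −)": 0.0031,
             "SAM(manual)": 0.1965, "RSAM-Seg": 0.6195},
}


def best_method(scores: Dict[str, float]) -> str:
    """Return the method with the highest score in one scenario."""
    return max(scores, key=scores.get)


def margin_over_others(scores: Dict[str, float], method: str = "RSAM-Seg") -> float:
    """Score of `method` minus the best score among all other methods."""
    best_other = max(v for k, v in scores.items() if k != method)
    return round(scores[method] - best_other, 4)


for scenario, scores in JACCARD.items():
    print(f"{scenario}: best={best_method(scores)}, "
          f"RSAM-Seg margin={margin_over_others(scores):+.4f}")
```

On this single column the margin is positive for the Cloud and Road scenarios and negative for Field and Building, where SAM(manual) and Segformer score higher. Other columns can rank the methods differently, so the comparison is worth repeating per metric.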

| Method | Score | Overall Accu | |||||

|---|---|---|---|---|---|---|---|

| RSAM-Seg | 0.7310 | 0.8301 | 0.8396 | 0.8152 | 0.9197 | 0.7646 | |

| Adapter-Feature | w/o | 0.7230 | 0.8146 | 0.8469 | 0.8049 | 0.9131 | 0.7597 |

| w/o | 0.7118 | 0.7888 | 0.7885 | 0.892 | 0.7364 | ||

| RSAM-Seg w/o Adapter-Scale | 0.7287 | 0.8173 | 0.8562 | 0.8114 | 0.9056 | 0.7608 | |

| Dataset | Jaccard | Precision | Recall | F1 Score | Overall Accuracy | mIoU |
|---|---|---|---|---|---|---|
| 1% 38-Cloud | 0.5552 | 0.7389 | 0.6777 | 0.6561 | 0.7919 | 0.6412 |
| 10% 38-Cloud | 0.6733 | 0.7984 | 0.8032 | 0.7587 | 0.8738 | 0.7172 |
| 30% 38-Cloud | 0.6940 | 0.7723 | 0.8597 | 0.7770 | 0.8797 | 0.7234 |
| 70% 38-Cloud | 0.7310 | 0.8301 | 0.8396 | 0.8152 | 0.9197 | 0.7646 |
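One way to read the few-shot table is as a data-efficiency curve: how much of the 70% score survives when less of 38-Cloud is used. The sketch below does that arithmetic on the first metric column, which is assumed here to be the Jaccard index. The name `retained` is hypothetical, and the choice of the 70% row as the reference point is made for this illustration.

```python
from typing import Dict

# First metric column of the few-shot table, keyed by the percentage of 38-Cloud used.
# Assumption: this column is the Jaccard index.
FEW_SHOT_JACCARD: Dict[int, float] = {1: 0.5552, 10: 0.6733, 30: 0.6940, 70: 0.7310}


def retained(percent: int, reference: int = 70) -> float:
    """Score at `percent` of the data as a fraction of the score at `reference`."""
    return FEW_SHOT_JACCARD[percent] / FEW_SHOT_JACCARD[reference]


for percent in sorted(FEW_SHOT_JACCARD):
    print(f"{percent:>2}% of 38-Cloud: {retained(percent):.1%} of the 70% score")
```

With 1% of the data, the first-column score is roughly three quarters of the value reached at 70%, and at 10% it is above nine tenths.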


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, J.; Li, Y.; Yang, X.; Jiang, R.; Zhang, L. RSAM-Seg: A SAM-Based Model with Prior Knowledge Integration for Remote Sensing Image Semantic Segmentation. Remote Sens. 2025, 17, 590. https://doi.org/10.3390/rs17040590
