Infrared Imaging Detection for Hazardous Gas Leakage Using Background Information and Improved YOLO Networks

Abstract

1. Introduction

- We are the first to use background estimation and image synthesis to incorporate background information into gas plume images. This addresses a key difficulty: the texture features of leaking gas plumes are easily disrupted by complex backgrounds. The approach substantially improves the ability of existing neural networks to learn the motion characteristics of gas plumes and reduces the difficulty of manual dataset labeling.

- To handle gas plume targets that are weak and lack a fixed shape, we introduce a multi-scale, deformable, large-kernel convolutional gas plume attention enhancement module (MSDC-AEM). The module flexibly captures the diverse features of gas plumes and improves the overall network's perception of gas plume features.

- We integrate an enhanced C2f-WTConv module, based on wavelet convolution, into the neck of the YOLO network. This module strengthens the learning of gas plume features from deep feature maps, so that gas plume targets can still be identified accurately against complex backgrounds.
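
The first contribution combines background estimation with image synthesis, but this excerpt does not specify how the background is merged into each frame. The sketch below is one plausible reading and not the authors' pipeline. It assumes a per-pixel temporal median as the background estimator (a simple stand-in for the estimators compared in Section 4.1) and a hypothetical three-channel layout of (frame, background, absolute difference). Both function names are illustrative.

```python
import numpy as np


def estimate_background(frames: np.ndarray) -> np.ndarray:
    """Per-pixel temporal median of a (T, H, W) stack of grayscale IR frames.

    Stand-in estimator (assumption): a drifting plume covers any given pixel
    only briefly, so the median tends to recover the static scene.
    """
    return np.median(frames.astype(np.float32), axis=0)


def synthesize_color(frame: np.ndarray, background: np.ndarray) -> np.ndarray:
    """Stack frame, background and |frame - background| into an (H, W, 3) uint8 image.

    The channel layout is a hypothetical choice for illustration only.
    """
    f = frame.astype(np.float32)
    b = background.astype(np.float32)
    diff = np.abs(f - b)
    # Stretch the difference channel to the full 8-bit range so weak plumes stay visible.
    if diff.max() > 0:
        diff = diff * (255.0 / diff.max())
    out = np.stack([f, b, diff], axis=-1)
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)


# Usage on synthetic data: a static scene with a bright blob drifting across it.
rng = np.random.default_rng(0)
scene = rng.integers(60, 120, size=(32, 32)).astype(np.uint8)
frames = np.repeat(scene[None], 9, axis=0)
for t in range(9):
    frames[t, 10:14, 2 + 3 * t:6 + 3 * t] = 200  # hypothetical plume
background = estimate_background(frames)
rgb = synthesize_color(frames[4], background)
```

Because each pixel is covered by the blob in at most two of the nine frames, the median returns the clean scene. The difference channel then isolates the moving target, which is the kind of cue the contribution says is hard to learn from grayscale frames alone.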

2. Related Works

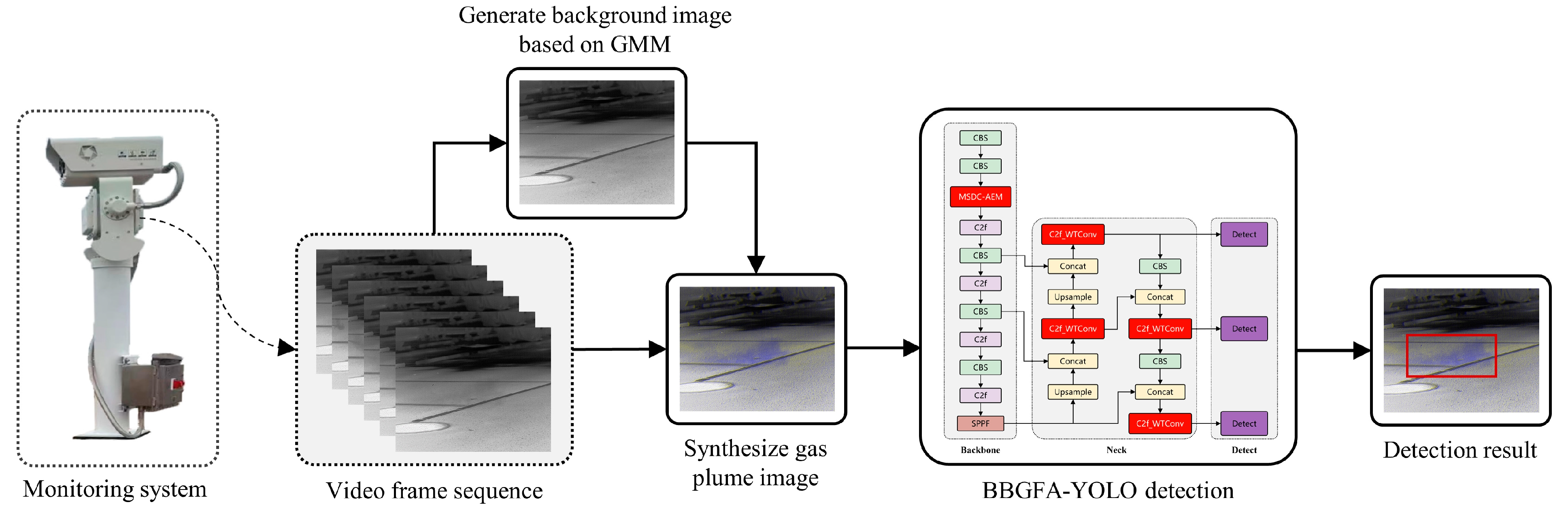

3. Methods

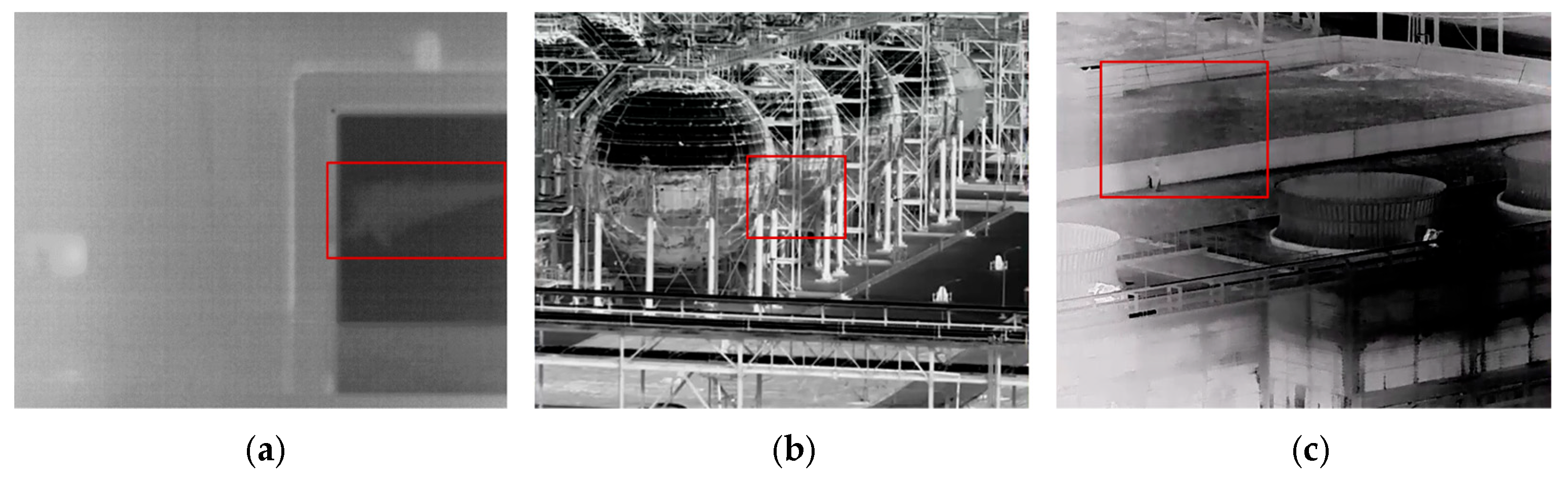

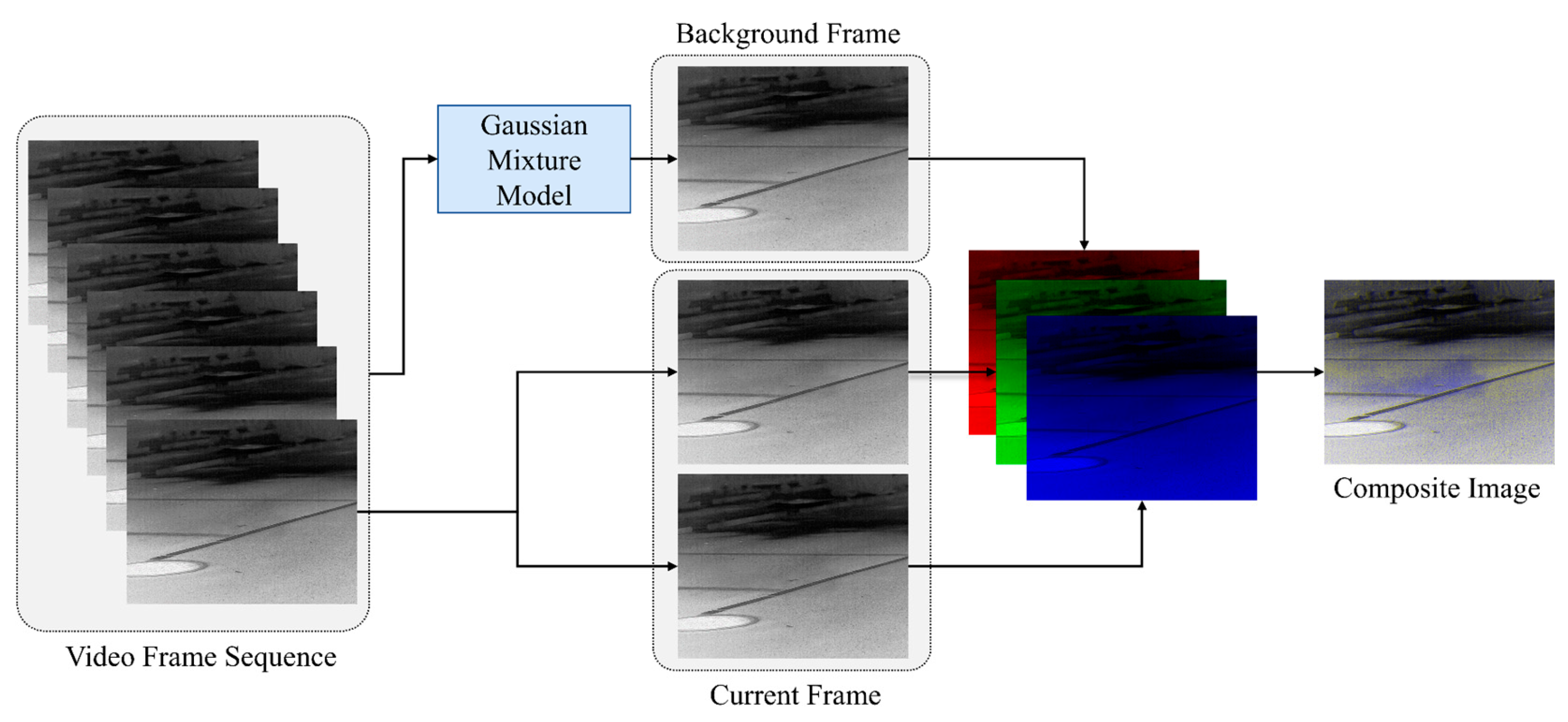

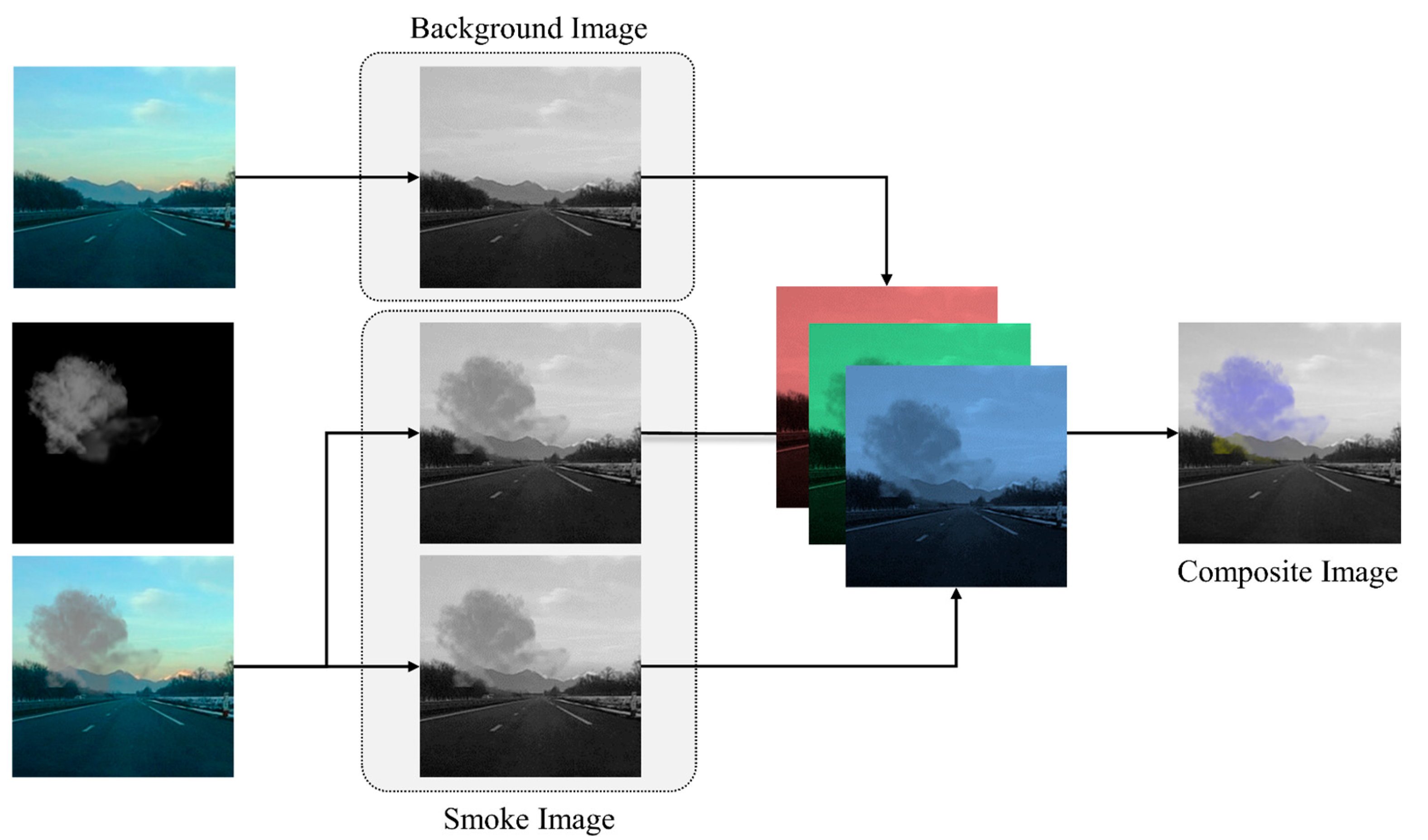

3.1. Synthesis of Gas Plume Images Based on Reference Backgrounds

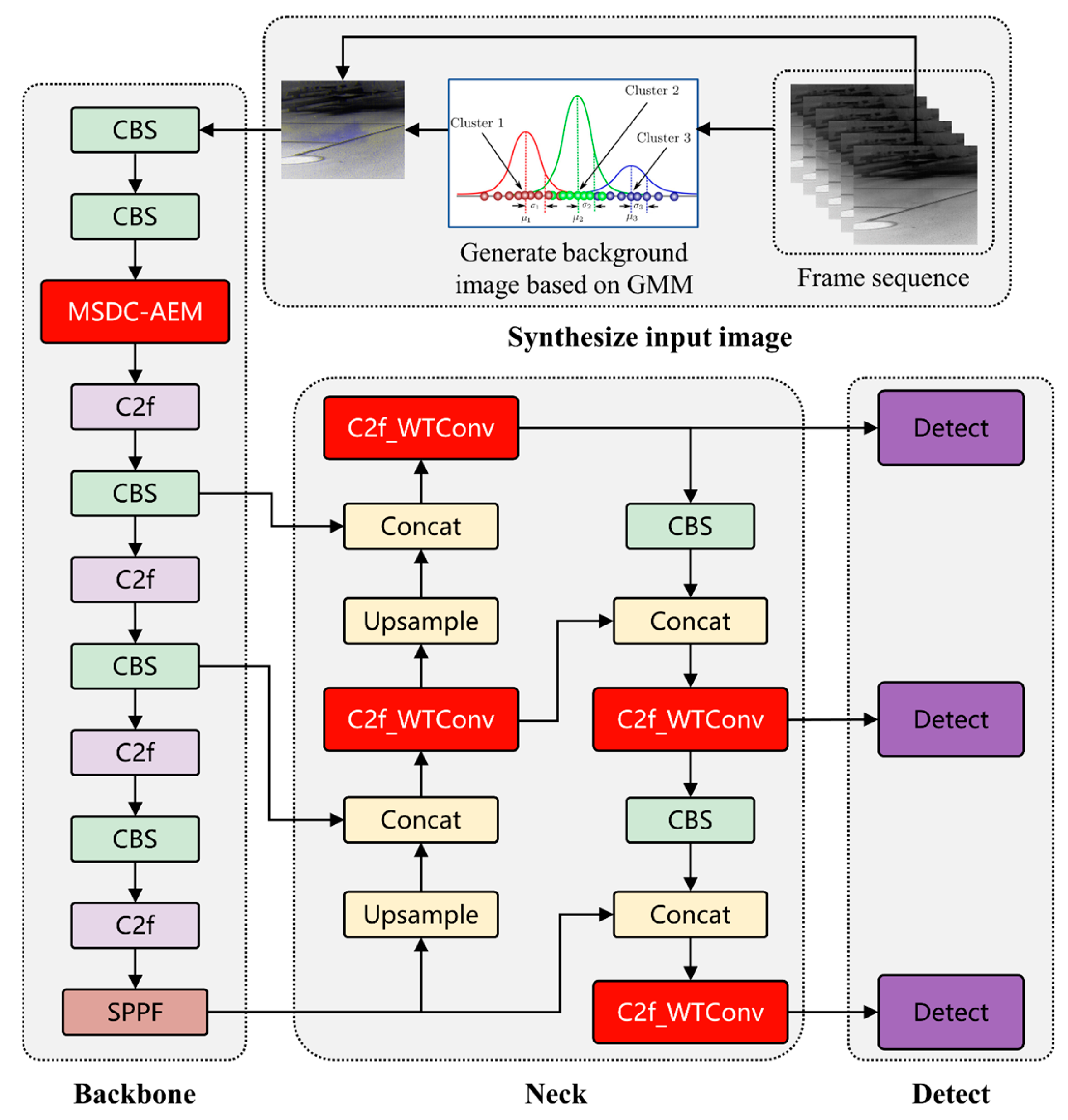

3.2. BBGFA-YOLO Network Architecture

3.3. Gas Feature Attention Enhancement Module, MSDC-AEM
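
MSDC-AEM is described as a multi-scale, deformable, large-kernel convolutional attention module, in the line of the deformable large-kernel attention of Azad et al. The NumPy sketch below shows only the large-kernel attention pattern. It sums depthwise (dilated) convolutions at several scales, mixes channels with a 1×1 convolution, and reweights the input element-wise. The branch kernel sizes, the summation of branches, and all function names are assumptions. Deformable sampling offsets are omitted; a framework implementation would typically use something like `torchvision.ops.DeformConv2d` for that part.

```python
import numpy as np


def depthwise_conv2d(x, kernels, dilation=1):
    """Depthwise 2-D cross-correlation with zero 'same' padding.

    x: (C, H, W) feature map; kernels: (C, k, k) with odd k, one kernel per channel.
    """
    _, h, w = x.shape
    k = kernels.shape[-1]
    pad = dilation * (k // 2)
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros(x.shape, dtype=np.float64)
    for i in range(k):
        for j in range(k):
            window = xp[:, i * dilation:i * dilation + h, j * dilation:j * dilation + w]
            out += kernels[:, i, j][:, None, None] * window
    return out


def multiscale_large_kernel_attention(x, branches, mix):
    """Reweight x by an attention map built from several large-kernel branches.

    branches: list of (kernels, dilation) pairs, one depthwise convolution per scale.
    mix: (C, C) weights of a 1x1 convolution that mixes channels.
    """
    agg = sum(depthwise_conv2d(x, k, d) for k, d in branches)
    attn = np.einsum("oc,chw->ohw", mix, agg)
    return attn * x  # element-wise reweighting of the input features


# Usage with random (untrained) weights on a 4-channel feature map.
rng = np.random.default_rng(1)
c = 4
x = rng.standard_normal((c, 16, 16))
branches = [
    (rng.standard_normal((c, 5, 5)) * 0.1, 1),  # local 5x5 context
    (rng.standard_normal((c, 7, 7)) * 0.1, 3),  # 7x7 kernel, dilation 3: 19x19 extent
]
y = multiscale_large_kernel_attention(x, branches, rng.standard_normal((c, c)) * 0.1)
```

Dilating a small depthwise kernel is what makes the receptive field large at low cost. A 7×7 kernel with dilation 3 spans 19×19 pixels with only 49 weights per channel, which suits diffuse plumes whose extent varies from frame to frame.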

3.4. C2f-WTConv Module Based on Deformable Convolution Improvement
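
The contributions describe C2f-WTConv as building on wavelet convolution (Finder et al.). The general idea is to decompose the input with a Haar wavelet transform, apply a small depthwise convolution to each subband, invert the transform, and add the result to an ordinary depthwise convolution. The sketch below implements a single decomposition level in NumPy. It omits the recursive multi-level decomposition and learnable scaling of the original WTConv, and it does not reproduce how the paper embeds the layer in C2f. Function names are illustrative.

```python
import numpy as np


def depthwise_conv2d(x, kernels):
    """Depthwise 'same' cross-correlation: x (C, H, W), kernels (C, k, k) with odd k."""
    _, h, w = x.shape
    k = kernels.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.zeros(x.shape)
    for i in range(k):
        for j in range(k):
            out += kernels[:, i, j][:, None, None] * xp[:, i:i + h, j:j + w]
    return out


def haar_dwt2(x):
    """Orthonormal one-level 2-D Haar transform: (C, H, W), even H and W -> (C, 4, H/2, W/2)."""
    a, b = x[:, 0::2, 0::2], x[:, 0::2, 1::2]
    c, d = x[:, 1::2, 0::2], x[:, 1::2, 1::2]
    ll, lh = (a + b + c + d) / 2, (a - b + c - d) / 2
    hl, hh = (a + b - c - d) / 2, (a - b - c + d) / 2
    return np.stack([ll, lh, hl, hh], axis=1)


def haar_idwt2(s):
    """Inverse of haar_dwt2: (C, 4, H/2, W/2) -> (C, H, W)."""
    ll, lh, hl, hh = s[:, 0], s[:, 1], s[:, 2], s[:, 3]
    c, h2, w2 = ll.shape
    x = np.empty((c, 2 * h2, 2 * w2))
    x[:, 0::2, 0::2] = (ll + lh + hl + hh) / 2
    x[:, 0::2, 1::2] = (ll - lh + hl - hh) / 2
    x[:, 1::2, 0::2] = (ll + lh - hl - hh) / 2
    x[:, 1::2, 1::2] = (ll - lh - hl + hh) / 2
    return x


def wavelet_conv(x, base_kernels, subband_kernels):
    """One-level wavelet convolution: base depthwise conv plus filtered wavelet subbands.

    x: (C, H, W) with even H, W; base_kernels: (C, k, k); subband_kernels: (C, 4, k, k).
    """
    c = x.shape[0]
    s = haar_dwt2(x)
    flat = s.reshape(c * 4, *s.shape[2:])
    filtered = depthwise_conv2d(flat, subband_kernels.reshape(c * 4, *subband_kernels.shape[2:]))
    return depthwise_conv2d(x, base_kernels) + haar_idwt2(filtered.reshape(s.shape))


rng = np.random.default_rng(2)
x = rng.standard_normal((2, 8, 8))
y = wavelet_conv(x, rng.standard_normal((2, 3, 3)) * 0.1, rng.standard_normal((2, 4, 3, 3)) * 0.1)
```

A 3×3 kernel applied at half resolution covers a 6×6 neighbourhood of the input. This is how wavelet convolution enlarges the receptive field without large kernels, while the separate low- and high-frequency subbands let the layer respond to both diffuse plume bodies and their edges.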

3.5. Pre-Training Method for Transfer Learning Based on Reference Background

4. Experiments

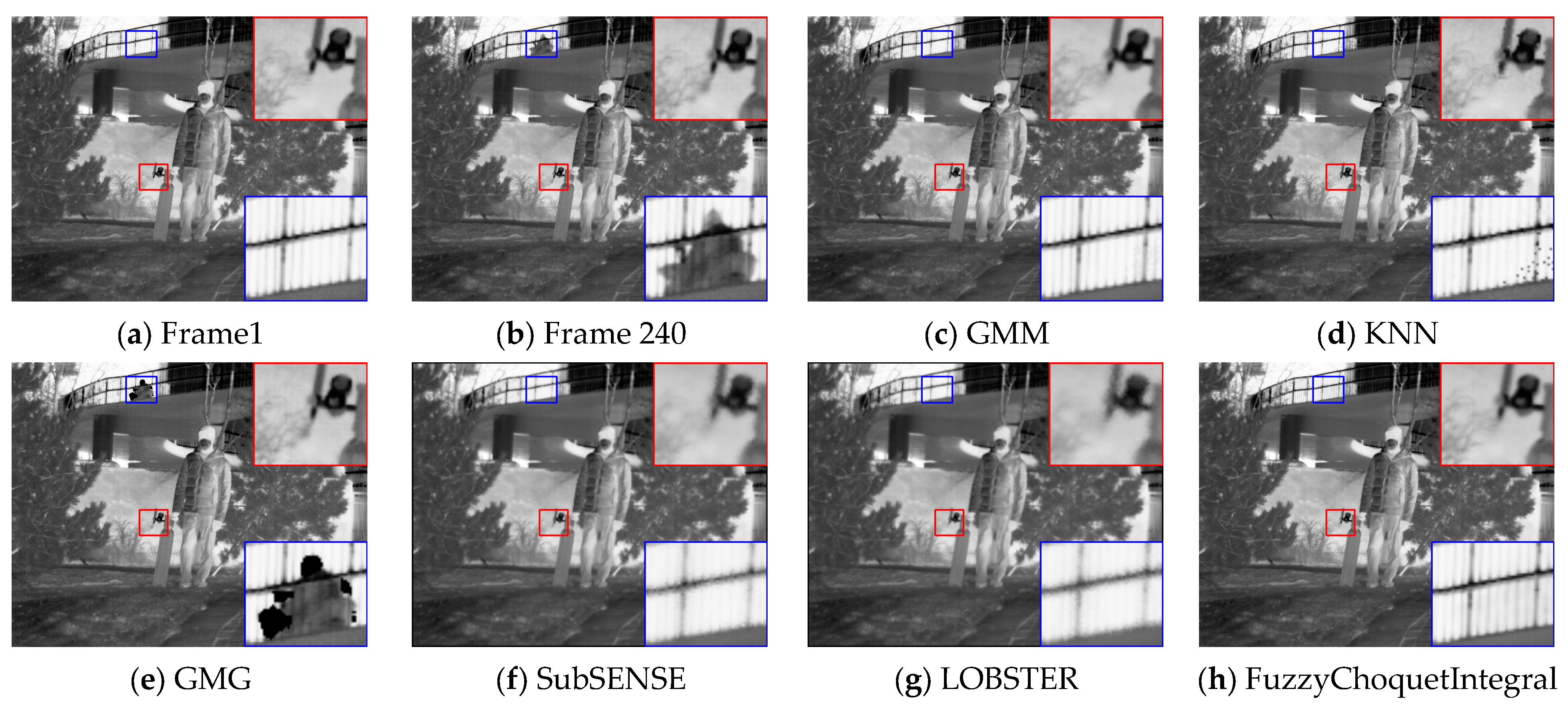

4.1. Comparative Experiments on Background Estimation Models

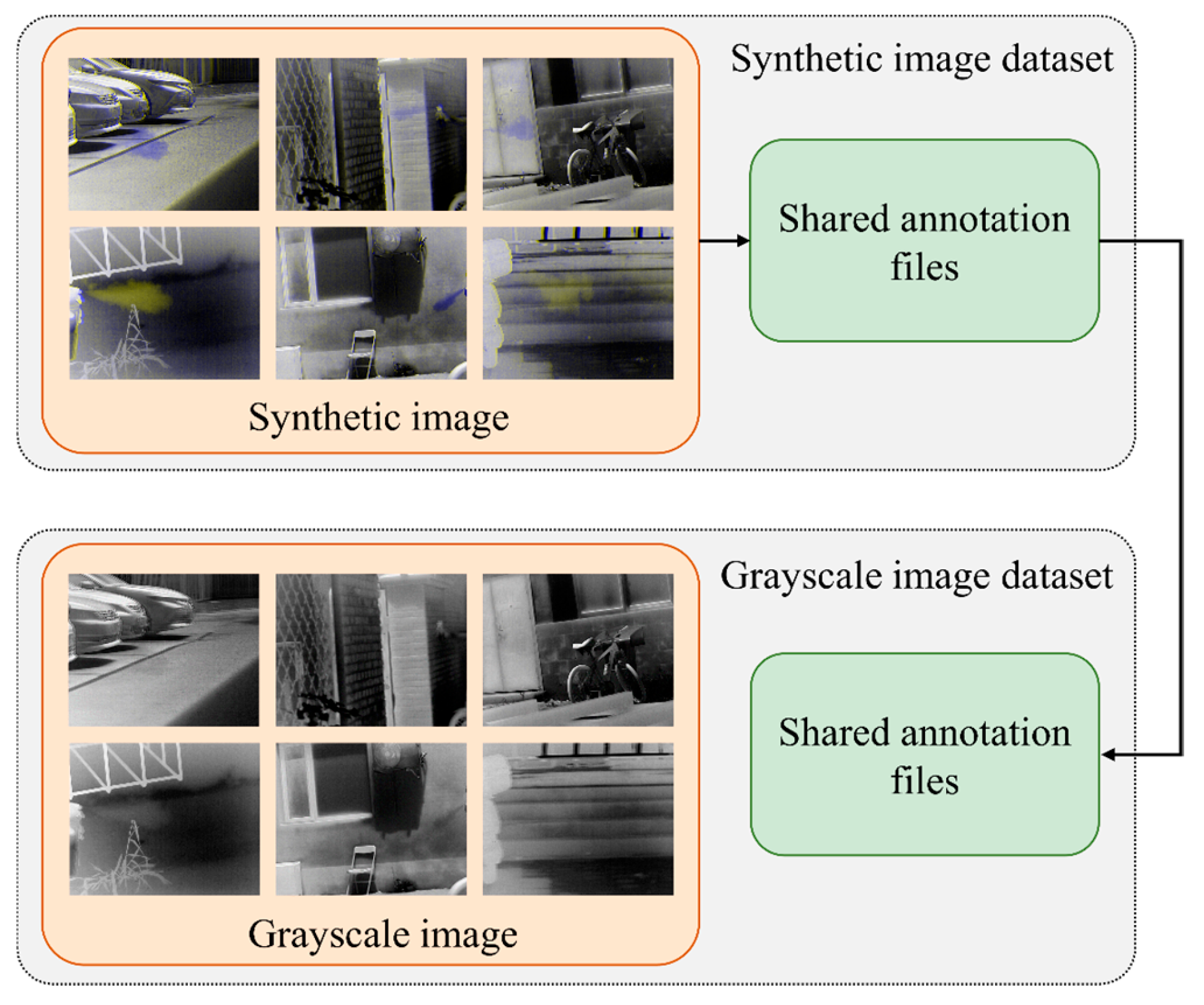
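
In the background-estimation comparison table, GMM has the lowest MSE and the highest PSNR and SSIM. For readers unfamiliar with the method, the following is a simplified per-pixel Gaussian mixture background model in the spirit of Stauffer and Grimson. It is not the implementation or parameter set used in the paper. The class name, the fixed learning rate, the variance floor, and the rule of taking the background from the single highest-ranked component (weight divided by standard deviation) are all assumptions made to keep the sketch short.

```python
import numpy as np


class PixelGMM:
    """Simplified per-pixel Gaussian mixture background model (after Stauffer and Grimson)."""

    def __init__(self, first_frame, k=3, alpha=0.05, init_var=225.0,
                 min_var=4.0, match_sigma=2.5):
        f = first_frame.astype(np.float64)
        self.alpha, self.init_var = alpha, init_var
        self.min_var, self.match_sigma = min_var, match_sigma
        self.mu = np.repeat(f[None], k, axis=0)       # (K, H, W) component means
        self.var = np.full((k,) + f.shape, init_var)  # (K, H, W) component variances
        self.w = np.zeros((k,) + f.shape)             # (K, H, W) component weights
        self.w[0] = 1.0

    def _rank(self):
        return self.w / np.sqrt(self.var)

    def update(self, frame):
        a = self.alpha
        x = frame.astype(np.float64)[None]
        comp = np.arange(self.w.shape[0])[:, None, None]
        matched = (x - self.mu) ** 2 < self.match_sigma ** 2 * self.var
        any_match = matched.any(axis=0)
        # Among matching components, update only the highest-ranked one.
        best = np.argmax(np.where(matched, self._rank(), -np.inf), axis=0)
        hit = (comp == best) & any_match
        self.w = (1 - a) * self.w + a * hit
        self.mu = np.where(hit, (1 - a) * self.mu + a * x, self.mu)
        new_var = (1 - a) * self.var + a * (x - self.mu) ** 2
        self.var = np.where(hit, np.maximum(new_var, self.min_var), self.var)
        # Pixels with no match: replace the lowest-ranked component with a new one.
        worst = np.argmin(self._rank(), axis=0)
        repl = (comp == worst) & ~any_match
        self.mu = np.where(repl, x, self.mu)
        self.var = np.where(repl, self.init_var, self.var)
        self.w = np.where(repl, a, self.w)
        self.w /= self.w.sum(axis=0, keepdims=True)

    def background(self):
        best = np.argmax(self._rank(), axis=0)
        return np.take_along_axis(self.mu, best[None], axis=0)[0]


# Usage: a static scene with a bright blob passing through during frames 1-12.
rng = np.random.default_rng(3)
scene = rng.integers(60, 120, size=(16, 16)).astype(np.float64)
model = PixelGMM(scene)
for t in range(1, 30):
    frame = scene.copy()
    if t <= 12:
        frame[6:10, t - 1:t + 3] = 200.0  # hypothetical plume drifting right
    model.update(frame)
    if t == 6:
        mid = model.background()
bg = model.background()
```

A transient plume spawns a low-weight, high-variance component at the pixels it crosses. The well-established background component keeps the highest rank, so the estimate stays on the clean scene even while the plume is present.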

4.2. Dataset Preparation

4.3. Training Configuration and Evaluation Indicators

4.4. Comparison with Classical Target Detection Models

4.5. Ablation Experiments

- I. Training with the gas plume grayscale image dataset;

- II. Training with the gas plume synthetic color image dataset;

- III. Adding the multi-scale gas attention enhancement module, MSDC-AEM;

- IV. Adding the refined feature extraction module, C2f-WTConv;

- V. Adding the synthetic color smoke dataset for transfer learning.

4.6. Visualization Experiments

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Li, J.; Wang, L.; Wang, M.; Gao, Y.; Jin, W. Gas Imaging Detectivity Model Combining Leakage Spot Size and Range. In Proceedings of the Thermosense: Thermal Infrared Applications XXXIV, Bellingham, WA, USA, 18 May 2012; SPIE: Bellingham, WA, USA; Volume 8354, pp. 360–371.

- Hinnrichs, M. Imaging Spectrometer for Fugitive Gas Leak Detection. In Proceedings of the Environmental Monitoring and Remediation Technologies II, Boston, MA, USA, 21 December 1999; SPIE: Bellingham, WA, USA; Volume 3853, pp. 152–161.

- Tan, Y.; Li, J.; Jin, W.; Wang, X. Model Analysis of the Sensitivity of Single-Point Sensor and IRFPA Detectors Used in Gas Leakage Detection. Infrared Laser Eng. 2014, 43, 2489–2495.

- Li, J.; Jin, W.; Wang, X.; Zhang, X. MRGC Performance Evaluation Model of Gas Leak Infrared Imaging Detection System. Opt. Express 2014, 22, A1701–A1712.

- Lu, Q.; Li, Q.; Hu, L.; Huang, L. An Effective Low-Contrast SF6 Gas Leakage Detection Method for Infrared Imaging. IEEE Trans. Instrum. Meas. 2021, 70, 5009009.

- Bhatt, R.; Gokhan Uzunbas, M.; Hoang, T.; Whiting, O.C. Segmentation of Low-Level Temporal Plume Patterns From IR Video. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

- Wang, J.; Tchapmi, L.P.; Ravikumar, A.P.; McGuire, M.; Bell, C.S.; Zimmerle, D.; Savarese, S.; Brandt, A.R. Machine Vision for Natural Gas Methane Emissions Detection Using an Infrared Camera. Appl. Energy 2020, 257, 113998.

- Badawi, D.; Pan, H.; Cetin, S.C.; Enis Cetin, A. Computationally Efficient Spatio-Temporal Dynamic Texture Recognition for Volatile Organic Compound (VOC) Leakage Detection in Industrial Plants. IEEE J. Sel. Top. Signal Process. 2020, 14, 676–687.

- Tan, J.; Cao, Y.; Wang, F.; Xia, X.; Xu, Z. VOCs Leakage Detection Based on Weak Temporal Attention Asymmetric 3D Convolution. In Proceedings of the 2022 International Conference on Advanced Robotics and Mechatronics (ICARM), Guilin, China, 9 July 2022; IEEE: Piscataway, NJ, USA; pp. 200–205.

- Shi, J.; Chang, Y.; Xu, C.; Khan, F.; Chen, G.; Li, C. Real-Time Leak Detection Using an Infrared Camera and Faster R-CNN Technique. Comput. Chem. Eng. 2020, 135, 106780.

- Lin, H.; Gu, X.; Hu, J.; Gu, X. Gas Leakage Segmentation in Industrial Plants. In Proceedings of the 2020 Chinese Automation Congress (CAC), Shanghai, China, 6 November 2020; IEEE: Piscataway, NJ, USA; pp. 1639–1644.

- Wang, J.; Ji, J.; Ravikumar, A.P.; Savarese, S.; Brandt, A.R. VideoGasNet: Deep Learning for Natural Gas Methane Leak Classification Using an Infrared Camera. Energy 2022, 238, 121516.

- Xu, S.; Wang, X.; Sun, Q.; Dong, K. MWIRGas-YOLO: Gas Leakage Detection Based on Mid-Wave Infrared Imaging. Sensors 2024, 24, 4345.

- Yao, J.; Xiong, Z.; Li, S.; Yu, Z.; Liu, Y. TSFF-Net: A Novel Lightweight Network for Video Real-Time Detection of SF6 Gas Leaks. Expert Syst. Appl. 2024, 247, 123219.

- Stauffer, C.; Grimson, W.E.L. Adaptive Background Mixture Models for Real-Time Tracking. In Proceedings of the 1999 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (Cat. No PR00149), Fort Collins, CO, USA, 23–25 June 1999; Volume 2, pp. 246–252.

- Azad, R.; Niggemeier, L.; Hüttemann, M.; Kazerouni, A.; Aghdam, E.K.; Velichko, Y.; Bagci, U.; Merhof, D. Beyond Self-Attention: Deformable Large Kernel Attention for Medical Image Segmentation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2024; pp. 1287–1297.

- Finder, S.E.; Amoyal, R.; Treister, E.; Freifeld, O. Wavelet Convolutions for Large Receptive Fields. In Proceedings of the Computer Vision—ECCV 2024, Milan, Italy, 29 September–4 October 2024; Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G., Eds.; Springer Nature Switzerland: Cham, Switzerland, 2025; pp. 363–380.

- Cheng, H.-Y.; Yin, J.-L.; Chen, B.-H.; Yu, Z.-M. Smoke 100k: A Database for Smoke Detection. In Proceedings of the 2019 IEEE 8th Global Conference on Consumer Electronics (GCCE), Osaka, Japan, 15–18 October 2019; pp. 596–597.

- Zivkovic, Z.; Van Der Heijden, F. Efficient Adaptive Density Estimation per Image Pixel for the Task of Background Subtraction. Pattern Recognit. Lett. 2006, 27, 773–780.

- Godbehere, A.B.; Matsukawa, A.; Goldberg, K. Visual Tracking of Human Visitors under Variable-Lighting Conditions for a Responsive Audio Art Installation. In Proceedings of the 2012 American Control Conference (ACC), Montreal, QC, Canada, 27–29 June 2012; IEEE: Piscataway, NJ, USA; pp. 4305–4312.

- St-Charles, P.-L.; Bilodeau, G.-A.; Bergevin, R. Flexible Background Subtraction with Self-Balanced Local Sensitivity. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition Workshops, Columbus, OH, USA, 23–28 June 2014; IEEE: Piscataway, NJ, USA; pp. 414–419.

- St-Charles, P.-L.; Bilodeau, G.-A. Improving Background Subtraction Using Local Binary Similarity Patterns. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision, Steamboat Springs, CO, USA, 24–26 March 2014; IEEE: Piscataway, NJ, USA; pp. 509–515.

- El Baf, F.; Bouwmans, T.; Vachon, B. Fuzzy Integral for Moving Object Detection. In Proceedings of the 2008 IEEE International Conference on Fuzzy Systems (IEEE World Congress on Computational Intelligence), Hong Kong, China, 1–6 June 2008; pp. 1729–1736.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Zhang, Y.; Guo, Z.; Wu, J.; Tian, Y.; Tang, H.; Guo, X. Real-Time Vehicle Detection Based on Improved YOLO V5. Sustainability 2022, 14, 12274.

- YOLOv8: State-of-the-Art Computer Vision Model. Available online: https://yolov8.com/ (accessed on 7 March 2025).

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.

- Bin, J.; Bahrami, Z.; Rahman, C.A.; Du, S.; Rogers, S.; Liu, Z. Foreground Fusion-Based Liquefied Natural Gas Leak Detection Framework From Surveillance Thermal Imaging. IEEE Trans. Emerg. Top. Comput. Intell. 2023, 7, 1151–1162.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations From Deep Networks via Gradient-Based Localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

| Method | MSE ↓ | PSNR (dB) ↑ | SSIM ↑ | Time (ms) ↓ |
|---|---|---|---|---|
| GMM | 51.85 | 31.48 | 0.941 | 12.2 / 0.53 (GPU) |
| KNN | 206.05 | 28.79 | 0.870 | 13.8 |
| GMG | 154.00 | 28.80 | 0.876 | 12.1 |
| SubSENSE | 241.46 | 24.30 | 0.873 | 116.1 |
| LOBSTER | 230.76 | 24.50 | 0.856 | 81.6 |
| FuzzyChoquetIntegral | 241.45 | 24.31 | 0.873 | 61.4 |
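
The table above scores each background estimate with MSE, PSNR and SSIM. The excerpt does not state the peak value, the SSIM window, or how scores are averaged over images, so the sketch below assumes 8-bit images (peak 255) and evaluates SSIM as a single global window rather than the usual sliding Gaussian window. It works on flat pixel sequences to stay dependency-free.

```python
import math


def mse(a, b):
    """Mean squared error of two equally sized images given as flat sequences."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def psnr(a, b, peak=255.0):
    """Peak signal-to-noise ratio in dB; peak=255 assumes 8-bit images."""
    m = mse(a, b)
    return math.inf if m == 0 else 10.0 * math.log10(peak ** 2 / m)


def global_ssim(a, b, peak=255.0):
    """SSIM evaluated over the whole image as one window (no sliding Gaussian window)."""
    n = len(a)
    mu_a, mu_b = sum(a) / n, sum(b) / n
    var_a = sum((x - mu_a) ** 2 for x in a) / n
    var_b = sum((y - mu_b) ** 2 for y in b) / n
    cov = sum((x - mu_a) * (y - mu_b) for x, y in zip(a, b)) / n
    c1, c2 = (0.01 * peak) ** 2, (0.03 * peak) ** 2
    return ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2))


# Averaging per-image PSNR is not the same as the PSNR of the averaged MSE.
pairs = [([0, 0, 0, 0], [1, 1, 1, 1]), ([0, 0, 0, 0], [10, 10, 10, 10])]
mean_of_psnr = sum(psnr(x, y) for x, y in pairs) / len(pairs)
psnr_of_mean_mse = 10.0 * math.log10(255.0 ** 2 / (sum(mse(x, y) for x, y in pairs) / len(pairs)))
```

If PSNR is averaged per image, the result is at least as large as the PSNR computed from the averaged MSE (Jensen's inequality). The MSE and PSNR columns therefore need not satisfy PSNR = 10·log10(255²/MSE) exactly.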

| Network | Dataset | AP50 (%) ↑ | AP50-95 (%) ↑ | FPR (%) ↓ | FNR (%) ↓ | Parameters (M) | GFLOPs |
|---|---|---|---|---|---|---|---|
| RetinaNet | Grayscale | 74.6 | 40.1 | 9.03 | 21.7 | 37.97 | 61.26 |
| EfficientDet | Grayscale | 80.2 | 46.8 | 6.74 | 19.8 | 3.83 | 4.27 |
| YOLOv5(s) | Grayscale | 69.7 | 41.4 | 8.39 | 29.2 | 9.12 | 24.0 |
| YOLOv8(s) | Grayscale | 67.5 | 42.7 | 7.74 | 30.5 | 11.13 | 28.4 |
| YOLOv8(m) | Grayscale | 66.4 | 42.7 | 5.16 | 34.6 | 25.86 | 79.1 |
| YOLOv10(s) | Grayscale | 71.9 | 44.7 | 5.16 | 28.6 | 8.04 | 24.4 |
| OGI Faster R-CNN | Grayscale | 77.8 | 39.3 | 6.71 | 19.8 | 46.95 | 147.61 |
| BBGFA-YOLO | Grayscale | 74.2 | 45.4 | 7.15 | 28.5 | 10.47 | 52.3 |
| RetinaNet | Synthetic | 79.1 | 42.6 | 8.39 | 9.9 | 37.97 | 61.26 |
| EfficientDet | Synthetic | 92.0 | 58.1 | 3.45 | 12.3 | 3.83 | 4.27 |
| YOLOv5(s) | Synthetic | 94.2 | 62.8 | 2.58 | 11.1 | 9.12 | 24.0 |
| YOLOv8(s) | Synthetic | 94.3 | 62.5 | 1.94 | 10.5 | 11.13 | 28.4 |
| YOLOv8(m) | Synthetic | 94.2 | 62.7 | 1.29 | 12.3 | 25.96 | 79.1 |
| YOLOv10(s) | Synthetic | 92.0 | 60.1 | 3.22 | 13.7 | 8.04 | 24.4 |
| OGI Faster R-CNN | Synthetic | 88.6 | 53.2 | 4.10 | 12.7 | 46.95 | 147.61 |
| TSFF-Net | Fusion | 90.5 | 53.6 | 3.22 | 13.4 | 2.67 | 8.7 |
| FFBGD | Fusion | 90.2 | 54.3 | 3.89 | 14.8 | 55.19 | 109.16 |
| BBGFA-YOLO | Synthetic | 96.1 | 64.7 | 0.65 | 8.6 | 10.47 | 52.3 |
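
AP50 in the table above is average precision with a detection counted as correct when its IoU with an unmatched ground-truth box is at least 0.5. AP50-95 averages AP over IoU thresholds 0.50, 0.55, ..., 0.95. The sketch below computes both for a single class using greedy matching and all-point interpolation. It is a generic illustration with hypothetical function names. COCO's official evaluator samples precision at 101 recall points, and the excerpt does not say which evaluator the paper used, so exact numbers can differ slightly.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds, gts, iou_thr=0.5):
    """Single-class AP with all-point interpolation.

    preds: list of (image_id, score, box); gts: dict image_id -> list of boxes.
    """
    n_gt = sum(len(boxes) for boxes in gts.values())
    used = {img: [False] * len(boxes) for img, boxes in gts.items()}
    precisions, recalls, tp = [], [], 0
    for rank, (img, _, box) in enumerate(sorted(preds, key=lambda p: -p[1]), start=1):
        # Match predictions in descending score order to their best-overlapping ground truth.
        overlaps = [iou(box, g) for g in gts.get(img, [])]
        j = max(range(len(overlaps)), key=overlaps.__getitem__, default=-1)
        if j >= 0 and overlaps[j] >= iou_thr and not used[img][j]:
            used[img][j] = True
            tp += 1
        precisions.append(tp / rank)
        recalls.append(tp / n_gt)
    ap, prev_recall = 0.0, 0.0
    for i, r in enumerate(recalls):
        # Interpolated precision: the best precision at this or any higher recall.
        ap += (r - prev_recall) * max(precisions[i:])
        prev_recall = r
    return ap


def ap50_95(preds, gts):
    """Mean AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [0.5 + 0.05 * i for i in range(10)]
    return sum(average_precision(preds, gts, t) for t in thresholds) / len(thresholds)


gts = {"a": [(0, 0, 10, 10)], "b": [(0, 0, 10, 10)]}
preds = [("a", 0.9, (0, 0, 10, 10)), ("b", 0.8, (20, 20, 30, 30)), ("b", 0.7, (1, 1, 10, 10))]
```

In this toy example, the loosely placed third box (IoU 0.81) counts as a hit up to the 0.80 threshold but as a false positive above it. This is why AP50-95 rewards tight localization much more than AP50 does.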

| Network | I | II | III | IV | V | AP50 (%) | AP50-95 (%) | GFLOPs |
|---|---|---|---|---|---|---|---|---|
| BBGFA-YOLO | √ | | | | | 67.5 | 42.4 | 28.4 |
| | | √ | | | | 94.3 | 62.5 | 28.4 |
| | | √ | √ | | | 95.6 | 62.4 | 25.1 |
| | | √ | √ | √ | | 96.1 | 64.7 | 52.3 |
| | | √ | √ | √ | √ | 96.2 | 64.6 | 52.3 |
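
The Parameters and GFLOPs columns count learned weights and arithmetic work. For a convolution, both follow directly from the layer shape, and grouped (for example depthwise) convolutions divide them by the number of groups. The helper below is a hypothetical illustration of that arithmetic, not a profile of BBGFA-YOLO. Counting conventions differ between tools: some report multiply-accumulates (MACs), others report FLOPs as roughly 2 × MACs. The excerpt does not state which convention the tables follow.

```python
def conv2d_cost(c_in, c_out, k, h_out, w_out, groups=1, bias=False):
    """Return (parameters, multiply-accumulates) of one k x k 2-D convolution layer."""
    weights = c_out * (c_in // groups) * k * k
    params = weights + (c_out if bias else 0)
    macs = weights * h_out * w_out  # every weight is applied once per output position
    return params, macs


# Hypothetical layer: 128 -> 128 channels, 3x3 kernel, 80x80 output map.
std_params, std_macs = conv2d_cost(128, 128, 3, 80, 80)
dw_params, dw_macs = conv2d_cost(128, 128, 3, 80, 80, groups=128)
std_gflops = 2 * std_macs / 1e9  # counting one MAC as two FLOPs
```

Here the depthwise variant needs 128 times fewer weights and operations than the standard convolution (1,152 versus 147,456 parameters). This is the usual reason depthwise-based modules can widen the receptive field without a matching increase in cost.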

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, M.; Sheng, D.; Yuan, P.; Jin, W.; Li, L. Infrared Imaging Detection for Hazardous Gas Leakage Using Background Information and Improved YOLO Networks. Remote Sens. 2025, 17, 1030. https://doi.org/10.3390/rs17061030

Wang M, Sheng D, Yuan P, Jin W, Li L. Infrared Imaging Detection for Hazardous Gas Leakage Using Background Information and Improved YOLO Networks. Remote Sensing. 2025; 17(6):1030. https://doi.org/10.3390/rs17061030

Chicago/Turabian Style: Wang, Minghe, Dian Sheng, Pan Yuan, Weiqi Jin, and Li Li. 2025. "Infrared Imaging Detection for Hazardous Gas Leakage Using Background Information and Improved YOLO Networks" Remote Sensing 17, no. 6: 1030. https://doi.org/10.3390/rs17061030

APA Style: Wang, M., Sheng, D., Yuan, P., Jin, W., & Li, L. (2025). Infrared Imaging Detection for Hazardous Gas Leakage Using Background Information and Improved YOLO Networks. Remote Sensing, 17(6), 1030. https://doi.org/10.3390/rs17061030