Shiftable Leading Point Method for High Accuracy Registration of Airborne and Terrestrial LiDAR Data

Abstract

1. Introduction

2. Related Work

2.1. Review on Registration of LiDAR Data from the Same Platform

2.1.1. Registration of Multi-Scan Terrestrial LiDAR Data

2.1.2. Registration of Airborne LiDAR Strips

2.2. Review of the Registration of Airborne and Terrestrial LiDAR Data

3. Method

3.1. Extraction of Building Corners from Airborne and Terrestrial LiDAR Data

3.2. Initial Matching of Terrestrial and Airborne Corners

- (1) Select three points from each of the point sets A and B. Then compute the rotation matrix R and translation matrix T with the six-parameter model (three rotations and three translations).

- (2) Transform all points in B using R and T to obtain C = {Ci, i = 0, 1, 2, …, v}. For each point Ai in A, find its closest point Cclosest in C. If the distance from Ai to Cclosest is smaller than a predefined distance threshold, the two points are considered matched. If Cclosest is the closest point for two points A1 and A2 in A, compare the distances A1Cclosest and A2Cclosest, and accept only the pair with the smaller distance as a match. Record the matched point pairs under this transformation as MA = {MAi, i = 1, 2, …, n} and MB = {MBi, i = 1, 2, …, n}.

- (3) Repeat steps (1) and (2), and keep the transformations Ri and Ti that yield the greatest number of matched pairs.

- (4) For each candidate pair Ri and Ti, calculate the distances between corresponding elements of MA and MB. The transformation with the smallest distance is regarded as the best.

- (5) The initial matching of corners is obtained after all points in B have been transformed with the best transformation, R1 and T1 (Figure 2; green circles are terrestrial corners, and black triangles are airborne corners).
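Steps (1)–(5) can be sketched in Python with NumPy as follows. This is not the authors' implementation, and several choices are assumptions. The six-parameter model is solved as a least-squares rigid fit via SVD (the Kabsch/Arun solution). Every triplet hypothesis is enumerated exhaustively, whereas a RANSAC-style random sample would be needed for larger corner sets. The distance in step (4) is taken as the summed distance of the matched pairs after transformation. The names `rigid_fit`, `match_pairs` and `initial_matching`, and the default `dist_thresh` of 0.5, are hypothetical.

```python
import itertools

import numpy as np


def rigid_fit(src, dst):
    """Least-squares rigid transform (SVD / Kabsch): dst ≈ src @ R.T + T."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    W, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ W.T))  # guard against returning a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ W.T
    return R, dst_c - R @ src_c


def match_pairs(A, C, dist_thresh):
    """Step (2): one-to-one closest-point matches between A and the transformed set C."""
    best = {}  # index in C -> (distance, index in A)
    for i, a in enumerate(A):
        dist = np.linalg.norm(C - a, axis=1)
        j = int(np.argmin(dist))
        # keep the pair only if it beats the threshold and any earlier claim on C[j]
        if dist[j] < dist_thresh and (j not in best or dist[j] < best[j][0]):
            best[j] = (dist[j], i)
    return sorted((i, j, dist) for j, (dist, i) in best.items())


def initial_matching(A, B, dist_thresh=0.5):
    """Steps (1)-(5): most matched pairs wins; ties broken by smallest summed distance."""
    best_key, best = None, None
    for ia in itertools.combinations(range(len(A)), 3):
        for ib in itertools.permutations(range(len(B)), 3):
            R, T = rigid_fit(B[list(ib)], A[list(ia)])  # fit from three point pairs
            pairs = match_pairs(A, B @ R.T + T, dist_thresh)
            key = (len(pairs), -sum(p[2] for p in pairs))
            if best_key is None or key > best_key:
                best_key, best = key, (R, T, pairs)
    return best


# Toy usage: the same five corners expressed in two frames.
rng = np.random.default_rng(0)
A = rng.uniform(0.0, 50.0, size=(5, 3))
a = np.deg2rad(10.0)
Rz = np.array([[np.cos(a), -np.sin(a), 0.0], [np.sin(a), np.cos(a), 0.0], [0.0, 0.0, 1.0]])
t = np.array([5.0, -3.0, 1.0])
B = (A - t) @ Rz  # so that A = B @ Rz.T + t
R, T, pairs = initial_matching(A, B)
```

The exhaustive loops grow roughly with the cube of each set's size, so they are only practical for small corner sets like this toy example.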

3.3. Shiftable Leading Point Method for Improvement of the Geometric Accuracy of Registration

- (1) Register P and U using the least squares algorithm to obtain a rotation matrix R and a translation matrix T. Use them to transform the airborne corners P into Q = {Qi, i = 0, 1, 2, …, n}. No leading point is shifted in the first iteration.

- (2) Calculate the three-dimensional spatial distance between each pair of conjugate points in the transformed point set Q and its corresponding point set U. This gives a one-dimensional distance matrix D = {D(Ui,Qi), i = 0, 1, 2, …, n}. Calculate the overall position error of the conjugate points from D. The iteration is stopped if $Err_{\mathrm{current}} > thresh \times Err_{\mathrm{pre}}$, where $Err_{\mathrm{current}}$ is the current position error, $thresh$ is a threshold, and $Err_{\mathrm{pre}}$ is the position error of the previous iteration.

- (3) Find the maximum distance in D and its corresponding points Umax and Qmax in point sets U and Q, respectively. Shift the leading point Qmax to the corresponding terrestrial corner Umax, and update point set P accordingly as P = {P1, P2, …, Pmax, …, Pn}.

- (4) Repeat steps (1)–(3) until the stopping criterion in step (2) is met. The final transformation matrix is then used to register the airborne and terrestrial LiDAR points, which completes the registration procedure.
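The iteration in steps (1)–(4) can be sketched as below. The text leaves several details open, so the following are assumptions:

- The overall position error is the RMSE of D.
- The least-squares registration is an SVD rigid fit.
- The shifted point Pmax is obtained by mapping Umax back through the current transform, so that Qmax coincides with Umax.
- The transform computed in the last iteration is returned.

`shiftable_leading_point`, `thresh=0.95` (stop once an iteration lowers the error by less than 5%) and `max_iter` are hypothetical names and values.

```python
import numpy as np


def rigid_fit(src, dst):
    """Least-squares rigid fit (SVD / Kabsch): returns R, T with dst ≈ src @ R.T + T."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    W, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ W.T))  # avoid returning a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ W.T
    return R, dst_c - R @ src_c


def shiftable_leading_point(P, U, thresh=0.95, max_iter=100):
    """P: matched airborne corners (n x 3); U: conjugate terrestrial corners (n x 3)."""
    P = np.array(P, dtype=float)  # working copy: leading points are shifted in place
    U = np.asarray(U, dtype=float)
    err_pre = None
    for _ in range(max_iter):
        R, T = rigid_fit(P, U)                # step (1): least-squares registration
        Q = P @ R.T + T                       # transformed airborne corners
        D = np.linalg.norm(U - Q, axis=1)     # step (2): distances D(Ui, Qi)
        err_cur = np.sqrt(np.mean(D ** 2))    # ASSUMPTION: overall error taken as RMSE
        if err_pre is not None and err_cur > thresh * err_pre:
            break                             # stopping criterion of step (2)
        err_pre = err_cur
        k = int(np.argmax(D))                 # step (3): leading point Qmax / Umax
        # ASSUMPTION: Qmax is moved onto Umax and mapped back into P's frame
        P[k] = R.T @ (U[k] - T)
    return R, T                               # transform from the final iteration


# Synthetic check: one airborne corner carries a 3 m error.
rng = np.random.default_rng(1)
U = rng.uniform(0.0, 40.0, size=(6, 3))
a = np.deg2rad(5.0)
Rz = np.array([[np.cos(a), -np.sin(a), 0.0], [np.sin(a), np.cos(a), 0.0], [0.0, 0.0, 1.0]])
t = np.array([2.0, -1.0, 0.5])
P_true = (U - t) @ Rz                         # so that U = P_true @ Rz.T + t
P_obs = P_true + rng.normal(0.0, 0.01, P_true.shape)
P_obs[0] += [3.0, 0.0, 0.0]

R0, T0 = rigid_fit(P_obs, U)                  # single least-squares fit
R1, T1 = shiftable_leading_point(P_obs, U)
err_plain = np.linalg.norm(P_true @ R0.T + T0 - U, axis=1).mean()
err_slp = np.linalg.norm(P_true @ R1.T + T1 - U, axis=1).mean()
```

In this synthetic example, a single least-squares fit spreads the 3 m error over all corners. Repeatedly shifting the leading point should let the fit settle on the remaining, accurate corners.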

4. Experiment and Analysis

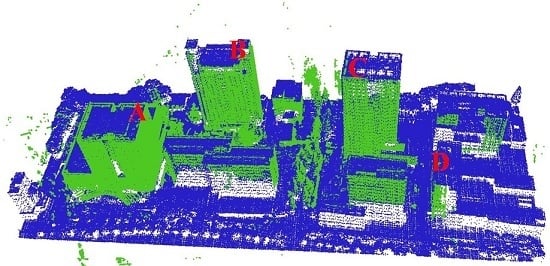

4.1. Experimental Data

4.2. Evaluation of Building Contour and Corner Extraction

| | Actual Number | Correct Number | Incorrect Number | Missing Number | Correctness | Completeness |
|---|---|---|---|---|---|---|
| Airborne contours | 99 | 79 | 4 | 20 | 95.2% | 79.8% |
| Terrestrial contours | 36 | 33 | 0 | 3 | 100% | 91.7% |
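The derived columns of the contour table can be reproduced from the counts using these definitions:

- correctness = correct / (correct + incorrect)
- completeness = correct / actual
- missing = actual - correct

These definitions are inferred from the reported values rather than stated in this section. `contour_metrics` is a hypothetical helper.

```python
def contour_metrics(actual, correct, incorrect):
    """Return (missing, correctness, completeness) for one row of the contour table."""
    missing = actual - correct
    correctness = correct / (correct + incorrect)
    completeness = correct / actual
    return missing, correctness, completeness


rows = [("Airborne contours", 99, 79, 4), ("Terrestrial contours", 36, 33, 0)]
for name, actual, correct, incorrect in rows:
    missing, corr, comp = contour_metrics(actual, correct, incorrect)
    print(f"{name}: missing={missing}, correctness={corr:.1%}, completeness={comp:.1%}")
# Airborne contours: missing=20, correctness=95.2%, completeness=79.8%
# Terrestrial contours: missing=3, correctness=100.0%, completeness=91.7%
```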

| | Average Error (m) | Max Error (m) | RMSE (m) |
|---|---|---|---|
| Airborne corners | 0.91 | 2.15 | 1.08 |
| Terrestrial corners | 0.13 | 0.19 | 0.14 |
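The Average Error, Max Error and RMSE columns can be computed from per-corner position errors, given the following assumptions:

- Each error is the 3D Euclidean distance between an extracted corner and its reference position. The section does not say how the reference positions were obtained.
- RMSE is the square root of the mean squared distance.

`error_summary` is a hypothetical helper.

```python
import numpy as np


def error_summary(est, ref):
    """Average, maximum and RMSE of the 3D distances between est[i] and ref[i]."""
    d = np.linalg.norm(np.asarray(est, dtype=float) - np.asarray(ref, dtype=float), axis=1)
    return float(d.mean()), float(d.max()), float(np.sqrt(np.mean(d ** 2)))


avg, mx, rmse = error_summary([[0.0, 0.0, 0.0], [3.0, 4.0, 0.0]], [[0.0, 0.0, 0.0]] * 2)
# distances are 0 and 5, so avg = 2.5, mx = 5.0, rmse = sqrt(12.5) ≈ 3.536
```

With this definition, the RMSE can never be smaller than the average error, because the quadratic mean of non-negative values is at least their arithmetic mean.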

4.3. Change of Error between Leading and Terrestrial Point Pairs during Iterations

| | Average Error (m) | Max Error (m) | RMSE (m) |
|---|---|---|---|
| FC | 0.93 | 1.94 | 1.06 |
| RC | 0.61 | 1.30 | 0.41 |
| SC | 0.31 | 0.51 | 0.34 |

4.4. Evaluation of Geometric Accuracy of LiDAR Data Registration

4.4.1. Visual Check

4.4.2. Evaluation with Common Sections

4.4.3. Quantitative Analysis using Common Building Contours

| | Transect Distance Average (m) | Transect Distance Max (m) | Transect Distance RMSE (m) | Angle Average (°) | Angle Max (°) | Angle RMSE (°) |
|---|---|---|---|---|---|---|
| FC | 0.81 | 1.73 | 0.95 | 0.75 | 2.80 | 0.95 |
| RC | 0.49 | 0.96 | 0.38 | 0.71 | 1.89 | 0.60 |
| SC | 0.31 | 0.89 | 0.37 | 0.44 | 1.30 | 0.53 |

4.4.4. Quantitative Analysis using Common Ground Points

| | Average | Max | RMSE |
|---|---|---|---|
| FC | 0.51 | 0.82 | 0.56 |
| RC | 0.43 | 0.62 | 0.38 |
| SC | 0.26 | 0.46 | 0.30 |

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).


Cheng, L.; Tong, L.; Wu, Y.; Chen, Y.; Li, M. Shiftable Leading Point Method for High Accuracy Registration of Airborne and Terrestrial LiDAR Data. Remote Sens. 2015, 7, 1915-1936. https://doi.org/10.3390/rs70201915
