Fusion of Airborne Discrete-Return LiDAR and Hyperspectral Data for Land Cover Classification

Abstract

1. Introduction

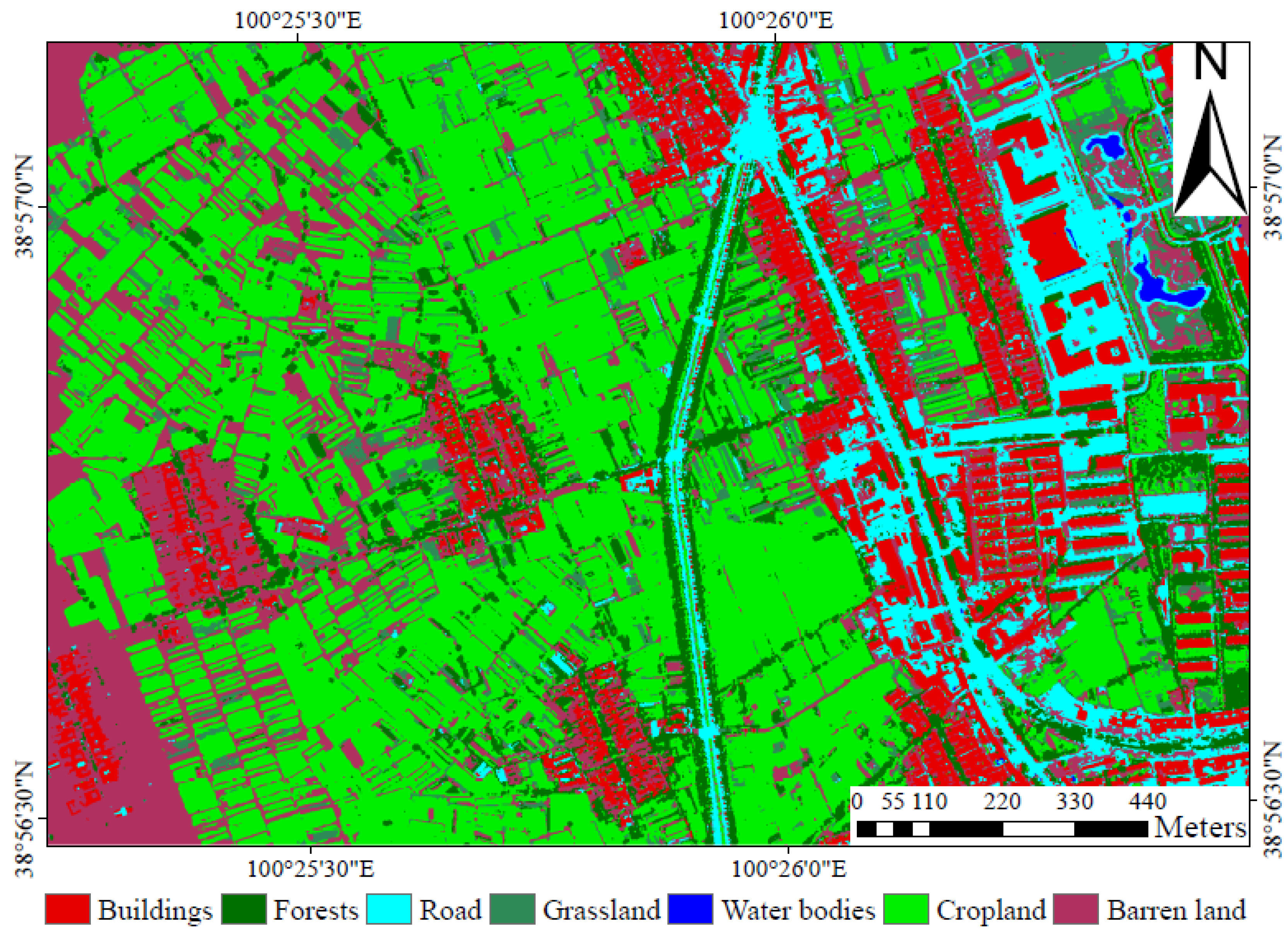

2. Study Areas and Data

2.1. Study Areas

2.2. Field Measurements

2.3. Remotely Sensed Data Acquisition and Processing

2.3.1. Hyperspectral Data

| Parameter | Specification |
|---|---|
| Flight height | 2000 m |
| Swath width | 1500 m |
| Number of spectral bands | 48 |
| Spatial resolution | 1.0 m |
| Spectral resolution | 7.2 nm |
| Field of view | 40° |
| Wavelength range | 380–1050 nm |

2.3.2. LiDAR Data

3. Methodology

3.1. Fusion of the LiDAR and CASI Data
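
The results table compares two fusion strategies, PCA and layer stacking. The sketch below illustrates both on synthetic arrays, under stated assumptions: the rasters are already co-registered on a common grid, the two LiDAR layers (for example height and intensity) are hypothetical placeholders, and the function names `layer_stack` and `pca_fuse` are illustrative rather than taken from the study. It is a minimal NumPy illustration of the general techniques, not the authors' processing chain.

```python
import numpy as np

def layer_stack(casi, lidar):
    """Concatenate co-registered rasters along the band axis.

    casi:  array of shape (rows, cols, n_spectral_bands)
    lidar: array of shape (rows, cols, n_lidar_layers)
    """
    if casi.shape[:2] != lidar.shape[:2]:
        raise ValueError("rasters must share the same grid")
    return np.concatenate([casi, lidar], axis=2)

def pca_fuse(stacked, n_components):
    """Project the stacked bands onto their leading principal components."""
    rows, cols, bands = stacked.shape
    pixels = stacked.reshape(-1, bands).astype(float)
    # Standardize so bands with different units contribute comparably.
    std = pixels.std(axis=0)
    std[std == 0] = 1.0
    z = (pixels - pixels.mean(axis=0)) / std
    # Eigen-decomposition of the band covariance matrix.
    eigvals, eigvecs = np.linalg.eigh(np.cov(z, rowvar=False))
    order = np.argsort(eigvals)[::-1][:n_components]
    return (z @ eigvecs[:, order]).reshape(rows, cols, n_components)

rng = np.random.default_rng(0)
casi = rng.random((20, 20, 48))   # 48 bands, as in the CASI specification table
lidar = rng.random((20, 20, 2))   # hypothetical LiDAR-derived layers
stacked = layer_stack(casi, lidar)
fused = pca_fuse(stacked, n_components=5)
print(stacked.shape, fused.shape)
```

Layer stacking keeps every input band, while the PCA variant compresses the stack into a few uncorrelated components; the number of retained components (5 here) is an arbitrary choice for the example.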

3.2. Classification Methods
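
The study compares a maximum likelihood classifier (MLC) with a support vector machine (SVM). As a rough illustration of the first, the sketch below implements a textbook Gaussian MLC in NumPy: one multivariate normal per class, equal class priors, and assignment to the class with the highest log-likelihood. The class name `GaussianMLC`, the ridge term, and the synthetic data are assumptions made for this example; it is not the software or configuration used in the study, and the SVM counterpart is not sketched here.

```python
import numpy as np

class GaussianMLC:
    """Maximum likelihood classifier with one multivariate Gaussian per class."""

    def fit(self, X, y):
        self.classes_ = np.unique(y)
        self.params_ = []
        for c in self.classes_:
            Xc = X[y == c]
            mean = Xc.mean(axis=0)
            # Small ridge keeps the covariance invertible with few samples.
            cov = np.cov(Xc, rowvar=False) + 1e-6 * np.eye(X.shape[1])
            _, logdet = np.linalg.slogdet(cov)
            self.params_.append((mean, np.linalg.inv(cov), logdet))
        return self

    def predict(self, X):
        scores = []
        for mean, inv_cov, logdet in self.params_:
            d = X - mean
            # Squared Mahalanobis distance of every sample to the class mean.
            maha = np.einsum("ij,jk,ik->i", d, inv_cov, d)
            # Log-likelihood up to a constant shared by all classes.
            scores.append(-0.5 * (logdet + maha))
        return self.classes_[np.argmax(np.stack(scores, axis=1), axis=1)]

rng = np.random.default_rng(1)
# Synthetic two-class, three-feature data standing in for pixel feature vectors.
X_train = np.vstack([rng.normal(0, 1, (100, 3)), rng.normal(4, 1, (100, 3))])
y_train = np.repeat([0, 1], 100)
X_val = np.vstack([rng.normal(0, 1, (50, 3)), rng.normal(4, 1, (50, 3))])
y_val = np.repeat([0, 1], 50)

mlc = GaussianMLC().fit(X_train, y_train)
accuracy = (mlc.predict(X_val) == y_val).mean()
print(f"validation accuracy: {accuracy:.2f}")
```

Because each class needs a full covariance matrix, an MLC of this kind requires enough training samples per class relative to the number of input bands, which is one reason dimensionality reduction is often paired with it.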

| Class | Training samples (points) | Validation samples (points) |
|---|---|---|
| Buildings | 160 | 80 |
| Road | 225 | 113 |
| Water bodies | 44 | 22 |
| Forests | 397 | 198 |
| Grassland | 278 | 139 |
| Cropland | 307 | 154 |
| Barren land | 183 | 92 |
| Total | 1594 | 798 |

3.3. Accuracy Assessment
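
The tables in the results section report OA, K, PA, UA, EO, and EC. Assuming these carry their usual meanings (overall accuracy, kappa coefficient, producer's and user's accuracy, and errors of omission and commission), they can all be derived from a confusion matrix. The sketch below shows the standard formulas; the function name `accuracy_metrics` and the 3-class matrix are hypothetical and are not data from the study.

```python
import numpy as np

def accuracy_metrics(cm):
    """cm[i, j] = validation samples of reference class i assigned to map class j."""
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    diag = np.diag(cm)
    oa = diag.sum() / n
    # Chance agreement from row (reference) and column (map) totals.
    pe = (cm.sum(axis=1) * cm.sum(axis=0)).sum() / n**2
    kappa = (oa - pe) / (1 - pe)
    pa = diag / cm.sum(axis=1)   # producer's accuracy, per reference class
    ua = diag / cm.sum(axis=0)   # user's accuracy, per map class
    return {"OA": oa, "K": kappa, "PA": pa, "UA": ua, "EO": 1 - pa, "EC": 1 - ua}

# Hypothetical 3-class confusion matrix (not data from the study).
cm = [[45, 3, 2],
      [4, 40, 6],
      [1, 2, 47]]
m = accuracy_metrics(cm)
print(round(m["OA"], 3), round(m["K"], 3))
```

The function returns fractions; multiplying by 100 gives the percentage form used in the tables.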

4. Results and Discussion

| Resolution (m) | Metric | LiDAR alone, MLC | LiDAR alone, SVM | CASI alone, MLC | CASI alone, SVM | Fused (PCA), MLC | Fused (PCA), SVM | Fused (layer stacking), MLC | Fused (layer stacking), SVM |
|---|---|---|---|---|---|---|---|---|---|
| 1 | OA (%) | 25 | 75.6 | 84.7 | 88.7 | 91.9 | 95.3 | 92.9 | 97.8 |
| 1 | K | 0.181 | 0.582 | 0.758 | 0.836 | 0.868 | 0.923 | 0.888 | 0.964 |
| 2 | OA (%) | 27.8 | 78.2 | 82.8 | 87.1 | 90.7 | 96.5 | 92.1 | 97.7 |
| 2 | K | 0.203 | 0.654 | 0.743 | 0.825 | 0.862 | 0.943 | 0.884 | 0.963 |
| 4 | OA (%) | 34.1 | 74.5 | 80.2 | 85.6 | 89.9 | 94.5 | 91.2 | 96.3 |
| 4 | K | 0.262 | 0.626 | 0.726 | 0.803 | 0.86 | 0.922 | 0.878 | 0.948 |
| 8 | OA (%) | 37.8 | 69.5 | 76.6 | 81.1 | 87.1 | 88.9 | 89 | 92.8 |
| 8 | K | 0.292 | 0.579 | 0.694 | 0.757 | 0.829 | 0.849 | 0.855 | 0.904 |
| 10 | OA (%) | 39.3 | 68.2 | 75.6 | 80.5 | 85.9 | 87.9 | 87.7 | 91.2 |
| 10 | K | 0.302 | 0.561 | 0.692 | 0.746 | 0.814 | 0.836 | 0.84 | 0.883 |
| 20 | OA (%) | 53.5 | 62.6 | 74.8 | 77.3 | 81.5 | 81.2 | 84.5 | 86.5 |
| 20 | K | 0.401 | 0.445 | 0.679 | 0.692 | 0.741 | 0.723 | 0.783 | 0.805 |
| 30 | OA (%) | 48.7 | 60.3 | 73.1 | 71.2 | 81.4 | 79.7 | 83.3 | 82 |
| 30 | K | 0.298 | 0.401 | 0.619 | 0.589 | 0.696 | 0.644 | 0.727 | 0.686 |
| Mean | OA (%) | 38 | 69.8 | 78.3 | 81.6 | 86.9 | 89.1 | 88.7 | 92 |
| Mean | K | 0.277 | 0.55 | 0.702 | 0.75 | 0.81 | 0.834 | 0.836 | 0.879 |
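
As a quick reading aid for the table above, the following sketch computes the gain in overall accuracy from layer-stacking fusion over CASI data alone, for the SVM classifier at each resolution, and reproduces the corresponding entries of the Mean row. The values are copied from the table; the variable names are illustrative.

```python
# OA (%) for the SVM classifier, copied from the table above.
resolutions = [1, 2, 4, 8, 10, 20, 30]
casi_svm = [88.7, 87.1, 85.6, 81.1, 80.5, 77.3, 71.2]
stack_svm = [97.8, 97.7, 96.3, 92.8, 91.2, 86.5, 82.0]

# Gain of layer-stacking fusion over CASI alone, in percentage points.
gains = [round(f - c, 1) for f, c in zip(stack_svm, casi_svm)]
mean_casi = sum(casi_svm) / len(casi_svm)
mean_stack = sum(stack_svm) / len(stack_svm)

for r, g in zip(resolutions, gains):
    print(f"{r:>2} m: +{g} percentage points")
print(f"mean OA: CASI {mean_casi:.1f}, layer stacking {mean_stack:.1f}")
```

For this classifier the gain is positive at every resolution listed, and the computed means agree with the table's Mean row after rounding to one decimal place.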

4.1. Comparison of Classification Results with Different Datasets

4.2. Classification Performance of the MLC and SVM Classifiers

| Class | SVM PA (%) | SVM UA (%) | SVM EO (%) | SVM EC (%) | MLC PA (%) | MLC UA (%) | MLC EO (%) | MLC EC (%) |
|---|---|---|---|---|---|---|---|---|
| Buildings | 94.78 | 99.58 | 5.22 | 0.42 | 94.73 | 97.85 | 5.27 | 2.15 |
| Road | 91.15 | 94.58 | 8.85 | 5.42 | 70.15 | 88.42 | 29.85 | 11.58 |
| Water bodies | 99.99 | 99.92 | 0.01 | 0.08 | 96.05 | 100 | 3.95 | 0 |
| Forests | 84.53 | 98.07 | 15.47 | 1.93 | 81.95 | 36.33 | 18.05 | 63.67 |
| Grassland | 93.44 | 80.13 | 6.56 | 19.87 | 89.55 | 56.01 | 10.45 | 43.99 |
| Cropland | 99.8 | 95.42 | 0.2 | 4.58 | 99.53 | 94.2 | 0.47 | 5.8 |
| Barren land | 94.7 | 94.48 | 5.3 | 5.52 | 89.76 | 99.4 | 10.24 | 0.6 |
| OA (%) | 97.8 | | | | 92.9 | | | |
| K | 0.964 | | | | 0.888 | | | |
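
The error columns in the table above are the complements of the accuracy columns, which can be checked row by row. Assuming the usual definitions (EO as error of omission, EC as error of commission), the relations and two worked cases from the MLC columns are:

$$
\mathrm{EO} = 100\% - \mathrm{PA}, \qquad \mathrm{EC} = 100\% - \mathrm{UA}
$$

$$
\text{Road (MLC):}\quad \mathrm{EO} = 100 - 70.15 = 29.85\%, \qquad
\text{Forests (MLC):}\quad \mathrm{EC} = 100 - 36.33 = 63.67\%
$$

Read this way, the low user's accuracy for Forests under MLC means that about 64% of the pixels mapped as forest belong to another class in the validation data.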

4.3. Influence of the Spatial Resolution on the Classification Accuracy

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Luo, S.; Wang, C.; Xi, X.; Zeng, H.; Li, D.; Xia, S.; Wang, P. Fusion of Airborne Discrete-Return LiDAR and Hyperspectral Data for Land Cover Classification. Remote Sens. 2016, 8, 3. https://doi.org/10.3390/rs8010003