Monitoring of Wheat Growth Status and Mapping of Wheat Yield’s within-Field Spatial Variations Using Color Images Acquired from UAV-camera System

Abstract

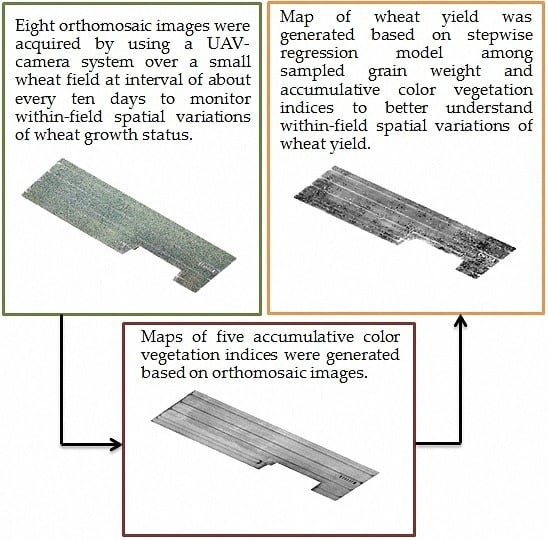

1. Introduction

2. Materials and Methods

2.1. Field Site and Acquisition of UAV Images

2.2. Radiometric Normalization of Multi-Temporal UAV Images

2.3. Field Sampling of Wheat Yield

2.4. Generating CVI Maps Based on UAV Images

3. Results and Discussion

3.1. Monitoring of Wheat Growth Status

3.2. Evaluation of Radiometric Normalization of Multi-Temporal Orthomosaic Images

3.3. Mapping of Wheat Yield’s within-Field Spatial Variations

4. Uncertainties, Errors, and Accuracies

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| UAV Specification | | Camera Specification | |
|---|---|---|---|
| Overall diameter × height (mm) | φ1009 × 254 | Weight (g) | 345 |
| Rated operation weight (kg) | 3.2 | Camera resolution | 4000 × 6000 pixels |
| Endurance (min) | 15–20 | Focal length (mm) | 16 |
| Range (km) | 10 | Sensor size (mm) | 23.5 × 15.6 |
| Maximum flying altitude (m) | 250 | | |
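The camera figures in the table above are enough to estimate the ground sampling distance (GSD) of a nadir image under the usual pinhole-camera approximation. The following is a minimal sketch only: the function name is illustrative, and the flight altitude passed in is a hypothetical value, since the table lists only the maximum flying altitude and not the altitude actually flown.

```python
def ground_sampling_distance(altitude_m,
                             focal_length_mm=16.0,
                             sensor_long_side_mm=23.5,
                             image_long_side_px=6000):
    """Return the approximate ground footprint of one pixel, in metres.

    Defaults are the camera values from the specification table.
    Assumes a nadir-pointing camera over flat ground (pinhole model).
    """
    # Physical size of one pixel on the sensor (mm per pixel).
    pixel_pitch_mm = sensor_long_side_mm / image_long_side_px
    # Similar triangles: ground size / altitude = pixel pitch / focal length.
    return altitude_m * pixel_pitch_mm / focal_length_mm


# Hypothetical altitude of 100 m, chosen only for illustration.
gsd_at_100_m = ground_sampling_distance(100.0)
print(f"GSD at 100 m: {gsd_at_100_m * 100:.2f} cm per pixel")
```

Because GSD scales linearly with altitude in this model, halving the altitude halves the pixel footprint.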

| Wheat Variety | Sample ID | Latitude | Longitude | Sampled Grain Weight (kg, Converted to 12.5% Moisture) |
|---|---|---|---|---|
| Kitahonami | 1 | 42.901657 | 142.978642 | 1.01 |
| Kitahonami | 2 | 42.901097 | 142.979570 | 0.86 |
| Kitahonami | 3 | 42.900532 | 142.980497 | 0.84 |
| Kitahonami | 4 | 42.900180 | 142.981070 | 0.91 |
| Kitahonami | 5 | 42.900360 | 142.981302 | 0.85 |
| Kitahonami | 6 | 42.900694 | 142.980759 | 0.79 |
| Kitahonami | 7 | 42.900972 | 142.980286 | 0.82 |
| Kitahonami | 8 | 42.901202 | 142.979924 | 0.80 |
| Kitahonami | 9 | 42.901476 | 142.979472 | 0.83 |
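One way to relate the sampled grain weights above to an image-derived index is a simple linear regression checked by leave-one-out cross-validation (LOOCV). The sketch below is an illustration under stated assumptions, not the authors' model: it uses a single predictor, the VDVI column from the per-sample index table later in this document, and all function names are hypothetical.

```python
from math import sqrt

# Grain weight (kg) for samples 1-9, from the sampling table above.
grain_kg = [1.01, 0.86, 0.84, 0.91, 0.85, 0.79, 0.82, 0.80, 0.83]
# VDVI for samples 1-9, copied from the per-sample index table in this document.
vdvi = [0.71, 0.65, 0.62, 0.64, 0.67, 0.62, 0.62, 0.63, 0.62]


def fit_line(x, y):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    return sxy / sqrt(sxx * syy)


def loocv_rmse(x, y):
    """Refit the line n times, each time predicting the one held-out sample."""
    squared_errors = []
    for i in range(len(x)):
        x_train, y_train = x[:i] + x[i + 1:], y[:i] + y[i + 1:]
        slope, intercept = fit_line(x_train, y_train)
        squared_errors.append((y[i] - (slope * x[i] + intercept)) ** 2)
    return sqrt(sum(squared_errors) / len(squared_errors))


print("Pearson r:", round(pearson_r(vdvi, grain_kg), 3))
print("LOOCV RMSE (kg):", round(loocv_rmse(vdvi, grain_kg), 3))
```

With only nine samples, each held-out prediction depends heavily on the remaining eight, so the LOOCV error is a rough indication rather than a precise accuracy figure.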

| Image Date | Band | Slope | Intercept | R-Squared |
|---|---|---|---|---|
| 2 June | Blue | 1.01 | 6.55 | 0.83 |
| 2 June | Green | 0.77 | 45.38 | 0.96 |
| 2 June | Red | 0.74 | 52.52 | 0.94 |
| 10 June | Blue | 0.86 | 15.43 | 0.73 |
| 10 June | Green | 0.87 | 7.96 | 0.91 |
| 10 June | Red | 0.85 | 11.55 | 0.91 |
| 19 June | Blue | 0.77 | 42.65 | 0.91 |
| 19 June | Green | 1.09 | −15.09 | 0.94 |
| 19 June | Red | 1.10 | −17.33 | 0.93 |
| 25 June | Blue | 0.99 | 4.14 | 0.97 |
| 25 June | Green | 0.95 | 9.623 | 0.99 |
| 25 June | Red | 0.92 | 12.56 | 0.99 |
| 2 July | Blue | 0.82 | 28.49 | 0.94 |
| 2 July | Green | 1.03 | −13.48 | 0.98 |
| 2 July | Red | 1.03 | −12.15 | 0.98 |
| 10 July | Blue | 0.88 | 22.77 | 0.81 |
| 10 July | Green | 1.01 | −2.42 | 0.94 |
| 10 July | Red | 1.06 | −10.30 | 0.93 |
| 16 July | Blue | 0.79 | 47.09 | 0.97 |
| 16 July | Green | 1.16 | −15.27 | 0.98 |
| 16 July | Red | 1.16 | −16.84 | 0.97 |
| 24 July | Blue | 0.94 | 12.01 | 0.80 |
| 24 July | Green | 0.89 | 17.61 | 0.97 |
| 24 July | Red | 0.9031 | 16.2754 | 0.97 |
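Each row of the table above can be read as a per-band linear model. The sketch below shows how such coefficients might be applied to a pixel. It rests on two assumptions that the table itself does not confirm: that the regression maps digital numbers (DN) of the image being normalized onto the reference image, and that DN values are 8-bit and should be clipped to 0–255. The function names and the input pixel are hypothetical.

```python
# Slope and intercept per band for the 2 June image, copied from the table above.
COEFFS_2_JUNE = {
    "red": (0.74, 52.52),
    "green": (0.77, 45.38),
    "blue": (1.01, 6.55),
}


def normalize_dn(dn, slope, intercept, max_dn=255):
    """Apply normalized = slope * dn + intercept, then round and clip.

    The 0..max_dn clipping assumes 8-bit imagery (an assumption).
    """
    value = round(slope * dn + intercept)
    return max(0, min(max_dn, value))


def normalize_pixel(pixel, coeffs):
    """Normalize every band of one pixel given per-band (slope, intercept)."""
    return {band: normalize_dn(pixel[band], *coeffs[band]) for band in coeffs}


# Hypothetical input pixel, chosen only to illustrate the arithmetic.
pixel = {"red": 100, "green": 120, "blue": 80}
normalized = normalize_pixel(pixel, COEFFS_2_JUNE)
print(normalized)
```

For whole images the same arithmetic would normally be applied band by band with an array library; the per-pixel form here is kept dependency-free for clarity.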

| Sample ID | VDVI | NGRDI | NGBDI | GRRI | ExG |
|---|---|---|---|---|---|
| 1 | 0.71 | 1.00 | 0.50 | 10.40 | 333.17 |
| 2 | 0.65 | 1.16 | 0.29 | 10.86 | 264.30 |
| 3 | 0.62 | 1.04 | 0.29 | 10.49 | 286.08 |
| 4 | 0.64 | 1.11 | 0.27 | 10.70 | 296.75 |
| 5 | 0.67 | 1.10 | 0.33 | 10.65 | 301.90 |
| 6 | 0.62 | 1.12 | 0.24 | 10.70 | 276.56 |
| 7 | 0.62 | 1.19 | 0.18 | 11.00 | 259.11 |
| 8 | 0.63 | 1.16 | 0.25 | 10.81 | 244.95 |
| 9 | 0.62 | 1.19 | 0.18 | 10.96 | 259.36 |
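The five color vegetation indices named in the table header have commonly used single-pixel definitions in terms of the red (R), green (G), and blue (B) digital numbers. The sketch below computes them for one pixel, assuming those common definitions and raw DN inputs (ExG is sometimes defined on normalized chromatic coordinates instead). The tabulated values exceed what a single image would give for a normalized index (NGRDI above 1, for example), so they are presumably aggregated over several image dates; the sketch does not try to reproduce that aggregation. The function name and the example pixel are hypothetical.

```python
def color_vegetation_indices(r, g, b):
    """Return common color vegetation indices for one RGB pixel.

    Inputs are digital numbers; r, g, and b must be positive so that
    no denominator is zero.
    """
    return {
        # Visible-band difference vegetation index.
        "VDVI": (2 * g - r - b) / (2 * g + r + b),
        # Normalized green-red difference index.
        "NGRDI": (g - r) / (g + r),
        # Normalized green-blue difference index.
        "NGBDI": (g - b) / (g + b),
        # Green-red ratio index.
        "GRRI": g / r,
        # Excess green, computed here on raw DN values (an assumption).
        "ExG": 2 * g - r - b,
    }


# Hypothetical greenish pixel, for illustration only.
indices = color_vegetation_indices(r=100, g=150, b=50)
for name, value in indices.items():
    print(f"{name}: {value:.3f}")
```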

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license ( http://creativecommons.org/licenses/by/4.0/).


Du, M.; Noguchi, N. Monitoring of Wheat Growth Status and Mapping of Wheat Yield’s within-Field Spatial Variations Using Color Images Acquired from UAV-camera System. Remote Sens. 2017, 9, 289. https://doi.org/10.3390/rs9030289
