Papaya Tree Detection with UAV Images Using a GPU-Accelerated Scale-Space Filtering Method

Abstract

1. Introduction

2. Materials and Methods

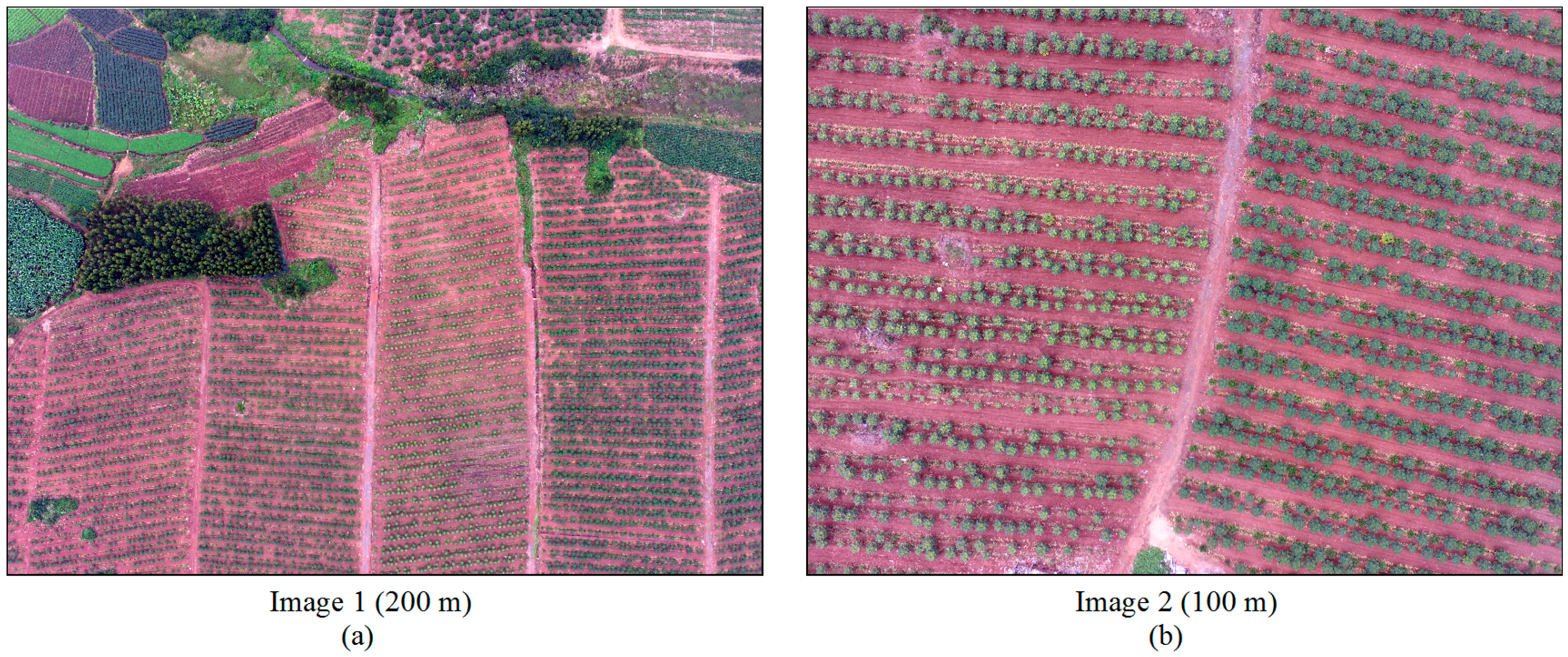

2.1. Study Area

2.2. Equipment: Unmanned Aerial Vehicles (UAV) and Computers

2.3. Validation Data

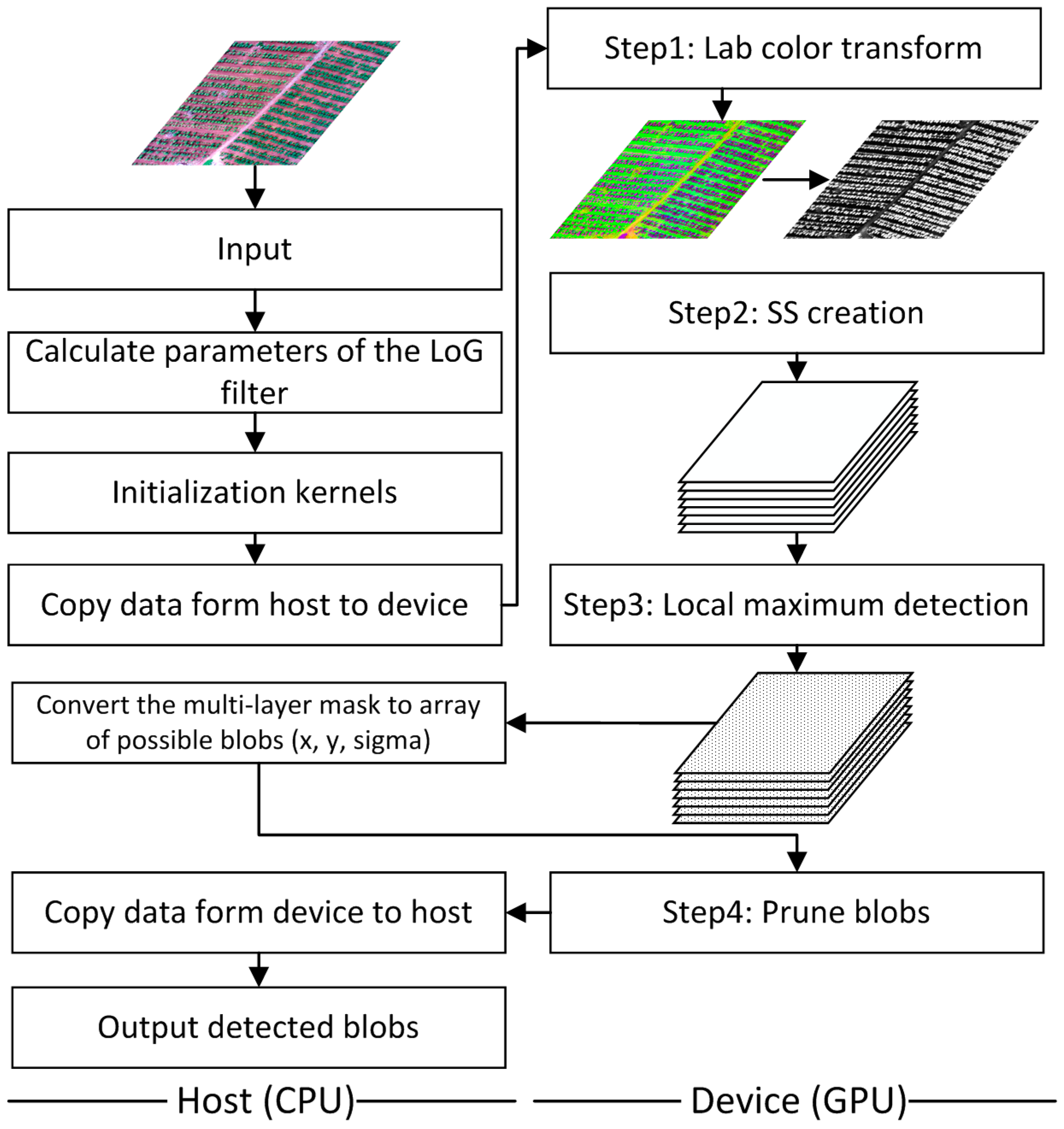

2.4. Scale-Space Filtering (SSF) Based Tree Detection Method

2.4.1. Lab Color Transformation (CT-Lab)

2.4.2. Scale-Space Creation (SSC)

2.4.3. Local Maximum Detection (LMD)

2.4.4. Prune Blobs (PB)

2.5. Graphics Processing Unit (GPU) Implementation

- (1) CT-Lab: We arranged the threads in 32 × 32 blocks, with each thread performing the RGB-to-Lab color transformation for one pixel. This choice reflects the maximum of 1024 threads per block on our GPU device (32 × 32 = 1024), and the expectation that a two-dimensional (2-D) arrangement of threads, rather than a 1-D one, may reduce the number of idle threads when processing 2-D images.

- (2) SSC: We first calculated the parameters of the LoG operator on the host and then transferred them, together with the image, to the device. The two-dimensional LoG filter is isotropic and can be decomposed into x and y components (the second derivative of the Gaussian along each axis), each of which separates into 1-D convolutions, which reduces redundant computation. We used two different kernels to calculate the x and y components and combined them with a third kernel. The key to exploiting the power of the GPU was the use of shared memory, an on-chip memory that is much faster than local and global memory. A common problem arises when the filter window reaches the array border, where part of the filter falls outside the array and has no corresponding values. We handled this by assuming that the arrays extend beyond their boundaries under “reflected” boundary conditions, i.e., the values nearest the border are repeated in reverse order. Further details can be found in previous work, since Gaussian-like filtering is one of the most basic applications of CUDA [21].

- (3) LMD: We detected local maxima by filtering the scale-space (SS) image sequence consecutively along the x, y, and z (scale) directions. Values beyond the array boundaries were set according to “constant” boundary conditions (a constant value, e.g., zero) instead of “reflected” ones, because the latter may cause over-detection of maxima. To minimize the time spent on data transfer, only the input image and the filtered results were transferred between the host and the device, while intermediate variables were allocated in advance and kept in the GPU’s global memory.

- (4) PB: Once the local maxima in SS were identified, the x and y coordinates and σ of each maximum were extracted into an array, which was then transferred to the GPU as the input of the blob-pruning kernel. We compared blobs in parallel, assigning one thread to each pairwise combination of two blobs. A three-part algorithm was implemented as follows:

| Algorithm 1. Pseudocode for locating blobs for each thread. |
|---|
| 1: INPUTS: index of the current kernel run, size of the blobs array (n) |
| 2: compute icomb = blockIdx.x * blockDim.x + (index of the current kernel run) * Nbt + threadIdx.x + 1 |
| 3: define a temporary variable L to store the number of pairs whose first blob is the p-th blob |
| 4: check if (icomb ≤ 0 or icomb > n × (n − 1)/2): True: set p = −1 and return; False: continue |
| 5: set loop starting conditions: p = 1, L = n − p |
| 6: start loop: while (icomb > L): icomb −= L; p += 1; L = n − p |
| 7: compute indices (p and q): p −= 1; q = p + icomb |
| 8: OUTPUT: indices in the blobs array (p and q) |
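
For the CT-Lab step (1), each GPU thread converts a single pixel. The following Python sketch shows that per-pixel work and the launch geometry of 32 × 32 blocks over a 4000 × 3000 image. It assumes 8-bit sRGB input and the standard D65 white point; the conversion constants actually used in this study are not stated here and may differ, and the function names are hypothetical.

```python
import math

# Assumed D65 reference white used to normalize XYZ (not stated in the source).
XN, YN, ZN = 0.95047, 1.00000, 1.08883


def _linearize(c):
    """Undo the sRGB gamma curve for a channel value in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _f(t):
    """CIE Lab companding function."""
    delta = 6 / 29
    return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29


def rgb_to_lab(r, g, b):
    """Convert one 8-bit sRGB pixel to CIE L*a*b* (the work of one thread)."""
    rl, gl, bl = (_linearize(v / 255) for v in (r, g, b))
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl
    fx, fy, fz = _f(x / XN), _f(y / YN), _f(z / ZN)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def launch_geometry(width, height, block=32):
    """Grid size for block x block threads per block, and the number of idle threads."""
    grid_x = math.ceil(width / block)
    grid_y = math.ceil(height / block)
    launched = grid_x * grid_y * block * block
    return (grid_x, grid_y), launched - width * height


grid, idle = launch_geometry(4000, 3000)
print(grid, idle)  # (125, 94) 32000
```

In a CUDA kernel, each thread would derive its pixel coordinates from blockIdx and threadIdx and return early when they fall outside the image; for this image size, 32,000 of the 12,032,000 launched threads have no pixel to process.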
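
For the SSC step (2), the decomposition of the LoG into x and y components can be illustrated by what the three kernels compute (two component kernels and one combining kernel), leaving out the shared-memory tiling. This pure-Python sketch assumes a Gaussian truncated at 4σ, the σ² scale normalization and sign inversion used by scikit-image's `blob_log` (so that bright blobs give positive maxima), and the SciPy-style “reflect” convention d c b a | a b c d | d c b a; all function names are hypothetical.

```python
import math


def reflect_index(i, n):
    """Map index i into [0, n) with 'reflect' boundaries: d c b a | a b c d | d c b a."""
    period = 2 * n
    i %= period
    return i if i < n else period - 1 - i


def gaussian_kernels(sigma, truncate=4.0):
    """Sampled 1-D Gaussian g (normalized to sum 1) and its second derivative g''."""
    r = int(truncate * sigma + 0.5)
    xs = range(-r, r + 1)
    g = [math.exp(-x * x / (2 * sigma ** 2)) for x in xs]
    total = sum(g)
    g = [v / total for v in g]
    d2 = [(x * x / sigma ** 4 - 1 / sigma ** 2) * v for x, v in zip(xs, g)]
    return g, d2


def convolve_rows(img, k):
    """1-D convolution of every row with an odd-length kernel k, reflected borders."""
    r = len(k) // 2
    w = len(img[0])
    return [[sum(k[j + r] * row[reflect_index(x - j, w)] for j in range(-r, r + 1))
             for x in range(w)] for row in img]


def transpose(img):
    return [list(col) for col in zip(*img)]


def convolve_cols(img, k):
    """1-D convolution of every column, done by transposing."""
    return transpose(convolve_rows(transpose(img), k))


def log_response(img, sigma):
    """Scale-normalized, sign-inverted LoG: -sigma^2 * (I_xx + I_yy)."""
    g, d2 = gaussian_kernels(sigma)
    i_xx = convolve_cols(convolve_rows(img, d2), g)  # x component: g''(x), then g(y)
    i_yy = convolve_cols(convolve_rows(img, g), d2)  # y component: g(x), then g''(y)
    # Combining step: sum the two components pixel by pixel.
    return [[-sigma ** 2 * (a + b) for a, b in zip(ra, rb)] for ra, rb in zip(i_xx, i_yy)]


# Synthetic bright blob (Gaussian spot, width 2 px) centred at (10, 10) in a 21 x 21 image.
img = [[math.exp(-((x - 10) ** 2 + (y - 10) ** 2) / 8) for x in range(21)] for y in range(21)]
resp = log_response(img, 2.0)
peak = max((v, y, x) for y, row in enumerate(resp) for x, v in enumerate(row))
print(peak[1:])  # (10, 10)
```

Each 1-D pass costs 2r + 1 multiplications per pixel instead of (2r + 1)² for a full 2-D LoG kernel, which is the saving the separation provides.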
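
For the LMD step (3), we read the consecutive filtering along x, y, and z as a separable maximum filter over the scale-space stack; this interpretation, the 3 × 3 × 3 neighborhood, and the threshold argument are assumptions. The pure-Python sketch pads with zeros (the “constant” condition) and keeps a voxel when it is positive and equal to the filtered maximum at its position; the function names are hypothetical.

```python
def max_filter_1d(line, pad=0.0):
    """3-tap running maximum with 'constant' padding (value `pad` outside the line)."""
    n = len(line)
    return [max(line[i - 1] if i > 0 else pad, line[i], line[i + 1] if i < n - 1 else pad)
            for i in range(n)]


def max_filter_3d(cube, pad=0.0):
    """Separable 3x3x3 maximum filter over cube[z][y][x] (z = scale index)."""
    nz, ny, nx = len(cube), len(cube[0]), len(cube[0][0])
    out = [[max_filter_1d(row, pad) for row in plane] for plane in cube]  # x pass
    for z in range(nz):                                                   # y pass
        for x in range(nx):
            col = max_filter_1d([out[z][y][x] for y in range(ny)], pad)
            for y in range(ny):
                out[z][y][x] = col[y]
    for y in range(ny):                                                   # z pass
        for x in range(nx):
            col = max_filter_1d([out[z][y][x] for z in range(nz)], pad)
            for z in range(nz):
                out[z][y][x] = col[z]
    return out


def local_maxima(cube, threshold=0.0):
    """(z, y, x) of voxels above `threshold` that equal the local maximum."""
    mx = max_filter_3d(cube)
    return [(z, y, x) for z, plane in enumerate(cube) for y, row in enumerate(plane)
            for x, v in enumerate(row) if v > threshold and v == mx[z][y][x]]


# Toy stack: 3 scales x 5 x 5 pixels.
cube = [[[0.0] * 5 for _ in range(5)] for _ in range(3)]
cube[1][2][2] = 1.0   # strong blob at the middle scale
cube[0][2][2] = 0.5   # weaker response of the same blob at a neighboring scale
cube[0][0][0] = 0.3   # isolated response in a corner
print(local_maxima(cube))  # [(0, 0, 0), (1, 2, 2)]
```

Because the maximum over a box equals the maximum of 1-D maxima taken along each axis in turn, the three passes give the same result as a full 3 × 3 × 3 filter while using about 9 instead of 27 comparisons per voxel.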
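
Algorithm 1 maps a flat, 1-based combination index icomb onto a pair of blob indices (p, q) with p < q, so that the n(n − 1)/2 pairs can be distributed over threads and kernel runs. A Python transcription for checking the index arithmetic; interpreting Nbt as the number of threads per kernel run is an assumption, and the function names are hypothetical.

```python
def thread_icomb(block_idx, block_dim, thread_idx, run, nbt):
    """Step 2: 1-based combination index of a thread (nbt assumed = threads per run)."""
    return block_idx * block_dim + run * nbt + thread_idx + 1


def pair_from_icomb(icomb, n):
    """Steps 3-8: map icomb in [1, n(n-1)/2] to 0-based blob indices (p, q), p < q."""
    if icomb <= 0 or icomb > n * (n - 1) // 2:
        return -1, -1        # step 4: out of range, this thread does no work
    p, L = 1, n - 1          # step 5: L = pairs whose first blob is the p-th (1-based) blob
    while icomb > L:         # step 6: skip whole groups of pairs
        icomb -= L
        p += 1
        L = n - p
    p -= 1                   # step 7: switch to a 0-based first index
    return p, p + icomb


print([pair_from_icomb(i, 4) for i in range(1, 7)])
# [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3)]
```

For n = 7640 (the number of blobs before PB for Image 1), this index space covers 7640 × 7639 / 2 = 29,180,980 pairs.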

2.6. Metrics for Evaluating the Accuracy of the Tree Detection Method

2.7. Metrics for Evaluating Graphics Processing Unit (GPU) Performance

3. Results and Discussion

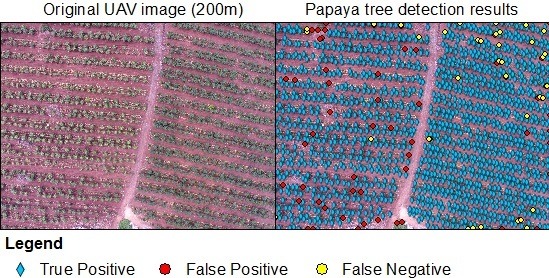

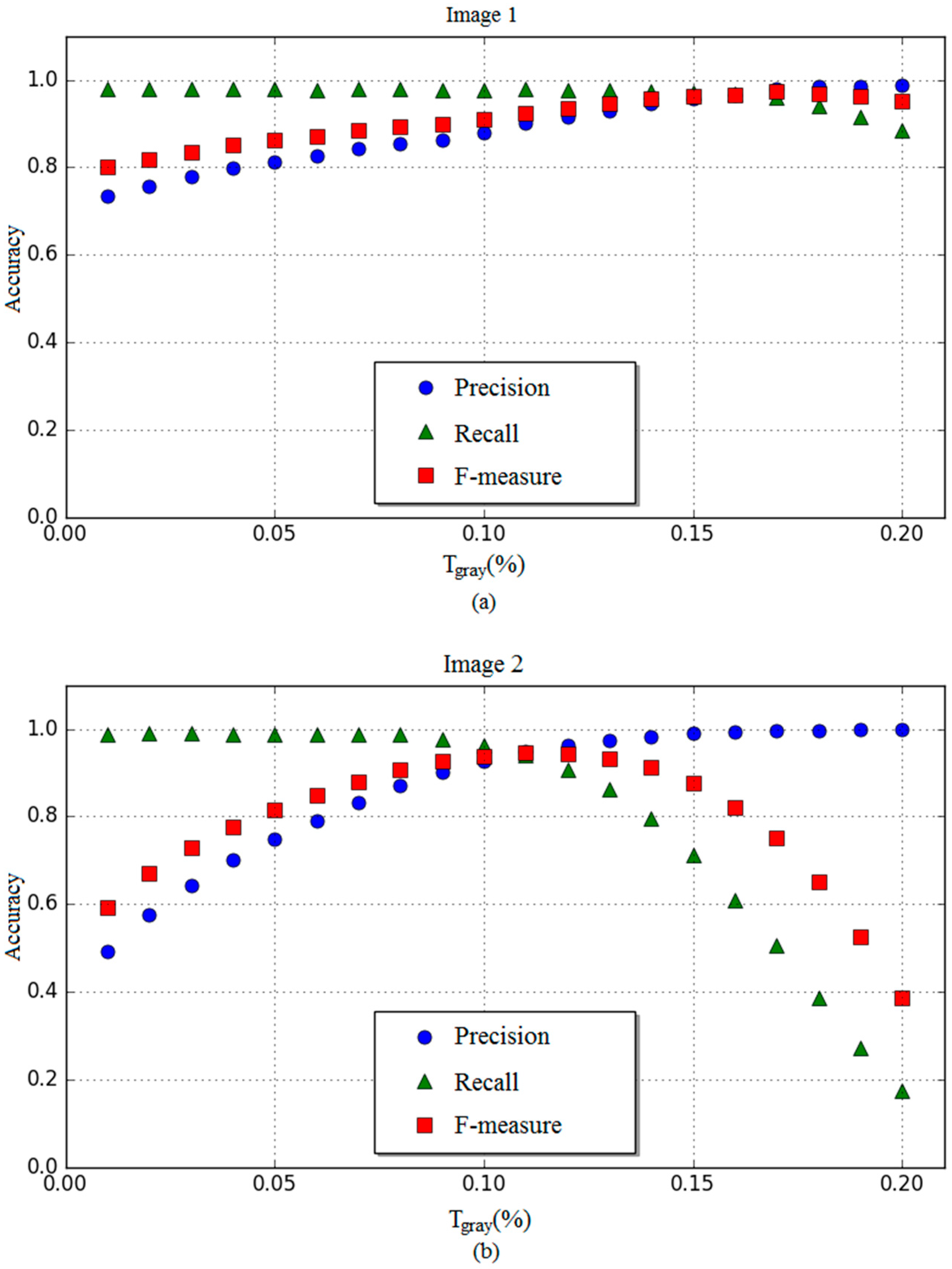

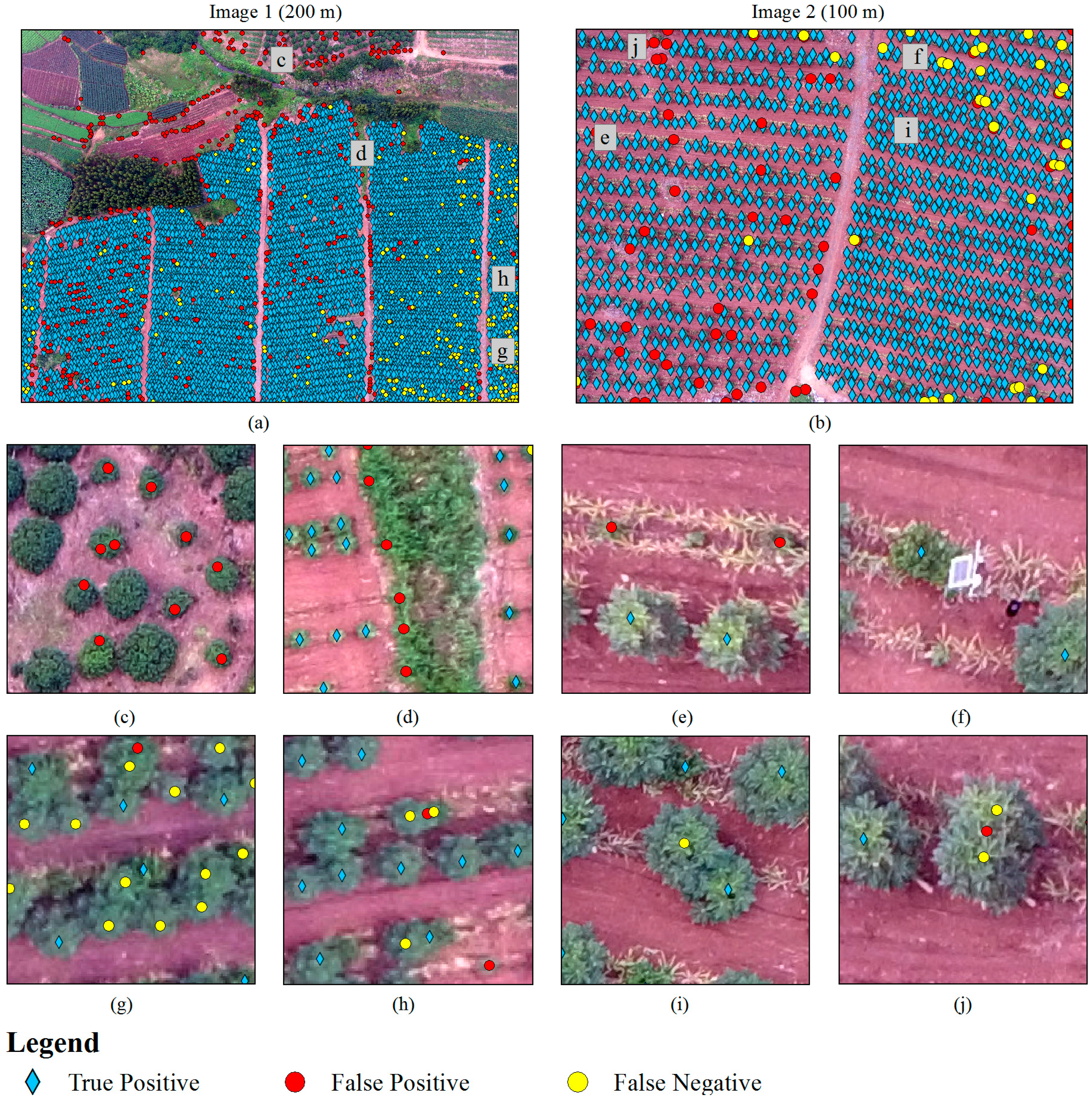

3.1. Tree Detection Accuracy

3.2. GPU Performance

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Puliti, S.; Ørka, H.O.; Gobakken, T.; Næsset, E. Inventory of small forest areas using an unmanned aerial system. Remote Sens. 2015, 7, 9632–9654.
2. Gebreslasie, M.T.; Ahmed, F.B.; Van Aardt, J.A.N.; Blakeway, F. Individual tree detection based on variable and fixed window size local maxima filtering applied to IKONOS imagery for even-aged eucalyptus plantation forests. Int. J. Remote Sens. 2011, 32, 4141–4154.
3. Hirschmugl, M.; Ofner, M.; Raggam, J.; Schardt, M. Single tree detection in very high resolution remote sensing data. Remote Sens. Environ. 2007, 110, 533–544.
4. Karlson, M.; Reese, H.; Ostwald, M. Tree crown mapping in managed woodlands (parklands) of semi-arid West Africa using WorldView-2 imagery and geographic object based image analysis. Sensors 2014, 14, 22643–22669.
5. Malek, S.; Bazi, Y.; Alajlan, N.; Alhichri, H. Efficient framework for palm tree detection in UAV images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4692–4703.
6. Larsen, M.; Rudemo, M. Optimizing templates for finding trees in aerial photographs. Pattern Recognit. Lett. 1998, 19, 1153–1162.
7. Descombes, X.; Pechersky, E. Tree Crown Extraction Using a Three State Markov Random Field; INRIA: Paris, France, 2006; pp. 1–14.
8. Jia, Z.; Proisy, C.; Descombes, X.; le Maire, G.; Nouvellon, Y.; Stape, J.L.; Viennois, G.; Zerubia, J.; Couteron, P. Mapping local density of young eucalyptus plantations by individual tree detection in high spatial resolution satellite images. For. Ecol. Manag. 2013, 301, 129–141.
9. Li, W.; Fu, H.; Yu, L.; Cracknell, A. Deep learning based oil palm tree detection and counting for high-resolution remote sensing images. Remote Sens. 2017, 9, 22.
10. Larsen, M.; Eriksson, M.; Descombes, X.; Perrin, G.; Brandtberg, T.; Gougeon, F.A. Comparison of six individual tree crown detection algorithms evaluated under varying forest conditions. Int. J. Remote Sens. 2011, 32, 5827–5852.
11. Lindeberg, T. Feature detection with automatic scale selection. Int. J. Comput. Vis. 1998, 30, 79–116.
12. Klöckner, A.; Pinto, N.; Lee, Y.; Catanzaro, B.; Ivanov, P.; Fasih, A. PyCUDA and PyOpenCL: A scripting-based approach to GPU run-time code generation. Parallel Comput. 2011, 38, 157–174.
13. Zhu, Z.; Xu, Y.; Wen, Q.; Pu, Z.; Zhou, H.; Dai, T.; Liang, J.; Liang, C.; Luo, S. The stratigraphy and chronology of multicycle quaternary volcanic rock-red soil sequence in Leizhou Peninsula, South China. Quat. Sci. 2001, 21, 270–276.
14. Walczykowski, P.; Kedzierski, M. Imagery Intelligence from Low Altitudes: Chosen Aspects. In Proceedings of the XI Conference on Reconnaissance and Electronic Warfare Systems, Oltarzew, Poland, 21 November 2016.
15. Srestasathiern, P.; Rakwatin, P. Oil palm tree detection with high resolution multi-spectral satellite imagery. Remote Sens. 2014, 6, 9749–9774.
16. Ford, A.; Roberts, A. Colour Space Conversions. Available online: http://sites.biology.duke.edu/johnsenlab/pdfs/tech/colorconversion.pdf (accessed on 17 May 2017).
17. Ford, A. Colour Space Conversions; Westminster University: London, UK, 1998.
18. Weisstein, E.W. Circle-Circle Intersection. Available online: http://mathworld.wolfram.com/Circle-CircleIntersection.html (accessed on 17 May 2017).
19. van der Walt, S.; Schönberger, J.L.; Nunez-Iglesias, J.; Boulogne, F.; Warner, J.D.; Yager, N.; Gouillart, E.; Yu, T.; the scikit-image contributors. scikit-image: Image processing in Python. PeerJ 2014, 2, e453.
20. Behnel, S.; Bradshaw, R.; Citro, C.; Dalcin, L.; Seljebotn, D.S.; Smith, K. Cython: The best of both worlds. Comput. Sci. Eng. 2010, 13, 31–39.
21. Liu, J. Comparation of several CUDA accelerated Gaussian filtering algorithms. Comput. Eng. Appl. 2013, 49, 14–18.
22. He, H.; Ma, Y. Imbalanced Learning: Foundations, Algorithms, and Applications; John Wiley & Sons, Inc: Hoboken, NJ, USA, 2013.
23. Martin, D.R.; Fowlkes, C.C.; Malik, J. Learning to detect natural image boundaries using local brightness, color, and texture cues. IEEE Comput. Soc. 2004, 26, 530–549.
24. Kedzierski, M.; Wierzbicki, D. Methodology of improvement of radiometric quality of images acquired from low altitudes. Measurement 2016, 92, 70–78.

| Information | Image 1 | Image 2 |
|---|---|---|
| Date | 20 December 2015 | 20 December 2015 |
| Local time | 10:53 | 12:30 |
| Latitude (°N) | 20.3693 | 20.3694 |
| Longitude (°E) | 110.0553 | 110.0574 |
| Flight altitude (m) | 200 | 100 |
| View angle (°) | 0 | 0 |
| Number of ground-truth trees (papaya and lemon) | 7327 | 1328 |

| Camera Parameter | Value |
|---|---|
| Sensor | Sony EXMOR 1/2.3″ CMOS |
| Effective pixels | 12.4 M (total pixels: 12.76 M) |
| Sensor size | 6.16 mm wide, 4.62 mm high |
| Lens | FOV 94°, 20 mm (35 mm format equivalent), f/2.8, focus at ∞ |
| ISO range | 100–1600 (photo), 100–3200 (video) |
| Electronic shutter speed | 8–1/8000 s |
| Image size (pixels) | 4000 × 3000 |

| Parameter | Usage | Image 1 | Image 2 |
|---|---|---|---|
| σmin | Minimum value of σ | 3 | 15 |
| σmax | Maximum value of σ | 6 | 25 |
| Nsigma | Number of scale-space levels (σ values) | 5 | 5 |
| OA | Threshold of the overlapping area ratio | 0.2 | 0.2 |
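
The σmin, σmax, and Nsigma values above determine the scales at which the LoG is evaluated. A short sketch, assuming the Nsigma values are spaced linearly between σmin and σmax (the default in scikit-image's `blob_log`) and that a detected blob's radius is about √2 σ, as is usual for a 2-D LoG detector; both are assumptions here, and the function name is hypothetical.

```python
import math


def sigma_levels(sigma_min, sigma_max, n_sigma):
    """Linearly spaced scales from sigma_min to sigma_max, both included."""
    if n_sigma == 1:
        return [float(sigma_min)]
    step = (sigma_max - sigma_min) / (n_sigma - 1)
    return [sigma_min + i * step for i in range(n_sigma)]


for name, (lo, hi, n) in {"Image 1": (3, 6, 5), "Image 2": (15, 25, 5)}.items():
    sigmas = sigma_levels(lo, hi, n)
    radii = [round(math.sqrt(2) * s, 2) for s in sigmas]  # assumed r = sqrt(2) * sigma
    print(name, sigmas, radii)
```

Under these assumptions, Image 1 is searched at σ = 3, 3.75, 4.5, 5.25, and 6 pixels and Image 2 at σ = 15, 17.5, 20, 22.5, and 25 pixels, consistent with the lower flight altitude of Image 2 making crowns appear larger.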

| Processing Step | Image 1 | Image 2 |
|---|---|---|
| Before PB | 7640 | 1476 |
| After PB | 7565 | 1354 |
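
The drop from 7640 to 7565 blobs (Image 1) and from 1476 to 1354 blobs (Image 2) comes from the PB step. A hedged sketch of an overlap test governed by the OA threshold: it computes the intersection area of two circles with the standard circle-circle intersection formula and divides it by the area of the smaller circle, following the approach of scikit-image's blob pruning. The √2 σ radius, that denominator, and the rule of removing the smaller blob are assumptions, not confirmed details of this study; the function names are hypothetical.

```python
import math
from itertools import combinations


def circle_intersection_area(d, r1, r2):
    """Area where two circles with radii r1, r2 and centre distance d overlap."""
    if d >= r1 + r2:
        return 0.0                                # disjoint circles
    if d <= abs(r1 - r2):
        return math.pi * min(r1, r2) ** 2         # one circle inside the other
    a1 = r1 ** 2 * math.acos((d * d + r1 * r1 - r2 * r2) / (2 * d * r1))
    a2 = r2 ** 2 * math.acos((d * d + r2 * r2 - r1 * r1) / (2 * d * r2))
    tri = 0.5 * math.sqrt((-d + r1 + r2) * (d + r1 - r2) * (d - r1 + r2) * (d + r1 + r2))
    return a1 + a2 - tri


def overlap_ratio(b1, b2):
    """Overlap area divided by the smaller blob's area; blobs are (x, y, sigma)."""
    r1, r2 = math.sqrt(2) * b1[2], math.sqrt(2) * b2[2]   # assumed r = sqrt(2) * sigma
    d = math.hypot(b1[0] - b2[0], b1[1] - b2[1])
    return circle_intersection_area(d, r1, r2) / (math.pi * min(r1, r2) ** 2)


def prune_blobs(blobs, oa=0.2):
    """Drop the smaller blob of every pair whose overlap ratio exceeds oa."""
    removed = set()
    for i, j in combinations(range(len(blobs)), 2):     # one GPU thread per pair
        if overlap_ratio(blobs[i], blobs[j]) > oa:
            removed.add(j if blobs[i][2] > blobs[j][2] else i)
    return [b for k, b in enumerate(blobs) if k not in removed]


blobs = [(10.0, 10.0, 3.0), (11.0, 10.0, 4.0), (50.0, 50.0, 3.0)]
print(prune_blobs(blobs))  # [(11.0, 10.0, 4.0), (50.0, 50.0, 3.0)]
```

Every pair is tested against the original blob list and only writes a removal flag, which suits one-thread-per-pair execution; a sequential pass in which removed blobs stop suppressing others may keep slightly more blobs.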

| Image | Main Computation Device | Step 1: CT-Lab (ms) | Step 2: SSC (ms) | Step 3: LMD (ms) | Step 4: PB (ms) | Kernel Initialization (ms) | Total Time (ms) |
|---|---|---|---|---|---|---|---|
| 1 | CPU | 5137.60 | 4175.34 | 1398.84 | 33,660.95 | / | 44,418.80 |
| 1 | GPU | 157.67 | 308.92 | 149.51 | 154.22 | 175.13 | 992.22 |
| 1 | Speedup | 32.58 | 13.52 | 9.36 | 218.26 | / | 44.77 |
| 2 | CPU | 5086.84 | 16,338.77 | 1313.11 | 1119.63 | / | 23,905.13 |
| 2 | GPU | 153.05 | 317.89 | 137.60 | 3.97 | 178.41 | 837.62 |
| 2 | Speedup | 33.24 | 51.40 | 9.54 | 282.02 | / | 28.54 |
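
The speedup values in the table are consistent with dividing the CPU time by the GPU time, step by step and for the total. A short check using only the numbers reported in the table:

```python
# Times in ms as reported in the table: CT-Lab, SSC, LMD, PB, Total.
times = {
    "Image 1": {"cpu": [5137.60, 4175.34, 1398.84, 33660.95, 44418.80],
                "gpu": [157.67, 308.92, 149.51, 154.22, 992.22],
                "reported": [32.58, 13.52, 9.36, 218.26, 44.77]},
    "Image 2": {"cpu": [5086.84, 16338.77, 1313.11, 1119.63, 23905.13],
                "gpu": [153.05, 317.89, 137.60, 3.97, 837.62],
                "reported": [33.24, 51.40, 9.54, 282.02, 28.54]},
}

for image, t in times.items():
    speedups = [c / g for c, g in zip(t["cpu"], t["gpu"])]
    # Each ratio matches the reported speedup to within 0.01.
    assert all(abs(s - r) < 0.01 for s, r in zip(speedups, t["reported"]))
    print(image, [round(s, 2) for s in speedups])
```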

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jiang, H.; Chen, S.; Li, D.; Wang, C.; Yang, J. Papaya Tree Detection with UAV Images Using a GPU-Accelerated Scale-Space Filtering Method. Remote Sens. 2017, 9, 721. https://doi.org/10.3390/rs9070721
