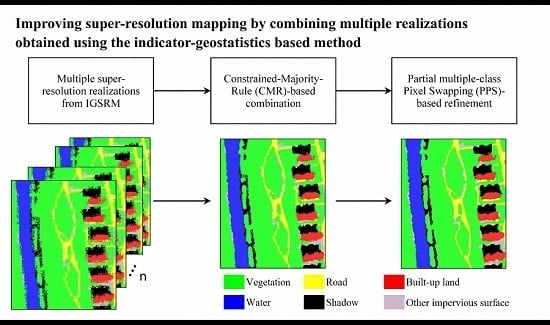

Improving Super-Resolution Mapping by Combining Multiple Realizations Obtained Using the Indicator-Geostatistics Based Method

Abstract

1. Introduction

2. IGSRM

3. Method

3.1. Combination of Multiple Super-Resolution Realizations Using the CMR

3.2. Refinement Using PPS

3.3. Method Evaluation

4. Experimental Results

4.1. Example 1: Urban Area

4.2. Example 2: Agricultural Area

5. Discussion

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Methods | Scale Factor | Thematic OA (%) | Thematic AD (%) | Thematic QD (%) | Geometric EE (%) | Geometric SE (%) |
|---|---|---|---|---|---|---|
| IGSRM ¹ | 3 | 90.64 | 9.36 | 0.00 | 21.50 | 14.88 |
| | 5 | 85.19 | 14.81 | 0.00 | 35.50 | 17.74 |
| | 7 | 80.69 | 19.31 | 0.00 | 45.88 | 18.93 |
| | 9 | 76.86 | 23.14 | 0.00 | 53.75 | 20.27 |
| M-SRM ² | 3 | 96.98 | 2.71 | 0.31 | 14.05 | 8.67 |
| | 5 | 91.44 | 6.68 | 1.58 | 29.95 | 15.93 |
| | 7 | 89.49 | 8.93 | 1.57 | 32.23 | 18.13 |
| | 9 | 85.96 | 11.45 | 2.60 | 38.47 | 19.52 |
| Proposed method, Step 1 (IGSRM + CMR-based combination) | 3 | 96.11 | 3.89 | 0.00 | 11.75 | 8.31 |
| | 5 | 92.56 | 7.44 | 0.00 | 20.63 | 11.24 |
| | 7 | 89.01 | 10.99 | 0.00 | 29.25 | 13.27 |
| | 9 | 85.52 | 14.48 | 0.00 | 36.17 | 14.76 |
| Proposed method, Step 2 (Step 1 + PPS-based refinement) | 3 | 98.07 | 1.93 | 0.00 | 7.04 | 4.62 |
| | 5 | 94.88 | 5.12 | 0.00 | 15.75 | 8.53 |
| | 7 | 91.38 | 8.62 | 0.00 | 23.94 | 11.11 |
| | 9 | 87.82 | 12.18 | 0.00 | 31.19 | 13.59 |
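
The AD and QD columns appear to follow the quantity/allocation decomposition of Pontius and Millones, in which the total disagreement (100 − OA) splits into quantity disagreement (QD, a mismatch between map and reference class proportions) and allocation disagreement (AD, correct proportions placed at the wrong locations). The sketch below is a minimal pure-Python illustration of that decomposition for a square confusion matrix. The function name `oa_qd_ad` and the rows-as-map, columns-as-reference convention are assumptions made for illustration; this is not code from the paper.

```python
def oa_qd_ad(confusion):
    """Return (OA, QD, AD) in percent for a square confusion matrix.

    Assumed convention (illustrative): confusion[i][j] counts fine-resolution
    pixels mapped as class i whose reference class is j
    (rows = map, columns = reference).
    """
    n = len(confusion)
    total = float(sum(sum(row) for row in confusion))
    # Convert pixel counts to proportions of the whole map
    p = [[count / total for count in row] for row in confusion]
    diag = [p[g][g] for g in range(n)]                           # correctly labelled share per class
    map_tot = [sum(p[g]) for g in range(n)]                       # class proportions in the map
    ref_tot = [sum(p[i][g] for i in range(n)) for g in range(n)]  # class proportions in the reference

    oa = sum(diag)
    # Quantity disagreement: mismatch in class proportions, halved so each pixel counts once
    qd = sum(abs(m - r) for m, r in zip(map_tot, ref_tot)) / 2
    # Allocation disagreement: errors that pairwise swaps of pixel labels could correct
    ad = sum(2 * min(m - d, r - d) for m, r, d in zip(map_tot, ref_tot, diag)) / 2
    return 100 * oa, 100 * qd, 100 * ad


oa, qd, ad = oa_qd_ad([[40, 10], [5, 45]])
print(f"OA={oa:.2f}  QD={qd:.2f}  AD={ad:.2f}  OA+QD+AD={oa + qd + ad:.2f}")
# prints: OA=85.00  QD=5.00  AD=10.00  OA+QD+AD=100.00
```

For a matrix such as `[[45, 5], [5, 45]]`, the map and reference class totals agree, so QD is 0 and the full 10% disagreement is allocation error. This is the pattern visible in the IGSRM and proposed-method rows, where QD is 0.00 at every scale factor.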

| Methods | Scale Factor | Thematic OA (%) | Thematic AD (%) | Thematic QD (%) | Geometric EE (%) | Geometric SE (%) |
|---|---|---|---|---|---|---|
| IGSRM ¹ | 3 | 96.94 | 3.06 | 0.00 | 9.60 | 19.14 |
| | 5 | 94.73 | 5.27 | 0.00 | 20.90 | 23.19 |
| | 7 | 92.59 | 7.42 | 0.00 | 34.27 | 25.51 |
| | 9 | 90.65 | 9.35 | 0.00 | 42.79 | 26.29 |
| M-SRM ² | 3 | 99.28 | 0.63 | 0.09 | 7.00 | 4.78 |
| | 5 | 97.81 | 1.85 | 0.34 | 19.82 | 9.71 |
| | 7 | 96.25 | 3.13 | 0.61 | 29.62 | 14.21 |
| | 9 | 94.70 | 4.41 | 0.89 | 37.35 | 18.27 |
| Proposed method, Step 1 (IGSRM + CMR-based combination) | 3 | 99.26 | 0.74 | 0.00 | 4.48 | 4.93 |
| | 5 | 97.83 | 2.17 | 0.00 | 11.07 | 10.48 |
| | 7 | 96.23 | 3.77 | 0.00 | 18.78 | 14.87 |
| | 9 | 94.59 | 5.41 | 0.00 | 26.66 | 18.19 |
| Proposed method, Step 2 (Step 1 + PPS-based refinement) | 3 | 99.36 | 0.64 | 0.00 | 4.26 | 4.16 |
| | 5 | 98.44 | 1.57 | 0.00 | 8.89 | 6.52 |
| | 7 | 97.15 | 2.85 | 0.00 | 15.77 | 9.13 |
| | 9 | 95.57 | 4.43 | 0.00 | 23.12 | 11.84 |
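
Because AD and QD together make up the total disagreement, every row of the two accuracy tables should satisfy OA + AD + QD ≈ 100, up to rounding of the two-decimal values. The following sketch checks that identity over the OA, AD, and QD values transcribed from both tables. The data layout, the labels "first table" and "second table", the function name `inconsistent_rows`, and the 0.02-point tolerance (three values, each rounded by at most 0.005) are assumptions made for illustration.

```python
# (scale factor, OA, AD, QD) in percent, transcribed from the two accuracy tables
TABLES = {
    "first table": {
        "IGSRM": [(3, 90.64, 9.36, 0.00), (5, 85.19, 14.81, 0.00),
                  (7, 80.69, 19.31, 0.00), (9, 76.86, 23.14, 0.00)],
        "M-SRM": [(3, 96.98, 2.71, 0.31), (5, 91.44, 6.68, 1.58),
                  (7, 89.49, 8.93, 1.57), (9, 85.96, 11.45, 2.60)],
        "Step 1": [(3, 96.11, 3.89, 0.00), (5, 92.56, 7.44, 0.00),
                   (7, 89.01, 10.99, 0.00), (9, 85.52, 14.48, 0.00)],
        "Step 2": [(3, 98.07, 1.93, 0.00), (5, 94.88, 5.12, 0.00),
                   (7, 91.38, 8.62, 0.00), (9, 87.82, 12.18, 0.00)],
    },
    "second table": {
        "IGSRM": [(3, 96.94, 3.06, 0.00), (5, 94.73, 5.27, 0.00),
                  (7, 92.59, 7.42, 0.00), (9, 90.65, 9.35, 0.00)],
        "M-SRM": [(3, 99.28, 0.63, 0.09), (5, 97.81, 1.85, 0.34),
                  (7, 96.25, 3.13, 0.61), (9, 94.70, 4.41, 0.89)],
        "Step 1": [(3, 99.26, 0.74, 0.00), (5, 97.83, 2.17, 0.00),
                   (7, 96.23, 3.77, 0.00), (9, 94.59, 5.41, 0.00)],
        "Step 2": [(3, 99.36, 0.64, 0.00), (5, 98.44, 1.57, 0.00),
                   (7, 97.15, 2.85, 0.00), (9, 95.57, 4.43, 0.00)],
    },
}


def inconsistent_rows(tables, tol=0.02):
    """Return rows whose OA + AD + QD differs from 100 by more than tol points."""
    flagged = []
    for table_name, methods in tables.items():
        for method, rows in methods.items():
            for scale, oa, ad, qd in rows:
                total = oa + ad + qd
                if abs(total - 100.0) > tol:
                    flagged.append((table_name, method, scale, round(total, 2)))
    return flagged


for row in inconsistent_rows(TABLES):
    print(row)
```

On these values, only the M-SRM row at scale factor 5 in the first table is flagged, with a sum of 99.70; which of its three values is off cannot be decided from the table alone.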

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Shi, Z.; Li, P.; Jin, H.; Tian, Y.; Chen, Y.; Zhang, X. Improving Super-Resolution Mapping by Combining Multiple Realizations Obtained Using the Indicator-Geostatistics Based Method. Remote Sens. 2017, 9, 773. https://doi.org/10.3390/rs9080773
