Development and Validation of a Short Sport Nutrition Knowledge Questionnaire for Athletes

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design

2.2. Study Development

2.2.1. Generation of Items

2.2.2. Content Validity

2.2.3. Pilot Study and Item Wording Improvement

2.2.4. Construct Validity

2.2.5. Reliability

2.2.6. Sample Size Calculation

2.2.7. Statistical Analysis

2.2.8. Rasch Analysis

3. Results

3.1. Subjects

3.2. Content Validity, Feasibility and Face-Validity

3.3. Construct Validity

3.3.1. Discriminant Validity

3.3.2. Item Difficulty and Discrimination

3.3.3. Sectional Analysis

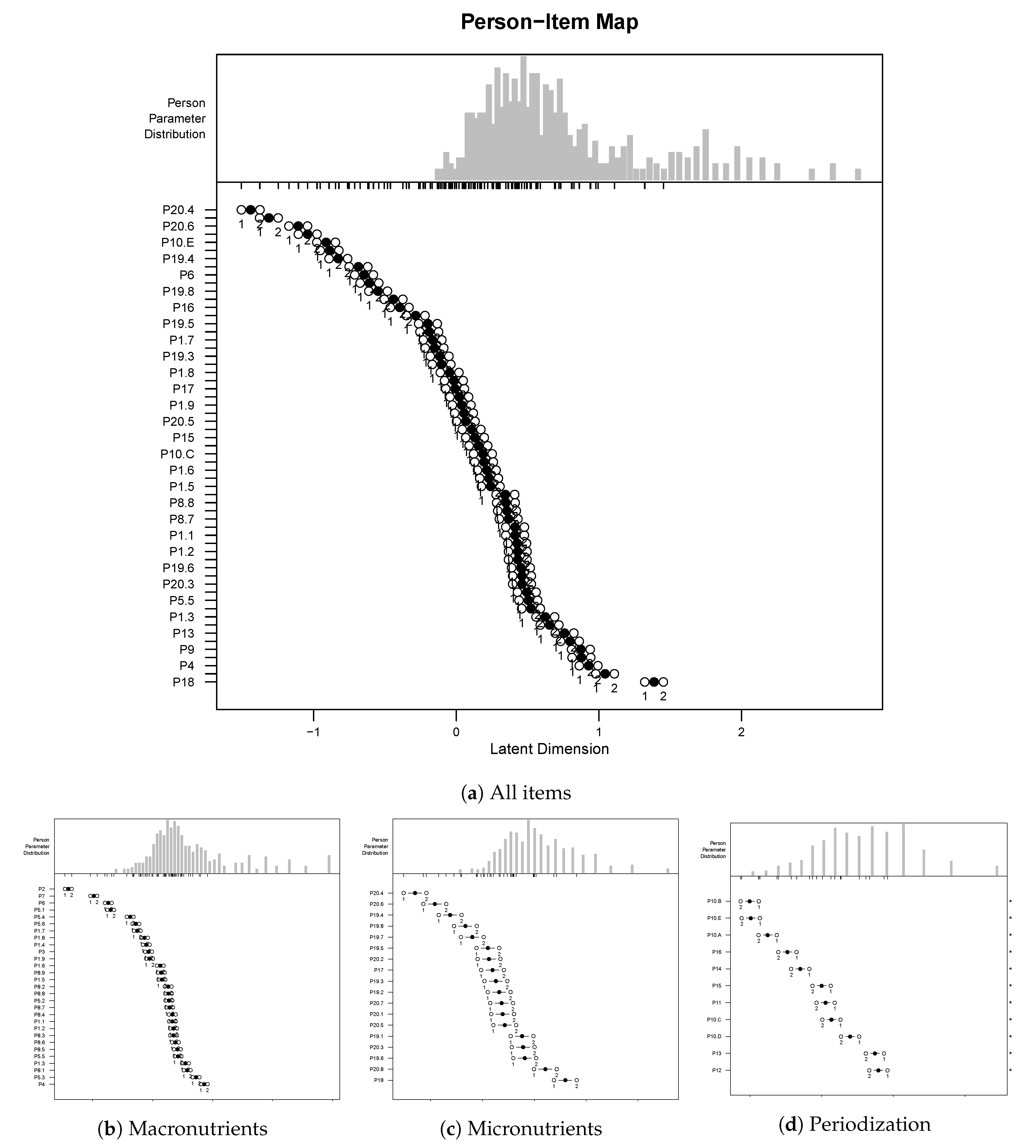

3.3.4. Rasch Analysis

3.4. Reliability

3.4.1. Internal Consistency

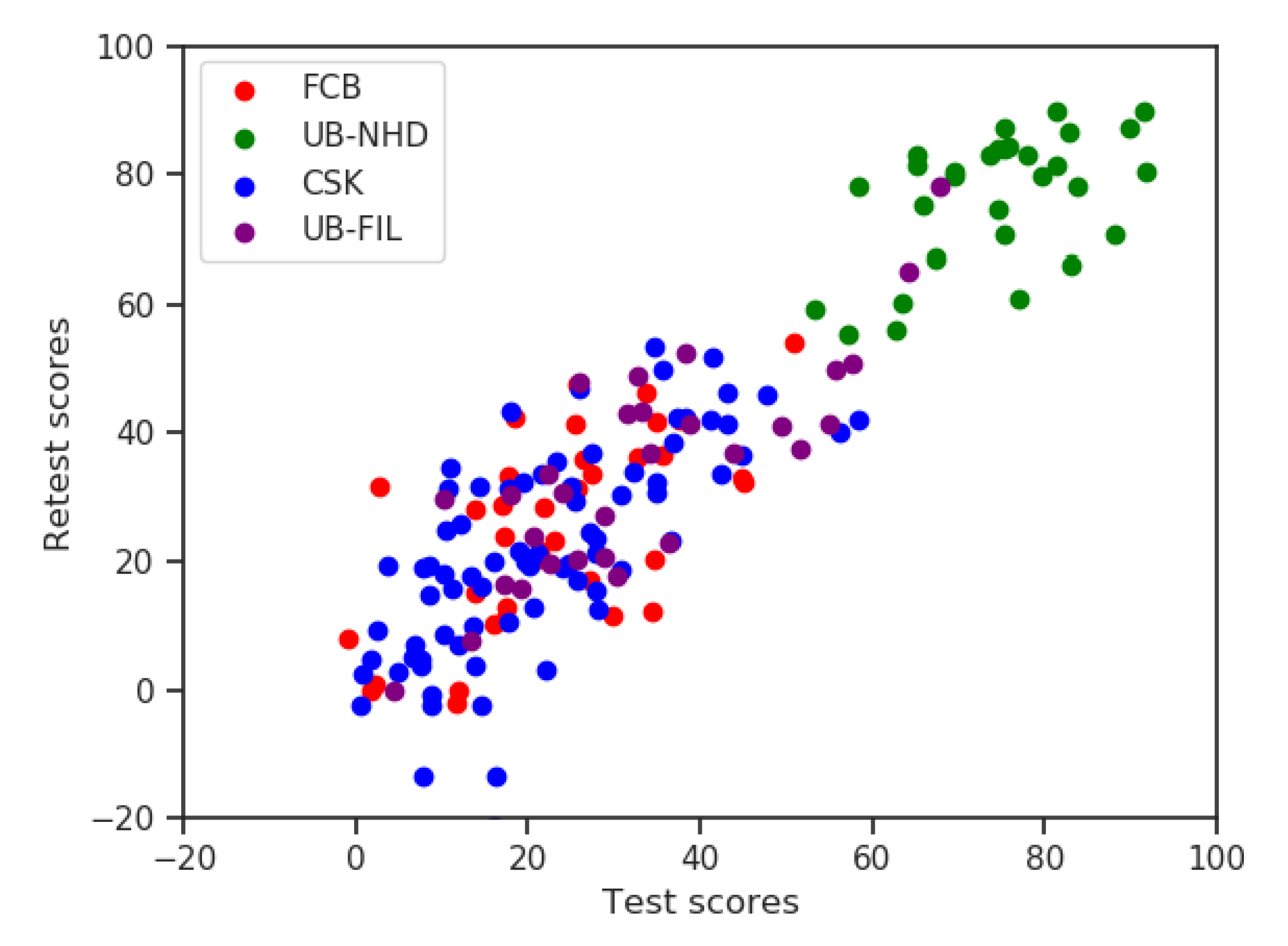

3.4.2. Stability

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CVI | Content validity index |
| I-CVI | Item content validity index |
| S-CVI | Scale content validity index |


| | | FCB | UB-NHD | CSK | UB-FIL |
|---|---|---|---|---|---|
| Test set | | 37 | 49 | 93 | 39 |
| Retest set | | 36 | 30 | 77 | 30 |
| Rasch set | | 264 | 49 | 93 | 39 |
| Gender (Rasch set) | Male | 234 (88.6%) | 15 (30.6%) | 42 (45.2%) | 27 (69.2%) |
| | Female | 30 (11.4%) | 34 (69.4%) | 51 (54.8%) | 12 (30.8%) |
| Age (Rasch set) | 13–15 | 80 (30.3%) | – | 88 (94.6%) | – |
| | 16–18 | 88 (33.3%) | – | 5 (5.4%) | 1 (2.6%) |
| | 19–21 | 32 (12.1%) | 2 (4.1%) | – | 7 (17.9%) |
| | 22–25 | 23 (8.7%) | 34 (69.4%) | – | 16 (41.0%) |
| | >25 | 18 (6.8%) | 13 (26.5%) | – | 15 (38.5%) |

| Item set | Index | Statistic | Relevance | Clarity | Ambiguity | Simplicity |
|---|---|---|---|---|---|---|
| Initial item set | I-CVI | min | 0.86 | 0.71 | 0.86 | 0.71 |
| | | max | 1.00 | 1.00 | 1.00 | 1.00 |
| | | Mean ± SD | | | | |
| | S-CVI | – | 0.92 | 0.81 | 0.92 | 0.81 |
| Reviewed item set | I-CVI | min | 0.86 | 0.86 | 0.86 | 0.86 |
| | | max | 1.00 | 1.00 | 1.00 | 1.00 |
| | | Mean ± SD | 0.99 ± 0.04 | 0.98 ± 0.05 | 0.99 ± 0.03 | 0.98 ± 0.05 |
| | S-CVI | – | 0.92 | 0.88 | 0.96 | 0.88 |

| Group | | Average | Std Dev s | 95% CI Lower Bound | 95% CI Upper Bound |
|---|---|---|---|---|---|
| UB-NHD | Test | 74.4 | 10.0 | 54.4 | 94.4 |
| UB-NHD | Retest | 76.6 | 10.1 | 56.5 | 96.7 |
| UB-FIL | Test | 33.4 | 15.9 | 1.6 | 65.2 |
| UB-FIL | Retest | 34.4 | 16.5 | 1.3 | 67.5 |
| FCB | Test | 23.3 | 12.2 | −1.1 | 47.7 |
| FCB | Retest | 25.3 | 14.6 | −4.0 | 54.6 |
| CSK | Test | 21.7 | 13.4 | −5.0 | 48.5 |
| CSK | Retest | 21.8 | 16.3 | −10.7 | 54.4 |
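
The lower and upper bounds in the table above appear to equal the group average plus or minus two standard deviations (for example, 74.4 ± 2 × 10.0 gives 54.4 and 94.4). The sketch below is not taken from the article. It only checks that reading against the tabulated values. The function names and the 0.15 rounding tolerance are assumptions made for illustration.

```python
# Rows transcribed from the table above:
# (group, occasion, average, std_dev, lower_bound, upper_bound)
ROWS = [
    ("UB-NHD", "Test", 74.4, 10.0, 54.4, 94.4),
    ("UB-NHD", "Retest", 76.6, 10.1, 56.5, 96.7),
    ("UB-FIL", "Test", 33.4, 15.9, 1.6, 65.2),
    ("UB-FIL", "Retest", 34.4, 16.5, 1.3, 67.5),
    ("FCB", "Test", 23.3, 12.2, -1.1, 47.7),
    ("FCB", "Retest", 25.3, 14.6, -4.0, 54.6),
    ("CSK", "Test", 21.7, 13.4, -5.0, 48.5),
    ("CSK", "Retest", 21.8, 16.3, -10.7, 54.4),
]


def two_sd_bounds(average, std_dev):
    """Return (average - 2*std_dev, average + 2*std_dev)."""
    return average - 2 * std_dev, average + 2 * std_dev


def max_abs_deviation(rows):
    """Largest gap between the tabulated bounds and average +/- 2*std_dev."""
    worst = 0.0
    for _group, _occasion, average, std_dev, lower, upper in rows:
        calc_lower, calc_upper = two_sd_bounds(average, std_dev)
        worst = max(worst, abs(calc_lower - lower), abs(calc_upper - upper))
    return worst


if __name__ == "__main__":
    # Hypothetical tolerance: the published figures are rounded to one decimal.
    tolerance = 0.15
    deviation = max_abs_deviation(ROWS)
    print(f"largest deviation from average +/- 2*std_dev: {deviation:.2f}")
    print("consistent within tolerance:", deviation < tolerance)
```

If this reading is right, the negative lower bounds for FCB and CSK follow from the wide spread of scores relative to their low averages. They do not imply that negative scores were observed.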

| | Macronutrients | Micronutrients | Hydration | Food Intake Periodicity |
|---|---|---|---|---|
| CSK | | | | |
| FCB | | | | |
| UB-FIL | | | | |
| UB-NHD | | | | |
| Global | | | | |

| | Separation Reliability | Observed Variance | Mean Square Measurement Error |
|---|---|---|---|
| All items | 0.8610 | 0.2677 | 0.0372 |
| Macronutrients | 0.8577 | 0.5368 | 0.0818 |
| Micronutrients | 0.6416 | 0.3659 | 0.1297 |
| Inner-periodization | 0.6398 | 0.5779 | 0.2122 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Vázquez-Espino, K.; Fernández-Tena, C.; Lizarraga-Dallo, M.A.; Farran-Codina, A. Development and Validation of a Short Sport Nutrition Knowledge Questionnaire for Athletes. Nutrients 2020, 12, 3561. https://doi.org/10.3390/nu12113561
