Current Developments in Digital Quantitative Volume Estimation for the Optimisation of Dietary Assessment

Abstract

1. Introduction

2. Complexity of the Southeast Asian Diet

2.1. Meal Settings and Eating Practices

2.2. Personalization of Meals

3. Limitations of Current Dietary Recording Methods

4. Digitization of Dietary Collection Methods

4.1. Feasibility of Going Digital

4.2. Benefits of Digital Healthcare Solutions

4.3. Limitations of Digital Healthcare Solutions

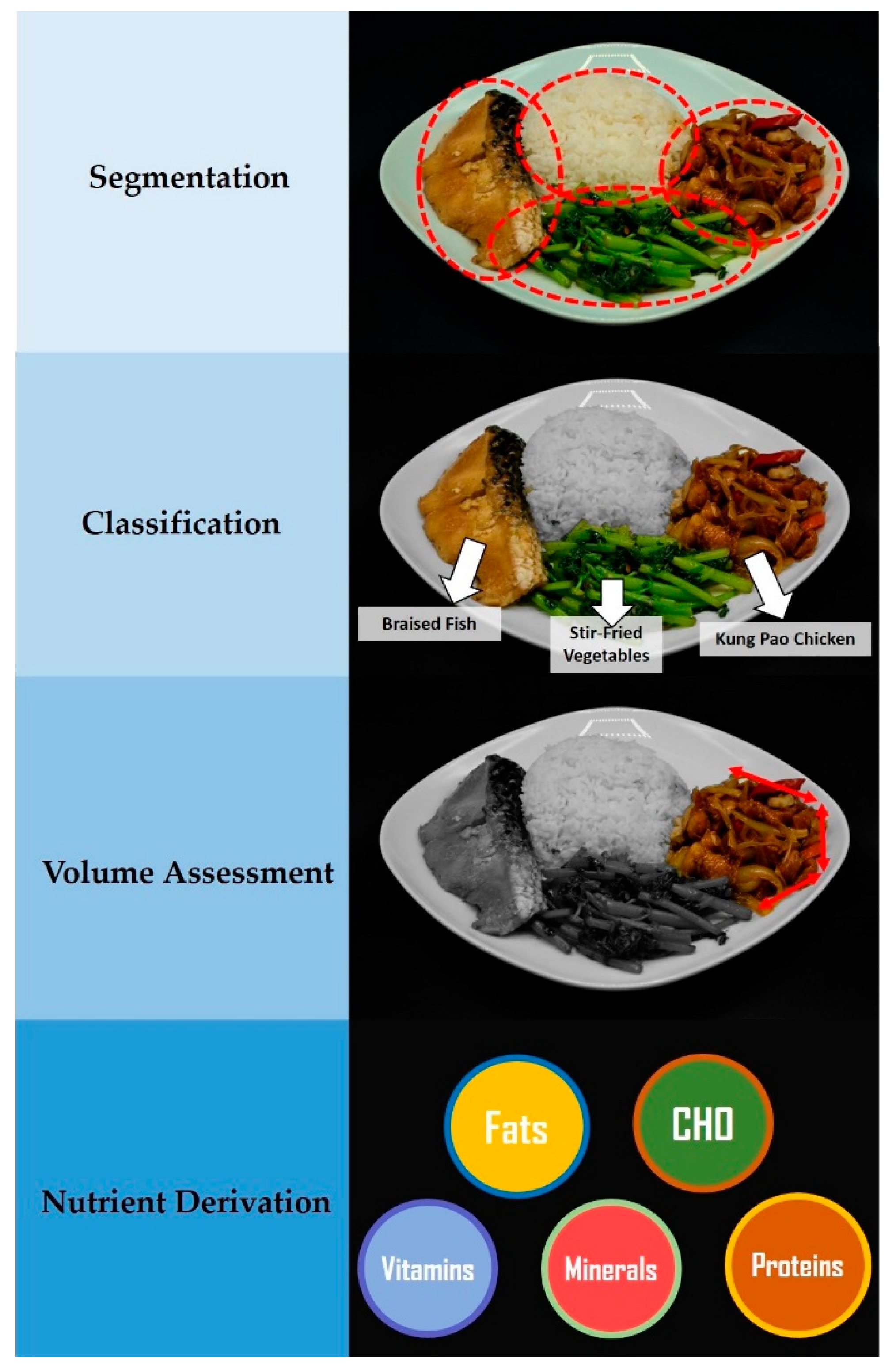

5. Recent Developments in Food Volume Estimation

5.1. Scale Calibration Principles

5.1.1. Physical Fiducial Markers

5.1.2. Digital Scale Calibration

5.2. Volume Mapping

5.2.1. Pixel Density

5.2.2. Geometric Modelling

5.2.3. Machine and Deep Learning

5.2.4. Depth Mapping

5.3. Database Dependency

6. Application to the Southeast Asian Consumer

7. Conclusions and Recommendations

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Tay, W.; Kaur, B.; Quek, R.; Lim, J.; Henry, C.J. Current Developments in Digital Quantitative Volume Estimation for the Optimisation of Dietary Assessment. Nutrients 2020, 12, 1167. https://doi.org/10.3390/nu12041167
