Holographic Microwave Image Classification Using a Convolutional Neural Network

Abstract

1. Introduction

2. Materials and Method

2.1. Convolutional Neural Network

2.2. Datasets

2.3. Training and Testing Data

2.3.1. Image Segmentation

2.3.2. Image Labeling

2.4. Network Architecture

2.4.1. Modified AlexNet

2.4.2. Transfer Learning

2.5. Data Analysis and Image Processing

2.6. Performance Metrics

3. Results and Discussion

3.1. Results

3.2. Discussion

4. Conclusions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References


| Number | Phantom Class | Quantity | Color model | Size |
|---|---|---|---|---|
| No. 1 | I: fatty | 253 | RGB | |
| No. 2 | I: fatty | 288 | RGB | |
| No. 3 | II: dense | 307 | RGB | |
| No. 4 | II: dense | 270 | RGB | |
| No. 5 | II: dense | 251 | RGB | |
| No. 6 | III: heterogeneously dense | 202 | RGB | |
| No. 7 | III: heterogeneously dense | 248 | RGB | |
| No. 8 | III: heterogeneously dense | 273 | RGB | |
| No. 9 | IV: very dense | 212 | RGB | |
| No. 10 | V: very dense breast containing two tumors | 212 | RGB | |
| No. 11 | V: very dense breast containing two tumors | 212 | RGB | |
| No. 12 | V: fatty breast containing two tumors | 253 | RGB | |

| Dataset | 1 | 2 |
|---|---|---|
| Modality | Real part of HMI breast images | Imaginary part of HMI breast images |
| Number of phantoms | 12 | 12 |
| Number of image classes | 5 | 5 |
| Number of HMI images | 1379 | 1379 |
| Image size | 227 × 227 × 3 | 227 × 227 × 3 |
| Number of training images | 966 | 966 |
| Number of validation images | 275 | 275 |
| Number of test images | 138 | 138 |
| Number of Class I images | 160 | 160 |
| Number of Class II images | 457 | 457 |
| Number of Class III images | 444 | 444 |
| Number of Class IV images | 108 | 108 |
| Number of Class V images | 210 | 210 |
| Cross-validation | 8-fold | 8-fold |
| Maximum number of epochs | 50 | 50 |
| Mini-batch size | 25 | 25 |
| Validation frequency | 30 | 30 |
| Initial learning rate | 0.0003 | 0.0003 |
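The counts and settings in the dataset table can be cross-checked with a little arithmetic. The sketch below is a reader-side check, not the author's code: it confirms that the three subsets and the five classes each sum to 1379 images, reports the split proportions, and estimates the iteration schedule. It ASSUMES the validation frequency is counted in iterations and that the incomplete final mini-batch of each epoch is discarded (this is how MATLAB's `trainingOptions` documents `MiniBatchSize`, but the training framework is not stated here).

```python
# Counts from the dataset table (identical for Dataset 1 and Dataset 2).
TOTAL_IMAGES = 1379
SPLIT = {"train": 966, "validation": 275, "test": 138}
CLASS_COUNTS = {"I": 160, "II": 457, "III": 444, "IV": 108, "V": 210}

# Training settings from the same table.
MAX_EPOCHS = 50
BATCH_SIZE = 25
VALIDATION_FREQUENCY = 30  # ASSUMED to be measured in iterations


def split_fractions(split, total):
    """Share of the full image set assigned to each subset."""
    if sum(split.values()) != total:
        raise ValueError("subsets do not partition the image set")
    return {name: n / total for name, n in split.items()}


def iteration_schedule(n_train, batch_size, epochs, val_freq):
    """Iterations per epoch, total iterations, and scheduled validation passes.

    ASSUMPTION: the incomplete final mini-batch of each epoch is discarded
    (floor division); a framework that keeps it would use ceiling division.
    """
    per_epoch = n_train // batch_size
    total = per_epoch * epochs
    return per_epoch, total, total // val_freq


if __name__ == "__main__":
    assert sum(CLASS_COUNTS.values()) == TOTAL_IMAGES
    for name, share in split_fractions(SPLIT, TOTAL_IMAGES).items():
        print(f"{name:>10}: {share:.1%}")
    print(iteration_schedule(SPLIT["train"], BATCH_SIZE, MAX_EPOCHS,
                             VALIDATION_FREQUENCY))
```

Under these assumptions the split is roughly 70/20/10 (70.1%, 19.9%, 10.0%), and training runs 38 iterations per epoch, 1900 iterations in total, with 63 scheduled validation passes.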

| No. | Name | Type | Activations | Weights & Bias |
|---|---|---|---|---|
| 1 | data | Image input | 227 × 227 × 3 | |
| 2 | conv1 | Convolution | 55 × 55 × 96 | Weights: 11 × 11 × 3 × 96; bias: 1 × 1 × 96 |
| 3 | relu1 | ReLU | 55 × 55 × 96 | |
| 4 | norm1 | Cross-channel normalization | 55 × 55 × 96 | |
| 5 | pool1 | Max pooling | 27 × 27 × 96 | |
| 6 | conv2 | Grouped convolution | 27 × 27 × 256 | Weights: 5 × 5 × 48 × 128 × 2; bias: 1 × 1 × 128 × 2 |
| 7 | relu2 | ReLU | 27 × 27 × 256 | |
| 8 | norm2 | Cross-channel normalization | 27 × 27 × 256 | |
| 9 | pool2 | Max pooling | 13 × 13 × 256 | |
| 10 | conv3 | Convolution | 13 × 13 × 384 | Weights: 3 × 3 × 256 × 384; bias: 1 × 1 × 384 |
| 11 | relu3 | ReLU | 13 × 13 × 384 | |
| 12 | conv4 | Grouped convolution | 13 × 13 × 384 | Weights: 3 × 3 × 192 × 192 × 2; bias: 1 × 1 × 192 × 2 |
| 13 | relu4 | ReLU | 13 × 13 × 384 | |
| 14 | conv5 | Grouped convolution | 13 × 13 × 256 | Weights: 3 × 3 × 192 × 128 × 2; bias: 1 × 1 × 128 × 2 |
| 15 | relu5 | ReLU | 13 × 13 × 256 | |
| 16 | pool5 | Max pooling | 6 × 6 × 256 | |
| 17 | fc6 | Fully connected | 1 × 1 × 4096 | Weights: 4096 × 9216; bias: 4096 × 1 |
| 18 | relu6 | ReLU | 1 × 1 × 4096 | |
| 19 | drop6 | Dropout | 1 × 1 × 4096 | |
| 20 | fc7 | Fully connected | 1 × 1 × 4096 | Weights: 4096 × 4096; bias: 4096 × 1 |
| 21 | relu7 | ReLU | 1 × 1 × 4096 | |
| 22 | drop7 | Dropout | 1 × 1 × 4096 | |
| 23 | fc8 | Fully connected | 1 × 1 × 4 | Weights: 4 × 4096; bias: 4 × 1 |
| 24 | softmax | SoftMax | 1 × 1 × 4 | |
| 25 | output | Classification output | | |
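The activation sizes in the layer table follow from the standard output-size rule for a convolution or pooling window, out = ⌊(n + 2p − k)/s⌋ + 1. The sketch below traces a 227 × 227 × 3 input through the feature extractor and counts learnable parameters, including grouped convolutions. Kernel sizes and channel counts come from the table; the strides and paddings are ASSUMED from the standard AlexNet definition, and `LAYERS`, `trace`, and `fc_params` are hypothetical helper names for illustration only.

```python
# Kernel sizes and channels follow the layer table; strides and paddings are
# ASSUMED from the standard AlexNet definition (not stated in the table).
# Each entry: (name, kind, kernel, stride, padding, out_channels, groups)
LAYERS = [
    ("conv1", "conv", 11, 4, 0, 96, 1),
    ("pool1", "pool", 3, 2, 0, None, 1),
    ("conv2", "conv", 5, 1, 2, 256, 2),
    ("pool2", "pool", 3, 2, 0, None, 1),
    ("conv3", "conv", 3, 1, 1, 384, 1),
    ("conv4", "conv", 3, 1, 1, 384, 2),
    ("conv5", "conv", 3, 1, 1, 256, 2),
    ("pool5", "pool", 3, 2, 0, None, 1),
]


def out_size(n, kernel, stride, pad):
    """Output height/width of a square convolution or pooling window."""
    return (n + 2 * pad - kernel) // stride + 1


def trace(input_hw=227, input_c=3):
    """Return per-layer (name, H=W, channels, learnable params) and the
    flattened feature length that feeds the first fully connected layer."""
    hw, c = input_hw, input_c
    rows = []
    for name, kind, k, s, p, c_out, groups in LAYERS:
        hw = out_size(hw, k, s, p)
        params = 0
        if kind == "conv":
            # Each group sees c/groups input channels and produces c_out/groups
            # filters, so weights total k*k*(c/groups)*c_out; one bias per filter.
            params = k * k * (c // groups) * c_out + c_out
            c = c_out
        rows.append((name, hw, c, params))
    return rows, hw * hw * c


def fc_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


if __name__ == "__main__":
    rows, flat = trace()
    for name, hw, c, params in rows:
        print(f"{name:>6}: {hw} x {hw} x {c}  ({params} params)")
    print("flattened input to fc6:", flat)  # 9216 = 6 * 6 * 256
    print("fc6 params:", fc_params(flat, 4096))
```

The trace reproduces the spatial sizes 55, 27, 13, and 6 listed in the table, and shows why fc6 takes a 9216-element input (6 × 6 × 256). The grouped layers store their weights per group, which is why their weight and bias shapes carry a trailing factor of 2.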

| Architecture | Accuracy | Training Time | Result |

|---|---|---|---|

| MobileNet-v2 | 96.84% | 28 min 38 s |  |

| DenseNet201 | 96.01% | 132 min 25 s |  |

| SqueezeNet | 92.98% | 16 min 3 s |  |

| Inception-v3 | 86.24% | 11 mins 30 s |  |

| ResNet101 | 84.73.% | 43 min 5 s |  |

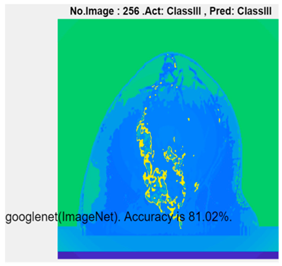

| GoogLeNet | 81.02% | 7 min 48 s |  |

| AlexNet | 80.33% | 5 min 39 s |  |

| ResNet50 | 78.40% | 36 min 16 s |  |

| ResNet18 | 77.30% | 11 min 45 s |  |

| Inception-ResNet-v2 | 73.18% | 106 mins 48 s |  |
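One way to read the comparison table is as an accuracy versus training-time trade-off. The sketch below is a reader-side illustration, not an analysis from the paper: it copies the ten rows above and keeps each architecture that is more accurate than every faster one (a Pareto front over the two columns). It assumes no two entries share the same training time, which holds for this table, and training times are of course specific to the hardware used.

```python
# (architecture, accuracy %, training time as (minutes, seconds)), copied from the table.
RESULTS = [
    ("MobileNet-v2", 96.84, (28, 38)),
    ("DenseNet201", 96.01, (132, 25)),
    ("SqueezeNet", 92.98, (16, 3)),
    ("Inception-v3", 86.24, (11, 30)),
    ("ResNet101", 84.73, (43, 5)),
    ("GoogLeNet", 81.02, (7, 48)),
    ("AlexNet", 80.33, (5, 39)),
    ("ResNet50", 78.40, (36, 16)),
    ("ResNet18", 77.30, (11, 45)),
    ("Inception-ResNet-v2", 73.18, (106, 48)),
]


def to_seconds(mm_ss):
    """Convert a (minutes, seconds) pair to seconds."""
    minutes, seconds = mm_ss
    return 60 * minutes + seconds


def pareto_front(results):
    """Names of architectures that no other entry beats on both accuracy
    (higher is better) and training time (lower is better)."""
    fastest_first = sorted(results, key=lambda r: to_seconds(r[2]))
    front, best_accuracy = [], float("-inf")
    for name, accuracy, _ in fastest_first:
        # Keep a model only if it is more accurate than every faster one.
        if accuracy > best_accuracy:
            front.append(name)
            best_accuracy = accuracy
    return front


if __name__ == "__main__":
    print(pareto_front(RESULTS))
```

On these figures the front is AlexNet, GoogLeNet, Inception-v3, SqueezeNet, and MobileNet-v2; DenseNet201, for example, trains about 4.6 times longer than MobileNet-v2 for slightly lower accuracy.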

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, L. Holographic Microwave Image Classification Using a Convolutional Neural Network. Micromachines 2022, 13, 2049. https://doi.org/10.3390/mi13122049
