Attachable Inertial Device with Machine Learning toward Head Posture Monitoring in Attention Assessment

Abstract

1. Introduction

2. Method

2.1. Design of the Circuit Schematic Diagram

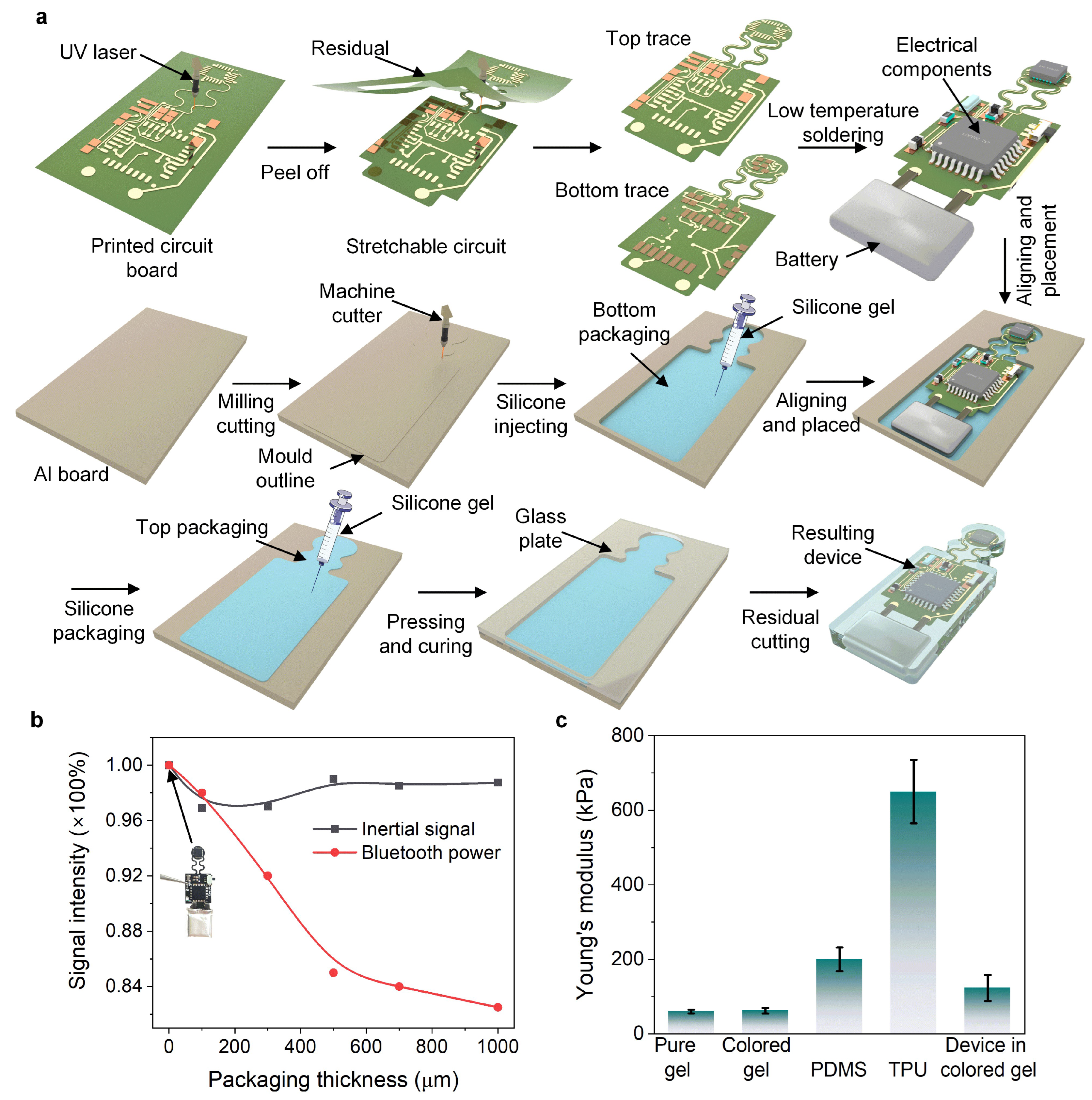

2.2. Fabrication of the Attachable Inertial Device

2.3. Testing Process for Head Postures

3. Results and Discussion

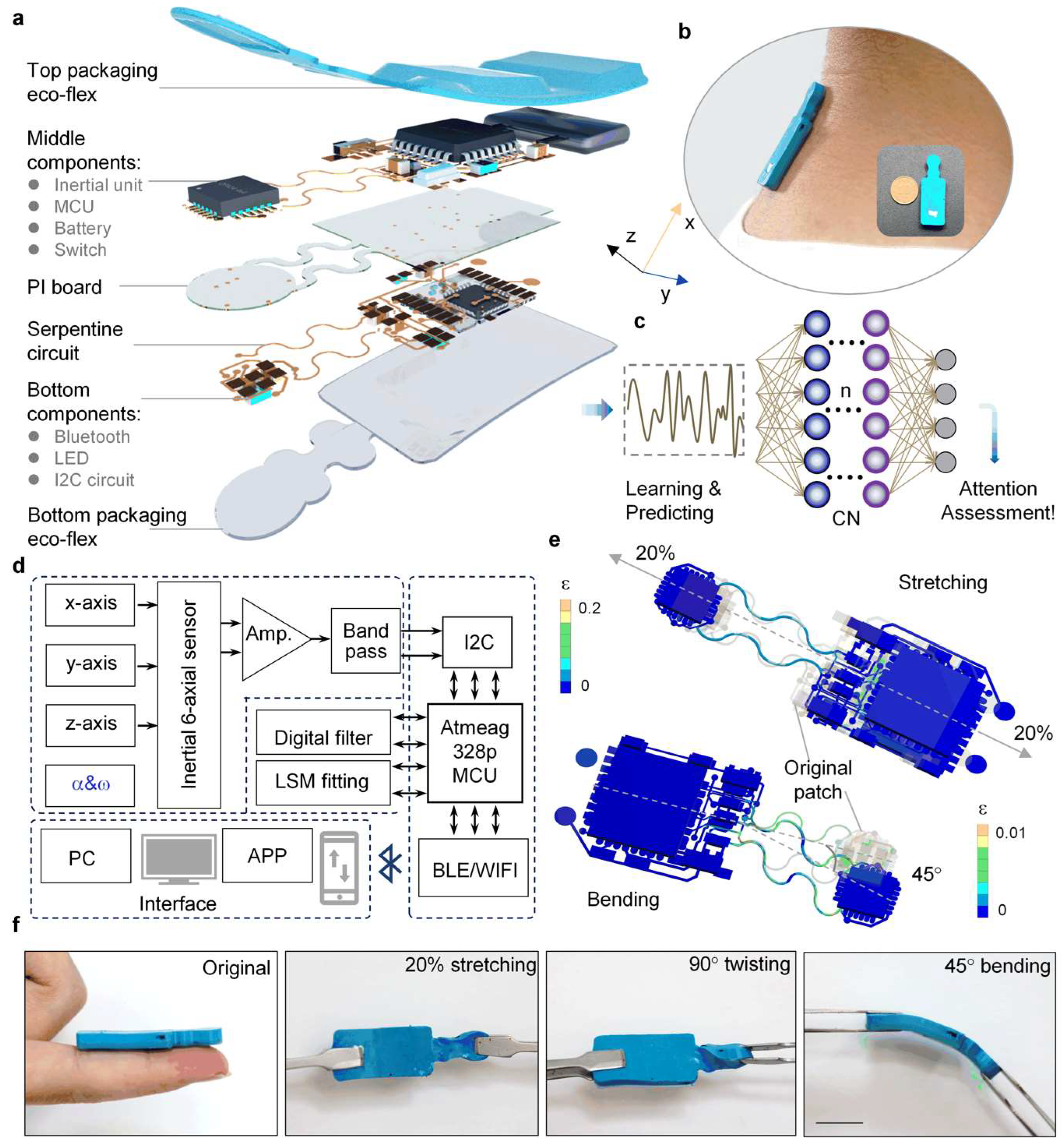

3.1. Design of the Inertial Device

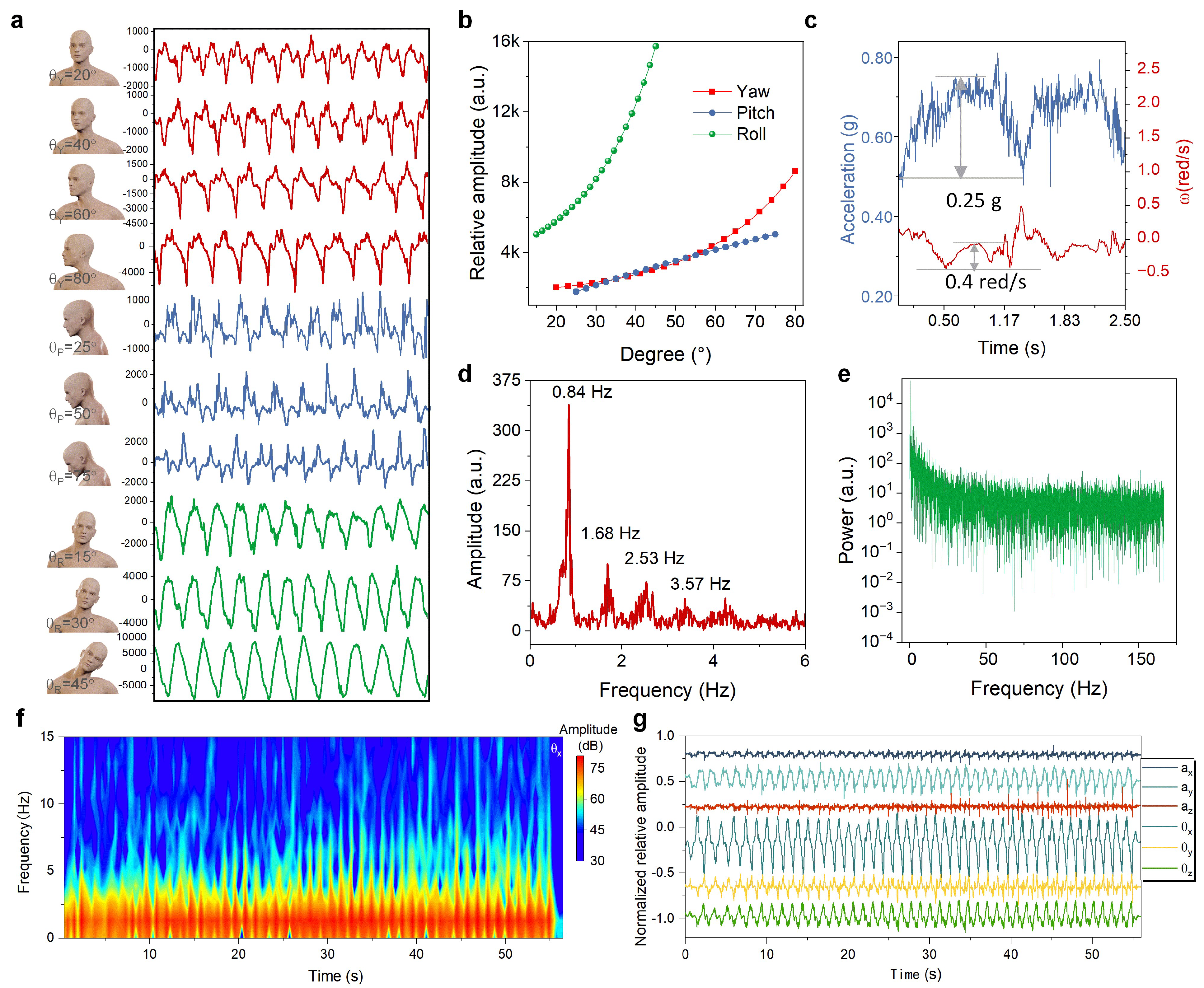

3.2. Inertial Measurements of Head Posture

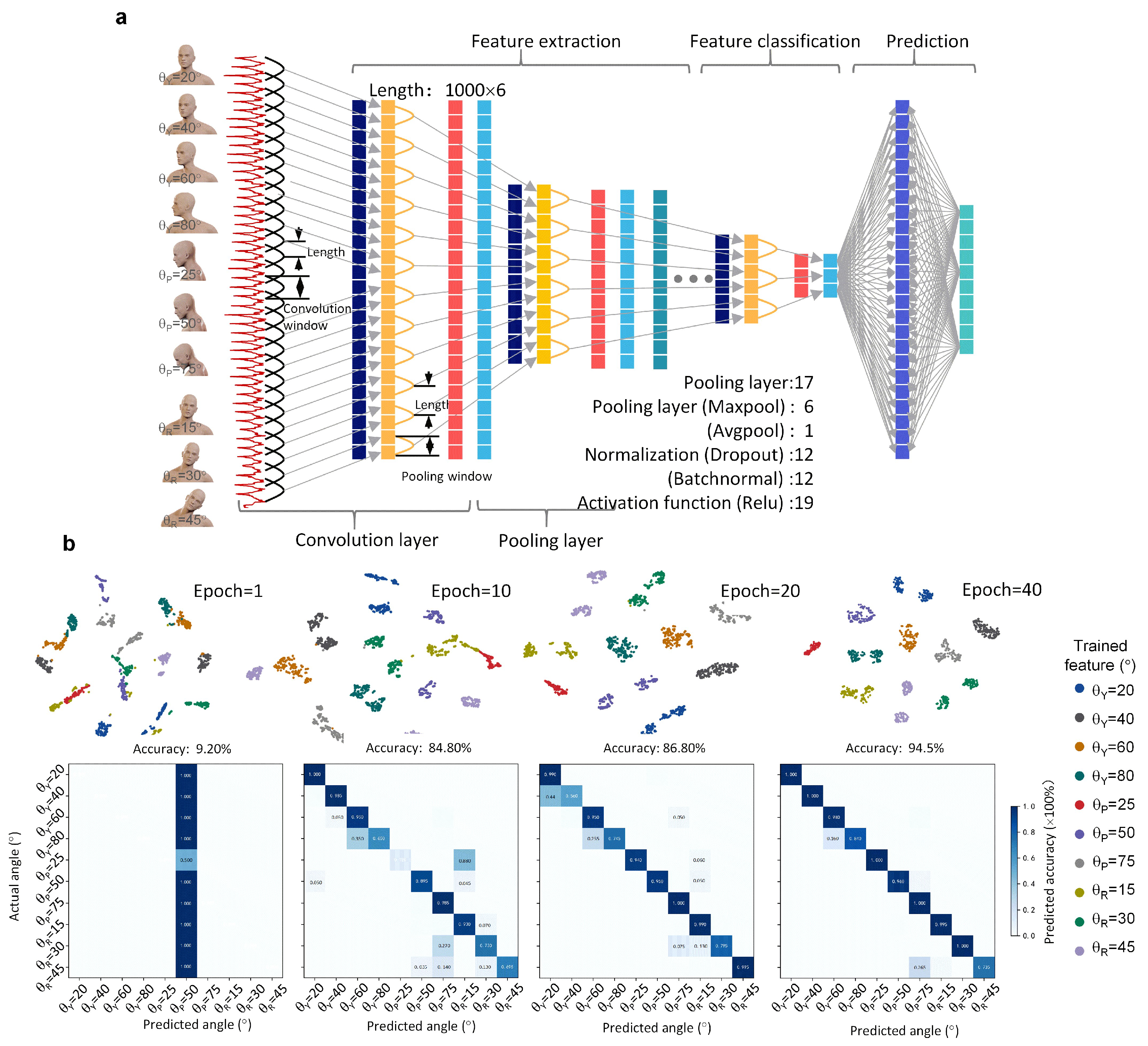

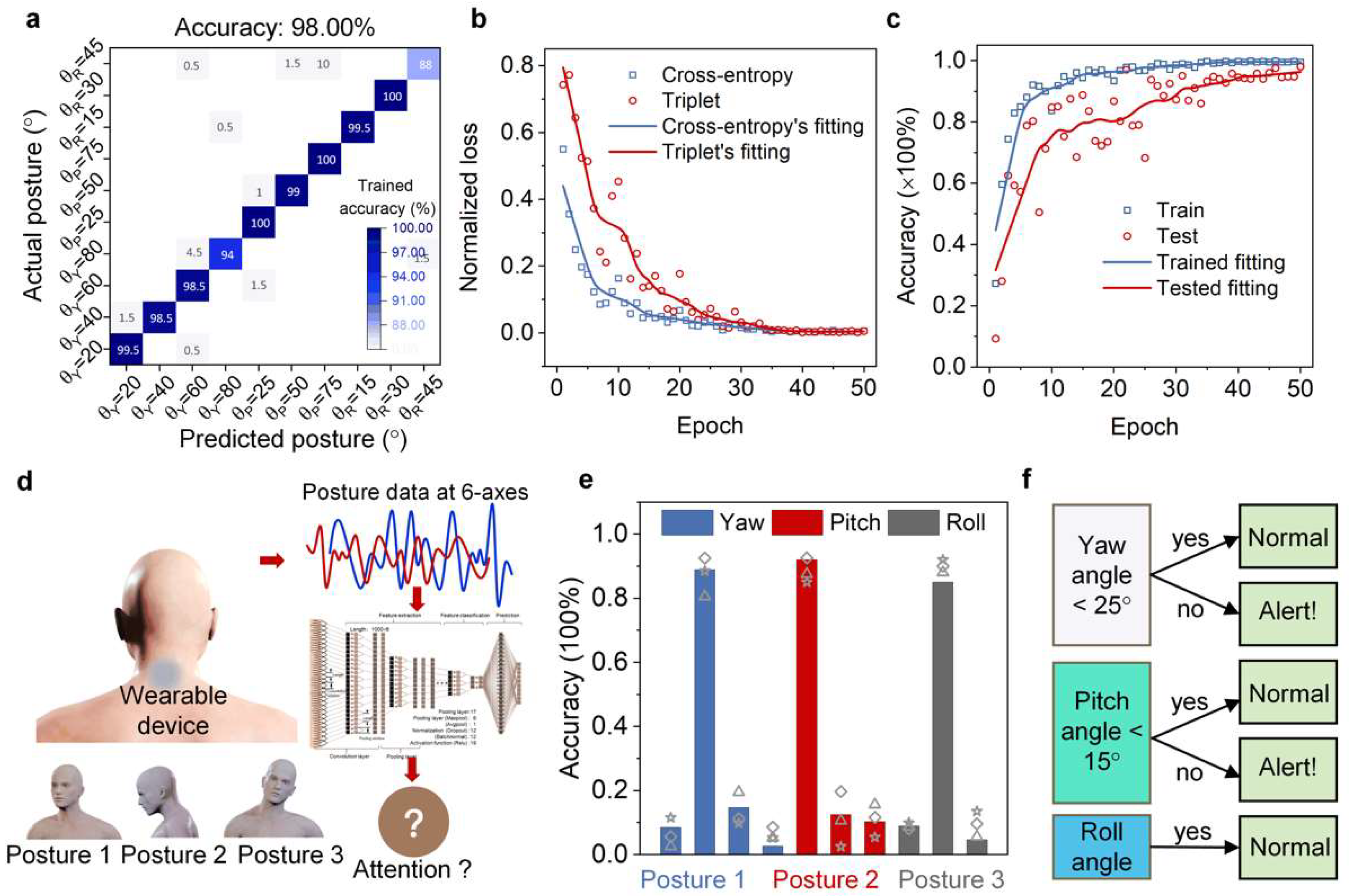
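This section presents the inertial measurements only as figures. The sketch below shows one common way to recover a static head tilt from such data: it converts a single 3-axis accelerometer reading into pitch and roll angles using the standard gravity-vector formulas. It is not the device's documented processing. The axis convention (x forward, y left, z up) and the function name `tilt_from_accel` are assumptions for illustration.

```python
import math
from typing import Tuple


def tilt_from_accel(ax: float, ay: float, az: float) -> Tuple[float, float]:
    """Return (pitch, roll) in degrees from one static accelerometer reading.

    Assumes the head is still, so the sensor measures only the reaction to
    gravity, and assumes an x-forward, y-left, z-up sensor frame (hypothetical;
    the device's real mounting orientation is not given here). Units of
    ax, ay, az do not matter as long as they are consistent (g or m/s^2).
    """
    # Pitch: rotation about the y-axis, from the forward component against
    # the magnitude of the other two components.
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    # Roll: rotation about the x-axis, from the lateral and vertical components.
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


if __name__ == "__main__":
    # Hypothetical reading (in g): sensor level, head upright.
    print(tilt_from_accel(0.0, 0.0, 1.0))
```

Gravity alone cannot reveal yaw (turning the head left or right), so head-orientation trackers typically fuse gyroscope data, and sometimes magnetometer data, with the accelerometer.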

3.3. Machine Learning in Head Posture Estimation
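The specific model the authors used is not given in this text. For a minimal illustration of the estimation step, the sketch below substitutes a nearest-centroid classifier, one of the simplest supervised methods. It maps (pitch, roll) features to posture labels. The feature choice, the labels (`upright`, `head_down`, `tilt_right`), the training values, and the helper names `fit_centroids` and `predict` are all hypothetical and do not represent the paper's data or method.

```python
import math
from typing import Dict, List, Sequence, Tuple

Feature = Tuple[float, float]  # hypothetical feature: (pitch_deg, roll_deg)


def fit_centroids(samples: Sequence[Feature], labels: Sequence[str]) -> Dict[str, Feature]:
    """Average the feature vectors of each posture label into one centroid."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for (pitch, roll), label in zip(samples, labels):
        acc = sums.setdefault(label, [0.0, 0.0])
        acc[0] += pitch
        acc[1] += roll
        counts[label] = counts.get(label, 0) + 1
    return {label: (acc[0] / counts[label], acc[1] / counts[label])
            for label, acc in sums.items()}


def predict(centroids: Dict[str, Feature], x: Feature) -> str:
    """Return the label whose centroid is closest to x in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], x))


if __name__ == "__main__":
    # Hypothetical training data, not measurements from the paper.
    train = [(0.0, 0.0), (2.0, -1.0), (30.0, 0.0), (28.0, 2.0), (0.0, 25.0), (1.0, 27.0)]
    labels = ["upright", "upright", "head_down", "head_down", "tilt_right", "tilt_right"]
    centroids = fit_centroids(train, labels)
    print(predict(centroids, (29.0, 1.0)))  # head_down
```

A nearest-centroid rule was chosen here only because it is short and easy to inspect. Models trained on richer time-window features can capture patterns this sketch cannot.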

3.4. Applications for Attention Assessment

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Peng, Y.; He, C.; Xu, H. Attachable Inertial Device with Machine Learning toward Head Posture Monitoring in Attention Assessment. Micromachines 2022, 13, 2212. https://doi.org/10.3390/mi13122212


Chicago/Turabian Style: Peng, Ying, Chao He, and Hongcheng Xu. 2022. "Attachable Inertial Device with Machine Learning toward Head Posture Monitoring in Attention Assessment." Micromachines 13, no. 12: 2212. https://doi.org/10.3390/mi13122212
