Lane-GAN: A Robust Lane Detection Network for Driver Assistance System in High Speed and Complex Road Conditions

Abstract

1. Introduction

- A blurred lane line dataset is provided in this article.

- An improved GAN is used to enhance lane features and improve the detection of blurred lanes in complex road environments.

- The proposed algorithm performs well in complex road conditions (curved lanes, dirty lane lines, illumination changes, and occlusions) and achieves higher detection accuracy than existing state-of-the-art detectors in high-speed and complex road conditions.

2. Related Works

3. Blurred Lane Line Enhancement and Detection Algorithm

3.1. Constructing Blurred Dataset

3.2. Blur Image Enhancement

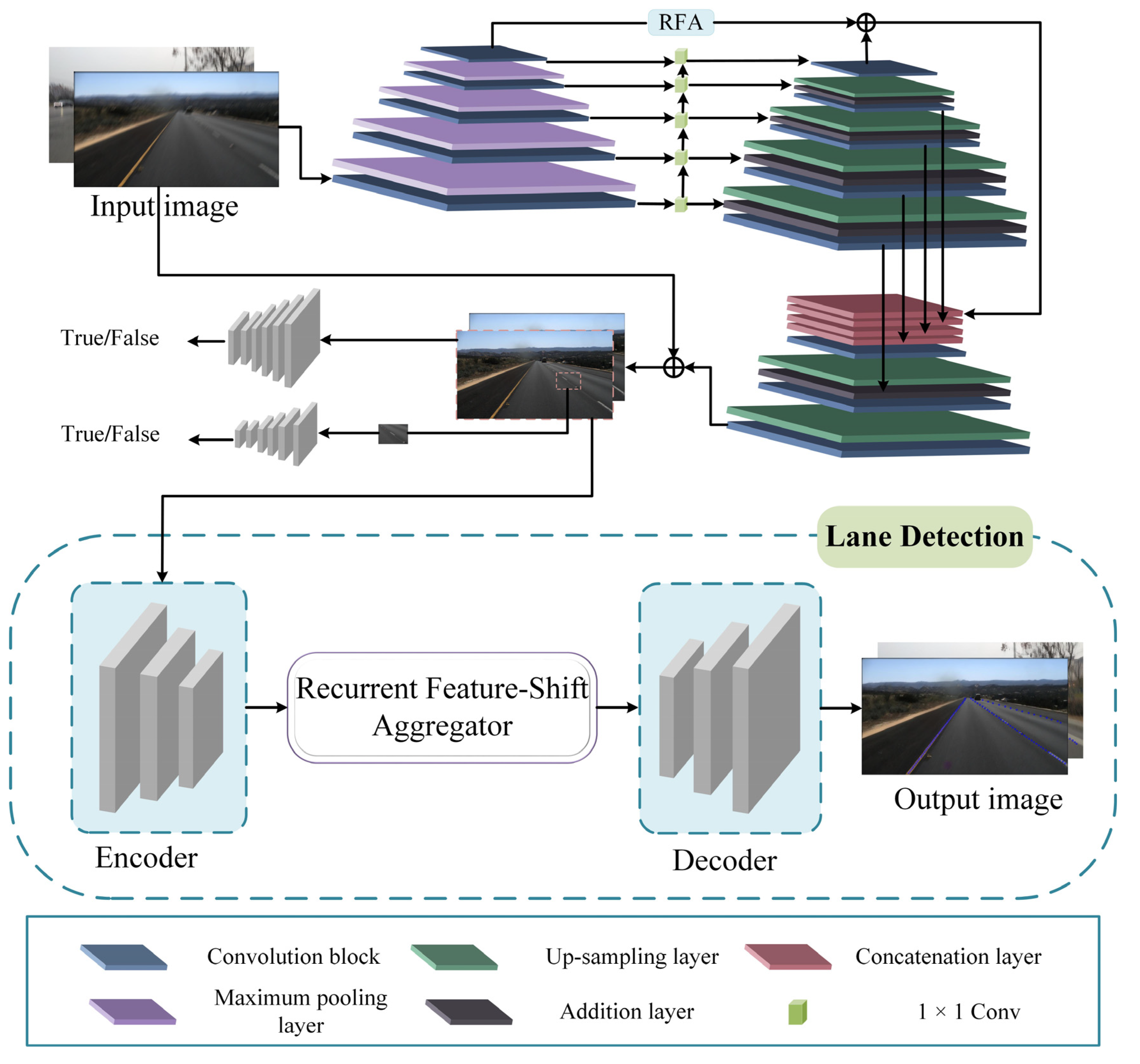

3.3. Lane Detection

The Loss of Lane Line Detection Module

4. Experiments

4.1. Datasets and Evaluation Metrics

4.2. Experimental Results

4.3. Ablation Study

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SOTA | State of the Art |
| GAN | Generative Adversarial Networks |
| CNN | Convolutional Neural Network |
| RNN | Recurrent Neural Network |
| SCNN | Spatial Convolutional Neural Network |
| RFA | Residual Feature Augmentation |
| ResNet | Residual Network |
| ReLU | Rectified Linear Unit |
| IoU | Intersection over Union |

References

1. Badue, C.; Guidolini, R.; Carneiro, R.V.; Azevedo, P.; Cardoso, V.B.; Forechi, A.; Jesus, L.; Berriel, R.; Paixão, T.M.; Mutz, F.; et al. Self-driving cars: A survey. Expert Syst. Appl. 2020, 165, 113816.

2. Vangi, D.; Virga, A.; Gulino, M.S. Adaptive intervention logic for automated driving systems based on injury risk minimization. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2020, 234, 2975–2987.

3. Hillel, A.B.; Lerner, R.; Levi, D.; Raz, G. Recent progress in road and lane detection: A survey. Mach. Vis. Appl. 2014, 25, 727–745.

4. Tang, J.; Li, S.; Liu, P. A review of lane detection methods based on deep learning. Pattern Recognit. 2021, 111, 107623.

5. Li, X.; Li, J.; Hu, X.; Yang, J. Line-CNN: End-to-End Traffic Line Detection with Line Proposal Unit. IEEE Trans. Intell. Transp. Syst. 2019, 21, 248–258.

6. Liang, D.; Guo, Y.C.; Zhang, S.K.; Mu, T.J.; Huang, X. Lane Detection: A Survey with New Results. J. Comput. Sci. Technol. 2020, 35, 493–505.

7. Oğuz, E.; Küçükmanisa, A.; Duvar, R.; Urhan, O. A deep learning based fast lane detection approach. Chaos Solitons Fractals 2022, 155, 111722.

8. Lee, M.; Lee, J.; Lee, D.; Kim, W.; Hwang, S.; Lee, S. Robust lane detection via expanded self attention. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 533–542.

9. Qin, Z.; Wang, H.; Li, X. Ultra fast structure-aware deep lane detection. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 276–291.

10. Long, J.; Yan, Z.; Peng, L.; Li, T. The geometric attention-aware network for lane detection in complex road scenes. PLoS ONE 2021, 16, e0254521.

11. Song, S.; Chen, W.; Liu, Q.; Hu, H.; Zhu, Q. A novel deep learning network for accurate lane detection in low-light environments. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2021, 236, 424–438.

12. Chen, Y.; Xiang, Z.; Du, W.J.T.V.C. Improving lane detection with adaptive homography prediction. Vis. Comput. 2022, 1–15.

13. Savant, K.V.; Meghana, G.; Potnuru, G.; Bhavana, V. Lane Detection for Autonomous Cars Using Neural Networks. In Machine Learning and Autonomous Systems; Springer: Berlin/Heidelberg, Germany, 2022; pp. 193–207.

14. Feng, Z.; Guo, S.; Tan, X.; Xu, K.; Wang, M.; Ma, L. Rethinking Efficient Lane Detection via Curve Modeling. arXiv 2022, arXiv:2203.02431.

15. Hoang, T.M.; Baek, N.R.; Cho, S.W.; Kim, K.W.; Park, K.R. Road Lane Detection Robust to Shadows Based on a Fuzzy System Using a Visible Light Camera Sensor. Sensors 2017, 17, 2475.

16. Gu, J.; Zhang, Q.; Kamata, S. Robust road lane detection using extremal-region enhancement. In Proceedings of the 2015 3rd IAPR Asian Conference on Pattern Recognition (ACPR), Kuala Lumpur, Malaysia, 3–6 November 2015; pp. 519–523.

17. Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J.; et al. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

18. Vajak, D.; Vranješ, M.; Grbić, R.; Vranješ, D. Recent advances in vision-based lane detection solutions for automotive applications. In Proceedings of the 2019 International Symposium ELMAR, Zadar, Croatia, 23–25 September 2019; pp. 45–50.

19. Yenıaydin, Y.; Schmidt, K.W. Lane Detection and Tracking based on Best Pairs of Lane Markings: Method and Evaluation. In Proceedings of the 2020 28th Signal Processing and Communications Applications Conference (SIU), Gaziantep, Turkey, 5–7 October 2020; pp. 1–4.

20. Borkar, A.; Hayes, M.; Smith, M.T. A Novel Lane Detection System With Efficient Ground Truth Generation. IEEE Trans. Intell. Transp. Syst. 2012, 13, 365–374.

21. Zhou, S.; Jiang, Y.; Xi, J.; Gong, J.; Xiong, G.; Chen, H. A novel lane detection based on geometrical model and gabor filter. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium, Las Vegas, NV, USA, 19 October–13 November 2020; pp. 59–64.

22. Wang, Y.; Dahnoun, N.; Achim, A. A novel system for robust lane detection and tracking. Signal Process. 2012, 92, 319–334.

23. Muthalagu, R.; Bolimera, A.; Kalaichelvi, V. Lane detection technique based on perspective transformation and histogram analysis for self-driving cars. Comput. Electr. Eng. 2020, 85, 106653.

24. Wang, Y.S.; Qi, Y.; Man, Y. An improved hough transform method for detecting forward vehicle and lane in road. In Proceedings of the Journal of Physics: Conference Series, Changsha, China, 26–28 October 2021; p. 012082.

25. Gonzalez, J.P.; Ozguner, U. Lane detection using histogram-based segmentation and decision trees. In Proceedings of the IEEE Intelligent Transportation Systems, Dearborn, MI, USA, 1–3 October 2000; Proceedings (Cat. No. 00TH8493). pp. 346–351.

26. Kim, J.; Lee, M. Robust lane detection based on convolutional neural network and random sample consensus. In Proceedings of the International Conference on Neural Information Processing, Montreal, QC, Canada, 8–13 December 2014; pp. 454–461.

27. He, B.; Ai, R.; Yan, Y.; Lang, X. Accurate and robust lane detection based on dual-view convolutional neutral network. In Proceedings of the 2016 IEEE Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 19–22 June 2016; pp. 1041–1046.

28. Zhe, C.; Chen, Z. RBNet: A Deep Neural Network for Unified Road and Road Boundary Detection. In Proceedings of the International Conference on Neural Information Processing, Guangzhou, China, 14–18 November 2017; pp. 677–687.

29. Oliveira, G.L.; Burgard, W.; Brox, T. Efficient deep models for monocular road segmentation. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 4885–4891.

30. Haixia, L.; Xizhou, L. Flexible lane detection using CNNs. In Proceedings of the 2021 International Conference on Computer Technology and Media Convergence Design (CTMCD), Sanya, China, 23–25 April 2021; pp. 235–238.

31. Ma, C.; Mao, L.; Zhang, Y.; Xie, M. Lane detection using heuristic search methods based on color clustering. In Proceedings of the 2010 International Conference on Communications, Circuits and Systems (ICCCAS), Chengdu, China, 28–30 July 2010; pp. 368–372.

32. Sun, T.-Y.; Tsai, S.-J.; Chan, V. HSI color model based lane-marking detection. In Proceedings of the 2006 IEEE Intelligent Transportation Systems Conference, Toronto, ON, Canada, 17–20 September 2006; pp. 1168–1172.

33. Wu, P.C.; Chang, C.Y.; Lin, C.H. Lane-mark extraction for automobiles under complex conditions. Pattern Recognit. 2014, 47, 2756–2767.

34. Yan, X.; Li, Y. A method of lane edge detection based on Canny algorithm. In Proceedings of the 2017 Chinese Automation Congress (CAC), Jinan, China, 20–22 October 2017; pp. 2120–2124.

35. Niu, J.; Lu, J.; Xu, M.; Lv, P.; Zhao, X. Robust Lane Detection Using Two-stage Feature Extraction with Curve Fitting. Pattern Recognit. 2016, 59, 225–233.

36. Vajak, D.; Vranješ, M.; Grbić, R.; Teslić, N. A Rethinking of Real-Time Computer Vision-Based Lane Detection. In Proceedings of the 2021 IEEE 11th International Conference on Consumer Electronics (ICCE-Berlin), Berlin, Germany, 15–18 November 2021; pp. 1–6.

37. Špoljar, D.; Vranješ, M.; Nemet, S.; Pjevalica, N. Lane Detection and Lane Departure Warning Using Front View Camera in Vehicle. In Proceedings of the 2021 International Symposium ELMAR, Zadar, Croatia, 13–15 September 2021; pp. 59–64.

38. Lu, P.; Cui, C.; Xu, S.; Peng, H.; Wang, F. SUPER: A Novel Lane Detection System. IEEE Trans. Intell. Veh. 2021, 6, 583–593.

39. Jiao, X.; Yang, D.; Jiang, K.; Yu, C.; Yan, R. Real-time lane detection and tracking for autonomous vehicle applications. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2019, 233, 2301–2311.

40. Tabelini, L.; Berriel, R.; Paixao, T.M.; Badue, C.; De Souza, A.F.; Oliveira-Santos, T. Polylanenet: Lane estimation via deep polynomial regression. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 6150–6156.

41. Zou, Q.; Jiang, H.; Dai, Q.; Yue, Y.; Chen, L.; Wang, Q. Robust Lane Detection from Continuous Driving Scenes Using Deep Neural Networks. IEEE Trans. Veh. Technol. 2020, 69, 41–54.

42. Medsker, L.R.; Jain, L.J.D. Applications. Recurrent neural networks. Des. Appl. 2001, 5, 64–67.

43. Pan, X.; Shi, J.; Luo, P.; Wang, X.; Tang, X. Spatial as deep: Spatial cnn for traffic scene understanding. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

44. Zheng, T.; Fang, H.; Zhang, Y.; Tang, W.; Yang, Z.; Liu, H.; Cai, D. Resa: Recurrent feature-shift aggregator for lane detection. arXiv 2020, arXiv:2008.13719.

45. Lee, S.; Kim, J.; Shin Yoon, J.; Shin, S.; Bailo, O.; Kim, N.; Lee, T.-H.; Seok Hong, H.; Han, S.-H.; So Kweon, I. Vpgnet: Vanishing point guided network for lane and road marking detection and recognition. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1947–1955.

46. Boracchi, G.; Foi, A. Modeling the Performance of Image Restoration From Motion Blur. IEEE Trans. Image Process. Syst. 2012, 21, 3502–3517.

47. Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. Adv. Neural Inf. Process. Syst. 2014, 3, 2672–2680.

48. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

49. Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

50. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

51. Romera, E.; Alvarez, J.M.; Bergasa, L.M.; Arroyo, R. ERFNet: Efficient Residual Factorized ConvNet for Real-Time Semantic Segmentation. IEEE Trans. Intell. Transp. Syst. 2017, 19, 263–272.

52. TuSimple. Tusimple Lane Detection Benchmark. Available online: https://github.com/TuSimple/tusimple-benchmark (accessed on 11 November 2019).

53. Liu, L.; Chen, X.; Zhu, S.; Tan, P. Condlanenet: A top-to-down lane detection framework based on conditional convolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3773–3782.

| Methods | Input Size | Accuracy (%) | FP | FN | FPS | Runtime (ms) |
|---|---|---|---|---|---|---|
| SCNN [43] | 1280 × 720 | 91.04 | 0.1342 | 0.1600 | 8 | 133.5 |
| UFNet [9] | 1280 × 720 | 92.64 | 0.2625 | 0.1244 | 313 | 3.2 |
| CondLaneNet [53] | 1280 × 720 | 92.41 | 0.1287 | 0.1135 | 220 | 4.5 |
| RESA [44] | 1280 × 720 | 93.71 | 0.0682 | 0.0867 | 35 | 28.5 |
| Lane-GAN | 1280 × 720 | 96.56 | 0.0464 | 0.0254 | 7 | 138.9 |

| Methods | Input Size | Accuracy (%) | FP | FN | FPS | Runtime (ms) |
|---|---|---|---|---|---|---|
| SCNN [43] | 1280 × 720 | 95.56 | 0.0509 | 0.0547 | 8 | 133.5 |
| UFNet [9] | 1280 × 720 | 94.84 | 0.0445 | 0.0544 | 313 | 3.2 |
| RESA [44] | 1280 × 720 | 96.26 | 0.0350 | 0.0368 | 35 | 28.5 |
| Lane-GAN | 1280 × 720 | 96.56 | 0.0464 | 0.0254 | 7 | 138.9 |
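
An input size of 1280 × 720 matches the TuSimple benchmark [52]. The accuracy, FP and FN columns in the two tables above are therefore presumably computed under TuSimple-style conventions. The tables do not say so, so treat this as an assumption.

Under that assumption, the Python sketch below shows how such lane-level metrics are typically derived:

- A point is correct if its horizontal error is below a pixel threshold.
- A ground-truth lane counts as detected if enough of its points are correct.
- FP is the share of predicted lanes left over after matching.
- FN is the share of ground-truth lanes left undetected.

This is a simplified illustration. It is neither the official TuSimple script nor the authors' evaluation code. The thresholds, the `Lane` representation and every function name are hypothetical. The official script additionally rescales the threshold by lane angle and treats images with more than four lanes specially.

```python
from typing import List, Optional, Sequence, Tuple

# Hypothetical, simplified TuSimple-style thresholds (assumptions; the official
# script also rescales the pixel threshold by each lane's angle).
PIXEL_THRESH = 20.0  # max |x_pred - x_gt| in pixels for a point to count as correct
MATCH_THRESH = 0.85  # min point accuracy for a ground-truth lane to count as detected

# A lane is its x-coordinate at each fixed sample row; None means "no lane in this row".
Lane = Sequence[Optional[float]]


def line_accuracy(pred: Lane, gt: Lane) -> float:
    """Fraction of the ground-truth lane's points that the prediction hits."""
    rows = [(p, g) for p, g in zip(pred, gt) if g is not None]
    if not rows:
        return 0.0
    hits = sum(1 for p, g in rows if p is not None and abs(p - g) < PIXEL_THRESH)
    return hits / len(rows)


def image_metrics(preds: List[Lane], gts: List[Lane]) -> Tuple[float, float, float]:
    """Return (accuracy, FP rate, FN rate) for one image."""
    # Best-matching prediction for every ground-truth lane.
    best = [max((line_accuracy(p, g) for p in preds), default=0.0) for g in gts]
    matched = sum(1 for a in best if a >= MATCH_THRESH)
    accuracy = sum(best) / len(gts) if gts else 0.0
    # Predictions beyond the matched count are treated as false positives.
    fp = max(len(preds) - matched, 0) / len(preds) if preds else 0.0
    # Ground-truth lanes without a good enough match are false negatives.
    fn = (len(gts) - matched) / len(gts) if gts else 0.0
    return accuracy, fp, fn


def dataset_metrics(samples: List[Tuple[List[Lane], List[Lane]]]) -> Tuple[float, float, float]:
    """Average per-image (accuracy, FP, FN) over (predictions, ground truth) pairs."""
    if not samples:
        return 0.0, 0.0, 0.0
    per_image = [image_metrics(preds, gts) for preds, gts in samples]
    n = len(per_image)
    acc = sum(m[0] for m in per_image) / n
    fp = sum(m[1] for m in per_image) / n
    fn = sum(m[2] for m in per_image) / n
    return acc, fp, fn


def fps_from_runtime(runtime_ms: float) -> float:
    """Frames per second implied by a per-frame runtime in milliseconds."""
    return 1000.0 / runtime_ms


# Toy example: two ground-truth lanes, three predicted lanes (one spurious).
gts = [[100, 110, 120, None], [300, 310, 320, 330]]
preds = [[105, 115, 125, None], [300, 350, 320, 330], [500, 500, 500, 500]]
print(image_metrics(preds, gts))  # prints (0.875, 0.6666666666666666, 0.5)
```

The FPS column is essentially the reciprocal of the per-frame runtime. For RESA, 1000 / 28.5 ms ≈ 35 FPS. For Lane-GAN, 1000 / 138.9 ms ≈ 7 FPS. Both match the tables.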

| Methods | Input Size | F1 (%) | TP | FP | Precision | Recall |
|---|---|---|---|---|---|---|
| SCNN [43] | 1640 × 590 | 70.5 | 73236 | 29594 | 0.7122 | 0.6982 |
| RESA [44] | 1640 × 590 | 70.1 | 72020 | 28712 | 0.7150 | 0.6867 |
| Lane-GAN | 1640 × 590 | 72.9 | 75052 | 25946 | 0.7431 | 0.7156 |
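
The F1, precision and recall columns in the 1640 × 590 table (presumably CULane, whose images have that resolution) follow the standard definitions:

- precision = TP / (TP + FP)
- recall = TP / (TP + FN)
- F1 = the harmonic mean of precision and recall

Under the usual CULane protocol, which is assumed here, a predicted lane is a TP when its IoU with a ground-truth lane is at least 0.5, with both lanes drawn 30 pixels wide.

The short Python sketch below acts as a quick consistency check on the table. It recomputes precision from the TP and FP counts, and F1 from that precision and the reported recall. FN counts are not reported, so recall is taken as given. The function names are illustrative only.

```python
def precision(tp: int, fp: int) -> float:
    """Share of predicted lanes that match a ground-truth lane."""
    return tp / (tp + fp)


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


# (TP, FP, reported recall) copied from the table above; recall = TP / (TP + FN)
# cannot be recomputed here because FN is not reported.
rows = {
    "SCNN": (73236, 29594, 0.6982),
    "RESA": (72020, 28712, 0.6867),
    "Lane-GAN": (75052, 25946, 0.7156),
}

for name, (tp, fp, recall) in rows.items():
    p = precision(tp, fp)
    print(f"{name}: precision={p:.4f}  F1={100 * f1_score(p, recall):.1f}")
```

For all three rows, the recomputed values agree with the table to the reported precision. For example, Lane-GAN gives 75052 / 100998 ≈ 0.7431 and an F1 of about 72.9.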

| RESA | Improved GAN | Accuracy (%) |
|---|---|---|
| √ | | 96.26 |
| √ | √ | 96.56 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, Y.; Wang, J.; Li, Y.; Li, C.; Zhang, W. Lane-GAN: A Robust Lane Detection Network for Driver Assistance System in High Speed and Complex Road Conditions. Micromachines 2022, 13, 716. https://doi.org/10.3390/mi13050716
