Single-Pixel Near-Infrared 3D Image Reconstruction in Outdoor Conditions

Abstract

1. Introduction

- This work presents an experimentally validated theoretical model of the proposed Single-Pixel Imaging (SPI) system operating in foggy conditions. The model accounts for Mie scattering (in environments rich in 3 μm diameter particles) and calculates the irradiance reaching the photodetector and the amount of light reflected from objects whose surfaces have different reflection coefficients.

- The SPI model is validated experimentally through measurement of the extinction coefficient [18], which is used to calculate the maximum imaging distance and error.

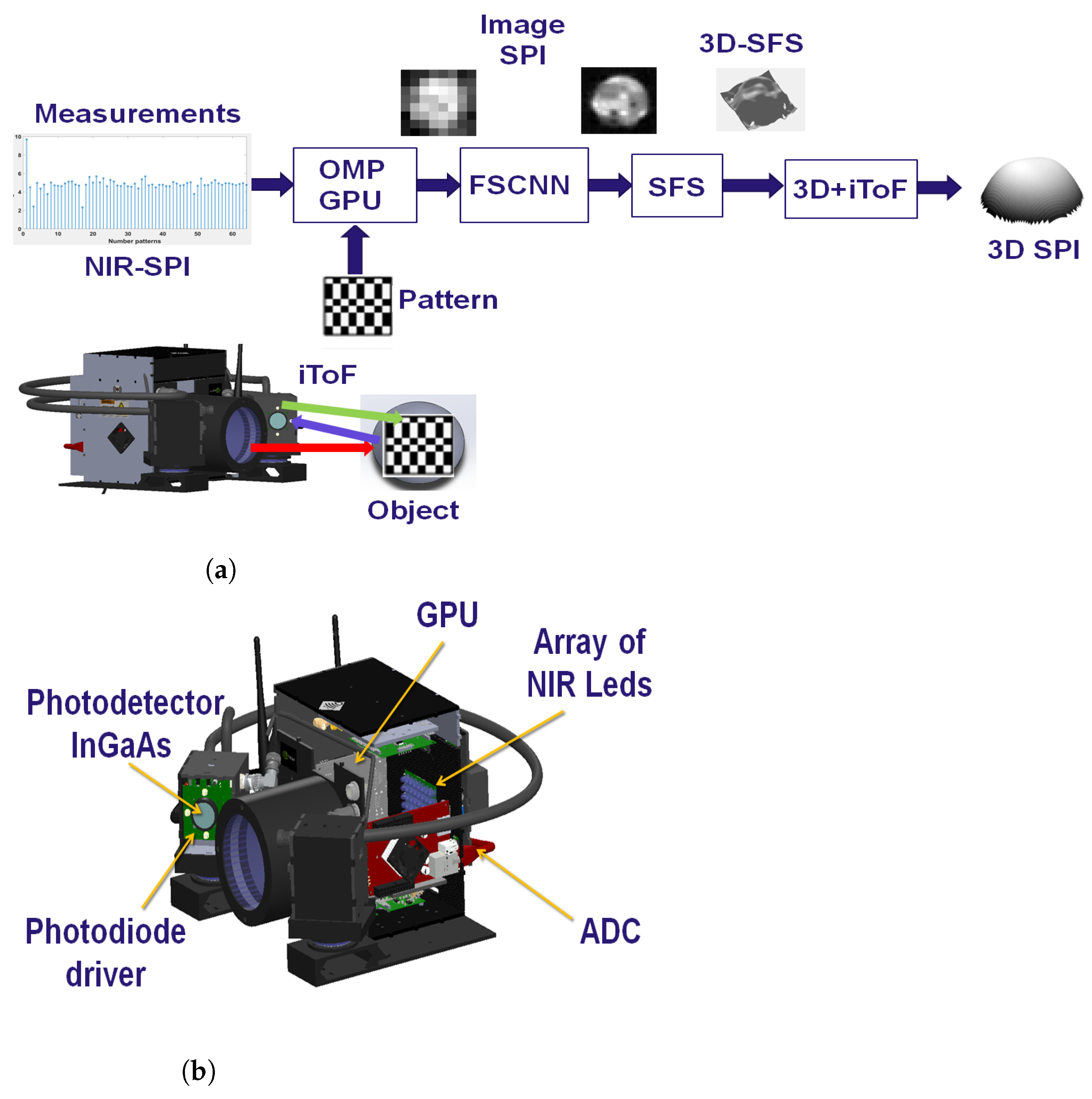

- A system based on a combination of NIR-SPI and iToF methods is developed for imaging in foggy environments. We demonstrate an improvement in image recovery using different space-filling methods.

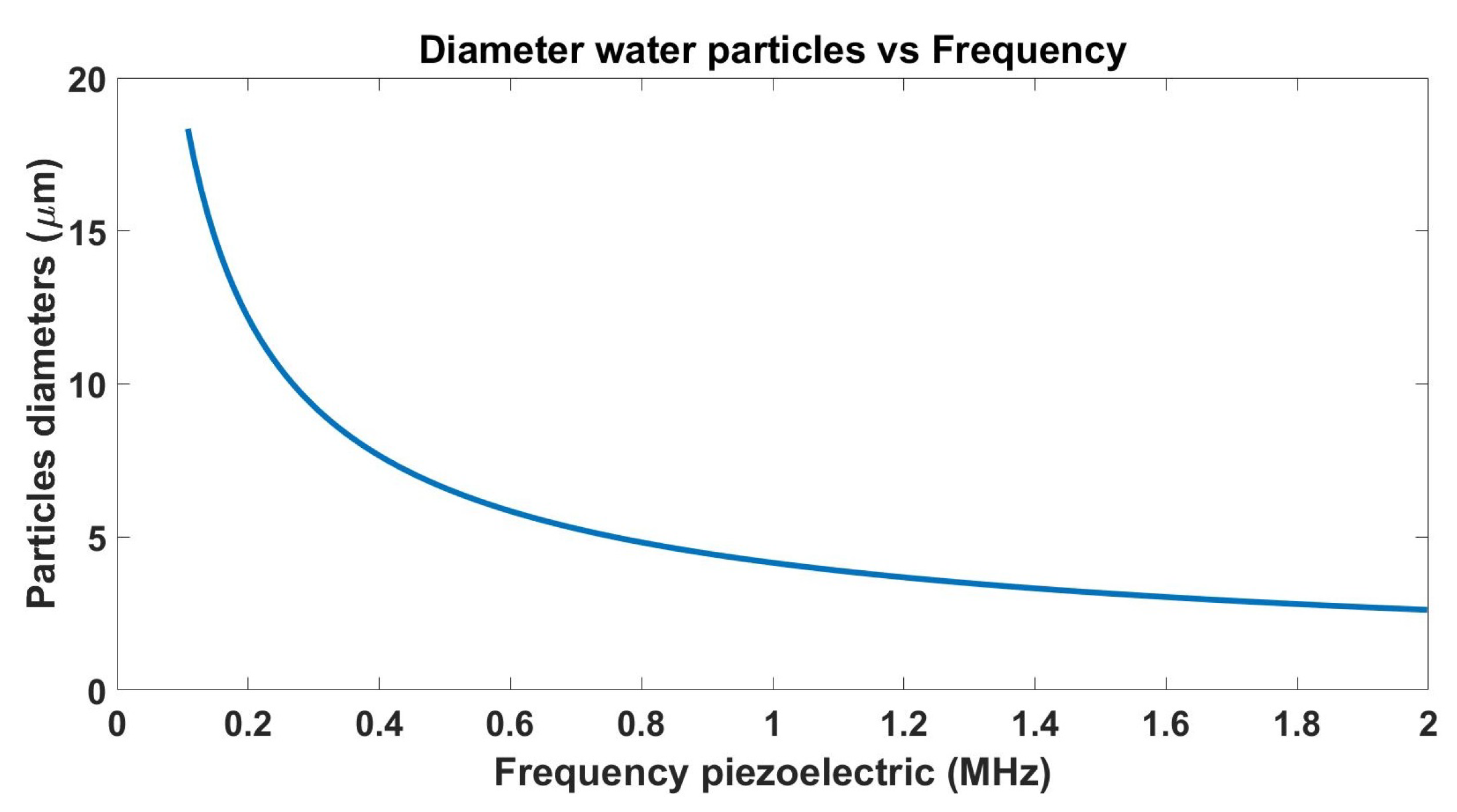

- We fabricated a test chamber that generates water droplets with a 3 μm average diameter and provides different background illumination levels.

- We experimentally demonstrated the feasibility of our 3D NIR-SPI system for 3D image reconstruction. To evaluate the image reconstruction quality, the Structural Similarity Index Measure (SSIM), the Peak Signal-to-Noise Ratio (PSNR), Root Mean Square Error (RMSE), and skewness were implemented.
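Two of the quality metrics listed above, RMSE and PSNR, can be illustrated with a minimal sketch. It assumes the textbook definitions and an 8-bit peak value of 255; the paper's own Equation (16) is not reproduced in this text, so the function names, the `peak` parameter, and the sample pixel values are illustrative assumptions rather than the authors' implementation. SSIM is omitted because it needs windowed statistics.

```python
import math


def rmse(reference, estimate):
    """Root mean square error between two equally sized 2D images (lists of rows)."""
    squared = [
        (r - e) ** 2
        for ref_row, est_row in zip(reference, estimate)
        for r, e in zip(ref_row, est_row)
    ]
    return math.sqrt(sum(squared) / len(squared))


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    err = rmse(reference, estimate)
    if err == 0:
        return math.inf
    return 20.0 * math.log10(peak / err)


# Hypothetical 2x2 images, used only to exercise the functions.
reference = [[0, 64], [128, 255]]
estimate = [[0, 60], [130, 250]]
print(f"RMSE = {rmse(reference, estimate):.3f}, PSNR = {psnr(reference, estimate):.2f} dB")
```

A lower RMSE and a higher PSNR both indicate a reconstruction closer to the reference image.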

2. Single-Pixel Image Reconstruction

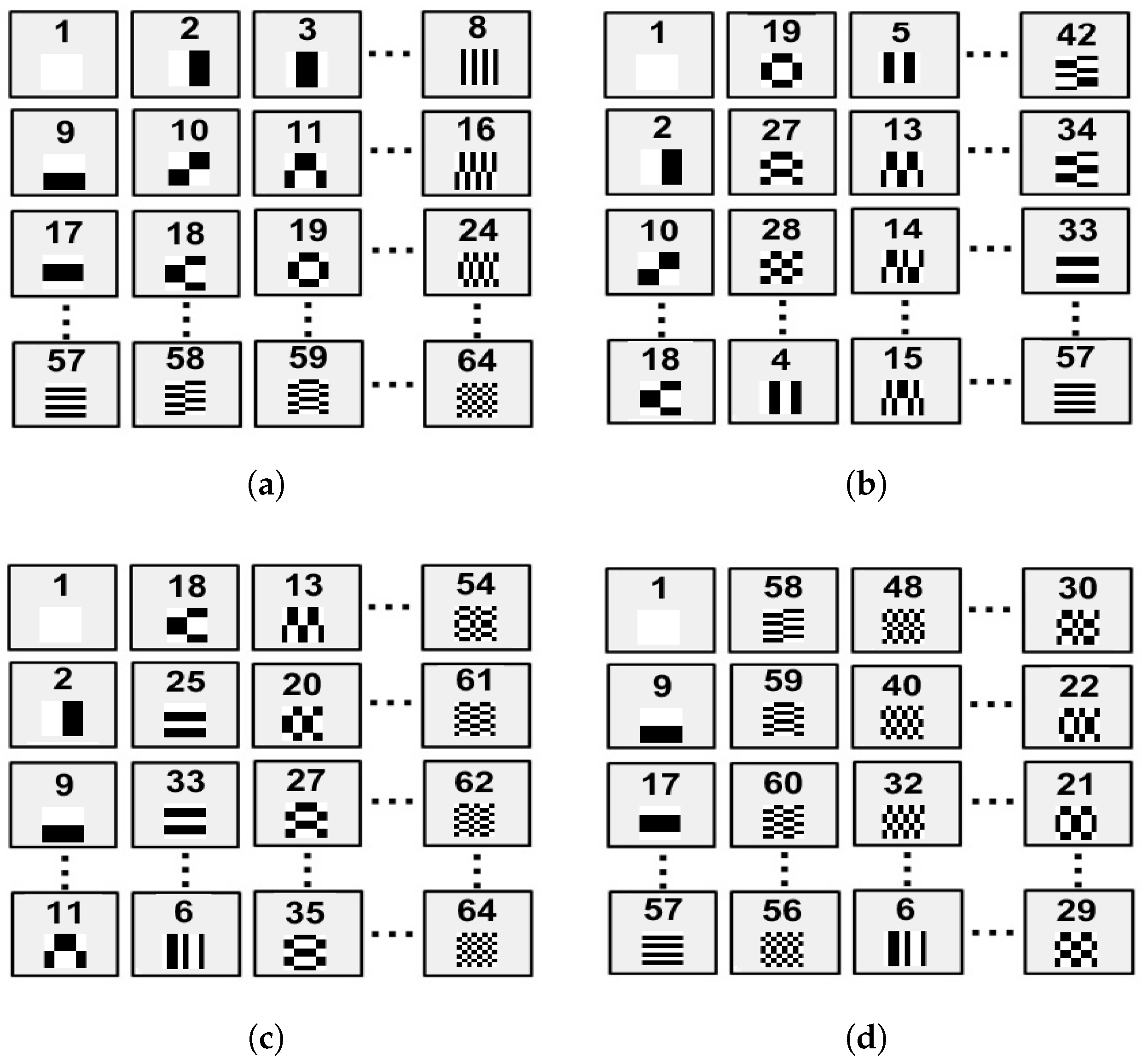

2.1. Generation of the Hadamard Active Illumination Pattern Sequence

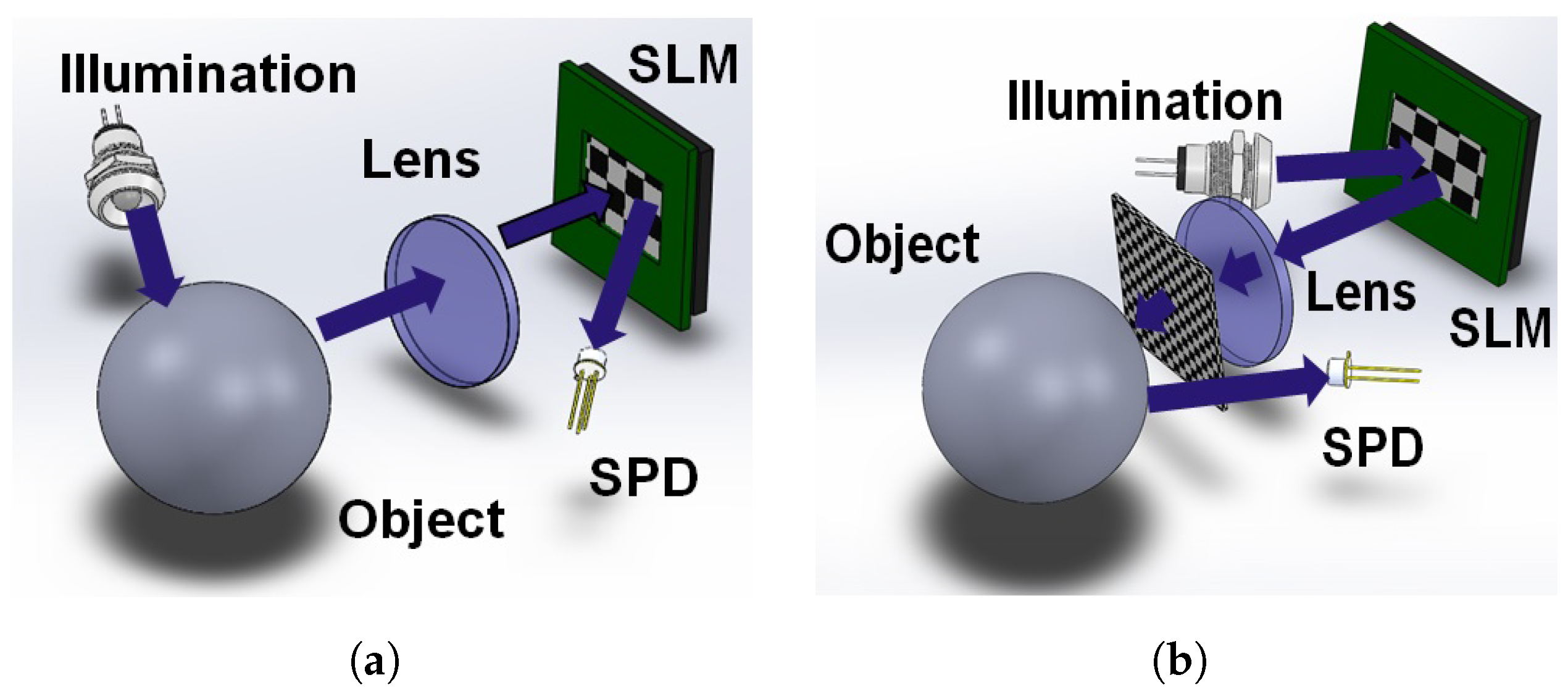

3. NIR-SPI System Test Architecture

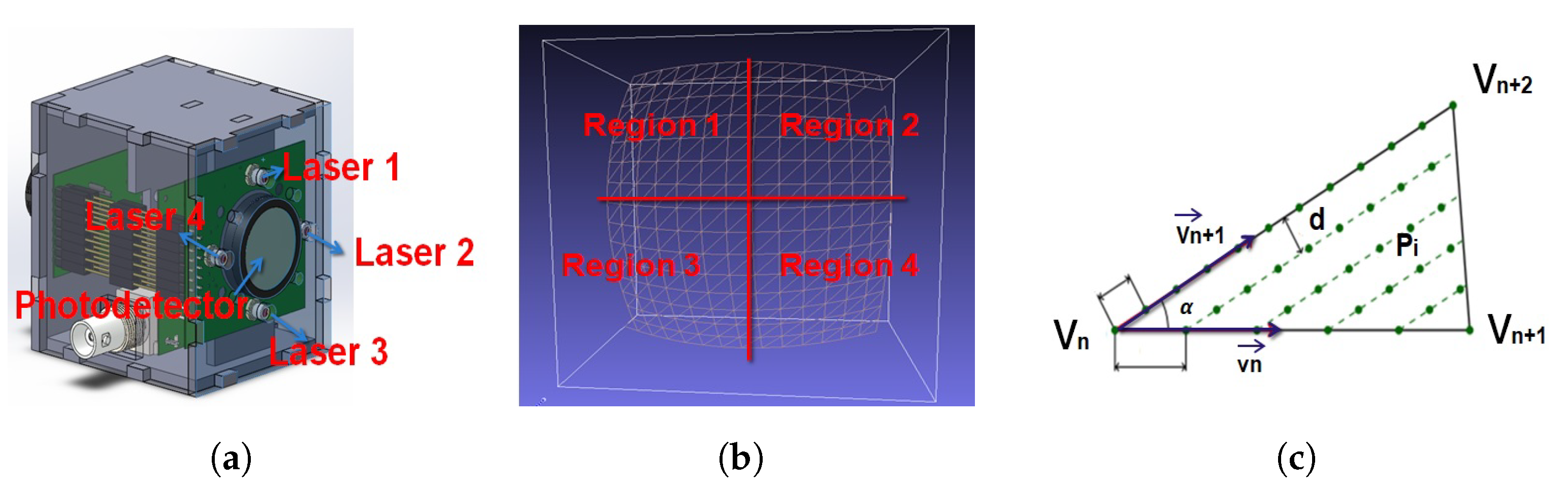

iTOF System Architecture

4. Fog Chamber Fabrication and Characterization

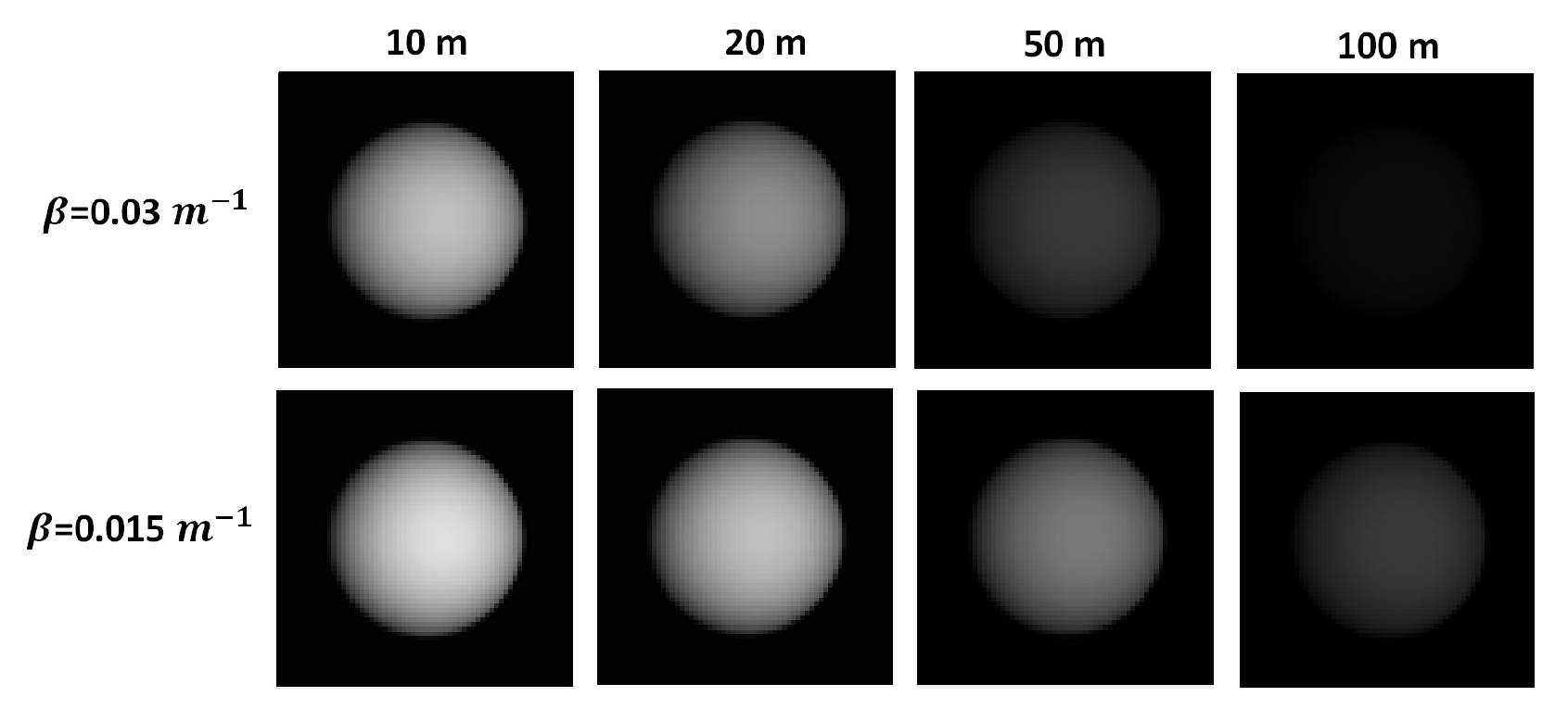

5. Modeling the Visibility and Contrast

Modeling the NIR-SPI System in Presence of Fog

6. 3D Using Unified Shape-From-Shading Model (USFSM) and iToF

7. Experimental Results

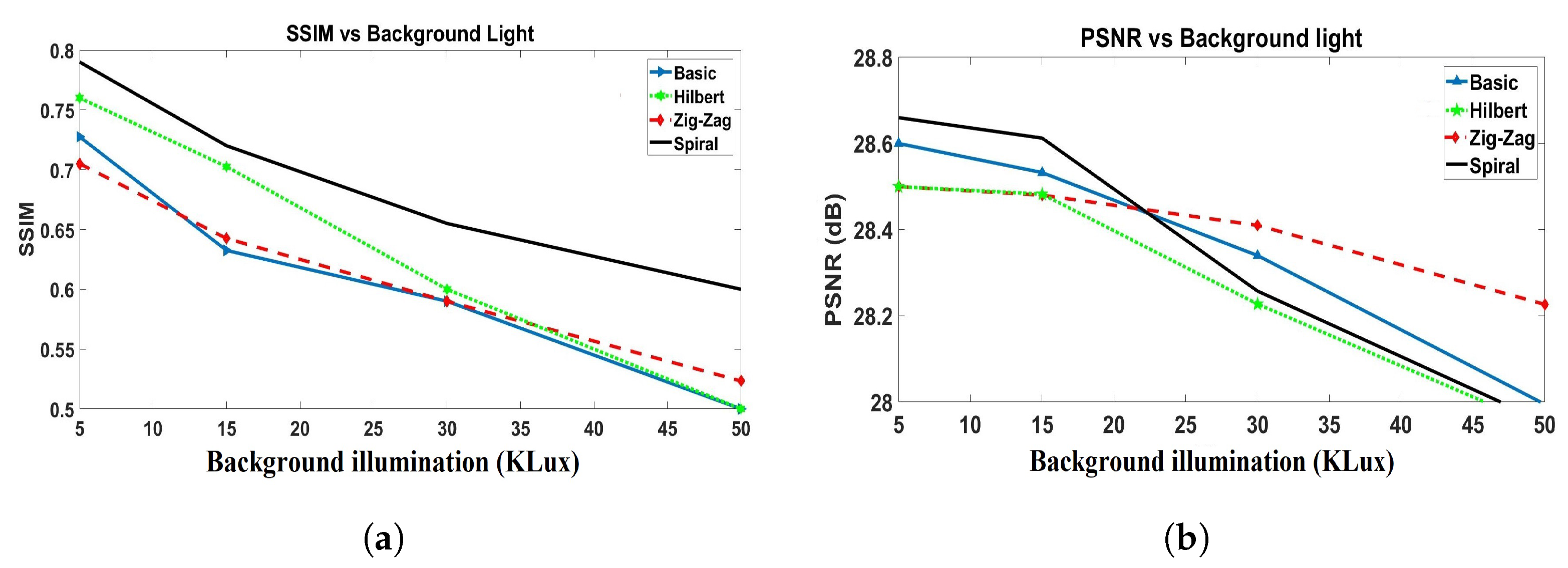

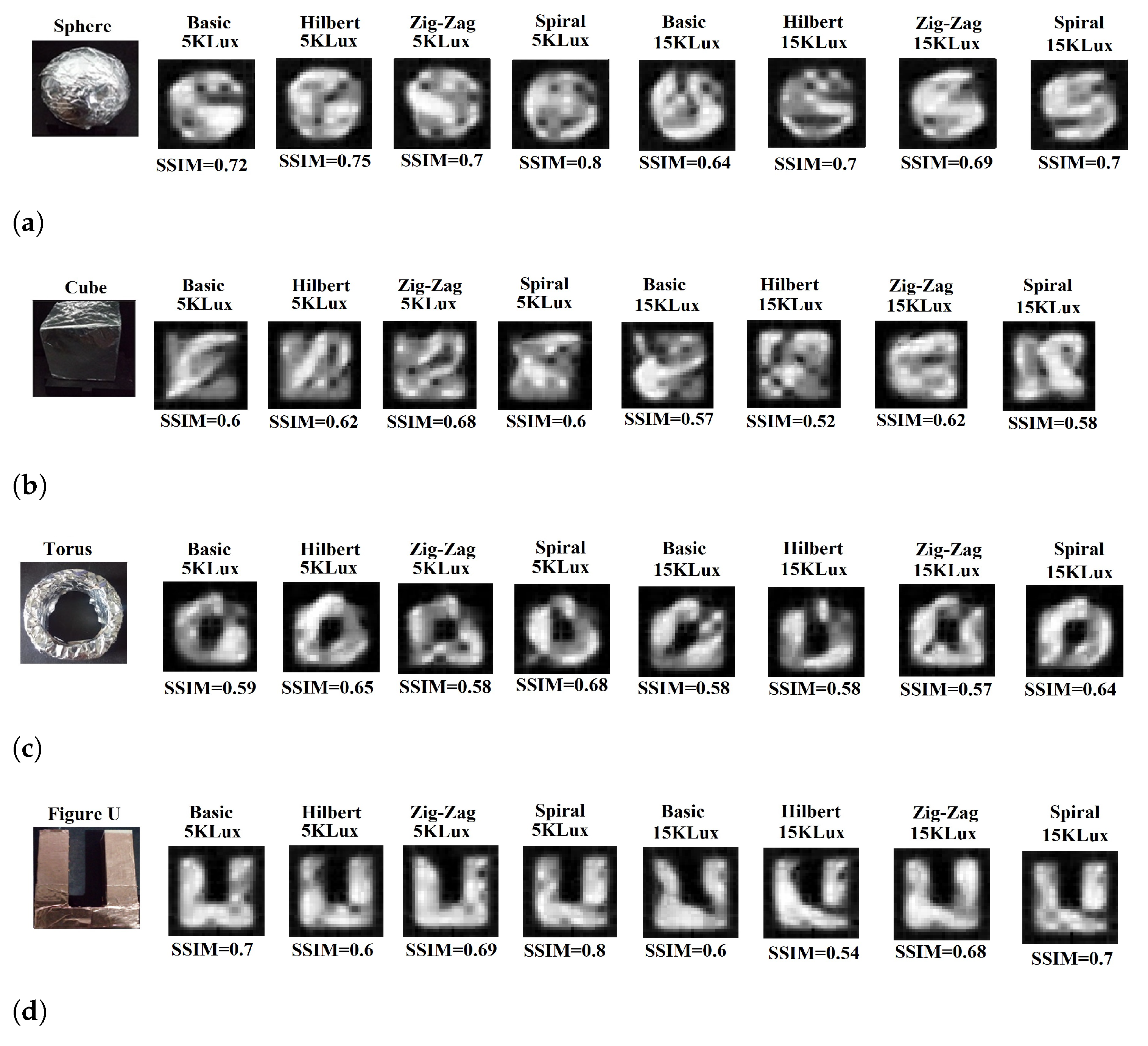

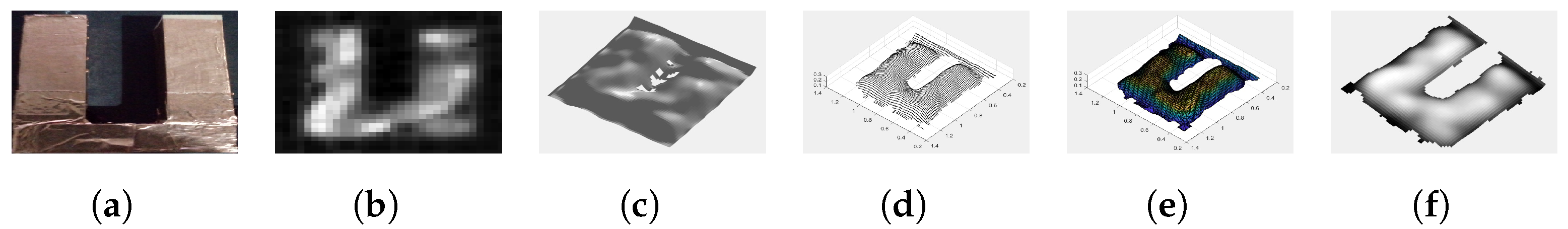

- 2D reconstruction: Two-dimensional (2D) images were reconstructed with the NIR-SPI camera using respectively the , , -, and scanning methods in combination with the GPU-OMP algorithm [27] and the Fast Super-Resolution Convolutional Neural Network (FSRCNN) method with four upscaling factors [47]. For the reconstruction of 2D single-pixel images, we used 100% of the projected illumination patterns. Using the described test bench, we generated the following outdoor conditions and background light scenarios: (1) very cloudy conditions (5 klux), (2) half-cloudy conditions (15 klux), (3) midday (30 klux), and (4) clear-sky sun glare (40–50 klux). To evaluate the quality of the reconstructed 2D images, we used the Structural Similarity Index Measure (SSIM) [48] and the Peak Signal-to-Noise Ratio (PSNR) [49] as a function of the background illumination (see Figure 9).

- 3D reconstruction: We carried out a 3D image reconstruction from a 2D NIR-SPI image (see Figure 10) and iTOF information using Algorithms A2 and A3 under two background illumination conditions: very cloudy (5 klux) and half-cloudy (15 klux). The 3D images are shown in Figure 11. In the test, we calculated the RMSE, defined by Equation (16), and the skewness, which describes the symmetry of the 3D shapes: a value near 0 indicates the best mesh, and a value close to 1 indicates a completely degenerate mesh [50] (see Figure 12). In addition, , as shown in Equation (17), indicates the percentage improvement of the 3D image reconstruction in terms of RMSE (see Table 3). We observe an improvement in the obtained 3D mesh compared to the first 3D reconstructions carried out using the SFS method (see Figure 12), mostly related to surface smoothing, correction of imperfections, and removal of outlying points. The space-filling method yields the best performance, with an improvement factor of 29.68%, followed by the - method, which reaches an improvement of 28.68% (see Table 3). When the background illumination reaches 15 klux, on the other hand, the method reached a 34.14% improvement, while the method reached 28.24% (see Table 3). With the SFS method alone, the skewness of the mesh increases in a fog scenario from 0.6–0.7 (cell quality fair, see Table 4) to 0.8–1 (cell quality poor, see Table 5); that is, the cell quality degrades (see Figure 12a–c). To improve these values, we used the power crust algorithm integrated with iTOF. For the case without fog, the skewness obtained ranged from 0.02 to 0.2 (cell quality excellent, see Table 4), which is within the recommended range [50]. In the fog condition, we seek a mesh with a skewness <0.5, which is considered good mesh quality (see Table 5). The scanning method delivered the lowest skewness level of all the space-filling methods tested, which indicates its sensitivity to noise.
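The skewness scale described above (0 for the best cell, 1 for a completely degenerate one) can be illustrated with a short sketch. The text does not state which skewness definition was used, so the code below assumes the common equiangular skewness of a triangular cell; the function names are hypothetical and this is not presented as the authors' computation.

```python
import math


def triangle_angles(a, b, c):
    """Interior angles in degrees of the triangle with 3D vertices a, b, c.

    Assumes the three vertices are distinct (no zero-length edge).
    """
    def angle_at(p, q, r):
        u = [qi - pi for pi, qi in zip(p, q)]
        v = [ri - pi for pi, ri in zip(p, r)]
        dot = sum(ui * vi for ui, vi in zip(u, v))
        norm = math.sqrt(sum(ui * ui for ui in u)) * math.sqrt(sum(vi * vi for vi in v))
        cosine = max(-1.0, min(1.0, dot / norm))  # clamp against rounding error
        return math.degrees(math.acos(cosine))

    return angle_at(a, b, c), angle_at(b, c, a), angle_at(c, a, b)


def equiangular_skewness(a, b, c):
    """Equiangular skewness of a triangle: 0 for equilateral, approaching 1 for a sliver."""
    angles = triangle_angles(a, b, c)
    ideal = 60.0  # interior angle of an equilateral triangle
    return max((max(angles) - ideal) / (180.0 - ideal), (ideal - min(angles)) / ideal)


equilateral = ((0, 0, 0), (1, 0, 0), (0.5, math.sqrt(3) / 2, 0))
right_isosceles = ((0, 0, 0), (1, 0, 0), (0, 1, 0))
print(equiangular_skewness(*equilateral), equiangular_skewness(*right_isosceles))
```

Under this assumed definition, a mesh-level value would typically be reported as the maximum or the mean over all cells.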

- Evaluation of the image reconstruction time: An important parameter of 3D reconstruction in vision systems is the processing time required for the task. We therefore looked for the method with the lowest reconstruction time (see Table 6), considering the trade-off between overall image quality and the time required for its reconstruction.

| Scanning Method | 5 klux | 15 klux |
|---|---|---|
| | 27.58% | 9.67% |
| | 27.52% | 28.24% |
| | 28.68% | 19.2% |
| | 29.68% | 32.14% |

| Scanning Method | | |
|---|---|---|
| | 0.65 | 0.09 |
| | 0.52 | 0.02 |
| | 0.66 | 0.2 |
| | 0.69 | 0.12 |

| Scanning Method | | |
|---|---|---|
| | 0.82 | 0.2 |
| | 0.73 | 0.11 |
| | 1.06 | 0.34 |
| | 0.81 | 0.17 |

| Scanning Method | | | |
|---|---|---|---|
| | 19.83 | 147.69 | 167.53 |
| | 19.18 | 127.36 | 146.54 |
| | 21.69 | 130.89 | 152.58 |
| | 24.95 | 133.53 | 158.49 |
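The timing table above can be checked with a short sketch. Its column headers and scanning-method names are not recoverable from this text, so the code assumes that the third value in each row is the total of the first two and labels rows by position only; the function names are illustrative.

```python
# Rows copied from the timing table above. Method names and units are not
# recoverable from the text, so each row is identified by its index only.
rows = [
    (19.83, 147.69, 167.53),
    (19.18, 127.36, 146.54),
    (21.69, 130.89, 152.58),
    (24.95, 133.53, 158.49),
]


def check_totals(rows, tol=0.011):
    """True for each row whose third value equals the sum of the first two,
    within a tolerance that allows for rounding in the last printed digit."""
    return [abs(first + second - total) <= tol for first, second, total in rows]


def fastest_row(rows):
    """Index of the row with the lowest value in the assumed total column."""
    return min(range(len(rows)), key=lambda i: rows[i][2])


print("totals consistent:", check_totals(rows))
print("row with the lowest total time:", fastest_row(rows))
```

The row sums agree with the third column to within rounding, which supports reading it as a total, and the second row has the lowest total.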

8. Conclusions

9. Patents

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADC | Analog to Digital Converters |
| BLRR | Background Light Rejection Ratio |
| | Equivalent integration capacitance |
| CS-SRCNN | Compressive Sensing-Super-Resolution Convolutional Neural Network |
| CW-iTOF | Continuous-wave-Time-of-Flight |
| DDS | Direct digital synthesis |
| DMD | Digital micromirror device |
| Fmod-eq | Frequency modulation equivalent |
| FSRCNN | Fast Super-Resolution Convolutional Neural Network |
| GPU | Graphics processing unit |
| iTOF | Indirect Time-of-Flight |
| InGaAs | Indium Gallium Arsenide |
| NIR | Near infrared imaging |
| OMP | Orthogonal matching pursuit |
| PSNR | Peak Signal-to-Noise Ratio |
| PDE | Partial differential equation |
| RGB | Red–Green–Blue |
| RMSE | Root mean square error |
| SSIM | Structural Similarity Index Measure |
| SFS | Shape-from-Shading |
| SLM | Spatial light modulator |
| SNR | Signal-to-noise ratio |
| SPD | Pixel Detector System |
| SPI | Single-Pixel Imaging |
| UFV | Unmanned flight vehicles |
| USFSM | Unified Shape-From-Shading model |
| VIS | Visible wavelengths |

Appendix A

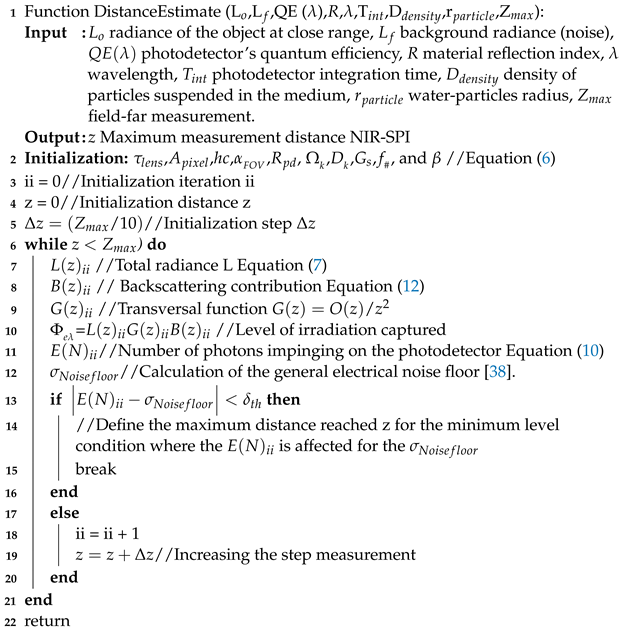

Appendix A.1. Pseudocode for Estimating the Maximum Capture Distance of NIR-SPI Vision System

Algorithm A1: Estimate maximum distance NIR-SPI

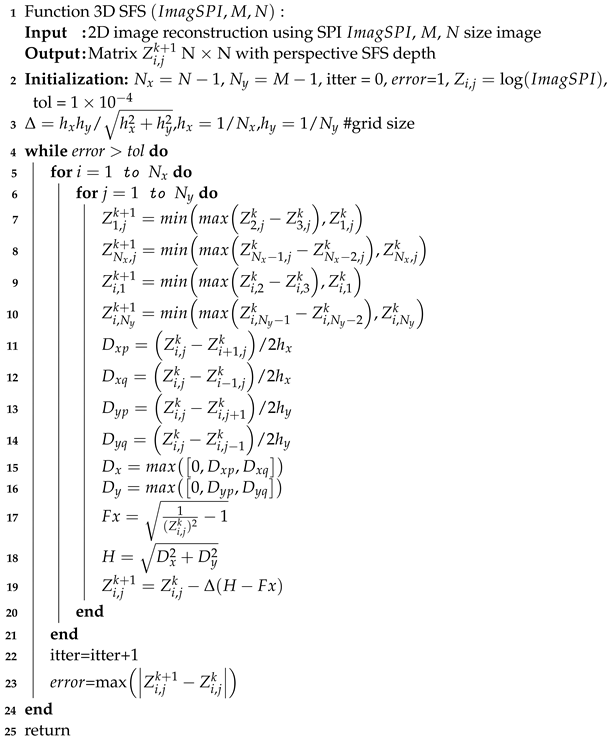

Appendix A.2. 3D Reconstruction of USFSM Using Fast Sweeping Algorithm

Algorithm A2: Fast sweeping algorithm for H–J based on the Lax–Friedrichs method [53].

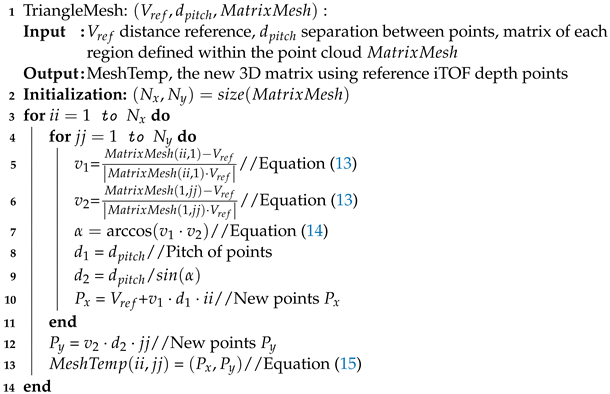

Appendix A.3. iTOF Algorithm

Algorithm A3: Finding the points of contact of the iTOF ray to generate mesh [44].

    Function Generation-to-Mesh:
        Input: Vectors with information distance, separation between points generated using SFS Algorithm A2, and matrix with points clouds
        Output: generation of the matrix with new mesh
        Initialization:  // size matrix points clouds
          // Defining region 1
          // Defining region 2
          // Defining region 3
          // Defining region 4
         =
         =
         =
         =
         = TriangleMesh(, , )  // We apply Algorithm A4
         = TriangleMesh(, , )  // We apply Algorithm A4
         = TriangleMesh(, , )  // We apply Algorithm A4
         = TriangleMesh(, , )  // We apply Algorithm A4
         = [MatrixTemp MatrixTemp2 MatrixTemp3 MatrixTemp4]
        return

Algorithm A4: Semi-even distribution of points on a single triangle [44].
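The body of Algorithm A4 is not reproduced in this text. As a rough illustration of what distributing points evenly over a single triangle can look like, the sketch below places points on a regular barycentric grid. This is an assumed stand-in technique, not the authors' algorithm, and the function name and the parameter `n` are hypothetical.

```python
def sample_triangle(a, b, c, n):
    """Return points on triangle (a, b, c) laid out on a regular barycentric grid.

    n is the number of subdivisions per edge (n >= 1). The grid contains
    (n + 1) * (n + 2) // 2 points, including the three vertices.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    points = []
    for i in range(n + 1):
        for j in range(n + 1 - i):
            k = n - i - j
            u, v, w = i / n, j / n, k / n  # barycentric weights, u + v + w == 1
            points.append(
                tuple(u * ax + v * bx + w * cx for ax, bx, cx in zip(a, b, c))
            )
    return points


# Hypothetical triangle in the z = 0 plane with two subdivisions per edge.
for point in sample_triangle((0, 0, 0), (2, 0, 0), (0, 2, 0), 2):
    print(point)
```

With `n = 2` the grid holds six points: the three vertices and the three edge midpoints.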

References

1. Moon, H.; Martinez-Carranza, J.; Cieslewski, T.; Faessler, M.; Falanga, D.; Simovic, A.; Scaramuzza, D.; Li, S.; Ozo, M.; De Wagter, C.; et al. Challenges and implemented technologies used in autonomous drone racing. Intell. Serv. Robot. 2019, 12, 137–148.
2. Valenti, F.; Giaquinto, D.; Musto, L.; Zinelli, A.; Bertozzi, M.; Broggi, A. Enabling Computer Vision-Based Autonomous Navigation for Unmanned Aerial Vehicles in Cluttered GPS-Denied Environments. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 3886–3891.
3. Fujimura, Y.; Iiyama, M.; Hashimoto, A.; Minoh, M. Photometric Stereo in Participating Media Using an Analytical Solution for Shape-Dependent Forward Scatter. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 708–719.
4. Jiang, Y.; Sun, C.; Zhao, Y.; Yang, L. Fog Density Estimation and Image Defogging Based on Surrogate Modeling for Optical Depth. IEEE Trans. Image Process. 2017, 26, 3397–3409.
5. Narasimhan, S.; Nayar, S. Removing weather effects from monochrome images. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2001, Kauai, HI, USA, 8–14 December 2001; Volume 2, p. II.
6. Chen, Z.; Ou, B. Visibility Detection Algorithm of Single Fog Image Based on the Ratio of Wavelength Residual Energy. Math. Probl. Eng. 2021, 2021, 5531706.
7. Liu, W.; Hou, X.; Duan, J.; Qiu, G. End-to-End Single Image Fog Removal Using Enhanced Cycle Consistent Adversarial Networks. IEEE Trans. Image Process. 2020, 29, 7819–7833.
8. Palvanov, A.; Giyenko, A.; Cho, Y. Development of Visibility Expectation System Based on Machine Learning. In Proceedings of the 17th International Conference, CISIM 2018, Olomouc, Czech Republic, 27–29 September 2018; pp. 140–153.
9. Katyal, S.; Kumar, S.; Sakhuja, R.; Gupta, S. Object Detection in Foggy Conditions by Fusion of Saliency Map and YOLO. In Proceedings of the 2018 12th International Conference on Sensing Technology (ICST), Limerick, Ireland, 4–6 December 2018; pp. 154–159.
10. Dannheim, C.; Icking, C.; Mader, M.; Sallis, P. Weather Detection in Vehicles by Means of Camera and LIDAR Systems. In Proceedings of the 2014 Sixth International Conference on Computational Intelligence, Communication Systems and Networks, Bhopal, India, 27–29 May 2014; pp. 186–191.
11. Guan, J.; Madani, S.; Jog, S.; Gupta, S.; Hassanieh, H. Through Fog High-Resolution Imaging Using Millimeter Wave Radar. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11461–11470.
12. Kijima, D.; Kushida, T.; Kitajima, H.; Tanaka, K.; Kubo, H.; Funatomi, T.; Mukaigawa, Y. Time-of-flight imaging in fog using multiple time-gated exposures. Opt. Express 2021, 29, 6453–6467.
13. Kang, X.; Fei, Z.; Duan, P.; Li, S. Fog Model-Based Hyperspectral Image Defogging. IEEE Trans. Geosci. Remote. Sens. 2021, 60, 1–12.
14. Thornton, M.P.; Judd, K.M.; Richards, A.A.; Redman, B.J. Multispectral short-range imaging through artificial fog. In Proceedings of the Infrared Imaging Systems: Design, Analysis, Modeling, and Testing XXX; Holst, G.C., Krapels, K.A., Eds.; International Society for Optics and Photonics, SPIE: Bellingham, WA, USA, 2019; Volume 11001, pp. 340–350.
15. Bashkansky, M.; Park, S.D.; Reintjes, J. Single pixel structured imaging through fog. Appl. Opt. 2021, 60, 4793–4797.
16. Soltanlou, K.; Latifi, H. Three-dimensional imaging through scattering media using a single pixel detector. Appl. Opt. 2019, 58, 7716–7726.
17. Zeng, X.; Chu, J.; Cao, W.; Kang, W.; Zhang, R. Visible–IR transmission enhancement through fog using circularly polarized light. Appl. Opt. 2018, 57, 6817–6822.
18. Tai, H.; Zhuang, Z.; Jiang, L.; Sun, D. Visibility Measurement in an Atmospheric Environment Simulation Chamber. Curr. Opt. Photon. 2017, 1, 186–195.
19. Gibson, G.M.; Johnson, S.D.; Padgett, M.J. Single-pixel imaging 12 years on: A review. Opt. Express 2020, 28, 28190–28208.
20. Osorio Quero, C.A.; Durini, D.; Rangel-Magdaleno, J.; Martinez-Carranza, J. Single-pixel imaging: An overview of different methods to be used for 3D space reconstruction in harsh environments. Rev. Sci. Instrum. 2021, 92, 111501.
21. Zhang, Z.; Wang, X.; Zheng, G.; Zhong, J. Hadamard single-pixel imaging versus Fourier single-pixel imaging. Opt. Express 2017, 25, 19619–19639.
22. Ujang, U.; Anton, F.; Azri, S.; Rahman, A.; Mioc, D. 3D Hilbert Space Filling Curves in 3D City Modeling for Faster Spatial Queries. Int. J. 3D Inf. Model. (IJ3DIM) 2014, 3, 1–18.
23. Ma, H.; Sang, A.; Zhou, C.; An, X.; Song, L. A zigzag scanning ordering of four-dimensional Walsh basis for single-pixel imaging. Opt. Commun. 2019, 443, 69–75.
24. Cabreira, T.M.; Franco, C.D.; Ferreira, P.R.; Buttazzo, G.C. Energy-Aware Spiral Coverage Path Planning for UAV Photogrammetric Applications. IEEE Robot. Autom. Lett. 2018, 3, 3662–3668.
25. Zhang, R.; Tsai, P.S.; Cryer, J.; Shah, M. Shape-from-shading: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 1999, 21, 690–706.
26. Wang, G.; Zhang, X.; Cheng, J. A Unified Shape-From-Shading Approach for 3D Surface Reconstruction Using Fast Eikonal Solvers. Int. J. Opt. 2020, 2020, 6156058.
27. Quero, C.O.; Durini, D.; Ramos-Garcia, R.; Rangel-Magdaleno, J.; Martinez-Carranza, J. Hardware parallel architecture proposed to accelerate the orthogonal matching pursuit compressive sensing reconstruction. In Proceedings of the Computational Imaging V; Tian, L., Petruccelli, J.C., Preza, C., Eds.; International Society for Optics and Photonics, SPIE: Bellingham, WA, USA, 2020; Volume 11396, pp. 56–63.
28. Laser Safety Facts. Available online: https://www.lasersafetyfacts.com/laserclasses.html (accessed on 28 April 2021).
29. Perenzoni, M.; Stoppa, D. Figures of Merit for Indirect Time-of-Flight 3D Cameras: Definition and Experimental Evaluation. Remote Sens. 2011, 3, 2461–2472.
30. Rajan, R.; Pandit, A. Correlations to predict droplet size in ultrasonic atomisation. Ultrasonics 2001, 39, 235–255.
31. Oakley, J.; Satherley, B. Improving image quality in poor visibility conditions using a physical model for contrast degradation. IEEE Trans. Image Process. 1998, 7, 167–179.
32. Matzler, C. MATLAB functions for Mie scattering and absorption. IAP Res. Rep. 2002, 8. Available online: http://www.atmo.arizona.edu/students/courselinks/spring09/atmo656b/maetzler_mie_v2.pdf (accessed on 28 April 2021).
33. Lee, Z.; Shang, S. Visibility: How Applicable is the Century-Old Koschmieder Model? J. Atmos. Sci. 2016, 73, 4573–4581.
34. Middleton, W.E.K. Vision through the Atmosphere. In Geophysik II / Geophysics II; Bartels, J., Ed.; Springer: Berlin/Heidelberg, Germany, 1957; pp. 254–287.
35. Hautière, N.; Tarel, J.P.; Didier, A.; Dumont, E. Blind Contrast Enhancement Assessment by Gradient Ratioing at Visible Edges. Image Anal. Stereol. 2008, 27, 87–95.
36. International Lighting Vocabulary = Vocabulaire International de L’éclairage. 1987. p. 365. Available online: https://cie.co.at/publications/international-lighting-vocabulary (accessed on 28 April 2021).
37. Süss, A. High Performance CMOS Range Imaging: Device Technology and Systems Considerations; Devices, Circuits, and Systems; CRC Press: Boca Raton, FL, USA, 2016.
38. Osorio Quero, C.A.; Romero, D.D.; Ramos-Garcia, R.; de Jesus Rangel-Magdaleno, J.; Martinez-Carranza, J. Towards a 3D Vision System based on Single-Pixel imaging and indirect Time-of-Flight for drone applications. In Proceedings of the 2020 17th International Conference on Electrical Engineering, Computing Science and Automatic Control (CCE), Mexico City, Mexico, 11–13 November 2020; pp. 1–6.
39. Tozza, S.; Falcone, M. Analysis and Approximation of Some Shape-from-Shading Models for Non-Lambertian Surfaces. J. Math. Imaging Vis. 2016, 55, 153–178.
40. Peyré, G. Numerical Mesh Processing. Course Notes. Available online: https://hal.archives-ouvertes.fr/hal-00365931 (accessed on 28 April 2021).
41. Amenta, N.; Choi, S.; Kolluri, R.K. The Power Crust. In Proceedings of the Sixth ACM Symposium on Solid Modeling and Applications; Association for Computing Machinery: New York, NY, USA, 2001; pp. 249–266.
42. Möller, T.; Trumbore, B. Fast, Minimum Storage Ray-Triangle Intersection. J. Graph. Tools 1997, 2, 21–28.
43. Kaufman, A.; Cohen, D.; Yagel, R. Volume graphics. Computer 1993, 26, 51–64.
44. Kot, T.; Bobovský, Z.; Heczko, D.; Vysocký, A.; Virgala, I.; Prada, E. Using Virtual Scanning to Find Optimal Configuration of a 3D Scanner Turntable for Scanning of Mechanical Parts. Sensors 2021, 21, 5343.
45. Huang, J.; Yagel, R.; Filippov, V.; Kurzion, Y. An accurate method for voxelizing polygon meshes. In Proceedings of the IEEE Symposium on Volume Visualization (Cat. No.989EX300), Research Triangle Park, NC, USA, 19–20 October 1998; pp. 119–126.
46. Ravi, S.; Kurian, C. White light source towards spectrum tunable lighting—A review. In Proceedings of the 2014 International Conference on Advances in Energy Conversion Technologies (ICAECT), Manipal, India, 23–25 January 2014; pp. 203–208.
47. Dong, C.; Loy, C.C.; Tang, X. Accelerating the Super-Resolution Convolutional Neural Network. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2016.
48. Zhu, Q.; Mai, J.; Shao, L. A Fast Single Image Haze Removal Algorithm Using Color Attenuation Prior. IEEE Trans. Image Process. 2015, 24, 3522–3533.
49. Chen, T.; Liu, M.; Gao, T.; Cheng, P.; Mei, S.; Li, Y. A Fusion-Based Defogging Algorithm. Remote Sens. 2022, 14, 425.
50. Budd, C.J.; McRae, A.T.; Cotter, C.J. The scaling and skewness of optimally transported meshes on the sphere. J. Comput. Phys. 2018, 375, 540–564.
51. Rojas-Perez, L.O.; Martinez-Carranza, J. Metric monocular SLAM and colour segmentation for multiple obstacle avoidance in autonomous flight. In Proceedings of the 2017 Workshop on Research, Education and Development of Unmanned Aerial Systems (RED-UAS), Linköping, Sweden, 3–5 October 2017; pp. 234–239.
52. Dionisio-Ortega, S.; Rojas-Perez, L.O.; Martinez-Carranza, J.; Cruz-Vega, I. A deep learning approach towards autonomous flight in forest environments. In Proceedings of the 2018 International Conference on Electronics, Communications and Computers (CONIELECOMP), Cholula, Mexico, 21–23 February 2018; pp. 139–144.
53. Kao, C.Y.; Osher, S.; Qian, J. Lax–Friedrichs sweeping scheme for static Hamilton–Jacobi equations. J. Comput. Phys. 2004, 196, 367–391.

| Parameters | Value |
|---|---|
| | 0.8 @ 1550 nm |
| C | 19 fF |
| A | 235 |
| FF | 0.38 |
| T | 65 ns |
| F | 4.8 MHz |
| T | 150 s |
| | 1 cm |
| | 10° |
| NED | 1 |
| PR | 11.84 |
| SNR | 20–30 dB |
| BLRR | −50 dB |

| Reflection Coefficient | 0.2 | 0.5 | 0.8 |
|---|---|---|---|
| Theoretically calculated maximum measurement distance in absence of fog (cm) | 22.4 | 35 | 44 |
| Theoretically calculated maximum measurement distance in presence of 3 μm diameter fog particles (cm) | 18 | 27 | 30.8 |
| Experimentally obtained maximum measurement distance in absence of fog using the LSM method (cm) | 22 | 34.2 | 43.4 |
| Experimentally obtained maximum measurement distance in presence of 3 μm diameter fog particles using the LSM method (cm) | 17.6 | 26.21 | 30.18 |
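The agreement between the theoretical and experimental distances in the table above can be quantified with a small worked calculation. The sketch below computes the relative deviation of each measured distance from its theoretical counterpart; the dictionary layout and function names are illustrative assumptions, while the numbers are copied from the table.

```python
# Maximum measurement distances in cm, copied from the table above.
# Inner keys are the surface reflection coefficients.
theory = {
    "no_fog": {0.2: 22.4, 0.5: 35.0, 0.8: 44.0},
    "fog": {0.2: 18.0, 0.5: 27.0, 0.8: 30.8},
}
experiment = {
    "no_fog": {0.2: 22.0, 0.5: 34.2, 0.8: 43.4},
    "fog": {0.2: 17.6, 0.5: 26.21, 0.8: 30.18},
}


def relative_deviation(theoretical, measured):
    """Deviation of the measured value from the theoretical one, in percent."""
    return 100.0 * (theoretical - measured) / theoretical


def deviation_table(theory, experiment):
    """Relative deviation for every condition and reflection coefficient."""
    return {
        condition: {
            rho: relative_deviation(theory[condition][rho], experiment[condition][rho])
            for rho in theory[condition]
        }
        for condition in theory
    }


for condition, row in deviation_table(theory, experiment).items():
    for rho, deviation in row.items():
        print(f"{condition:7s} reflection coefficient {rho}: {deviation:.2f}%")
```

Every deviation computed this way stays below 3%, both with and without fog.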

| Scanning Method | Skewness | Improvement (%) | |
|---|---|---|---|
| | 0.2 | 19 | 167.53 |
| | 0.1 | 28 | 146.54 |
| | 0.34 | 24 | 152.58 |
| | 0.17 | 31 | 158.49 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Osorio Quero, C.; Durini, D.; Rangel-Magdaleno, J.; Martinez-Carranza, J.; Ramos-Garcia, R. Single-Pixel Near-Infrared 3D Image Reconstruction in Outdoor Conditions. Micromachines 2022, 13, 795. https://doi.org/10.3390/mi13050795
