Abstract

Electrical impedance tomography (EIT) is a non-invasive, radiation-free imaging technique with considerable promise for clinical monitoring. However, because EIT image reconstruction is a non-linear, ill-conditioned, and ill-posed problem, the quality of the reconstructed images still needs improvement. To increase image reconstruction accuracy, a grey wolf optimized radial basis function neural network (GWO-RBFNN) is proposed in this paper. The grey wolf algorithm is used to optimize the weights of the radial basis function neural network: a mapping is established between the weights and the grey wolf positions, and the optimal grey wolf position is calculated to obtain the optimal weights, thus improving the resolution of EIT images. COMSOL and MATLAB were used to numerically simulate an EIT system with 16 electrodes, producing 1700 simulation samples. The standard Landweber, RBFNN, and GWO-RBFNN approaches were applied to the sets separately. The image correlation coefficient (ICC) obtained on the test set after training with GWO-RBFNN is 0.9551. After adding 30, 40, and 50 dB of Gaussian white noise to the test set, the ICCs attained with GWO-RBFNN are 0.8966, 0.9197, and 0.9319, respectively. The findings reveal that the proposed GWO-RBFNN approach outperforms the existing methods in image reconstruction.

1. Introduction

Electrical impedance tomography (EIT) is a novel functional imaging approach that uses electrical measurements at the field boundary to reconstruct an image of the electrical conductivity distribution within an object [1]. Because it is radiation-free, fast, non-invasive, and structurally simple, the EIT technique has been a focus of study and is extensively employed in industrial processes [2] and medical monitoring [3,4].

According to Maxwell’s equations, the electrical conductivity distribution of the internal material is determined by the potential distribution measured at the electrodes and the exciting current density. However, the image reconstruction of EIT is a non-linear, ill-conditioned, and ill-posed problem [5].

The traditional image reconstruction methods can be divided into dynamic algorithms (the equipotential back-projection method [6], the sensitivity matrix method, and the conjugate gradient method [7]) and static algorithms (the Gauss-Newton method [8], the layer stripping method [9], the static equipotential back-projection algorithm, etc.). Dynamic algorithms image faster and are often used for online reconstruction; they place lower demands on the precision of the data acquisition hardware, but they also produce images of lower quality. Static methods improve the quality of the reconstructed images, but the repeated search for a minimum is computationally expensive, which slows down imaging and increases noise sensitivity. Traditional image reconstruction techniques commonly use a linear equation to model the relationship between the boundary voltages and the conductivity distribution inside the object field [10]. This linearization discards a lot of crucial information, resulting in substantial distortion of the reconstructed image.

Neural networks are distributed information storage structures with massive parallelism, non-linearity, high self-adaptability, and strong self-learning capacity, which allows them to avoid the sensitivity matrix computation and linearized analysis of conventional image reconstruction [11]. In recent years, many researchers have worked on solving the EIT image reconstruction problem with various neural networks [12]. The back-propagation (BP) neural network, the convolutional neural network [13], and the radial basis function neural network are three typical effective and reliable models.

A radial basis function neural network (RBFNN) is a high-performing feed-forward neural network. It has a remarkable global approximation capacity for non-linear models and can approximate any non-linear function with arbitrary precision, which sets it apart from other neural networks. Additionally, because of its straightforward structure, rapid learning convergence, and absence of sensitivity matrix computation, the RBFNN satisfies the requirements of EIT image reconstruction [14]. The weights in an RBFNN have a substantial influence on the network model’s overall performance and directly determine how well the outcomes can be predicted. These weights are conventionally calculated with the least squares method (LSM). When there is a lot of noise in the training data, the LSM leads the neural network to fit an inaccurate surface, which reduces the network’s ability to generalize. Furthermore, as the number of input samples increases, the disparity between the elements of the generated weight matrix also increases. This leads to an unstable solution and ultimately to low-quality EIT reconstruction images.

A grey wolf optimized radial basis function neural network (GWO-RBFNN) is proposed in this paper to improve the network’s accuracy for EIT image reconstruction in the presence of noise. The method adjusts the network centers using the K-means algorithm and determines the network base width using the KNN (K-nearest neighbors) algorithm. It then uses the grey wolf optimization algorithm instead of the LSM to obtain more stable network weights, with the goal of improving the network model’s prediction accuracy. A comparison with the reconstruction outcomes of other algorithms shows that the proposed GWO-RBFNN approach improves the reconstruction quality of EIT images and boosts the artifact removal ability.

2. Theory

2.1. Mathematical Model

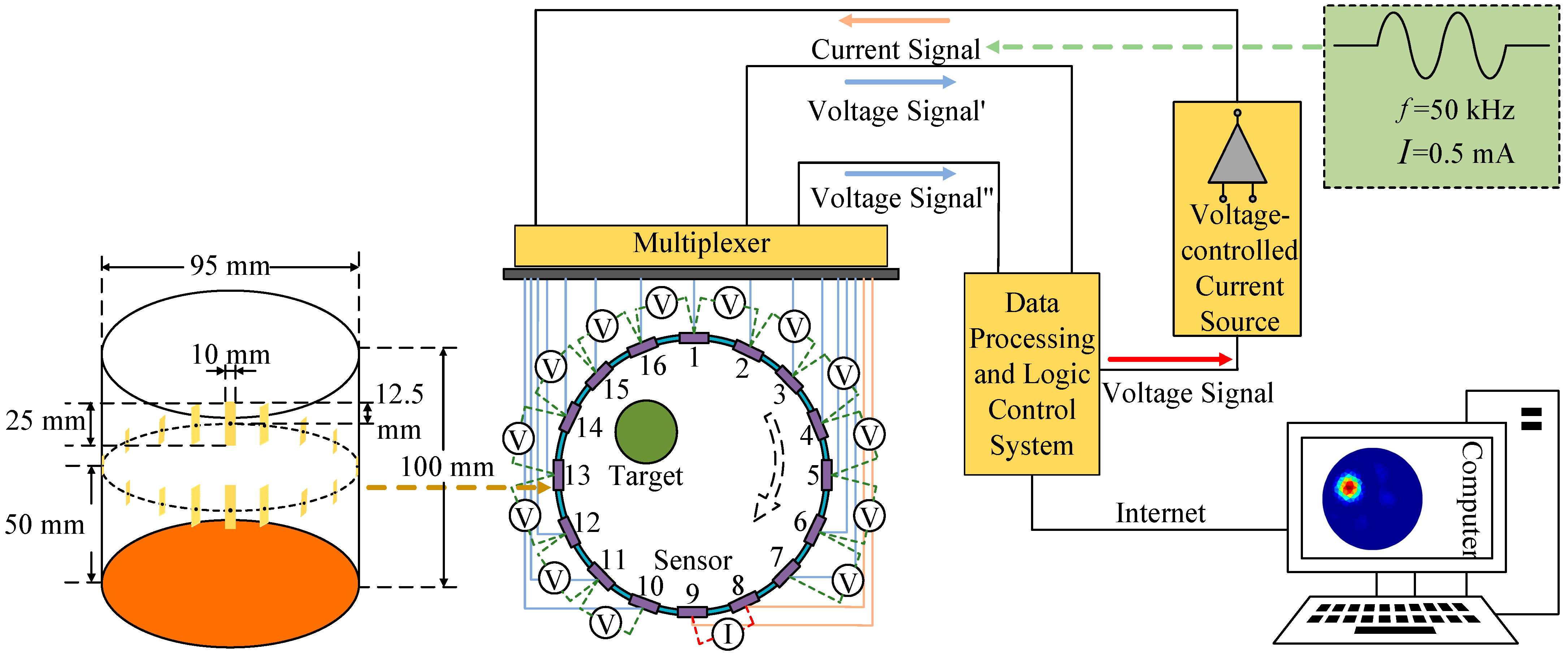

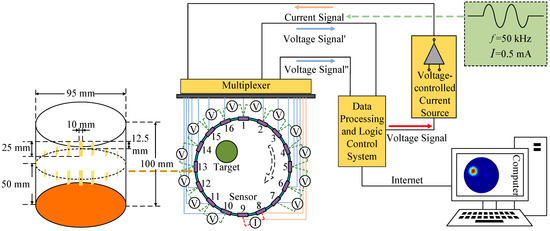

The diagram of the EIT system is shown in Figure 1. There are 16 electrodes evenly distributed on the sensor. The electrode width in this work is 10 mm. The length of the electrode is 25 mm and the height of the electrode sensor is 100 mm. The geometric center of the electrode is located 50 mm from the electrode sensor.

Figure 1.

Diagram of EIT system.

When a safe AC excitation current signal is applied to the electrode sensor at the field boundary, the multiplexer measures the voltage signals of the remaining electrode pairs at the field boundary and sends the resulting analog signals to the data acquisition section. An image reconstruction algorithm then uses the collected voltage data to reconstruct the conductivity distribution inside the field [15].

The EIT measurement field satisfies Maxwell’s equations and electromagnetic field theory, and can be mathematically modeled as follows:

$$\nabla \cdot \left( \sigma \nabla \varphi \right) = 0 \quad \text{in } \Omega$$

where $\Omega$ denotes the field, $\sigma$ indicates the internal conductivity distribution of the field, and $\varphi$ represents the distribution function of the field potential.

The EIT field boundary conditions are set to:

$$\varphi = f \quad \text{on } \partial\Omega$$

$$\sigma \frac{\partial \varphi}{\partial n} = j \quad \text{on } \partial\Omega$$

where $\partial\Omega$ denotes the field boundary, $j$ denotes the current density of the injected current on the boundary, $n$ denotes the outward unit normal vector of the field, and $f$ denotes the potential distribution at the field boundary.

The boundary excitation current $j$ is chosen as a fixed value, and a constant current source is used as the excitation current in the experiment. Because the frequency and amplitude of the excitation current are constant, image reconstruction becomes an investigation of the relationship between the conductivity distribution $\sigma$ and the potential distribution $\varphi$ in the field.

2.2. Building an RBFNN for EIT

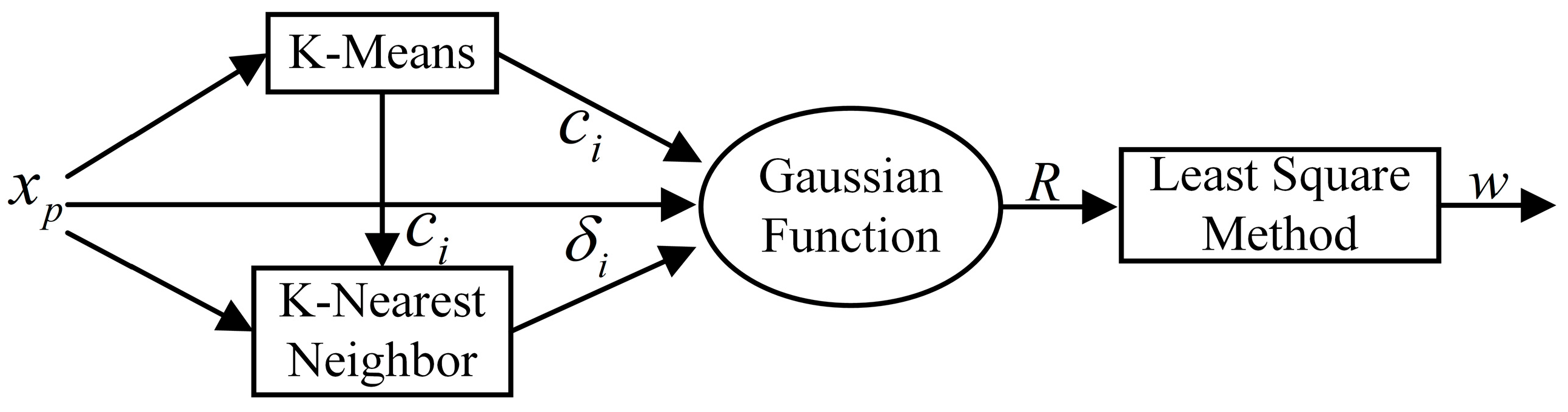

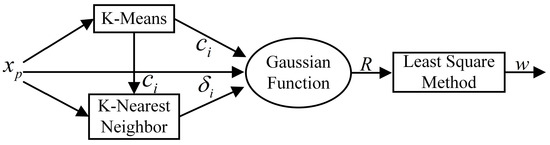

The fundamental structure of an RBFNN, a kind of feed-forward neural network, is shown in Figure 2. It is made up of three layers: the input layer, the hidden layer, and the output layer. The signal is sent from the input layer to the hidden layer, which uses the radial basis function as its activation function to complete the non-linear transformation from the input space to the hidden layer space. The RBFNN can approximate any non-linear function, which not only speeds up convergence and avoids the issue of local minima, but also fits the EIT image reconstruction requirements.

Figure 2.

The classical RBFNN algorithm flow. The K-means algorithm is used to adjust the network center, the KNN algorithm to determine the network base width, and the LSM algorithm to calculate the connection weights to finally obtain the predicted conductivity of the EIT reconstructed image.

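In the network operation structure, the input layer is $x = (x_1, x_2, \ldots, x_n)^T$. When a Gaussian function is used for the basis function in the radial basis neural network, the predicted conductivity can be expressed as:

$$y_j = \sum_{i=1}^{h} w_{ij} \exp\left( -\frac{\lVert x - c_i \rVert^2}{2 b_i^2} \right)$$

where $\lVert \cdot \rVert$ is the Euclidean norm, $c_i$ is the center of the $i$-th Gaussian function, $b_i$ is the corresponding element of the base width vector, $w_{ij}$ are the elements of the vector of connection weights, and $h$ is the number of hidden-layer nodes.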
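The forward pass described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than the authors' implementation: the function name `rbf_forward` and the list-of-lists layout of the weights are assumptions made for the example, and the Gaussian is written with the common $2b^2$ denominator.

```python
import math

def rbf_forward(x, centers, widths, weights):
    """Evaluate a Gaussian RBF network for one input vector x.

    centers: h center vectors; widths: h base widths (assumed positive);
    weights: h rows, one weight per output element (hypothetical layout).
    """
    # Hidden layer: Gaussian of the Euclidean distance to each center.
    hidden = []
    for c, b in zip(centers, widths):
        dist_sq = sum((xi - ci) ** 2 for xi, ci in zip(x, c))
        hidden.append(math.exp(-dist_sq / (2.0 * b ** 2)))
    # Output layer: linear combination of the hidden activations.
    n_out = len(weights[0])
    return [sum(hidden[i] * weights[i][j] for i in range(len(hidden)))
            for j in range(n_out)]

# Toy example: two hidden nodes, one output element.
prediction = rbf_forward([0.0, 0.0],
                         centers=[[0.0, 0.0], [1.0, 0.0]],
                         widths=[1.0, 1.0],
                         weights=[[1.0], [2.0]])
```

In an EIT setting the input would be the vector of boundary voltages and each output element one conductivity value of the reconstructed image.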

The center vector of the function, the base width vector, and the vector of weights from the hidden layer to the output layer are the unknown parameters that the network must learn. The following are the stages in its learning algorithm:

1. Finding the center of the basis function based on the K-means method.

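The center can be adjusted by the following formula:

$$c_i(t+1) = \begin{cases} c_i(t) + \eta \left[ x_p - c_i(t) \right], & i = j \\ c_i(t), & i \neq j \end{cases}$$

where $x_p$ is the training sample vector of the input $p$, $c_i(t)$ is the $i$-th center of the RBF at the $t$-th iteration, $j$ is the index of the cluster center closest to $x_p$, $\eta$ is the iteration step, and $0 < \eta < 1$. After learning all the training samples, and when the cluster center change satisfies the iteration condition, the iteration stops.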

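2. Solving for the base width $b_i$.

The basis function of this neural network is a Gaussian function, and its base width (the standard deviation of the Gaussian) can be solved as follows:

$$b_i = \frac{c_{\max}}{\sqrt{2h}}, \quad i = 1, 2, \ldots, h$$

where $c_{\max}$ is the maximum distance between the selected centers and $h$ is the number of centers.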

3. Calculating the weights between the hidden and output layers.

The weights of the neuron connections between the hidden layer and the output layer are directly calculated by the LSM with the following equation:

Through the above learning steps, the learning algorithm of RBFNN is constructed.
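The first two learning steps can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' code: the helper names (`euclid`, `update_centers`, `base_width`), the step size, and the stopping tolerance are hypothetical, and the width uses the common rule of dividing the maximum center distance by $\sqrt{2h}$, which matches the description of the maximum distance between the selected centers.

```python
import math

def euclid(u, v):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def update_centers(samples, centers, step=0.1, tol=1e-6, max_epochs=100):
    """Online K-means: pull the nearest center toward each training sample."""
    centers = [list(c) for c in centers]
    for _ in range(max_epochs):
        largest_shift = 0.0
        for x in samples:
            # Index of the center closest to the current sample.
            j = min(range(len(centers)), key=lambda i: euclid(x, centers[i]))
            moved = [c + step * (xi - c) for c, xi in zip(centers[j], x)]
            largest_shift = max(largest_shift, euclid(moved, centers[j]))
            centers[j] = moved
        # Stop once no center moved noticeably during a full pass.
        if largest_shift < tol:
            break
    return centers

def base_width(centers):
    """Shared base width from the maximum distance between centers."""
    h = len(centers)
    c_max = max(euclid(a, b) for a in centers for b in centers)
    return c_max / math.sqrt(2.0 * h)

fitted = update_centers([[0.0, 0.0], [0.0, 0.0], [10.0, 10.0]],
                        [[1.0, 1.0], [9.0, 9.0]])
width = base_width([[0.0, 0.0], [3.0, 4.0]])
```

The third step, the least squares solution for the output weights, is omitted here because it needs a linear solver.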

2.3. Grey Wolf Algorithm for Optimizing RBFNN Models

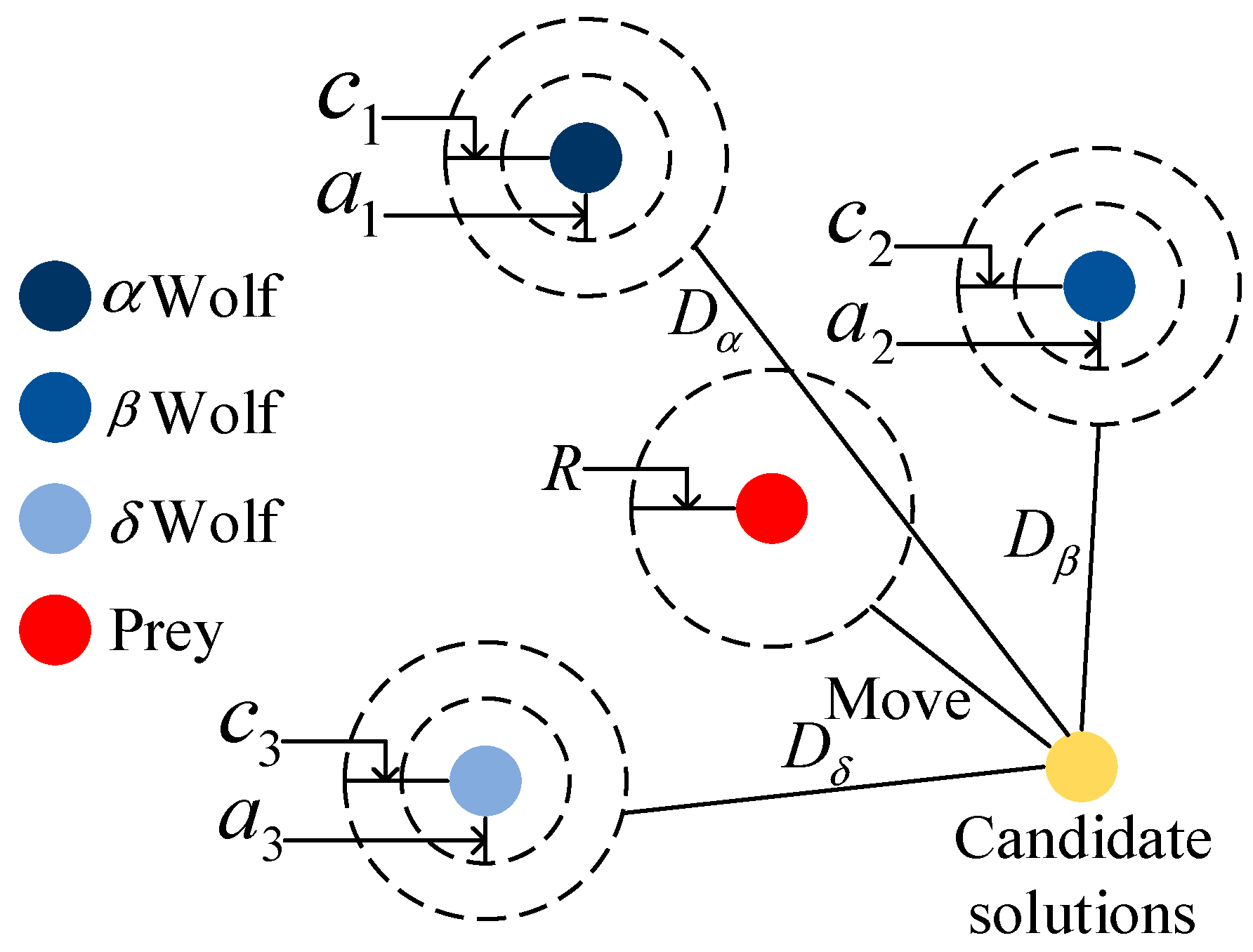

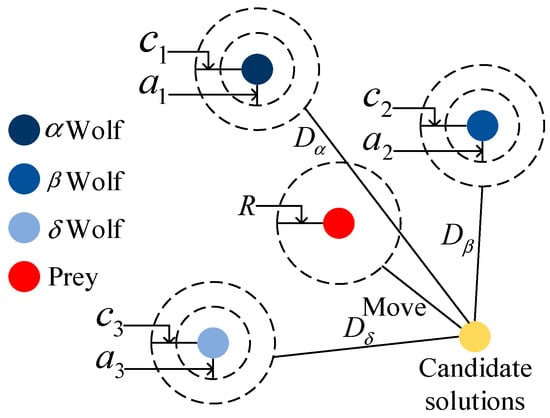

To improve the accuracy of RBFNN reconstructed images, we propose to use the improved grey wolf optimization (GWO) algorithm to obtain stable network weights. First, a grey wolf social hierarchy model is constructed when designing the GWO algorithm. Second, the fitness of each individual in the population is calculated. Last, the three grey wolves with the best fitness in the pack are marked as $\alpha$, $\beta$, and $\delta$ in turn, while the remaining grey wolves are marked as $\omega$. The hunting behavior is shown in Figure 3.

Figure 3.

The optimization process of the grey wolf optimization algorithm. Taking $\alpha$ as the most suitable solution, the hunt is guided by the three wolves $\alpha$, $\beta$, and $\delta$, and the $\omega$ wolves follow these three wolves.

The behavior of the grey wolf for hunting its prey is defined as shown in Equations (8) and (9):

$$D = \left| C \cdot X_p(t) - X(t) \right| \tag{8}$$

$$X(t+1) = X_p(t) - A \cdot D \tag{9}$$

where Equation (8) represents the distance between an individual and its prey, and Equation (9) is the position update formula for the grey wolf; $t$ is the current iteration number, $A$ and $C$ are the coefficient vectors, and $X_p$ and $X$ are the position vector of the prey and the position vector of the grey wolf, respectively. $A$ and $C$ are calculated as follows:

$$A = 2a \cdot r_1 - a$$

$$C = 2 r_2$$

where $a$ is a convergence factor that decreases linearly from 2 to 0 with the number of iterations, and $r_1$ and $r_2$ are random vectors whose components lie in [0, 1]. The $C$ vector provides random weights for the prey. In order to simulate approaching prey, the value of $a$ is gradually reduced: as $a$ decreases linearly from 2 to 0 during the iterations, the corresponding value of $A$ varies within the interval $[-a, a]$.
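As an illustration of the encircling step, the sketch below follows the standard GWO formulation, with a distance term, a position update, and the two coefficient vectors drawn per dimension. The function names and the per-dimension sampling of the random numbers are assumptions of this example.

```python
import random

def convergence_factor(t, max_iter):
    """Convergence factor a, decreasing linearly from 2 to 0."""
    return 2.0 * (1.0 - t / max_iter)

def encircle(wolf, prey, a, rng):
    """Move one wolf relative to a prey estimate (single leader)."""
    new_position = []
    for x, x_prey in zip(wolf, prey):
        A = 2.0 * a * rng.random() - a   # coefficient in [-a, a]
        C = 2.0 * rng.random()           # random weight on the prey, in [0, 2]
        D = abs(C * x_prey - x)          # distance between wolf and prey
        new_position.append(x_prey - A * D)
    return new_position

rng = random.Random(0)
moved = encircle([1.0, 2.0], [3.0, 4.0], convergence_factor(5, 10), rng)
```

Once the convergence factor reaches 0 the coefficient A vanishes, so the wolf lands exactly on the prey estimate, which is how the pack contracts at the end of the search.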

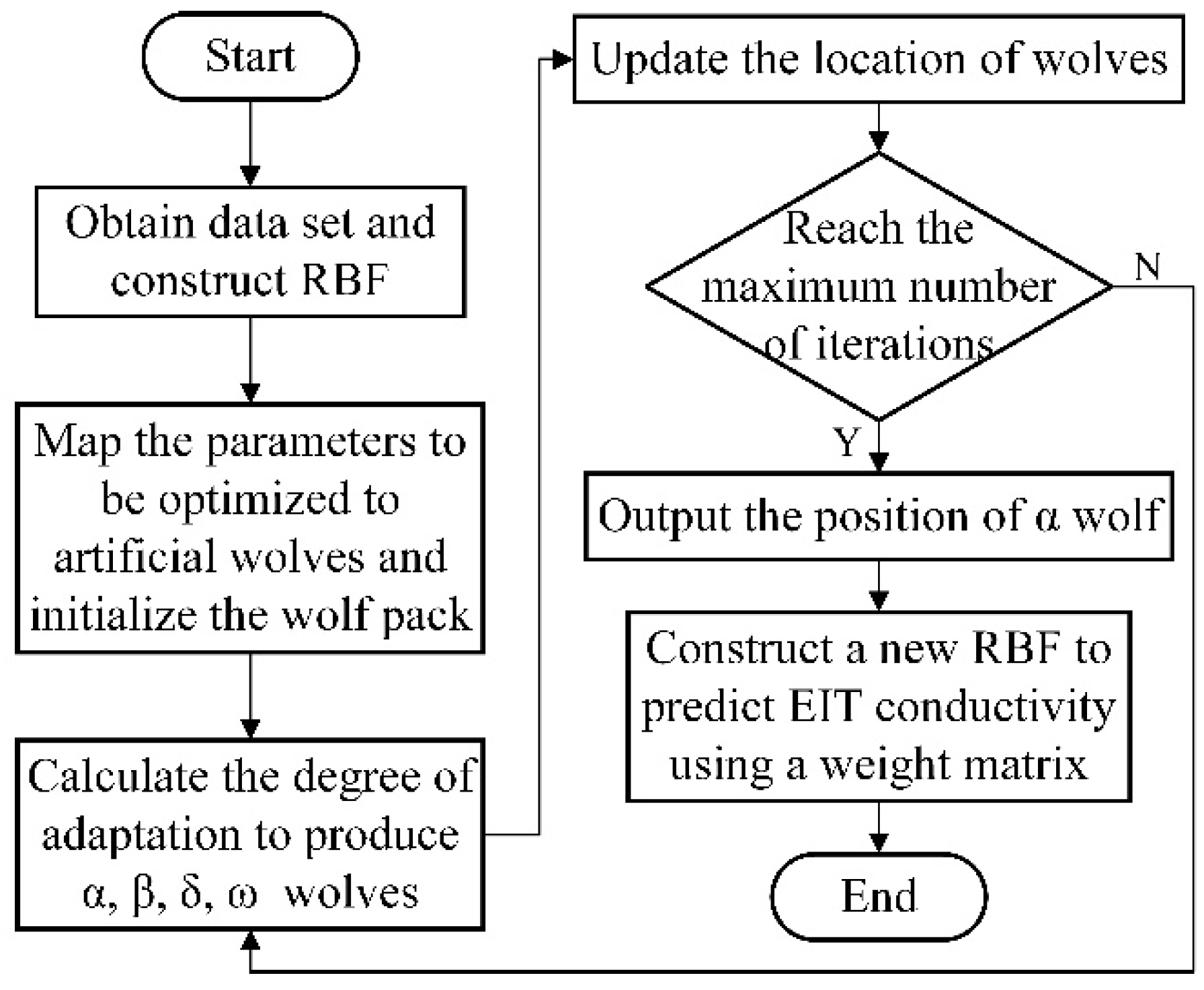

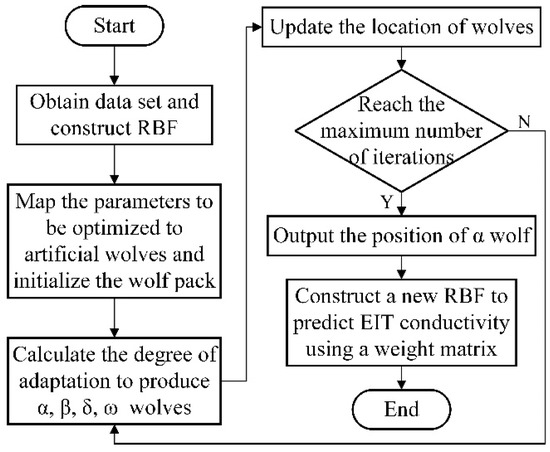

The connection weights of the RBFNN are crucial parameters that directly affect the reliability of the predicted EIT reconstruction results. The LSM algorithm treats the mapping from the hidden layer to the output layer as linear and needs to calculate an inverse matrix, ignoring the case in which the inverse matrix does not exist. To solve this problem, we use the GWO algorithm to optimize the connection weights W, instead of calculating the inverse matrix, and thereby improve the prediction accuracy of the RBFNN. The specific optimization process of the proposed algorithm is shown in Figure 4, and described as follows:

Figure 4.

Flowchart of the EIT algorithm based on the proposed GWO-RBFNN. Using the optimized algorithm to calculate the internal conductivity of the EIT results in a reconstructed image.

(1) The EIT using 16 electrodes was modeled and simulated. The dataset was acquired using COMSOL in combination with MATLAB simulation and then separated into a test and training set based on this model.

(2) Initializing the grey wolf algorithm. Firstly, the positions of the individual artificial grey wolves are generated randomly in the definition domain. Secondly, a mapping between the grey wolf position dimensions and the connection weights W is established. Lastly, the weight matrix W from the hidden layer to the output layer of the neural network is mapped into the position vector of the artificial grey wolves to construct the RBFNN model.

(3) Calculating the fitness. The RMSE (root mean square error) of the output of the neural network, described in Equation (12), is used as the fitness function of the grey wolf algorithm. The fitness function is a measure of the merit of the position of an individual grey wolf: the smaller the value of the fitness function S, the better the position. It is defined as follows:

$$S = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \left( y_i - \hat{y}_i \right)^2} \tag{12}$$

where $y_i$ is the training output, $\hat{y}_i$ is the expected value, and N is the size of the entire training sample.

(4) Updating the location of the grey wolf. The optimal wolf position is calculated by the grey wolf algorithm and remapped to the connection weights of the RBFNN hidden layer to the output layer.

(5) When the end condition is satisfied, the iteration is stopped. The optimized weights are obtained, and the optimal solution is applied to the RBFNN. The trained RBFNN model is then obtained by using the optimized weights as the connection weights from the hidden layer to the output layer, and the reconstructed image of the EIT is predicted using the test set.
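Steps (2) to (5) can be sketched as a small optimizer over the output weights. The sketch makes several assumptions that are not taken from the paper: a single output element, hidden-layer activations that are already computed, the standard GWO rule of averaging the three leader-guided positions, and hypothetical values for the pack size, iteration count, and search bound.

```python
import math
import random

def rmse_fitness(weights, hidden, targets):
    """RMSE between the network output (hidden . weights) and the targets."""
    total = 0.0
    for row, y in zip(hidden, targets):
        predicted = sum(h * w for h, w in zip(row, weights))
        total += (predicted - y) ** 2
    return math.sqrt(total / len(targets))

def gwo_optimize(hidden, targets, n_wolves=20, max_iter=200, bound=5.0, seed=0):
    """Search for output weights that minimize the RMSE fitness."""
    rng = random.Random(seed)
    dim = len(hidden[0])

    def fitness(w):
        return rmse_fitness(w, hidden, targets)

    # Each wolf position is one candidate weight vector.
    wolves = [[rng.uniform(-bound, bound) for _ in range(dim)]
              for _ in range(n_wolves)]
    leaders = [list(w) for w in sorted(wolves, key=fitness)[:3]]
    for t in range(max_iter):
        a = 2.0 * (1.0 - t / max_iter)  # convergence factor, 2 -> 0
        for k, wolf in enumerate(wolves):
            position = []
            for d in range(dim):
                guided = 0.0
                for leader in leaders:  # alpha, beta, delta
                    A = 2.0 * a * rng.random() - a
                    C = 2.0 * rng.random()
                    D = abs(C * leader[d] - wolf[d])
                    guided += leader[d] - A * D
                position.append(guided / 3.0)
            wolves[k] = position
        # Keep the three best positions seen so far as the new leaders.
        leaders = [list(w) for w in sorted(wolves + leaders, key=fitness)[:3]]
    return leaders[0], fitness(leaders[0])

# Toy problem whose exact solution is the weight vector [2, -1].
toy_hidden = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
toy_targets = [2.0, -1.0, 1.0]
best_weights, best_score = gwo_optimize(toy_hidden, toy_targets)
```

Because the leaders are only replaced by better positions, the best fitness never gets worse from one iteration to the next. No matrix inverse is computed at any point.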

3. Results

3.1. Acquisition of Datasets

The quality of the dataset has a substantial influence on the network model’s generalization capability, and neural network learning needs a large number of samples to train the network model. Simulation datasets were created using combined COMSOL and MATLAB simulations, because a large number of samples with known conductivity distributions and accompanying boundary voltage measurements cannot be obtained from real systems.

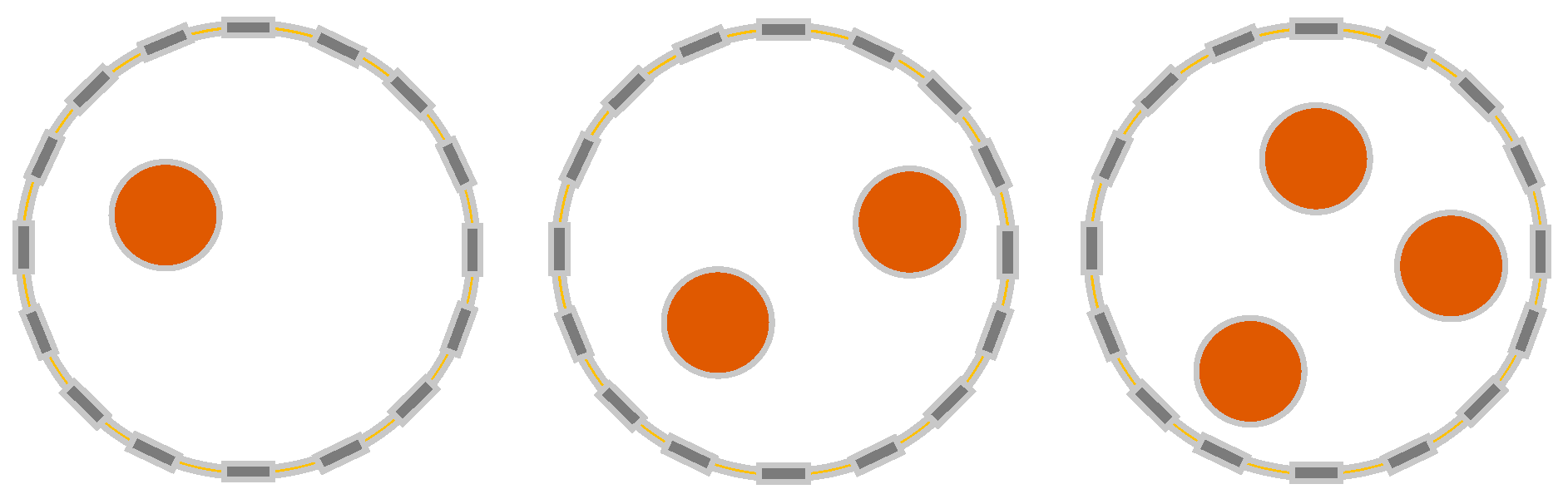

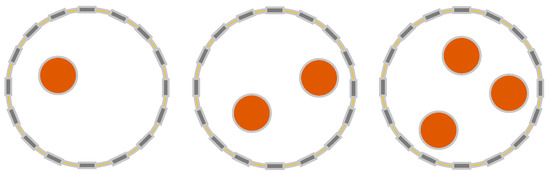

A 16-electrode configuration is chosen in this work because 16-electrode EIT systems are widely used and give a satisfactory resolution. Using a 16-electrode EIT system with adjacent current excitation and adjacent voltage measurement modes, simulations were run to reconstruct the field’s internal conductivity distributions. The target shape should make it easy to compare the target with the reconstructed image, so that the effect of the algorithm optimization is highlighted. Compared to other target shapes, circles give very good image reconstruction results. Therefore, the classical circular target object is chosen. Circular targets with a diameter of 10 mm were randomly placed in a circular physical field with a diameter of 95 mm, as shown in Figure 5. The greater the difference between the conductivity of the background solution and the conductivity of the target, the more sensitive the change in boundary voltage and the better the image reconstruction. Thus, in order to obtain good image reconstruction, the conductivity of the background solution was set to S/m and the conductivity of the circular target was chosen as S/m. To obtain the internal conductivity distributions, a 0.5 mA excitation current was applied, and the measurement frequency was set to 50 kHz. Since the targets in this paper are mostly near the boundary, the adjacent excitation method was chosen so that high sensitivity to changes in conductivity near the boundary can be obtained.
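The adjacent excitation and adjacent measurement pattern can be enumerated as in the sketch below. It assumes the common convention that voltage pairs sharing an electrode with the driving pair are skipped; the paper does not state this detail, and the function name is hypothetical.

```python
def adjacent_protocol(n_electrodes=16):
    """List (drive_pair, measure_pair) combinations for adjacent drive
    and adjacent measurement, skipping pairs that touch a drive electrode."""
    frames = []
    for d in range(n_electrodes):
        drive = (d, (d + 1) % n_electrodes)
        for m in range(n_electrodes):
            measure = (m, (m + 1) % n_electrodes)
            # Skip voltage pairs that include a current-carrying electrode.
            if set(drive) & set(measure):
                continue
            frames.append((drive, measure))
    return frames

frames = adjacent_protocol(16)
n_measurements = len(frames)
```

Under this convention each of the 16 drive positions leaves 13 usable voltage pairs, so one frame holds 16 × 13 = 208 boundary voltages.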

Figure 5.

The different EIT configurations for the simulations.

Single, double, and triple circular targets were investigated. To obtain the conductivity distributions and accompanying boundary voltage values, each group underwent 1700 numerical simulations with varied target locations. Of these, 1500 samples were used for training, and the remaining 200 samples were used for testing. This ensured that the training and testing sets did not overlap.
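A non-overlapping 1500/200 split can be sketched as below. The random shuffle and the fixed seed are assumptions of this example; the paper only states the sizes of the two sets and that they do not overlap.

```python
import random

def split_indices(n_samples=1700, n_train=1500, seed=0):
    """Shuffle the sample indices once and cut them into two disjoint parts."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return indices[:n_train], indices[n_train:]

train_idx, test_idx = split_indices()
```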

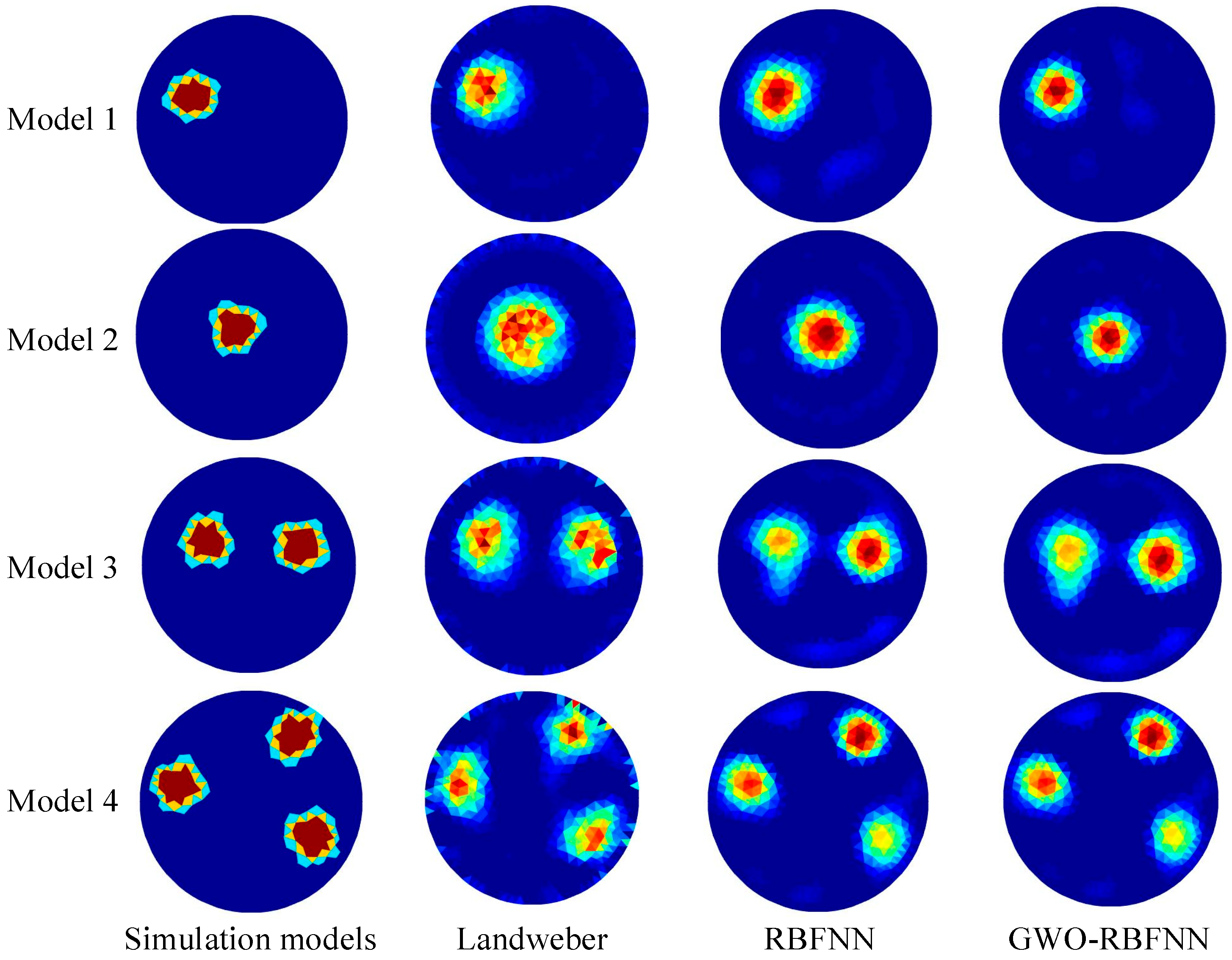

3.2. Simulation Results

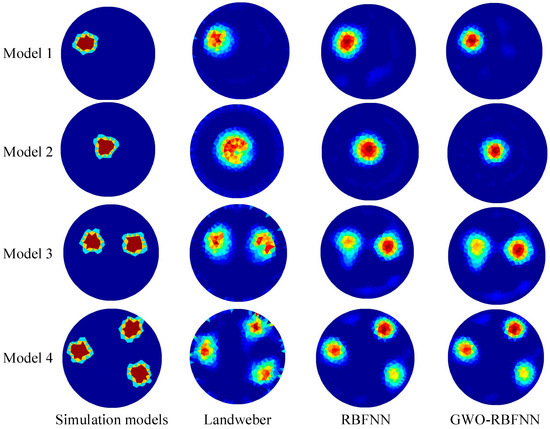

After training with 1500 samples, the improved model was used to predict the reconstructed images of the test sets. Typical models from the noise-free test set and their reconstructed images with different algorithms are shown in Figure 6. Two commonly used algorithms, Landweber and RBFNN, were also employed for comparison to demonstrate the prediction accuracy of the proposed GWO-RBFNN. The findings reveal that all of the algorithms can reconstruct images of targets in various configurations. At the same time, the proposed GWO-RBFNN outperforms the other two algorithms in terms of prediction precision, especially when a single circular target is positioned in the center of the background solution.

Figure 6.

Typical models from the noise-free test set and their reconstructed images with different algorithms.

The RMSE and the ICC were selected as the rating criteria to quantitatively assess the image reconstruction quality of the various algorithms. The RMSE gives a good indication of the accuracy of the observations: the smaller the value of RMSE, the better the reconstruction of the conductivity value. The closer the correlation coefficient is to 1 or −1, the stronger the correlation, and the closer it is to 0, the weaker the correlation. They are described by the following formulae:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left( \hat{\sigma}_i - \sigma_i \right)^2} \tag{13}$$

$$\mathrm{ICC} = \frac{\sum_{i=1}^{n} \left( \hat{\sigma}_i - \bar{\hat{\sigma}} \right) \left( \sigma_i - \bar{\sigma} \right)}{\sqrt{\sum_{i=1}^{n} \left( \hat{\sigma}_i - \bar{\hat{\sigma}} \right)^2 \sum_{i=1}^{n} \left( \sigma_i - \bar{\sigma} \right)^2}} \tag{14}$$

where $\hat{\sigma}$ and $\bar{\hat{\sigma}}$ are the estimated conductivity and its average value, respectively; $\sigma$ and $\bar{\sigma}$ are the original conductivity and its average value, respectively; and n is the number of elements in the finite element model. The RMSE and ICC averages of the reconstructed images, estimated with Equations (13) and (14) for all test sets and the various approaches, are shown in Table 1. The proposed GWO-RBFNN approach clearly outperforms the Landweber and RBFNN algorithms in terms of image reconstruction quality, with the lowest RMSE value of 0.0848 and the greatest ICC value of 0.9551. Relative to the Landweber and RBFNN algorithms, the RMSE decreased by 46.3% and 20.2%, respectively, and the ICC improved by 14.4% and 9%, respectively.
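The two criteria can be sketched in plain Python as follows. The sketch assumes the absolute (not normalized) form of the RMSE and the usual Pearson form of the correlation coefficient, with both images given as flat lists of element conductivities.

```python
import math

def rmse(estimated, original):
    """Root mean square error over the finite element values."""
    n = len(original)
    return math.sqrt(sum((e - o) ** 2 for e, o in zip(estimated, original)) / n)

def icc(estimated, original):
    """Image correlation coefficient (Pearson correlation of two images)."""
    mean_e = sum(estimated) / len(estimated)
    mean_o = sum(original) / len(original)
    covariance = sum((e - mean_e) * (o - mean_o)
                     for e, o in zip(estimated, original))
    spread_e = sum((e - mean_e) ** 2 for e in estimated)
    spread_o = sum((o - mean_o) ** 2 for o in original)
    return covariance / math.sqrt(spread_e * spread_o)

error = rmse([0.0, 0.0], [3.0, 4.0])
correlation = icc([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
```

Note that the correlation coefficient is undefined for a perfectly uniform image, because the denominator is then zero.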

Table 1.

Averages of RMSE and ICC in the noiseless test set.

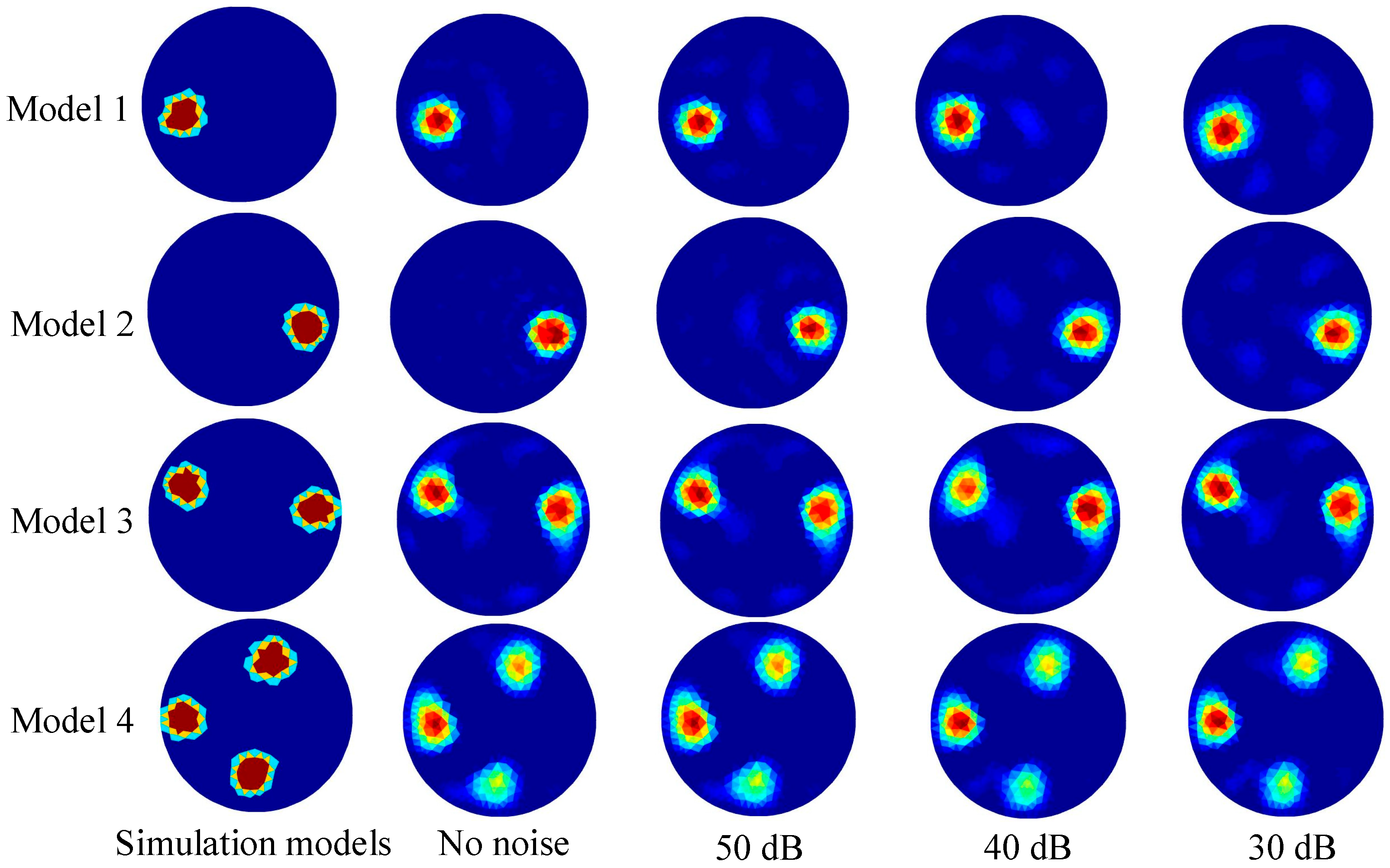

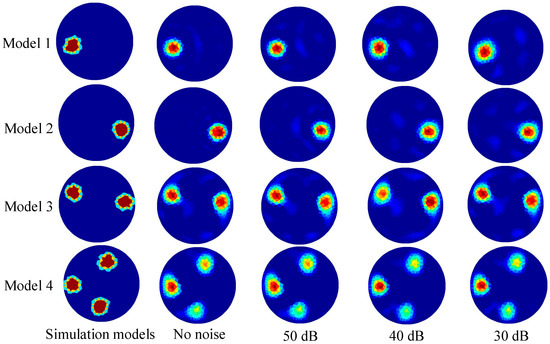

3.3. Robustness of the GWO-RBFNN

To test the robustness of the proposed method against noise, Gaussian white noise at levels of 30 dB, 40 dB, and 50 dB was added to the test sets. Reconstructions of exemplary models at the various noise levels, produced with the proposed GWO-RBFNN, are shown in Figure 7. The findings reveal that all of the models have high-quality image reconstructions.
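One common way to add such noise is sketched below. It assumes that the dB values are signal-to-noise ratios and that the noise is added to the boundary voltage vector; the paper states neither detail explicitly, and the function name is hypothetical.

```python
import math
import random

def add_white_noise(voltages, snr_db, rng=None):
    """Add zero-mean Gaussian noise so that the result has the requested SNR."""
    rng = rng or random.Random()
    signal_power = sum(v * v for v in voltages) / len(voltages)
    # SNR(dB) = 10 * log10(signal_power / noise_power)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    sigma = math.sqrt(noise_power)
    return [v + rng.gauss(0.0, sigma) for v in voltages]

clean = [math.sin(0.01 * k) for k in range(20000)]
noisy = add_white_noise(clean, 30.0, random.Random(1))
```

With this definition, 30 dB is the strongest of the three noise levels, which agrees with it giving the largest RMSE and the lowest ICC.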

Figure 7.

The reconstructed images of typical models with different noise levels using the proposed GWO-RBFNN.

The average values of RMSE and ICC obtained with the three approaches at the various noise levels in the test sets are shown in Table 2 and Table 3. The simulation results show that when noise is applied to the test set, the average RMSE increases while the average ICC decreases. Under Gaussian white noise of 30 dB, 40 dB, and 50 dB, the RMSEs of the proposed approach rise from 0.0848 to 0.1139, 0.0962, and 0.0915, respectively, which are increases of 34.3%, 13.4%, and 7.9%. The ICCs of the proposed method decrease from 0.9551 to 0.8966, 0.9197, and 0.9319, respectively, which are decreases of 6.1%, 3.7%, and 2.4%. However, all the RMSEs with the proposed GWO-RBFNN are lower than those of the other two algorithms, and all the ICCs with the proposed GWO-RBFNN are higher than those of the other two algorithms. The results show that the algorithm proposed in this paper exhibits better robustness than the frequently used Landweber and RBFNN algorithms.
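The percentages quoted above follow from the relative change with respect to the noise-free values, as this short check shows. The helper name `percent_change` is chosen for this example; the numbers are the ones reported in the text.

```python
def percent_change(baseline, value):
    """Relative change of value with respect to baseline, in percent."""
    return 100.0 * (value - baseline) / baseline

rmse_noisy = {30: 0.1139, 40: 0.0962, 50: 0.0915}   # noise level in dB -> RMSE
icc_noisy = {30: 0.8966, 40: 0.9197, 50: 0.9319}    # noise level in dB -> ICC

rmse_changes = {db: round(percent_change(0.0848, v), 1)
                for db, v in rmse_noisy.items()}
icc_changes = {db: round(percent_change(0.9551, v), 1)
               for db, v in icc_noisy.items()}
# rmse_changes -> {30: 34.3, 40: 13.4, 50: 7.9}
# icc_changes  -> {30: -6.1, 40: -3.7, 50: -2.4}
```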

Table 2.

Average RMSE with noise test set.

Table 3.

Average ICC with noise test set.

From Figure 6 and Figure 7, it can be seen that the GWO-RBFNN approach developed in this work not only has some noise immunity, but also has some generalization capacity to adapt to multi-target detection. According to the image reconstruction results of the multi-target test sets, when the GWO-RBFNN approach is used for multi-target imaging, the number of target objects is clearly recognized, and the position and size of the detected targets are correctly displayed. The related RMSE and ICC averages remain acceptable without considerable deterioration, indicating that the approach proposed in this paper is capable of satisfactory generalization.

4. Conclusions

A GWO-RBFNN approach is proposed in this paper to increase the accuracy of EIT image reconstruction. In order to improve the prediction accuracy of the network model, we first use the K-means method to adjust the network center. Then, we use the KNN algorithm to determine the network base width, and finally, we use the grey wolf algorithm to optimize the connection weight. A joint simulation using COMSOL and MATLAB was constructed to obtain 1700 EIT simulation samples for training and testing the performance of the proposed method. The image reconstruction results with noisy test sets demonstrate the robustness and generalization of the proposed GWO-RBFNN method. The GWO-RBFNN approach provides superior image reconstruction outcomes and artifact removal capacity compared to the Landweber and RBFNN methods, according to test findings from the 16-electrode EIT system.

Author Contributions

Conceptualization, G.W. and W.T.; software, G.W.; writing—original draft preparation, G.W. and D.F.; writing—review and editing, G.W. and W.T. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the China Postdoctoral Science Foundation (grant no. 2020M671450), the Jiangsu Planned Projects for Postdoctoral Research Funds (grant no. 2020Z042), the Natural Science Fund for Colleges and Universities in Jiangsu Province (grant no. 20KJA460004), and the Open Research Fund of Jiangsu Key Laboratory for Design and Manufacture of Micro-Nano Biomedical Instruments, Southeast University (grant no. KF202008).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Jongschaap, H.C.; Wytch, R.; Hutchison, J.M.; Kulkarni, V. Electrical impedance tomography: A review of current literature. Eur. J. Radiol. 1994, 18, 165–174.

- Tossavainen, O.P.; Vauhkonen, M.; Kolehmainen, V.; Kim, K.Y. Tracking of moving interfaces in sedimentation processes using electrical impedance tomography. Chem. Eng. Sci. 2006, 61, 7717–7729.

- Gomez-Cortes, J.C.; Diaz-Carmona, J.J.; Padilla-Medina, J.A.; Calderon, A.E.; Gutierrez, A.I.B.; Gutierrez-Lopez, M.; Prado-Olivarez, J. Electrical Impedance Tomography Technical Contributions for Detection and 3D Geometric Localization of Breast Tumors: A Systematic Review. Micromachines 2022, 13, 496.

- Hannan, S.; Aristovich, K.; Faulkner, M.; Avery, J.; Walker, M.C.; Holder, D.S. Imaging slow brain activity during neocortical and hippocampal epileptiform events with electrical impedance tomography. Phys. Meas. 2021, 42, 014001.

- Martins, T.D.; Sato, A.K.; de Moura, F.S.; de Camargo, E.; Silva, O.L.; Santos, T.B.R.; Zhao, Z.Q.; Moeller, K.; Amato, M.B.P.; Mueller, J.L.; et al. A review of electrical impedance tomography in lung applications: Theory and algorithms for absolute images. Ann. Rev. Control 2019, 48, 442–471.

- Bera, T.K.; Biswas, S.K.; Rajan, K.; Nagaraju, J. Projection Error Propagation-based Regularization (PEPR) method for resistivity reconstruction in Electrical Impedance Tomography (EIT). Measurement 2014, 49, 329–350.

- Zhao, B.; Wang, H.X.; Chen, X.Y.; Shi, X.L.; Yang, W.Q. Linearized solution to electrical impedance tomography based on the Schur conjugate gradient method. Meas. Sci. Technol. 2007, 18, 3373–3383.

- de Moura, B.F.; Martins, M.F.; Palma, F.H.S.; da Silva, W.B.; Cabello, J.A.; Ramos, R. Nonstationary bubble shape determination in Electrical Impedance Tomography combining Gauss-Newton Optimization with particle filter. Measurement 2021, 186, 110216.

- Ping, S.; Jiang, H.L. Design of electrical impedance tomography system based on layer stripping process. Appl. Mech. Mater. 2013, 329, 392–396.

- Adler, A.; Boyle, A. Electrical Impedance Tomography: Tissue Properties to Image Measures. IEEE Trans. Biomed. Eng. 2017, 64, 2494–2504.

- Bianchessi, A.; Akamine, R.H.; Duran, G.C.; Tanabi, N.; Sato, A.K.; Martins, T.C.; Tsuzuki, M.S.G. Electrical Impedance Tomography Image Reconstruction Based on Neural Networks. In Proceedings of the 21st IFAC World Congress on Automatic Control-Meeting Societal Challenges, Berlin, Germany, 11–17 July 2020; pp. 15946–15951.

- Martin, S.; Choi, C.T.M. Nonlinear Electrical Impedance Tomography Reconstruction Using Artificial Neural Networks and Particle Swarm Optimization. IEEE Trans. Magn. 2016, 52, 7203904.

- Duran, G.C.; Sato, A.K.; Ueda, E.K.; Takimoto, R.Y.; Martins, T.C.; Tsuzuki, M.S.G. Electrical Impedance Tomography Image Reconstruction using Convolutional Neural Network with Periodic Padding. In Proceedings of the 11th IFAC Symposium on Biological and Medical Systems (BMS), Ghent, Belgium, 19–22 September 2021; pp. 418–423.

- Michalikova, M.; Prauzek, M.; Koziorek, J. Impact of the Radial Basis Function Spread Factor onto Image Reconstruction in Electrical Impedance Tomography. In Proceedings of the 13th IFAC and IEEE Conference on Programmable Devices and Embedded Systems, Cracow, Poland, 13–15 May 2015; pp. 230–233.

- Wu, Y.; Hanzaee, F.F.; Jiang, D.; Bayford, R.H.; Demosthenous, A. Electrical Impedance Tomography for Biomedical Applications: Circuits and Systems Review. IEEE Open J. Circuits Syst. 2021, 2, 380–397.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).