Low-Density sEMG-Based Pattern Recognition of Unrelated Movements Rejection for Wrist Joint Rehabilitation

Abstract

1. Introduction

2. Methods

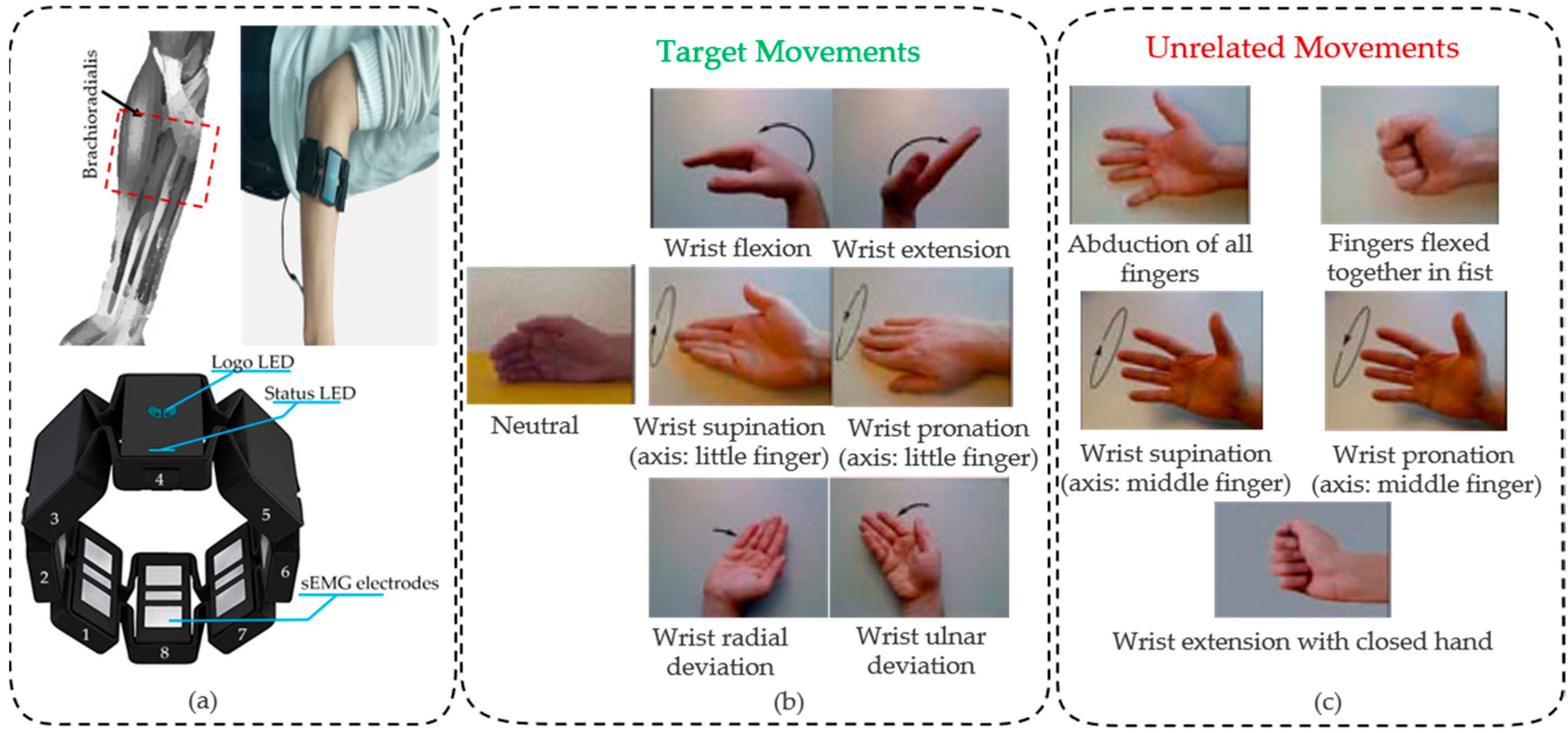

2.1. System Overview

2.2. sEMG Dataset

2.3. Data Segmentation and sEMG Image Encoding

2.4. The Discriminative Feature Extraction Network

2.5. Unrelated Movements Rejection Module

2.6. Evaluation Criteria

3. Experiment Results and Discussion

3.1. Influence of Different Hyper-Parameters

3.2. Performance of the Trained Model

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Ersoy, C.; Iyigun, G. Boxing training in patients with stroke causes improvement of upper extremity, balance, and cognitive functions but should it be applied as virtual or real? Top. Stroke Rehabil. 2021, 28, 112–126.

2. Yang, X.; Yan, J.; Fang, Y.; Zhou, D.; Liu, H. Simultaneous Prediction of Wrist/Hand Motion via Wearable Ultrasound Sensing. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 970–977.

3. Castiblanco, J.C.; Ortmann, S.; Mondragon, I.F.; Alvarado-Rojas, C.; Jöbges, M.; Colorado, J.D. Myoelectric pattern recognition of hand motions for stroke rehabilitation. Biomed. Signal Process. Control 2020, 57, 101737.

4. Yang, Z.; Guo, S.; Liu, Y.; Hirata, H.; Tamiya, T. An intention-based online bilateral training system for upper limb motor rehabilitation. Microsyst. Technol. 2020, 27, 211–222.

5. Gautam, A.; Panwar, M.; Biswas, D.; Acharyya, A. MyoNet: A Transfer-Learning-Based LRCN for Lower Limb Movement Recognition and Knee Joint Angle Prediction for Remote Monitoring of Rehabilitation Progress From sEMG. IEEE J. Transl. Eng. Health Med. 2020, 8, 1–10.

6. Xu, H.; Xiong, A. Advances and Disturbances in sEMG-Based Intentions and Movements Recognition: A Review. IEEE Sens. J. 2021, 21, 13019–13028.

7. Zhang, Y.; Chen, Y.; Yu, H.; Yang, X.; Lu, W. Learning Effective Spatial–Temporal Features for sEMG Armband-Based Gesture Recognition. IEEE Internet Things J. 2020, 7, 6979–6992.

8. Yang, Z.; Guo, S.; Suzuki, K.; Liu, Y.; Kawanishi, M. An EMG-based Biomimetic Variable Stiffness Modulation Strategy for Bilateral Motor Skills Relearning of Upper Limb Elbow Joint Rehabilitation. J. Bionic Eng. 2023, 1–16.

9. Liu, Y.; Guo, S.; Yang, Z.; Hirata, H.; Tamiya, T. A Home-based Tele-rehabilitation System with Enhanced Therapist-patient Remote Interaction: A Feasibility Study. IEEE J. Biomed. Health Inform. 2022, 26, 4176–4186.

10. Liu, Y.; Guo, S.; Yang, Z.; Hirata, H.; Tamiya, T. A Home-based Bilateral Rehabilitation System with sEMG-based Real-time Variable Stiffness. IEEE J. Biomed. Health Inform. 2020, 25, 1529–1541.

11. Bi, L.; Feleke, A.; Guan, C. A review on EMG-based motor intention prediction of continuous human upper limb motion for human-robot collaboration. Biomed. Signal Process. Control 2019, 51, 113–127.

12. Bu, D.; Guo, S.; Li, H. sEMG-Based Motion Recognition of Upper Limb Rehabilitation Using the Improved Yolo-v4 Algorithm. Life 2022, 12, 64.

13. Lambelet, C.; Temiraliuly, D.; Siegenthaler, M.; Wirth, M.; Woolley, D.G.; Lambercy, O.; Gassert, R.; Wenderoth, N. Characterization and wearability evaluation of a fully portable wrist exoskeleton for unsupervised training after stroke. J. NeuroEng. Rehabil. 2020, 17, 1–16.

14. Liu, C.; Li, J.; Zhang, S.; Yang, H.; Guo, K. Study on Flexible sEMG Acquisition System and Its Application in Muscle Strength Evaluation and Hand Rehabilitation. Micromachines 2022, 13, 2047.

15. Toledo-Perez, D.C.; Rodriguez-Resendiz, J.; Gomez-Loenzo, R.A. A Study of Computing Zero Crossing Methods and an Improved Proposal for EMG Signals. IEEE Access 2020, 8, 8483–8490.

16. Li, H.; Guo, S.; Wang, H.; Bu, D. Subject-independent Continuous Estimation of sEMG-based Joint Angles using both Multisource Domain Adaptation and BP Neural Network. IEEE Trans. Instrum. Meas. 2022, 72, 1–10.

17. Li, X.; Liu, J.; Huang, Y.; Wang, D.; Miao, Y. Human Motion Pattern Recognition and Feature Extraction: An Approach Using Multi-Information Fusion. Micromachines 2022, 13, 1205.

18. Yang, Z.; Guo, S.; Liu, Y.; Kawanishi, M.; Hirata, H. A Task Performance-based sEMG-driven Variable Stiffness Control Strategy for Upper Limb Bilateral Rehabilitation System. IEEE/ASME Trans. Mechatron. 2022, 1–12.

19. Xie, Q.; Meng, Q.; Zeng, Q.; Fan, Y.; Dai, Y.; Yu, H. Human-Exoskeleton Coupling Dynamics of a Multi-Mode Therapeutic Exoskeleton for Upper Limb Rehabilitation Training. IEEE Access 2021, 9, 61998–62007.

20. Ding, B.; Tian, F.; Zhao, L. Digital Evaluation Algorithm for Upper Limb Motor Function Rehabilitation Based on Micro Sensor. J. Med. Imaging Health Inform. 2021, 11, 391–401.

21. Xiong, D.; Zhang, D.; Zhao, X.; Zhao, Y. Deep Learning for EMG-based Human-Machine Interaction: A Review. IEEE/CAA J. Autom. Sin. 2021, 8, 512–533.

22. Bouteraa, Y.; Ben Abdallah, I.; Alnowaiser, K.; Islam, R.; Ibrahim, A.; Gebali, F. Design and Development of a Smart IoT-Based Robotic Solution for Wrist Rehabilitation. Micromachines 2022, 13, 973.

23. Yang, Z.; Guo, S.; Hirata, H.; Kawanishi, M. A Mirror Bilateral Neuro-Rehabilitation Robot System with the sEMG-Based Real-Time Patient Active Participant Assessment. Life 2021, 11, 1290.

24. Zhu, G.; Zhang, X.; Tang, X.; Chen, X.; Gao, X. Examining and monitoring paretic muscle changes during stroke rehabilitation using surface electromyography: A pilot study. Math. Biosci. Eng. 2020, 17, 216–234.

25. Wei, W.; Dai, Q.; Wong, Y.; Hu, Y.; Kankanhalli, M.; Geng, W. Surface electromyography-based gesture recognition by multi-view deep learning. IEEE Trans. Biomed. Eng. 2019, 66, 2964–2973.

26. Ding, Q.; Zhao, X.; Han, J.; Bu, C.; Wu, C. Adaptive hybrid classifier for myoelectric pattern recognition against the interferences of outlier motion, muscle fatigue, and electrode doffing. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1071–1080.

27. Robertson, J.W.; Englehart, K.B.; Scheme, E.J. Effects of confidence-based rejection on usability and error in pattern recognition-based myoelectric control. IEEE J. Biomed. Health Inform. 2018, 23, 2002–2008.

28. Wu, L.; Zhang, X.; Zhang, X.; Chen, X.; Chen, X. Metric learning for novel motion rejection in high-density myoelectric pattern recognition. Knowl.-Based Syst. 2021, 227, 107165.

29. Pizzolato, S.; Tagliapietra, L.; Cognolato, M.; Reggiani, M.; Müller, H.; Atzori, M. Comparison of six electromyography acquisition setups on hand movement classification tasks. PLoS ONE 2017, 12, e0186132.

30. Tepe, C.; Demir, M. Real-Time Classification of EMG Myo Armband Data Using Support Vector Machine. IRBM 2022, 43, 300–308.

31. Cote-Allard, U.; Fall, C.L.; Drouin, A.; Campeau-Lecours, A.; Gosselin, C.; Glette, K.; Laviolette, F.; Gosselin, B. Deep Learning for Electromyographic Hand Gesture Signal Classification Using Transfer Learning. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 760–771.

32. Bi, Z.; Wang, Y.; Wang, H.; Zhou, Y.; Xie, C.; Zhu, L.; Wang, H.; Wang, B.; Huang, J.; Lu, X.; et al. Wearable EMG Bridge-a Multiple-Gesture Reconstruction System Using Electrical Stimulation Controlled by the Volitional Surface Electromyogram of a Healthy Forearm. IEEE Access 2020, 8, 137330–137341.

33. Farzaneh, A.; Qi, X. Facial Expression Recognition in the Wild via Deep Attentive Center Loss. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2021; pp. 2402–2411.

34. van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

| Recall | Method | Target Movements: Averaged Acc (%) | Unrelated Movements Rejection: Averaged (%) | R1 (%) | R2 (%) | R3 (%) | R4 (%) | R5 (%) | Averaged F-Score (%) |
|---|---|---|---|---|---|---|---|---|---|
| - | SVM | 96.9 | - | - | - | - | - | - | - |
| - | LDA | 86.1 | - | - | - | - | - | - | - |
| 0.8 | LDA-MD | 77.4 | 84.0 | 68.3 | 96.7 | 85.0 | 76.7 | 93.3 | 78.5 |
| 0.8 | CNN-AE-S | 80.4 | 84.3 | 88.3 | 96.7 | 86.7 | 78.3 | 71.7 | 83.7 |
| 0.8 | CNN-AE-SC | 82.4 | 99.7 | 100 | 100 | 100 | 100 | 98.3 | 92.8 |
| 0.85 | LDA-MD | 82.2 | 72.7 | 56.7 | 95.0 | 65.0 | 56.7 | 90.0 | 75.5 |
| 0.85 | CNN-AE-S | 85.4 | 79.3 | 85.0 | 93.3 | 81.7 | 68.3 | 68.3 | 82.5 |
| 0.85 | CNN-AE-SC | 85.2 | 98.3 | 98.3 | 98.3 | 98.3 | 100 | 96.7 | 93.2 |
| 0.9 | LDA-MD | 85.4 | 59.0 | 45.0 | 90.0 | 45.0 | 33.3 | 81.7 | 72.2 |
| 0.9 | CNN-AE-S | 89.8 | 71.7 | 73.3 | 88.3 | 76.7 | 63.3 | 56.7 | 83.8 |
| 0.9 | CNN-AE-SC | 90.0 | 95.0 | 96.7 | 96.7 | 93.3 | 98.3 | 90.0 | 93.7 |
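In the rejection results above, the "Averaged" rejection value for each row appears to be the arithmetic mean of the five per-movement rejection rates R1–R5. The short Python check below is a sketch of that arithmetic, not part of the authors' pipeline. The row values are transcribed from the table. The helper names `mean_rejection` and `check_averages` are hypothetical, and the 0.05 percentage-point tolerance only allows for the table's one-decimal rounding.

```python
# Rejection rates transcribed from the results table:
# (recall, method) -> (reported averaged rejection %, [R1, R2, R3, R4, R5] in %)
ROWS = {
    (0.8, "LDA-MD"): (84.0, [68.3, 96.7, 85.0, 76.7, 93.3]),
    (0.8, "CNN-AE-S"): (84.3, [88.3, 96.7, 86.7, 78.3, 71.7]),
    (0.8, "CNN-AE-SC"): (99.7, [100, 100, 100, 100, 98.3]),
    (0.85, "LDA-MD"): (72.7, [56.7, 95.0, 65.0, 56.7, 90.0]),
    (0.85, "CNN-AE-S"): (79.3, [85.0, 93.3, 81.7, 68.3, 68.3]),
    (0.85, "CNN-AE-SC"): (98.3, [98.3, 98.3, 98.3, 100, 96.7]),
    (0.9, "LDA-MD"): (59.0, [45.0, 90.0, 45.0, 33.3, 81.7]),
    (0.9, "CNN-AE-S"): (71.7, [73.3, 88.3, 76.7, 63.3, 56.7]),
    (0.9, "CNN-AE-SC"): (95.0, [96.7, 96.7, 93.3, 98.3, 90.0]),
}


def mean_rejection(rates):
    """Arithmetic mean of the per-movement rejection rates (in %)."""
    return sum(rates) / len(rates)


def check_averages(rows, tol=0.05):
    """Return rows whose reported average differs from mean(R1..R5) by more than tol."""
    mismatches = []
    for key, (reported, rates) in rows.items():
        computed = mean_rejection(rates)
        if abs(computed - reported) > tol:
            mismatches.append((key, reported, round(computed, 2)))
    return mismatches


if __name__ == "__main__":
    for (recall, method), (reported, rates) in ROWS.items():
        print(f"recall={recall:<5} {method:<10} reported={reported:5.1f} "
              f"mean(R1..R5)={mean_rejection(rates):6.2f}")
    print("rows that do not match:", check_averages(ROWS))
```

Every row agrees with the reported average to within rounding. This supports reading the "Averaged" column as an unweighted mean over the five unrelated movements.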

| Study | sEMG Channels | sEMG Acquisition | Target Motions Recognition (%) | Unrelated Motions Rejection (%) |
|---|---|---|---|---|
| Q. Ding [26] | 5 | Delsys Trigno | 91.7 | |
| Y. Zhang [28] | 6 × 8 | High-density sEMG | 88.6 | 96.4 |
| Z. Bi [32] | 6 × 8 | Wearable EMG Bridge | 94.0 | - |
| This work (recall = 0.9) | 8 | Thalmic Myo armband | 90.0 | 95.0 |
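The results above are indexed by a recall value (0.8, 0.85, 0.9). For every method, target accuracy rises and unrelated-movement rejection falls as recall increases. The text here does not state how recall sets the rejection boundary.

The Python sketch below shows one common scheme, which is an assumption and not necessarily the authors' rejection module. It computes a center for each target class in feature space. It then picks a single distance threshold so that a fraction `recall` of training samples fall inside it. Any test sample farther than that threshold from its nearest center is rejected as unrelated.

The function names `fit_centers`, `fit_threshold` and `predict_with_rejection` are hypothetical. So are the Euclidean metric, the single global threshold, and the integer reject label `-1`. These choices are for illustration only.

```python
import numpy as np


def fit_centers(features, labels):
    """Mean feature vector of each target class (labels assumed to be integers)."""
    classes = np.unique(labels)  # sorted unique class labels
    centers = np.stack([features[labels == c].mean(axis=0) for c in classes])
    return classes, centers


def fit_threshold(features, labels, classes, centers, recall=0.9):
    """Distance threshold that keeps a fraction `recall` of training samples (assumed scheme)."""
    idx = np.searchsorted(classes, labels)  # row of each sample's own class center
    dist = np.linalg.norm(features - centers[idx], axis=1)
    return float(np.quantile(dist, recall))


def predict_with_rejection(x, classes, centers, threshold, reject_label=-1):
    """Nearest-center label, or reject_label if the nearest center is beyond the threshold."""
    dist = np.linalg.norm(x[:, None, :] - centers[None, :, :], axis=2)  # shape (n, k)
    nearest = dist.argmin(axis=1)
    out = classes[nearest]  # fancy indexing returns a new array
    out[dist[np.arange(len(x)), nearest] > threshold] = reject_label
    return out


if __name__ == "__main__":
    # Synthetic 2-D "features" for two target movements (illustration only).
    rng = np.random.default_rng(0)
    X = np.concatenate([rng.normal(0, 1, (200, 2)), rng.normal(6, 1, (200, 2))])
    y = np.repeat([0, 1], 200)
    classes, centers = fit_centers(X, y)
    for r in (0.8, 0.85, 0.9):
        print(f"recall={r}: threshold={fit_threshold(X, y, classes, centers, r):.3f}")
    thr = fit_threshold(X, y, classes, centers, recall=0.9)
    probes = np.array([[0.1, -0.2], [6.2, 5.9], [3.0, 20.0]])
    print(predict_with_rejection(probes, classes, centers, thr))
```

Under this scheme, a higher recall yields a larger threshold. More target samples are then accepted, but more unrelated samples slip through. That is the same direction of trade-off that appears in the results table.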

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bu, D.; Guo, S.; Guo, J.; Li, H.; Wang, H. Low-Density sEMG-Based Pattern Recognition of Unrelated Movements Rejection for Wrist Joint Rehabilitation. Micromachines 2023, 14, 555. https://doi.org/10.3390/mi14030555