Research on Intelligent Wheelchair Attitude-Based Adjustment Method Based on Action Intention Recognition

Abstract

1. Introduction

- The system designed in this article deploys multiple force sensors on the upper part of the wheelchair, which provides a more comprehensive perception of human posture and is less affected by external environmental interference, enabling better recognition of human movement intentions;

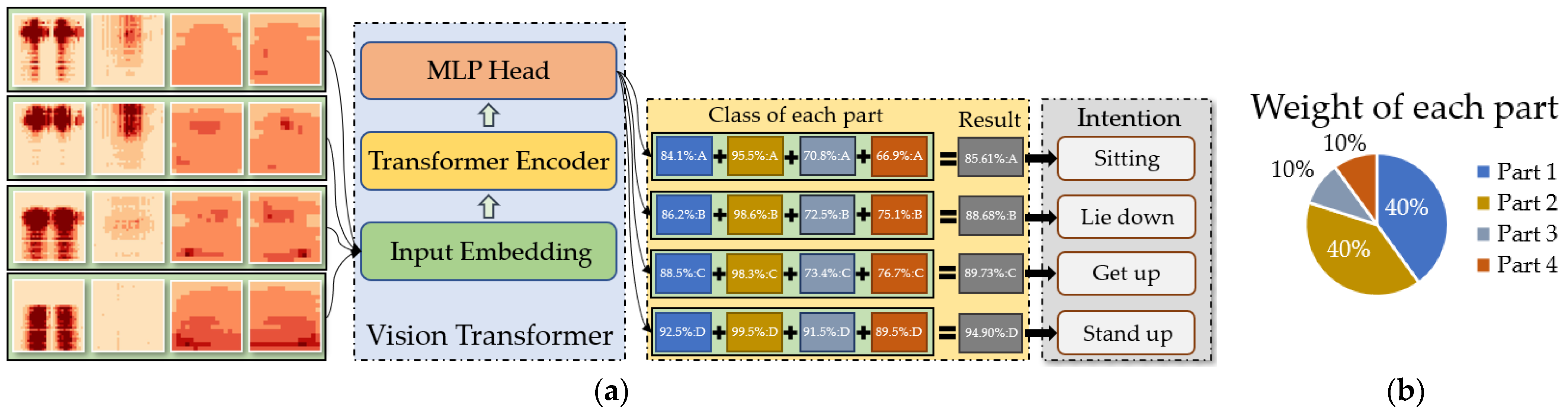

- A pressure image classification model based on the Vision Transformer (ViT) is proposed, which converts pressure data into image form and classifies the resulting images to recognize action intention;

- This study also proposes an adaptive pressure-data acquisition method, which is applied to the pressure sensor acquisition card at the seat cushion to ensure good recognition performance for users of different weights.
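The pressure-to-image step named in the second contribution can be illustrated with a minimal sketch. It assumes min-max scaling to 8-bit grayscale and the fixed-size, non-overlapping patch split that Vision Transformers commonly use as input; the function names, the 4×4 frame, and the 2×2 patch size are hypothetical and are not taken from the paper.

```python
def pressure_to_gray(matrix):
    """Min-max scale a 2D pressure matrix to 0..255 (assumed conversion)."""
    flat = [v for row in matrix for v in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        # A flat frame carries no contrast; map it to all zeros.
        return [[0 for _ in row] for row in matrix]
    return [[round(255 * (v - lo) / span) for v in row] for row in matrix]


def split_patches(image, size):
    """Split an image into non-overlapping size x size patches, row-major,
    each flattened to a list, as a ViT-style model would consume them."""
    rows, cols = len(image), len(image[0])
    if rows % size or cols % size:
        raise ValueError("image dimensions must be multiples of the patch size")
    patches = []
    for r in range(0, rows, size):
        for c in range(0, cols, size):
            patches.append(
                [image[r + dr][c + dc] for dr in range(size) for dc in range(size)]
            )
    return patches


# Hypothetical 4x4 frame of raw sensor readings.
frame = [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9, 10, 11], [12, 13, 14, 15]]
gray = pressure_to_gray(frame)
patches = split_patches(gray, 2)
print(gray[0])      # [0, 17, 34, 51]
print(patches[0])   # [0, 17, 68, 85]
```

Scaling each frame by its own minimum and maximum is only one possible choice; a fixed sensor range would preserve absolute pressure differences between frames.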

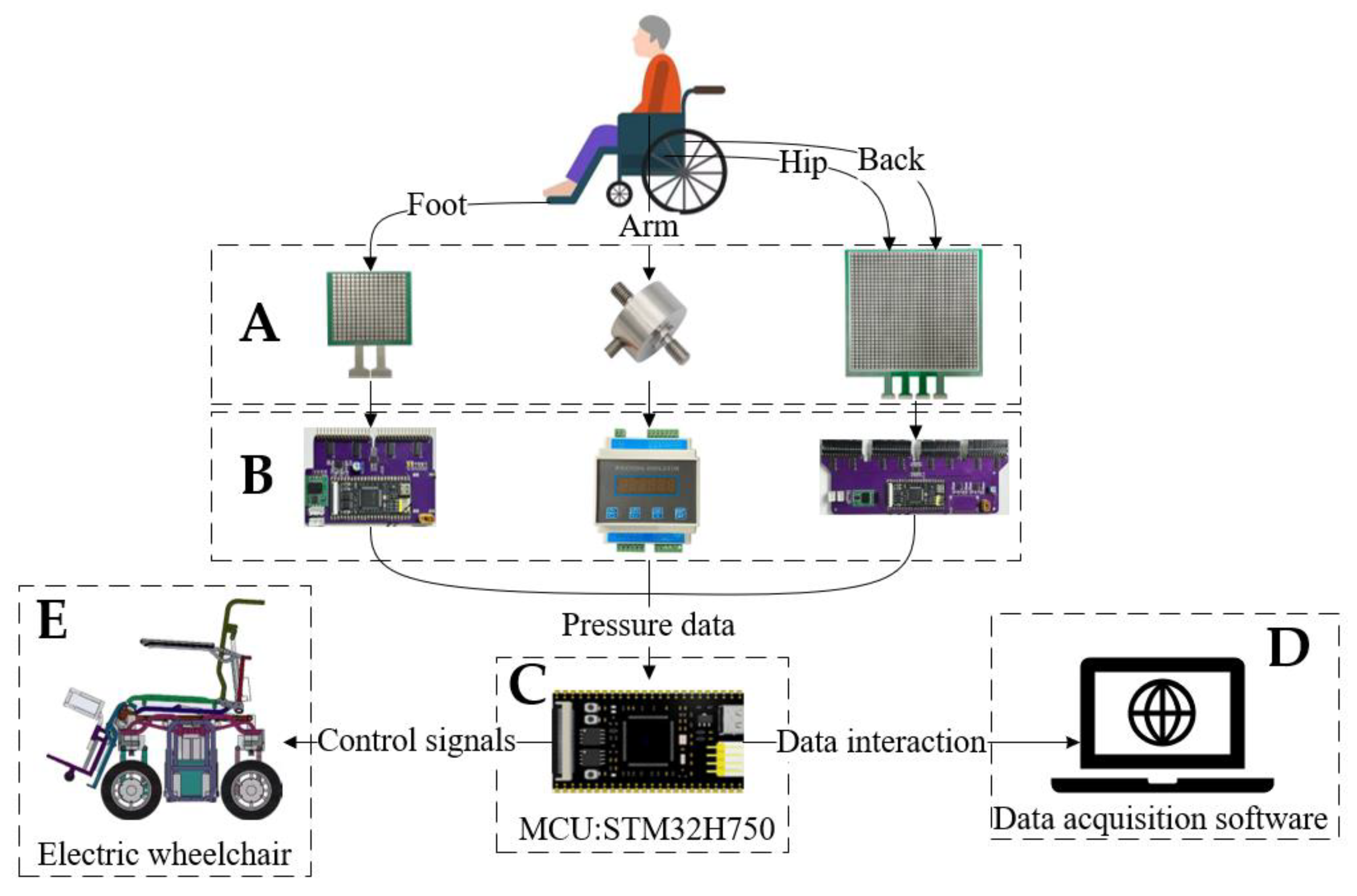

2. System Design

2.1. Electric Wheelchair Platform

2.2. Selection and Installation of Sensors

2.3. Data Acquisition Software

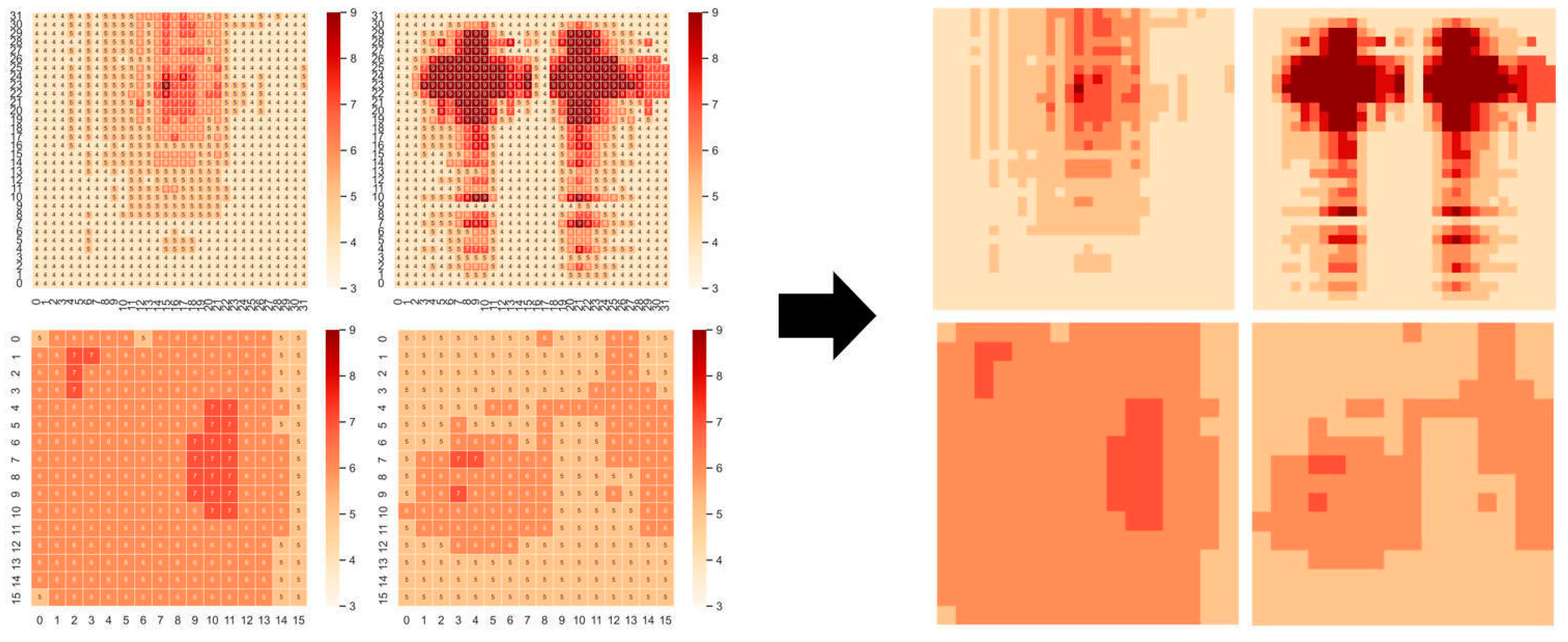

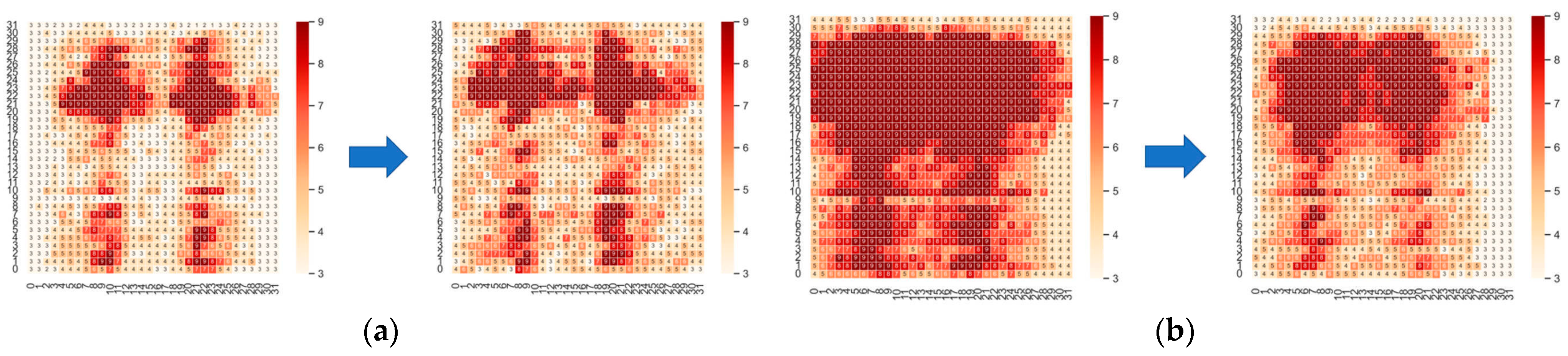

- Force distribution map display area: The collected data are converted into a visual graphic using the heatmap function provided by the Seaborn library. Seaborn is a Python visualization library built on Matplotlib, and the resulting heatmap gives a more intuitive view of the pressure distribution;

- Serial port parameter setting area: The user can set the baud rate and the serial port number. The remaining parameters keep their default values: 1 stop bit, no check (parity) bit, and 8 data bits;

- Data transmission and display area: The upper half is the data-receiving display area, and the lower half is the data-sending display area, both of which support HEX mode;

- Time display area: Displays the date while the upper computer software is running.
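The interface areas listed above can be sketched in a few lines of Python. This is an illustrative outline rather than the authors’ software: the `SerialSettings` class, the helper names, the example port and baud rate, and the 2×3 grid are all hypothetical. Only the defaults (1 stop bit, no parity, 8 data bits), the HEX display mode, and the use of Seaborn’s `heatmap` come from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SerialSettings:
    """Serial parameters; port and baud rate are chosen by the user."""
    port: str              # hypothetical example: "COM3"
    baudrate: int          # hypothetical example: 115200
    stopbits: int = 1      # default stated in the text
    parity: str = "NONE"   # default stated in the text (no check bit)
    databits: int = 8      # default stated in the text


def to_hex_view(payload: bytes) -> str:
    """Format raw bytes as space-separated hex pairs for a HEX-mode display."""
    return " ".join(f"{b:02X}" for b in payload)


def to_grid(readings, n_cols):
    """Reshape a flat list of sensor readings into rows for a heatmap."""
    if n_cols <= 0 or len(readings) % n_cols != 0:
        raise ValueError("readings do not fill a whole number of rows")
    return [list(readings[i:i + n_cols]) for i in range(0, len(readings), n_cols)]


def show_heatmap(grid):
    """Draw the force distribution map (requires seaborn and matplotlib)."""
    import matplotlib.pyplot as plt
    import seaborn as sns

    ax = sns.heatmap(grid, cmap="viridis", cbar=True, square=True)
    ax.set_title("Force distribution (illustrative)")
    plt.show()


settings = SerialSettings(port="COM3", baudrate=115200)
grid = to_grid([0, 5, 9, 2, 7, 1], n_cols=3)
print(to_hex_view(bytes([0x01, 0xAB, 0x10])))  # 01 AB 10
print(grid)                                     # [[0, 5, 9], [2, 7, 1]]
```

Calling `show_heatmap(grid)` would render the grid. The plotting imports are kept inside that function so that the parsing helpers can run without a display or the plotting libraries installed.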

3. Data Acquisition and Processing

3.1. Dataset

3.2. Data Acquisition

3.3. Adaptive Pressure-Data Acquisition Method

3.4. Pressure Data Visualization Processing

4. Methods

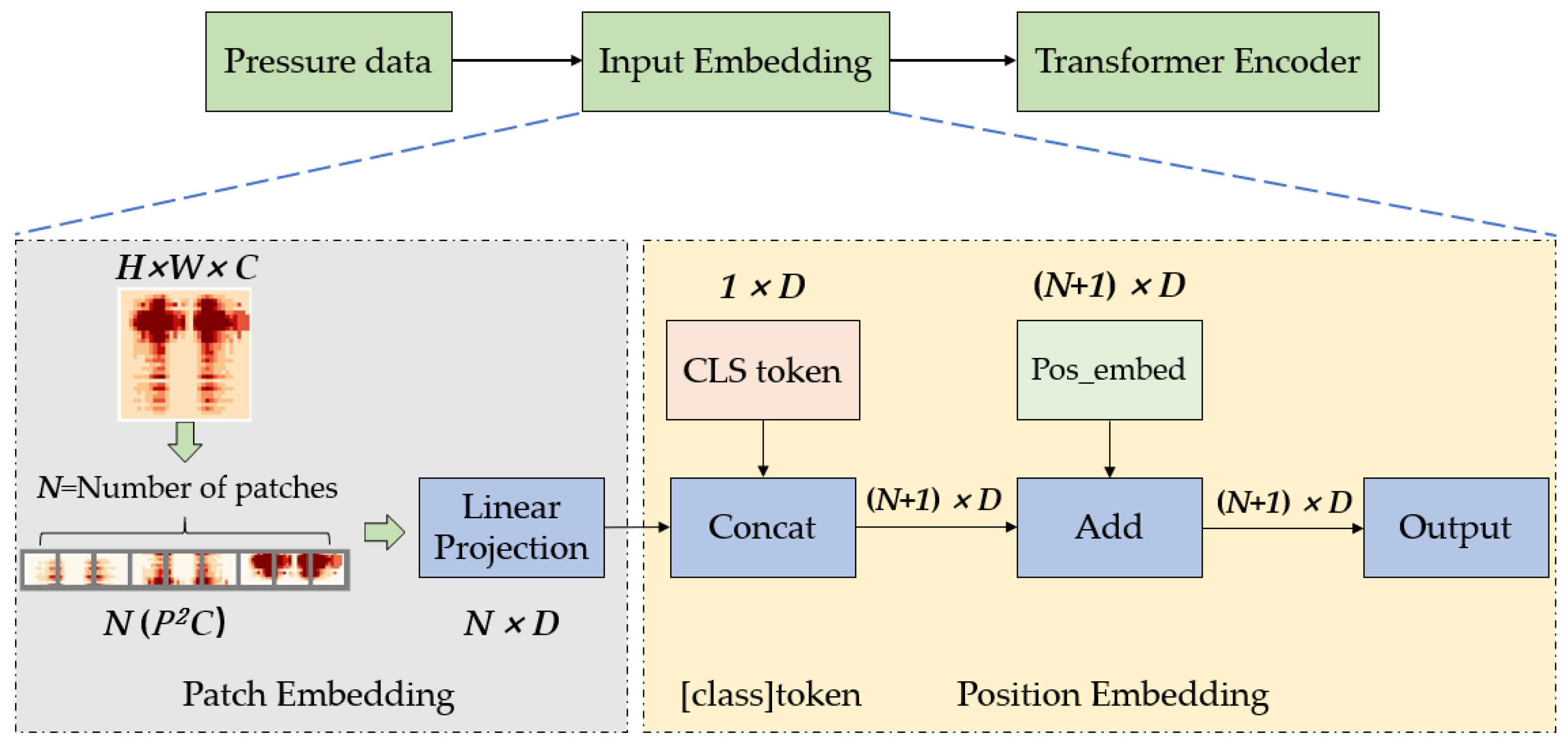

4.1. ViT-Based Pressure Image Recognition Algorithm

4.1.1. Pressure Image Input Processing

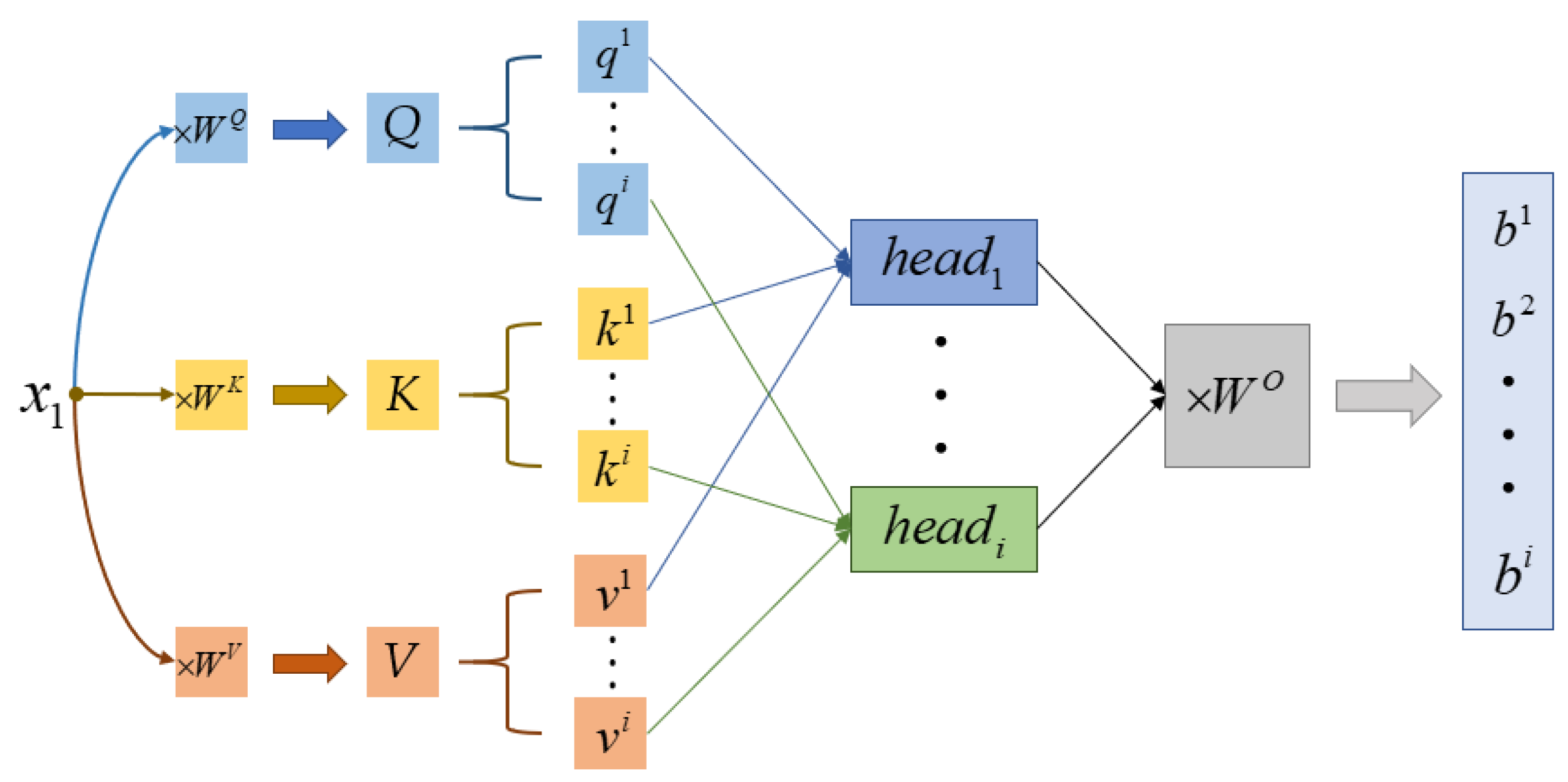

4.1.2. Feature Extraction Method

4.1.3. Pressure Image Classification and Intent Recognition

4.2. Handrail Pressure Data Serialization Classification Method

5. Experiments and Results

5.1. Performance Test of Adaptive Pressure-Data Collection Algorithm

5.2. Human Movement Intention Recognition Effect Test

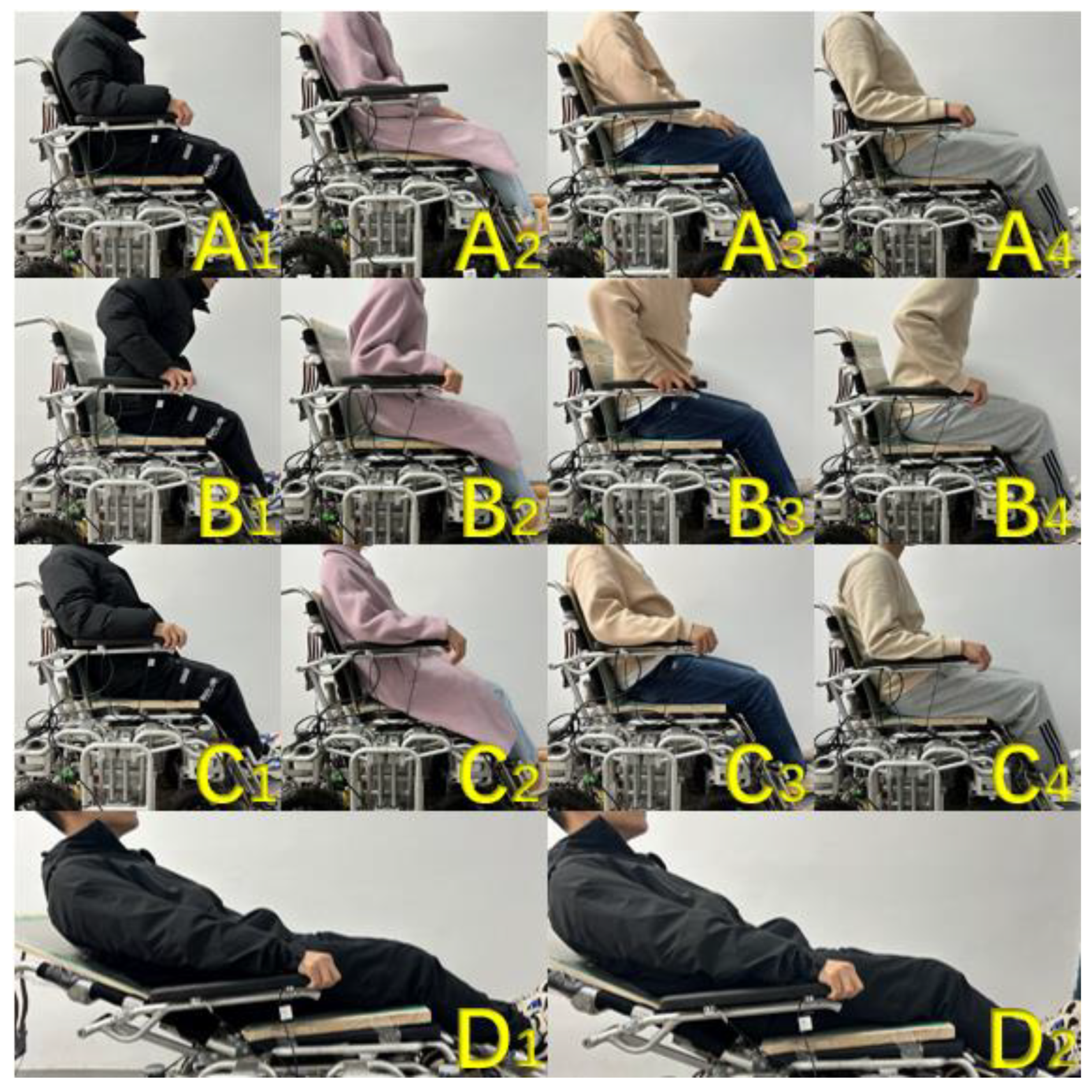

- Before the experiment began, each participant sat naturally in the wheelchair, with their body and limbs in free contact with the various parts of the wheelchair. The system used the method described in Section 3.3 to adjust the pressure data at the seat cushion. Because this part of the experiment tested only the accuracy of intention recognition, the adjustment function of the wheelchair was turned off during testing to keep the experimental conditions consistent;

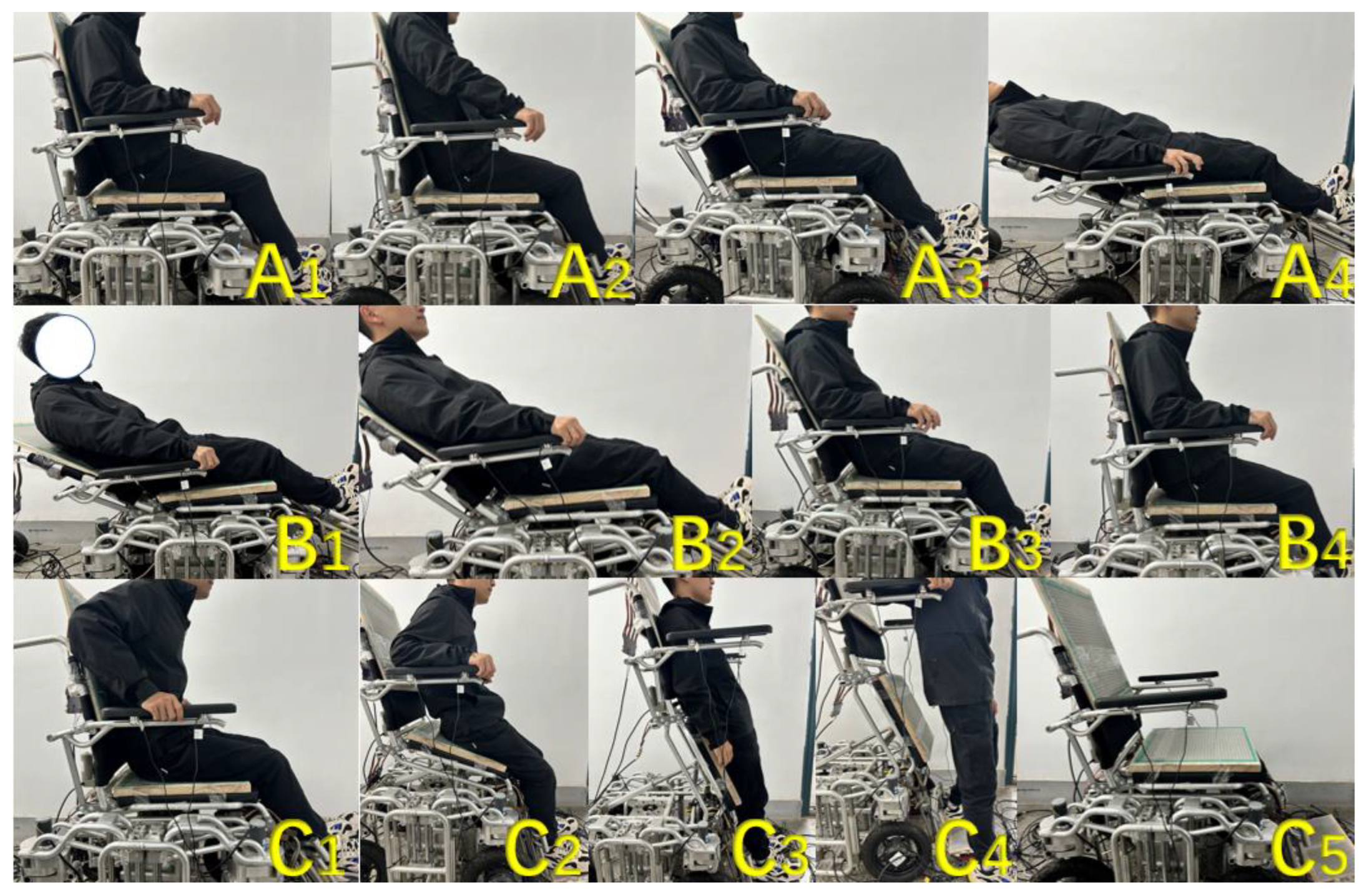

- After the adjustment was completed, the formal experimental phase began. Each participant was free to make any number of attempts to lie down, sit up, and stand up within a 5-min time frame. The experimental process is shown in Figure 13, where A1~A4 represent the normal sitting posture, B1~B4 represent the state when the human body has the intention to stand up, C1~C4 represent the state when the human body has the intention to lie down, and D1~D2 represent the state when the human body has the intention to sit up;

- The actual number of occurrences of each action intention during the experiment was recorded by on-site researchers, while the system automatically detected the participants’ action intentions and recorded its own count. Actions other than the three intention types above were not counted. Finally, the recognition accuracy was obtained by dividing the number of intentions detected by the system by the actual number of action intentions (the detection frequency of the system is 2 Hz).
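The accuracy calculation described in the last step can be checked directly against the counts reported in the results table. The sketch below only restates that division; the function and variable names are illustrative.

```python
def recognition_accuracy(recognized: int, actual: int) -> float:
    """Accuracy = intentions detected by the system / actual intentions."""
    if actual <= 0:
        raise ValueError("actual count must be positive")
    return recognized / actual


# (actual occurrences, recognized occurrences) as reported in the results table.
counts = {
    "Lie down": (82, 78),
    "Get up": (77, 76),
    "Stand up": (79, 79),
}

report = {
    name: f"{recognition_accuracy(recognized, actual):.2%}"
    for name, (actual, recognized) in counts.items()
}
for name, accuracy in report.items():
    print(f"{name}: {accuracy}")
# Lie down: 95.12%
# Get up: 98.70%
# Stand up: 100.00%
```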

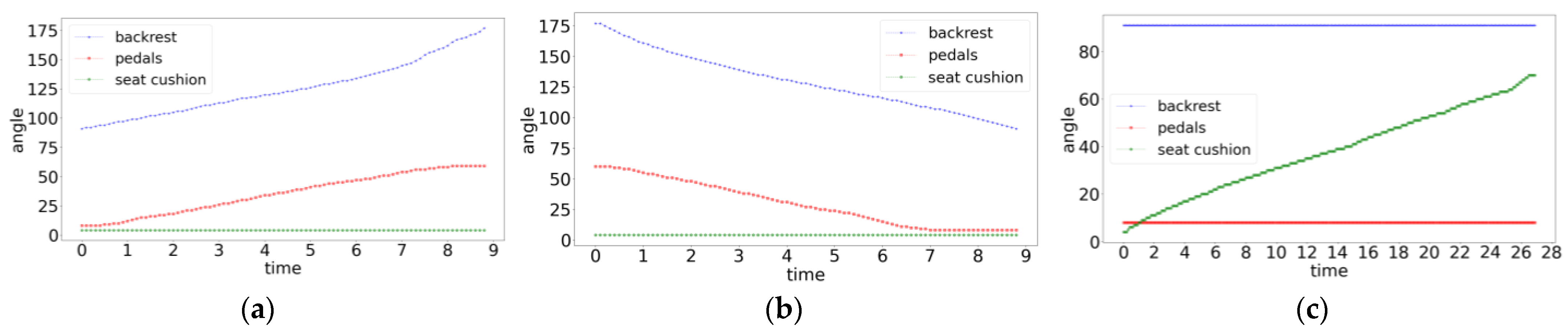

5.3. Wheelchair Posture Adjustment Function Test

- Part A in the figure shows the posture adjustment process of the wheelchair when the passenger intends to lie down while sitting upright. When the system detects that the user has a lie-down intention while sitting upright (Figure 15(A1)), the push rod at the backrest of the wheelchair contracts to rotate the backrest counterclockwise. The angle adjustment motor at the pedal synchronously rotates counterclockwise to lift the pedal, and the two electric actuators are controlled cooperatively throughout the process so that the human body always maintains a comfortable and reasonable posture (Figure 15(A2–A4));

- Part B in the figure shows the posture adjustment process of the wheelchair when the passenger intends to sit up while lying down. When the system detects that the user in a lying state has an intention to sit up (Figure 15(B1)), the electric push rod at the backrest of the wheelchair extends to rotate the backrest clockwise, and the angle adjustment motor at the pedal synchronously rotates clockwise to lower the pedal. Throughout the process, the backrest and pedal of the wheelchair are adjusted synchronously (Figure 15(B2–B4));

- Part C in the figure (Figure 15(C1–C5)) shows the posture adjustment process of the wheelchair when the passenger intends to stand up while sitting. When the system detects that the user is in a sitting position and has an intention to stand up (Figure 15(C1)), the push rod under the seat cushion extends to raise the cushion. At the same time, the angle adjustment motor at the pedal rotates clockwise to meet the standing needs; the two actions are performed simultaneously (Figure 15(C2,C3)). After the assisted standing function is complete, the person can leave the wheelchair (Figure 15(C4)). When the system detects that the person has left the wheelchair, the wheelchair automatically resets to its initial state (Figure 15(C5)).
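The three adjustment cases above amount to a lookup from detected intention to actuator commands. The sketch below encodes that mapping as a small table. The enum names, command strings, and the rule that an intention is ignored when the current posture does not match are illustrative assumptions, not the authors’ control code.

```python
from enum import Enum


class Posture(Enum):
    SITTING = "sitting"
    LYING = "lying"


class Intention(Enum):
    LIE_DOWN = "lie down"
    SIT_UP = "sit up"
    STAND_UP = "stand up"


# intention -> (posture in which it applies, actuator commands from the text)
ADJUSTMENTS = {
    Intention.LIE_DOWN: (
        Posture.SITTING,
        {"backrest_rod": "contract", "pedal_motor": "counterclockwise"},
    ),
    Intention.SIT_UP: (
        Posture.LYING,
        {"backrest_rod": "extend", "pedal_motor": "clockwise"},
    ),
    Intention.STAND_UP: (
        Posture.SITTING,
        {"seat_rod": "extend", "pedal_motor": "clockwise"},
    ),
}


def plan_adjustment(posture: Posture, intention: Intention) -> dict:
    """Return the actuator commands to issue together, or {} if the
    intention does not apply in the current posture (assumed rule)."""
    required, commands = ADJUSTMENTS[intention]
    if posture is not required:
        return {}
    return dict(commands)


def on_seat_vacated() -> str:
    """After assisted standing, the wheelchair resets once the user has left."""
    return "reset_to_initial_state"


print(plan_adjustment(Posture.SITTING, Intention.LIE_DOWN))
print(plan_adjustment(Posture.LYING, Intention.SIT_UP))
print(plan_adjustment(Posture.SITTING, Intention.STAND_UP))
```

Returning both commands in one dictionary reflects the description that the two actuators involved in each case move at the same time.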

6. Conclusions and Prospects

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Weight | Data Status | M | P |
|---|---|---|---|
| 54 kg | Unadjusted | 99 | 0.0966 |
| 54 kg | Adjusted | 158 | 0.1542 |
| 78 kg | Unadjusted | 473 | 0.4619 |
| 78 kg | Adjusted | 204 | 0.1992 |

| Intention | Actual Occurrences | Recognized Occurrences | Accuracy |
|---|---|---|---|
| Lie down | 82 | 78 | 95.12% |
| Get up | 77 | 76 | 98.70% |
| Stand up | 79 | 79 | 100% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cui, J.; Huang, Z.; Li, X.; Cui, L.; Shang, Y.; Tong, L. Research on Intelligent Wheelchair Attitude-Based Adjustment Method Based on Action Intention Recognition. Micromachines 2023, 14, 1265. https://doi.org/10.3390/mi14061265
