Machine-Learned Recognition of Network Traffic for Optimization through Protocol Selection

Abstract

1. Introduction

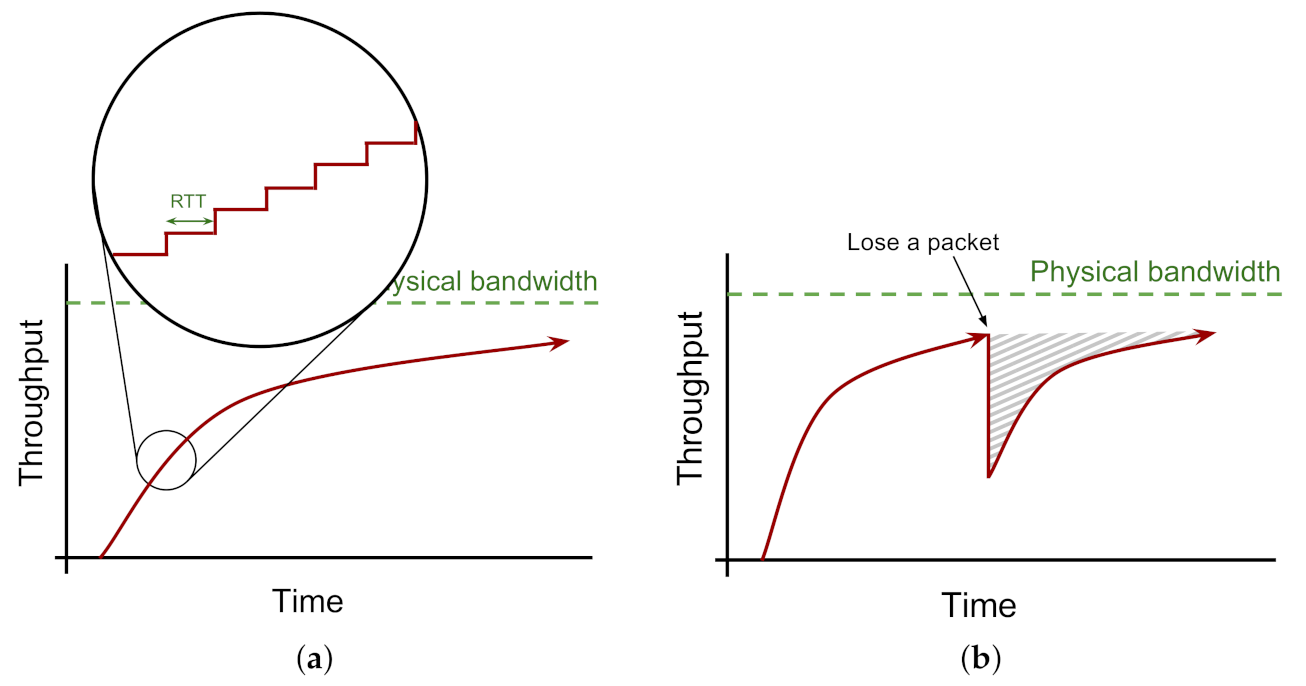

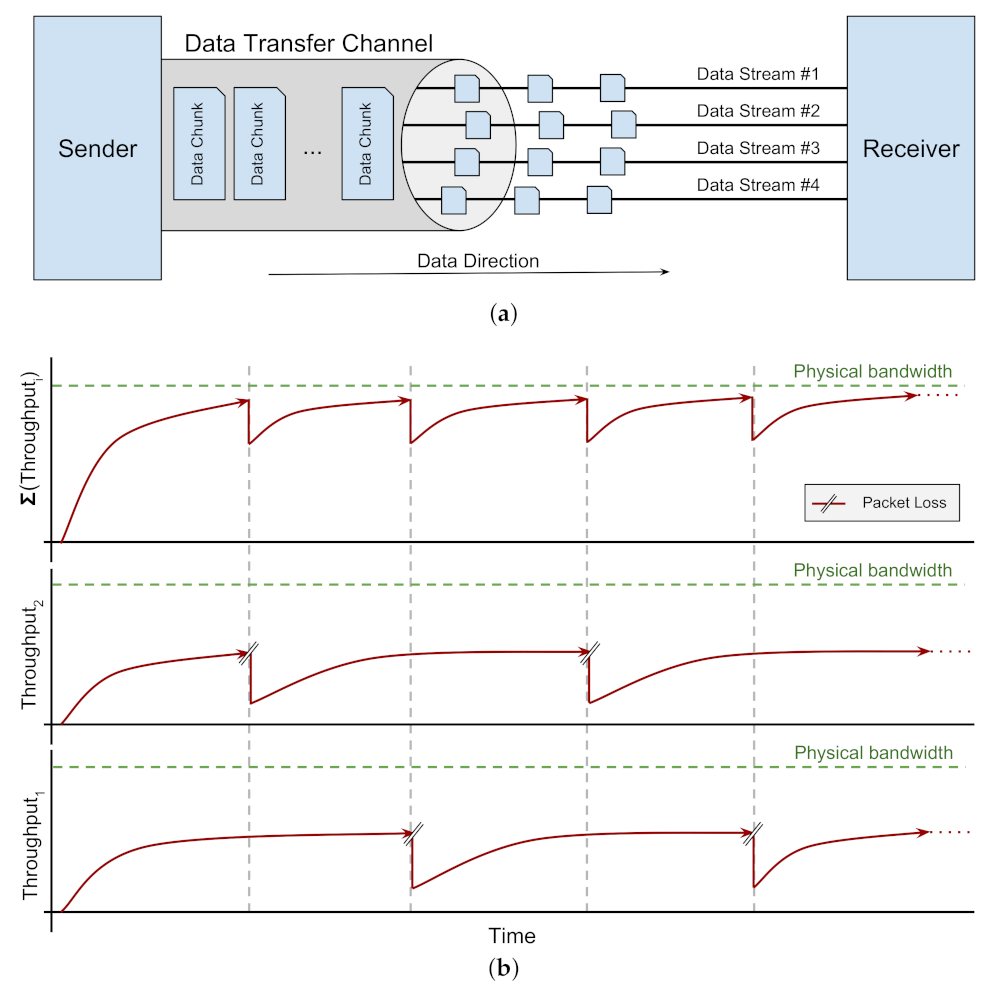

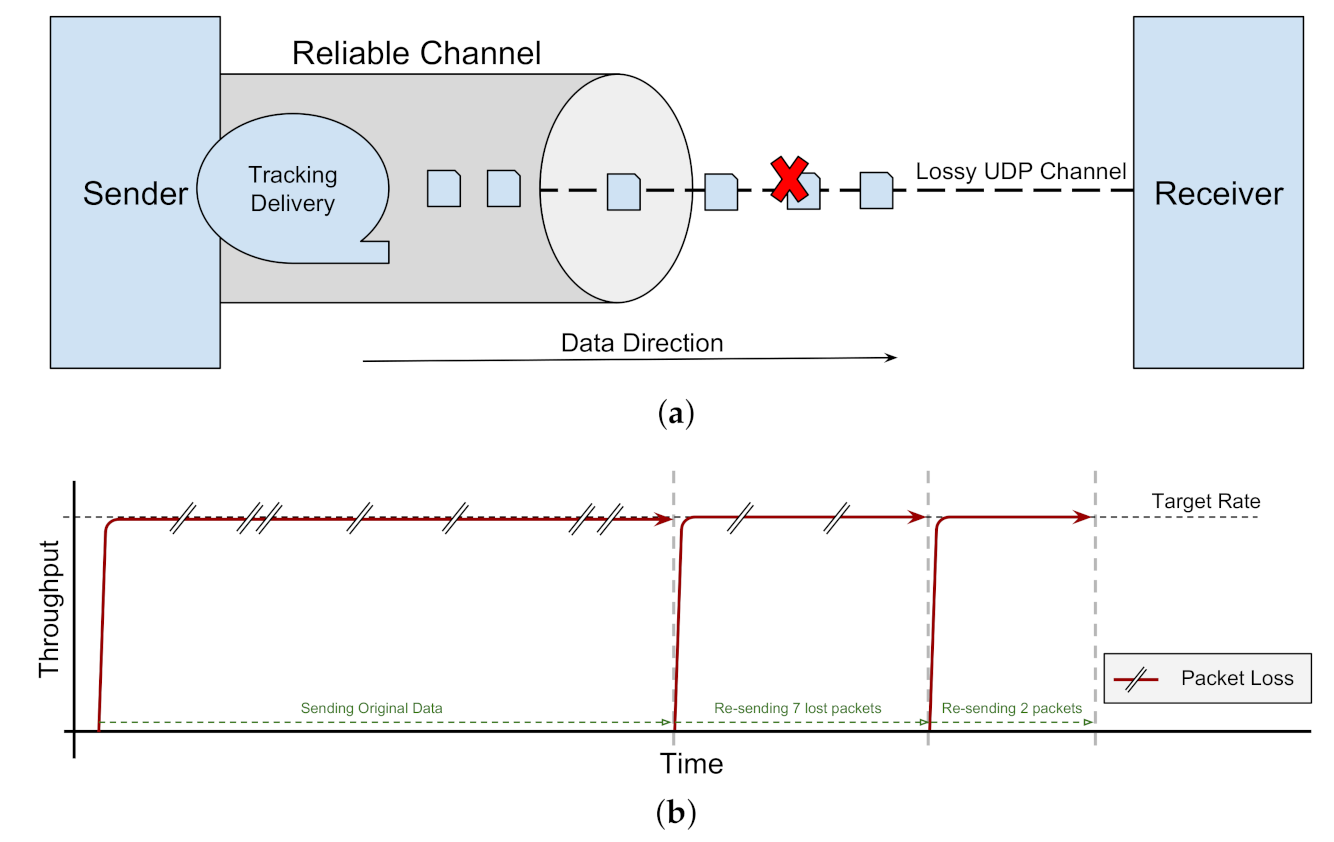

- Empirical quantification of the poor performance of some combinations of foreground and background network protocols: Many data networks are likely to share bandwidth, carrying data using a mix of protocols (both TCP-based and UDP-based). Yet most previous work on bulk-data transfer has (1) focused on TCP-only traffic, and (2) assumed dedicated bandwidth, or a negligible effect of background traffic. We detail an empirical study to quantify the throughput and fairness of high-performance protocols (TCP CUBIC, TCP BBR, UDP) and tools (GridFTP, UDT) in the context of a shared network (Section 6). We also quantify the variation in network round-trip time (RTT) latency caused by these tools (Section 6.4). Our mixed-protocol workload includes TCP-based foreground traffic with both TCP-based and UDP-based background traffic. This mixed workload is what exposes the protocol interactions reported in Section 6.

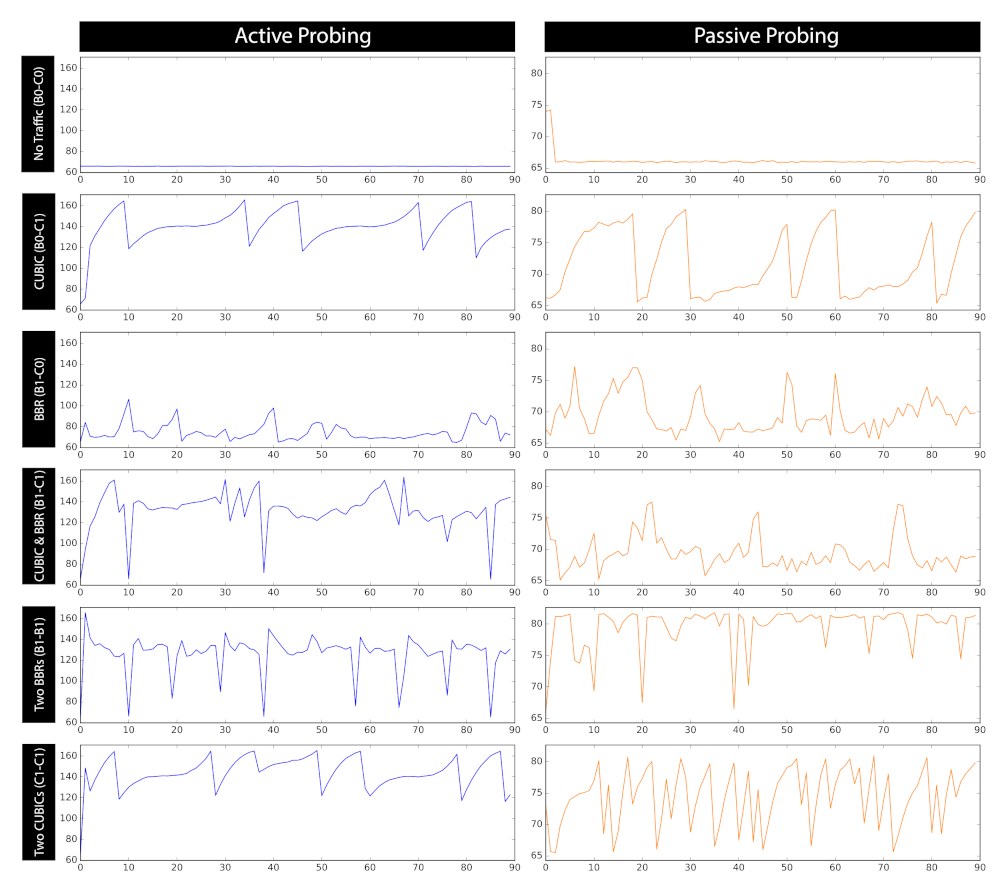

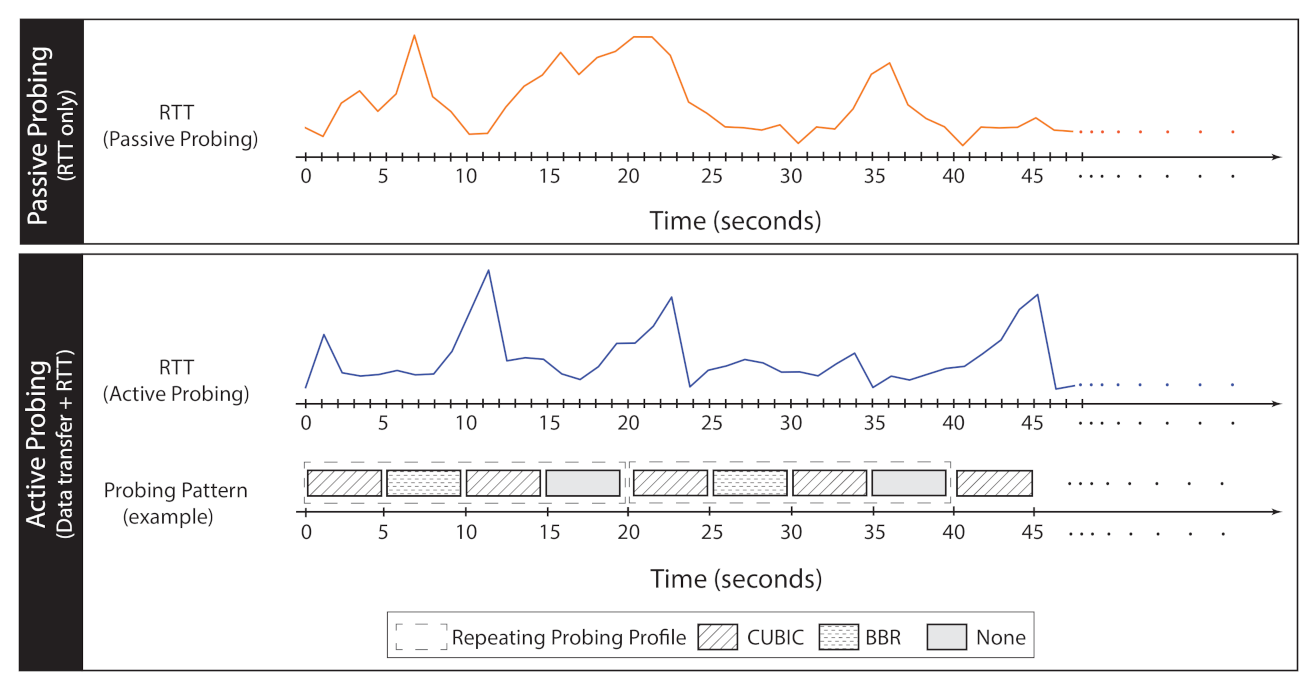

- Introducing multi-profile passive and active probing for accurate recognition of background protocol mixture: We introduce and provide a proof-of-concept of two network probing profiles, passive probing and active probing. These probing schemes enable us to gather representative end-to-end insights about the network without requiring any global knowledge. Such insight could potentially be utilised for different real-world use-cases, including workload discovery, protocol recognition, performance profiling, and more. With passive probing, we measure local, end-to-end RTT at regular time intervals, forming time-series data. We show that such a probing strategy results in distinct time-series signatures for different background protocol mixtures. Active probing is an extension of passive probing, adding a systematic and deliberate perturbation of traffic on a network for the purpose of gathering more distilled information. The time-series data generated by active probing improves the distinguishability of the time-series for different workloads, as evidenced by our machine-learning evaluation (Section 6.6).

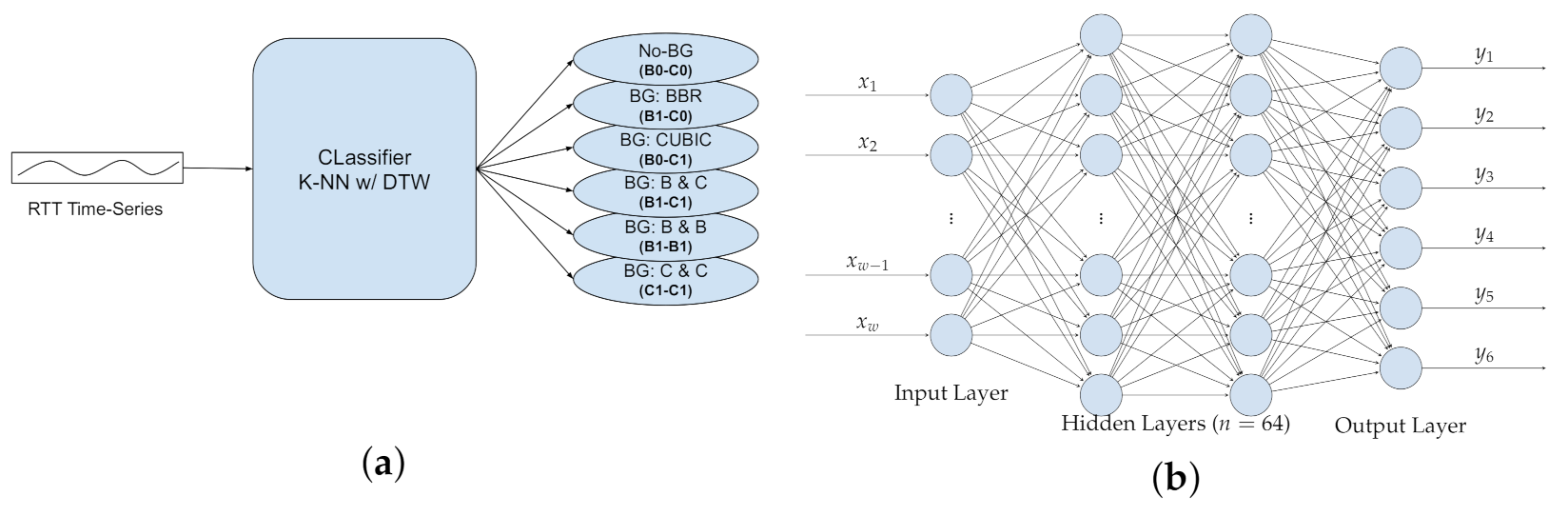

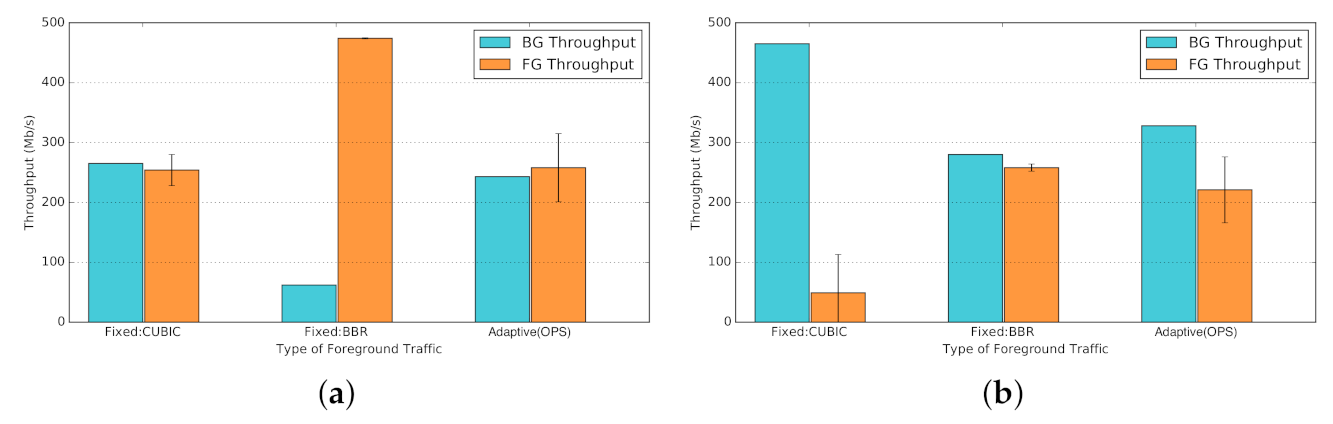

- A novel machine-learning approach for background workload recognition and foreground protocol selection: Knowledge about the protocols in use by the background traffic might influence which protocol to choose for a new foreground data transfer. Unfortunately, global knowledge can be difficult to obtain in a dynamic distributed system like a WAN. We introduce and evaluate a novel machine learning (ML) approach to network performance, called optimization through protocol selection (OPS). Using either passive or active probing, a classifier predicts the mix of TCP-based protocols in current use by the background workload. Then, we use that insight to build a decision process for selecting the best protocol to use for the new foreground transfer, so as to maximize throughput while maintaining fairness. The OPS approach's throughput is four times higher than that achieved with a sub-optimal protocol choice (Figure 29). Furthermore, the OPS approach has a Jain fairness index of 0.96 to 0.99, compared to a Jain fairness index of 0.60 to 0.62 if a sub-optimal protocol is selected (Section 6.7).

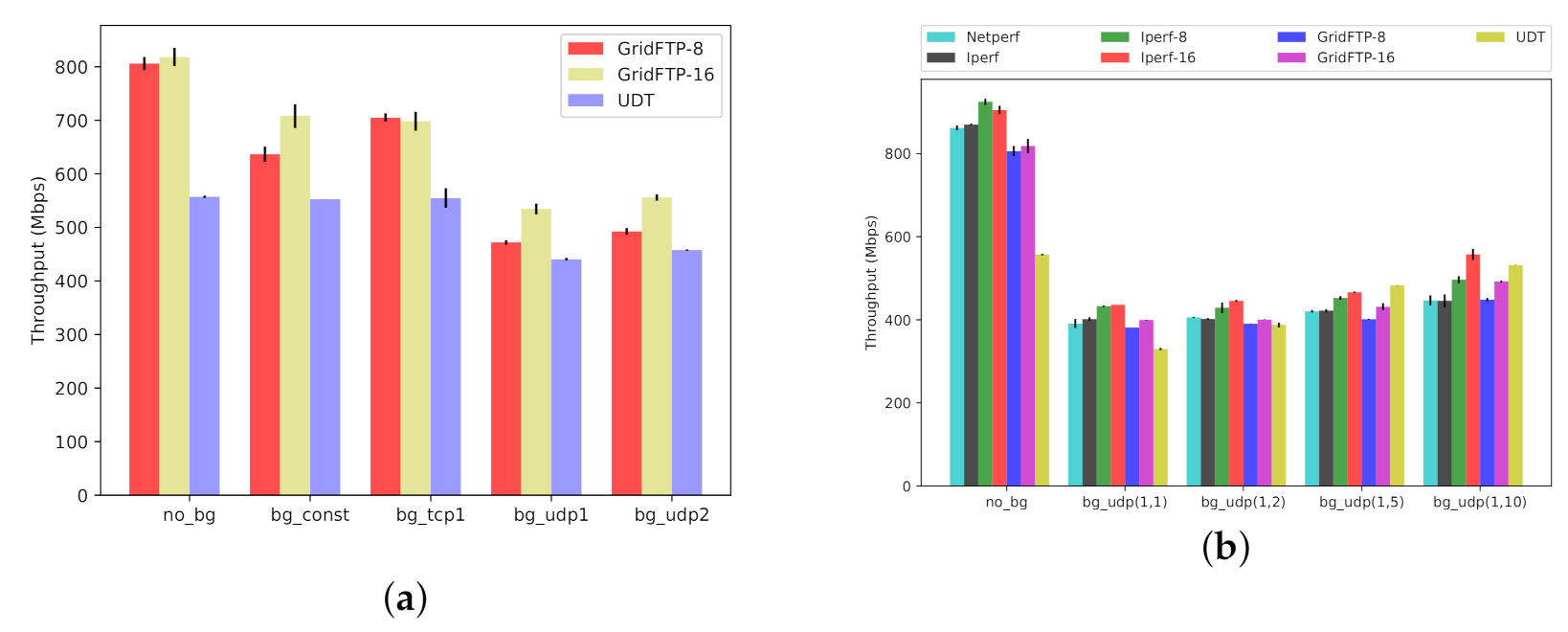

- Throughput: Despite the popularity of GridFTP, we conclude that GridFTP is not always the highest performing tool for bulk-data transfers on shared networks. We show that if there is a significant amount of UDP background traffic, especially bursty UDP traffic, GridFTP is negatively affected. The mix of TCP and UDP traffic on shared networks does change over time, and there are emerging application protocols that use UDP (e.g., [14,15]). For example, our empirical results show that if the competing background traffic is UDP-based, then UDT (which is UDP-based itself) can actually have significantly higher throughput (Figure 13a, BG-SQ-UDP1 and BG-SQ-UDP2; Figure 13b, all combinations except for No-BG).

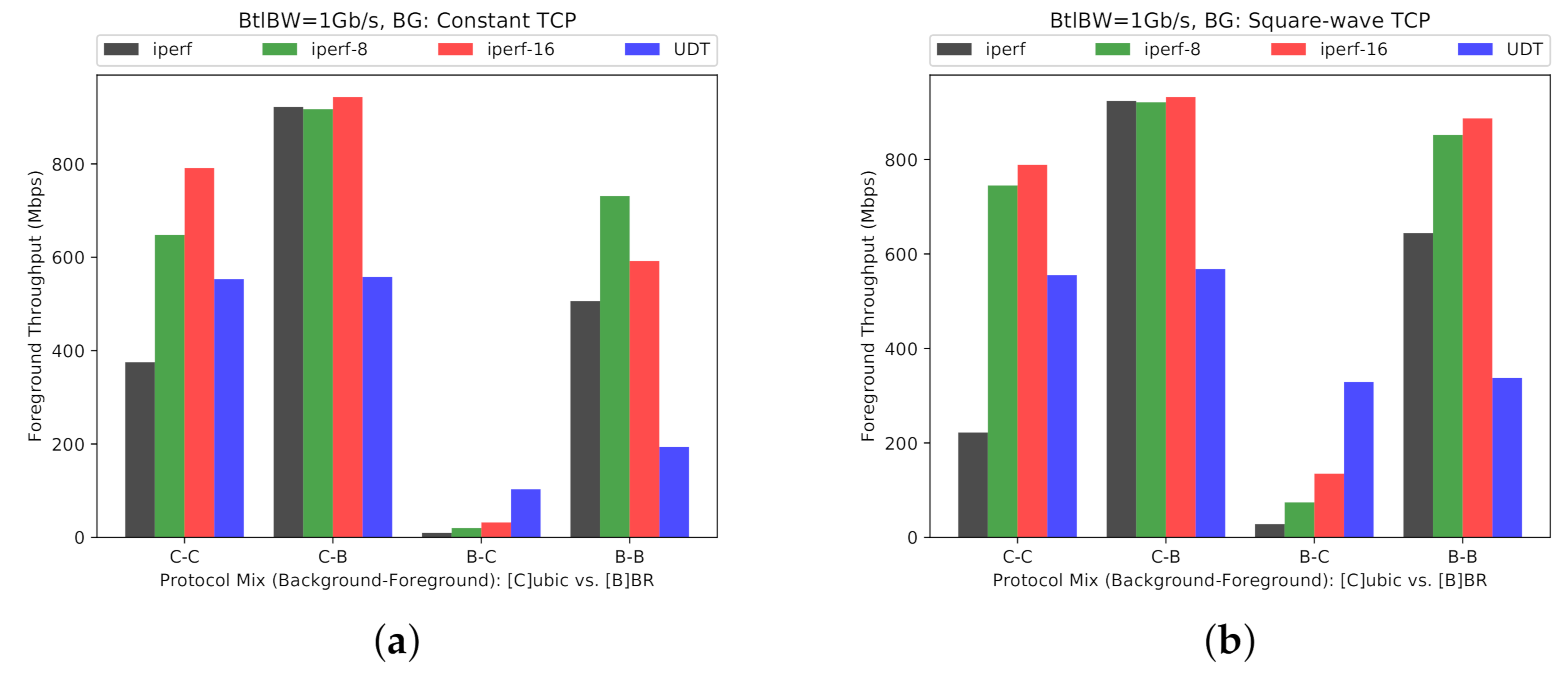

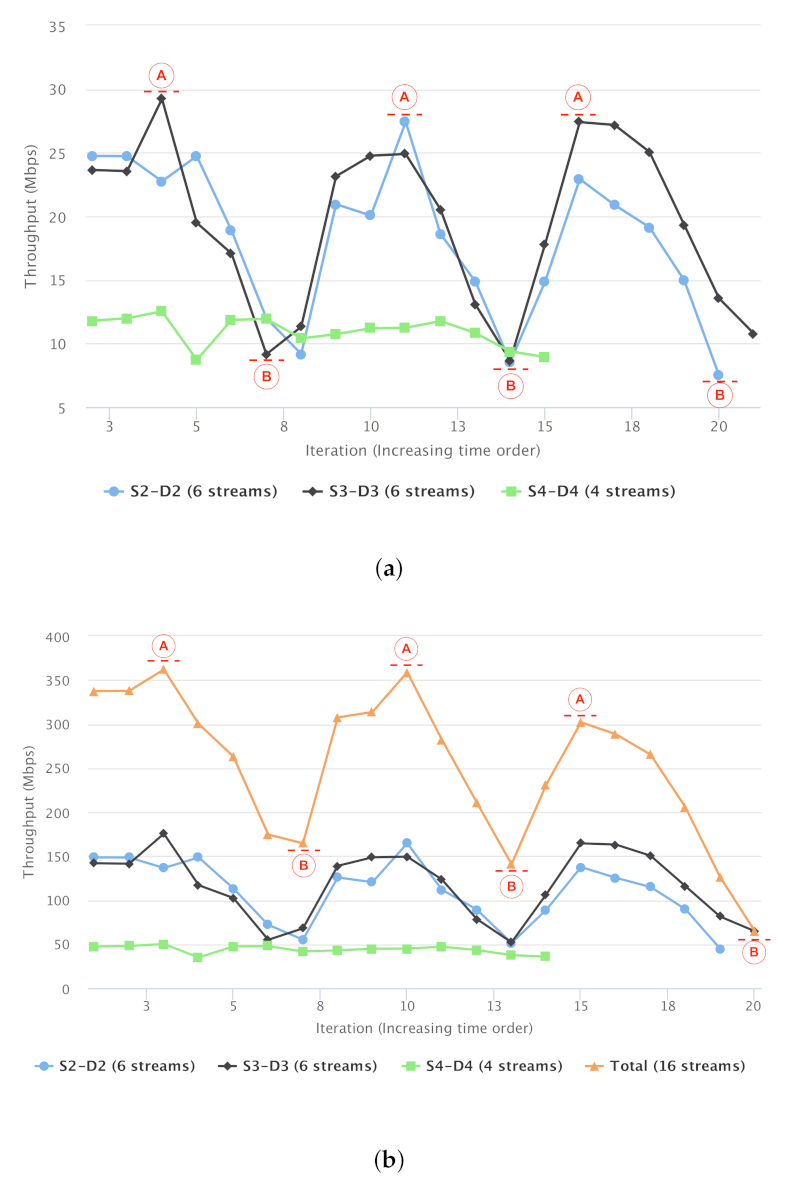

- Fairness: GridFTP can have a quantifiably large impact on other network traffic and users. Anecdotally, people know that GridFTP can use so much network bandwidth that other workloads are affected. However, it is important to quantify that effect and the large dynamic range of that effect (e.g., a range of 22 Mb/s, from a peak of 30 Mb/s to a valley of 8 Mb/s of NFS throughput, due to GridFTP; discussed above and in Section 6.3). Similarly, in a purely TCP-based mixture of traffic, TCP BBR has a large impact on other TCP schemes, TCP CUBIC in particular (Figure 17 and Figure 18).

- Fixed vs. Adaptive Protocol Selection: When transferring data over a shared network, it is important to investigate the workload, and the type of background traffic, on the network. Despite the conventional wisdom of always selecting a specific protocol or tool (e.g., TCP BBR, or GridFTP), one size does not fit all. Different background workloads can affect different protocols to different degrees. Hence, selecting a fixed protocol or tool might yield sub-optimal performance on different metrics of interest (throughput, fairness, etc.). In contrast, by investigating the background workload (e.g., using passive or active probing techniques) and adaptively selecting an appropriate protocol (e.g., using OPS), we could gain an overall performance improvement (Figure 29).

2. High-Performance Data Transfer: Background

2.1. High-Performance Data Transfer Techniques

2.2. Bandwidth Utilisation Models in WANs

2.3. Fairness in Bandwidth-Sharing Networks

2.4. Modelling the Network Topology

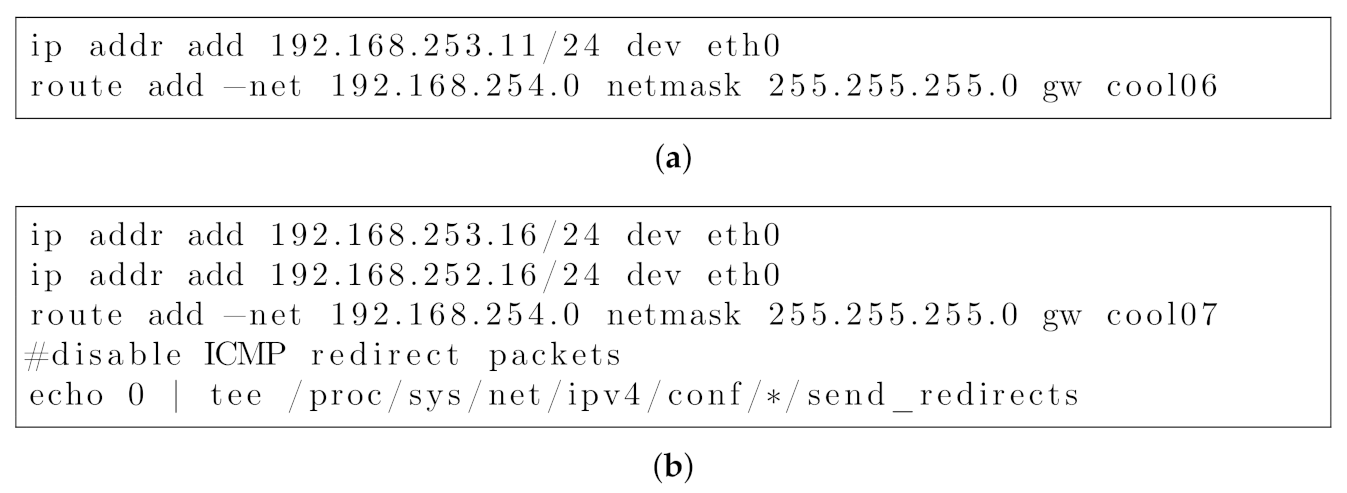

3. Testbed Network Design and Methodology

3.1. Testbed Configuration

3.2. Foreground Traffic

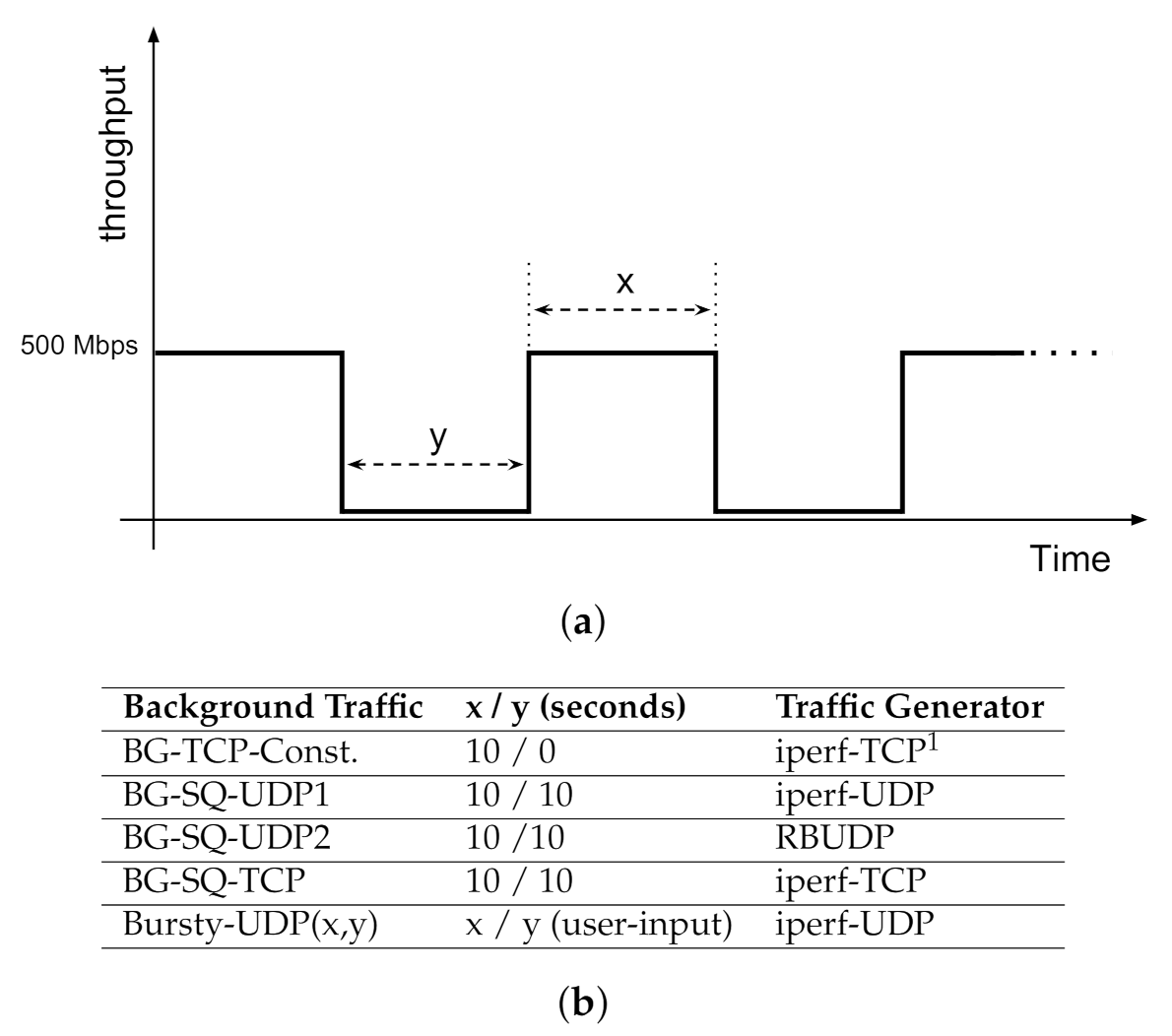

3.3. Background Traffic

- No background: equivalent to a data transfer on a dedicated network.

- Uniform TCP (BG-TCP-Const): a constant, single-stream TCP connection is set up between two LANs.

- Square-waveform UDP 1 (BG-SQ-UDP1): a square waveform of a 10 s data burst followed by 10 s of no data, with iperf generating the UDP traffic.

- Square-waveform UDP 2 (BG-SQ-UDP2): same as the previous scenario, except that the data bursts are generated using RBUDP to transfer a 1 GB file between two LANs.

- Square-waveform TCP (BG-SQ-TCP): same as the previous scenario, except that the data bursts are generated using iperf with TCP traffic.

- Variable length bursty UDP (Bursty-UDP(x,y)): the UDP traffic follows a square-waveform pattern, with the data-burst and gap lengths given as user-supplied parameters.
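
The square-waveform scenarios above can be illustrated with a short sketch. It is not the traffic generator used in the study (which relies on iperf and RBUDP); it only shows, under stated assumptions, how a burst/gap schedule might be computed and driven from a plain UDP socket. The function names `burst_windows` and `send_bursty_udp`, the 1400-byte payload, and the absence of any rate limiting are all assumptions made for illustration.

```python
import socket
import time


def burst_windows(burst_s, gap_s, total_s):
    """Return (start, end) offsets in seconds of each data burst in a square wave."""
    if burst_s <= 0 or gap_s < 0:
        raise ValueError("burst_s must be positive and gap_s non-negative")
    windows = []
    start = 0.0
    while start < total_s:
        # Clip the last burst so that it never runs past total_s.
        windows.append((start, min(start + burst_s, total_s)))
        start += burst_s + gap_s
    return windows


def send_bursty_udp(host, port, burst_s, gap_s, total_s, payload_size=1400):
    """Send UDP datagrams to host:port only during the burst windows.

    Hypothetical helper: there is no rate limiting, so the sender transmits
    as fast as the local socket allows. Returns the number of bytes sent.
    """
    payload = bytes(payload_size)
    sent = 0
    t0 = time.monotonic()
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        for start, end in burst_windows(burst_s, gap_s, total_s):
            # Stay idle through the gap until the next burst begins.
            delay = start - (time.monotonic() - t0)
            if delay > 0:
                time.sleep(delay)
            while time.monotonic() - t0 < end:
                sent += sock.sendto(payload, (host, port))
    return sent
```

With a 10 s burst and a 10 s gap, `burst_windows(10, 10, 40)` yields two bursts, one starting at 0 s and one at 20 s, which corresponds to the 10 s on, 10 s off pattern of the BG-SQ-UDP1 scenario.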

4. Machine-Learned Recognition of Network Traffic

4.1. Recognizing Background Workload: Passive vs. Active Probing
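
Passive probing, as summarized in the Introduction, amounts to measuring end-to-end RTT at regular intervals and keeping the samples as a time series. The following is a minimal sketch of that idea, not the probing tool used in the study. It assumes that the duration of a TCP handshake is an acceptable stand-in for one RTT sample; the names `tcp_connect_rtt` and `collect_rtt_series` are hypothetical.

```python
import socket
import time


def tcp_connect_rtt(host, port, timeout_s=1.0):
    """Approximate one RTT sample (seconds) as the time taken by a TCP handshake."""
    t0 = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout_s):
        pass
    return time.perf_counter() - t0


def collect_rtt_series(probe, interval_s, n_samples, sleep=time.sleep):
    """Call probe() n_samples times, pausing interval_s between calls.

    A failed probe is recorded as None so that the position of every sample
    in the series is preserved. The spacing is only approximately regular,
    because the time spent inside probe() is not subtracted from the pause.
    """
    series = []
    for i in range(n_samples):
        try:
            series.append(probe())
        except OSError:
            series.append(None)
        if i < n_samples - 1:
            sleep(interval_s)
    return series
```

A call such as `collect_rtt_series(lambda: tcp_connect_rtt(host, port), 0.1, 100)` would then produce roughly 10 s of samples against a reachable `host` and `port` chosen by the user. Passing the probe as a callable keeps the collection loop independent of how RTT is actually measured.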

4.2. Time-Series Classification
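
The abbreviation list of this article names both K Nearest Neighbours (KNN) and Dynamic Time Warping (DTW). The sketch below shows one common way to combine the two for time-series classification: a nearest-neighbour vote in which distance between RTT series is measured with DTW. It is an illustration under assumptions, not necessarily the classifier configuration evaluated in the paper. The absolute-difference cost, the unconstrained warping window, and the class labels in the usage note are all assumed.

```python
from collections import Counter


def dtw_distance(a, b):
    """Dynamic time warping distance between two numeric sequences."""
    inf = float("inf")
    n, m = len(a), len(b)
    # cost[i][j] is the minimal cumulative cost of aligning a[:i] with b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three admissible predecessor alignments.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def knn_classify(query, training_set, k=1):
    """Label query by majority vote among its k nearest training series under DTW.

    training_set is a list of (series, label) pairs.
    """
    ranked = sorted(training_set, key=lambda item: dtw_distance(query, item[0]))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]
```

As a usage example with invented numbers, given the training pairs `([10, 10, 10, 10], "bg-cubic")` and `([10, 30, 10, 30], "bg-bbr")`, the query `[10, 29, 10, 31]` is assigned the label `"bg-bbr"`. DTW is a natural fit for RTT signatures because two series with the same shape but slightly shifted timing still end up close to each other.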

5. OPS: Optimization through Protocol Selection

5.1. Scope: TCP Schemes, CUBIC and BBR

5.2. Generating RTT Data

- CUBIC (5 s)

- BBR (5 s)

- CUBIC (5 s)

- None (5 s)

5.3. Data Preparation and Preprocessing

5.4. Training, Cross-Validation, and Parameter Tuning

5.5. Decision Making: Protocol Selection

6. Evaluation and Discussion

6.1. Impact of Cross Traffic on High-Performance Tools

6.2. Impact of TCP CCAs: CUBIC versus BBR

6.3. NFS Performance Over a Shared Network

6.4. Network Congestion Impact on Latency

6.5. Fairness

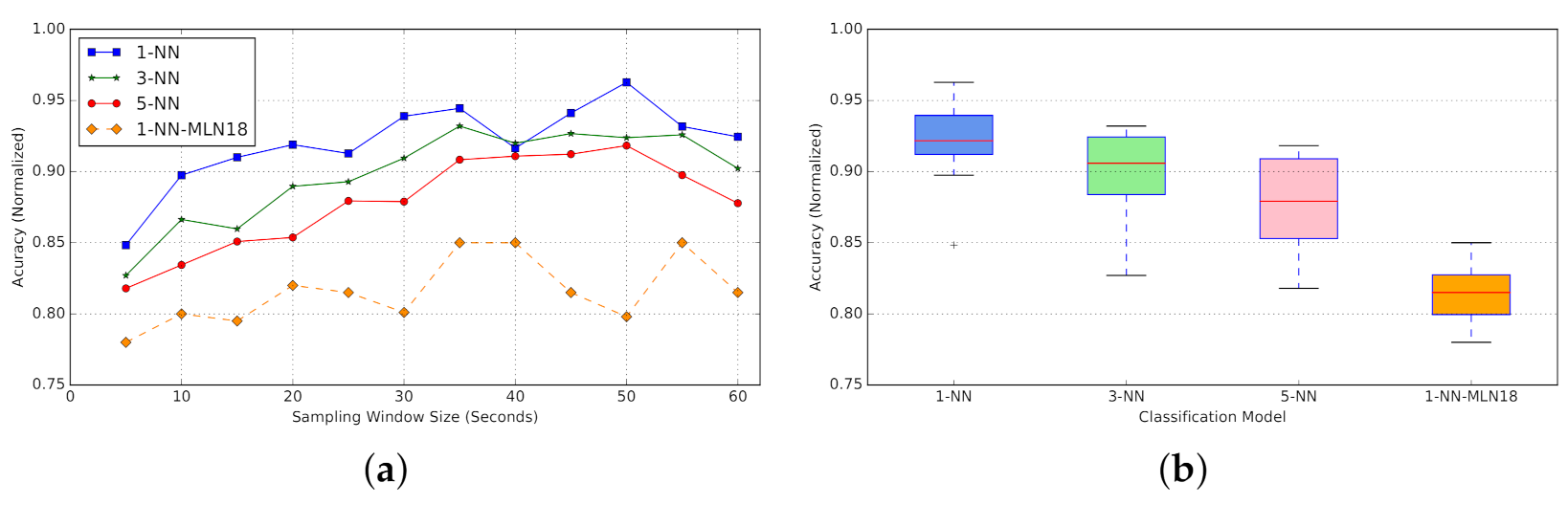

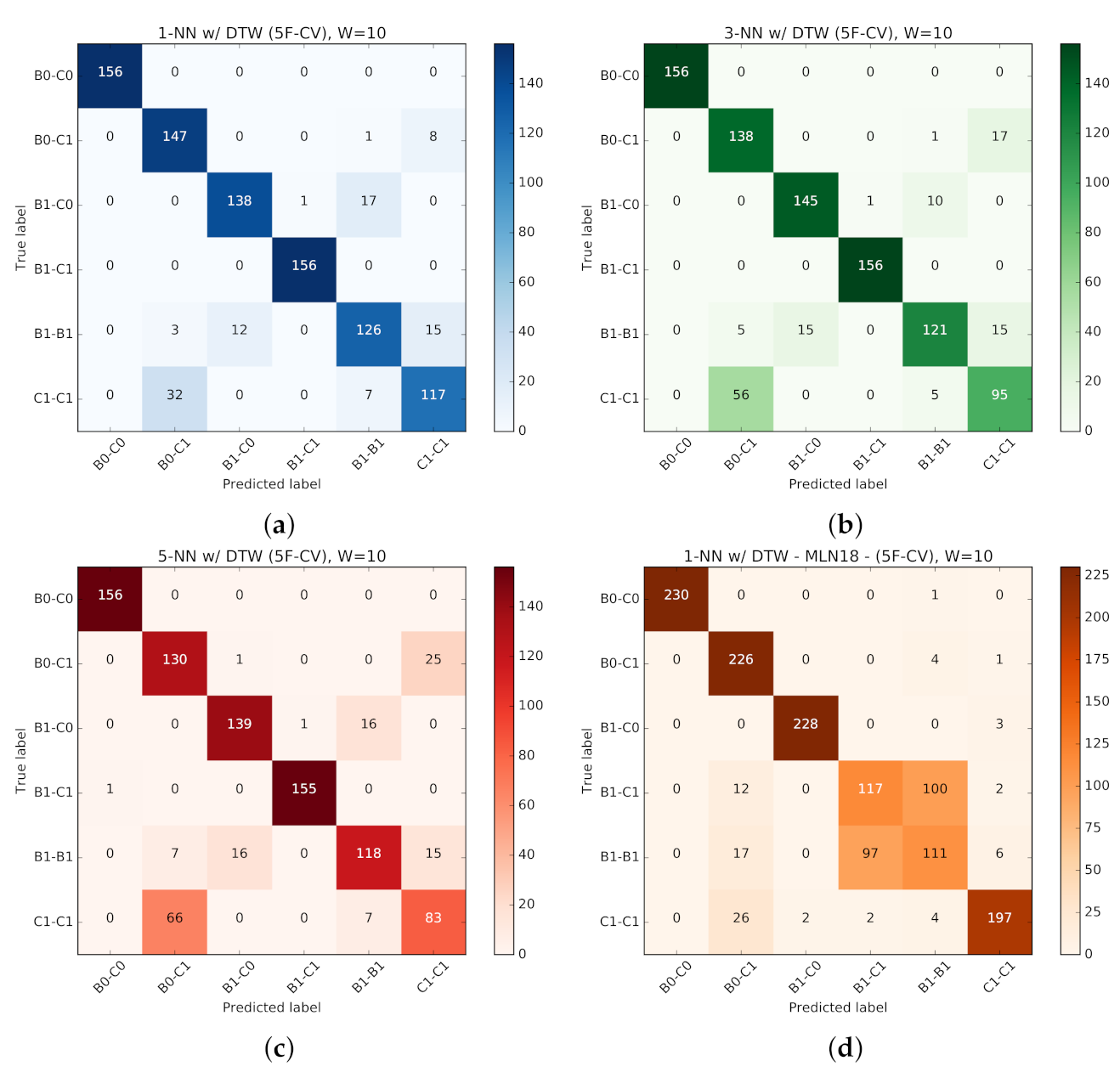

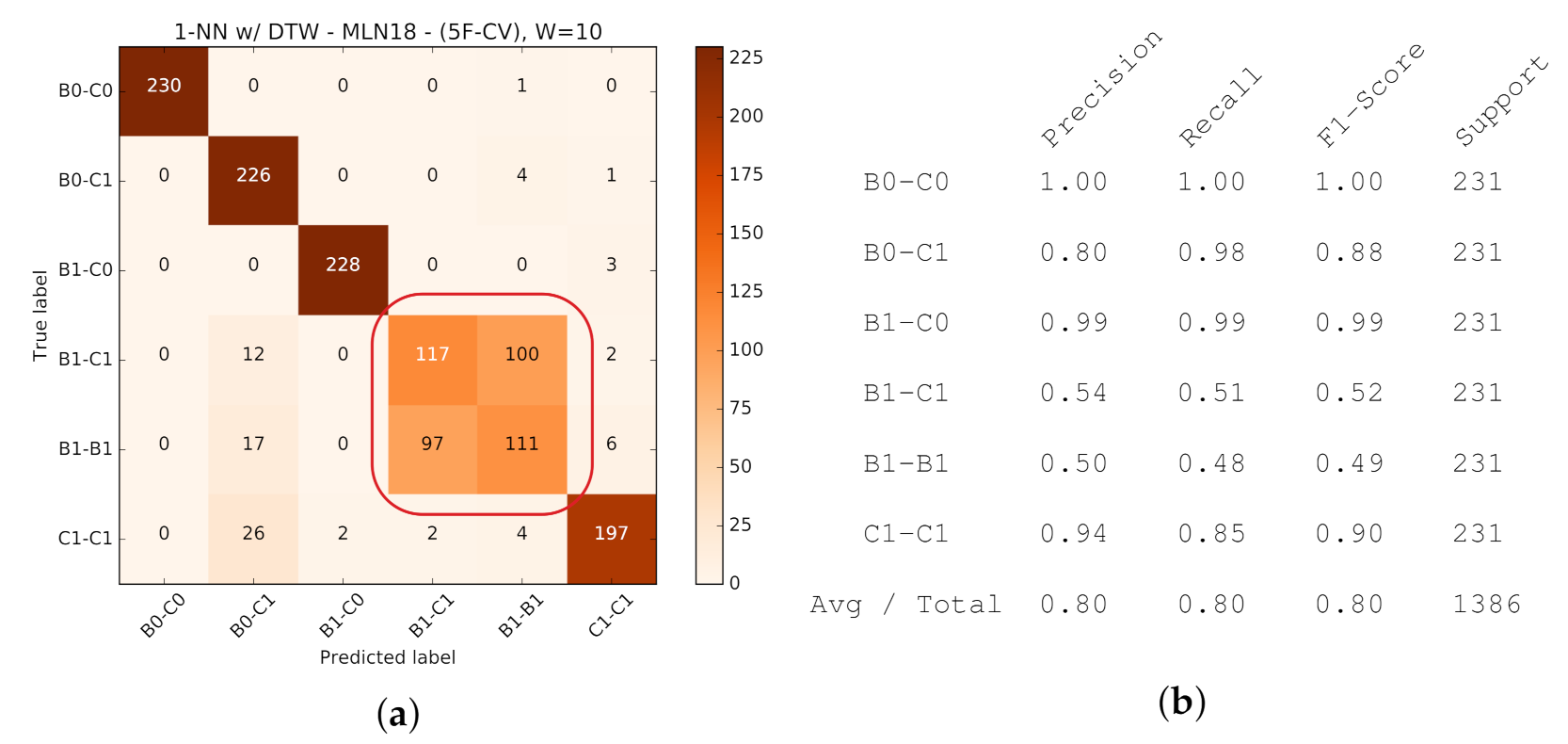

6.6. Classification of Background Traffic

6.7. Using OPS for Protocol Selection

- Probe the network and generate RTT time-series (10 s).

- Classify the RTT time-series to predict the background workload.

- Decide on which protocol (CCA) to use for data transfer (Section 5.5).

- Transfer data using the decided protocol (CCA).

- Fixed: CUBIC. CUBIC is always used for the data-transfer phase.

- Fixed: BBR. BBR is always used for the data-transfer phase.

- Adaptive (OPS): Dynamically determines which protocol is to be used for each data-transfer cycle.
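
The four steps of an OPS cycle listed above (probe, classify, decide, transfer) can be sketched as a small driver function. This is an illustrative outline only. The decision rule of Section 5.5 is not reproduced here, so `choose_protocol` uses a placeholder rule that is purely an assumption: pick BBR when BBR is predicted in the background, otherwise pick CUBIC. The callables `probe`, `classify`, and `transfer` are hypothetical hooks supplied by the caller.

```python
def choose_protocol(predicted_background):
    """Placeholder decision rule (an assumption, not the rule of Section 5.5)."""
    return "bbr" if "bbr" in predicted_background.lower() else "cubic"


def ops_cycle(probe, classify, transfer, decide=choose_protocol):
    """Run one probe -> classify -> decide -> transfer cycle.

    probe():            returns an RTT time series (about 10 s of probing)
    classify(series):   returns a label for the predicted background workload
    decide(label):      returns the protocol (CCA) name to use
    transfer(protocol): performs the data transfer and returns its result
    """
    rtt_series = probe()
    background = classify(rtt_series)
    protocol = decide(background)
    result = transfer(protocol)
    return {"background": background, "protocol": protocol, "result": result}
```

The two fixed strategies correspond to passing `decide=lambda _: "cubic"` or `decide=lambda _: "bbr"`, which makes the fixed and adaptive variants directly comparable within the same loop. On Linux, a `transfer` hook could request the chosen CCA for a single socket through the `TCP_CONGESTION` socket option, provided the corresponding kernel module is available.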

6.8. Discussion

- Throughput: A poor choice of foreground protocol can reduce throughput by up to 80% (Figure 13a, GridFTP reduced throughput versus UDT). Different combinations of protocols on shared networks have different dynamic behaviors. One might naturally assume that all network protocols designed for shared networks interact both reasonably and fairly. However, that is not necessarily true. The mixture of CUBIC and BBR, both based on TCP, can lead to poor throughput for CUBIC streams competing against BBR (e.g., Figure 17 and Figure 18). Using TCP for the foreground, with UDP as the background, results in poor foreground throughput (e.g., Figure 13 and Figure 14). For example, our empirical results show that if the competing background traffic is UDP-based, then UDT (which is UDP-based itself) can actually have significantly higher throughput (Figure 13a, BG-SQ-UDP1 and BG-SQ-UDP2; Figure 13b, all except for No-BG).

- Fairness: Jain fairness (Section 6.5) varies by up to 0.35 (Table 3, from 0.64 to 0.99), representing poor fairness for some combinations of tools and protocols. On the one hand, using an aggressive tool can severely degrade the performance of the cross traffic (e.g., Jain index of 0.68 in Table 2, and 0.64 in Table 3 for GridFTP). On the other hand, depending on the type of background traffic, the performance of a traffic stream can itself be impacted (e.g., iperf foreground in Table 2 and Table 3). Of course, using GridFTP is a voluntary policy choice, and we quantified the impact of such tools on fairness. GridFTP can have a quantifiably large impact on other network traffic and users. Anecdotally, people know that GridFTP can use so much network bandwidth that other workloads are affected. However, it is important to quantify that effect and the large dynamic range of that effect (e.g., a range of 22 Mb/s, from a peak of 30 Mb/s to a valley of 8 Mb/s of NFS throughput, due to GridFTP; discussed above and in Section 6.3). Similarly, in a purely TCP-based mixture of traffic, TCP BBR has a large impact on other TCP schemes, TCP CUBIC in particular (Figure 17 and Figure 18).

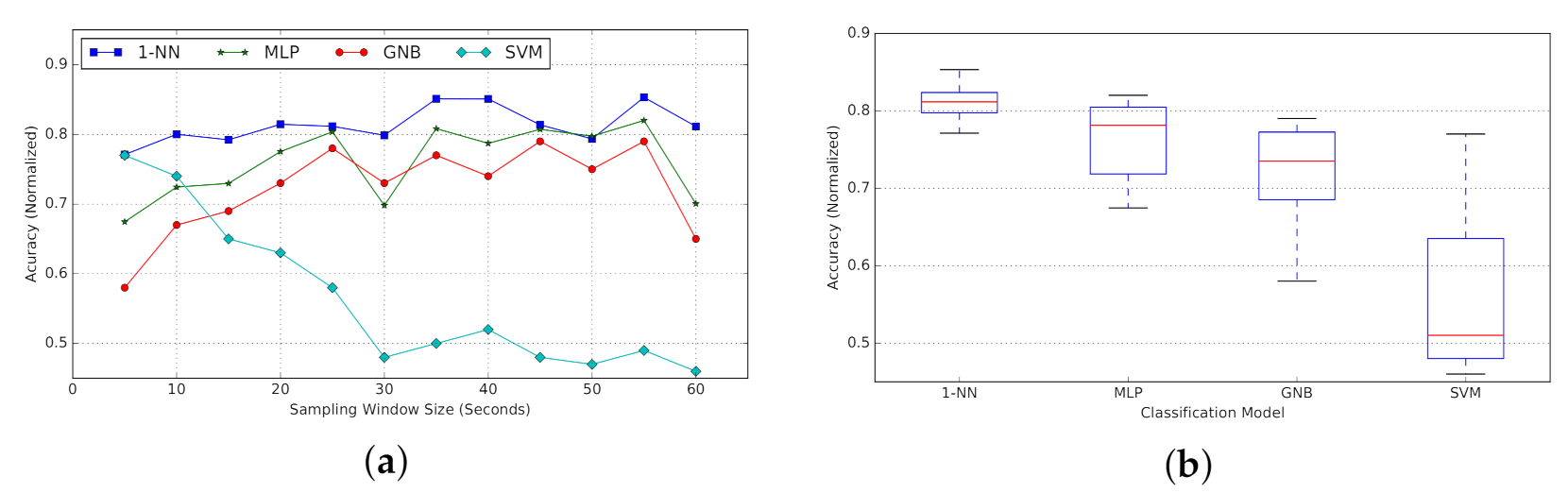

- Fixed vs. Adaptive Protocol Selection: While global knowledge about the mixture of traffic on a shared network is hard to obtain, our ML-based classifier is able, with up to 85% accuracy, to identify different mixtures of TCP-BBR and TCP-CUBIC background traffic (Figure 23). Furthermore, active probing (as compared to passive probing) helps ML-based classifiers to better distinguish between different mixtures of TCP-based background traffic (Figure 24). Without OPS, selecting a fixed protocol or tool might yield sub-optimal performance on different metrics of interest (throughput, fairness, etc.). In contrast, by investigating the background workload (e.g., using passive or active probing techniques) and adaptively selecting an appropriate protocol (e.g., using OPS), we could gain an overall performance improvement (Figure 29).

7. Related Work

7.1. High-Performance Data-Transfer Tools

7.1.1. Application Layer: Large Data-Transfer Tools

7.1.2. Network Design Patterns

7.2. Effects of Sharing Bandwidth

7.3. Traffic Generators

7.4. Big Data Transfer: High-Profile Use-Cases

7.5. Machine-Learning for Networking

8. Concluding Remarks

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| RTT | Round Trip Time |
| BDP | Bandwidth Delay Product |
| TCP | Transmission Control Protocol |
| CCA | Congestion Control Algorithm |
| BBR | Bottleneck-Bandwidth and RTT |
| UDP | User Datagram Protocol |
| ML | Machine Learning |
| KNN | K Nearest Neighbours |
| DTW | Dynamic Time Warping |
| OPS | Optimization through Protocol Selection |

References

1. Worldwide LHC Computing Grid. Available online: http://home.cern/about/computing/worldwide-lhc-computing-grid (accessed on 8 June 2021).

2. Allcock, W.; Bresnahan, J.; Kettimuthu, R.; Link, M.; Dumitrescu, C.; Raicu, I.; Foster, I. The Globus Striped GridFTP Framework and Server. In SC ’05, Proceedings of the 2005 ACM/IEEE Conference on Supercomputing; IEEE Computer Society: Washington, DC, USA, 2005; p. 54.

3. Tierney, B.; Kissel, E.; Swany, M.; Pouyoul, E. Efficient data transfer protocols for big data. In Proceedings of the 2012 IEEE 8th International Conference on E-Science, Chicago, IL, USA, 8–12 October 2012; pp. 1–9.

4. Labate, M.G.; Waterson, M.; Swart, G.; Bowen, M.; Dewdney, P. The Square Kilometre Array Observatory. In Proceedings of the 2019 IEEE International Symposium on Antennas and Propagation and USNC-URSI Radio Science Meeting, Atlanta, GA, USA, 7–12 July 2019; pp. 391–392.

5. Barakat, C.; Altman, E.; Dabbous, W. On TCP Performance in a Heterogeneous Network: A Survey. Comm. Mag. 2000, 38, 40–46.

6. Obsidian Strategics. Available online: http://www.obsidianresearch.com/ (accessed on 8 June 2021).

7. Gu, Y.; Grossman, R.L. UDT: UDP-based data transfer for high-speed wide area networks. Comput. Netw. 2007, 51, 1777–1799.

8. Ha, S.; Rhee, I.; Xu, L. CUBIC: A New TCP-friendly High-speed TCP Variant. SIGOPS Oper. Syst. Rev. 2008, 42, 64–74.

9. Cardwell, N.; Cheng, Y.; Gunn, C.S.; Yeganeh, S.H.; Jacobson, V. BBR: Congestion-Based Congestion Control. ACM Queue 2016, 14, 20–53.

10. Anvari, H.; Lu, P. The Impact of Large-Data Transfers in Shared Wide-Area Networks: An Empirical Study. Procedia Comput. Sci. 2017, 108, 1702–1711.

11. Anvari, H.; Huard, J.; Lu, P. Machine-Learned Classifiers for Protocol Selection on a Shared Network. In Machine Learning for Networking; Renault, É., Mühlethaler, P., Boumerdassi, S., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 98–116.

12. Anvari, H.; Lu, P. Learning Mixed Traffic Signatures in Shared Networks. In Computational Science–ICCS 2020; Krzhizhanovskaya, V.V., Závodszky, G., Lees, M.H., Dongarra, J.J., Sloot, P.M.A., Brissos, S., Teixeira, J., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 524–537.

13. Anvari, H.; Lu, P. Active Probing for Improved Machine-Learned Recognition of Network Traffic. In Machine Learning for Networking; Renault, É., Boumerdassi, S., Mühlethaler, P., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 122–140.

14. McQuistin, S.; Perkins, C.S. Is Explicit Congestion Notification Usable with UDP? In IMC ’15, Proceedings of the 2015 Internet Measurement Conference; ACM: New York, NY, USA, 2015; pp. 63–69.

15. Iyengar, J.; Thomson, M. QUIC: A UDP-Based Multiplexed and Secure Transport; Internet-Draft Draft-Ietf-Quic-Transport-27; Internet Engineering Task Force: Fremont, CA, USA, 2020.

16. Kurose, J.; Ross, K. Computer Networking: A Top-down Approach; Pearson: London, UK, 2013.

17. Afanasyev, A.; Tilley, N.; Reiher, P.; Kleinrock, L. Host-to-Host Congestion Control for TCP. Commun. Surv. Tutorials IEEE 2010, 12, 304–342.

18. Anvari, H.; Lu, P. Large Transfers for Data Analytics on Shared Wide-area Networks. In CF ’16, Proceedings of the ACM International Conference on Computing Frontiers; ACM: New York, NY, USA, 2016; pp. 418–423.

19. Wang, Z.; Zeng, X.; Liu, X.; Xu, M.; Wen, Y.; Chen, L. TCP congestion control algorithm for heterogeneous Internet. J. Netw. Comput. Appl. 2016, 68, 56–64.

20. Yu, S.; Brownlee, N.; Mahanti, A. Comparative performance analysis of high-speed transfer protocols for big data. In Proceedings of the 2013 IEEE 38th Conference on Local Computer Networks (LCN), Sydney, NSW, Australia, 21–24 October 2013; pp. 292–295.

21. Hacker, T.J.; Athey, B.D.; Noble, B. The End-to-End Performance Effects of Parallel TCP Sockets on a Lossy Wide-Area Network. In IPDPS ’02, Proceedings of the 16th International Parallel and Distributed Processing Symposium; IEEE Computer Society: Washington, DC, USA, 2002; p. 314.

22. Altman, E.; Barman, D.; Tuffin, B.; Vojnovic, M. Parallel TCP Sockets: Simple Model, Throughput and Validation. In Proceedings of the INFOCOM 2006. 25th IEEE International Conference on Computer Communications, Barcelona, Spain, 23–29 April 2006; pp. 1–12.

23. Eghbal, N.; Lu, P. A Parallel Data Stream Layer for Large Data Workloads on WANs. In Proceedings of the 2020 IEEE 22nd International Conference on High Performance Computing and Communications, Yanuca Island, Cuvu, Fiji, 14–16 December 2020.

24. Paasch, C.; Bonaventure, O. Multipath TCP. Commun. ACM 2014, 57, 51–57.

25. Habib, S.; Qadir, J.; Ali, A.; Habib, D.; Li, M.; Sathiaseelan, A. The past, present, and future of transport-layer multipath. J. Netw. Comput. Appl. 2016, 75, 236–258.

26. He, E.; Leigh, J.; Yu, O.; DeFanti, T.A. Reliable Blast UDP: Predictable High Performance Bulk Data Transfer. In CLUSTER ’02, Proceedings of the IEEE International Conference on Cluster Computing, Chicago, IL, USA, 26 September 2002; IEEE Computer Society: Washington, DC, USA, 2002; p. 317.

27. Alrshah, M.A.; Othman, M.; Ali, B.; Hanapi, Z.M. Comparative study of high-speed Linux TCP variants over high-BDP networks. J. Netw. Comput. Appl. 2014, 43, 66–75.

28. Dukkipati, N.; Mathis, M.; Cheng, Y.; Ghobadi, M. Proportional rate reduction for TCP. In Proceedings of the 2011 ACM SIGCOMM Conference on Internet Measurement Conference, Berlin, Germany, 2 November 2011; pp. 155–170.

29. Hock, M.; Neumeister, F.; Zitterbart, M.; Bless, R. TCP LoLa: Congestion Control for Low Latencies and High Throughput. In Proceedings of the 2017 IEEE 42nd Conference on Local Computer Networks (LCN), Singapore, 9–12 October 2017; pp. 215–218.

30. Jain, S.; Kumar, A.; Mandal, S.; Ong, J.; Poutievski, L.; Singh, A.; Venkata, S.; Wanderer, J.; Zhou, J.; Zhu, M.; et al. B4: Experience with a Globally-deployed Software Defined Wan. In ACM SIGCOMM Computer Communication Review; ACM: New York, NY, USA, 2013; pp. 3–14.

31. Liu, H.H.; Viswanathan, R.; Calder, M.; Akella, A.; Mahajan, R.; Padhye, J.; Zhang, M. Efficiently Delivering Online Services over Integrated Infrastructure. In Proceedings of the 13th USENIX Symposium on Networked Systems Design and Implementation (NSDI 16); USENIX Association: Santa Clara, CA, USA, 2016; pp. 77–90.

32. Guok, C.; Robertson, D.; Thompson, M.; Lee, J.; Tierney, B.; Johnston, W. Intra and Interdomain Circuit Provisioning Using the OSCARS Reservation System. In Proceedings of the 3rd International Conference on Broadband Communications, Networks and Systems, San Jose, CA, USA, 1–5 October 2006; pp. 1–8.

33. Ramakrishnan, L.; Guok, C.; Jackson, K.; Kissel, E.; Swany, D.M.; Agarwal, D. On-demand Overlay Networks for Large Scientific Data Transfers. In CCGRID ’10, Proceedings of the 2010 10th IEEE/ACM International Conference on Cluster, Cloud and Grid Computing, Melbourne, VIC, Australia, 17–20 May 2010; IEEE Computer Society: Washington, DC, USA, 2010; pp. 359–367.

34. Hong, C.; Kandula, S.; Mahajan, R.; Zhang, M.; Gill, V.; Nanduri, M.; Wattenhofer, R. Achieving High Utilization with Software-driven WAN. In SIGCOMM ’13, Proceedings of the ACM SIGCOMM 2013 Conference on SIGCOMM; ACM: New York, NY, USA, 2013; pp. 15–26.

35. Jiang, H.; Dovrolis, C. Why is the Internet Traffic Bursty in Short Time Scales? In SIGMETRICS ’05, Proceedings of the 2005 ACM SIGMETRICS International Conference on Measurement and Modeling of Computer Systems; ACM: New York, NY, USA, 2005; pp. 241–252.

36. Jain, R.; Chiu, D.M.; Hawe, W.R. A Quantitative Measure of Fairness and Discrimination for Resource Allocation in Shared Computer System; Eastern Research Laboratory, Digital Equipment Corporation: Hudson, MA, USA, 1984; Volume 38.

37. Mo, J.; Walrand, J. Fair End-to-end Window-based Congestion Control. IEEE/ACM Trans. Netw. 2000, 8, 556–567.

38. Winstein, K.; Balakrishnan, H. TCP Ex Machina: Computer-generated Congestion Control. In SIGCOMM ’13, Proceedings of the ACM SIGCOMM 2013 Conference on SIGCOMM; ACM: New York, NY, USA, 2013; pp. 123–134.

39. Ros-Giralt, J.; Bohara, A.; Yellamraju, S.; Langston, M.H.; Lethin, R.; Jiang, Y.; Tassiulas, L.; Li, J.; Tan, Y.; Veeraraghavan, M. On the Bottleneck Structure of Congestion-Controlled Networks. Proc. ACM Meas. Anal. Comput. Syst. 2019, 3.

40. Carbone, M.; Rizzo, L. Dummynet Revisited. SIGCOMM Comput. Commun. Rev. 2010, 40, 12–20.

41. Vishwanath, K.V.; Vahdat, A. Evaluating Distributed Systems: Does Background Traffic Matter? In ATC’08, USENIX 2008 Annual Technical Conference; USENIX Association: Berkeley, CA, USA, 2008; pp. 227–240.

42. Netperf: Network Throughput and Latency Benchmark. Available online: https://hewlettpackard.github.io/netperf/ (accessed on 8 June 2021).

43. iperf: Bandwidth Measurement Tool. Available online: https://software.es.net/iperf/ (accessed on 8 June 2021).

44. Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning; Springer: New York, NY, USA, 2009.

45. Ismail Fawaz, H.; Forestier, G.; Weber, J.; Idoumghar, L.; Muller, P.A. Deep learning for time series classification: A review. Data Min. Knowl. Discov. 2019, 33, 917–963.

46. Keogh, E.; Ratanamahatana, C.A. Exact indexing of dynamic time warping. Knowl. Inf. Syst. 2005, 7, 358–386.

47. Christ, M.; Kempa-Liehr, A.W.; Feindt, M. Distributed and parallel time series feature extraction for industrial big data applications. arXiv 2016, arXiv:1610.07717.

48. Aghabozorgi, S.; Shirkhorshidi, A.S.; Wah, T.Y. Time-series clustering – A decade review. Inf. Syst. 2015, 53, 16–38.

49. Berndt, D.J.; Clifford, J. Using Dynamic Time Warping to Find Patterns in Time Series. In AAAIWS’94, Proceedings of the 3rd International Conference on Knowledge Discovery and Data Mining; AAAI Press: Palo Alto, CA, USA, 1994; pp. 359–370.

50. Hsu, C.J.; Huang, K.S.; Yang, C.B.; Guo, Y.P. Flexible Dynamic Time Warping for Time Series Classification. Procedia Comput. Sci. 2015, 51, 2838–2842.

51. Feldmann, A.; Gasser, O.; Lichtblau, F.; Pujol, E.; Poese, I.; Dietzel, C.; Wagner, D.; Wichtlhuber, M.; Tapiador, J.; Vallina-Rodriguez, N.; et al. The Lockdown Effect: Implications of the COVID-19 Pandemic on Internet Traffic. In IMC ’20, Proceedings of the ACM Internet Measurement Conference; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1–18.

52. Dart, E.; Rotman, L.; Tierney, B.; Hester, M.; Zurawski, J. The Science DMZ: A Network Design Pattern for Data-intensive Science. In SC ’13, Proceedings of the International Conference on High Performance Computing, Networking, Storage and Analysis; ACM: New York, NY, USA, 2013; pp. 85:1–85:10.

53. Hacker, T.J.; Noble, B.D.; Athey, B.D. Adaptive data block scheduling for parallel TCP streams. In Proceedings of the HPDC-14, 14th IEEE International Symposium on High Performance Distributed Computing, Research Triangle Park, NC, USA, 24–27 July 2005; pp. 265–275.

54. Yildirim, E.; Yin, D.; Kosar, T. Prediction of optimal parallelism level in wide area data transfers. Parallel Distrib. Syst. IEEE Trans. 2011, 22, 2033–2045.

55. Lu, D.; Qiao, Y.; Dinda, P.A.; Bustamante, F.E. Modeling and taming parallel TCP on the wide area network. In Proceedings of the 19th IEEE International Parallel and Distributed Processing Symposium, Denver, CO, USA, 4–8 April 2005; p. 68b.

56. FDT: Fast Data Transfer Tool. Available online: http://monalisa.cern.ch/FDT/ (accessed on 8 June 2021).

57. BBCP: Multi-Stream Data Transfer Tool. Available online: http://www.slac.stanford.edu/~abh/bbcp/ (accessed on 8 June 2021).

58. Gu, Y.; Grossman, R. UDTv4: Improvements in Performance and Usability. In Networks for Grid Applications; Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering; Vicat-Blanc Primet, P., Kudoh, T., Mambretti, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; Volume 2, pp. 9–23.

59. Dart, E.; Rotman, L.; Tierney, B.; Hester, M.; Zurawski, J. The Science DMZ: A network design pattern for data-intensive science. Sci. Program. 2014, 22, 173–185.

60. ESnet website. Available online: https://www.es.net/science-engagement/knowledge-base/case-studies/science-dmz-case-studies (accessed on 8 June 2021).

61. Yin, Q.; Kaur, J. Can Machine Learning Benefit Bandwidth Estimation at Ultra-high Speeds? In Proceedings of the Passive and Active Measurement: 17th International Conference, PAM 2016, Heraklion, Greece, 31 March–1 April 2016; Karagiannis, T., Dimitropoulos, X., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 397–411.

62. Wang, X.S.; Balasubramanian, A.; Krishnamurthy, A.; Wetherall, D. How Speedy is SPDY? In Proceedings of the 11th USENIX Symposium on Networked Systems Design and Implementation (NSDI 14), Seattle, WA, USA, 2–4 April 2014; USENIX Association: Seattle, WA, USA, 2014; pp. 387–399.

63. Vishwanath, K.V.; Vahdat, A. Swing: Realistic and Responsive Network Traffic Generation. IEEE/ACM Trans. Netw. 2009, 17, 712–725.

64. Barford, P.; Crovella, M. Generating Representative Web Workloads for Network and Server Performance Evaluation. SIGMETRICS Perform. Eval. Rev. 1998, 26, 151–160.

65. Kettimuthu, R.; Sim, A.; Gunter, D.; Allcock, B.; Bremer, P.T.; Bresnahan, J.; Cheery, A.; Childers, L.; Dart, E.; Foster, I.; et al. Lessons Learned from Moving Earth System Grid Data Sets over a 20 Gbps Wide-area Network. In HPDC ’10, Proceedings of the 19th ACM International Symposium on High Performance Distributed Computing, New York, NY, USA, 21–25 June 2010; ACM: New York, NY, USA, 2010; pp. 316–319.

66. Liu, W.; Tieman, B.; Kettimuthu, R.; Foster, I. A Data Transfer Framework for Large-scale Science Experiments. In HPDC ’10, Proceedings of the 19th ACM International Symposium on High Performance Distributed Computing, New York, NY, USA, 21–25 June 2010; ACM: New York, NY, USA, 2010; pp. 717–724.

67. Mirza, M.; Sommers, J.; Barford, P.; Zhu, X. A Machine Learning Approach to TCP Throughput Prediction. IEEE/ACM Trans. Netw. 2010, 18, 1026–1039.

68. Sivaraman, A.; Winstein, K.; Thaker, P.; Balakrishnan, H. An Experimental Study of the Learnability of Congestion Control. In SIGCOMM ’14, Proceedings of the 2014 ACM Conference on SIGCOMM, Chicago, IL, USA, 17–22 August 2014; ACM: New York, NY, USA, 2014; pp. 479–490.

69. Dong, M.; Li, Q.; Zarchy, D.; Godfrey, P.B.; Schapira, M. PCC: Re-architecting Congestion Control for Consistent High Performance. In Proceedings of the 12th USENIX Symposium on Networked Systems Design and Implementation (NSDI 15), Oakland, CA, USA, 4–6 May 2015; USENIX Association: Oakland, CA, USA, 2015; pp. 395–408.

70. Dong, M.; Meng, T.; Zarchy, D.; Arslan, E.; Gilad, Y.; Godfrey, B.; Schapira, M. PCC Vivace: Online-Learning Congestion Control. In Proceedings of the 15th USENIX Symposium on Networked Systems Design and Implementation (NSDI 18), Renton, WA, USA, 9–11 April 2018; USENIX Association: Renton, WA, USA, 2018; pp. 343–356.

| Figure | Network | Traffic Mixture | Background | Note(s) |
|---|---|---|---|---|
| Figure 13 | Overlay-1 | TCP vs. UDP | Synthetic | Compare Figure 14 |
| Figure 14 | Overlay-2 | TCP vs. UDP | Synthetic | Compare Figure 13 |
| Figure 17 | Overlay-1 | CUBIC vs. BBR | Synthetic | Compare Figure 18 |
| Figure 18 | Overlay-2 | CUBIC vs. BBR | Synthetic | Compare Figure 17 |
| Figure 15 | Overlay-1 | TCP vs. UDP | Synthetic, NFS | No Overlay-2 results given |
| Figure 19 | Overlay-1 | TCP vs. UDP | Synthetic, NFS | No Overlay-2 results given |
| Figure 21a | Overlay-1 | TCP vs. UDP | Synthetic | Compare Figure 21b |
| Figure 21b | Overlay-2 | TCP vs. UDP | Synthetic | Compare Figure 21a |

| Type | Stream | no_bg | bg_iperf | bg_gftp16 | bg_udt |
|---|---|---|---|---|---|
| Dedicated | Background | - | 478 | 473 | 481 |
| Dedicated | Foreground (iperf) | 474 | 474 | 474 | 474 |
| Shared | Background | - | 238 | 415 | 398 |
| Shared | Foreground (iperf) | 474 | 253 | 75.6 | 85.3 |
| Shared | Jain fairness | - | 1 | 0.68 | 0.7 |

| Type | Stream | no_bg | bg_iperf | bg_gftp16 | bg_udt |
|---|---|---|---|---|---|
| Dedicated | Background | - | 848 | 811 | 625 |
| Dedicated | Foreground (iperf) | 872 | 872 | 872 | 872 |
| Shared | Background | - | 412 | 738 | 591 |
| Shared | Foreground (iperf) | 872 | 485 | 109 | 161 |
| Shared | Jain fairness | - | 0.99 | 0.64 | 0.75 |
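
The Jain fairness values in the two throughput tables above are consistent with applying the standard Jain index, $J = (\sum_i x_i)^2 / (n \sum_i x_i^2)$, to the two shared-network throughputs in each column (background and foreground). The short sketch below reproduces that arithmetic. The function name `jain_index` is hypothetical, and the pairing of each fairness value with the two shared rows is an inference from the numbers rather than something stated in the tables.

```python
def jain_index(throughputs):
    """Jain fairness index: (sum x)^2 / (n * sum x^2).

    The value is 1.0 when all streams get an equal share and approaches 1/n
    when a single stream takes nearly everything.
    """
    n = len(throughputs)
    total = sum(throughputs)
    squares = sum(x * x for x in throughputs)
    if n == 0 or squares == 0:
        raise ValueError("need at least one non-zero throughput")
    return total * total / (n * squares)


# Shared background and foreground throughputs, bg_gftp16 column of each table.
print(round(jain_index([415, 75.6]), 2))  # 0.68
print(round(jain_index([738, 109]), 2))   # 0.64
```

The same computation gives 0.99 for the pair (412, 485) and 0.75 for the pair (591, 161), matching the remaining entries of the second table.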

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Anvari, H.; Lu, P. Machine-Learned Recognition of Network Traffic for Optimization through Protocol Selection. Computers 2021, 10, 76. https://doi.org/10.3390/computers10060076
