Learning Explainable Disentangled Representations of E-Commerce Data by Aligning Their Visual and Textual Attributes

Abstract

1. Introduction

- To the best of our knowledge, we are the first to propose an automatic method to explain the contents of disentangled multimodal item representations with text.

- We disentangle real-world multimodal e-commerce data, which is challenging because (i) some attributes are shared between the product image and description while others are complementary, which complicates effective fusion; (ii) the saliency, granularity and visual variation of the product attributes complicate detection and recognition; (iii) the noise and incompleteness of product descriptions make alignment difficult; and (iv) we lack ground-truth data at the product attribute level.

- We show how the weak supervision provided by the two-level alignment steers the disentanglement process towards discovering the factors of variation that humans use to organize, describe and distinguish fashion products, and away from other factors. This is essential when the visual search space is huge and noisy.

- We demonstrate that our E-VAE creates explainable representations while matching or surpassing state-of-the-art performance. More precisely, we achieve state-of-the-art outfit recommendation results on the Polyvore Outfits dataset and new state-of-the-art cross-modal search results on the Amazon Dresses dataset.

2. Related Work

2.1. Disentangled Representation Learning

2.2. Multimodal Disentangled Representation Learning

2.3. Explainability

3. Methodology

3.1. Disentanglement

3.1.1. Prior

3.1.2. Decoder

3.1.3. Encoder

3.1.4. ELBO

3.2. Explainability through Two-Level Alignment

3.2.1. Textual Attribute Extraction and Representation

3.2.2. Coarse-grained Alignment of Images and Textual Attributes

3.2.3. Fine-grained Alignment of Image Regions and Textual Attributes

3.3. Complete Loss Function

4. Experimental Setup

4.1. Datasets

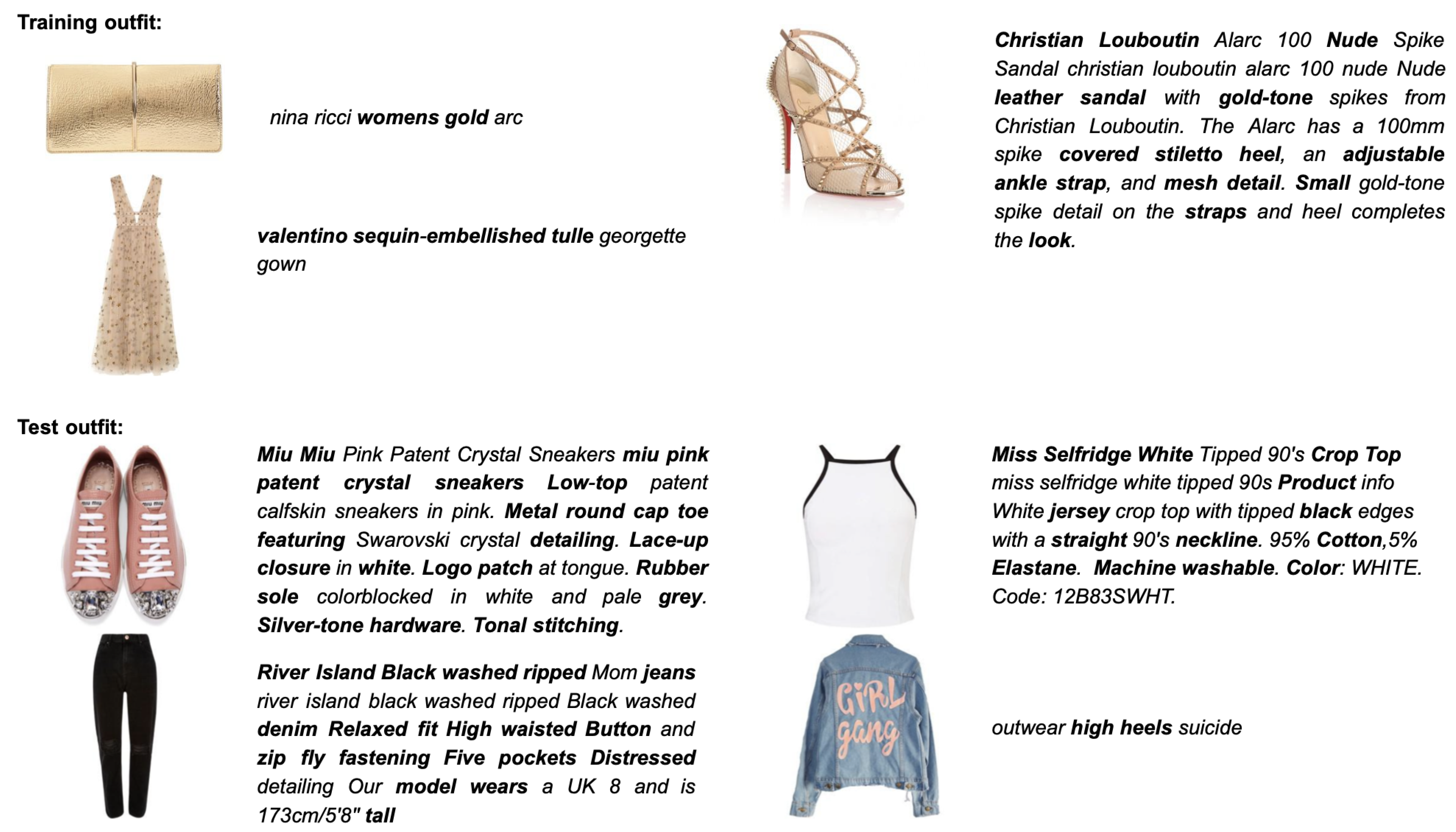

4.1.1. Polyvore Outfits

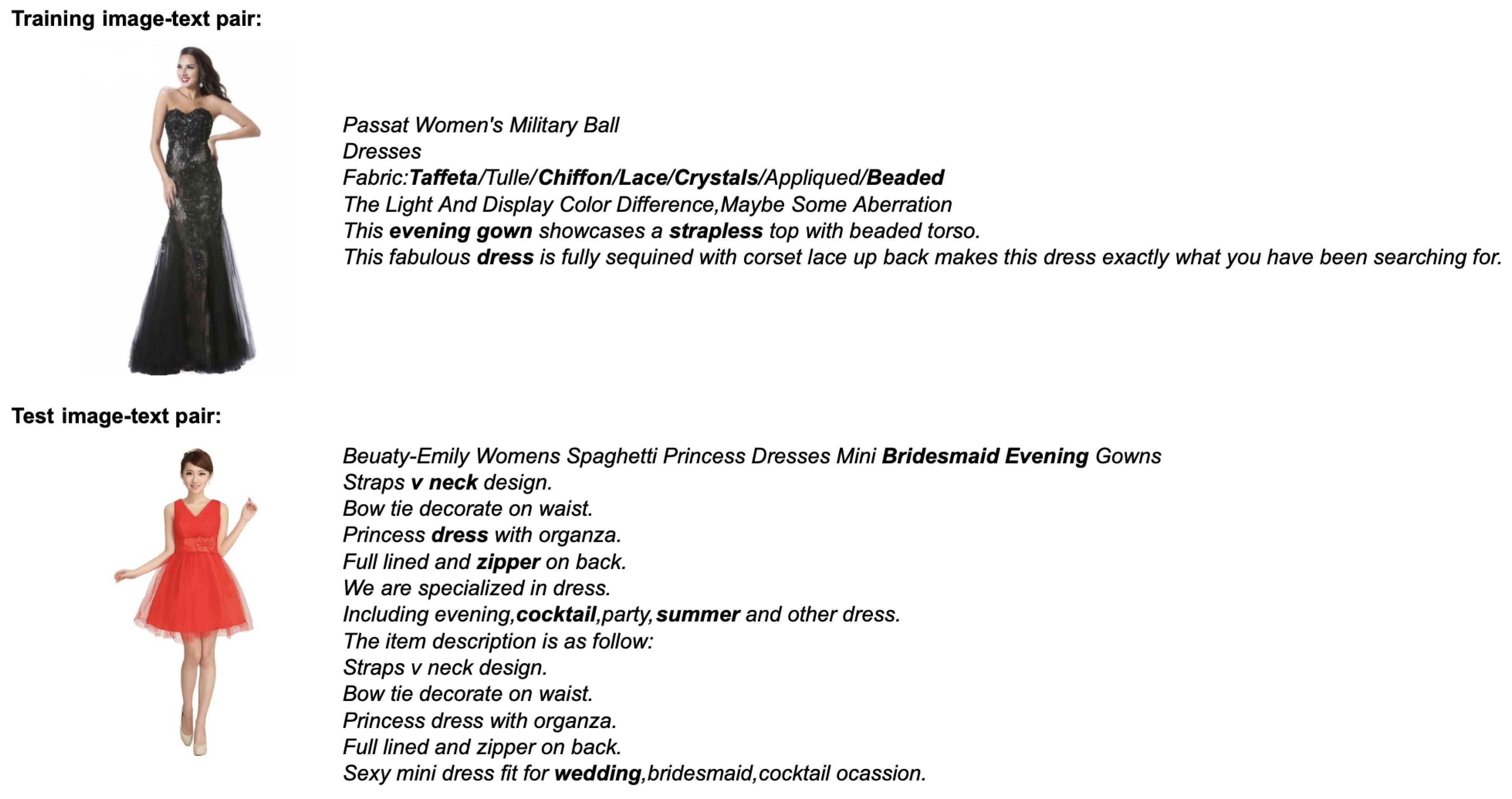

4.1.2. Amazon Dresses

4.2. Evaluation

4.2.1. Outfit Recommendation

4.2.2. Cross-modal Search

4.3. Baseline Methods

4.3.1. Outfit Recommendation

- TypeAware [31] This is a state-of-the-art multimodal outfit recommender system that infers a multimodal embedding space for item understanding, in which semantically related images and texts are aligned, as are images/texts of the same type. Jointly, the system learns item compatibility in multiple type-specific compatibility spaces.

- β-VAE [5] Here, we first use β-VAE to learn to disentangle the fashion images, and afterwards learn the two-level alignment in the disentangled space and compatibility in the type-specific compatibility spaces while keeping the layers of β-VAE frozen.

- DMVAE [20] This is a state-of-the-art VAE for multimodal disentangled representation learning that infers three spaces: a single shared multimodal disentangled space and two private unimodal disentangled spaces, one for vision and one for language. We use a triplet matching loss (similar to Equations (5) and (15)) to learn cross-modal relations. Then, the representations of these three spaces are concatenated to represent an item and projected to the type-specific compatibility spaces for compatibility learning.

- E-BFAN This is a variant of E-VAE which infers the two-level alignment in a multimodal shared space but does not aim for disentanglement. The multimodal shared space is enforced with the same loss function as E-VAE (Equation (16)) but with the term removed. Next, the resulting explainable item representations are projected to multiple type-specific compatibility spaces.
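The DMVAE baseline above is trained with a triplet matching loss "similar to Equations (5) and (15)". Those equations are not reproduced in this section, so the following is only a minimal sketch of one common form: a bidirectional hinge-based triplet loss over in-batch negatives, as used in image-text matching. The cosine similarity, the margin of 0.2 and the summation over all negatives are assumptions for illustration, not values taken from the paper.

```python
import numpy as np


def cosine_sim_matrix(img: np.ndarray, txt: np.ndarray) -> np.ndarray:
    """Cosine similarity between every image row and every text row."""
    img = img / np.linalg.norm(img, axis=1, keepdims=True)
    txt = txt / np.linalg.norm(txt, axis=1, keepdims=True)
    return img @ txt.T


def triplet_matching_loss(img: np.ndarray, txt: np.ndarray, margin: float = 0.2) -> float:
    """Bidirectional hinge triplet loss; row i of `img` matches row i of `txt`.

    `margin` is an assumed hyperparameter, not the paper's value.
    """
    s = cosine_sim_matrix(img, txt)
    pos = np.diag(s)  # similarity of each matching image-text pair
    # Image as anchor: penalize texts that score within `margin` of the positive text.
    cost_txt = np.maximum(0.0, margin - pos[:, None] + s)
    # Text as anchor: penalize images that score within `margin` of the positive image.
    cost_img = np.maximum(0.0, margin - pos[None, :] + s)
    # The diagonal holds the positives, which must not be counted as negatives.
    mask = np.eye(s.shape[0], dtype=bool)
    cost_txt[mask] = 0.0
    cost_img[mask] = 0.0
    return float(cost_txt.sum() + cost_img.sum())
```

A frequently used alternative keeps only the hardest negative per anchor (the maximum over each row or column of the cost matrix) instead of the sum; which variant the paper's equations correspond to is not stated here.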

4.3.2. Cross-Modal Search

- 3A [27] This is a state-of-the-art neural architecture for cross-modal and multimodal search of fashion items which learns intermodal representations of image regions and textual attributes using three alignment losses, i.e., a global alignment, local alignment and image cluster consistency loss. In contrast with our work, these alignment losses do not result in explainable representations.

- SCAN [33] This is a state-of-the-art model for image-text matching to find the latent alignment of image regions and words referring to objects in general, everyday scenes.

- β-VAE [5] We first use β-VAE to learn to disentangle the fashion images, and afterwards learn the two-level alignment in the disentangled space while keeping the layers of β-VAE frozen.

- VarAlign [19] This is a state-of-the-art neural architecture for image-to-text retrieval consisting of three VAEs. A mapper VAE performs cross-modal variational alignment of the latent distributions of two other VAEs, that is, one VAE that learns to reconstruct the text based on the image and a second VAE that reconstructs the text based on the text itself. We use the disentangled image representations obtained after the mapper and disentangled text representations produced by the second VAE for image-to-text retrieval.

- DMVAE [20] This is a state-of-the-art VAE for multimodal disentangled representation learning which infers a single shared multimodal disentangled space and a private unimodal disentangled space per modality. For fair comparison, we use a triplet matching loss (similar to Equations (5) and (15)) to learn the cross-modal relations and use the image shared features and text shared features for cross-modal search.

- E-BFAN This is a variant of E-VAE that creates explainable item representations but does not aim for disentanglement and therefore drops the disentanglement-related term from its loss function (Equation (16)).
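The β-VAE baseline first learns a disentangled image space and then freezes it. As a reminder of what that first stage optimizes, here is a minimal sketch of the standard β-VAE objective from [5]: a reconstruction term plus β times the KL divergence between a diagonal Gaussian posterior and a standard normal prior. The squared-error reconstruction (a Gaussian decoder with fixed variance, up to constants) and the default β = 4 are assumptions for illustration; the paper's architecture and β value are not given in this section.

```python
import numpy as np


def gaussian_kl(mu: np.ndarray, logvar: np.ndarray) -> np.ndarray:
    """Closed-form KL(N(mu, diag(exp(logvar))) || N(0, I)) for each sample (row)."""
    return 0.5 * np.sum(np.exp(logvar) + mu ** 2 - 1.0 - logvar, axis=1)


def beta_vae_loss(x: np.ndarray, x_recon: np.ndarray,
                  mu: np.ndarray, logvar: np.ndarray, beta: float = 4.0) -> float:
    """Negative beta-ELBO averaged over the batch.

    Reconstruction is squared error (Gaussian decoder with fixed variance, constants
    dropped); `beta` is an assumed value. beta > 1 strengthens the pressure towards
    a factorized posterior, which is what encourages disentanglement.
    """
    recon = np.sum((x - x_recon) ** 2, axis=1)
    return float(np.mean(recon + beta * gaussian_kl(mu, logvar)))
```

With beta = 1 this reduces to the ordinary VAE objective [3,4]; in the baseline setting, only this stage updates the encoder, after which its weights stay fixed while the alignment (and, for outfit recommendation, compatibility) components are trained.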

4.4. Training Details

5. Results and Discussion

5.1. Explainability

5.2. Outfit Recommendation

5.3. Cross-Modal Search

5.4. Summary and Future Work

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AUC | Area Under the ROC Curve |
| β-VAE | Beta-Variational AutoEncoder |
| BFAN | Bidirectional Focal Attention Network |
| CNN | Convolutional Neural Network |
| DMVAE | Disentangled Multimodal Variational AutoEncoder |
| FITB | Fill-In-The-Blank |
| E-BFAN | Explainable Bidirectional Focal Attention Network |
| E-VAE | Explainable Variational AutoEncoder |
| OC | Outfit Compatibility |
| POS | Part-Of-Speech |
| ROC | Receiver Operating Characteristic |
| SCAN | Stacked Cross-Attention |
| VAE | Variational AutoEncoder |
| XAI | eXplainable Artificial Intelligence |

Appendix A. Datasets

Appendix A.1. Polyvore Outfits

Appendix A.2. Amazon Dresses

| Amazon Dresses Fashion Glossary | | | | |
|---|---|---|---|---|
| 3-4-sleeve | a-line | abstract | acetate | acrylic |
| action-sports | animal-print | applique | argyle | asymmetrical |
| athletic | aztec | baby-doll | ballet | banded |
| beaded | beige | black | blue | boatneck |
| bone | bows | bridesmaid | brocade | bronze |
| brown | burgundy | bustier | buttons | camo |
| canvas | career | cashmere | casual | chains |
| checkered | cheetah-print | chevron | chiffon | coat |
| cocktail | contrast-stitching | coral | cotton | cover-up |
| cowl | crew | crochet | crystals | cut-outs |
| denim | dip-dyed | dress | dropped-waist | embroidered |
| empire | epaulette | evening | fall | faux-leather |
| faux-pockets | felt | floor-length | floral-print | flowers |
| fringe | geometric | gingham | gold | gown |
| gray | green | grommets | halter | hemp |
| high-low | high-waist | homecoming | horizontal-stripes | houndstooth |
| jacquard | jersey | juniors | keyhole | khaki |
| knee-length | lace | leather | leopard-print | linen |
| little-black-dress | logo | long | long-sleeves | lycra |
| lyocell | mahogany | mandarin | maxi | mesh |
| metallic | microfiber | mock-turtleneck | modal | mother-of-the-bride |
| multi | navy | neutral | nightclub | notch-lapel |
| nylon | off-the-shoulder | office | olive | ombre |
| one-shoulder | orange | outdoor | paisley | patchwork |
| peplum | peter-pan | petite | pewter | pink |
| piping | pique | plaid | pleated | plus-size |
| point | polka-dot | polyester | ponte | prom |
| purple | ramie | rayon | red | reptile |
| resort | retro | rhinestones | ribbons | rivets |
| ruched | ruffles | satin | scalloped | scoop |
| screenprint | sequins | shawl | sheath | shift |
| shirt | short | short-sleeves | silk | silver |
| sleeveless | smocked | snake-print | snap | spandex |
| sport | sports | spread | spring | square-neck |
| strapless | street | studded | summer | surf |
| sweater | sweetheart | synthetic | taffeta | tan |
| tank | taupe | tea-length | terry | tie-dye |
| tropical | tunic | turtleneck | tweed | twill |
| v-neck | velvet | vertical-stripes | viscose | wear-to-work |
| wedding | western | white | wide | winter |
| wool | wrap-dress | yellow | zebra-print | zipper |
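In the glossary above, multiword attributes are written as hyphen-joined tokens (e.g., 3-4-sleeve, little-black-dress, mother-of-the-bride). The following hypothetical helper, `extract_attributes`, is a minimal sketch of how a product description could be matched against such a vocabulary by n-gram lookup. It is not the authors' textual attribute extraction procedure, which is not reproduced in this section, and it ignores synonyms, negation and spelling variants.

```python
import re


def extract_attributes(description: str, glossary: set[str], max_n: int = 4) -> set[str]:
    """Hypothetical glossary matcher: return glossary terms found in `description`.

    Tokens are lowercased alphanumeric runs; every n-gram (n <= max_n) is joined
    with '-' so that e.g. "3/4 sleeve" becomes "3-4-sleeve" and can be looked up.
    """
    tokens = re.findall(r"[a-z0-9]+", description.lower())
    found: set[str] = set()
    for n in range(1, max_n + 1):
        for i in range(len(tokens) - n + 1):
            candidate = "-".join(tokens[i:i + n])
            if candidate in glossary:
                found.add(candidate)
    return found
```

This sketch returns overlapping matches (for "little black dress" it yields little-black-dress as well as black and dress); a longest-match rule would be an equally reasonable design choice.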

Appendix B. Word Embeddings

Appendix C. User Study

Appendix D. Sensitivity Analysis

| Amazon Dresses | | Image to Text | | | Text to Image | | |
|---|---|---|---|---|---|---|---|
| Model | | R@1 | R@5 | R@10 | R@1 | R@5 | R@10 |
| 3A [27] | 32 | 6.60 | 18.10 | 27.40 | 4.30 | 15.40 | 25.60 |
| | 64 | 6.00 | 18.60 | 29.60 | 5.00 | 16.40 | 25.30 |
| | 128 | 6.80 | 19.30 | 30.00 | 8.80 | 21.80 | 32.10 |
| | 256 | 7.30 | 22.60 | 32.60 | 6.80 | 20.30 | 30.10 |
| | 512 | 8.90 | 23.80 | 34.20 | 7.40 | 18.60 | 29.50 |
| BFAN [30] | 32 | 7.40 | 26.20 | 38.00 | 12.60 | 34.00 | 46.40 |
| | 64 | 8.00 | 27.90 | 42.00 | 16.10 | 37.90 | 50.40 |
| | 128 | 9.20 | 28.80 | 41.90 | 16.90 | 38.60 | 52.00 |
| | 256 | 9.30 | 28.30 | 42.20 | 15.50 | 35.40 | 49.50 |
| | 512 | 8.10 | 29.80 | 41.40 | 15.50 | 37.60 | 50.60 |
| E-VAE () | 32 | 0.50 | 1.60 | 3.10 | 0.20 | 0.90 | 1.40 |
| | 64 | 6.80 | 23.20 | 36.10 | 12.40 | 32.20 | 45.10 |
| | 128 | 6.70 | 26.40 | 42.20 | 15.60 | 37.20 | 49.10 |
| | 256 | 0.30 | 1.30 | 2.80 | 0.30 | 1.20 | 2.30 |
| | 512 | 0.30 | 0.50 | 1.40 | 0.10 | 0.50 | 1.00 |
| E-VAE () | 32 | 0.10 | 1.60 | 3.70 | 0.30 | 1.30 | 1.80 |
| | 64 | 6.30 | 22.10 | 35.30 | 13.50 | 33.50 | 47.10 |
| | 128 | 9.20 | 32.10 | 47.20 | 18.20 | 42.10 | 53.40 |
| | 256 | 0.20 | 0.60 | 1.40 | 0.10 | 0.50 | 1.00 |
| | 512 | 0.10 | 0.80 | 1.30 | 0.10 | 0.50 | 1.00 |
| E-BFAN () | 32 | 5.70 | 25.00 | 38.10 | 12.80 | 35.30 | 46.80 |
| | 64 | 6.10 | 25.60 | 40.20 | 14.40 | 36.90 | 49.90 |
| | 128 | 6.90 | 27.30 | 41.30 | 17.50 | 39.60 | 52.40 |
| | 256 | 8.20 | 28.70 | 42.80 | 18.90 | 39.20 | 52.80 |
| | 512 | 9.30 | 30.00 | 44.20 | 18.20 | 42.50 | 53.50 |

References

1. Barredo Arrieta, A.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; Garcia, S.; Gil-Lopez, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115.

2. Bengio, Y.; Courville, A.; Vincent, P. Representation Learning: A Review and New Perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1798–1828.

3. Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. arXiv 2013, arXiv:1312.6114.

4. Rezende, D.J.; Mohamed, S.; Wierstra, D. Stochastic Backpropagation and Approximate Inference in Deep Generative Models. In Proceedings of the 31st International Conference on Machine Learning, Beijing, China, 22–24 June 2014; pp. 1278–1286.

5. Higgins, I.; Matthey, L.; Pal, A.; Burgess, C.P.; Glorot, X.; Botvinick, M.M.; Mohamed, S.; Lerchner, A. beta-VAE: Learning Basic Visual Concepts with a Constrained Variational Framework. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

6. Kim, H.; Mnih, A. Disentangling by Factorising. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 2649–2658.

7. Liu, Z.; Luo, P.; Wang, X.; Tang, X. Deep Learning Face Attributes in the Wild. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3730–3738.

8. Paysan, P.; Knothe, R.; Amberg, B.; Romdhani, S.; Vetter, T. A 3D Face Model for Pose and Illumination Invariant Face Recognition. In Proceedings of the 2009 IEEE International Conference on Advanced Video and Signal Based Surveillance, Genova, Italy, 2–4 September 2009; pp. 296–301.

9. Aubry, M.; Maturana, D.; Efros, A.A.; Russell, B.C.; Sivic, J. Seeing 3D Chairs: Exemplar Part-Based 2D-3D Alignment Using a Large Dataset of CAD Models. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 3762–3769.

10. Yang, Z.; Hu, Z.; Salakhutdinov, R.; Berg-Kirkpatrick, T. Improved Variational Autoencoders for Text Modeling using Dilated Convolutions. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 3881–3890.

11. Zhu, Q.; Bi, W.; Liu, X.; Ma, X.; Li, X.; Wu, D. A Batch Normalized Inference Network Keeps the KL Vanishing Away. arXiv 2020, arXiv:2004.12585.

12. Ma, J.; Zhou, C.; Cui, P.; Yang, H.; Zhu, W. Learning Disentangled Representations for Recommendation. In Advances in Neural Information Processing Systems 32; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2019; pp. 5711–5722.

13. Hou, Y.; Vig, E.; Donoser, M.; Bazzani, L. Learning Attribute-Driven Disentangled Representations for Interactive Fashion Retrieval. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 12147–12157.

14. Bouchacourt, D.; Tomioka, R.; Nowozin, S. Multi-Level Variational Autoencoder: Learning Disentangled Representations From Grouped Observations. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

15. Locatello, F.; Poole, B.; Raetsch, G.; Schölkopf, B.; Bachem, O.; Tschannen, M. Weakly-Supervised Disentanglement Without Compromises. In Proceedings of the 37th International Conference on Machine Learning, Virtual, 13–18 July 2020; pp. 6348–6359.

16. Chen, H.; Chen, Y.; Wang, X.; Xie, R.; Wang, R.; Xia, F.; Zhu, W. Curriculum Disentangled Recommendation with Noisy Multi-feedback. In Proceedings of the Advances in Neural Information Processing Systems 34 (NeurIPS 2021), Virtual Event, 6–14 December 2021.

17. Zhang, Y.; Zhu, Z.; He, Y.; Caverlee, J. Content-Collaborative Disentanglement Representation Learning for Enhanced Recommendation. In Proceedings of the Fourteenth ACM Conference on Recommender Systems, Virtual Event, 22–26 September 2020; pp. 43–52.

18. Zhu, Y.; Chen, Z. Variational Bandwidth Auto-encoder for Hybrid Recommender Systems. IEEE Trans. Knowl. Data Eng. 2022, early access.

19. Theodoridis, T.; Chatzis, T.; Solachidis, V.; Dimitropoulos, K.; Daras, P. Cross-modal Variational Alignment of Latent Spaces. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 4127–4136.

20. Lee, M.; Pavlovic, V. Private-Shared Disentangled Multimodal VAE for Learning of Latent Representations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Nashville, TN, USA, 19–25 June 2021; pp. 1692–1700.

21. Chen, Z.; Wang, X.; Xie, X.; Wu, T.; Bu, G.; Wang, Y.; Chen, E. Co-Attentive Multi-Task Learning for Explainable Recommendation. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence; International Joint Conferences on Artificial Intelligence Organization: Macau, China, 10–16 August 2019; pp. 2137–2143.

22. Truong, Q.T.; Lauw, H. Multimodal Review Generation for Recommender Systems. In Proceedings of The World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 1864–1874.

23. Hou, M.; Wu, L.; Chen, E.; Li, Z.; Zheng, V.W.; Liu, Q. Explainable Fashion Recommendation: A Semantic Attribute Region Guided Approach. arXiv 2019, arXiv:1905.12862.

24. Chen, X.; Chen, H.; Xu, H.; Zhang, Y.; Cao, Y.; Qin, Z.; Zha, H. Personalized Fashion Recommendation with Visual Explanations Based on Multimodal Attention Network: Towards Visually Explainable Recommendation. In Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, Paris, France, 21–25 July 2019; pp. 765–774.

25. Liu, W.; Li, R.; Zheng, M.; Karanam, S.; Wu, Z.; Bhanu, B.; Radke, R.J.; Camps, O. Towards Visually Explaining Variational Autoencoders. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

26. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

27. Laenen, K.; Zoghbi, S.; Moens, M.F. Web Search of Fashion Items with Multimodal Querying. In Proceedings of the Eleventh ACM International Conference on Web Search and Data Mining, Marina Del Rey, CA, USA, 5–9 February 2018.

28. Mikolov, T.; Chen, K.; Corrado, G.S.; Dean, J. Efficient Estimation of Word Representations in Vector Space. arXiv 2013, arXiv:1301.3781.

29. Yang, Z.; He, X.; Gao, J.; Deng, L.; Smola, A.J. Stacked Attention Networks for Image Question Answering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 21–29.

30. Liu, C.; Mao, Z.; Liu, A.A.; Zhang, T.; Wang, B.; Zhang, Y. Focus Your Attention: A Bidirectional Focal Attention Network for Image-Text Matching. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 3–11.

31. Vasileva, M.I.; Plummer, B.A.; Dusad, K.; Rajpal, S.; Kumar, R.; Forsyth, D.A. Learning Type-Aware Embeddings for Fashion Compatibility. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018.

32. Zoghbi, S.; Heyman, G.; Gomez, J.C.; Moens, M.F. Fashion Meets Computer Vision and NLP at e-Commerce Search. Int. J. Comput. Electr. Eng. 2016, 8, 31–43.

33. Lee, K.H.; Chen, X.; Hua, G.; Hu, H.; He, X. Stacked Cross Attention for Image-Text Matching. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018.

34. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the International Conference on Learning Representations; arXiv 2014, arXiv:1412.6980.

35. Liu, Z.; Luo, P.; Qiu, S.; Wang, X.; Tang, X. DeepFashion: Powering Robust Clothes Recognition and Retrieval with Rich Annotations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

| Polyvore Outfits | Disjoint | | | Non-Disjoint | | |
|---|---|---|---|---|---|---|
| Model | OC (AUC) | FITB (accuracy) | Sparsity (%) | OC (AUC) | FITB (accuracy) | Sparsity (%) |
| TypeAware [31] | 81.29 | 53.28 | - | 81.94 | 54.35 | - |
| β-VAE [5] | 63.70 | 37.29 | - | 66.74 | 39.13 | - |
| DMVAE [20] | 80.20 | 52.97 | - | 82.36 | 55.76 | - |
| E-BFAN | 82.65 | 54.90 | 73.28 | 89.11 | 60.34 | 80.73 |
| E-VAE | 81.90 | 52.24 | 82.60 | 88.41 | 58.87 | 83.93 |
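The Polyvore Outfits table reports outfit compatibility (OC) as AUC and fill-in-the-blank (FITB) as accuracy. How the compatibility scores themselves are produced is described in sections not reproduced here, so the following minimal sketch assumes they are already given: one score per outfit with a binary compatible/incompatible label for OC, and, for FITB, one score per candidate with the index of the correct candidate. The function names are hypothetical.

```python
import numpy as np


def roc_auc(scores, labels) -> float:
    """AUC as the probability that a random positive outranks a random negative.

    Ties count as one half (the Mann-Whitney formulation of the ROC AUC).
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (len(pos) * len(neg)))


def fitb_accuracy(candidate_scores, answer_idx) -> float:
    """Fraction of FITB questions whose highest-scoring candidate is the correct one.

    `candidate_scores` has shape (questions, candidates); `answer_idx` holds the
    index of the correct candidate for each question.
    """
    predictions = np.argmax(np.asarray(candidate_scores), axis=1)
    return float(np.mean(predictions == np.asarray(answer_idx)))
```

The table reports both metrics as percentages, i.e., these values multiplied by 100.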

| Amazon Dresses | Image to Text | | | Text to Image | | | Sparsity |
|---|---|---|---|---|---|---|---|
| Model | R@1 | R@5 | R@10 | R@1 | R@5 | R@10 | % |
| 3A [27] | 6.80 | 19.30 | 30.00 | 8.80 | 21.80 | 32.10 | - |
| SCAN [33] | 0.00 | 0.20 | 0.80 | 0.30 | 0.40 | 0.80 | - |
| BFAN [30] | 9.20 | 28.80 | 41.90 | 16.90 | 38.60 | 52.00 | - |
| β-VAE [5] | 0.00 | 1.20 | 2.20 | 0.40 | 1.20 | 2.40 | - |
| VarAlign [19] | 5.30 | 15.10 | 21.40 | - | - | - | - |
| DMVAE [20] | 9.80 | 28.70 | 42.40 | 11.10 | 28.60 | 41.00 | - |
| E-BFAN () | 6.20 | 26.10 | 39.60 | 15.40 | 36.40 | 50.10 | 69.62 |
| E-BFAN () | 6.90 | 27.30 | 41.30 | 17.50 | 39.60 | 52.40 | 69.67 |
| E-VAE () − | 6.80 | 27.00 | 39.70 | 15.20 | 37.10 | 47.30 | 90.59 |
| E-VAE () | 6.70 | 26.40 | 42.20 | 15.60 | 37.20 | 49.10 | 88.89 |
| E-VAE () | 9.20 | 32.10 | 47.20 | 18.20 | 42.10 | 53.40 | 88.87 |
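The cross-modal search results are reported as recall at K (R@K) and a sparsity percentage. A minimal sketch of both follows, under stated assumptions: the similarity matrix is square with exactly one ground-truth item per query on the diagonal (rows are queries, so text-to-image recall uses the transposed matrix), and sparsity is taken to be the percentage of embedding components that are zero. The exact sparsity definition used in the paper is not given in this section, so treat that part as an assumption.

```python
import numpy as np


def recall_at_k(sim, k: int) -> float:
    """Percentage of queries (rows) whose ground-truth item (same index) ranks in the top k.

    Assumes a square similarity matrix with one ground-truth match per query.
    """
    sim = np.asarray(sim, dtype=float)
    gt = np.diag(sim)
    # Rank of the ground truth = number of items scored strictly higher for that query.
    ranks = (sim > gt[:, None]).sum(axis=1)
    return 100.0 * float(np.mean(ranks < k))


def sparsity(embeddings, tol: float = 0.0) -> float:
    """Assumed definition: percentage of components whose magnitude is <= tol."""
    embeddings = np.asarray(embeddings, dtype=float)
    return 100.0 * float(np.mean(np.abs(embeddings) <= tol))
```

For example, with image embeddings as rows and text embeddings as columns, `recall_at_k(sim, 10)` gives image-to-text R@10 and `recall_at_k(sim.T, 10)` gives text-to-image R@10.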

| Amazon Dresses | | |
|---|---|---|
| Attribute | Five Attributes Sharing Non-Zero Components | Factor of Variation |
| purple | {gray, navy, brown, blue, } | color |
| pink | {beige, coral, brown, yellow, } | color |
| reptile | {plaid, aztec, chevron, ombre, horizontal-stripes} | texture/print |
| zebra-print | {aztec, chevron, snake-print, ombre, animal-print} | texture/print |
| floral-print | {paisley, gingham, aztec, snake-print, plaid} | texture/print |
| short | {tea-length, knee-length, , , } | dress length |
| viscose | {lyocell, cashmere, ramie, wool, acetate} | fabric |
| acetate | {ramie, lyocell, cashmere, wool, viscose} | fabric |
| rhinestones | {ruffles, , , chains, sequins} | accessories/detailing |
| scalloped | {crochet, metallic, fringe, , embroidered} | accessories/detailing |
| cocktail | {nightclub, homecoming, sports, , career} | style/occasion |
| wear-to-work | {career, office, , , athletic} | style/occasion |
| crew | {turtleneck, boatneck, peter-pan, , } | neckline/collar |
| scoop | {, square-neck, cowl, turtleneck, boatneck} | neckline/collar |
| spring | {summer, fall, winter, , } | season |

| Polyvore Outfits | | |
|---|---|---|
| Attribute | Five Attributes Sharing Non-Zero Components | Factor of Variation |
| brown | {khaki, camel, , tan, burgundy} | color |
| gingham | {check, plaid, polka, polka-dot, } | texture/print |
| crepe | {silk, satin, twill, acetate, silk-blend} | fabric |
| beaded | {braided, crochet, feather, leaf, flower} | accessories/detailing |
| chic | {retro, stylish, boho, bold, inspired} | style |
| high-neck | {neck, scoop, halter, hollow, neckline} | neckline/collar |
| Alexander-McQueen | {dsquared, Prada, Balenciaga, Balmain, Dolce-Gabbana} | brand |
| ankle-strap | {, slingback, buckle-fastening, strappy, straps} | ankle strap type |
| bag | {canvas, crossbody, handbag, tote, clutch} | bag type |
| covered-heel | {high-heel, heel-measures, slingback, stiletto, stiletto-heel} | heel type |
| bomber | {jacket, hoodie, biker, moto, letter} | jacket type |
| distressed | {ripped, boyfriend-jeans, denim, topshop-moto, washed} | jeans style |
| crop-top | {bra, cami, crop, tank, top} | tops |
| pants | {leggings, maxi-skirt, pant, trousers, } | bottoms |
| relaxed-fit | {relaxed, , loose-fit, rounded, size-small} | size/shape |
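The two tables above list, for each textual attribute, five attributes that share non-zero components with it in the learned representation. The exact ranking criterion is not stated in this section, so the following is a minimal sketch under the assumption that each attribute has a (sparse) vector in the disentangled space and that other attributes are ranked by how many non-zero dimensions they have in common with the query attribute. The function `top_sharing_attributes` and its tie-breaking rule are hypothetical.

```python
import numpy as np


def top_sharing_attributes(query: str, attr_vectors: dict[str, np.ndarray], k: int = 5) -> list[str]:
    """Rank other attributes by the number of non-zero components shared with `query`.

    Hypothetical criterion: overlap of supports (non-zero dimensions); ties are
    broken alphabetically so the result is deterministic.
    """
    q_support = attr_vectors[query] != 0
    overlaps = {
        name: int(np.count_nonzero(q_support & (vec != 0)))
        for name, vec in attr_vectors.items()
        if name != query
    }
    ranked = sorted(overlaps, key=lambda name: (-overlaps[name], name))
    return ranked[:k]
```

Under this reading, attributes that vary along the same factor (e.g., several colors) would be expected to activate overlapping dimensions, which is what the "Factor of Variation" column summarizes.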

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Laenen, K.; Moens, M.-F. Learning Explainable Disentangled Representations of E-Commerce Data by Aligning Their Visual and Textual Attributes. Computers 2022, 11, 182. https://doi.org/10.3390/computers11120182
