1. Introduction

Unexploded ordnance (UXO) is a global problem and an ongoing threat because it can remain active and potentially explosive decades after a conflict has ended, as reported by L. Safatly et al. [1]. The most significant problem posed by UXO is that it endangers civilian lives. Furthermore, the risk of explosion affects the lives of troops and explosives specialists, as well as the country’s development. The amount of unexploded ordnance grows as wars continue, as in the Russian–Ukrainian conflict. More than 54,000 items of unexploded ordnance were located and destroyed in the first month of that conflict [2], and Ukraine is one of the countries most heavily contaminated by unexploded ordnance. The Landmine and Cluster Munition Monitor published its 23rd annual report in 2021 [3], which stated that 2020 was the sixth consecutive year since 2015 to record a high number of casualties, owing to increased conflict and UXO contamination. The report also said that 80% of the casualties were civilians (4437), with children constituting at least half of the civilian casualties (1872), while the remaining 20% were military casualties (1105). Furthermore, according to the Geneva International Centre for Humanitarian Demining (GICHD), over 60 countries are contaminated with UXO [4].

Demining, or explosive removal, is the sole option for eliminating the risks of unexploded ordnance, despite the danger, time, and cost involved. Demining approaches are generally classified into three types: mechanical clearance, robotic clearance, and manual clearance, according to R. Achkar et al. [5]. Animal and machine clearance methods have been increasingly used in demining operations. However, most UXO and explosive remnants of war (ERW) are still removed using the manual clearance method, as reported by M. A. V. Habib [6]. Therefore, the intervention of specialist human operators in the removal of mines and explosives cannot be dispensed with, despite the threat of injury or loss of life. According to reports [3], over 250 human operators were killed or injured from 2017 to 2020, although the number may be higher, since the report’s coverage was limited to specific regions.

Recently, researchers have developed artificial intelligence-based strategies to assist specialists and human operators in detecting explosives. K. Tbarki et al. [7] used one-class classification to locate a buried object and determine whether it was UXO or clutter. Ground-penetrating radar (GPR) data were used as input to the classifier. The authors evaluated the proposed method through a comparison study with other methods. Similarly, K. Tbarki et al. [8] used a support vector machine (SVM) for landmine detection, again with GPR data as input. The authors measured the performance of their proposed method using several criteria, such as the receiver operating characteristic (ROC) and running time. The results indicated that the method was successful in landmine detection. F. Giovanneschi et al. [9] developed an online dictionary learning technique that forms the received GPR data into sparse representations (SR) to improve the feature extraction process for landmine detection. This method takes advantage of the fact that much of the training data are likely to be correlated. The authors conducted a comparison study with three online algorithms to evaluate the proposed method. F. Lombardi et al. [10] presented adaptable demining equipment based on GPR sensors and employed a convolutional neural network (CNN) to process the GPR data and detect buried UXO. The experimental results showed excellent accuracy in distinguishing between UXO and clutter objects.

Metal Mapper is another sensor used to detect buried objects. J. B. Sigman et al. [11] developed an automatic detection approach based on the Metal Mapper sensor coupled with a naïve Bayes classifier, a supervised learning technique. The proposed method can detect UXO automatically without user intervention, reducing cost and time. Furthermore, the minimum connected component (MCC) approach, based on the mathematical concept of graph theory, was presented by V. Ramasamy et al. [12] to detect buried UXO. The method explored the 2D image output from the GPR sensor and demonstrated its effectiveness in extracting features from the training datasets. Another form of hardware used is the handheld metal detector, designed to identify suspicious objects containing metallic components. L. Safatly et al. [1] proposed a landmine recognition and classification method in which a robotic system with a metal detector was designed to build the dataset. The authors used several machine learning algorithms, such as boosting, bagging, and CNN, to evaluate the system’s precision in discriminating between UXO and clutter objects. Computer vision has also been explored to detect and classify landmines. R. Achkar et al. [5,13] proposed a robot that detects landmines and identifies their type and model by employing a neural network. A. Lebbad et al. [14] suggested a computer vision system focused on landmine classification, developing an image-based technique that utilised neural networks trained on a self-built and limited dataset.

Most researchers focused on detecting the explosives or distinguishing between UXO and clutter, ignoring the identification of UXO and its properties. Identifying UXO is critical to assisting operators in the minefield in avoiding mistakes and thus saving their lives by providing valuable information regarding the recognised object. Therefore, developing an application capable of identifying explosives and providing the minefield operator with information about the recognised object is required.

Augmented reality (AR) is a promising technology for supporting users in performing complex tasks and activities due to its ability to incorporate digital information with users’ real-world perceptions, as shown by E. Marino et al. [15]. AR is becoming increasingly widespread in supporting operators in the workplace by reducing human mistakes and reducing reliance on operator memory, according to D. Ariansyah et al. [16]. AR applications have been developed and effectively deployed in a variety of fields, such as education, as in M. N. I. Opu et al. [17], heritage visualisation, as in G. Trichopoulos et al. [18], and training, as in H. Xue et al. [19].

Various AR applications were proven to be effective, valid, practical, and reliable approaches to risk identification, safety training, and inspection in the study by X. Li et al. [20]. AR can recognise unsafe settings and produce potential scenarios and visualisations using conventional safety training methods, as shown by K. Kim et al. [21]. AR is also employed as a platform for presenting immersive visualisations of fall-related risks on construction sites, as reported by R. Eiris Pereira et al. [22]. Furthermore, the capabilities of AR in presenting various visual information in real time have proven to be an advantage in emergency management and a better alternative than traditional methods, such as maps, according to Y. Zhu et al. [23].

In the literature, two AR-based studies are relevant to the UXO field. T. Maurer et al. [24] developed a prototype that integrates AR with embedded training capabilities into handheld detectors, improving the training process by using AR to show the operator the locations of a previously scanned area. Golden West Design Lab has implemented a marker-based AR system (AROLS) in Vietnam to train local minefield operators, using a set of specially designed markers to start the AR process, as reported by A. D. Tan [25]. Both studies provided an AR application for training minefield operators that was only intended to be used indoors. Furthermore, these applications were unable to identify UXO or offer relevant information, and therefore did not support UXO deminers during the clearance process in real time. To the best of the authors’ knowledge, there is no application designed to identify UXO types using the AR technique.

This research proposes a new augmented-reality-based approach for identifying UXO types in real time, enhancing field operators’ productivity, and assisting in the disposal process. The main contributions of this paper are as follows.

The proposed application presents a unique, innovative, and inexpensive UXO classification method through AR technology.

The proposed application provides information in real time related to the detected object.

It can reduce the risk imposed on deminers during UXO clearance operations by displaying visual information illustrating the type and the components of the UXO.

An evaluation study in a different setting and a questionnaire were conducted to measure the performance and usability of our proposed application.

This paper is organised as follows: Section 2 defines the research background. Section 3 presents a detailed description of the methodology. The experimental process is described in Section 4. Section 5 defines the evaluation of the application through a usability test. Finally, the conclusion and future work are presented in Section 6.

6. Conclusions and Future Work

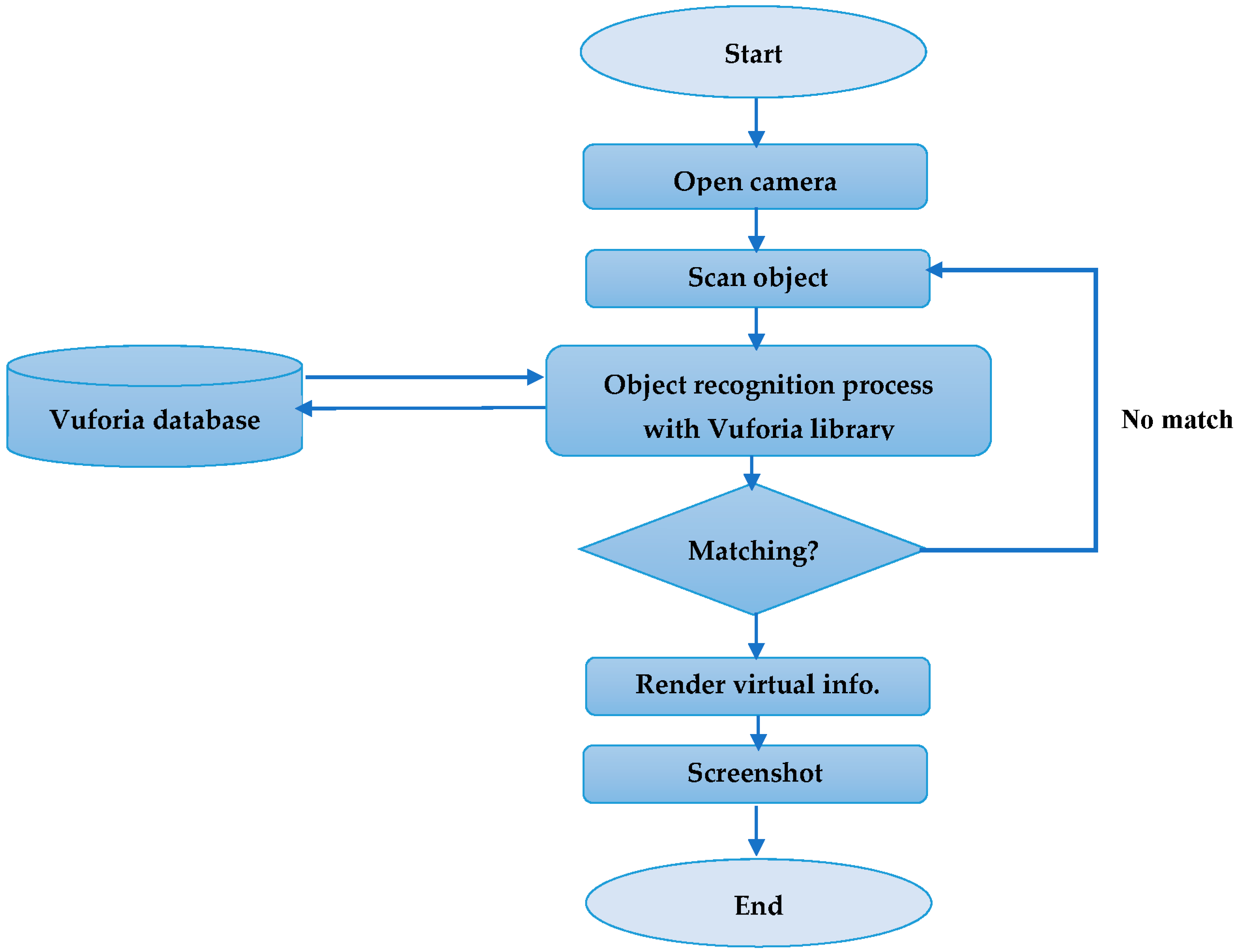

This study introduced an augmented reality (AR) mobile app designed to assist minefield operators in recognising and classifying UXO, and to provide information on the classified UXO and how to handle it. The AR application works on-site on a mobile device and was designed and implemented in Unity 3D with the Vuforia SDK. In addition, a 2D image visualisation is rendered that shows the components of the UXO in disassembled form. As working with real and active UXO samples was unfeasible, 3D printing technology was employed to print replicas of four UXO samples, namely VS-50, PMN-2, VS-MK, and 45 mm mortars, to build the database. These four UXO samples were selected based on their shapes and widespread use.

The application was tested to verify the soundness of its performance. A series of preliminary tests evaluated the application’s functionality with respect to three aspects: accuracy, lighting, and distance. The results revealed that the application performed successfully in excellent and moderate lighting at a distance of 10 to 35 cm. However, the AR application could not function in insufficient lighting. As for recognition accuracy, each UXO sample was scanned 20 times and the total recognition accuracy was calculated. The recognition rates of VS-50 and VS-MK were 100% and 95%, respectively, whereas those of PMN-2 and 45 mm mortars were 75% and 60%, respectively. The overall recognition success rate reached 82.5%. The difference in recognition rates is attributed to the disparity in the number of features of each object, which affected the accuracy of object recognition. Furthermore, usability testing based on the ISO 9241-11 standard was employed to evaluate the AR application. A questionnaire of 13 questions was completed by 20 deminers. The usability questionnaire was based on three elements: satisfaction, efficacy, and efficiency. The results showed that the application reduced the time required to classify the unexploded ordnance object and reduced the hazards imposed on the deminer during the demining process. Based on the survey results, we can report that AR technology is an excellent medium to aid minefield operators during the demining or recognition process, help reduce cognitive load, and minimise hazardous human errors that can put the operators in a life-threatening situation. Thus, the proposed AR application has demonstrated its capability to help deminers complete complex tasks.
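
The overall recognition rate reported above can be reproduced from the per-sample rates. The following is a minimal sketch, assuming that each reported percentage corresponds to a whole number of successful scans out of 20; the success counts are inferred from the percentages rather than stated in the text, and the names `SCANS_PER_SAMPLE`, `reported_rates`, `successes_from_rate`, and `overall_rate` are hypothetical and not part of the application.

```python
# Scans per sample and per-sample recognition rates, as reported in the text.
SCANS_PER_SAMPLE = 20
reported_rates = {
    "VS-50": 1.00,
    "VS-MK": 0.95,
    "PMN-2": 0.75,
    "45 mm mortar": 0.60,
}


def successes_from_rate(rate, scans=SCANS_PER_SAMPLE):
    """Infer the number of successful scans from a reported rate.

    Assumption: the rate is an exact fraction of `scans`, so rounding
    only removes floating-point noise.
    """
    return round(rate * scans)


def overall_rate(rates, scans=SCANS_PER_SAMPLE):
    """Pool all scans: total successes divided by total scans."""
    total_successes = sum(successes_from_rate(r, scans) for r in rates.values())
    total_scans = scans * len(rates)
    return total_successes / total_scans


if __name__ == "__main__":
    for name, rate in reported_rates.items():
        print(f"{name}: {successes_from_rate(rate)}/{SCANS_PER_SAMPLE}")
    print(f"Overall: {overall_rate(reported_rates):.1%}")
```

Because every sample was scanned the same number of times, the pooled rate equals the simple mean of the four per-sample rates; with unequal scan counts the two would differ, and the pooled form used here would be the appropriate one.
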

Future work will explore the prospects of implementing advanced computer vision algorithms, such as deep learning, to improve object recognition, and will test the application in real scenarios. In addition, a more complete UXO dataset is needed to increase the number of UXO objects to which such technology can be applied. Finally, utilising the Microsoft HoloLens as a display device is suggested to make the AR experience more comfortable.