BigDaM: Efficient Big Data Management and Interoperability Middleware for Seaports as Critical Infrastructures

Abstract

1. Introduction

- Volume, Velocity, Variety, Veracity, and Value: These are the well-known “5 Vs” of big data. Volume: big data sets are characterized by their sheer size, often comprising terabytes, petabytes, or even exabytes of data, and storing and processing such amounts can strain computing resources and require specialized infrastructure. Velocity: data are generated at unprecedented speed, especially in fields such as the IoT (Internet of Things) and social media, and real-time or near-real-time analysis of streaming data requires low-latency processing capabilities. Variety: big data comes in structured, semi-structured, and unstructured formats, including text, images, videos, and sensor data, and integrating and analyzing data from different sources and formats can be complex. Veracity: data quality and reliability can be questionable, inaccurate or incomplete data can lead to erroneous conclusions, and cleaning and validating data to ensure accuracy is a significant challenge. Value: despite the vast amount of data available, extracting valuable insights is not guaranteed; finding meaningful patterns, trends, and correlations often requires advanced analytics techniques, including machine learning and data mining.

- Data Management and Infrastructure Challenges: Systems must cope with exponential growth in data volume and scale efficiently. Managing computational resources, such as CPU, memory, and storage, is complex and requires careful optimization. Efficiently storing, indexing, and retrieving data in distributed storage systems, especially at large scale, presents significant challenges. Balancing the performance requirements of big data analysis against the associated infrastructure costs is an ongoing concern.

- Data Integration and Processing Challenges: Implementing and fine-tuning sophisticated algorithms and models for analyzing big data can be challenging, demanding expertise in machine learning, statistics, and data science. Combining data from diverse sources, each with its own structure and format, requires robust data integration tools and techniques. Gaining meaningful insights from large and complex datasets through data exploration and visualization is a complex task. Ensuring that different tools and systems used in big data analysis can work together seamlessly is a constant challenge.

- Data Security, Ethics, and Governance Challenges: Storing and processing sensitive data in big data systems can pose significant security and privacy risks, requiring measures to ensure data confidentiality, integrity, and regulatory compliance (e.g., GDPR). Addressing ethical and legal concerns surrounding data analysis, such as data privacy, algorithmic bias, and responsible data usage, is crucial. Establishing robust data governance policies and practices is essential for maintaining data quality, security, and compliance. Additionally, overcoming the shortage of skilled data scientists and analysts who can work effectively with big data remains a significant challenge.

- Environmental and Long-term Challenges: The energy consumption of large-scale data centers and computing resources used for big data analysis can have a significant environmental impact, making sustainability an important concern. Archiving and preserving big data for future analysis can be challenging, especially given rapidly evolving technologies and data formats, requiring long-term data preservation strategies and solutions.

2. CI Data Management Proposal

3. Related Work

3.1. EU Research Projects and Initiatives

3.2. Other Important Research Work

4. Business Model and Services

- The data consumer category may be interested in data and also have the ability to provide data. Cargo, transport, and logistics companies are highly interested in people’s and trucks’ mobility information. Road traffic optimization leads to more efficient cargo routing and, in turn, to increased revenue. Such data consumers may benefit not only from using data and services but also from offering their own data, such as transfer routes and container volumes, which would further improve traffic conditions. This is one case where the data consumer can also be considered a data producer.

- The research community and academia are in many cases the birthplace of new innovative services and algorithms. Their interest in large volumes of accurate, secured, and up-to-date data, which they need to train their algorithms and create AI-based services, makes them a significant data consumer category. The services produced from the scenario’s available datasets could in turn be offered as a service to a data-driven platform. This is another case where the data consumer can also be considered a data producer.

- Universities form a category that can be combined with the research communities in terms of the data made available for research by the aforementioned scenario. These institutions can benefit from using the data to increase and improve their research exposure, and they can additionally offer data in the form of data streams from their online courses in the aviation industry, logistics, etc. Once again, this is a case where the data consumer can also be considered a data producer.

- Startups and SMEs are considered to be among the leading innovators. They develop services by creating and using algorithms based on data availability. The number of startups and SMEs related to the aviation industry has increased rapidly over the last few years; therefore, feeding these companies with datasets will not only increase the revenue of the data providers but also create new innovative services based on these datasets. Moreover, startups and SMEs are in pursuit of new markets in which to sell their products and services. Once more, this is a case where the data consumer can also be considered a data producer.

- Public Authorities in any form always aim to improve the services offered to citizens and to improve their quality of life. To achieve this, they need the information provided by available data. In almost every subcategory of public authorities, the information most needed is the mobility of citizens, which gives a better view of the region and therefore supports setting proper policies. More specifically, they need data and analytics to define policies for cases of overtourism, to increase visitor numbers and maximize visitors’ experience, to improve services and facilities/public transportation, etc.

5. Data Model and Interoperability

5.1. Data Modeling Methodology

5.2. Enabling Data Interoperability via the Adoption of Common Data Models

5.3. Interoperability Layer

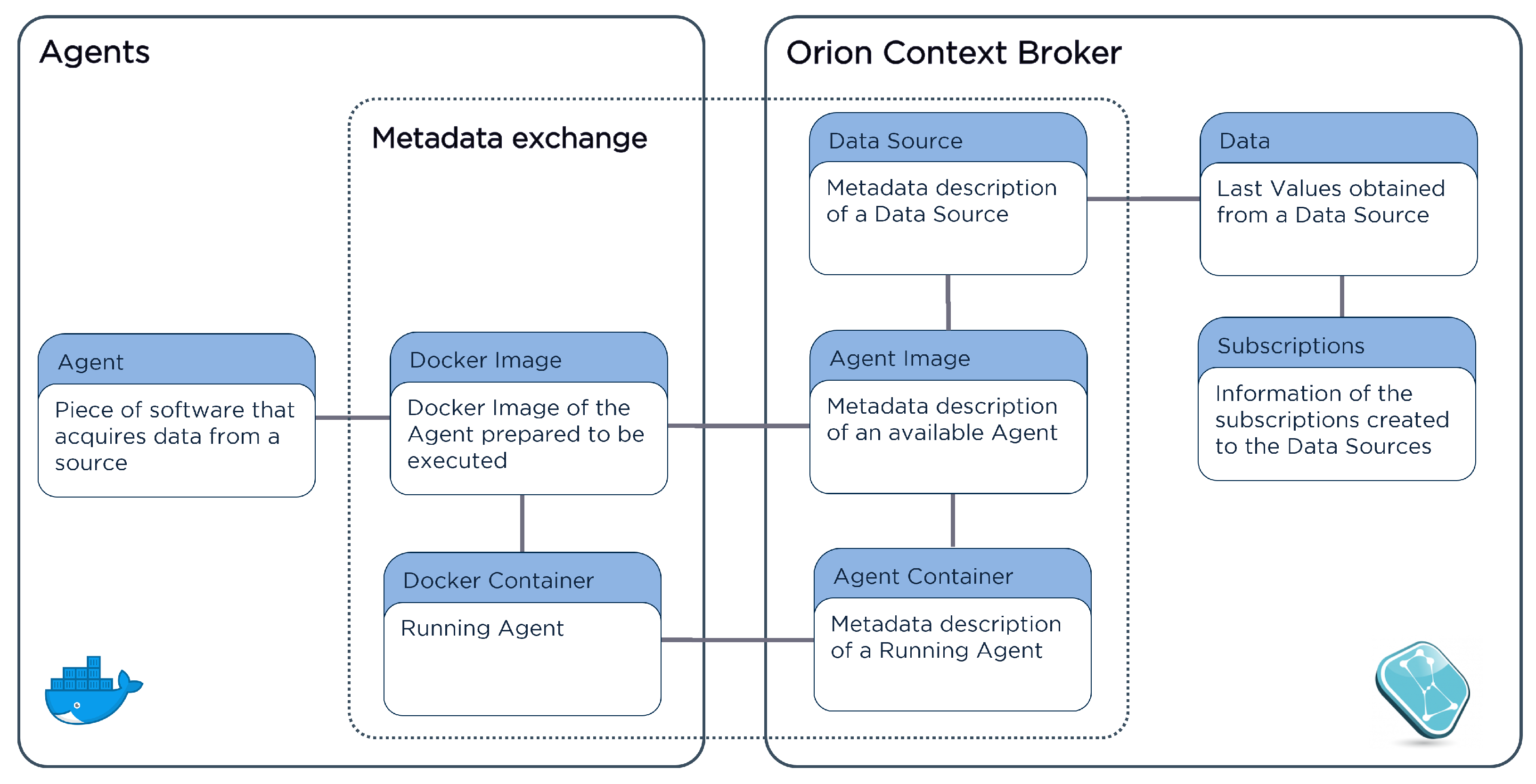

6. Data Processing and Virtualization

6.1. Pre-Processing and Filtering Tool

- Elimination of white spaces from all cells containing string-type data.

- Conversion of empty cells and instances with ‘NULL’ string values to ‘nan’ (not a number) values across all cells.

- Removal of records or rows from the dataset that lack datetime values or contain incorrect ones.

- Conversion of correct datetime values to the UTC format for consistency and standardization purposes.
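The four steps above can be sketched in a few lines. The following is a minimal illustration in plain Python, not the tool's actual implementation: it works on a list of dictionaries rather than on whatever data-frame engine the tool uses, it reads "elimination of white spaces" as trimming leading and trailing white space, it assumes datetimes arrive as ISO 8601 strings, and it treats timestamps without an offset as already being in UTC. The function names, column names, and sample rows are hypothetical.

```python
from datetime import datetime, timezone

NAN = float("nan")  # stand-in for the 'nan' marker mentioned in the list above


def clean_cell(value):
    """Trim string cells; map empty cells and the literal 'NULL' to nan."""
    if value is None:
        return NAN
    if isinstance(value, str):
        value = value.strip()
        if value == "" or value == "NULL":
            return NAN
    return value


def to_utc(value):
    """Parse an ISO 8601 string and convert it to UTC; None if unusable."""
    if not isinstance(value, str):
        return None  # missing (nan) or non-string datetime cell
    try:
        parsed = datetime.fromisoformat(value)
    except ValueError:
        return None  # incorrect datetime value
    if parsed.tzinfo is None:
        # Assumption: timestamps without an offset are already UTC.
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed.astimezone(timezone.utc)


def preprocess(rows, datetime_column):
    """Apply the four cleaning steps to a list of row dictionaries."""
    cleaned = []
    for row in rows:
        row = {key: clean_cell(cell) for key, cell in row.items()}
        timestamp = to_utc(row.get(datetime_column))
        if timestamp is None:
            continue  # drop rows that lack a valid datetime
        row[datetime_column] = timestamp.isoformat()
        cleaned.append(row)
    return cleaned


# Hypothetical sample data: only the first row has a usable datetime.
rows = [
    {"vessel": "  Aurora ", "berth": "NULL", "arrival": "2023-10-03T12:00:00+02:00"},
    {"vessel": "Boreas", "berth": "B2", "arrival": ""},
    {"vessel": "Castor", "berth": " ", "arrival": "not a date"},
]
result = preprocess(rows, "arrival")
print(result)
```

In this sketch the order of operations matters: cells are normalized first, so that an empty or ‘NULL’ datetime is already marked as missing when the row filter runs.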

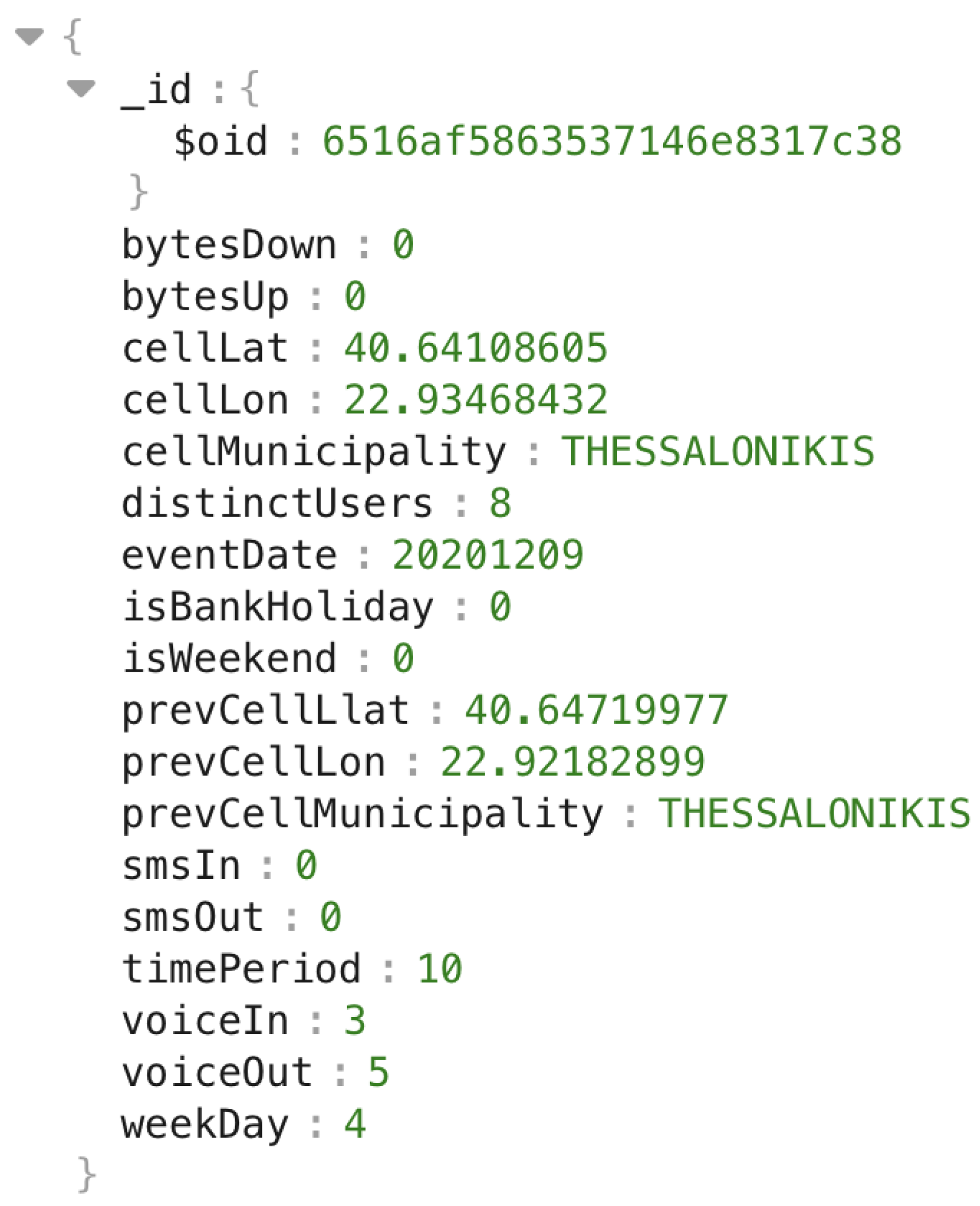

6.2. Virtual Data Repository

6.3. Virtual Data Container

- A JSON object, which includes:

- -

- A string field denoting the dataset’s name, and another one for the dataset’s ID.

- -

- A JSON array containing the rules as individual JSON objects.

- Each JSON object (rule) in the array contains:

- -

- A string field for its name.

- -

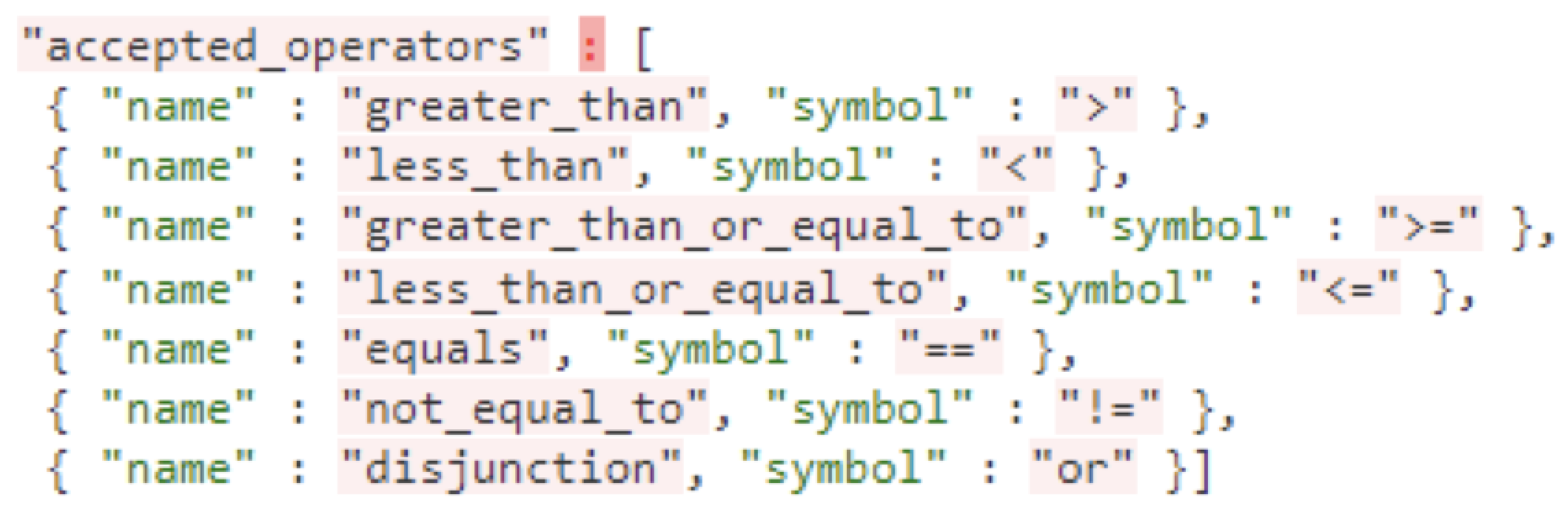

- Another JSON object representing the rule itself, comprising the “subject_column”, “operator”, and “object” fields.

- -

- If the “operator” is a disjunction (using the “or” expression), the “object” field should be a JSON array, containing two or more objects, each with single string “operator” and “object” fields.

- The primary objective of a rule is to apply filters to one subject (column) at a time and not to combine multiple subjects (columns). A rule that involves more than one column as its subject is better seen as a “pre-processing” step than as a direct “filtering” action. Likewise, modifying the content of specific rows/values or removing rows with specific value types is a lower-level operation than what the rule system is designed for.

- The Data Processing and Virtualization layer, being a generic framework, is intended to be applicable to various datasets. Therefore, creating rules that exceed the standard “subject-operator-object” architecture would contradict the layer’s generic nature. Implementing basic pre-processing steps for specific datasets is relatively simple and can be achieved in just a few lines of code. However, such specific pre-processing steps may not be suitable for other datasets, given the layer’s flexibility to handle diverse data types. Consequently, data scientists would have to resort to conditional solutions like using “if the incoming dataset is X, then apply these selected lines of code”. While this may be an easy solution, it compromises the fundamental generic nature of the Data Processing and Virtualization layer.
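To make the “subject-operator-object” structure described above concrete, here is a minimal sketch of a rules document and a generic evaluator. Only the field names “subject_column”, “operator”, and “object” and the “or” expression come from the text; the keys `dataset_name`, `dataset_id`, `rules`, `name`, and `rule`, the set of comparison operators, the sample columns, and the choice to keep only rows that satisfy every rule are assumptions made for illustration.

```python
import json
import operator

# Hypothetical operator set; the text does not enumerate the supported ones.
OPERATORS = {
    "==": operator.eq,
    "!=": operator.ne,
    "<": operator.lt,
    "<=": operator.le,
    ">": operator.gt,
    ">=": operator.ge,
}

# Example rules document with assumed key names (see the lead-in).
RULES_JSON = """
{
  "dataset_name": "vessel_calls",
  "dataset_id": "ds-001",
  "rules": [
    {
      "name": "plausible_speed",
      "rule": {"subject_column": "speed_knots", "operator": "<=", "object": "40"}
    },
    {
      "name": "known_status",
      "rule": {
        "subject_column": "status",
        "operator": "or",
        "object": [
          {"operator": "==", "object": "moored"},
          {"operator": "==", "object": "underway"}
        ]
      }
    }
  ]
}
"""


def matches(value, op_name, obj):
    """Evaluate `value <operator> object` for a single cell."""
    if op_name == "or":
        # Disjunction: `obj` is a list of {"operator", "object"} alternatives.
        return any(matches(value, alt["operator"], alt["object"]) for alt in obj)
    if isinstance(value, (int, float)) and not isinstance(value, bool):
        obj = float(obj)  # "object" is a string; coerce it for numeric cells
    return OPERATORS[op_name](value, obj)


def apply_rules(rows, spec):
    """Keep the rows that satisfy every rule; each rule reads one column."""
    kept = rows
    for entry in spec["rules"]:
        rule = entry["rule"]
        column = rule["subject_column"]
        kept = [
            row for row in kept
            if matches(row[column], rule["operator"], rule["object"])
        ]
    return kept


# Hypothetical sample rows.
rows = [
    {"speed_knots": 12.5, "status": "underway"},
    {"speed_knots": 88.0, "status": "underway"},
    {"speed_knots": 0.0, "status": "unknown"},
]
filtered = apply_rules(rows, json.loads(RULES_JSON))
print(filtered)
```

Because the evaluator only ever looks up one column per rule, it contains no dataset-specific branches, which is the property the two points above argue for.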

7. Performance Evaluation and Results

7.1. Interoperability

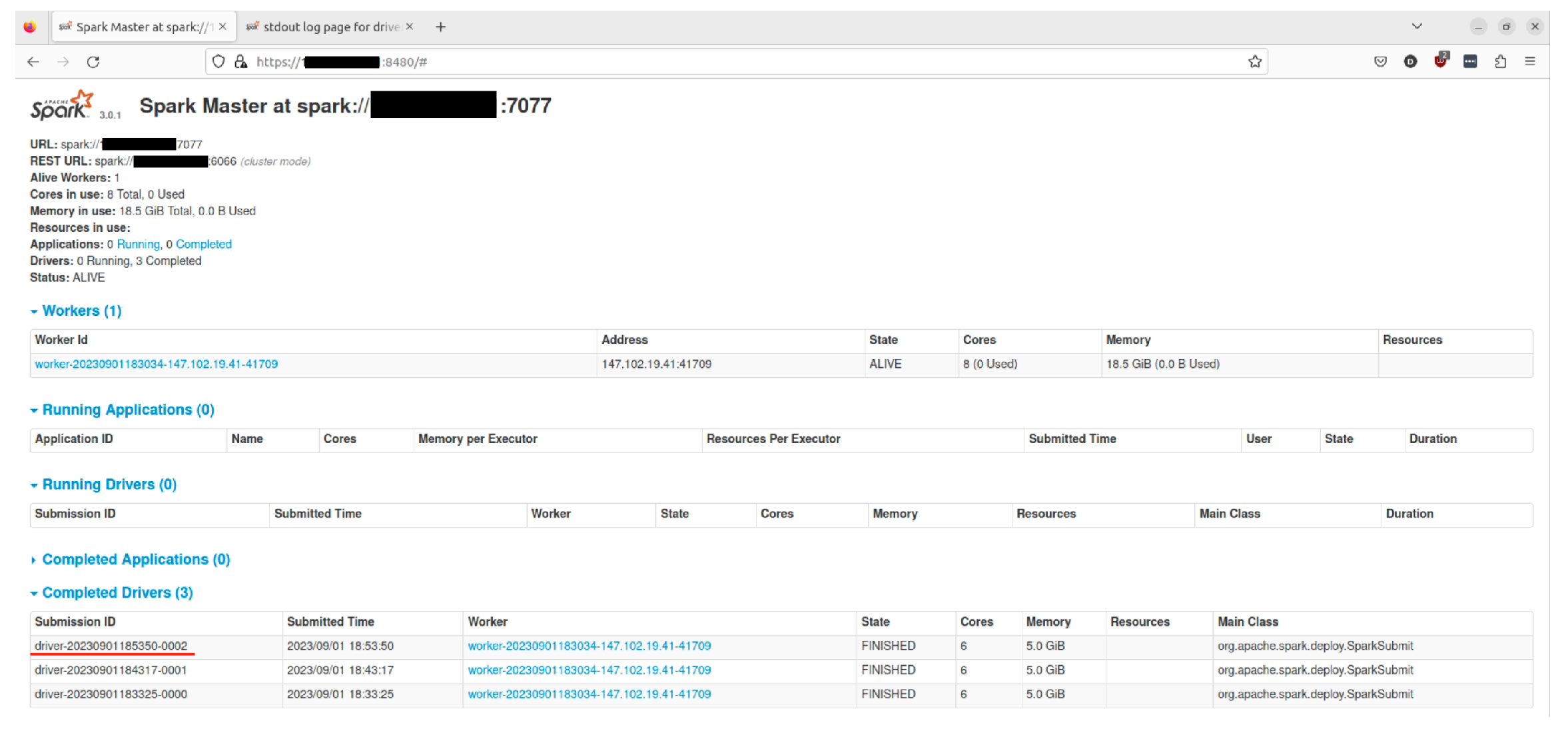

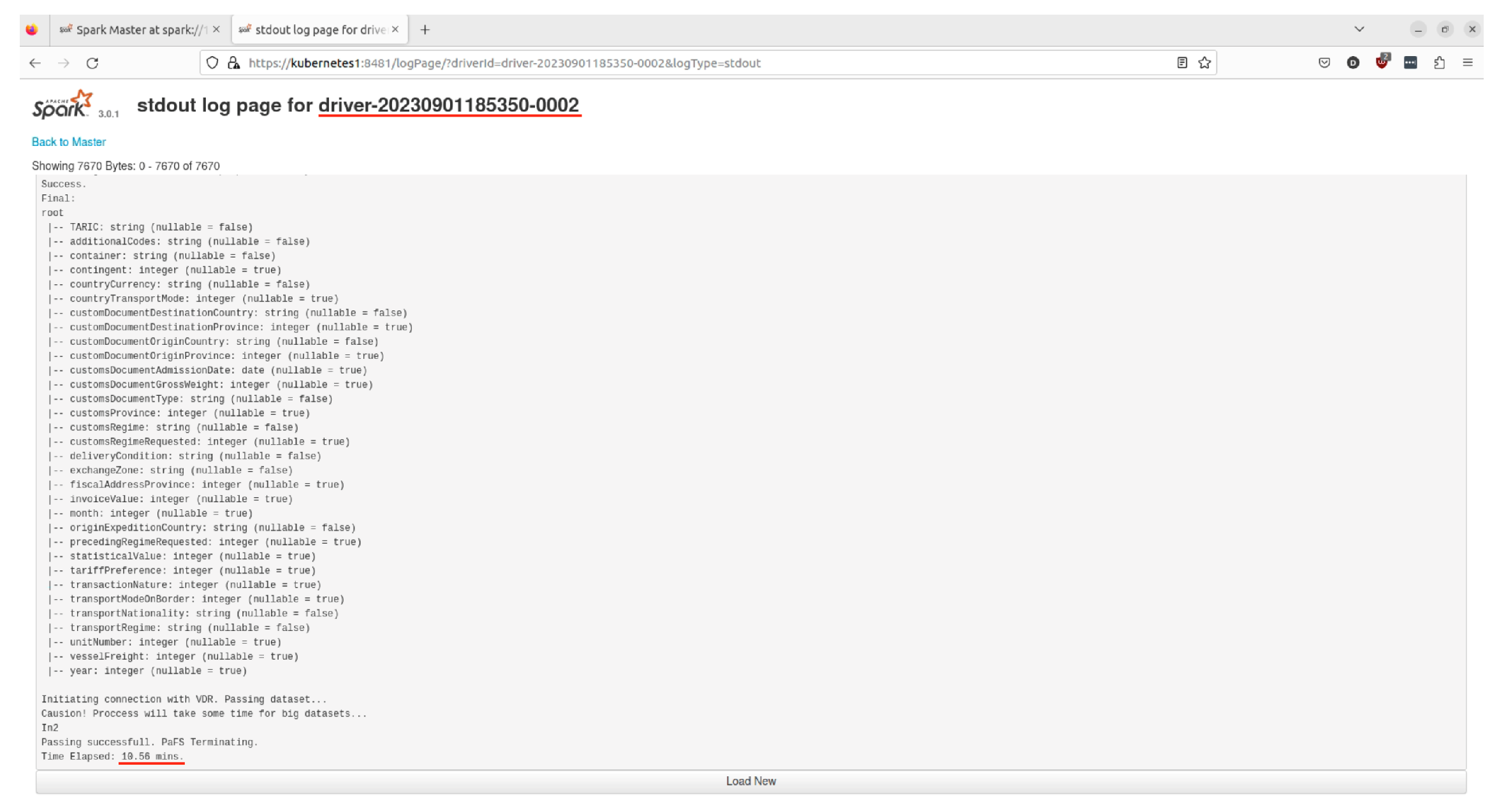

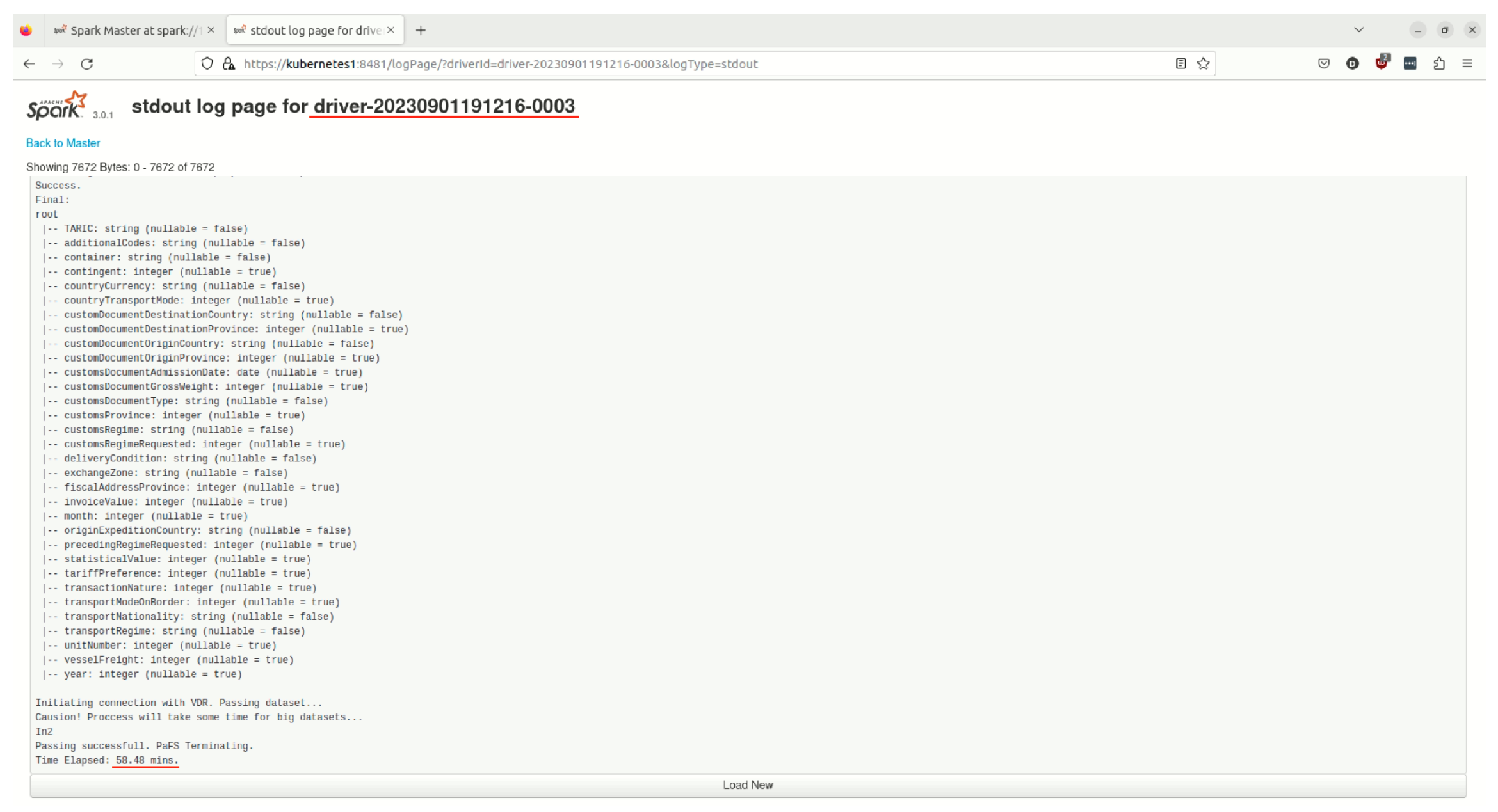

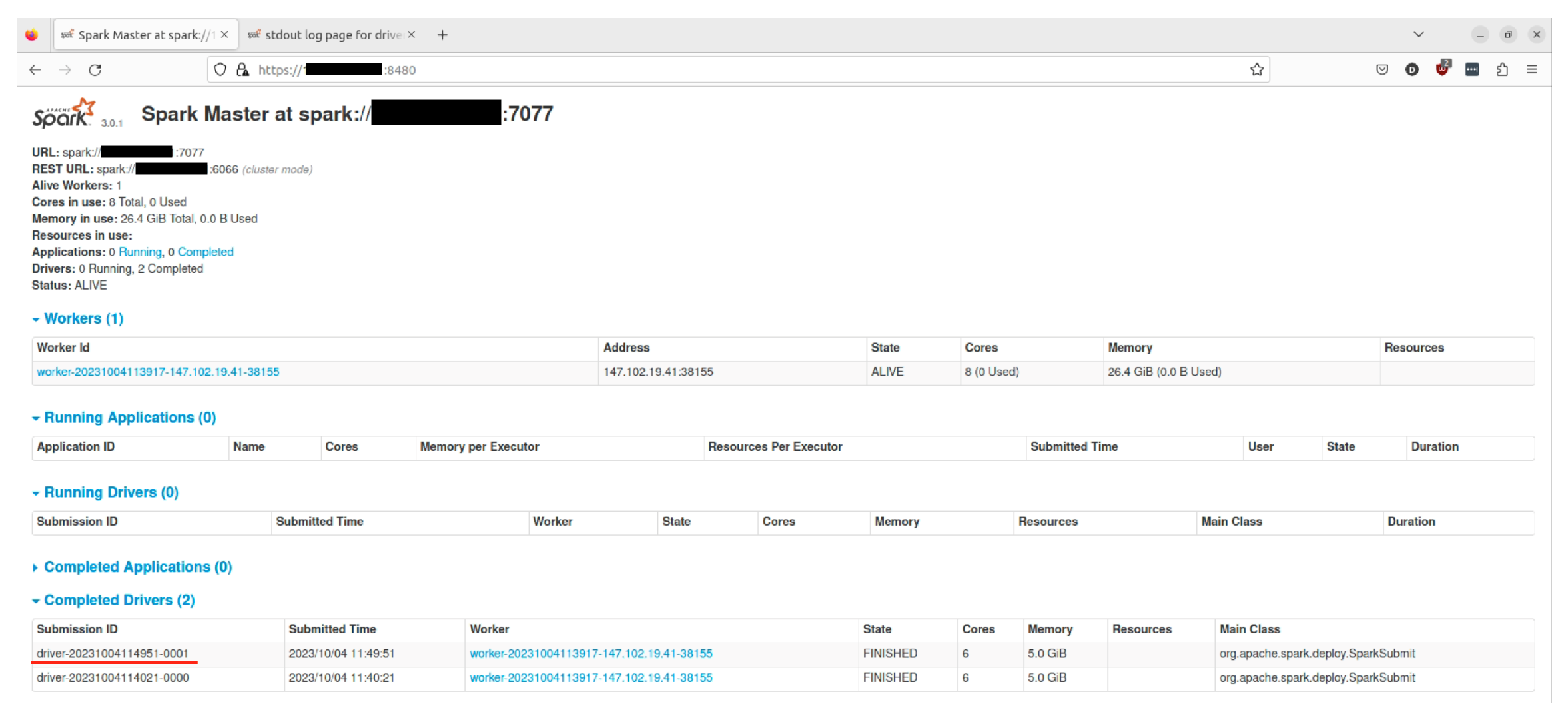

7.2. Data Processing and Virtualization

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | CPU Usage % | Mem. Usage | Mem. Usage % |
|---|---|---|---|
| Baseline | 0.15 | 79.78 MB | 0.27 |
| Load test | 14.28 | 85.41 MB | 0.28 |

| | 1% | 2.5% | 50% | 97.5% | Mean | Std. dev. | Min |
|---|---|---|---|---|---|---|---|
| Requests/s | 336 | 336 | 360 | 406 | 366.2 | 21.28 | 336 |
| Data/s | 8.94 MB | 9.84 MB | 9.58 MB | 10.8 MB | 9.74 MB | 565 kB | 8.93 MB |

| | 2.5% | 50% | 97.5% | 99% | Mean | Std. dev. | Max |
|---|---|---|---|---|---|---|---|
| Latency (ms) | 4 | 26 | 51 | 56 | 26.74 | 9.46 | 86 |
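The mean values in the throughput and latency tables above can be cross-checked with simple arithmetic. The sketch below assumes that both tables describe the same load-test run and that 1 MB = 1000 kB; neither assumption is stated in the tables. It derives the average payload per response and, via Little's law (requests in flight = throughput × latency), the average number of concurrent requests.

```python
# Mean values copied from the load-test tables.
mean_requests_per_s = 366.2
mean_data_mb_per_s = 9.74
mean_latency_ms = 26.74

# Average payload per response, assuming 1 MB = 1000 kB.
mean_payload_kb = mean_data_mb_per_s * 1000 / mean_requests_per_s

# Little's law: average requests in flight = throughput x time in system.
mean_in_flight = mean_requests_per_s * (mean_latency_ms / 1000)

print(f"{mean_payload_kb:.1f} kB per response")
print(f"{mean_in_flight:.1f} requests in flight")
```

Under these assumptions the figures work out to roughly 26.6 kB per response and about ten requests in flight on average, which would be consistent with a load generator holding around ten concurrent connections; the concurrency level itself is not given in the tables.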


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nikolakopoulos, A.; Julian Segui, M.; Pellicer, A.B.; Kefalogiannis, M.; Gizelis, C.-A.; Marinakis, A.; Nestorakis, K.; Varvarigou, T. BigDaM: Efficient Big Data Management and Interoperability Middleware for Seaports as Critical Infrastructures. Computers 2023, 12, 218. https://doi.org/10.3390/computers12110218
