Revealing People’s Sentiment in Natural Italian Language Sentences

Abstract

1. Introduction

2. Related Works

3. Proposed Approach

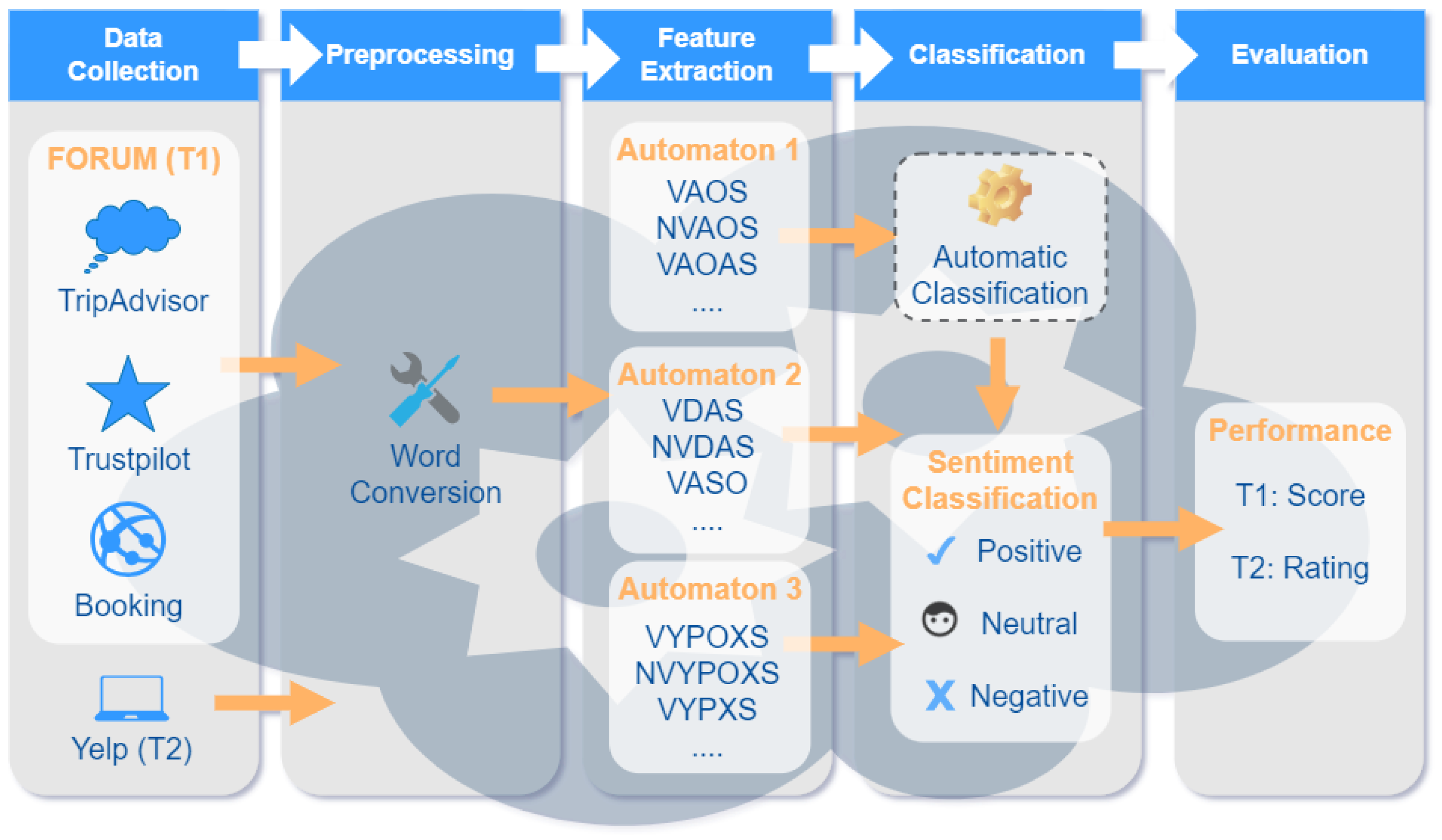

3.1. Overview

3.2. Preliminary Lexicon Analysis

- Verbs (V): a subset of the Italian verbs, comprising those most commonly used to express an opinion. The subset includes seven verbs: essere (be) and avere (have), together with their synonyms trovare (find), sembrare (seem, look like), restare (stay), vedere (see), and rimanere (remain, stay).

- Articles (A): the entire list of articles of the Italian language, e.g., il, lo (the) and un (a). The list consists of nine articles.

- Sentiments (S): a category for words related to emotions whose meaning is not affected by the context (except when negations are used), e.g., bello (beautiful) and brutto (ugly). This list consists of two subsets: one expressing positive sentiments, e.g., carino (nice), buono (good, delicious), and dolce (sweet); and one expressing negative sentiments, e.g., cattivo (bad) and pesante (heavy). Each subset guides the automaton’s transitions towards one of the two corresponding final states (for positive or negative orientation, respectively). The list of positive sentiments consists of 111 words, whereas the list of negative sentiments consists of 43 words. Table 1 shows a partial list of the words expressing a sentiment (positive or negative) that we used in our approach.

- Negations (N): contains only the word non (not). Negation is tricky because it can reverse the orientation of a sentence, e.g., ‘Il mio nuovo computer è potente’ (my new computer is powerful) versus ‘Il mio nuovo computer non è potente’ (my new computer is not powerful). The proposed automata therefore process negation so that a negation combined with a positive sentiment leads to the final state expressing a negative orientation, and a negation combined with a negative sentiment leads to the final state expressing a positive orientation.

- Adverbs (D): a list of adverbs such as veramente (really), poco (little), and molto (a lot). The list is made up of two subsets: positive adverbs, e.g., molto (very) or davvero (really), and negative adverbs, e.g., poco (little) or purtroppo (unfortunately). As with the two lists of sentiment words, the two lists of adverbs guide the automaton towards one of the two final states expressing positive or negative orientation. The list of positive adverbs consists of 104 words, whereas the list of negative adverbs consists of 16 words. Table 2 shows a partial list of adverbs and a partial list of prepositions.

- Pronoun (Y): only contains the word uno (one).

- Superlative (X): only contains the word più (most).

- Prepositions (P): a list of prepositions, such as da (from), dentro (into), and in (in), including forms combined with articles, e.g., dagli (from the). The list comprises 42 prepositions (Table 2 shows a partial sample).

- Other (O): words not belonging to any of the above lists (no list needs to be compiled for this category).
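The mapping from words to category letters described above can be sketched as follows. This is only an illustration: the word sets are tiny samples rather than the full lists, the inflected verb forms (è, ha) and the elided article l’ are assumptions added so that the example sentences can be processed, and the names `LEXICON`, `tokenize`, `categorize`, and `category_sequence` are hypothetical.

```python
import re

# Tiny illustrative samples; the lists described in the text are much larger.
# Inflected verb forms and the elided article "l'" are assumptions.
LEXICON = {
    "N": {"non"},
    "V": {"essere", "avere", "è", "ha", "sembra"},
    "A": {"il", "lo", "la", "l'", "un", "una"},
    "S": {"bello", "potente", "gradevole", "affascinante", "brutto", "pesante"},
    "D": {"molto", "davvero", "veramente", "poco", "purtroppo"},
    "Y": {"uno"},
    "X": {"più"},
    "P": {"da", "di", "in", "dentro", "dagli"},
}


def tokenize(sentence):
    """Lower-case the sentence and split it into words, keeping elisions
    such as "l'" as separate tokens."""
    text = sentence.lower().replace("’", "'")
    return re.findall(r"\w+'?", text)


def categorize(word):
    """Return the category letter of a word, or "O" if it is in no list."""
    for letter, words in LEXICON.items():
        if word in words:
            return letter
    return "O"


def category_sequence(sentence):
    """Replace every word of the sentence with its category letter."""
    return "".join(categorize(word) for word in tokenize(sentence))


print(category_sequence("L’hotel non ha un aspetto gradevole"))   # AONVAOS
print(category_sequence("Il mio nuovo computer non è potente"))   # AOOONVS
```

Checking the lists in a fixed order is a design choice of this sketch: a word appearing in more than one list would receive the letter of the first list that contains it.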

3.3. Performing Sentence Analysis

3.3.1. Automaton 1

3.3.2. Automaton 2

3.3.3. Automaton 3

3.3.4. Sequence Generation from Automata

4. Experiments and Results

4.1. Dataset Selection

- Collection T1: a set of 921 sentences randomly extracted from various web forums, such as Tripadvisor (tripadvisor.com, last accessed on 4 October 2023). Each sentence was then manually labelled with a score identifying the expressed sentiment: a negative score for a negative sentiment, the score 0 for a neutral sentiment, and a positive score for a positive sentiment. Table 5 shows some examples of the analysed sentences, the sequences of word categories automatically determined, the automaton (1 to 3) that reached the final state, and the final state of the automaton (q6 or q7 for Automaton 1, q4 or q7 for Automaton 2, or q8 for Automaton 3).

- Collection T2: a set of 780 sentences extracted from the Yelp platform. We collected the data using Yelp’s API (https://www.yelp.com/developers, last accessed on 4 October 2023). Yelp is a review forum that allows users to post comments on local restaurants and to attach a rating from 1 to 5 stars representing their satisfaction; a low number of stars corresponds to a poor rating, and vice versa. We assume that, for each user comment, the number of stars is a measure of the sentiment expressed in the review: fewer than three stars is considered a negative sentiment, exactly three stars a neutral sentiment, and more than three stars a positive sentiment. Table 6 shows some analysed sentences, the number of stars, and the resulting score.
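The star-based labelling rule for Collection T2 can be written down directly. The sketch below returns a textual label rather than a numeric score, since the numeric values are not restated here; the function name `stars_to_label` is hypothetical.

```python
def stars_to_label(stars: int) -> str:
    """Map a Yelp star rating (1 to 5) to the sentiment assumed for the review."""
    if not 1 <= stars <= 5:
        raise ValueError("a star rating must be between 1 and 5")
    if stars < 3:
        return "negative"
    if stars == 3:
        return "neutral"
    return "positive"


print([stars_to_label(s) for s in range(1, 6)])
# ['negative', 'negative', 'neutral', 'positive', 'positive']
```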

4.2. Serendio Algorithm

4.3. Results and Comparison

5. Parallelization Approach

Algorithm 1. Algorithm for finding the sentiment (positive or negative) in a text.

- splitWords(): given a text, splits it into its constituent words and associates each word with its position in the sentence;

- concatenate(): joins the category letters together in the order of the corresponding words’ positions in the original sentence;

- checkPattern(): takes a sequence of category identifiers and computes a score according to the automaton recognising it (as discussed in Section 3).
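How the three functions could fit together is sketched below. This is not Algorithm 1 itself: the per-word tagging step, the mini-lexicon, the use of a thread pool, and the score values +1, -1, and 0 are all assumptions made for illustration, and `check_pattern` is a placeholder that recognises only two sequences instead of running the automata. The point it shows is that, because each word carries its position, words can be tagged independently and in any order before being reassembled.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical mini-lexicon (word -> category letter); any other word is "O".
SAMPLE_LEXICON = {
    "non": "N", "ha": "V", "è": "V",
    "il": "A", "un": "A",
    "gradevole": "S", "bello": "S",
}


def split_words(text):
    """Split a text into (position, word) pairs."""
    return list(enumerate(text.lower().split()))


def categorise(item):
    """Tag one (position, word) pair independently of all the others."""
    position, word = item
    return position, SAMPLE_LEXICON.get(word, "O")


def concatenate(tagged):
    """Join the category letters in the order of the original positions."""
    return "".join(letter for _, letter in sorted(tagged))


def check_pattern(sequence):
    """Placeholder scorer with assumed score values +1, -1 and 0."""
    if "NVAOS" in sequence:  # negated; assumes the S word is positive
        return -1
    if "VAOS" in sequence:   # assumes the S word is positive
        return 1
    return 0


def analyse(text):
    """Tag the words concurrently, then rebuild and score the sequence."""
    with ThreadPoolExecutor() as pool:
        tagged = list(pool.map(categorise, split_words(text)))
    return check_pattern(concatenate(tagged))


print(analyse("il ristorante ha un aspetto gradevole"))      # 1
print(analyse("il ristorante non ha un aspetto gradevole"))  # -1
```

Sorting by position inside `concatenate` means the result does not depend on the order in which the tagged pairs arrive.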

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Positive Sentiment | Negative Sentiment |
|---|---|
| affascinante (charming) | brutto (ugly) |
| ampio (ample) | cattivo (bad, nasty) |
| attraente (attractive) | guerra (war) |
| bello (beautiful) | inquietante (disturbing) |
| buono (good, delicious) | odioso (hateful) |
| carino (pretty) | pesante (heavy) |
| dolce (sweet) | problema (problem) |
| gentile (dear, gentle) | scarso (poor) |
| gradevole (nice) | sporco (dirty) |
| grazioso (pretty) | |
| migliore (best) | |
| potente (powerful) | |
| pulito (clean) | |
| resistente (durable) | |

| Positive Adverb | Negative Adverb | Preposition |
|---|---|---|
| esatto (exact) | per nulla (in no way) | ad (for) |
| pure (also) | niente (anything) | in (in) |
| certamente (sure) | quasi (almost) | dalla (from) |
| assolutamente (absolutely) | purtroppo (unfortunately) | alla (at the) |
| semplicemente (simply) | neanche (neither) | agli (at the) |
| molto (very) | nemmeno (neither) | sulle (on) |
| sempre (always) | appena (just) | nello (in) |
| davvero (really) | | |
| veramente (really) | | |

| Sentence | Category Sequence |
|---|---|
| Il nuovo iPhone è il telefono più bello di quest’anno (The new iPhone is the most beautiful phone this year) | AOOVAOXSPOO |
| La villa di Catania è molto affascinante (The villa in Catania is very charming) | AOPOVDS |
| L’hotel non ha un aspetto gradevole (The hotel does not look nice) | AONVAOS |

| Automaton 1 | Automaton 2 | Automaton 3 |
|---|---|---|
| VAOS | VDAS | VYPOXS |
| NVAOS | NVDAS | NVYPOXS |
| VAOAS | VASO | VYPXS |
| NVAOAS | NVASO | NVYPXS |
| VDAOS | VDASO | VPAXS |
| NVDAOS | NVDASO | NVPAXS |
| VAODS | VDS | VAXS |
| NVAODS | NVDS | NVAXS |
| VAOPOAS | VDDS | VDAXS |
| NVAOPOAS | VDADS | NVDAXS |
| VAOPOS | | |
| NVAOPOS | | |
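One way to use the sequences listed above is to look for them inside the category sequence of a whole sentence. The sketch below does this by plain substring search and keeps the longest hit; both choices are assumptions of this example (the automata themselves process the input state by state), and the names `AUTOMATON_SEQUENCES` and `recognise` are hypothetical.

```python
# Sequences copied from the table above, grouped by automaton.
AUTOMATON_SEQUENCES = {
    1: ["VAOS", "NVAOS", "VAOAS", "NVAOAS", "VDAOS", "NVDAOS",
        "VAODS", "NVAODS", "VAOPOAS", "NVAOPOAS", "VAOPOS", "NVAOPOS"],
    2: ["VDAS", "NVDAS", "VASO", "NVASO", "VDASO", "NVDASO",
        "VDS", "NVDS", "VDDS", "VDADS"],
    3: ["VYPOXS", "NVYPOXS", "VYPXS", "NVYPXS", "VPAXS", "NVPAXS",
        "VAXS", "NVAXS", "VDAXS", "NVDAXS"],
}


def recognise(sequence):
    """Return (automaton, matched) for the longest listed sequence that
    occurs in `sequence`, or None if no listed sequence occurs.
    On ties, the first one found is kept."""
    best = None
    for automaton, listed in AUTOMATON_SEQUENCES.items():
        for candidate in listed:
            if candidate in sequence and (best is None or len(candidate) > len(best[1])):
                best = (automaton, candidate)
    return best


print(recognise("AONVAOS"))    # (1, 'NVAOS')
print(recognise("AOVASO"))     # (2, 'VASO')
print(recognise("AODOOVAXS"))  # (3, 'VAXS')
print(recognise("OOO"))        # None
```

Preferring the longest match keeps a leading N attached to its sequence, so that NVAOS is reported rather than the VAOS it contains.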

| Sentence | Pattern | Result (automaton, final state) |
|---|---|---|
| Huawei P50 è stato lo smartphone migliore del 2021 (Huawei P50 was the best smartphone for 2021) | OOVAOS+PO | positive (1, q6) |
| Lo Zenfone 9 ha un prezzo molto competitivo (Zenfone 9 is inexpensive) | AOOVAOD+S+ | positive (1, q6) |
| Lo Zenfone 9 ha un prezzo poco competitivo (Zenfone 9 is expensive) | AOOVAOD-S+ | negative (1, q7) |
| OnePlus 8 ha una versione di Android molto leggera (OnePlus 8 has a very light Android version) | OVAOPOD+S+ | positive (1, q6) |
| OnePlus 8 ha una versione di Android molto pesante (OnePlus 8 has a very heavy Android version) | OVAOPOD+S- | negative (1, q7) |
| L’hotel Verdi non ha un aspetto grazioso (Verdi hotel does not look pretty) | AOONVAOS+ | negative (1, q7) |
| L’iPhone è un bel telefono (iPhone is a beautiful phone) | AOVASO | positive (2, q4) |
| La telecamera dell’iPhone non è per nulla competitiva (iPhone camera is by no means competitive) | AODONVD-D-S+ | negative (2, q7) |
| L’iPhone è un ottimo dispositivo sia dal punto di vista hardware che software (iPhone is an excellent device both from a hardware and software point of view) | AOVASO | positive (2, q4) |
| L’iPhone è davvero molto bello (iPhone is really beautiful) | AOVD+D+S+ | positive (2, q4) |
| La camera dell’hotel Verdi è la più bella del mondo (Verdi hotel’s room is the most beautiful in the world) | AODOOVAXS | positive (3, q8) |
| L’iPhone è uno dei più potenti (iPhone is one of the most powerful) | AOVYPXS | positive (3, q8) |

| Post | Stars | Score |
|---|---|---|
| Un locale davvero bello. Perfetto per le cenette romantiche. L’ambiente è raffinato, ma i proprietari sanno metterti a tuo agio. Cibo ottimo e presentato in. (A really nice place. Perfect for romantic dinners. The environment is refined, but the owners know how to put you at ease. Great food and presented in.) | 4 | |
| Esperienza pessima. Scegliamo questo ristorante per la notte di capodanno. Prezzo: 80 a persona, una cifra non bassa e che aveva creato in noi aspettative. (Bad experience. We choose this restaurant for New Year’s Eve. Price: 80 per person, a non-low figure that had created expectations in us.) | 1 | |

| Source | Algorithm | TP | FP | FN | Precision | Recall | F-Score |
|---|---|---|---|---|---|---|---|
| T1 Forum | Our approach | 628 | 16 | 182 | 0.975 | 0.775 | 0.864 |
| T1 Forum | Serendio | 302 | 230 | 26 | 0.568 | 0.921 | 0.702 |
| T2 Yelp | Our approach | 405 | 90 | 216 | 0.818 | 0.652 | 0.726 |
| T2 Yelp | Serendio | 402 | 92 | 217 | 0.813 | 0.649 | 0.722 |
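The precision, recall, and F-score columns follow from the TP, FP, and FN counts through the standard definitions. The sketch below recomputes them for the two T1 rows; the function name `precision_recall_f` is hypothetical, and the F-score is taken to be the harmonic mean of precision and recall, which is consistent with the reported figures.

```python
def precision_recall_f(tp, fp, fn):
    """Compute precision, recall and F-score from raw counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f_score = 2 * precision * recall / (precision + recall)
    return precision, recall, f_score


# T1 Forum, our approach: TP = 628, FP = 16, FN = 182
print([round(v, 3) for v in precision_recall_f(628, 16, 182)])  # [0.975, 0.775, 0.864]

# T1 Forum, Serendio: TP = 302, FP = 230, FN = 26
print([round(v, 3) for v in precision_recall_f(302, 230, 26)])  # [0.568, 0.921, 0.702]
```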

| Orientation | Total | Unclassified | TP | FP | FN | Precision | Recall | F-Score |
|---|---|---|---|---|---|---|---|---|
| Positive | 504 | 53 | 292 | 0 | 159 | 1.000 | 0.647 | 0.786 |
| Neutral | 324 | 38 | 276 | 10 | 0 | 0.965 | 1.000 | 0.982 |
| Negative | 93 | 4 | 60 | 6 | 23 | 0.909 | 0.723 | 0.805 |
| T1 | 921 | 95 | 628 | 16 | 182 | 0.975 | 0.775 | 0.864 |

| No. of Sentences (Millions) | Sequential Time (s) | Parallel Time (s) | Speed-Up |
|---|---|---|---|
| 11 | 280 | 150 | 1.866 |
| 16 | 405 | 233 | 1.740 |
| 19 | 478 | 270 | 1.770 |
| 22 | 657 | 350 | 1.878 |
| 26 | 836 | 393 | 2.127 |
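The speed-up column is the ratio between sequential and parallel execution time. As a quick check under that assumption, the sketch below recomputes the ratio from the times listed above; since those times are rounded to whole seconds, the recomputed values agree with the reported ones only to within a few thousandths. The names `speed_up` and `ROWS` are hypothetical.

```python
def speed_up(sequential_s, parallel_s):
    """Speed-up as the ratio of sequential to parallel execution time."""
    return sequential_s / parallel_s


# (sequential time, parallel time, reported speed-up), copied from the table
ROWS = [
    (280, 150, 1.866),
    (405, 233, 1.740),
    (478, 270, 1.770),
    (657, 350, 1.878),
    (836, 393, 2.127),
]

for sequential, parallel, reported in ROWS:
    computed = speed_up(sequential, parallel)
    print(f"{sequential:>4} s / {parallel:>3} s = {computed:.3f} (reported {reported:.3f})")
```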

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Calvagna, A.; Tramontana, E.; Verga, G. Revealing People’s Sentiment in Natural Italian Language Sentences. Computers 2023, 12, 241. https://doi.org/10.3390/computers12120241
