The Applicability of Automated Testing Frameworks for Mobile Application Testing: A Systematic Literature Review

Abstract

1. Introduction

- Analyzing the major test concerns that arise in the mobile test automation process and providing a taxonomy of mobile automation testing research to categorize and summarize the research works;

- Examining the existing literature on test automation frameworks for mobile application testing and identifying the test challenges;

- Proposing an improved mobile automation testing framework architecture that tackles the test challenges;

- Eliciting directions for furthering the research in mobile test automation.

2. Preliminary Research on Mobile Test Automation

2.1. Mobile Automation Testing

2.2. Software Quality for Mobile Apps

- Usability: It refers to the extent to which a product can be used to achieve a specified goal. It reflects how understandable, easy to learn, and easy to use the application is, how low its error rate is, and how satisfied users are with it overall. These are generic usability factors that any mobile app should fulfill [13].

- Maintainability: This is a characteristic that determines the probability that a failed application is restored to its normal state within a given timeframe. A maintainable app is reasonably easy to scale and correct.

- Reliability: It indicates the application’s ability to function in given environmental conditions for a particular amount of time.

- Security: It reflects the application’s ability to protect itself against attacks and covers the techniques used to ensure the integrity and confidentiality of its data.

- Efficiency: An efficient mobile app consumes fewer resources: it loads quickly and uses less power and memory while the application is in use.

- Compatibility: A compatible mobile app is one that properly works across different mobile devices, OS platforms, and browsers.

- Functionality: This refers to the application’s ability to perform a task that meets the user’s expectations.

2.3. Test Automation Frameworks

2.3.1. Linear Automation Framework

2.3.2. Modular-Based Automation Framework

2.3.3. Library Architecture Testing Framework

2.3.4. Data-Driven Testing Framework

2.3.5. Keyword-Driven Testing Framework

2.3.6. Hybrid Test Automation Framework

3. Related Work

4. Methodology of This Review

4.1. Definition of Research Scope

I. Research Objectives

II. Research Questions

4.2. The Search String

4.3. Inclusion and Exclusion Criteria

- E1, E2, and E3 are applied in turn to the search result to exclude irrelevant articles.

- I1 and I2 are applied to each remaining study to include the research papers that satisfy the criteria.

- I3 is applied to duplicate articles to include mature studies.

- I4 is applied to include the most recent articles.
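The order in which the criteria are applied amounts to a staged filter, sketched below in Python. The sketch illustrates only the sequencing. The actual wording of E1 to E3 and I1 to I4 is not reproduced in this section, so the `Study` fields, the `maturity` score used for I3, the reading of I4 as a publication-year cutoff (`min_year`), and the sample predicates are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Study:
    title: str
    year: int
    venue: str
    duplicate_key: str  # hypothetical: studies sharing a key report the same work
    maturity: int       # hypothetical score, e.g. journal > conference > workshop


Predicate = Callable[[Study], bool]


def screen(studies: Iterable[Study],
           exclusion: list[Predicate],  # placeholders for E1, E2, E3
           inclusion: list[Predicate],  # placeholders for I1, I2
           min_year: int) -> list[Study]:  # assumed reading of I4
    pool = list(studies)
    # E1, E2, E3 applied in turn: each pass drops the studies it matches.
    for is_excluded in exclusion:
        pool = [s for s in pool if not is_excluded(s)]
    # I1 and I2: keep only studies that satisfy every inclusion criterion.
    pool = [s for s in pool if all(meets(s) for meets in inclusion)]
    # I3: among duplicates, keep the most mature version of each study.
    best: dict[str, Study] = {}
    for s in pool:
        kept = best.get(s.duplicate_key)
        if kept is None or s.maturity > kept.maturity:
            best[s.duplicate_key] = s
    # I4 (assumed to be a publication-year cutoff): newest studies first.
    recent = [s for s in best.values() if s.year >= min_year]
    return sorted(recent, key=lambda s: s.year, reverse=True)


studies = [
    Study("KDT for Android (workshop)", 2012, "workshop", "kdt-android", 1),
    Study("KDT for Android (journal)", 2013, "journal", "kdt-android", 3),
    Study("Desktop GUI testing", 2014, "conference", "desktop-gui", 2),
    Study("Early mobile tool", 2001, "conference", "early-tool", 2),
]
selected = screen(studies,
                  exclusion=[lambda s: "Desktop" in s.title],
                  inclusion=[lambda s: s.venue in {"journal", "conference", "workshop"}],
                  min_year=2008)
print([s.title for s in selected])  # ['KDT for Android (journal)']
```

Applying the stages in this fixed order matters: removing irrelevant studies before deduplication means that a duplicate is only compared against versions that have already passed the exclusion and inclusion checks.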

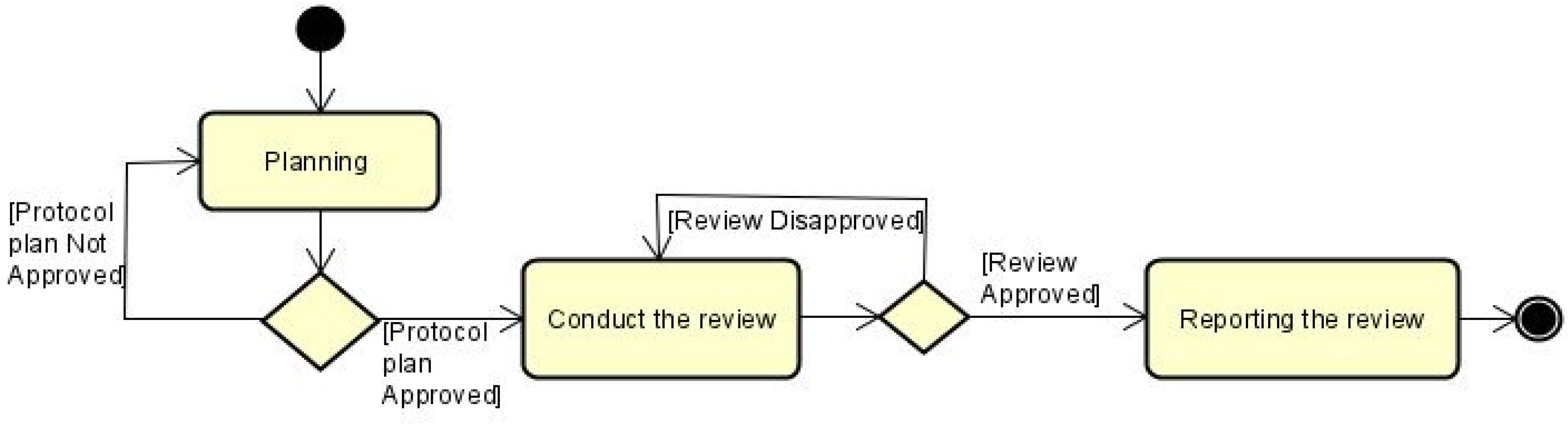

5. Primary Publication Selection

5.1. Data Extraction

5.2. Taxonomy of MATF Research

6. Review Findings

6.1. What Are the Concerns of Automation Testing Frameworks?

6.1.1. Test Objectives

6.1.2. Test Techniques

I. Test Approaches

II. Test Types

| Paper Id | Tool/Technique/ Framework | Reusability | Efficiency | Performance | Reliability | Reliability | Scalability | Compatibility | Functionality |

|---|---|---|---|---|---|---|---|---|---|

| SA1 | Mustafa Abdul et al. [62] | ✓ | ✓ | ✓ | |||||

| SA2 | KDT [63] | ✓ | ✓ | ||||||

| SA3 | KDT/DSL [30] | ✓ | ✓ | ✓ | |||||

| SA4 | EarlGrey [49] | ✓ | ✓ | ✓ | |||||

| SA5 | KDT [31] | ✓ | ✓ | ||||||

| SA6 | Ukwikora [28] | ✓ | |||||||

| SA7 | Mohammad et al. [11] | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

| SA8 | Appium [37] | ✓ | ✓ | ||||||

| SA9 | Sinaga et al. [50] | ✓ | ✓ | ||||||

| SA10 | Appium/OpenCV [40] | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |

| SA11 | Mattia Fazzini [34] | ✓ | ✓ | ✓ | |||||

| SA13 | DDT [55] | ✓ | ✓ | ✓ | |||||

| SA15 | Appium [64] | ✓ | ✓ | ✓ | ✓ | ||||

| SA16 | MDT [58] | ✓ | ✓ | ||||||

| SA17 | Hussain et al. [32] | ✓ | ✓ | ✓ | ✓ | ✓ | |||

| SA18 | Divya Kumar et al. [8] | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||

| SA19 | Vahid Garousi et al. [65] | ✓ | |||||||

| SA20 | Jamil et al. [5] | ✓ | ✓ | ✓ | ✓ | ✓ | |||

| SA23 | Calabash [52] | ✓ | ✓ | ✓ | |||||

| SA24 | ISO/IEC/IEEE [27] | ✓ | ✓ | ✓ | ✓ | ✓ | |||

| SA25 | Shauvik Roy et al. [45] | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||

| SA26 | Nader et al. [39] | ✓ | ✓ | ✓ | ✓ | ||||

| SA27 | Gunasekaran et al. [38] | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||

| SA28 | MAT [44] | ✓ | ✓ | ✓ | ✓ | ||||

| SA29 | Bansal et al. [61] | ✓ | ✓ | ✓ | |||||

| SA30 | Rasneet et al. [41] | ✓ | ✓ | ✓ | |||||

| SA31 | Hanna et al. [2] | ✓ | ✓ | ✓ | ✓ | ||||

| SA32 | Kannan et al. [53] | ✓ | ✓ | ||||||

| SA34 | Pallavi et al. [66] | ✓ | |||||||

| SA35 | PLC Open XML/KDT [67] | ✓ | ✓ | ||||||

| SA36 | Shiwangi et al. [3] | ✓ | ✓ | ✓ | ✓ | ||||

| SA37 | Dynodroid [35] | ✓ | ✓ | ✓ | |||||

| SA38 | KDTFA [29] | ✓ | ✓ | ||||||

| SA39 | Android KDATF [16] | ✓ | ✓ | ✓ | |||||

| SA40 | Testdroid [68] | ✓ | ✓ | ||||||

| SA41 | AndroidRipper [46] | ✓ | |||||||

| SA43 | MobTAF [69] | ✓ | ✓ | ✓ | ✓ | ||||

| SA44 | Song et al. [54] | ✓ | |||||||

| SA45 | Hu et al. [42] | ✓ | ✓ | ||||||

| SA47 | Crawler [70] | ✓ | ✓ | ||||||

| SA48 | Dominik et al. [71] | ✓ | |||||||

| SA49 | MBT/KDT [57] | ✓ | |||||||

| SA50 | Quadri et al. [10] | ✓ | ✓ | ||||||

| SA51 | MobileTest [33] | ✓ | ✓ | ||||||

| SA53 | TDD [51] | ✓ | ✓ | ✓ | ✓ | ||||

| SA54 | KDT/DDT [48] | ✓ | ✓ | ✓ | |||||

| SA55 | Adaptive KDT [72] | ✓ | |||||||

| SA56 | LKDT [36] | ✓ | ✓ | ✓ | ✓ | ||||

| Count | 23 | 30 | 17 | 12 | 7 | 9 | 37 |

| Paper Id | Tool/Technique/ Framework | Linear | Data-driven | Keyword-driven | Reliability | Model-driven | Test-driven | Hybrid |

|---|---|---|---|---|---|---|---|---|

| SA2 | KDT [63] | ✓ | ||||||

| SA3 | KDT/DSL [30] | ✓ | ||||||

| SA5 | KDT [31] | ✓ | ||||||

| SA6 | Ukwikora [28] | ✓ | ||||||

| SA13 | DDT [55] | ✓ | ||||||

| SA16 | MDT [58] | ✓ | ||||||

| SA22 | MATF/Appium [56] | ✓ | ✓ | |||||

| SA23 | Calabash [52] | ✓ | ||||||

| SA24 | ISO/IEC/IEEE [27] | ✓ | ||||||

| SA31 | Hanna et al. [2] | ✓ | ✓ | ✓ | ✓ | ✓ | ||

| SA35 | PLC Open XML/KDT [67] | ✓ | ||||||

| SA38 | KDTFA [29] | ✓ | ||||||

| SA39 | Android KDATF [16] | ✓ | ✓ | |||||

| SA43 | MobTAF [69] | ✓ | ||||||

| SA44 | Song et al. [54] | ✓ | ||||||

| SA46 | DSML [60] | ✓ | ✓ | |||||

| SA49 | MBT/KDT [57] | ✓ | ✓ | ✓ | ||||

| SA52 | OSGi [73] | ✓ | ✓ | |||||

| SA53 | TDD [51] | ✓ | ||||||

| SA54 | KDT/DDT/XML [48] | ✓ | ✓ | ✓ | ✓ | |||

| SA55 | Adaptive KDT [72] | ✓ | ||||||

| SA56 | LKDT [36] | ✓ | ||||||

| Count | 3 | 4 | 15 | 3 | 1 | 3 |

6.2. What Are the Challenges of Automation Testing Frameworks in Mobile Testing?

| Paper Id | Tool/Technique/ Framework | Time | Maintenance cost | Complexity | Fragmentation |

|---|---|---|---|---|---|

| SA1 | Mustafa Abdul et al. [62] | ✓ | ✓ | ||

| SA2 | KDT [63] | ✓ | |||

| SA3 | KDT/DSL [30] | ✓ | ✓ | ||

| SA4 | EarlGrey [49] | ✓ | |||

| SA5 | KDT [31] | ✓ | ✓ | ||

| SA6 | Ukwikora [28] | ✓ | ✓ | ||

| SA7 | Mohammad et al. [11] | ✓ | ✓ | ✓ | |

| SA8 | Appium [37] | ✓ | |||

| SA9 | Sinaga et al. [50] | ✓ | |||

| SA10 | Appium/OpenCV [40] | ✓ | ✓ | ||

| SA11 | Mattia Fazzini [34] | ✓ | ✓ | ||

| SA12 | Anusha et al. [75] | ✓ | |||

| SA13 | DDT [55] | ✓ | ✓ | ||

| SA14 | AutoClicker [76] | ✓ | |||

| SA15 | Appium [64] | ✓ | ✓ | ||

| SA16 | MDT [58] | ✓ | ✓ | ✓ | |

| SA17 | Hussain et al. [32] | ✓ | ✓ | ||

| SA18 | Divya Kumar [8] | ✓ | ✓ | ✓ | |

| SA19 | Vahid Garousi et al. [65] | ✓ | |||

| SA20 | Muhammad Jamil et al. [5] | ✓ | ✓ | ||

| SA24 | ISO/IEC/IEEE [27] | ✓ | ✓ | ✓ | |

| SA25 | Shauvik Roy et al. [45] | ✓ | |||

| SA26 | Nader et al. [39] | ✓ | |||

| SA27 | Gunasekaran et al. [38] | ✓ | ✓ | ||

| SA28 | MAT [44] | ✓ | |||

| SA29 | Bansal et al. [61] | ✓ | |||

| SA30 | Rasneet et al. [41] | ✓ | |||

| SA31 | Hanna et al. [2] | ✓ | ✓ | ||

| SA35 | PLC Open XML/KDT [67] | ✓ | |||

| SA36 | Shiwangi et al. [3] | ✓ | |||

| SA39 | Android KDATF [16] | ✓ | ✓ | ✓ | |

| SA40 | Testdroid [68] | ✓ | |||

| SA41 | AndroidRipper [46] | ✓ | |||

| SA42 | MobiTest [77] | ✓ | |||

| SA43 | MobTAF [69] | ✓ | |||

| SA46 | DSML [60] | ✓ | ✓ | ✓ | |

| SA47 | Crawler [70] | ✓ | ✓ | ||

| SA50 | Quadri et al. [10] | ✓ | ✓ | ||

| SA51 | MobileTest [33] | ✓ | ✓ | ||

| SA53 | TDD [51] | ✓ | ✓ | ||

| SA56 | LKDT [36] | ✓ | ✓ | ✓ | |

| Count | | 28 | 20 | 18 | 5 |

7. Discussion

7.1. Trend Analysis

7.2. Research Question Insights

- Editor: An MATF editor is a tool that allows testers to create and edit keyword test scripts. It provides a user-friendly interface for defining high-level and domain-specific keywords. In practice, the MATF editor can be implemented using spreadsheet applications such as Excel;

- High-level keywords: These are generic keywords that are used across multiple test cases and are not specific to any mobile application;

- Domain-specific keywords: These are keywords that are specific to a particular mobile application. They are used to describe the behavior of the mobile application under test and are typically defined by the test engineers who are familiar with the application;

- Robust keyword library: This is another component that acts as a repository of the high-level and domain-specific keywords. The library should be well-organized and easy to navigate, with clear descriptions of each keyword and its purpose;

- Domain-specific language (DSL): This is a language used to define the high-level and domain-specific keywords in the MATF architecture. The DSL should be easy to read and use, with a clear syntax and structure;

- Reusable test scripts: These are test scripts that can be used across multiple test cases and applications. They are typically created using a combination of high-level and domain-specific keywords and are designed to be modular and easy to maintain;

- Tool bridge: The tool bridge connects the MATF editor to the execution engine, allowing test scripts and test data to be passed between the two components;

- Execution engine: This is the component that runs the test scripts and interacts with the mobile app, using the keywords defined in the test scripts to perform actions and verify behavior;

- Test data: These are the input data that are required to execute the test cases. The test data are usually stored in a separate file or database;

- Mobile app: This is the application under test; the execution engine drives it by performing the actions specified by the keywords in the test scripts.
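To make the interaction between these components concrete, the following Python sketch wires a keyword library, a tool bridge, and an execution engine together. It is not the MATF implementation. The keyword names (`Enter Text`, `Tap`, `Verify Screen`, `Log In`), the `${name}` placeholder syntax for test data, the CSV export from the spreadsheet editor, and the `FakeMobileApp` class, which stands in for a real device driver such as Appium, are all assumptions made for illustration.

```python
import csv
import io
from typing import Callable

Keyword = Callable[..., None]


class FakeMobileApp:
    """Hypothetical stand-in for the app under test. A real execution engine
    would drive a device or emulator through a tool such as Appium."""

    def __init__(self) -> None:
        self.fields: dict[str, str] = {}
        self.screen = "login"

    def type_text(self, element: str, text: str) -> None:
        self.fields[element] = text

    def tap(self, element: str) -> None:
        # Toy behaviour: the correct password moves the app to its home screen.
        if element == "login_button" and self.fields.get("password") == "secret":
            self.screen = "home"


class KeywordLibrary:
    """Keyword library: a repository mapping keyword names to actions."""

    def __init__(self) -> None:
        self._keywords: dict[str, Keyword] = {}

    def register(self, name: str, action: Keyword) -> None:
        self._keywords[name.lower()] = action

    def get(self, name: str) -> Keyword:
        try:
            return self._keywords[name.lower()]
        except KeyError:
            raise KeyError(f"unknown keyword: {name}") from None


def build_library(app: FakeMobileApp) -> KeywordLibrary:
    lib = KeywordLibrary()
    # High-level keywords: generic actions, not tied to a particular app.
    lib.register("Enter Text", app.type_text)
    lib.register("Tap", app.tap)

    def verify_screen(expected: str) -> None:
        if app.screen != expected:
            raise AssertionError(f"expected screen {expected!r}, got {app.screen!r}")

    lib.register("Verify Screen", verify_screen)

    # Domain-specific keyword for this app, composed from high-level keywords.
    def log_in(user: str, password: str) -> None:
        lib.get("Enter Text")("username", user)
        lib.get("Enter Text")("password", password)
        lib.get("Tap")("login_button")

    lib.register("Log In", log_in)
    return lib


def load_script(csv_text: str, data: dict[str, str]) -> list[tuple[str, list[str]]]:
    """Tool bridge: convert editor rows (keyword, arg, arg, ...) into steps and
    substitute ${name} placeholders with values from the separate test data."""
    steps = []
    for row in csv.reader(io.StringIO(csv_text)):
        cells = [cell.strip() for cell in row if cell.strip()]  # drop empty cells
        if not cells:
            continue  # skip blank spreadsheet rows
        keyword, *args = cells
        args = [data.get(a[2:-1], a) if a.startswith("${") and a.endswith("}") else a
                for a in args]
        steps.append((keyword, args))
    return steps


def run(steps: list[tuple[str, list[str]]], lib: KeywordLibrary) -> None:
    """Execution engine: look up each keyword and invoke it with its arguments."""
    for keyword, args in steps:
        lib.get(keyword)(*args)


SCRIPT = "Log In,${user},${password}\nVerify Screen,home\n"
TEST_DATA = {"user": "alice", "password": "secret"}

app = FakeMobileApp()
run(load_script(SCRIPT, TEST_DATA), build_library(app))
print(app.screen)  # home
```

Under this design, moving the same scripts to another platform would mean re-implementing only the high-level keywords against a different driver; the domain-specific `Log In` keyword and the editor rows would stay unchanged, which is the kind of reusability the architecture aims for.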

7.3. Future Research Directions and Challenges

- Addressing mobile fragmentation issue: Most of the research studies focus on functional and usability defects of mobile apps. Mobile ecosystem fragmentation is not given enough emphasis in the literature. Out of the examined literature, only five research articles discuss the fragmentation of the mobile ecosystem [34,45,60,65,68];

- Enhancing mobile automation testing frameworks: The examined literature indicates that there is still room for improvement in mobile automation testing frameworks. According to the investigated literature, a keyword-driven testing framework is a potential candidate for mobile application testing. The framework should be utilized and enhanced to make it suitable for testing mobile apps;

- Making the existing automation frameworks scalable and compatible with different mobile platforms: Scalability and compatibility are important test concerns that need to be fulfilled in mobile automation testing. However, these factors were largely overlooked in the investigated literature; only a few research articles mention the limitations of existing mobile testing tools or frameworks in performing scalable and compatible testing [11,40,45];

- Developing automation testing techniques and guidelines: Appropriate testing techniques and guidelines need to be developed for mobile automation testing [5]. Such techniques guide developers and test engineers in creating high-quality mobile applications for the market. For example, the keyword-driven testing approach and related guidelines were published jointly by ISO, IEC, and IEEE in the ISO/IEC/IEEE 29119-5 standard [27];

- Finally, in the next part of this research study, we present a novel mobile automation testing framework called MATF that conducts automation testing of mobile apps based on improved keyword-driven testing technology.

8. Threats to Validity

9. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Paper Id | Year | Venue | Publication Title |

|---|---|---|---|

| SA1 | 2022 | NILES | Advanced framework for automated testing of mobile applications |

| SA2 | 2021 | ICAECT | Test automation framework using soft computing techniques |

| SA3 | 2020 | ICTSS | Architecture based on keyword driven testing with domain specific language for a testing system |

| SA4 | 2019 | MOBILESoft | EarlGrey: iOS UI automation testing framework |

| SA5 | 2019 | ICST | On the evolution of keyword-driven test suites |

| SA6 | 2019 | ISSTA | Ukwikora: continuous inspection for keyword-driven testing |

| SA7 | 2019 | CCWC | A comparative analysis of quality assurance automated testing tools for windows mobile applications |

| SA8 | 2018 | ICSST | Environment for automated functional testing of mobile applications |

| SA9 | 2018 | ICITEE | Performance of automation testing tools for android applications |

| SA10 | 2018 | SBGAMES | Automated tests for mobile games: an experience report |

| SA11 | 2018 | ESEC/FSE | Automated support for mobile application testing and maintenance |

| SA12 | 2017 | IRJET | Comparative study on different mobile application frameworks |

| SA13 | 2017 | IJMECS | Novel framework for automation testing of mobile applications using Appium |

| SA14 | 2017 | MOBISYS | Fully automated UI testing system for large-scale android apps using multiple devices |

| SA15 | 2017 | ICICCS | Uberisation of mobile automation testing |

| SA16 | 2017 | Software Qual J | Testing of model-driven development applications |

| SA17 | 2017 | JEST | The perceived usability of automated testing tools for mobile applications |

| SA18 | 2016 | J.Procs | The impacts of test automation on software’s Cost, quality and time to market |

| SA19 | 2016 | IEEE Software | Test automation not just for test execution |

| SA20 | 2016 | ICT4M | Software testing techniques: a literature review |

| SA21 | 2016 | ICCSNT | The design and implement of the cross-platform mobile automated testing framework |

| SA22 | 2016 | CCIS | Research on automated testing framework for multi-platform mobile applications |

| SA23 | 2016 | J4R | Deployment of Calabash automation framework to analyze the performance of an android application |

| SA24 | 2016 | ISO/IEC/IEEE | Software and systems engineering - software testing - keyword-driven testing |

| SA25 | 2015 | ASE | Automated test input generation for android: are we there yet? |

| SA26 | 2015 | ASE | Testing cross-platform mobile app development frameworks |

| SA27 | 2015 | IJAERS | Survey on automation testing tools for mobile applications |

| SA28 | 2014 | ICACCCT | An automated testing framework for testing android mobile applications in the cloud |

| SA29 | 2014 | IJCSMC | A comparative study of software testing techniques |

| SA30 | 2014 | IJCET | Latest research and development on software testing techniques and tools |

| SA31 | 2014 | IJACSA | A review of scripting techniques used in automated software testing |

| SA32 | 2014 | IJCSE | A study on variations of bottlenecks in software testing |

| SA33 | 2014 | IJICT | A strategic approach to software testing |

| SA34 | 2014 | IJMAS | Android mobile automation framework |

| SA35 | 2014 | ETFA | Adapting keyword driven test automation framework to IEC 61131-3 industrial control applications using PLCopen XML |

| SA36 | 2014 | IJCET | Automated testing of mobile applications using scripting technique: a study on Appium |

| SA37 | 2013 | ESEC/FSE | Dynodroid: An input generation system for android apps |

| SA38 | 2013 | AMM | Keyword-driven automation test |

| SA39 | 2013 | ICCSEE | Keyword-driven testing framework for android applications |

| SA40 | 2012 | MUM | Testdroid: automated remote UI testing on android |

| SA41 | 2012 | ASE | Using GUI ripping for automated testing of android applications |

| SA42 | 2012 | ICSEA | MobiTest: a cross-platform tool for testing mobile applications |

| SA43 | 2012 | ICCIS | A novel approach of automation testing on mobile devices |

| SA44 | 2011 | ACIS | An integrated test automation framework for testing on heterogeneous mobile platforms |

| SA45 | 2011 | AST | Automating GUI testing for android applications |

| SA46 | 2011 | ECSA | A model-driven approach for automating mobile applications testing |

| SA47 | 2011 | ICSTW | A GUI crawling-based technique for android mobile application testing |

| SA48 | 2011 | ICST | Providing a software quality framework for testing of mobile applications |

| SA49 | 2011 | ICSTW | Model-based testing with a general purpose keyword-driven test automation framework |

| SA50 | 2010 | IJCA | Software testing - goals, principles, and limitations |

| SA51 | 2010 | ICSE | Test automation on mobile device |

| SA52 | 2009 | QSIC | An adapter framework for keyword-driven testing |

| SA53 | 2009 | ICUIMC | Performance testing based on test-driven development for mobile applications |

| SA54 | 2009 | WCSE | Design and implementation of GUI Automated testing framework based on XML |

| SA55 | 2008 | ICAL | Towards adaptive framework of keyword-driven automation testing |

| SA56 | 2008 | CSSE | LKDT: A keyword-driven based distributed test framework |

References

- Rafi, D.M.; Moses, K.R.K.; Petersen, K.; Mäntylä, M.V. Benefits and limitations of automated software testing: Systematic literature review and practitioner survey. In Proceedings of the 2012 7th International Workshop on Automation of Software Test, Zurich, Switzerland, 2–3 June 2012; pp. 36–42. [Google Scholar] [CrossRef]

- Hanna, M.; El-Haggar, N.; Sami, M. A Review of Scripting Techniques Used in Automated Software Testing. Int. J. Adv. Comput. Sci. Appl. 2014, 5, 194–202. [Google Scholar] [CrossRef]

- Singh, S.; Gadgil, R.; Chudgor, A. Automated Testing of Mobile Applications using Scripting Technique: A Study on Appium. Int. J. Curr. Eng. Technol. India Accept. 2014, 362744, 3627–3630. [Google Scholar]

- Aebersold, K. Test Automation Framework. Available online: https://smartbear.com/learn/automated-testing/test-automation-frameworks/ (accessed on 1 June 2021).

- Jamil, M.A.; Arif, M.; Abubakar, N.S.A.; Ahmad, A. Software testing techniques: A literature review. In Proceedings of the 6th International Conference on Information and Communication Technology for the Muslim World (ICT4M), Jakarta, Indonesia, 22–24 November 2016. [Google Scholar] [CrossRef]

- Muccini, H.; Di Francesco, A.; Esposito, P. Software Testing of Mobile Applications: Challenges and Future Research Directions. In Proceedings of the 7th International Workshop on Automation of Software Test (AST), Zurich, Switzerland, 2–3 June 2012; pp. 29–35. [Google Scholar] [CrossRef]

- Tramontana, P.; Amalfitano, D.; Amatucci, N. Automated functional testing of mobile applications: A systematic mapping study. Softw. Qual. J. 2019, 149–201. [Google Scholar] [CrossRef]

- Kumar, D.; Mishra, K.K. The Impacts of Test Automation on Software’s Cost, Quality and Time to Market. In Proceedings of the Procedia Computer Science, Mumbai, India, 26–27 February 2016; Volume 79. [Google Scholar] [CrossRef]

- Idri, A.; Moumane, K.; Abran, A. On the use of software quality standard ISO/IEC 9126 in mobile environments. In Proceedings of the 2013 20th Asia-Pacific Software Engineering Conference (APSEC), Bangkok, Thailand, 2–5 December 2013; Volume 1, pp. 1–8. [Google Scholar] [CrossRef]

- Quadri, S.M.; Farooq, S.U. Software Testing—Goals, Principles, and Limitations. Int. J. Comput. Appl. 2010, 6, 7–10. [Google Scholar] [CrossRef]

- Mohammad, D.R.; Al-Momani, S.; Tashtoush, Y.M.; Alsmirat, M. A comparative analysis of quality assurance automated testing tools for windows mobile applications. In Proceedings of the 2019 IEEE 9th Annual Computing and Communication Workshop and Conference, CCWC 2019, Las Vegas, NV, USA, 7–9 January 2019; pp. 414–419. [Google Scholar] [CrossRef]

- Kirubakaran, B.; Karthikeyani, V. Mobile application testing—Challenges and solution approach through automation. In Proceedings of the 2013 International Conference on Pattern Recognition, Informatics and Mobile Engineering, Salem, India, 21–22 February 2013; pp. 79–84. [Google Scholar] [CrossRef]

- Usability. 2021. Available online: https://www.interaction-design.org/literature/topics/usability (accessed on 1 August 2021).

- Sheetal Sharma, A.J. An efficient Keyword Driven Test Automation Framework for Web Applications. Int. J. Eng. Sci. Adv. Technol. 2012, 2, 600–604. [Google Scholar]

- Hayes, L.G. The Automated Testing Handbook, 2nd ed.; Software Testing Institute, 1 March 2004; Available online: https://books.google.com.hk/books/about/The_Automated_Testing_Handbook.html?id=-jangThcGIkC&redir_esc=y (accessed on 1 August 2021).

- Wu, Z.; Liu, S.; Li, J.; Liao, Z. Keyword-Driven Testing Framework For Android Applications. In Proceedings of the 2nd International Conference on Computer Science and Electronics Engineering (ICCSEE 2013); Atlantis Press: Paris, France, 2013; pp. 1096–1102. [Google Scholar] [CrossRef]

- Corral, L.; Sillitti, A.; Succi, G. Software assurance practices for mobile applications. Computing 2015, 97, 1001–1022. [Google Scholar] [CrossRef]

- Sahinoglu, M.; Incki, K.; Aktas, M.S. Mobile Application Verification: A Systematic Mapping Study. In Proceedings of the Computational Science and Its Applications—ICCSA; Springer International Publishing: Cham, Switzerland; Banff, AB, Canada, 22-25 June 2015; Volume 9159, pp. 147–163. [Google Scholar] [CrossRef]

- Zein, S.; Salleh, N.; Grundy, J. A systematic mapping study of mobile application testing techniques. J. Syst. Softw. 2016, 117, 334–356. [Google Scholar] [CrossRef]

- Kong, P.; Li, L.; Gao, J.; Liu, K.; Bissyande, T.F.; Klein, J. Automated Testing of Android Apps: A Systematic Literature Review. IEEE Trans. Reliab. 2019, 68, 45–66. [Google Scholar] [CrossRef]

- Singh, J.; Sahu, S.K.; Singh, A.P. Implementing Test Automation Framework Using Model-Based Testing Approach. In Intelligent Computing and Information and Communication; Advances in Intelligent Systems and Computing; Springer: Singapore, 2018; pp. 695–704. [Google Scholar] [CrossRef]

- Linares-Vasquez, M.; Moran, K.; Poshyvanyk, D. Continuous, Evolutionary and Large-Scale: A New Perspective for Automated Mobile App Testing. In Proceedings of the 2017 IEEE International Conference on Software Maintenance and Evolution (ICSME), Shanghai, China, 17–22 September 2017; pp. 399–410. [Google Scholar] [CrossRef]

- Ahmad, A.; Li, K.; Feng, C.; Asim, S.M.; Yousif, A.; Ge, S. An Empirical Study of Investigating Mobile Applications Development Challenges. IEEE Access 2018, 6, 17711–17728. [Google Scholar] [CrossRef]

- Wang, J.; Wu, J. Research on Mobile Application Automation Testing Technology Based on Appium. In Proceedings of the 2019 International Conference on Virtual Reality and Intelligent Systems (ICVRIS), 14–15 September 2019; IEEE: Jishou, China; pp. 247–250. [Google Scholar] [CrossRef]

- Luo, C.; Goncalves, J.; Velloso, E.; Kostakos, V. A Survey of Context Simulation for Testing Mobile Context-Aware Applications. ACM Comput. Surv. 2020, 53, 1–39. [Google Scholar] [CrossRef]

- Keele, S. Guidelines for performing Systematic Literature Reviews in Software Engineering; ACM: New York, NY, USA, 2007; Available online: https://dl.acm.org/doi/10.1145/1134285.1134500 (accessed on 1 March 2020).

- ISO/IEC/IEEE 29119-5:2016; Software and Systems Engineering—Software Testing—Part 5: Keyword-Driven Testing. International Organization for Standardization; International Electrotechnical Commission; Institute of Electrical and Electronics Engineers: Geneva, Switzerland, 2016. Available online: https://standards.ieee.org/ieee/29119-5/5563/ (accessed on 16 April 2020).

- Rwemalika, R.; Kintis, M.; Papadakis, M.; Le Traon, Y.; Lorrach, P. Ukwikora: Continuous inspection for keyword-driven testing. In Proceedings of the 28th ACM SIGSOFT International Symposium on Software Testing and Analysis, Beijing, China, 15–19 July 2019; pp. 402–405. [Google Scholar] [CrossRef]

- Wu, Z.Q.; Li, J.Z.; Liao, Z.Z. Keyword Driven Automation Test. Appl. Mech. Mater. 2013, 427-429, 652–655. [Google Scholar] [CrossRef]

- Pereira, R.B.; Brito, M.A.; Machado, R.J. Architecture Based on Keyword Driven Testing with Domain Specific Language for a Testing System. In Proceedings of the International Conference on Testing Software and Systems(ICTSS), Naples, Italy, 9–11 December 2020; pp. 310–316. [Google Scholar] [CrossRef]

- Rwemalika, R.; Kintis, M.; Papadakis, M.; Le Traon, Y.; Lorrach, P. On the Evolution of Keyword-Driven Test Suites. In Proceedings of the 2019 12th IEEE Conference on Software Testing, Validation and Verification (ICST), Xi’an, China, 22–27 April 2019; pp. 335–345. [Google Scholar] [CrossRef]

- Hussain, A.; Razak, H.A.; Mkpojiogu, E.O.C. The perceived usability of automated testing tools for mobile applications. J. Eng. Sci. Technol. 2017, 12, 86–93. [Google Scholar]

- Zhifang, L.; Bin, L.; Xiaopeng, G. Test automation on mobile device. In Proceedings of the 5th Workshop on Automation of Software Test, 3–4 May 2010; ACM: Cape Town, South Africa; pp. 1–7. [Google Scholar] [CrossRef]

- Fazzini, M. Automated support for mobile application testing and maintenance. In Proceedings of the 2018 26th ACM Joint Meeting on European Software Engineering Conference and Symposium on the Foundations of Software Engineering, Lake Buena Vista, FL, USA, 4–9 November 2018; pp. 932–935. [Google Scholar] [CrossRef]

- Machiry, A.; Tahiliani, R.; Naik, M. Dynodroid: An input generation system for android apps. In Proceedings of the 2013 9th Joint Meeting of the European Software Engineering Conference and the ACM SIGSOFT Symposium on the Foundations of Software Engineering, ESEC/FSE 2013—Proceedings, Saint Petersburg, Russia, 18–26 August 2013; pp. 224–234. [Google Scholar] [CrossRef]

- Jie, H.; Lan, Y.; Luo, P.; Guo, S.; Gao, J. LKDT: A Keyword-Driven based distributed test framework. In Proceedings of the International Conference on Computer Science and Software Engineering (CSSE 2008), Wuhan, China, 12–14 December 2008; Volume 2, pp. 719–722. [Google Scholar] [CrossRef]

- Vajak, D.; Grbic, R.; Vranjes, M.; Stefanovic, D. Environment for Automated Functional Testing of Mobile Applications. In Proceedings of the 2018 International Conference on Smart Systems and Technologies (SST), Osijek, Croatia, 10–12 October 2018; pp. 125–130. [Google Scholar] [CrossRef]

- Gunasekaran, S.; Bargavi, V. Survey on Automation Testing Tools for Mobile Applications. Int. J. Adv. Eng. Res. Sci. 2015, 2, 2349–6495. Available online: www.ijaers.com (accessed on 10 July 2021).

- Boushehrinejadmoradi, N.; Ganapathy, V.; Nagarakatte, S.; Iftode, L. Testing Cross-Platform Mobile App Development Frameworks (T). In Proceedings of the 2015 30th IEEE/ACM International Conference on Automated Software Engineering (ASE), Lincoln, NE, USA, 9–13 November 2015; pp. 441–451. [Google Scholar] [CrossRef]

- Lovreto, G.; Endo, A.T.; Nardi, P.; Durelli, V.H.S. Automated Tests for Mobile Games: An Experience Report. In Proceedings of the 2018 17th Brazilian Symposium on Computer Games and Digital Entertainment (SBGames), Foz do Iguacu, Brazil, 29 October–1 November 2018; Volume 2018-Novem, pp. 48–488. [Google Scholar] [CrossRef]

- Kaur Chauhan, R.; Singh, I. Latest Research and Development on Software Testing Techniques and Tools. Int. J. Curr. Eng. Technol. 2014, 4, 2368–2372. Available online: http://inpressco.com/category/ijcet (accessed on 10 September 2021).

- Hu, C.; Neamtiu, I. Automating GUI testing for Android applications. In Proceedings of the 6th International Workshop on Automation of Software Test, 23–24 May 2011; ACM: Honolulu, HI, USA; pp. 77–83. [Google Scholar] [CrossRef]

- Singh, K.; Mishra, S.K. A Strategic Approach to Software Testing. Int. J. Inf. Comput. Technol. 2014, 4, 1387–1394. [Google Scholar]

- Prathibhan, C.M.; Malini, A.; Venkatesh, N.; Sundarakantham, K. An automated testing framework for testing Android mobile applications in the cloud. In Proceedings of the 2014 IEEE International Conference on Advanced Communications, Control and Computing Technologies, Ramanathapuram, India, 8–10 May 2014; pp. 1216–1219. [Google Scholar] [CrossRef]

- Choudhary, S.R.; Gorla, A.; Orso, A. Automated Test Input Generation for Android: Are We There Yet? (E). In Proceedings of the 2015 30th IEEE/ACM International Conference on Automated Software Engineering (ASE), Lincoln, NE, USA, 9–13 November 2015; pp. 429–440. [Google Scholar] [CrossRef]

- Amalfitano, D.; Fasolino, A.R.; Tramontana, P.; De Carmine, S.; Memon, A.M. Using GUI ripping for automated testing of Android applications. In Proceedings of the 27th IEEE/ACM International Conference on Automated Software Engineering, Essen, Germany, 3–5 September 2012; pp. 258–261. [Google Scholar] [CrossRef]

- Azim, T.; Neamtiu, I. Targeted and depth-first exploration for systematic testing of android apps. In Proceedings of the 2013 ACM SIGPLAN International Conference on Object Oriented Programming Systems Languages & Applications, Indianapolis, IN, USA, 29–31 October 2013; pp. 641–660. [Google Scholar] [CrossRef]

- Mu, B.; Zhan, M.; Hu, L. Design and Implementation of GUI Automated Testing Framework Based on XML. In Proceedings of the 2009 WRI World Congress on Software Engineering, Xiamen, China, 19–21 May 2009; pp. 194–199. [Google Scholar] [CrossRef]

- Tirodkar, A.A.; Khandpur, S.S. EarlGrey: iOS UI Automation Testing Framework. In Proceedings of the 2019 IEEE/ACM 6th International Conference on Mobile Software Engineering and Systems (MOBILESoft), Montreal, QC, Canada, 25 May 2019; pp. 12–15. [Google Scholar] [CrossRef]

- Sinaga, A.M.; Wibowo, P.A.; Silalahi, A.; Yolanda, N. Performance of Automation Testing Tools for Android Applications. In Proceedings of the 2018 10th International Conference on Information Technology and Electrical Engineering (ICITEE), Bali, Indonesia, 24–26 July 2018; pp. 534–539. [Google Scholar] [CrossRef]

- Kim, H.; Choi, B.; Yoon, S. Performance testing based on test-driven development for mobile applications. In Proceedings of the International Conference on Ubiquitous Information Management and Communication; ACM: Suwon, Republic of Korea, 2009; pp. 612–617. [Google Scholar] [CrossRef]

- Kulkarni, K.; Soumya, M.A. Deployment of Calabash Automation Framework to Analyze the Performance of an Android Application. J. Res. 2016, 02, 70–75. Available online: www.journalforresearch.org (accessed on 5 July 2022).

- Kannan, S.; Pushparaj, T. A Study on Variations of Bottlenecks in Software Testing. Int. J. Comput. Sci. Eng. 2014, 2, 8–14. Available online: https://www.ijcseonline.org/pdf_paper_view.php?paper_id=150&IJCSE-00256.pdf (accessed on 5 October 2021).

- Song, H.; Ryoo, S.; Kim, J.H. An Integrated Test Automation Framework for Testing on Heterogeneous Mobile Platforms. In Proceedings of the 2011 First ACIS International Symposium on Software and Network Engineering, Seoul, Republic of Korea, 19–20 December 2011; pp. 141–145.

- Alotaibi, A.A.; Qureshi, R.J. Novel Framework for Automation Testing of Mobile Applications using Appium. Int. J. Mod. Educ. Comput. Sci. 2017, 9, 34–40.

- Zun, D.; Qi, T.; Chen, L. Research on automated testing framework for multi-platform mobile applications. In Proceedings of the 2016 4th International Conference on Cloud Computing and Intelligence Systems (CCIS), Beijing, China, 17–19 August 2016; pp. 82–87.

- Pajunen, T.; Takala, T.; Katara, M. Model-Based Testing with a General Purpose Keyword-Driven Test Automation Framework. In Proceedings of the 2011 IEEE Fourth International Conference on Software Testing, Verification and Validation Workshops, Berlin, Germany, 21–25 March 2011; pp. 242–251.

- Marín, B.; Gallardo, C.; Quiroga, D.; Giachetti, G.; Serral, E. Testing of model-driven development applications. Softw. Qual. J. 2017, 25, 407–435.

- Kolawole, G. Model Based Testing Mobile Applications: A Case Study of Moodle Mobile Application. Master’s Thesis, Tallinn University of Technology, Tallinn, Estonia, 2017.

- Ridene, Y.; Barbier, F. A model-driven approach for automating mobile applications testing. In Proceedings of the 5th European Conference on Software Architecture: Companion Volume, 13–16 September 2011; ACM: Essen, Germany; pp. 1–7.

- Bansal, A. A Comparative Study of Software Testing Techniques. Int. J. Comput. Sci. Mob. Comput. 2014, 3, 579–584. Available online: http://link.springer.com/10.1007/978-3-319-59647-1_27 (accessed on 1 March 2020).

- Salam, M.A.; Taha, S.; Hamed, M.G. Advanced Framework for Automated Testing of Mobile Applications. In Proceedings of the 2022 4th Novel Intelligent and Leading Emerging Sciences Conference (NILES), Giza, Egypt, 22–24 October 2022; pp. 233–238.

- Swathi, B.; Tiwari, H. Test Automation Framework using Soft Computing Techniques. In Proceedings of the 2021 International Conference on Advances in Electrical, Computing, Communication and Sustainable Technologies (ICAECT), Bhilai, India, 19–20 February 2021; pp. 1–4.

- Seth, P.; Rane, N.; Wagh, A.; Katade, A.; Sahu, S.; Malhotra, N. Uberisation of mobile automation testing. In Proceedings of the 2017 International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 15–16 June 2017; pp. 181–184.

- Garousi, V.; Elberzhager, F. Test Automation: Not Just for Test Execution. IEEE Softw. 2017, 34, 90–96.

- Raut, P.; Tomar, S. Android Mobile Automation Framework. Int. J. Multidiscip. Approach Stud. (IJMAS) 2014, 1, 1–12. Available online: http://ijmas.com/upcomingissue/1.06.2014.pdf (accessed on 1 March 2020).

- Peltola, J.; Sierla, S.; Vyatkin, V. Adapting Keyword driven test automation framework to IEC 61131-3 industrial control applications using PLCopen XML. In Proceedings of the 2014 IEEE Emerging Technology and Factory Automation (ETFA), Barcelona, Spain, 16–19 September 2014; pp. 1–8.

- Kaasila, J.; Ferreira, D.; Kostakos, V.; Ojala, T. Testdroid: Automated remote UI testing on Android. In Proceedings of the 11th International Conference on Mobile and Ubiquitous Multimedia, Ulm, Germany, 4–6 December 2012; pp. 1–4.

- Nagowah, L.; Sowamber, G. A novel approach of automation testing on mobile devices. In Proceedings of the 2012 International Conference on Computer & Information Science (ICCIS), Kuala Lumpur, Malaysia, 12–14 June 2012; Volume 2, pp. 924–930.

- Amalfitano, D.; Fasolino, A.R.; Tramontana, P. A GUI Crawling-Based Technique for Android Mobile Application Testing. In Proceedings of the 2011 IEEE Fourth International Conference on Software Testing, Verification and Validation Workshops, Berlin, Germany, 21–25 March 2011; pp. 252–261.

- Franke, D.; Weise, C. Providing a Software Quality Framework for Testing of Mobile Applications. In Proceedings of the 2011 Fourth IEEE International Conference on Software Testing, Verification and Validation, Berlin, Germany, 21–25 March 2011; pp. 431–434.

- Tang, J.; Cao, X.; Ma, A. Towards adaptive framework of keyword driven automation testing. In Proceedings of the 2008 IEEE International Conference on Automation and Logistics, Qingdao, China, 1–3 September 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 1631–1636.

- Takala, T.; Maunumaa, M.; Katara, M. An Adapter Framework for Keyword-Driven Testing. In Proceedings of the 2009 Ninth International Conference on Quality Software, Jeju, Republic of Korea, 24–25 August 2009; pp. 201–210.

- Cherednichenko, S. What’s the Cost to Maintain and Support an App in 2021. 2021. Available online: https://www.mobindustry.net/blog/whats-the-cost-to-maintain-and-support-an-app-in-2020/ (accessed on 20 December 2021).

- Anusha, M.; Kn, S. Comparative Study on Different Mobile Application Frameworks. Int. Res. J. Eng. Technol. 2017, 4, 1299–1300. Available online: https://irjet.net/archives/V4/i3/IRJET-V4I3306.pdf (accessed on 1 March 2020).

- Ki, T.; Simeonov, A.; Park, C.M.; Dantu, K.; Ko, S.Y.; Ziarek, L. Demo: Fully Automated UI Testing System for Large-scale Android Apps Using Multiple Devices. In Proceedings of the 15th Annual International Conference on Mobile Systems, Applications, and Services, 19–23 June 2017; ACM: Niagara Falls, ON, Canada; New York, NY, USA; p. 185.

- Bayley, I.; Flood, D.; Harrison, R.; Martin, C. MobiTest: A Cross-Platform Tool for Testing Mobile Applications. In Proceedings of the ICSEA 2012: The Seventh International Conference on Software Engineering Advances, Lisbon, Portugal, 18–23 November 2012; pp. 619–622. Available online: http://www.thinkmind.org/index.php?view=article&articleid=icsea_2012_22_20_10114 (accessed on 3 July 2022).

| Title of Paper | Limitations |
|---|---|
| Software testing of mobile applications: challenges and future research directions [6] | No detailed investigation of automation testing techniques and frameworks was performed. |
| Software assurance practices for mobile applications [17] | No rigorous systematic literature review was conducted to help testers choose an appropriate testing framework. |
| Mobile application verification: a systematic mapping study [18] | Mobile application testing is not reviewed in detail, and a suitable framework still needs to be developed. |
| A systematic mapping study of mobile application testing techniques [19] | The authors did not propose an improved architecture for solving mobile application test challenges. |
| Automated testing of Android apps: a systematic literature review [20] | The authors did not propose an improved architecture for solving mobile application test challenges. |
| Implementing test automation framework using model-based testing approach [21] | The implemented framework is not associated with the test challenges. |
| Continuous, evolutionary and large-scale: a new perspective for automated mobile app testing [22] | No detailed investigation of current test frameworks is conducted, and the test challenges addressed by the framework are not discussed. |
| Research on mobile application automation testing technology based on Appium [24] | The study is limited to the Appium testing tool and does not incorporate other testing tools. |
| A survey of context simulation for testing mobile context-aware applications [25] | The study surveys the testing of context-aware mobile systems but does not suggest a solution. |

| Group | Keywords |
|---|---|
| Mobile | Mobile, android, iOS |
| Automation testing | Automation testing, mobile automation testing, automated testing |
| Automation testing framework | Testing framework, automation testing framework, mobile automation testing framework |
| Challenge | Challenge, limitation, constraint, drawback |
| Framework | Frameworks, tool, model |

| Try | Search String | Returned Results | Relevant Articles |
|---|---|---|---|
| Try1 | ((“automation test *”) AND (mobile * OR android OR ios) AND (applicable * OR appropriate * OR suitable *)) | 3 | 1 |
| Try2 | ((“automation test *”) AND (mobile * OR android OR ios)) | 45 | 18 |
| Try3 | ((“automation test *”) AND (mobile application *)) | 29 | 12 |
| Try4 | ((“automation test *”) AND (mobile *) AND (framework * OR model *) AND (challenge *)) | 4 | 1 |
| Try5 | ((“automation test *”) AND (mobile application test *)) | 29 | 13 |
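The search strings in the table above share one shape: the terms of a keyword group are joined with OR, and the groups are joined with AND. The following is a minimal sketch of that composition, not the authors' tooling. The function names `or_group` and `build_query` are hypothetical, and writing the wildcard attached to its stem (`test*`) is an assumption about the target database's syntax.

```python
def or_group(terms: list[str]) -> str:
    """Join the terms of one keyword group with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def build_query(groups: list[list[str]]) -> str:
    """Combine keyword groups with AND, mirroring the shape of the search strings above."""
    return "(" + " AND ".join(or_group(g) for g in groups) + ")"


# Terms follow the "Try2" row; the wildcard is written attached to its stem ("test*"),
# which is an assumption about the target database's syntax.
try2 = build_query([
    ['"automation test*"'],
    ["mobile*", "android", "ios"],
])
print(try2)  # (("automation test*") AND (mobile* OR android OR ios))
```

Adding further groups, for example `["framework*", "model*"]` and `["challenge*"]`, reproduces the narrower shape of Try4, which is consistent with its much smaller result count.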

| Step | Count |
|---|---|
| Repository (subject database) search without restriction | 4917 |
| After performing a manual walkthrough of the papers | 345 |
| After an in-depth review of titles/abstracts | 251 |
| After skimming/scanning full paper | 61 |
| After removing duplicate papers | 56 |
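The screening counts above can be turned into per-stage exclusion figures with simple arithmetic. This sketch only recomputes numbers already in the table; the shortened stage labels and the helper name `screening_summary` are illustrative choices.

```python
# Counts copied from the screening table; the stage labels are shortened.
STAGES = [
    ("Repository search without restriction", 4917),
    ("Manual walkthrough of the papers", 345),
    ("Title/abstract review", 251),
    ("Full-paper skim/scan", 61),
    ("Duplicate removal", 56),
]


def screening_summary(stages):
    """Return (stage, kept, excluded, share kept relative to the previous stage) per step."""
    rows = []
    for (_, prev_count), (name, count) in zip(stages, stages[1:]):
        rows.append((name, count, prev_count - count, count / prev_count))
    return rows


for name, kept, excluded, share in screening_summary(STAGES):
    print(f"{name}: kept {kept}, excluded {excluded} ({share:.1%} of previous stage retained)")

overall = STAGES[-1][1] / STAGES[0][1]
print(f"Overall: {overall:.2%} of the initial hits were retained")  # 1.14%
```

The manual walkthrough and the full-paper skim remove the most papers (4572 and 190, respectively), while duplicate removal excludes only 5.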

| Data Item | Description |
|---|---|
| Paper ID | The unique ID assigned for the research paper |
| Title | The title given for the research paper |
| Author(s) | The authors of the paper |
| Year | Year of publication of the research paper |
| Publication venue | The publisher’s name for the study |
| Venue type | The type of the research article |
| Mobile automation techniques | Mobile automated testing techniques used in the study |
| Mobile testing tools | Mobile testing tools used/discussed in the paper |
| Framework/tool | A framework or a tool proposed in the study |
| Challenges | The challenge or limitation of mobile automation frameworks discussed in the study |
| Applicability | The degree of the applicability of the test automation framework for mobile apps |
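One way to keep extracted data consistent across the selected studies is to represent the extraction form above as a typed record. The following is a minimal sketch under stated assumptions: the class name `ExtractionRecord`, the field types, and the paper ID `P01` are hypothetical, and the example fills only fields that can be read directly from the reference list. Challenges and applicability are left empty rather than guessed.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ExtractionRecord:
    """One row of the data extraction form; field types are illustrative assumptions."""
    paper_id: str
    title: str
    authors: List[str]
    year: int
    publication_venue: str
    venue_type: str                      # e.g. "journal" or "conference proceedings"
    automation_techniques: List[str] = field(default_factory=list)
    testing_tools: List[str] = field(default_factory=list)
    framework_or_tool: Optional[str] = None   # framework/tool proposed by the study, if any
    challenges: List[str] = field(default_factory=list)
    applicability: str = ""              # free-text assessment; no fixed scale is assumed


# Example entry built from a reference in the list above; the ID "P01" is hypothetical
# and the remaining fields are left empty rather than guessed.
record = ExtractionRecord(
    paper_id="P01",
    title="Novel Framework for Automation Testing of Mobile Applications using Appium",
    authors=["Alotaibi, A.A.", "Qureshi, R.J."],
    year=2017,
    publication_venue="Int. J. Mod. Educ. Comput. Sci.",
    venue_type="journal",
    testing_tools=["Appium"],
)
print(record.paper_id, record.year, record.testing_tools)
```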

| Test Challenge | Test Challenge Description | What MATF Offers |
|---|---|---|
| Fragmentation | Mobile apps and operating systems (OSs) are highly fragmented, which raises compatibility issues. | Allows testers to create modular, reusable test scripts that can be easily adapted to different device configurations and OSs. |
| Complexity | Mobile apps have multiple screens, features, and interactions. | Provides a clear, structured way to define and organize test scripts so that test suites are easy to manage. |
| Maintenance cost | Mobile apps evolve continuously, with new features and updates released regularly. | Provides a modular, reusable framework that is easy to change and maintain. |
| Time consumption | Testing mobile apps across multiple devices can be time-consuming. | Allows testers to create test scripts that can be executed across multiple devices. |
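The table attributes two MATF properties to modular design: the same test script can be reused across device configurations (fragmentation), and scripts can run on several devices at once (time consumption). The following is a minimal sketch of that idea, not the MATF implementation. `DeviceConfig`, `launch_smoke_test`, `run_suite`, and the device names are hypothetical, and the placeholder script does not drive a real app; in practice that step would go through a tool such as Appium.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class DeviceConfig:
    """Hypothetical target description; a real framework would carry driver settings here."""
    name: str
    platform: str      # "android" or "ios"
    os_version: str


# A reusable test script is any function that runs against one device and reports pass/fail.
TestScript = Callable[[DeviceConfig], bool]


def launch_smoke_test(device: DeviceConfig) -> bool:
    # Placeholder body: a real script would drive the app through a tool such as Appium.
    return device.platform in ("android", "ios")


def run_suite(scripts: Dict[str, TestScript], devices: List[DeviceConfig],
              workers: int = 4) -> Dict[Tuple[str, str], bool]:
    """Execute every script on every device in parallel and collect the outcomes."""
    jobs = [(script_name, script, device)
            for script_name, script in scripts.items()
            for device in devices]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        outcomes = list(pool.map(lambda job: job[1](job[2]), jobs))
    return {(script_name, device.name): ok
            for (script_name, _, device), ok in zip(jobs, outcomes)}


devices = [
    DeviceConfig("android-emulator", "android", "13"),
    DeviceConfig("ios-simulator", "ios", "16"),
]
results = run_suite({"launch_smoke_test": launch_smoke_test}, devices)
print(results)
```

Keeping device details out of the script body is what allows a new configuration to be covered by adding one `DeviceConfig` entry instead of editing every test.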

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Berihun, N.G.; Dongmo, C.; Van der Poll, J.A. The Applicability of Automated Testing Frameworks for Mobile Application Testing: A Systematic Literature Review. Computers 2023, 12, 97. https://doi.org/10.3390/computers12050097
