Abstract

In the past ten years, the rate of forest fires around the world has increased significantly. Forest fires greatly affect ecosystems by damaging vegetation. They have both human and natural causes: human causes include intentional and unregulated burning, and global warming is a major natural cause. Early detection limits the spread of forest fires to larger areas because it allows them to be extinguished sooner. In this research, an early detection system for forest fires called Forest Defender Fusion is proposed. The system achieves high detection accuracy and long-term monitoring of the site by combining the Intermediate Fusion VGG16 model with the Enhanced Consumed Energy-LEACH protocol (ECP-LEACH). The Intermediate Fusion VGG16 model receives RGB (red, green, blue) and IR (infrared) images from drones to detect forest fires, while the system regulates the drones’ energy consumption so that fires can be detected early. The detection model was trained on the FLAME 2 dataset and obtained an accuracy of 99.86%, outperforming the other models that take RGB and IR images together as input. A simulation written in Python was performed to demonstrate the system in real time.

1. Introduction

Forest fires, acknowledged as a major disruption to the world’s forest ecosystems, are a growing problem as the effects of global climate change intensify. Their increasing frequency and severity pose major risks to biodiversity, ecological balance, and human settlements. Rising temperatures, extended droughts, and shifting weather patterns create favorable conditions for fires to ignite and spread rapidly.

Many studies have reported on the effects of forest fires in countries around the world. According to Evelpidou, N. [1], Greece’s summer 2021 wildfires ranked among the worst forest fire incidents the country had seen in the previous ten years. The fires lasted for 20 days (from 27 July 2021 to 16 August 2021) and left almost 3600 km² completely destroyed. Meier, S. et al. [2] conducted an extreme value study using geospatial data from the European Forest Fire Information System (EFFIS) spanning 2006 to 2019. Based on a 10-year analysis, they concluded that Portugal has the highest forest fire risk with 50,338 ha, followed by Greece with 33,242 ha, Spain with 25,165 ha, and Italy with 896,630 ha. Pang, Y. [3] reported that 111,446 forest fires occurred in China between 2003 and 2018, consuming 3,289,500 hectares of land. These studies show that forest fires have consumed large areas of land and are increasing, so methods for detecting them early are needed. A forest fire begins small before it grows and becomes uncontrollable. Mehta, K. [4] claims that, based on data gathered over the years, 75–80% of the disasters caused by forest fires could have been avoided had the fires been recognized and addressed sooner.

Early detection and a timely response are required to limit the uncontrolled spread of forest fires and protect wider regions from their disastrous effects. A further difficulty is energy efficiency: drone operations are made more complex by the need to control energy usage, which calls for ways to balance limited energy resources against detection accuracy. The main objective is therefore a comprehensive solution that maximizes energy efficiency and improves fire detection accuracy, reducing the ecological damage caused by these fires globally. Solving the forest fire problem requires early detection that is both proactive and technologically advanced.

Several tools help in the early detection of forest fires and support correct decision making. Dampage, U. [5] proposed a system that uses a wireless sensor network to identify forest fires in their early stages. Kang, Y. [6] notes that although active fire detection products are effective at detecting forest fires, early detection has received less attention because basic threshold approaches based on contextual statistical analysis generalize poorly. Satellite images later provided a visual picture of the fire site, which can be analyzed to detect the presence of a forest fire; Kalaivani, V. [7] used a dataset from the Landsat satellite for early forest fire detection. Drones then offered further capabilities. According to Yandouzi, M. [8], drones are distinguished by their ability to fly at low altitudes, collect more accurate data, and operate at low cost. Yandouzi, M. also notes that satellites cannot detect small fires and may deliver images only every few days or weeks, whereas drones can send images every day. Drones therefore have the potential to assist in early forest fire detection, and many studies have demonstrated their successful use for this purpose. Mashraqi, A. M. [9] proposed a model for the early detection of forest fires using RGB images taken from drones, while Wang, Y. [10] proposed a model using IR images. Chen, X. [11] tested various models whose inputs are both RGB and IR images.

A system for the early detection of forest fires must also address the problem of the drones’ unstable battery power consumption. Energy-efficient routing protocols can manage a group of drones so that they use energy as efficiently as possible by organizing the work among them, and many studies have applied such protocols to drones. The Low-Energy Adaptive Clustering Hierarchy (LEACH) protocol saves energy through cluster heads: the other drones transmit information only to their cluster head, and the cluster head role rotates after a specific time frame [12,13,14]. Optimized Link State Routing (OLSR) reduces energy consumption by finding the shortest route [15,16]. ECP-LEACH is a recently proposed energy-efficient protocol built on two components, a threshold monitoring module and a sleep scheduling module [17].
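The cluster-head rotation mentioned above follows a well-known rule from the original LEACH design: in round r, a node that has not yet served as cluster head in the current epoch of 1/p rounds elects itself if a uniform random number falls below T(n) = p / (1 - p · (r mod 1/p)), where p is the desired fraction of cluster heads. The sketch below illustrates only that classic rule; it does not model the ECP-LEACH additions, and the function names are hypothetical.

```python
import random


def leach_threshold(p: float, r: int) -> float:
    """Classic LEACH threshold T(n) for a node that is still eligible in round r."""
    epoch = round(1 / p)          # number of rounds in which every node serves once
    rem = r % epoch
    if rem == epoch - 1:
        # Last round of the epoch: the formula gives exactly 1, so every
        # remaining node must serve (set explicitly to avoid float rounding).
        return 1.0
    return p / (1 - p * rem)


def elect_cluster_heads(node_ids, p, r, last_ch_round, rng):
    """Return the nodes that elect themselves cluster head in round r.

    last_ch_round maps node id -> most recent round it served as cluster head.
    A node is eligible only if it has not served yet in the current epoch.
    """
    epoch = round(1 / p)
    epoch_start = r - (r % epoch)
    t = leach_threshold(p, r)
    heads = []
    for n in node_ids:
        last = last_ch_round.get(n)
        eligible = last is None or last < epoch_start
        if eligible and rng.random() < t:
            heads.append(n)
            last_ch_round[n] = r
    return heads
```

Because T(n) rises to 1 in the last round of each epoch, every node serves as cluster head exactly once per epoch, which spreads the extra energy cost of the cluster-head role across the network.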

Early forest fire detection depends on the strategic integration of accurate detection models and energy-efficient protocols. Detection algorithms are useful for early warning only if their results are accurate, because they must reliably identify forest fires. At the same time, energy-efficient measures are needed so that monitoring can be sustained over the long term. Accurate detection algorithms and energy-efficient protocols therefore work together to maximize monitoring coverage and fire detection capability. This paper goes beyond studies that address either aspect individually by introducing a system that combines accurate forest fire detection with a deliberate focus on energy efficiency.

In Section 2, the protocol used for drones in the system is presented. In Section 3, the Intermediate Fusion VGG16 model is proposed for forest fire detection. In Section 4, the Forest Defender Fusion System is proposed. In Section 5, a simulation is described. The results are displayed and discussed in Section 6. In Section 7, the conclusion of the paper is presented.

2. Routing Protocol for Drones

In our previous work [17], we showed that the ECP-LEACH protocol achieves significant energy savings compared with the LEACH protocol. Energy consumption was compared for three network sizes (50, 100, and 150 nodes) under different duty cycles, with each node starting with an initial energy of 18,720 joules. Table 1 shows the average energy consumed with the default LEACH protocol, and Table 2 shows the average energy consumed with the enhanced protocol (ECP-LEACH).

Table 1.

Average energy consumed when using the default LEACH protocol.

Table 2.

Average energy consumed when using ECP-LEACH protocol.

To detect forest fires early, drones must operate for most of the day, sending images for fire detection. This consumes a large amount of battery power, so this paper adopts the ECP-LEACH protocol to save battery power. ECP-LEACH has two basic parts: the threshold monitoring unit and the sleep scheduling unit. The threshold monitoring unit continuously tracks the power consumption rate of each drone, and the protocol puts a drone into sleep mode when its consumption exceeds a specified threshold. These decisions ensure that battery power is used efficiently and not wasted.

The energy available to drones is limited, and the goals are to conserve energy and extend the network’s lifetime. Drones can be put into sleep mode to conserve energy when they are not needed for data transmission, movement, or taking photos. However, it can be difficult to decide when to do so. To overcome this difficulty, ECP-LEACH includes a threshold unit that compares each drone’s energy consumption with a preset threshold and puts the drone into sleep mode if the threshold is exceeded.

During each round, the threshold monitoring unit signals a drone to go to sleep if its energy consumption exceeds the predefined threshold. Basing this decision on each drone’s own consumption rate helps prevent energy waste. The sleep scheduling unit then schedules the drones’ sleep modes based on these signals, using an algorithm that takes into account variables such as energy levels and communication history. By using a threshold to put drones into sleep mode, the protocol conserves energy, which extends the network lifetime and lowers overall energy consumption.

This approach conserves energy without compromising network connectivity, which is especially useful in applications such as environmental monitoring and forest fire detection. Crucially, the threshold and sleep scheduling units apply only to drones that are not cluster heads, because cluster heads are responsible for network traffic and data distribution. Exempting cluster heads prevents potential connectivity problems and keeps the network operating smoothly and effectively.
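The interaction between the threshold monitoring unit and the sleep scheduling unit described above can be sketched as follows. This is a simplified illustration, not the ECP-LEACH implementation from [17]: the per-round threshold value, the consumption figures, and the rule that a flagged drone sleeps for exactly one round are assumptions, and the scheduler here ignores communication history. Only the 18,720 J initial energy used in the usage test comes from [17].

```python
from dataclasses import dataclass


@dataclass
class Drone:
    drone_id: int
    energy_j: float                    # remaining battery energy in joules
    is_cluster_head: bool = False
    asleep: bool = False
    consumed_this_round_j: float = 0.0


def threshold_monitor(drones, threshold_j):
    """Flag non-cluster-head drones whose consumption this round exceeded the threshold."""
    return [d.drone_id for d in drones
            if not d.is_cluster_head and d.consumed_this_round_j > threshold_j]


def sleep_scheduler(drones, flagged_ids):
    """Put flagged drones to sleep for the next round and keep the rest awake."""
    flagged = set(flagged_ids)
    for d in drones:
        # Cluster heads carry network traffic, so they are never put to sleep.
        d.asleep = d.drone_id in flagged and not d.is_cluster_head
        d.consumed_this_round_j = 0.0  # reset the measurement for the next round


def run_round(drones, consumption_j, threshold_j):
    """Debit each drone's energy for one round, then apply monitor and scheduler.

    consumption_j maps drone id -> joules spent this round (hypothetical input).
    """
    for d in drones:
        spent = consumption_j.get(d.drone_id, 0.0)
        d.energy_j -= spent
        d.consumed_this_round_j = spent
    flagged = threshold_monitor(drones, threshold_j)
    sleep_scheduler(drones, flagged)
    return flagged
```

In a usage such as `run_round(drones, {0: 9.0, 1: 8.0, 2: 2.0}, threshold_j=5.0)` with drone 0 as cluster head, only drone 1 is put to sleep: drone 0 exceeds the threshold but is exempt as a cluster head.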

An early detection system for forest fires must take the drones’ energy consumption into account to keep them operating for as long as possible. The ECP-LEACH protocol is therefore well suited to the early detection of forest fires.

3. Intermediate Fusion VGG16 Model for Detecting Forest Fires

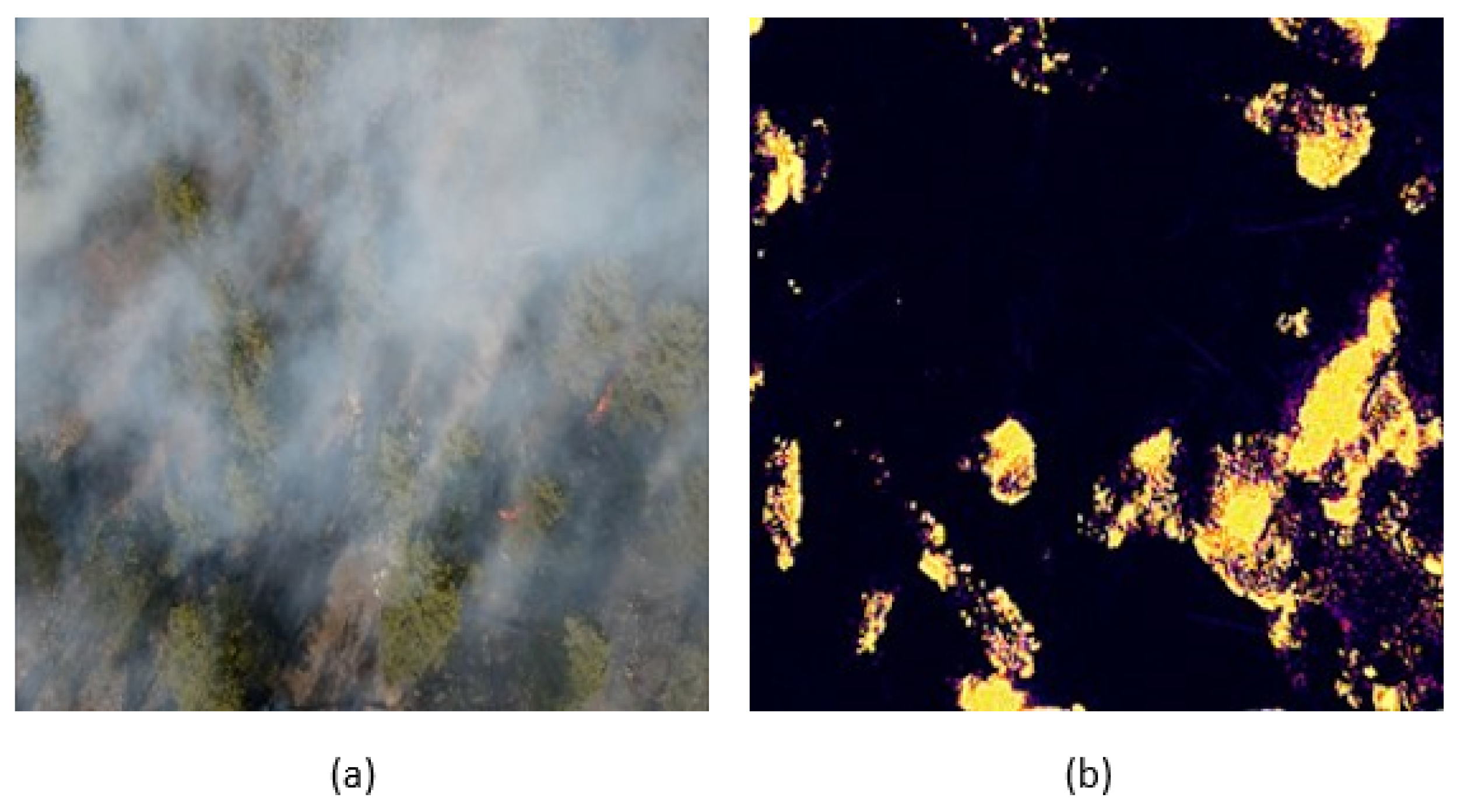

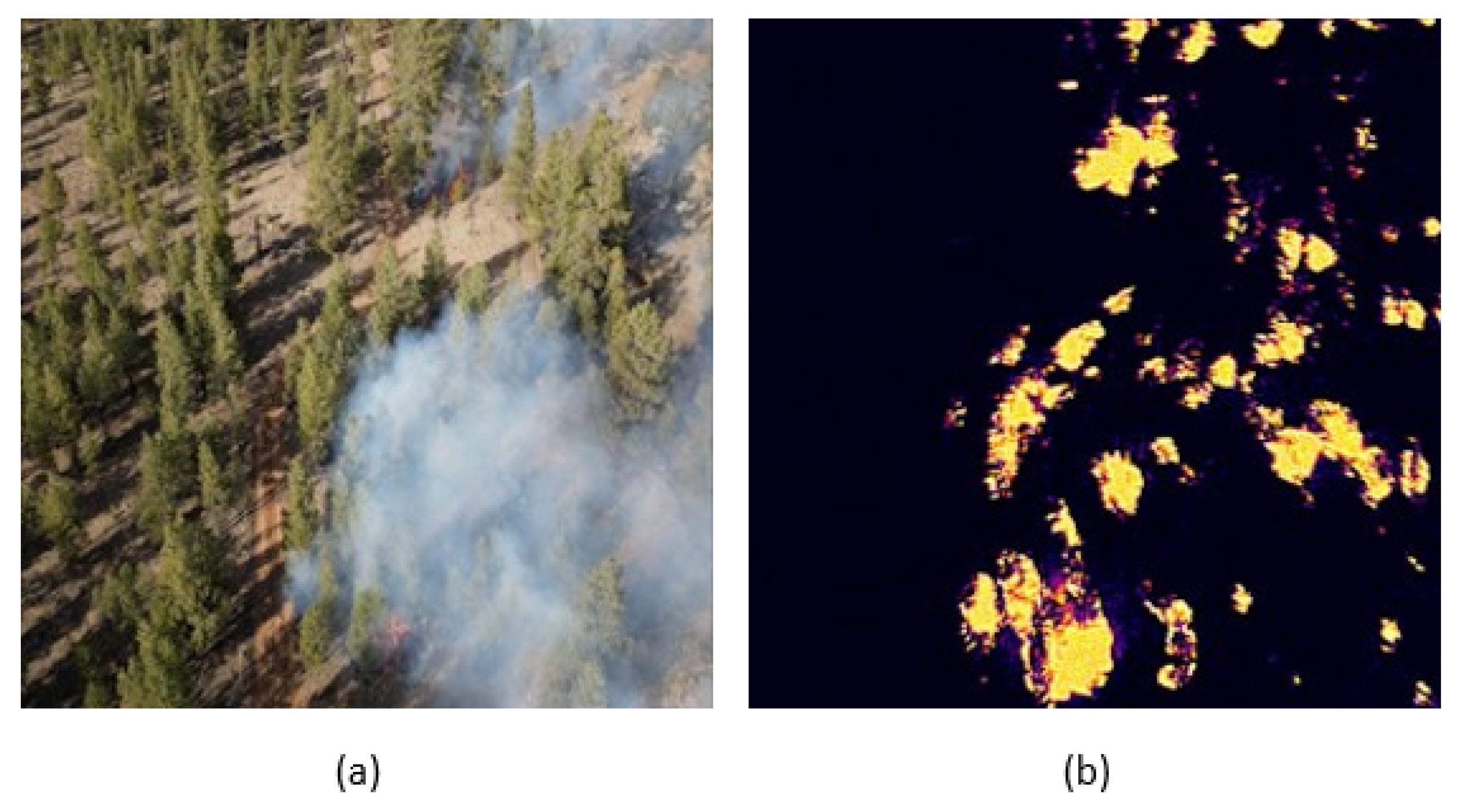

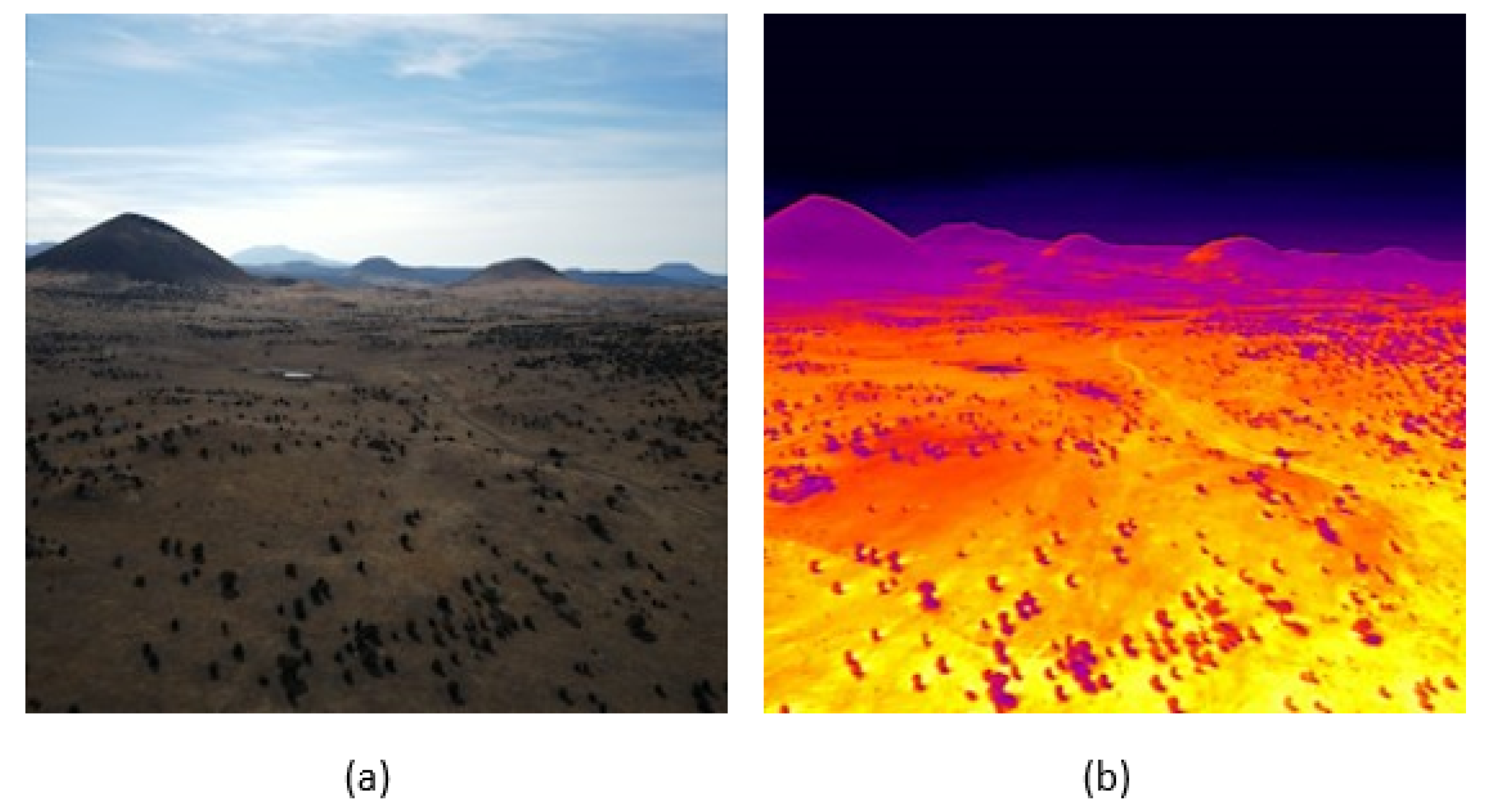

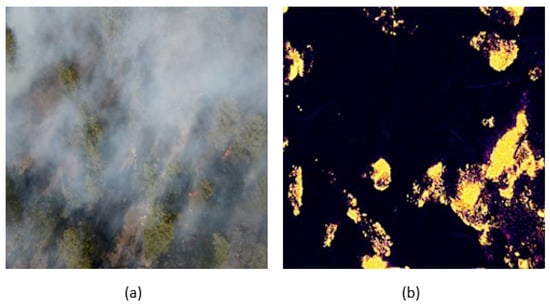

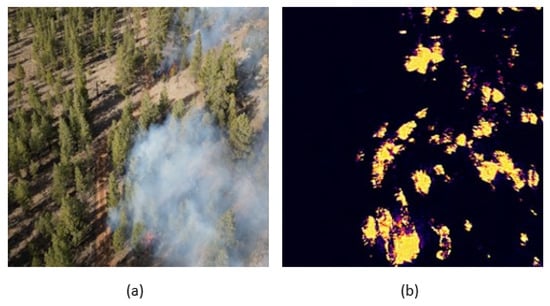

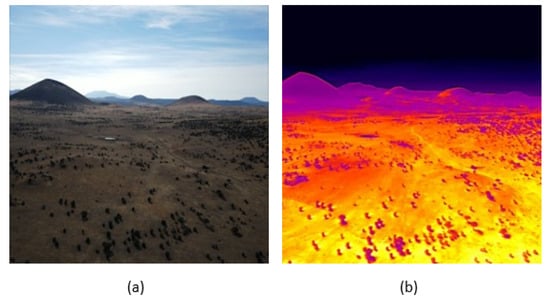

The lack of high-quality, well-annotated forest fire datasets has hampered the development of drone-based fire monitoring systems, partly because of restrictions on flying drones over planned wildfires. In November 2021, the FLAME 2 dataset was created by Fule, P. F., et al. [18], who used drones to record pairs of visible-spectrum and IR video streams simultaneously during a planned open-canopy fire in northern Arizona. FLAME 2 has two main components: the main dataset and the supplementary dataset. The main dataset consists of seven unlabeled raw RGB/IR video pairs, a set of 254 × 254 pixel RGB/IR frame pairs, and a collection of original-resolution RGB/IR frame pairs. Both sets of frame pairs were extracted from the seven raw video pairs and labeled frame by frame. The “Fire/No Fire” label of each pair indicates whether there is fire in the RGB or IR image, and the “Smoke/No Smoke” label indicates whether smoke occupies at least 50% of the RGB image. The supplementary dataset consists of weather data, raw pre-burn videos, a geo-referenced pre-burn point cloud, a burn plan, an RGB pre-burn orthomosaic, and other data, which give context for the aerial imagery in the main dataset. The set of 254 × 254 pixel RGB/IR frame pairs was used to train the forest fire detection model. It contains 25,434 images labeled Fire/Smoke (Figure 1), 14,317 labeled Fire/No Smoke (Figure 2), and 13,700 labeled No Fire/No Smoke (Figure 3).
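The three training classes can be derived from the two binary FLAME 2 labels, and the class counts above give the class balance of the training set. The sketch below shows one way to do this; the class index order and the helper name are assumptions, not taken from the paper.

```python
# Hypothetical class order for the three-way classifier.
CLASSES = ("Fire/Smoke", "Fire/No Smoke", "No Fire/No Smoke")


def to_class_index(fire: bool, smoke: bool) -> int:
    """Map the two binary FLAME 2 labels of a frame pair to a class index."""
    if fire and smoke:
        return 0
    if fire:
        return 1
    if not smoke:
        return 2
    # No Fire/Smoke is not one of the three classes listed for the training set.
    raise ValueError("No Fire/Smoke samples are outside the three training classes")


# Class sizes of the 254 x 254 frame-pair subset, as reported in the text.
COUNTS = {"Fire/Smoke": 25_434, "Fire/No Smoke": 14_317, "No Fire/No Smoke": 13_700}
TOTAL = sum(COUNTS.values())                        # 53,451 samples in total
SHARES = {name: n / TOTAL for name, n in COUNTS.items()}
```

About 48% of the samples are Fire/Smoke, and roughly a quarter each fall into the other two classes, so the set is moderately imbalanced toward the fire-with-smoke case.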

Figure 1.

Sample of class Fire/Smoke: (a) shows the RGB image. (b) shows the IR image.

Figure 2.

Sample of class Fire/No Smoke: (a) shows the RGB image. (b) shows the IR image.

Figure 3.

Sample of class No Fire/No Smoke: (a) shows the RGB image. (b) shows the IR image.

The first preprocessing stage transforms the images into the form expected by the model. The images are converted from the commonly used RGB (red, green, blue) channel order to BGR (blue, green, red), and each BGR color channel then undergoes zero-centering: each pixel’s intensity values are shifted so that the channel mean becomes zero while the original pixel value scale is preserved.

When a deep learning model has two or more inputs, one way to combine them is fusion. Stahlschmidt, S. R. [19] classified fusion methods into three categories according to the stage at which the inputs are fused: early, intermediate, and late. Early fusion concatenates the original input data and treats the resulting vector as a single unimodal input, so the network architecture does not distinguish between the modalities the features come from. Intermediate fusion does not fuse the original multimodal input; instead, it fuses marginal representations learned in the form of feature vectors. Intermediate fusion can be homogeneous or heterogeneous: in homogeneous intermediate fusion, neural networks of the same type (convolutional, fully connected, etc.) learn the marginal representations, while in heterogeneous fusion, the marginal representations are learned by different network types. In late fusion, the decisions of separate unimodal submodels are integrated into a final decision rather than merging the original data or learned features.
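The RGB-to-BGR conversion and per-channel zero-centering described above match the “caffe” preprocessing mode that Keras applies for VGG16 (`tf.keras.applications.vgg16.preprocess_input`), which subtracts fixed ImageNet channel means rather than each image’s own mean. Assuming the authors used that convention, a NumPy equivalent looks like this; the function name is hypothetical.

```python
import numpy as np

# Per-channel ImageNet means in BGR order, as used by Keras' "caffe"-style
# VGG16 preprocessing (assumption: the paper followed this convention).
IMAGENET_BGR_MEAN = np.array([103.939, 116.779, 123.68], dtype=np.float32)


def vgg16_caffe_preprocess(rgb: np.ndarray) -> np.ndarray:
    """Convert an (H, W, 3) RGB image to BGR and zero-center each channel.

    Pixel values keep their original 0-255 scale; only the channel means shift.
    """
    bgr = rgb[..., ::-1].astype(np.float32)  # reverse the channel axis: RGB -> BGR
    return bgr - IMAGENET_BGR_MEAN            # broadcast subtraction, one mean per channel
```

Under this convention the channel means are zero with respect to the ImageNet training data, not necessarily for each individual FLAME 2 frame.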

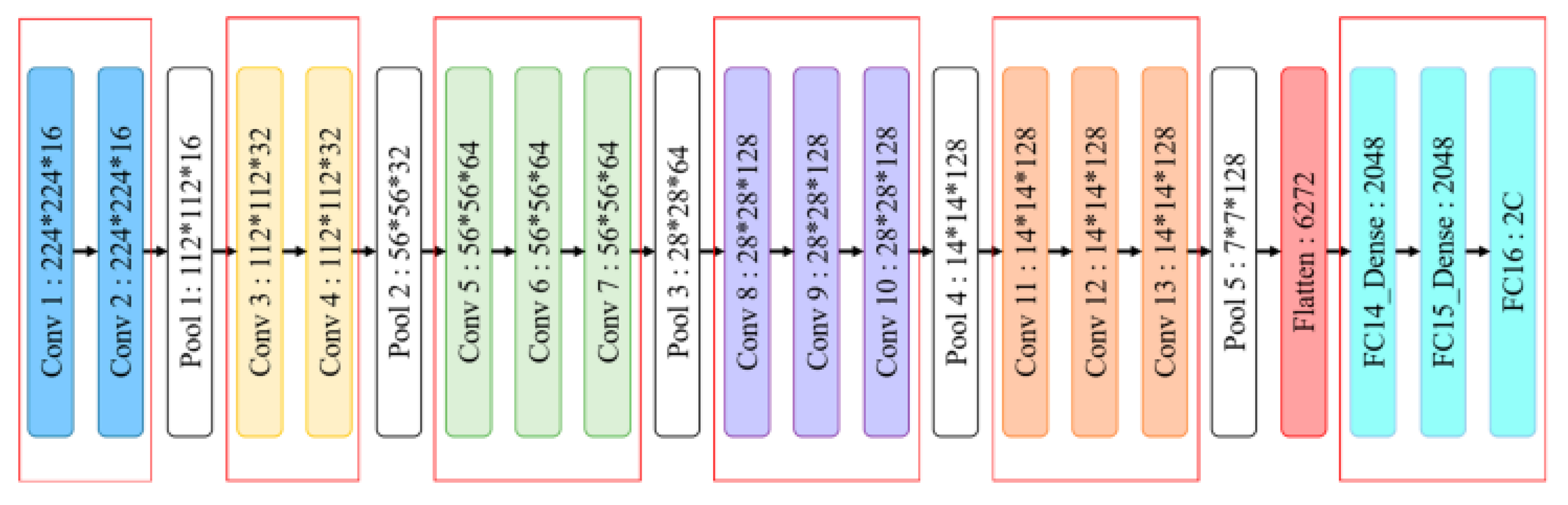

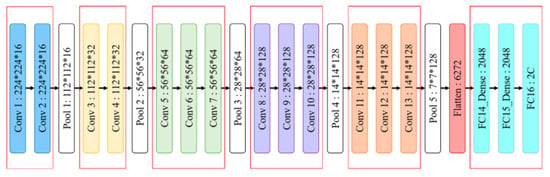

VGG16 is one of the most popular deep learning models for image classification. As shown in Figure 4, it has two parts: a convolutional section with thirteen convolutional layers and five max-pooling layers, and a dense section with three fully connected layers. According to [20], the simple architecture of VGG16 allows it to produce outputs quickly, which is important for forest fire detection.
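The layer counts above, and the size of the feature map each VGG16 branch produces for the 254 × 254 FLAME 2 frames, can be checked with a short calculation. The sketch assumes the standard VGG16 configuration (3 × 3 convolutions with “same” padding, 2 × 2 max pooling with stride 2); how the authors turn the final feature map into a vector (flattening or global pooling) is not stated in the text.

```python
# Standard VGG16 convolutional section: integers are output channels of 3x3
# convolutions ("same" padding, stride 1); "M" is a 2x2 max-pool with stride 2.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def vgg16_feature_shape(height: int, width: int):
    """Return (h, w, channels) after the VGG16 convolutional section."""
    channels = 3
    for layer in VGG16_CFG:
        if layer == "M":
            height, width = height // 2, width // 2   # "valid" pooling floors odd sizes
        else:
            channels = layer                            # "same" conv keeps h and w
    return height, width, channels


n_conv = sum(1 for layer in VGG16_CFG if layer != "M")
n_pool = VGG16_CFG.count("M")
```

A 254 × 254 input shrinks to 127, 63, 31, 15, and finally 7 pixels per side, so each branch ends with a 7 × 7 × 512 map: 25,088 values if flattened, or 512 if globally average-pooled.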

Figure 4.

VGG16 architecture [21].

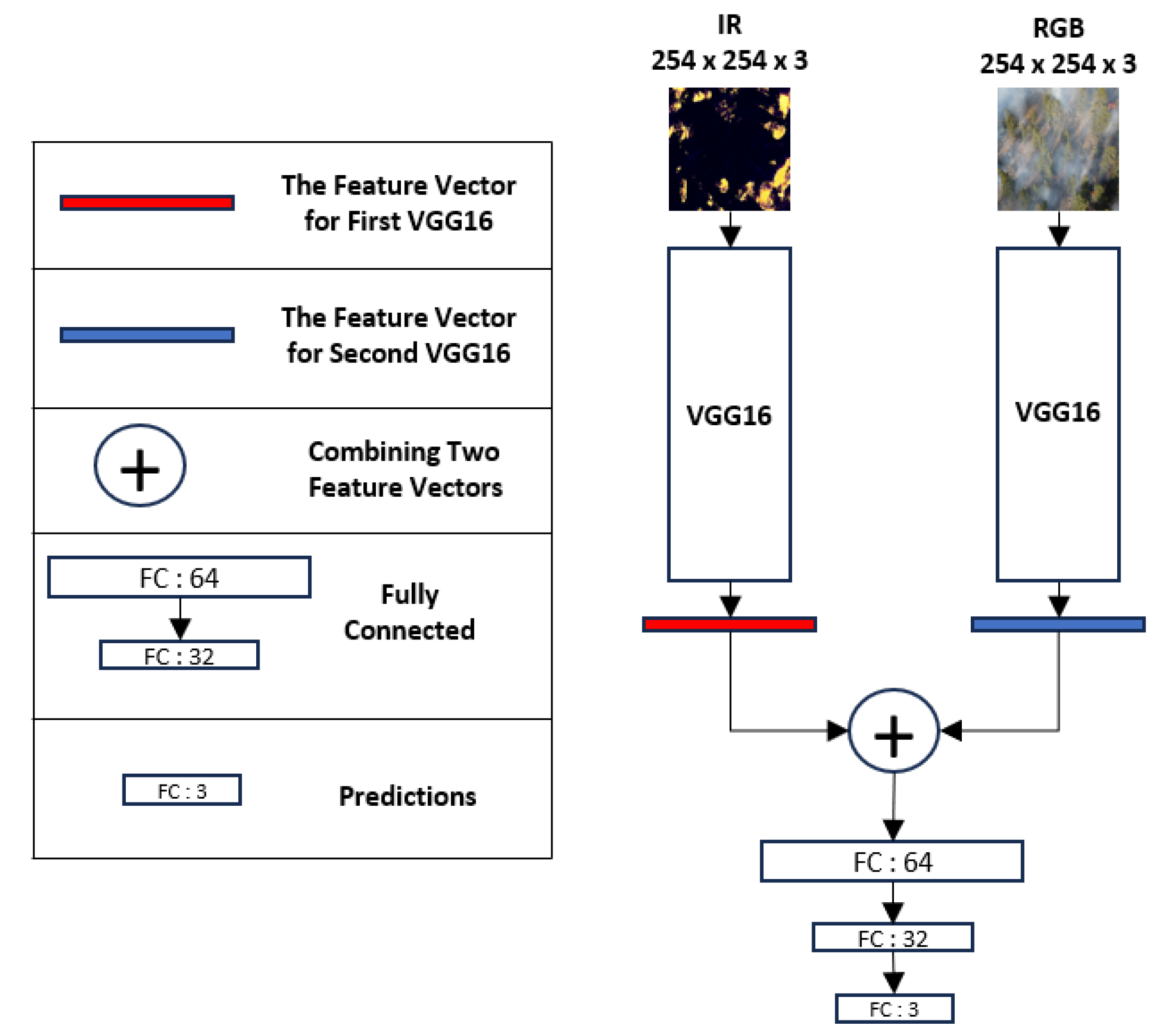

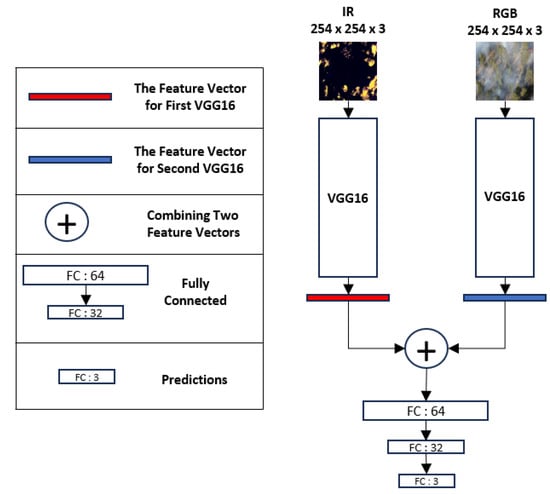

The proposed fire detection model is a homogeneous intermediate fusion model called Intermediate Fusion VGG16. It comprises two VGG16 models: the first receives an IR image as input and the second receives an RGB image. Each VGG16 produces a feature vector, and the two vectors are concatenated into a single vector. This vector is fed into a fully connected layer with 64 neurons and a ReLU activation function, followed by a fully connected layer with 32 neurons and a ReLU activation function. The final layer is a fully connected layer with three neurons and a softmax activation function that outputs the predictions. Figure 5 depicts the architecture of the forest fire detection model used in the Forest Defender Fusion system.
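The fusion head described above can be illustrated with a forward pass in NumPy. In this sketch the two VGG16 branches are replaced by precomputed feature vectors (in practice these could come from, for example, Keras `tf.keras.applications.VGG16(include_top=False)` backbones); the 512-dimensional feature size, the He-style weight initialization, and all function names are assumptions. Only inference with untrained weights is shown.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def softmax(x):
    z = x - x.max(axis=1, keepdims=True)   # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def init_dense(rng, n_in, n_out):
    # He-style initialization (assumption; the paper does not specify one).
    return rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out)), np.zeros(n_out)


def make_fusion_head(feat_dim, rng):
    """Weights for concat(IR, RGB) -> Dense(64, ReLU) -> Dense(32, ReLU) -> Dense(3, softmax)."""
    return [init_dense(rng, 2 * feat_dim, 64),
            init_dense(rng, 64, 32),
            init_dense(rng, 32, 3)]


def fusion_head_forward(params, ir_feats, rgb_feats):
    """Return class probabilities for a batch of (IR, RGB) feature vectors."""
    x = np.concatenate([ir_feats, rgb_feats], axis=1)  # intermediate fusion of feature vectors
    (w1, b1), (w2, b2), (w3, b3) = params
    x = relu(x @ w1 + b1)
    x = relu(x @ w2 + b2)
    return softmax(x @ w3 + b3)                         # probabilities over the three classes
```

Concatenating the two vectors before the dense layers is what makes this intermediate rather than early or late fusion: each modality is first reduced to features by its own branch, but the decision is made jointly.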

Figure 5.

Forest fire detection model architecture.

4. The Forest Defender Fusion System

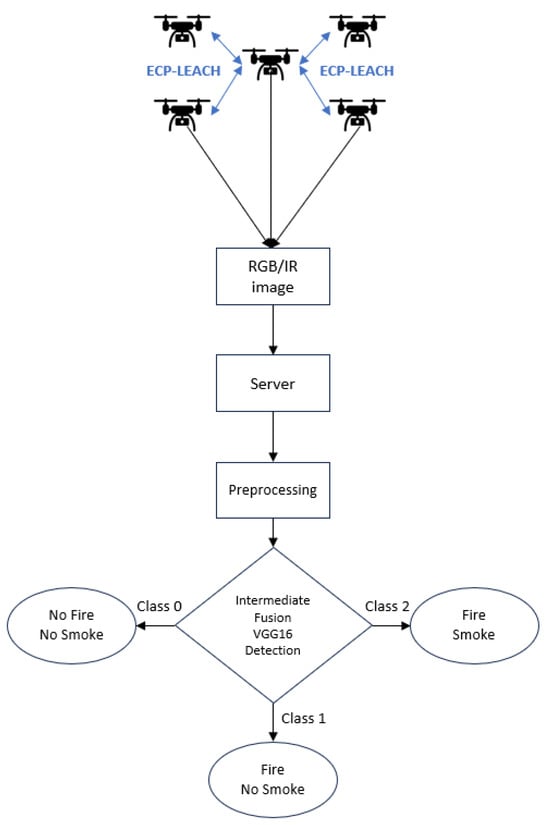

Forest fire early detection systems should use up-to-date equipment and achieve high detection accuracy to avoid alarm delays and the growth of the fire. This paper proposes such a system, called the Forest Defender Fusion System, which integrates drones, an energy-efficient routing protocol, and an accurate detection model into a powerful and accurate system for the early detection of forest fires.

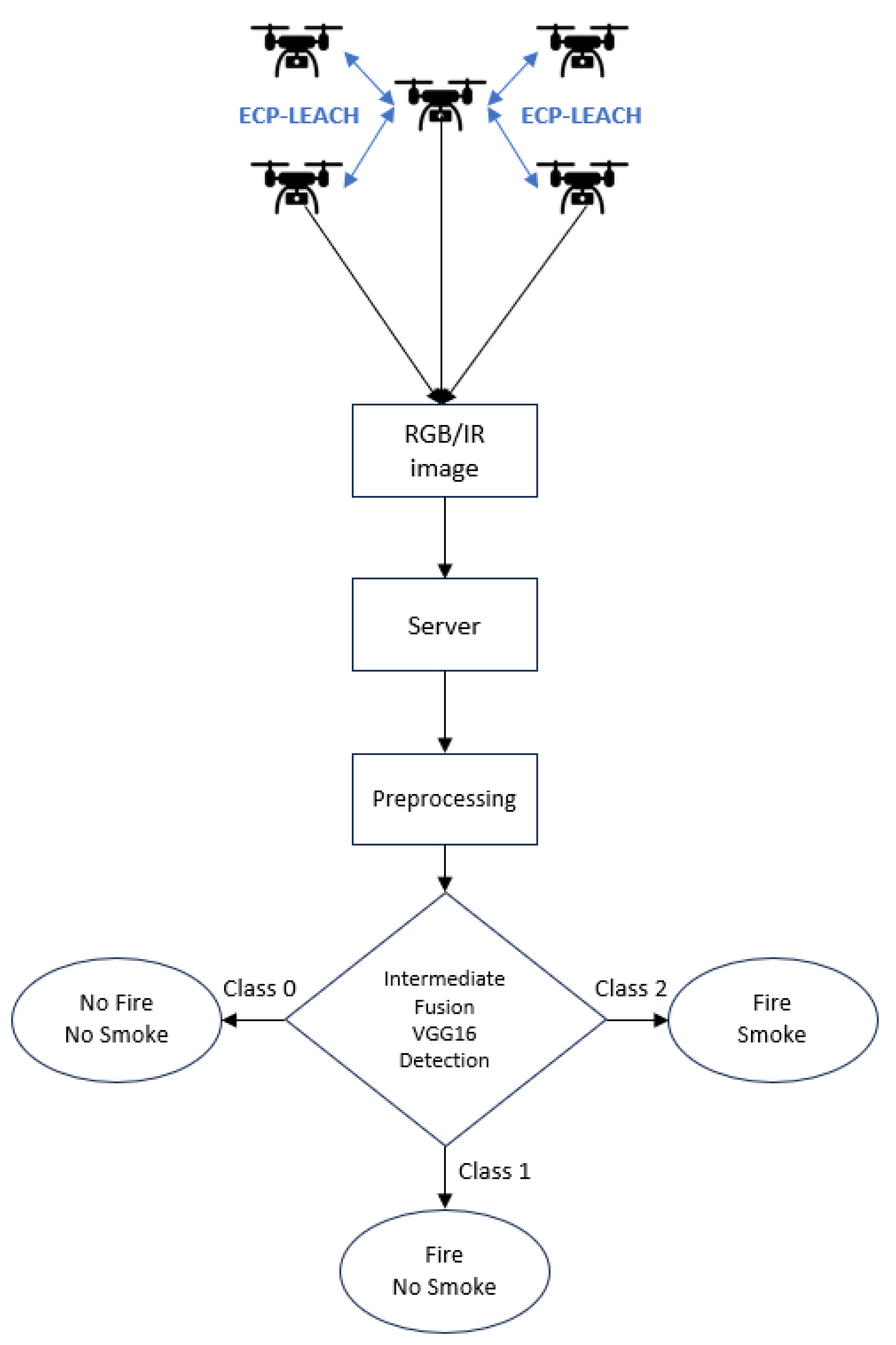

Drones are a valuable part of the system because of their distinctive capabilities. The ECP-LEACH protocol lets drones operate for longer and thus monitor the area for fires over a longer period; it puts drones into sleep mode through its threshold monitoring unit. The drones continuously transmit RGB and IR images to the base station according to the schedule set by ECP-LEACH. The base station forwards the images to the cloud, which in turn sends them to the server. The server hosts the Intermediate Fusion VGG16 model, which determines whether there is a forest fire: each image of a pair (RGB and IR) is fed into its own VGG16 branch to form a feature vector, and the two vectors are combined to reach a decision. If a fire is detected, the system can alert the relevant authorities to the presence of a forest fire in the area of the drone that sent the images so that the fire can be extinguished quickly. Figure 6 shows the flowchart of the Forest Defender Fusion system.
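The data flow and alerting logic described above can be summarized in a small Python sketch. The `ImagePair` type, the `classify` and `send_alert` callables, and the class order are hypothetical stand-ins for the drones’ transmissions, the Intermediate Fusion VGG16 model, and the alerting channel; network transport is reduced to recording the hops.

```python
from dataclasses import dataclass, field
from typing import List

CLASSES = ("Fire/Smoke", "Fire/No Smoke", "No Fire/No Smoke")
FIRE_CLASSES = {"Fire/Smoke", "Fire/No Smoke"}   # both fire classes trigger an alert


@dataclass
class ImagePair:
    drone_id: int
    location: tuple        # (x, y) position of the drone in metres
    rgb: object            # RGB frame (any array-like)
    ir: object             # IR frame captured at the same time
    route: List[str] = field(default_factory=list)


def forward(pair, hops=("base station", "cloud", "server")):
    """Record the relay path drone -> base station -> cloud -> server."""
    for hop in hops:
        pair.route.append(hop)
    return pair


def handle_at_server(pair, classify, send_alert):
    """Classify an image pair on the server and alert only for fire classes.

    classify: callable (rgb, ir) -> class index, standing in for the model.
    send_alert: callable (drone_id, location, label) -> None.
    """
    label = CLASSES[classify(pair.rgb, pair.ir)]
    if label in FIRE_CLASSES:
        send_alert(pair.drone_id, pair.location, label)
    return label
```

Passing the model and the alert channel in as callables keeps the routing logic independent of how the classifier is deployed on the server.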

Figure 6.

Forest Defender Fusion system flowchart.

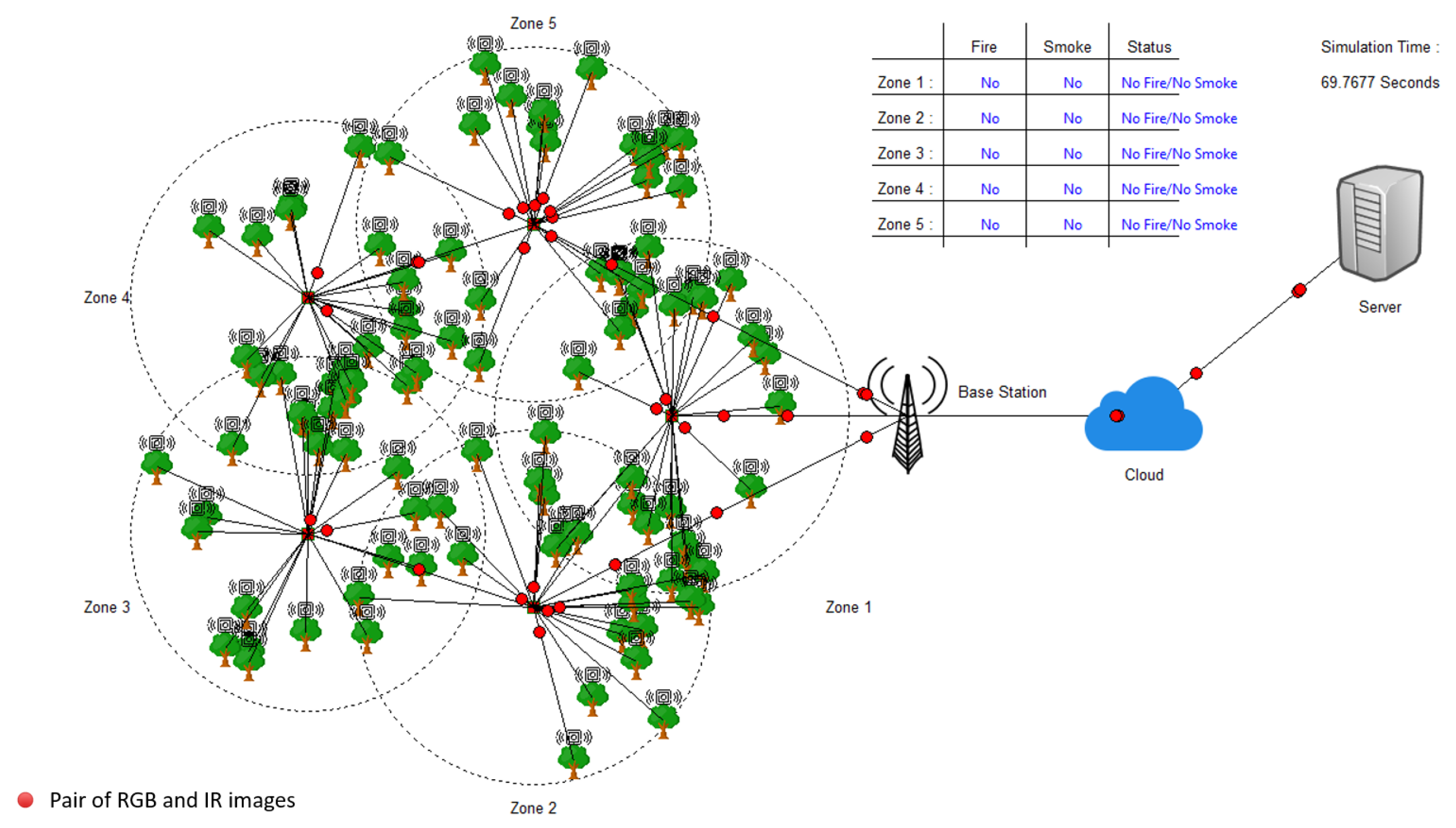

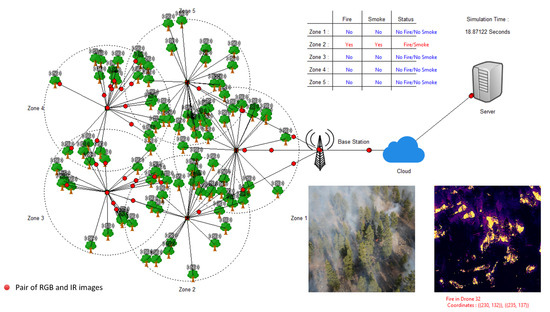

5. Deploying the Forest Defender Fusion System in Simulation

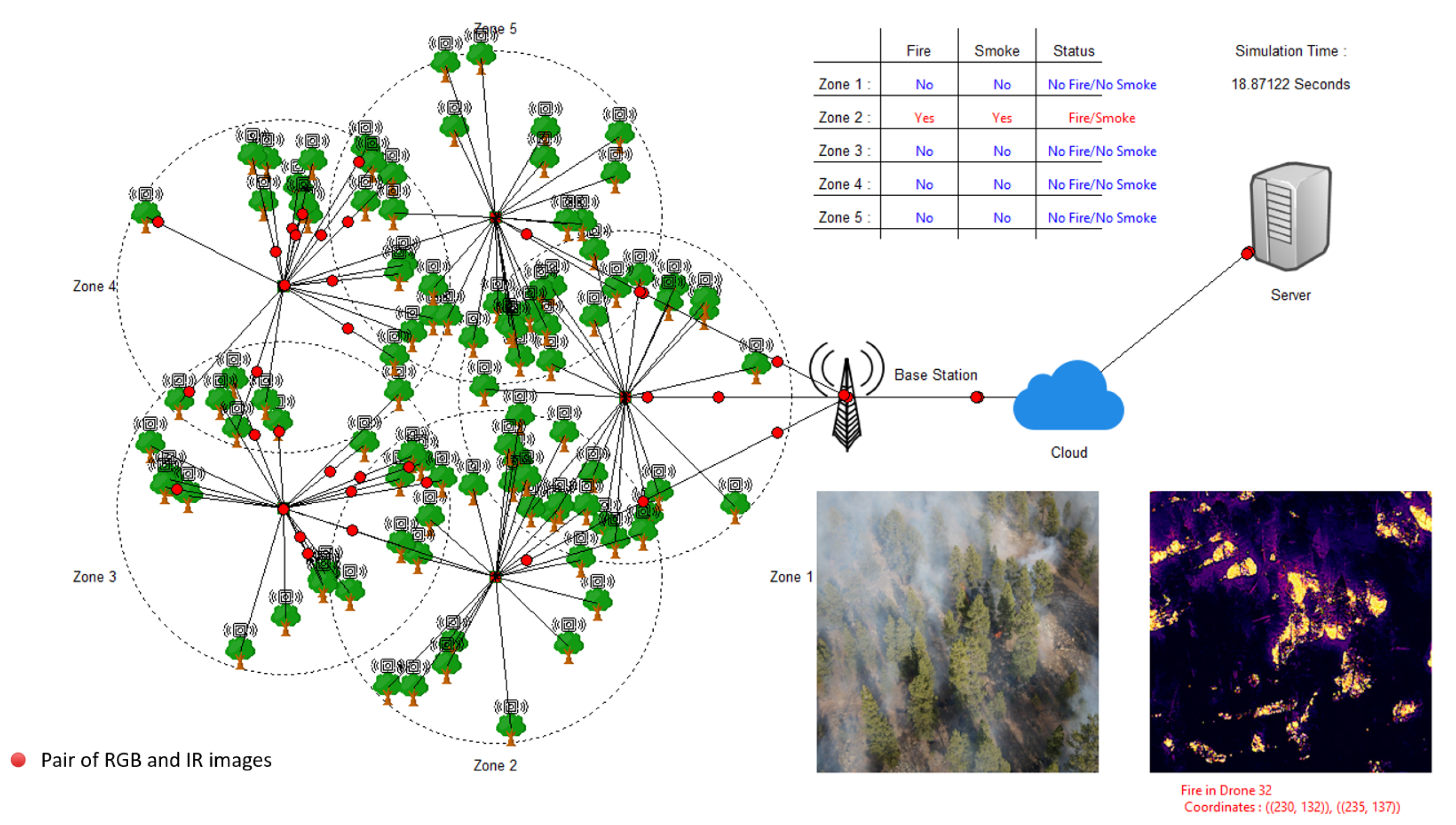

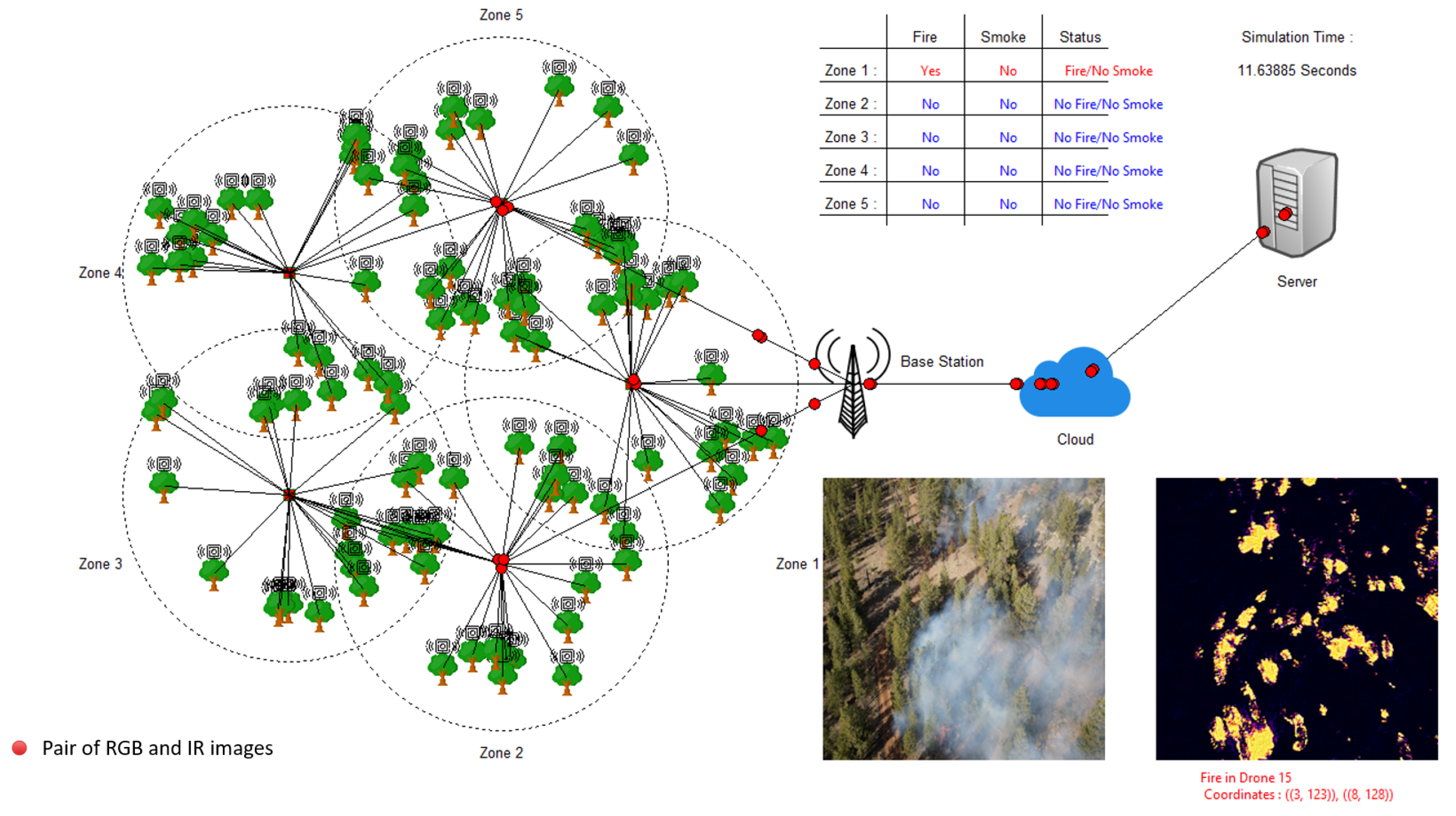

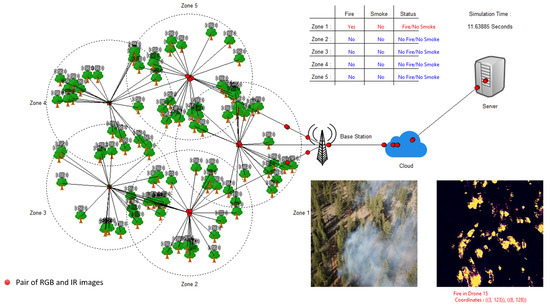

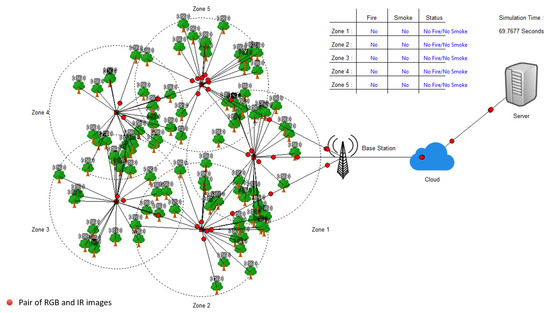

To demonstrate the Forest Defender Fusion system, a simulation was implemented in Python 3.11.4. The simulation consists of 100 drones that communicate using the ECP-LEACH protocol, distributed across five zones within a 2000 m × 2000 m area. The drones continuously send RGB and IR images to the base station, which forwards them to the cloud; the cloud transmits them to the server that hosts the Intermediate Fusion VGG16 model. On arrival at the server, the images are preprocessed and then classified by the Intermediate Fusion VGG16. The simulation parameters are presented in Table 3.
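The deployment of the 100 drones across five zones can be sketched as follows. Table 3 lists the actual simulation parameters; the zone geometry used here (five equal 400 m wide vertical strips with 20 drones each, placed uniformly at random) and the function name are assumptions for illustration only.

```python
import random

AREA_M = 2000.0     # side of the square monitoring area in metres (from the text)
N_DRONES = 100      # from the text
N_ZONES = 5         # from the text


def deploy_drones(seed=0):
    """Place drones uniformly at random, an equal number per zone.

    Assumption: zones are equal vertical strips of the area; the paper does
    not specify the zone geometry.
    """
    rng = random.Random(seed)
    strip = AREA_M / N_ZONES
    per_zone = N_DRONES // N_ZONES
    drones = []
    for zone in range(N_ZONES):
        for _ in range(per_zone):
            x = rng.uniform(zone * strip, (zone + 1) * strip)
            y = rng.uniform(0.0, AREA_M)
            drones.append({"drone": len(drones) + 1, "zone": zone + 1, "x": x, "y": y})
    return drones
```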

Table 3.

Network parameters.

The Intermediate Fusion VGG16 classifies each image pair into one of three situations: (1) There is fire and smoke. In this case, the RGB and IR images are displayed together with the drone’s location so that the forest fire can be extinguished, as shown in Figure 7.

Figure 7.

Fire/Smoke detection.

(2) There is fire but no smoke. This also represents a forest fire, so the RGB and IR images are displayed with the drone’s location so that the fire can be extinguished, as shown in Figure 8.

Figure 8.

Fire/No Smoke detection.

(3) There is no fire and no smoke. This case indicates that there is no forest fire, so no action is taken, as shown in Figure 9. This simulation was carried out to show how the system would work in the real world.

Figure 9.

No Fire/No Smoke detection.

6. Results and Discussions

This section analyzes the proposed Forest Defender Fusion System in detail by comparing it with fusion methods and with other forest fire detection models. Four performance metrics, namely accuracy, precision, recall, and F1 score, were used to evaluate the proposed system. These metrics measure how accurately the model detects forest fires while keeping false positives and false negatives low. The following subsections compare performance in detail and highlight the advantages and disadvantages of each approach.

6.1. Performance Metrics

Accuracy measures the overall correctness of the model as the percentage of correctly predicted instances out of all instances. It is calculated using Equation (1):

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

Precision is the ratio of correctly predicted positive observations to all predicted positives; it shows how many of the predicted positive instances are truly positive. It is calculated using Equation (2):

$$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

Recall quantifies the model’s ability to find all relevant instances. It is the ratio of correctly predicted positive observations to all actual positives, that is, how many of the actual positive cases were correctly predicted. It is calculated using Equation (3):

$$\text{Recall} = \frac{TP}{TP + FN} \tag{3}$$

The F1 score is the harmonic mean of precision and recall and balances the two. It is calculated using Equation (4):

$$F_1 = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}$$
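Equations (1)–(4) are stated in terms of TP, TN, FP, and FN for a binary decision, whereas the detection model has three classes. A common approach, assumed here because the paper does not state its averaging method, is to compute the counts one-vs-rest for each class and then average the per-class scores weighted by class support. The sketch below does this from a confusion matrix; the function names and the toy matrix in the usage test are illustrative, not results from the paper.

```python
def per_class_metrics(cm):
    """One-vs-rest metrics per class; cm[i][j] counts true class i predicted as j."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    out = []
    for c in range(k):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(k)) - tp   # predicted c, actually another class
        fn = sum(cm[c]) - tp                         # actually c, predicted another class
        tn = total - tp - fp - fn
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        out.append({"tp": tp, "fp": fp, "fn": fn, "tn": tn, "precision": precision,
                    "recall": recall, "f1": f1, "support": tp + fn})
    return out


def summary(cm):
    """Overall accuracy plus support-weighted precision, recall, and F1."""
    stats = per_class_metrics(cm)
    total = sum(s["support"] for s in stats)
    accuracy = sum(cm[c][c] for c in range(len(cm))) / total
    weighted = {m: sum(s[m] * s["support"] for s in stats) / total
                for m in ("precision", "recall", "f1")}
    return accuracy, weighted
```

With support weighting, weighted recall is always identical to accuracy, because each class contributes (TP_c / support_c) × (support_c / N) = TP_c / N. This identity may be one reason the reported recall equals the reported accuracy (99.86%), although the paper does not state which averaging was used.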

6.2. Comparison with Fusion Methods

To evaluate the proposed system, it is compared with other fusion-method algorithms that were trained on the FLAME 2 dataset.

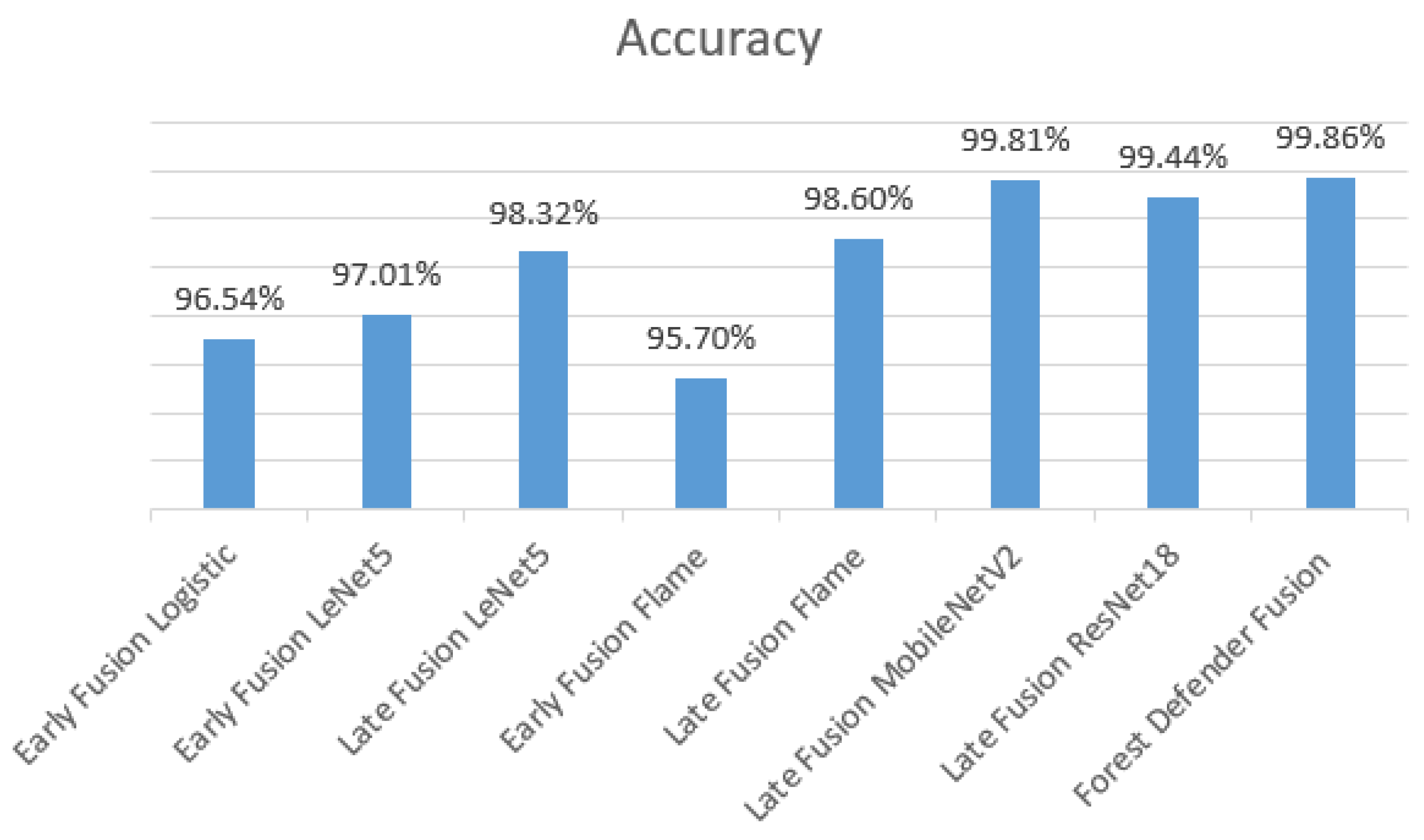

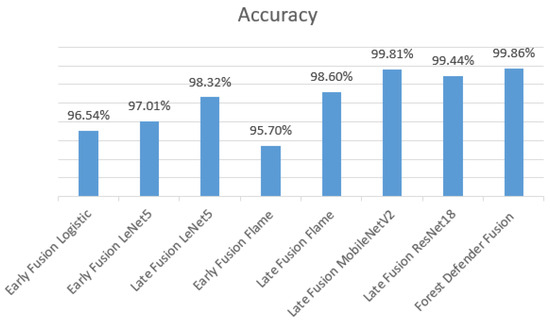

Figure 10 compares the accuracy of the Forest Defender Fusion System with that of the fusion methods evaluated by Chen, X. [11]. The Forest Defender Fusion System achieves 99.86% accuracy. The accuracies of the Early Fusion Logistic (96.54%), Early Fusion LeNet5 (97.01%), and Early Fusion Flame (95.7%) models indicate the value of merging data early in the network. The Late Fusion Flame (98.60%), Late Fusion LeNet5 (98.32%), and Late Fusion ResNet18 (99.44%) models improve accuracy further, highlighting the benefits of integrating information at later stages. Late Fusion MobileNetV2 (99.81%) outperforms the remaining competitors, which highlights the contribution of the MobileNetV2 architecture. The proposed Forest Defender Fusion System outperforms all of these models, which highlights the value of combining the VGG16 design with intermediate fusion to obtain a highly accurate solution for early detection in forest fire monitoring.

Figure 10.

Comparison of accuracy between models.

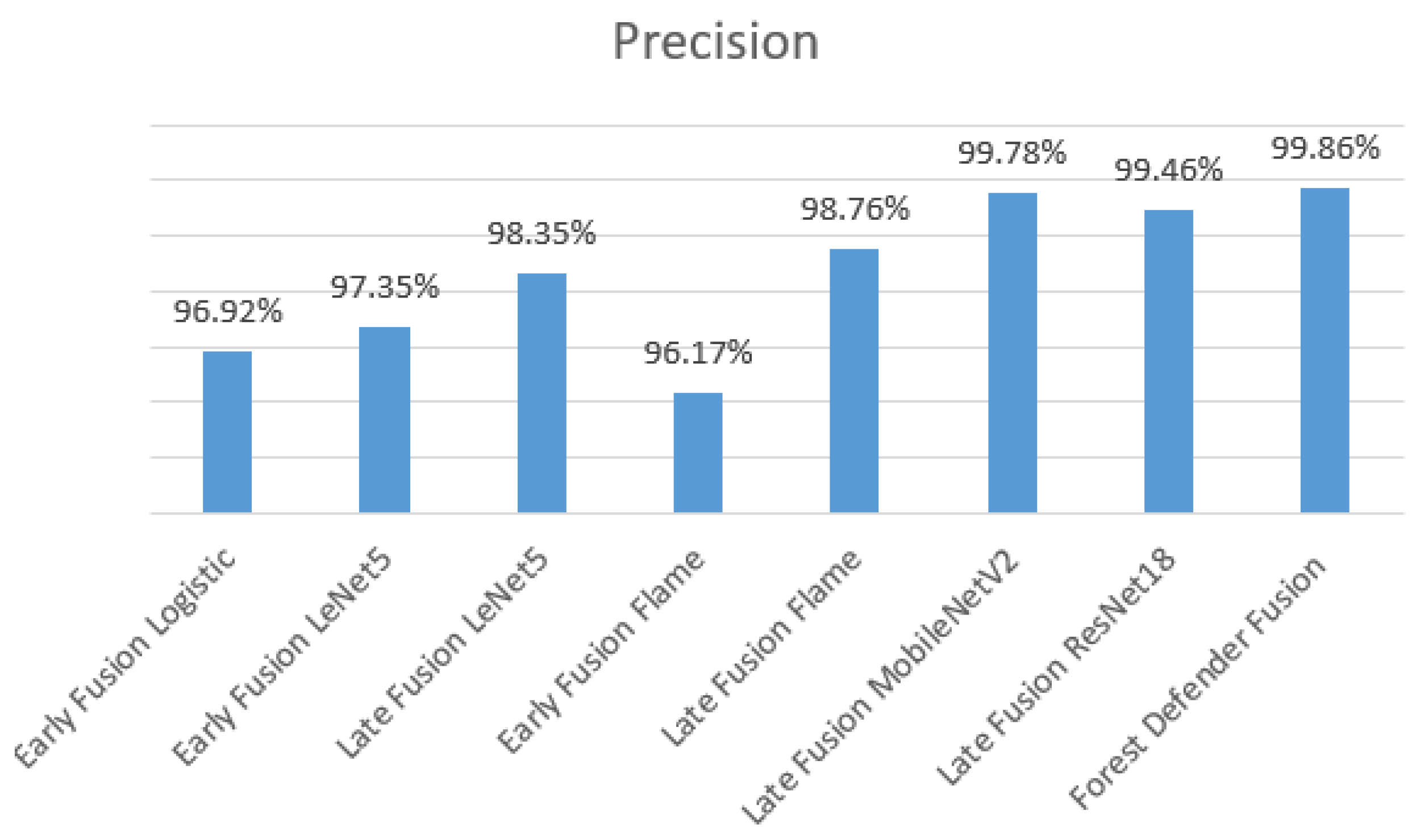

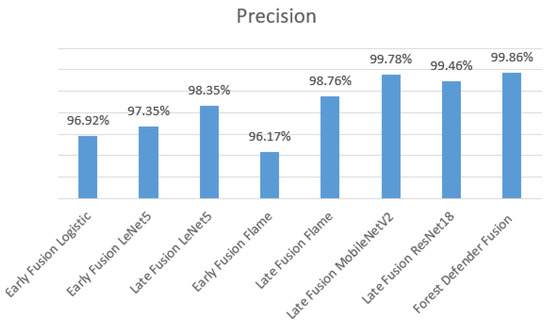

As shown in Figure 11, the Forest Defender Fusion System achieves the highest precision among the assessed models, at 99.86%. This indicates that its positive predictions are reliable, which demonstrates its effectiveness in detecting forest fires. Late Fusion MobileNetV2 is the closest competitor, with a precision of 99.78%. The remaining algorithms obtained 96.92%, 97.35%, 98.35%, 96.17%, 98.76%, and 99.46% for Early Fusion Logistic, Early Fusion LeNet5, Late Fusion LeNet5, Early Fusion Flame, Late Fusion Flame, and Late Fusion ResNet18, respectively. Interestingly, late fusion consistently yields higher precision than early fusion for the same architecture (LeNet5 and Flame), indicating that it is useful for improving positive prediction accuracy. The precision of the Forest Defender Fusion System supports its potential for detecting forest fires.

Figure 11.

Comparison of precision between models.

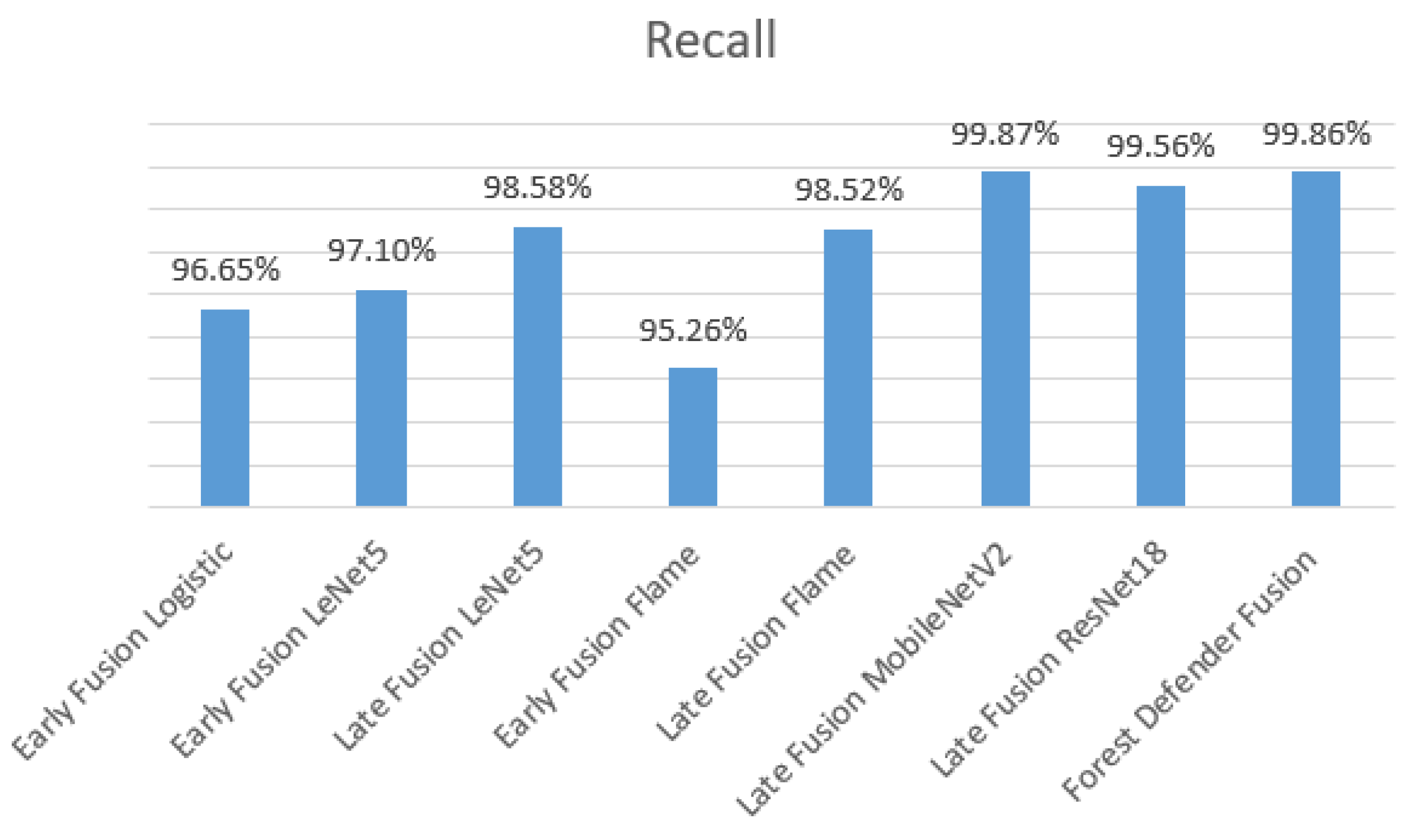

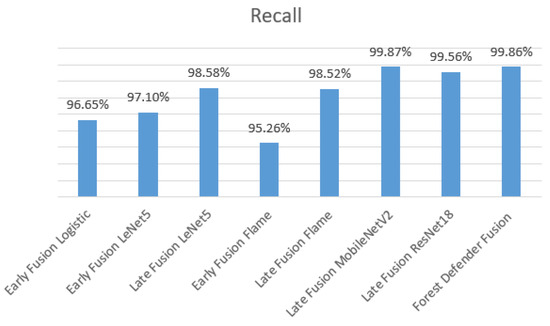

As shown in Figure 12, the Forest Defender Fusion System achieves a recall of 99.86%, which signifies its ability to identify relevant instances. It outperforms all other models except Late Fusion MobileNetV2, which obtained a recall of 99.87%, while the remaining algorithms obtained 96.65%, 97.10%, 98.58%, 95.26%, 98.52%, and 99.56% for Early Fusion Logistic, Early Fusion LeNet5, Late Fusion LeNet5, Early Fusion Flame, Late Fusion Flame, and Late Fusion ResNet18, respectively.

Figure 12.

Comparison of recall between models.

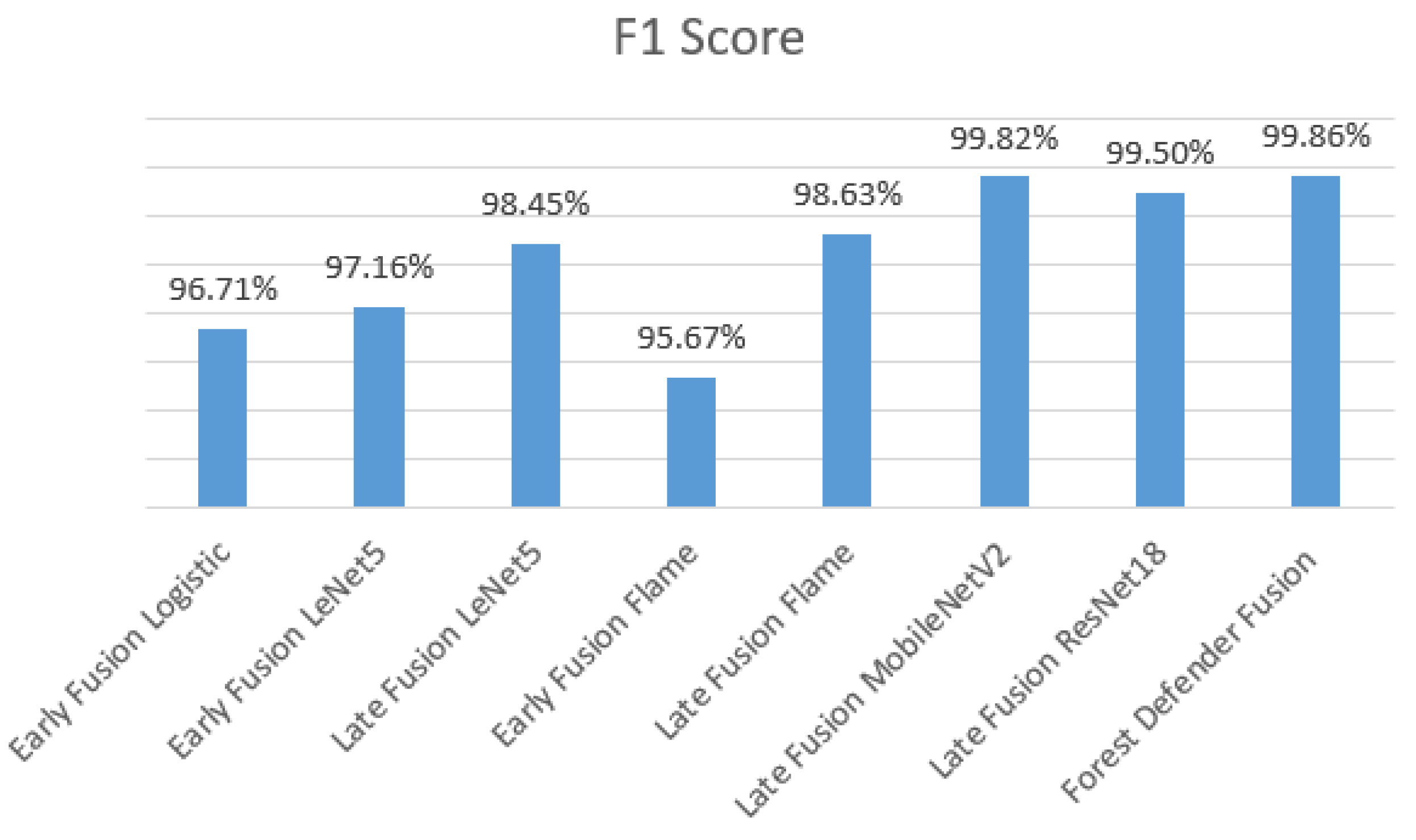

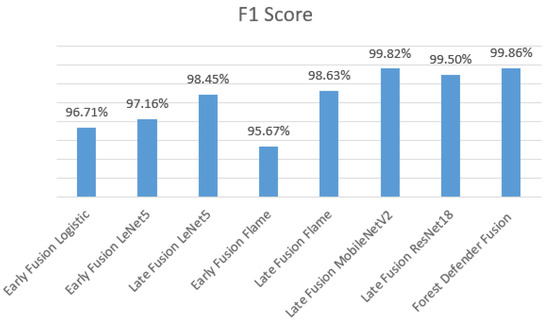

Figure 13 shows the F1 scores of the models. The Forest Defender Fusion System achieves an F1 score of 99.86%, which demonstrates how well the system detects forest fires. The Late Fusion MobileNetV2 and Late Fusion ResNet18 models also achieve high F1 scores of 99.82% and 99.50%, respectively. The remaining models obtained 96.71%, 97.16%, 98.45%, 95.67%, and 98.63% for Early Fusion Logistic, Early Fusion LeNet5, Late Fusion LeNet5, Early Fusion Flame, and Late Fusion Flame, respectively. Together, these results emphasize the strong performance of the Forest Defender Fusion System and its potential for detecting forest fires.

Figure 13.

Comparison of F1 scores between models.

6.3. Comparison with Forest Fire Detection Models

For further evaluation, the proposed system is compared with other forest fire detection models that were trained on different datasets. Table 4 compares the performance of Forest Defender Fusion with these models. With an accuracy of 99.86%, the Forest Defender Fusion System outperforms all other models considered. The closest is the model developed by Mashraqi et al. [9], with an accuracy of 99.38%, which indicates that the Forest Defender Fusion System has high detection accuracy. Wang et al. [10], Khan et al. [22], and Khan et al. [23] also show competitive performance, at 98.73%, 98.42%, and 95.0%, respectively. The lower accuracies of Sousa et al. [24], Tang et al. [25], and Sun et al. [26], at 93.6%, 92.0%, and 94.1%, respectively, suggest room for improvement.

Table 4.

Performance metrics for Intermediate Fusion VGG16 model and other forest fire detection models.

The Forest Defender Fusion System also achieves the highest precision, 99.86%. Mashraqi et al. [9] and Khan et al. [22] come closest, with precision scores of 99.38% and 97.42%, respectively, which highlights their efficacy for detecting forest fires. The precisions of Khan et al. [23] and Sousa et al. [24], at 95.7% and 94.1%, are lower.

In terms of recall, the Forest Defender Fusion System achieves 99.86%, higher than the models of Khan et al. [23], Mashraqi et al. [9], Sousa et al. [24], and Khan et al. [22] (94.2%, 99.38%, 93.1%, and 99.47%, respectively).

The Forest Defender Fusion System also has the highest F1 score, 99.86%, compared with 99.38%, 94.96%, and 98.43% for Mashraqi et al. [9], Khan, A. et al. [23], and Khan, S. et al. [22], respectively. This demonstrates how well the system detects forest fires.

Although the Forest Defender Fusion System shows promising forest fire early detection capabilities in simulation, a simulated environment cannot fully capture the complexity of real-world scenarios. Financial, security, and time constraints have prevented real-world experiments. However, the components of the Forest Defender Fusion System, including the ECP-LEACH protocol, the drone functionality, and the Intermediate Fusion VGG16 model, exhibit consistent behavior in simulated and real-world environments, which minimizes the impact of the gap between simulation and reality on the overall evaluation of the system’s efficacy.

6.4. Information Results from the Simulation

The simulation stores its results in an Excel file. For each image pair sent from a drone to the server, the file records the arrival time (Time), the zone in which the drone is located (Zone Number), the drone number (Drone Number), whether there is fire (Fire Situation), and whether there is smoke (Smoke Condition), as shown in Table 5.

Table 5.

Stored information.
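The record layout in Table 5 can be reproduced with the Python standard library. The sketch below writes CSV rather than Excel to stay dependency-free (pandas `DataFrame.to_excel` could produce an .xlsx file if openpyxl is installed); the timestamp format, the “Fire”/“No Fire” and “Smoke”/“No Smoke” cell values, and the function names are assumptions.

```python
import csv
from datetime import datetime

FIELDS = ["Time", "Zone Number", "Drone Number", "Fire Situation", "Smoke Condition"]


def detection_record(arrival: datetime, zone: int, drone: int, label: str) -> dict:
    """Build one row in the Table 5 layout from a predicted class label."""
    return {
        "Time": arrival.strftime("%Y-%m-%d %H:%M:%S"),
        "Zone Number": zone,
        "Drone Number": drone,
        "Fire Situation": "Fire" if label.startswith("Fire") else "No Fire",
        "Smoke Condition": "No Smoke" if label.endswith("No Smoke") else "Smoke",
    }


def write_records(rows, stream):
    """Write rows with a header line to any text stream (file or io.StringIO)."""
    writer = csv.DictWriter(stream, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
```

For example, `write_records(rows, open("detections.csv", "w", newline=""))` would save the log to disk; `newline=""` is the setting the `csv` module documentation recommends for files.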

7. Conclusions

To address the growing global concern of increasing forest fires, this paper proposed the Forest Defender Fusion System for the early detection of forest fires. Because forest fires, whose causes include both natural and human factors, have catastrophic effects on ecosystems, proactive methods for early identification and containment are required. The essential contribution of the proposed system is to detect forest fires accurately and early while regulating energy consumption to achieve the longest possible monitoring period. The ECP-LEACH protocol, used in conjunction with drones, enables continuous monitoring of fire-prone areas. The drones capture RGB and IR images and send them to a server, where the Intermediate Fusion VGG16 model analyzes them and detects forest fires accurately. By controlling drone energy usage, the solution extends monitoring durations, increasing the likelihood of early detection and a prompt response. The efficacy of the system is further supported by the 99.86% accuracy achieved by the Intermediate Fusion VGG16 detection model trained on the FLAME 2 dataset. The system was deployed in a simulation to demonstrate its operation in real time. The system is positioned as a trustworthy resource for detecting and managing forest fires. The proposed early detection system is a proactive response to the increasing frequency of forest fires caused by human activity and rising global temperatures, and it helps conserve ecosystems and valuable biodiversity by providing timely alerts and facilitating swift response actions.
Future work could investigate improvements and optimizations to the system’s functionality and its adaptation to changing environmental conditions. Keeping pace with changing fire dynamics requires ongoing research and development in this field, and putting such innovations into practice can strengthen efforts toward sustainable forest management and the preservation of the natural environment. Future research should prioritize collaborating with stakeholders, securing resources, and addressing safety considerations in order to conduct real-world testbed experiments.

Author Contributions

Conceptualization, M.K.I.I.; methodology, M.K.I.I.; software, M.K.I.I.; validation, M.K.I.I., M.B.M. and A.F.; formal analysis, M.K.I.I.; investigation, M.K.I.I.; resources, M.K.I.I.; data curation, M.K.I.I.; writing—original draft preparation, M.K.I.I.; writing—review and editing, M.B.M. and A.F.; visualization, M.K.I.I.; supervision, M.B.M. and A.F.; project administration, M.K.I.I., M.B.M. and A.F.; funding acquisition, M.K.I.I. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The FLAME 2 dataset can be found in IEEE DataPort (https://ieee-dataport.org/open-access/flame-2-fire-detection-and-modeling-aerial-multi-spectral-image-dataset (accessed on 10 November 2023)).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

| Abbreviation | Definition |
|---|---|
| RGB | Red, Green, and Blue |
| BGR | Blue, Green, and Red |
| IR | Infrared |
| EFFIS | European Forest Fire Information System |
| TP | True Positives |
| TN | True Negatives |
| FP | False Positives |
| FN | False Negatives |

References

1. Evelpidou, N.; Tzouxanioti, M.; Gavalas, T.; Spyrou, E.; Saitis, G.; Petropoulos, A.; Karkani, A. Assessment of Fire Effects on Surface Runoff Erosion Susceptibility: The Case of the Summer 2021 Forest Fires in Greece. Land 2022, 11, 21.

2. Meier, S.; Strobl, E.; Elliott, R.J.; Kettridge, N. Cross-country risk quantification of extreme wildfires in Mediterranean Europe. Risk Anal. 2023, 43, 1745–1762.

3. Pang, Y.; Li, Y.; Feng, Z.; Feng, Z.; Zhao, Z.; Chen, S.; Zhang, H. Forest Fire Occurrence Prediction in China Based on Machine Learning Methods. Remote Sens. 2022, 14, 5546.

4. Mehta, K.; Sharma, S.; Mishra, D. Internet-of-Things Enabled Forest Fire Detection System. In Proceedings of the 2021 Fifth International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC), Palladam, India, 11–13 November 2021; pp. 20–23.

5. Dampage, U.; Bandaranayake, L.; Wanasinghe, R.; Kottahachchi, K.; Jayasanka, B. Forest fire detection system using wireless sensor networks and machine learning. Sci. Rep. 2022, 12, 46.

6. Kang, Y.; Jang, E.; Im, J.; Kwon, C. A deep learning model using geostationary satellite data for forest fire detection with reduced detection latency. GISci. Remote Sens. 2022, 59, 2019–2035.

7. Kalaivani, V.; Chanthiya, P. An Improved Forest Fire Detection Method Based on the Detectron2 Model and a Deep Learning Approach. Sensors 2022, 15, 1285–1295.

8. Yandouzi, M.; Grari, M.; Idrissi, I.; Moussaoui, O.; Azizi, M.; Ghoumid, K.; Elmiad, A.K. Review on forest fires detection and prediction using deep learning and drones. J. Theor. Appl. Inf. Technol. 2022, 100, 4565–4576.

9. Mashraqi, A.M.; Asiri, Y.; Algarni, A.D.; Abu-Zinadah, H. DeepFire: Drone imagery forest fire detection and classification using modified deep learning model. Therm. Sci. 2022, 26, 411–423.

10. Wang, Y.; Ning, W.; Wang, X.; Zhang, S.; Yang, D. A Novel Method for Analyzing Infrared Images Taken by Unmanned Aerial Vehicles for Forest Fire Monitoring. Trait. Signal 2023, 40, 1219–1226.

11. Chen, X.; Hopkins, B.; Wang, H.; O’Neill, L.; Afghah, F.; Razi, A.; Fulé, P.; Coen, J.; Rowell, E.; Watts, A. Wildland Fire Detection and Monitoring Using a Drone-Collected RGB/IR Image Dataset. IEEE Access 2022, 10, 121301–121317.

12. Behera, T.M.; Samal, U.C.; Mohapatra, S.K.; Khan, M.S.; Appasani, B.; Bizon, N.; Thounthong, P. Energy-Efficient Routing Protocols for Wireless Sensor Networks: Architectures, Strategies, and Performance. Electronics 2022, 11, 2282.

13. Shingare, R.; Agnihotri, S. Energy-Efficient and Fast Data Collection in WSN Using Genetic Algorithm. In Proceedings of the International Conference on Recent Trends in Computing ICRTC 2022; Springer: Singapore, 2023; pp. 361–374.

14. Bharany, S.; Sharma, S.; Badotra, S.; Khalaf, O.I.; Alotaibi, Y.; Alghamdi, S.; Alassery, F. Energy-Efficient Clustering Scheme for Flying Ad-Hoc Networks Using an Optimized LEACH Protocol. Energies 2021, 14, 6016.

15. Lansky, J.; Rahmani, A.M.; Malik, M.H.; Yousefpoor, E.; Yousefpoor, M.S.; Khan, M.U.; Hosseinzadeh, M. An energy-aware routing method using firefly algorithm for flying ad hoc networks. Sci. Rep. 2023, 13, 1323.

16. Ibrahim, M.S.; Shanmugaraja, P.; Raj, A.A. Energy-Efficient OLSR Routing Protocol for Flying Ad Hoc Networks. In Advances in Information Communication Technology and Computing, Proceedings of AICTC 2021; Springer: Singapore, 2022; pp. 75–88.

17. Ibraheem, M.K.; Mohamed, M.B.; Fakhfakh, A. Energy Optimization Efficiency in Wireless Sensor Networks for Forest Fire Detection: An Innovative Sleep Technique. Int. J. Recent Innov. Trends Comput. Commun. 2023, 11, 253–260.

18. Fule, P.F.; Watts, A.W.; Afghah, F.A.; Hopkins, B.H.; O’Neill, L.O.; Razi, A.R.; Coen, J.C. FLAME 2: Fire Detection and ModeLing: Aerial Multi-Spectral ImagE Dataset; IEEE DataPort: New York, NY, USA, 2022.

19. Stahlschmidt, S.R.; Ulfenborg, B.; Synnergren, J. Multimodal deep learning for biomedical data fusion: A review. Brief. Bioinform. 2022, 23, 569.

20. Omiotek, Z.; Kotyra, A. Flame Image Processing and Classification Using a Pre-Trained VGG16 Model in Combustion Diagnosis. Sensors 2021, 21, 500.

21. Jiang, Z.-P.; Liu, Y.-Y.; Shao, Z.-E.; Huang, K.-W. An Improved VGG16 Model for Pneumonia Image Classification. Appl. Sci. 2021, 11, 11185.

22. Khan, S.; Khan, A. FFireNet: Deep Learning Based Forest Fire Classification and Detection in Smart Cities. Symmetry 2022, 14, 2155.

23. Khan, A.; Hassan, B.; Khan, S.; Ahmed, R.; Abuassba, A. DeepFire: A Novel Dataset and Deep Transfer Learning Benchmark for Forest Fire Detection. Mob. Inf. Syst. 2022, 2022, 5358359.

24. Sousa, M.J.; Moutinho, A.; Almeida, M. Wildfire detection using transfer learning on augmented datasets. Expert Syst. Appl. 2019, 142, 112975.

25. Tang, Y.; Feng, H.; Chen, J.; Chen, Y. ForestResNet: A Deep Learning Algorithm for Forest Image Classification. J. Phys. Conf. Ser. 2021, 2024, 012053.

26. Sun, X.; Sun, L.; Huang, Y. Forest fire smoke recognition based on convolutional neural network. J. For. Res. 2020, 32, 1921–1927.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).