A Detection Model for Cucumber Root-Knot Nematodes Based on Modified YOLOv5-CMS

Abstract

1. Introduction

- (1) A cucumber root-knot nematode image dataset was constructed to support the training and validation of the root-knot nematode detection model.

- (2) The dual-attention mechanism CBAM-CA was adopted so that the model focuses on key regions of the target, which improved its ability to capture distinguishing features of small targets and enhanced the overall detection performance.

- (3) Two further changes were made to improve model performance. The K-means++ algorithm was used to choose the initial cluster centers, which reduces the chance that the clustering becomes trapped in a poor local optimum. The SIoU loss function replaced the original CIoU loss so that the direction of the vector between the ground-truth box and the predicted box is taken into account, which speeds up model convergence.

2. Materials and Methods

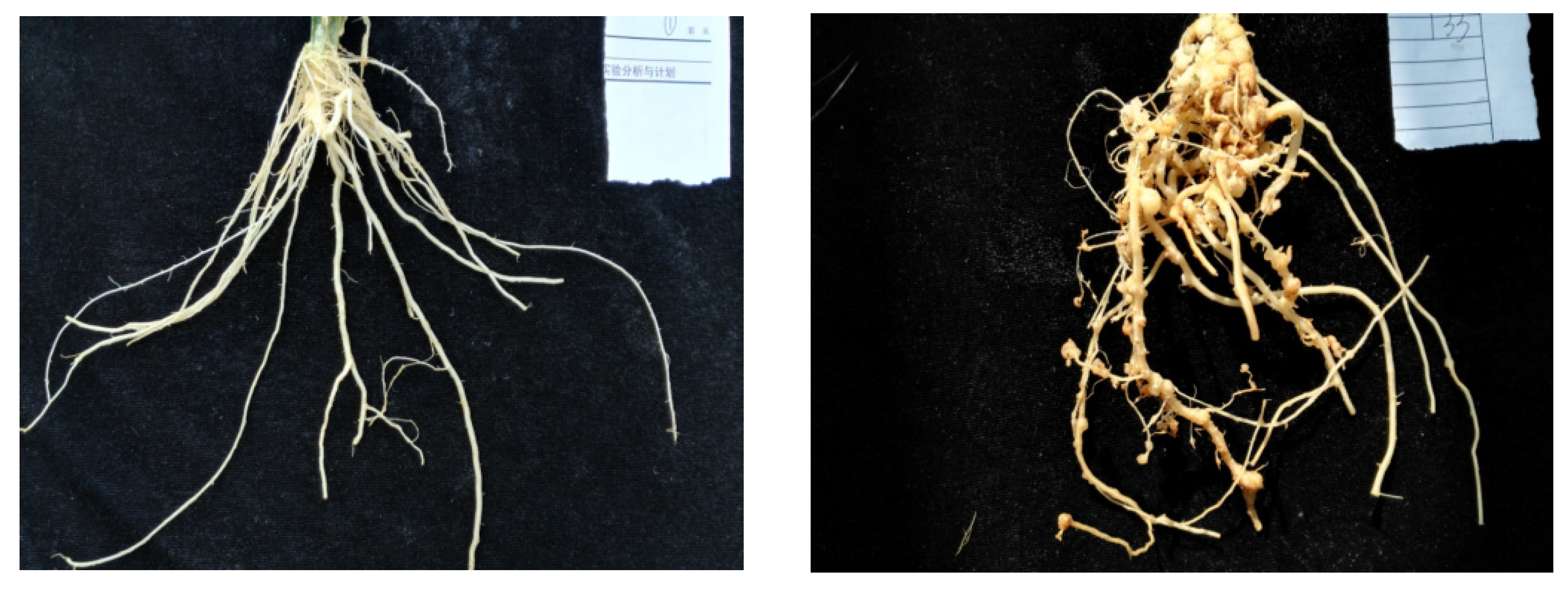

2.1. Image Acquisition

2.2. Image Processing

2.2.1. Image Preprocessing

2.2.2. Image Augmentation

3. YOLOv5s Target Detection Algorithm

3.1. Adaptive Anchor Box Calculation

3.2. Backbone

3.3. Neck

4. Modified YOLOv5s Model

4.1. The Embedded Dual Attention Mechanism CBAM-CA

4.2. K-Means++ Customized Anchor Box

- (1) A cluster center point $C_1$ is randomly selected from the dataset, and the distance between each target $x_i$ and its nearest selected center is calculated, denoted as $L(x)$. The probability that the current target is selected as the next cluster center point is then $P(x) = \frac{L(x)^2}{\sum_{x \in X} L(x)^2}$. The roulette method is applied to select the next cluster center according to this probability. This step is repeated until $N$ cluster center points are determined.

- (2) The distances between the remaining targets and the $N$ cluster center points are calculated. Each remaining sample is assigned to the subset of the center point closest to it.

- (3) The center point of each subset is recalculated.

- (4) Steps (2) and (3) are repeated until the cluster center points no longer move.
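
The four steps above can be sketched in a few lines of standard-library Python. This is a minimal illustration and not the authors' implementation: it assumes each target is a (width, height) pair, uses squared Euclidean distance for $L(x)^2$ (YOLO anchor clustering often uses an IoU-based distance instead), weights the roulette draw by the standard K-means++ term $L(x)^2 / \sum L(x)^2$, and assumes the data contain at least `n` distinct boxes. The function names `kmeanspp_init` and `kmeans_anchors` are hypothetical.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two (w, h) pairs (assumed metric)."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def kmeanspp_init(boxes, n, rng):
    """Step (1): pick n initial centers by roulette selection on L(x)^2."""
    centers = [rng.choice(boxes)]  # C1 is chosen uniformly at random
    while len(centers) < n:
        # L(x)^2: squared distance from each box to its nearest chosen center
        weights = [min(sq_dist(b, c) for c in centers) for b in boxes]
        # Roulette wheel: selection probability is weight / sum(weights)
        centers.append(rng.choices(boxes, weights=weights, k=1)[0])
    return centers


def kmeans_anchors(boxes, n=9, seed=0, max_iter=100):
    """Steps (2)-(4): assign, recompute centers, repeat until they stop moving."""
    rng = random.Random(seed)
    centers = kmeanspp_init(boxes, n, rng)
    for _ in range(max_iter):
        clusters = [[] for _ in centers]
        for b in boxes:  # step (2): assign each box to its nearest center
            nearest = min(range(len(centers)), key=lambda i: sq_dist(b, centers[i]))
            clusters[nearest].append(b)
        new_centers = [  # step (3): mean of each subset (keep empty ones as-is)
            (sum(w for w, _ in c) / len(c), sum(h for _, h in c) / len(c))
            if c else centers[i]
            for i, c in enumerate(clusters)
        ]
        if new_centers == centers:  # step (4): centers no longer move
            break
        centers = new_centers
    return sorted(centers, key=lambda c: c[0] * c[1])  # small to large area


if __name__ == "__main__":
    # Toy (w, h) pairs, made up purely for illustration
    toy_boxes = [(12, 15), (14, 18), (40, 60), (45, 55), (150, 170), (160, 180)]
    print(kmeans_anchors(toy_boxes, n=3))
```

Sorting the resulting centers by area is a convenience for mapping them onto the small, middle, and large feature-map scales; it is not part of the clustering itself.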

4.3. Improved Loss Function
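
The SIoU loss adds a direction-aware penalty to the IoU term, so that the angle of the vector between the predicted and ground-truth box centers influences the regression. The following is a minimal scalar sketch, assuming the commonly used formulation from the SIoU preprint (angle cost, distance cost, and shape cost, with shape exponent `theta = 4`), boxes given as `(x1, y1, x2, y2)`, and a hypothetical function name `siou_loss`. It is not the authors' training code, which would operate on batched tensors.

```python
import math


def siou_loss(box_pred, box_gt, theta=4.0, eps=1e-7):
    """SIoU loss for two boxes given as (x1, y1, x2, y2); illustrative sketch."""
    px1, py1, px2, py2 = box_pred
    gx1, gy1, gx2, gy2 = box_gt
    pw, ph = px2 - px1, py2 - py1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Plain IoU
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    iou = inter / (pw * ph + gw * gh - inter + eps)

    # Width and height of the smallest box enclosing both boxes
    cw = max(px2, gx2) - min(px1, gx1) + eps
    ch = max(py2, gy2) - min(py1, gy1) + eps

    # Offset between the two box centers
    dx = (gx1 + gx2 - px1 - px2) / 2
    dy = (gy1 + gy2 - py1 - py2) / 2
    sigma = math.hypot(dx, dy) + eps

    # Angle cost: 0 when the centers align on an axis, 1 at 45 degrees
    sin_alpha = min(1.0, abs(dy) / sigma)
    angle_cost = 1 - 2 * math.sin(math.asin(sin_alpha) - math.pi / 4) ** 2

    # Distance cost, modulated by the angle cost through gamma
    gamma = 2 - angle_cost
    rho_x, rho_y = (dx / cw) ** 2, (dy / ch) ** 2
    distance_cost = (1 - math.exp(-gamma * rho_x)) + (1 - math.exp(-gamma * rho_y))

    # Shape cost: relative mismatch in width and height
    omega_w = abs(pw - gw) / (max(pw, gw) + eps)
    omega_h = abs(ph - gh) / (max(ph, gh) + eps)
    shape_cost = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta

    return 1 - iou + (distance_cost + shape_cost) / 2


if __name__ == "__main__":
    gt = (0, 0, 10, 10)
    for pred in [(0, 0, 10, 10), (2, 0, 12, 10), (5, 5, 15, 15)]:
        print(pred, round(siou_loss(pred, gt), 4))
```

Unlike CIoU, the distance term here depends on the direction of the center offset through `gamma`, which is what the direction-awareness refers to.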

5. Experiment and Analysis

5.1. Model Training

5.1.1. Experiment Environment

5.1.2. Parameter Settings

5.2. Evaluation Indicators

5.3. Experiment Results and Analysis

5.3.1. Comparison of Algorithms Incorporated with Different Attention Mechanisms

5.3.2. YOLOv5s-CMS Results Analysis

5.3.3. Ablation Test

5.4. Comparison of Detection Results

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Feature Map Scale | Anchor Box 1 | Anchor Box 2 | Anchor Box 3 |
|---|---|---|---|
| Small scale | (10, 13) | (16, 30) | (33, 23) |
| Middle scale | (30, 61) | (62, 45) | (59, 119) |
| Large scale | (116, 90) | (156, 198) | (373, 326) |

| Parameter Name | Value |
|---|---|
| Learning rate | 0.01 |
| Momentum factor | 0.937 |
| Weight decay | 0.0005 |
| Batch size | 16 |
| Epochs | 300 |

| Attention Module | P/% | R/% | F1/% | mAP/% | No. of Total Parameters |
|---|---|---|---|---|---|
| None (YOLOv5s baseline) | 91 | 85.5 | 88.2 | 91.1 | 7,012,822 |
| CA | 91.2 | 87.4 | 89.3 | 92.8 | 7,169,542 |
| SimAM | 90.9 | 87.3 | 89.1 | 93 | 7,143,894 |
| SE | 70.2 | 54.1 | 61.1 | 57.6 | 7,169,782 |
| CBAM | 92.3 | 85.8 | 88.9 | 93.1 | 7,160,376 |
| CBAM+CA | 92.2 | 87.8 | 89.9 | 93.5 | 7,195,528 |

| Model | P/% | R/% | F1/% | mAP/% |
|---|---|---|---|---|
| YOLOv3 | 74.2 | 12.2 | 21 | 39.3 |
| YOLOv4 | 75.5 | 33.3 | 46 | 51.2 |
| Faster R-CNN | 67.7 | 36.2 | 47 | 51.4 |
| YOLOv5s | 91 | 85.5 | 88.2 | 91.1 |
| YOLOv5m | 87.8 | 77.9 | 82.6 | 85.2 |
| YOLOv5n | 84.8 | 82.9 | 83.8 | 88.3 |
| YOLOv5l | 88.3 | 79.1 | 83.4 | 87.7 |
| YOLOv5x | 82.8 | 78.5 | 80.1 | 84.7 |
| YOLOv5s-CMS | 94.3 | 88.5 | 91.3 | 94.8 |
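
The F1 column in the model comparison is the harmonic mean of precision (P) and recall (R). The short sketch below recomputes it for two rows of the table as a worked check; the helper name `f1_score` is hypothetical, and the inputs are the percentages reported above.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall, in the same units as the inputs."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (P/%, R/%) pairs taken from the comparison table
rows = {"YOLOv5s": (91.0, 85.5), "YOLOv5s-CMS": (94.3, 88.5)}

for name, (p, r) in rows.items():
    print(f"{name}: F1 = {f1_score(p, r):.1f}%")
# YOLOv5s: F1 = 88.2%
# YOLOv5s-CMS: F1 = 91.3%
```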

| CBAM+CA | K-Means++ | SIoU | P/% | R/% | mAP/% |
|---|---|---|---|---|---|
| - | - | - | 91 | 85.5 | 91.1 |
| √ | - | - | 91.2 | 87.4 | 92.8 |
| - | √ | - | 92.2 | 87.8 | 93.5 |
| - | - | √ | 91.1 | 86.8 | 93.1 |
| √ | √ | - | 93.1 | 88.4 | 94.4 |
| √ | - | √ | 93.3 | 86.3 | 93.6 |
| - | √ | √ | 93.1 | 86.3 | 93.6 |
| √ | √ | √ | 94.3 | 88.5 | 94.8 |
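
One way to read the ablation table is as mAP gains over the unmodified baseline (first row, 91.1%). The sketch below is only arithmetic on the reported values, in percentage points; the variable names are hypothetical.

```python
BASELINE_MAP = 91.1  # mAP/% of the row with no modification enabled

# mAP/% for each combination, copied from the ablation table
ablation_map = {
    "CBAM+CA": 92.8,
    "K-Means++": 93.5,
    "SIoU": 93.1,
    "CBAM+CA, K-Means++": 94.4,
    "CBAM+CA, SIoU": 93.6,
    "K-Means++, SIoU": 93.6,
    "CBAM+CA, K-Means++, SIoU": 94.8,
}

# Gain in percentage points relative to the baseline
gains = {name: round(value - BASELINE_MAP, 1) for name, value in ablation_map.items()}

for name, gain in gains.items():
    print(f"{name}: +{gain} points")
```

With all three modifications enabled the gain is 3.7 percentage points, the largest in the table.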


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, C.; Sun, S.; Zhao, C.; Mao, Z.; Wu, H.; Teng, G. A Detection Model for Cucumber Root-Knot Nematodes Based on Modified YOLOv5-CMS. Agronomy 2022, 12, 2555. https://doi.org/10.3390/agronomy12102555
