Advance of Target Visual Information Acquisition Technology for Fresh Fruit Robotic Harvesting: A Review

Abstract

1. Introduction

1.1. Urgent Need for Fresh Fruit Robotic Harvesting

1.2. Target Visual Information Acquisition of Harvesting Robots

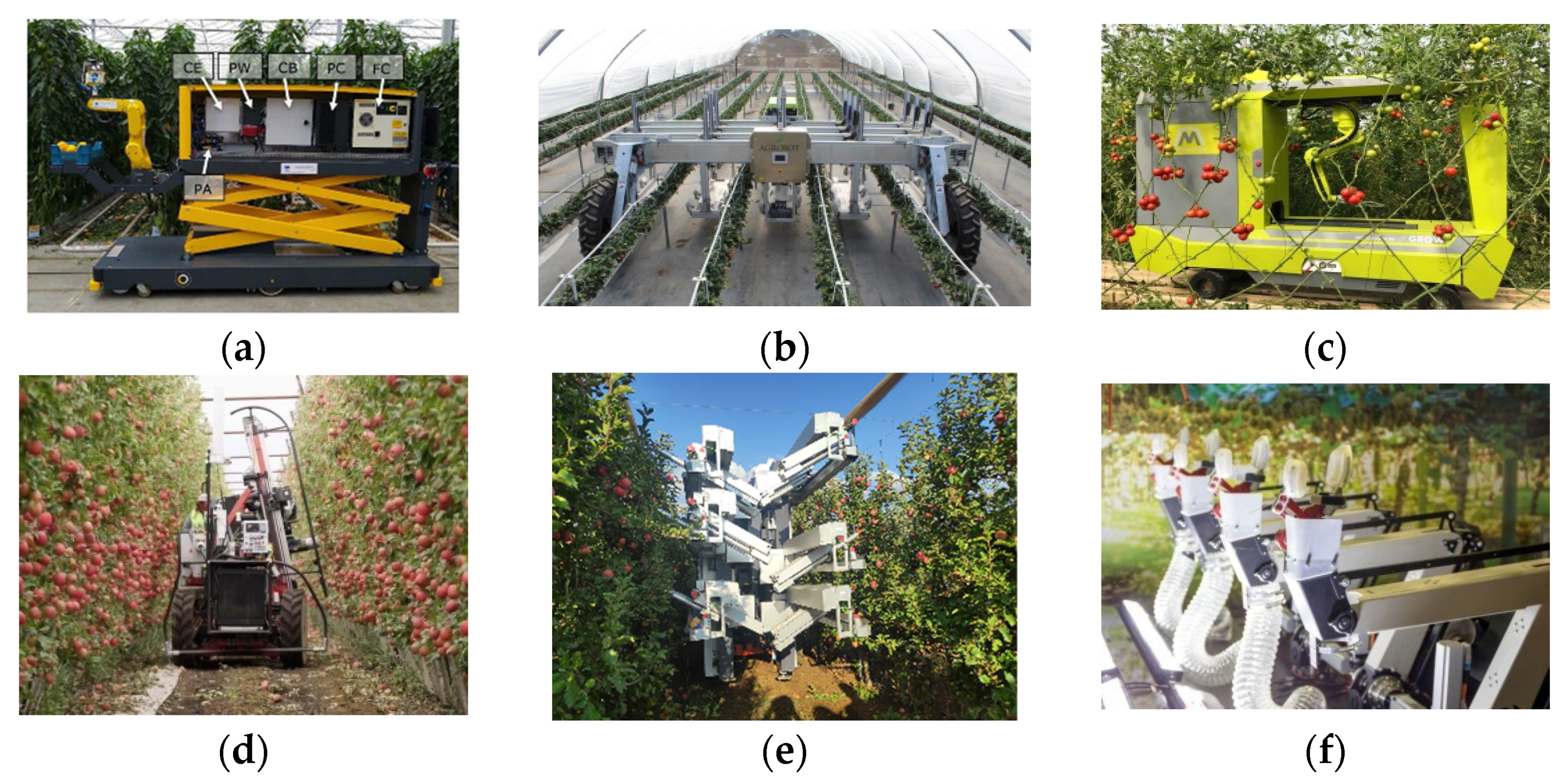

2. Current Status of Fresh Fruit Harvesting Robots

2.1. Typical Harvesting Robots

2.2. Characteristics of the Robot’s Visual Unit

3. Image Acquisition in Agricultural Environments

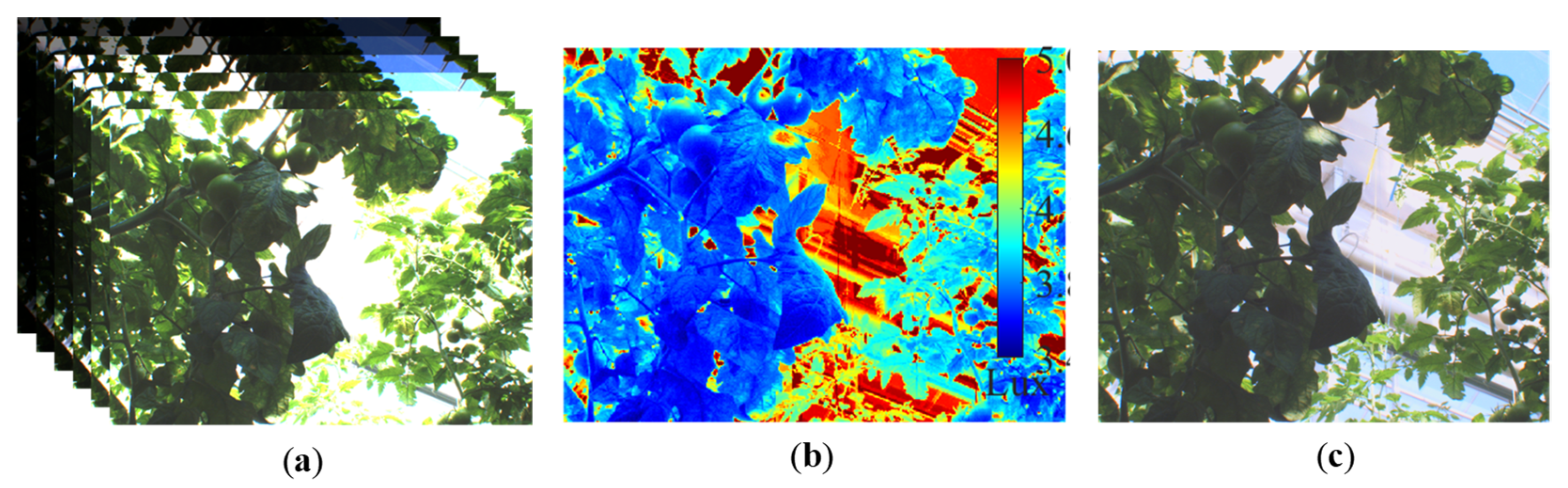

3.1. Image Color Correction for Various Sunlight Conditions

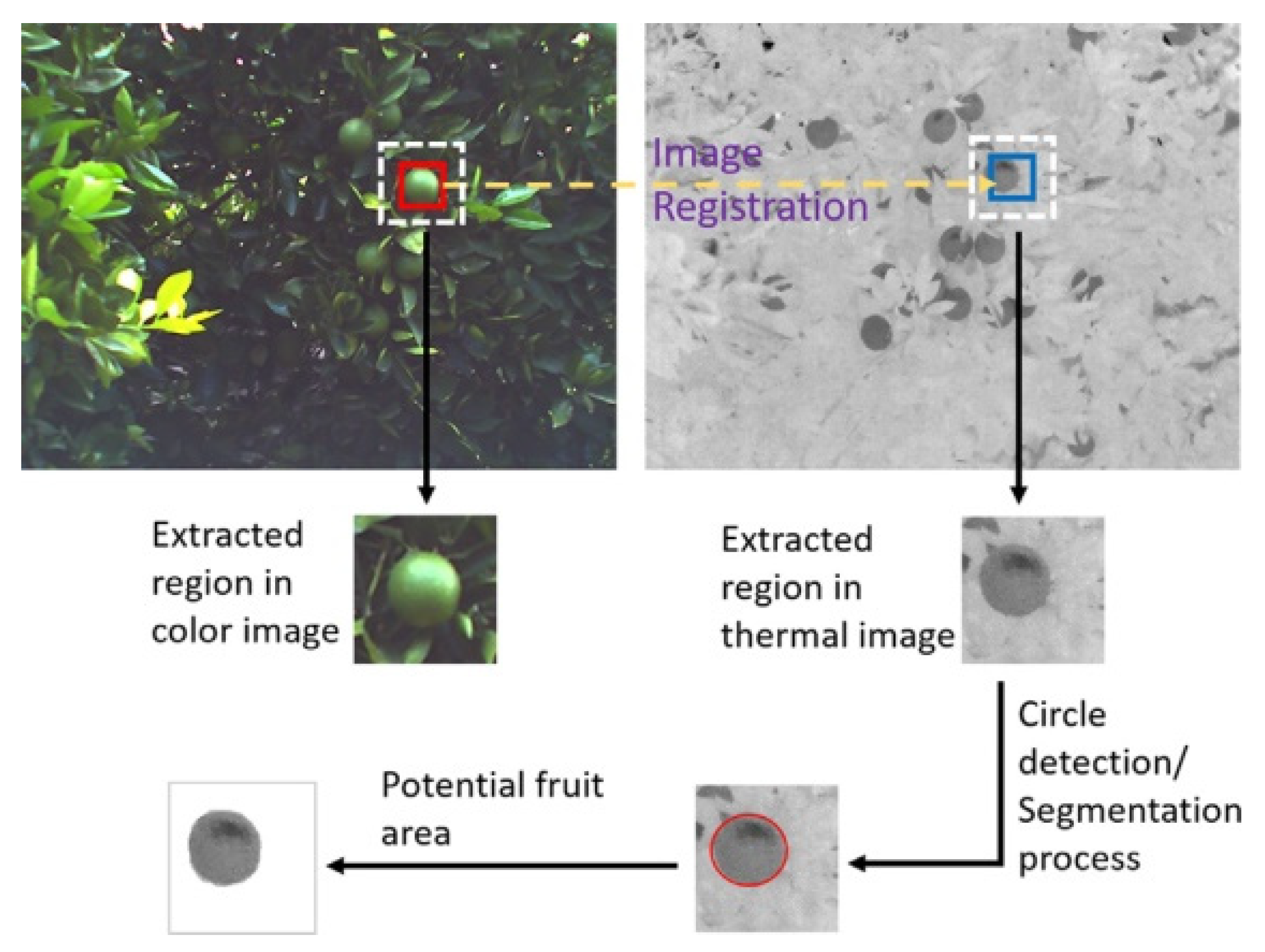

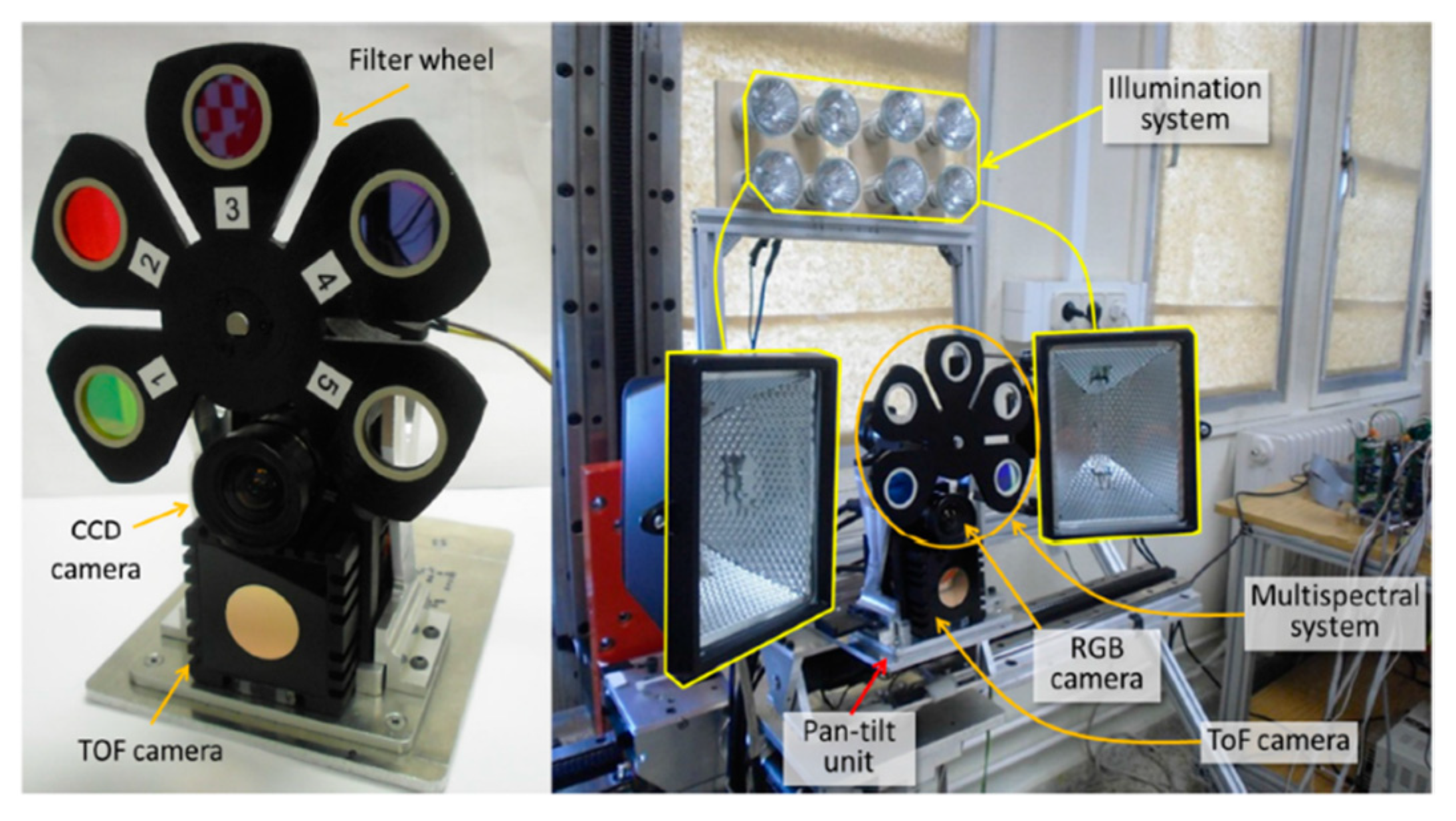
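Color correction for changing sunlight can be illustrated with the classic gray-world assumption: averaged over a scene, the three color channels should be equal, so each channel is rescaled until its mean matches the common gray level. The following is a minimal sketch of that generic idea only. It is not the HDR- or Retinex-based correction used in the cited studies [49,53], and the function name `gray_world` and the list-of-tuples image format are assumptions made for illustration.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B), values in [0, 255]


def gray_world(pixels: List[Pixel]) -> List[Pixel]:
    """Illustrative gray-world white balance (assumed technique, not from the reviewed papers).

    Each channel is multiplied by a gain that maps its image-wide mean onto
    the mean of all three channel means; results are clipped to 255.
    """
    n = len(pixels)
    if n == 0:
        return []
    # Per-channel mean over the whole image.
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(means) / 3.0
    # A channel with zero mean keeps gain 1.0 to avoid division by zero.
    gains = [gray / m if m > 0 else 1.0 for m in means]
    return [tuple(min(255.0, p[c] * gains[c]) for c in range(3)) for p in pixels]
```

Gray-world can over-correct when one color dominates the frame, such as a mostly green canopy, so it serves only as a baseline against which dedicated correction methods can be compared.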

3.2. Similar-Colored Target Image Acquisition
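Targets that share their color with the surrounding foliage in RGB images may still differ in reflectance at other wavelengths; the wavelength table, for example, lists 635 nm and 880 nm for apple [60]. A generic way to merge two co-registered band images into one contrast image is the normalized difference (a - b)/(a + b), the form used by vegetation indices such as NDVI. The sketch below assumes two aligned single-band images stored as nested lists; the function name and any particular band pairing are illustrative assumptions, not the fusion procedure of the cited studies.

```python
from typing import List

Image = List[List[float]]  # rows of non-negative band intensities


def normalized_difference(band_a: Image, band_b: Image, eps: float = 1e-9) -> Image:
    """Per-pixel (a - b) / (a + b) for two co-registered single-band images.

    For non-negative inputs the result lies in [-1, 1]; eps avoids division
    by zero on pixels that are dark in both bands.
    """
    if len(band_a) != len(band_b) or any(
        len(ra) != len(rb) for ra, rb in zip(band_a, band_b)
    ):
        raise ValueError("band images must have the same shape")
    return [
        [(a - b) / (a + b + eps) for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(band_a, band_b)
    ]
```

Thresholding the result separates pixels whose reflectance is higher in the first band from those where it is higher in the second; which bands to pair, and in which order, has to be chosen from the measured spectra of fruit and leaves.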

4. Fruit Target Identification against Complex Backgrounds

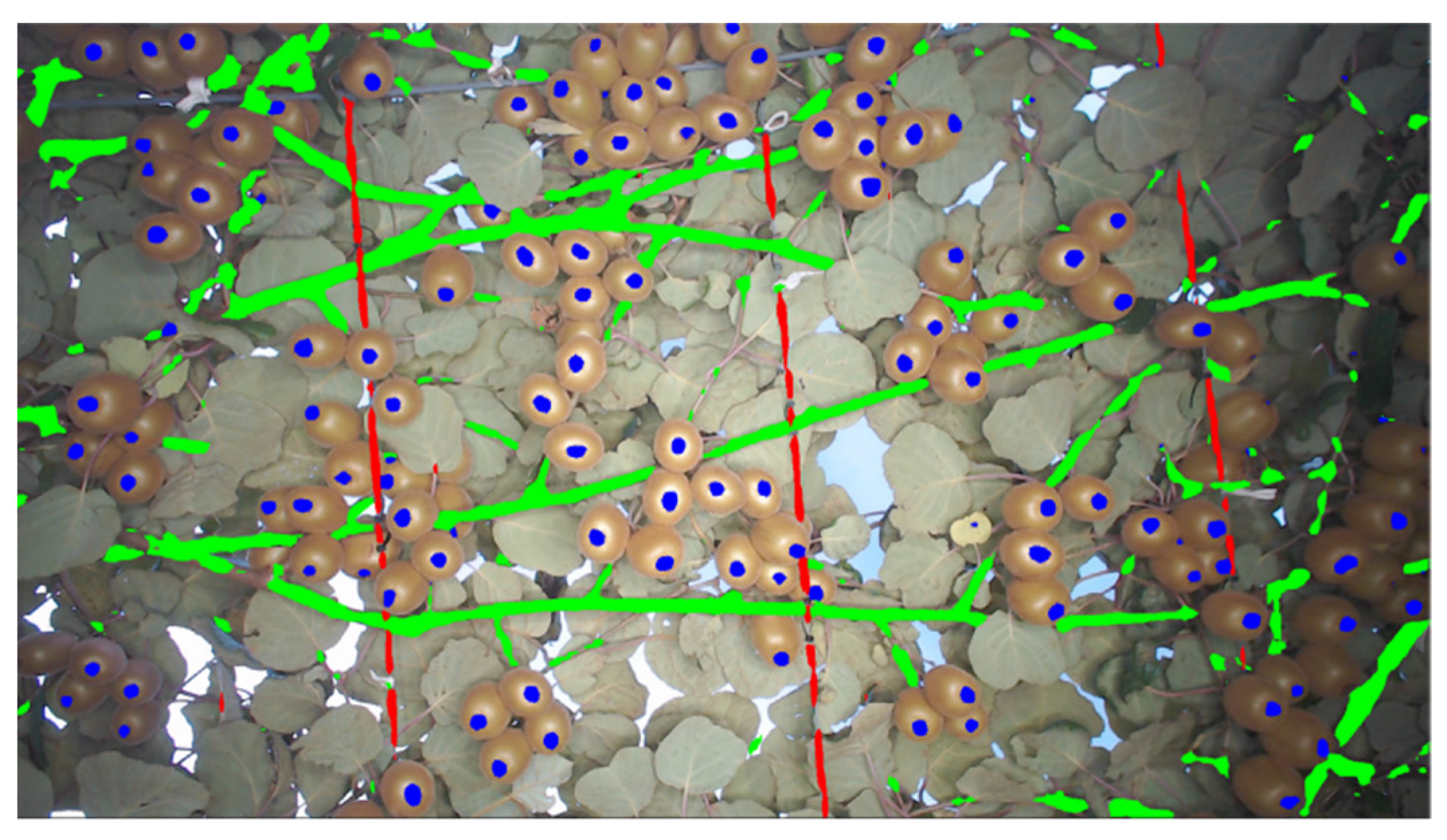

4.1. Visual Feature Extraction and Fusion
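A typical hand-crafted color feature for red fruit is a chromatic difference such as R - G, which is high on ripe fruit and low or negative on green leaves, and a global threshold for it can be selected automatically with Otsu's method, which maximizes the between-class variance of the feature histogram. The sketch below combines the two; the `r_minus_g` feature and its clipping to 0–255 are illustrative assumptions, and the reviewed studies employ a range of color spaces and thresholding schemes.

```python
from typing import List, Sequence, Tuple


def r_minus_g(pixels: Sequence[Tuple[int, int, int]]) -> List[int]:
    """Chromatic feature R - G clipped to [0, 255] (illustrative choice)."""
    return [max(0, min(255, r - g)) for r, g, _b in pixels]


def otsu_threshold(values: Sequence[int], levels: int = 256) -> int:
    """Otsu's method: return t maximizing between-class variance.

    values must be integers in [0, levels). Class 0 is values <= t,
    class 1 is values > t.
    """
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0 = 0    # pixel count of class 0
    sum0 = 0  # sum of feature values in class 0
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Proportional to the between-class variance (constant 1/total^2 dropped).
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t
```

Comparing every pixel's feature value against the returned threshold (`v > t`) yields a binary fruit mask, which shape or texture features can then refine.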

4.2. Application of Classic Machine Learning Algorithms

4.3. Application of Deep Learning Models
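Deep detectors such as the Faster R-CNN and YOLO models in the reference list [78–80] output many overlapping candidate boxes, which are commonly pruned by non-maximum suppression (NMS) using intersection over union (IoU). A minimal greedy NMS sketch follows; boxes are assumed to be `(x1, y1, x2, y2)` pixel tuples, and the 0.5 IoU threshold is an illustrative default rather than a value taken from the reviewed papers.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], iou_thresh: float = 0.5) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it, repeat.

    Returns indices of kept boxes in descending score order.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Discard remaining candidates that overlap the kept box too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thresh]
    return keep
```

In dense clusters a fixed IoU threshold can also remove a genuine neighboring fruit, so the threshold is worth tuning for each crop.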

5. Stereo Localization and Measurement of Fruit

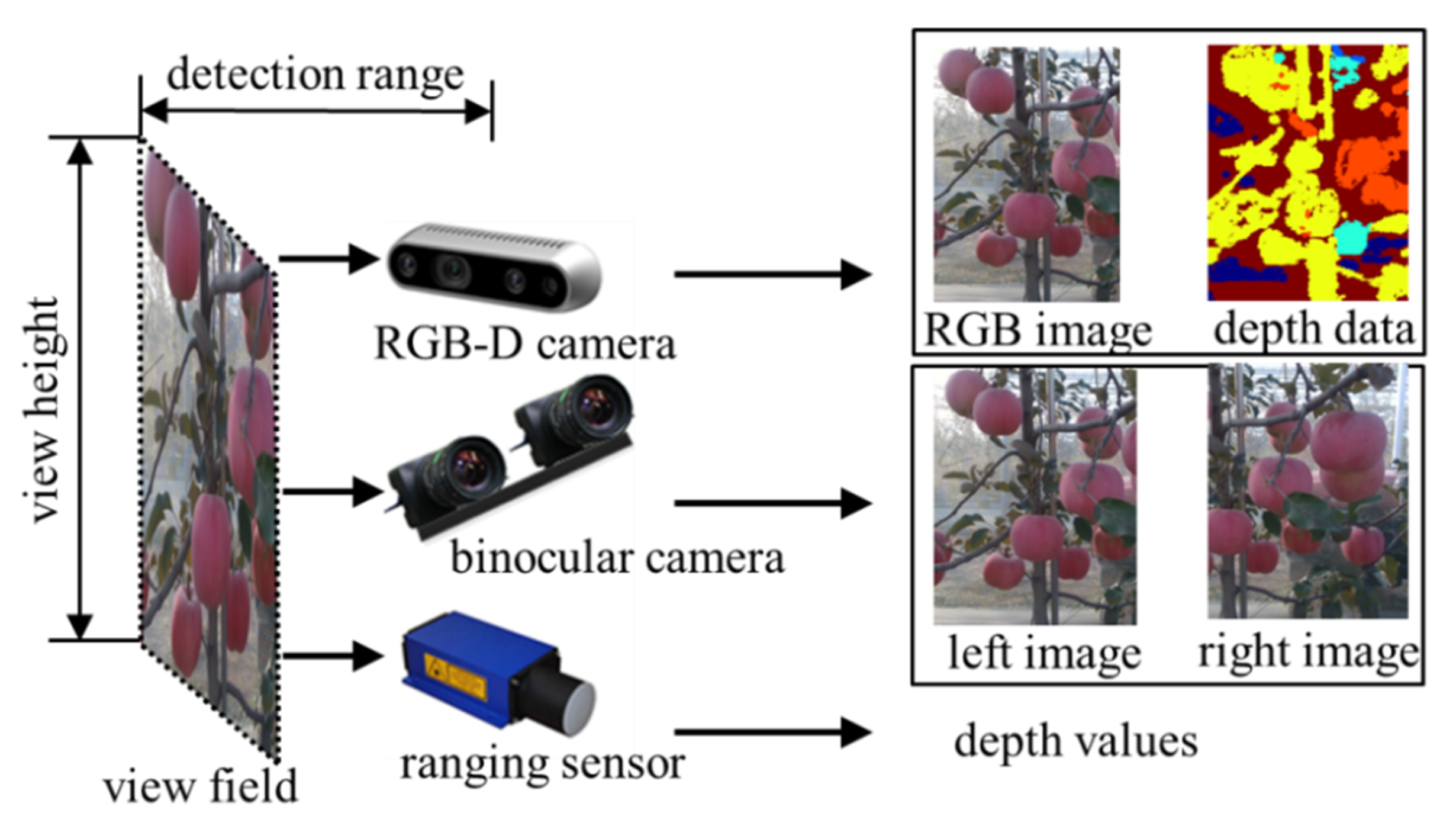

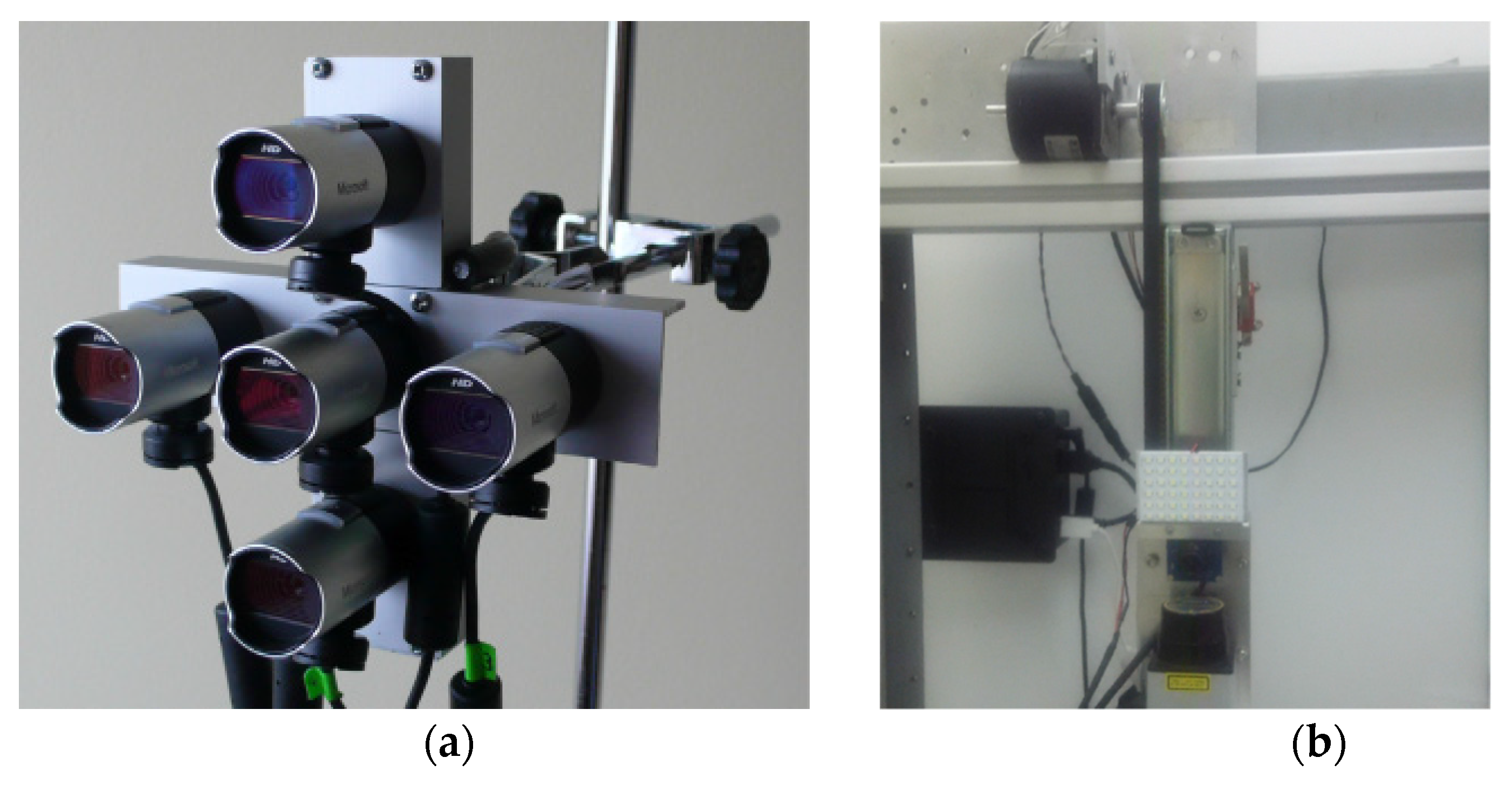

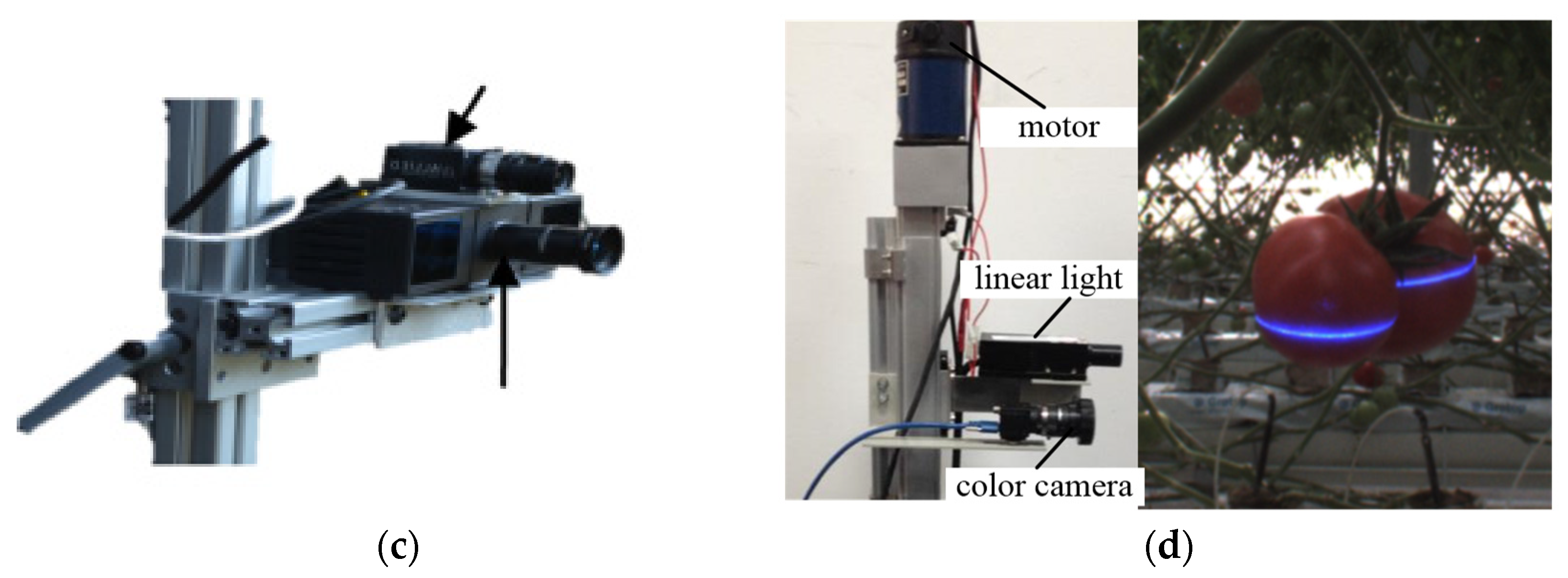

5.1. Hardware Unit of Stereo Vision

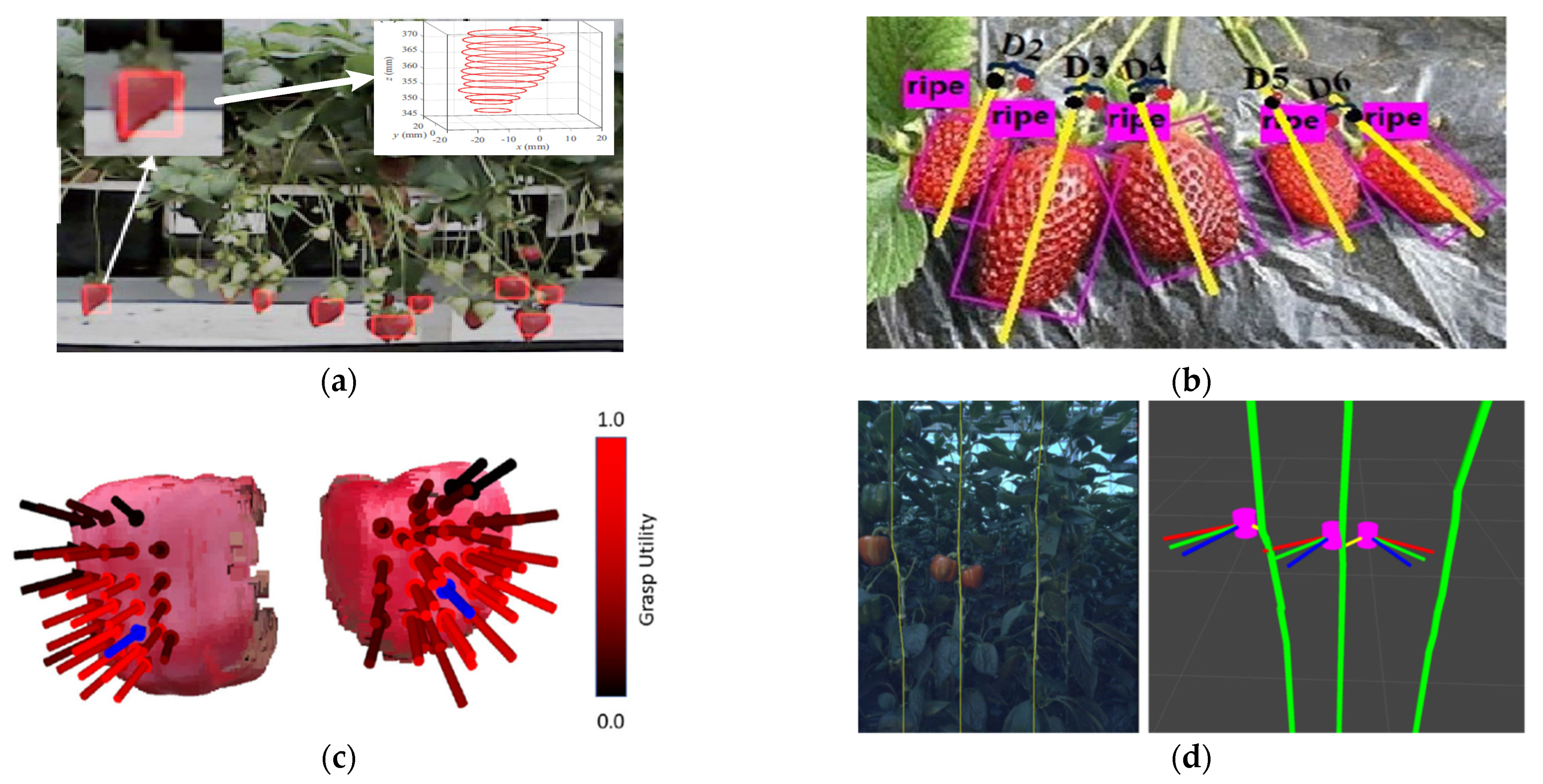
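For a rectified binocular camera, such as those listed for several crops in the sensor table, depth follows from similar triangles: Z = f·B/d, where f is the focal length in pixels, B the baseline and d the horizontal disparity in pixels. Differentiating gives the depth error caused by a disparity error Δd, ΔZ ≈ Z²·Δd/(f·B), which grows with the square of the working distance. The sketch below assumes ideal rectification; the focal length, baseline and half-pixel disparity error used in the example are hypothetical values.

```python
def depth_from_disparity(f_px: float, baseline_mm: float, disparity_px: float) -> float:
    """Depth Z = f * B / d in mm for an ideally rectified stereo pair."""
    if disparity_px <= 0:
        # Zero or negative disparity has no finite depth in front of the rig.
        raise ValueError("disparity must be positive")
    return f_px * baseline_mm / disparity_px


def depth_resolution(f_px: float, baseline_mm: float, depth_mm: float,
                     disparity_err_px: float = 0.5) -> float:
    """First-order depth error dZ = Z^2 * dd / (f * B) in mm.

    Obtained by differentiating Z = f * B / d; the 0.5 px default is a
    hypothetical matching error, not a value from the reviewed papers.
    """
    return depth_mm ** 2 * disparity_err_px / (f_px * baseline_mm)
```

With the hypothetical values f = 1000 px and B = 60 mm, a disparity of 100 px corresponds to 600 mm, and a 0.5 px disparity error gives about 3 mm of depth error at 600 mm but about 33 mm at 2000 mm, so longer working distances call for a wider baseline or finer disparity estimation.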

5.2. Measurement of Position and Posture
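Once a fruit or stalk pixel has a depth value, whether from a stereo pair or an RGB-D camera as in the sensor table, its 3D position in the camera frame follows from the pinhole model: X = (u − c_x)·Z/f_x and Y = (v − c_y)·Z/f_y. A posture can then be expressed as a direction between two such points, for example from the fruit center to a point on the stalk. The sketch assumes an undistorted image with known intrinsics (f_x, f_y, c_x, c_y); the choice of the two points is a hypothetical example, not a method from the reviewed papers.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]  # camera-frame coordinates, same unit as depth


def back_project(u: float, v: float, depth: float,
                 fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Pinhole back-projection of pixel (u, v) with depth Z to (X, Y, Z)."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def unit_direction(p_from: Vec3, p_to: Vec3) -> Vec3:
    """Unit vector from p_from to p_to, e.g. fruit center -> stalk point (illustrative)."""
    d = tuple(b - a for a, b in zip(p_from, p_to))
    norm = math.sqrt(sum(c * c for c in d))
    if norm == 0:
        raise ValueError("points coincide; direction is undefined")
    return (d[0] / norm, d[1] / norm, d[2] / norm)
```

In practice the fruit center would be estimated from many back-projected surface points rather than a single pixel, since the camera sees only the front of the fruit.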

6. Search for Disordered Fruits on Plants

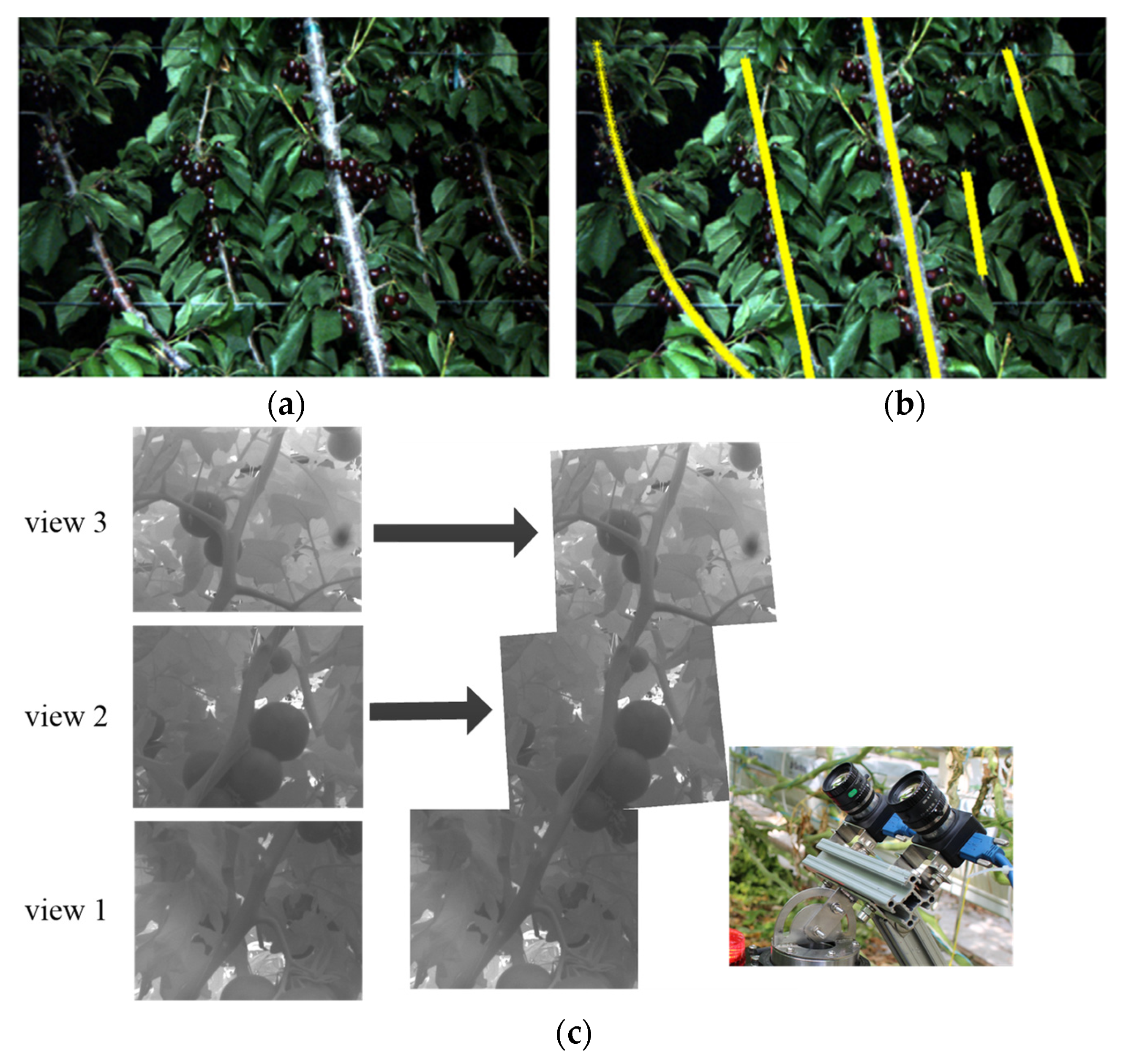

6.1. Passive Detection with a Fixed Field of View
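With a fixed field of view, the region a camera can inspect at working distance Z is set by its angular field of view: width = 2·Z·tan(HFOV/2) and height = 2·Z·tan(VFOV/2). This gives a quick check of whether a single view covers the width and height ranges listed in the sensor table. The sketch assumes an ideal pinhole camera; `views_needed` is a hypothetical helper, and the 60° × 45° field of view in the example is a hypothetical value.

```python
import math
from typing import Tuple


def footprint_mm(distance_mm: float, hfov_deg: float, vfov_deg: float) -> Tuple[float, float]:
    """Width and height (mm) of the plane imaged at distance_mm by a pinhole camera."""
    w = 2.0 * distance_mm * math.tan(math.radians(hfov_deg) / 2.0)
    h = 2.0 * distance_mm * math.tan(math.radians(vfov_deg) / 2.0)
    return w, h


def views_needed(target_width_mm: float, distance_mm: float, hfov_deg: float,
                 overlap: float = 0.0) -> int:
    """Hypothetical helper: side-by-side views needed to span target_width_mm.

    overlap is the fraction of each view shared with its neighbor, in [0, 1).
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    width = 2.0 * distance_mm * math.tan(math.radians(hfov_deg) / 2.0)
    if target_width_mm <= width:
        return 1
    step = width * (1.0 - overlap)  # new width gained by each extra view
    return 1 + math.ceil((target_width_mm - width) / step)
```

With the hypothetical 60° horizontal field of view at 500 mm, one view spans about 577 mm: enough for the lower strawberry width of 350 mm but not the upper 670 mm, which would need two views.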

6.2. Active Detection with Multiple Fields of View

7. Challenges and Trends

7.1. Summary of Challenges

7.2. Potential Trends

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Tomato Production 2018. Available online: https://ourworldindata.org/grapher/tomato-production (accessed on 20 April 2022).

2. Crops and Livestock Products. Available online: https://www.fao.org/faostat/en/#data/QCL (accessed on 20 April 2022).

3. Apple Production 2018. Available online: https://ourworldindata.org/grapher/apple-production (accessed on 20 April 2022).

4. Strawberry. Available online: https://en.wikipedia.org/wiki/Strawberry (accessed on 20 April 2022).

5. Xiong, Y.; Ge, Y.; Grimstad, L.; From, P.J. An autonomous strawberry-harvesting robot: Design, development, integration, and field evaluation. J. Field Robot. 2020, 37, 202–224.

6. Zhang, K.; Lammers, K.; Chu, P.; Li, Z.; Lu, R. System design and control of an apple harvesting robot. Mechatronics 2021, 79, 102644.

7. Silwal, A.; Davidson, J.R.; Karkee, M.; Mo, C.; Zhang, Q.; Lewis, K. Design, integration, and field evaluation of a robotic apple harvester. J. Field Robot. 2017, 34, 1140–1159.

8. King, A. Technology: The Future of Agriculture. Nature 2017, 544, S21–S23.

9. Feng, Q.; Zou, W.; Fan, P.; Zhang, C.; Wang, X. Design and test of robotic harvesting system for cherry tomato. Int. J. Agric. Biol. Eng. 2018, 11, 96–100.

10. Arad, B.; Balendonck, J.; Barth, R.; Ben-Shahar, O.; Edan, Y.; Hellström, T.; Hemming, J.; Kurtser, P.; Ringdahl, O.; Tielen, T.; et al. Development of a sweet pepper harvesting robot. J. Field Robot. 2020, 37, 1027–1039.

11. Williams, H.; Ting, C.; Nejati, M.; Jones, M.H.; Penhall, N.; Lim, J.; Seabright, M.; Bell, J.; Ahn, H.S.; Scarfe, A.; et al. Improvements to and large-scale evaluation of a robotic kiwifruit harvester. J. Field Robot. 2020, 37, 187–201.

12. Mehta, S.S.; Burks, T.F. Vision-based control of robotic manipulator for citrus harvesting. Comput. Electron. Agric. 2014, 102, 146–158.

13. Wang, Z.H.; Xun, Y.; Wang, Y.K.; Yang, Q.H. Review of smart robots for fruit and vegetable picking in agriculture. Int. J. Agric. Biol. Eng. 2022, 15, 33–54.

14. Tang, Y.; Chen, M.; Wang, C.; Luo, L.; Li, J.; Lian, G.; Zou, X. Recognition and Localization Methods for Vision-Based Fruit Picking Robots: A Review. Front. Plant Sci. 2020, 11, 510.

15. Thorne, J. Apple-Picking Robots Gear up for U.S. Debut in Washington State. Available online: https://www.geekwire.com/2019/apple-picking-robots-gear-u-s-debut-washington-state/ (accessed on 20 April 2022).

16. Zitter, L. Berry Picking at Its Best with AGROBOT Technology. Available online: https://www.foodandfarmingtechnology.com/news/harvesting-technology/berry-picking-at-its-best-with-agrobot-technology.html (accessed on 20 April 2022).

17. Leichman, A.K. World’s First Tomato-Picking Robot Set to Be Rolled Out. Available online: https://www.israel21c.org/israeli-startup-develops-first-tomato-picking-robot (accessed on 20 April 2022).

18. Saunders, S. The Robots That Can Pick Kiwi-Fruit. Available online: https://www.bbc.com/future/bespoke/follow-the-food/the-robots-that-can-pick-kiwifruit.html (accessed on 20 April 2022).

19. Ji, C.; Feng, Q.C.; Yuan, T.; Tan, Y.Z.; Li, W. Development and performance analysis on cucumber harvesting robot system in greenhouse. Robot 2011, 33, 726–730.

20. The Latest on FF Robotics’ Machine Harvester. Available online: https://basinbusinessjournal.com/news/2021/apr/12/machine-picked-apples/ (accessed on 20 April 2022).

21. Lehnert, C.; McCool, C.; Sa, I.; Perez, T. Performance improvements of a sweet pepper harvesting robot in protected cropping environments. J. Field Robot. 2020, 37, 1197–1223.

22. Bac, C.W.; Hemming, J.; Van Tuijl, B.A.J.; Barth, R.; Wais, E.; van Henten, E.J. Performance evaluation of a harvesting robot for sweet pepper. J. Field Robot. 2017, 34, 1123–1139.

23. Lee, B.; Kam, D.; Min, B.; Hwa, J.; Oh, S. A Vision Servo System for Automated Harvest of Sweet Pepper in Korean Greenhouse Environment. Appl. Sci. 2019, 9, 2395.

24. Han, K.S.; Kim, S.C.; Lee, Y.B.; Kim, S.C.; Im, D.H.; Choi, H.K.; Hwang, H. Strawberry harvesting robot for bench-type cultivation. J. Biosyst. Eng. 2012, 37, 65–74.

25. De Preter, A.; Anthonis, J.; De Baerdemaeker, J. Development of a robot for harvesting strawberries. IFAC-PapersOnLine 2018, 51, 14–19.

26. Feng, Q.; Wang, X.; Zheng, W.; Qiu, Q.; Jiang, K. New strawberry harvesting robot for elevated-trough culture. Int. J. Agric. Biol. Eng. 2012, 5, 1–8.

27. Xiong, Y.; Peng, C.; Grimstad, L.; From, P.J.; Isler, V. Development and field evaluation of a strawberry harvesting robot with a cable-driven gripper. Comput. Electron. Agric. 2019, 157, 392–402.

28. Yamamoto, S.; Hayashi, S.; Saito, S.; Ochiai, Y.; Yamashita, T.; Sugano, S. Development of robotic strawberry harvester to approach target fruit from hanging bench side. IFAC Proc. Vol. 2010, 43, 95–100.

29. Kondo, N.; Yata, K.; Iida, M.; Shiigi, T.; Monta, M.; Kurita, M.; Omori, H. Development of an end-effector for a tomato cluster harvesting robot. Eng. Agric. Environ. Food 2010, 3, 20–24.

30. Ling, X.; Zhao, Y.; Gong, L.; Liu, C.; Wang, T. Dual-arm cooperation and implementing for robotic harvesting tomato using binocular vision. Robot. Auton. Syst. 2019, 114, 134–143.

31. Fujinaga, T.; Yasukawa, S.; Ishii, K. Development and Evaluation of a Tomato Fruit Suction Cutting Device. In Proceedings of the 2021 IEEE/SICE International Symposium on System Integration (SII), Fukushima, Japan, 11–14 January 2021; pp. 628–633.

32. Wang, L.L.; Zhao, B.; Fan, J.; Hu, X.; Wei, S.; Li, Y.; Zhou, Q.; Wei, C. Development of a tomato harvesting robot used in greenhouse. Int. J. Agric. Biol. Eng. 2017, 10, 140–149.

33. Feng, Q.; Wang, X.; Wang, G.; Li, Z. Design and test of tomatoes harvesting robot. In Proceedings of the 2015 IEEE International Conference on Information and Automation, Lijiang, China, 8–10 August 2015; pp. 949–952.

34. Kang, H.; Zhou, H.; Chen, C. Visual perception and modeling for autonomous apple harvesting. IEEE Access 2020, 8, 62151–62163.

35. Yu, X.; Fan, Z.; Wang, X.; Wan, H.; Wang, P.; Zeng, X.; Jia, F. A lab-customized autonomous humanoid apple harvesting robot. Comput. Electr. Eng. 2021, 96, 107459.

36. Chu, P.; Li, Z.; Lammers, K.; Lu, R.; Liu, X. Deep learning-based apple detection using a suppression mask R-CNN. Pattern Recognit. Lett. 2021, 147, 206–211.

37. Wang, Y.; Yang, Y.; Yang, C.; Zhao, H.; Chen, G.; Zhang, Z.; Fu, S.; Zhang, M.; Xu, H. End-effector with a bite mode for harvesting citrus fruit in random stalk orientation environment. Comput. Electron. Agric. 2019, 157, 454–470.

38. Hu, X.; Yu, H.; Lv, S.; Wu, J. Design and experiment of a new citrus harvesting robot. In Proceedings of the 2021 International Conference on Control Science and Electric Power Systems (CSEPS), Shanghai, China, 28–30 May 2021; pp. 179–183.

39. Yang, C.; Liu, Y.; Wang, Y.; Xiong, L.; Xu, H.; Zhao, W. Research and Experiment on Recognition and Location System for Citrus Picking Robot in Natural Environment. Trans. Chin. Soc. Agric. Mach. 2019, 50, 14–22.

40. Zhang, F.; Li, Z.; Wang, B.; Su, S.; Fu, L.; Cui, Y. Study on recognition and non-destructive picking end-effector of kiwifruit. In Proceedings of the 11th World Congress on Intelligent Control and Automation, Shenyang, China, 29 June–4 July 2014; pp. 2174–2179.

41. Barnett, J.; Duke, M.; Au, C.K.; Lim, S.H. Work distribution of multiple Cartesian robot arms for kiwifruit harvesting. Comput. Electron. Agric. 2020, 169, 105202.

42. Mu, L.; Cui, G.; Liu, Y.; Cui, Y.; Fu, L.; Gejima, Y. Design and simulation of an integrated end-effector for picking kiwifruit by robot. Inf. Process. Agric. 2020, 7, 58–71.

43. Williams, H.A.; Jones, M.H.; Nejati, M.; Seabright, M.J.; Bell, J.; Penhall, N.D.; Barnett, J.J.; Duke, M.D.; Scarfe, A.J.; Ahn, H.S.; et al. Robotic kiwifruit harvesting using machine vision, convolutional neural networks, and robotic arms. Biosyst. Eng. 2019, 181, 140–156.

44. Debevec, P.E.; Malik, J. Recovering high dynamic range radiance maps from photographs. In Proceedings of the SIGGRAPH ’08: Special Interest Group on Computer Graphics and Interactive Techniques, Los Angeles, CA, USA, 11–15 August 2008; pp. 1–10.

45. Yuan, T.; Kondo, N.; Li, W. Sunlight fluctuation compensation for tomato flower detection using web camera. Procedia Eng. 2012, 29, 4343–4347.

46. Fu, L.S.; Wang, B.; Cui, Y.J.; Su, S.; Gejima, Y.; Kobayashi, T. Kiwifruit recognition at nighttime using artificial lighting based on machine vision. Int. J. Agric. Biol. Eng. 2015, 8, 52–59.

47. Zhang, K.; Lammers, K.; Chu, P.; Dickinson, N.; Li, Z.; Lu, R. Algorithm Design and Integration for a Robotic Apple Harvesting System. arXiv 2022, arXiv:2203.00582.

48. Arad, B.; Kurtser, P.; Barnea, E.; Harel, B.; Edan, Y.; Ben-Shahar, O. Controlled Lighting and Illumination-Independent Target Detection for Real-Time Cost-Efficient Applications. The Case Study of Sweet Pepper Robotic Harvesting. Sensors 2019, 19, 1390.

49. Xiong, J.; Zou, X.; Wang, H.; Peng, H.; Zhu, M.; Lin, G. Recognition of ripe litchi in different illumination conditions based on Retinex image enhancement. Trans. Chin. Soc. Agric. Eng. 2013, 29, 170–178.

50. Kurtulmus, F.; Lee, W.S.; Vardar, A. Immature peach detection in colour images acquired in natural illumination conditions using statistical classifiers and neural network. Precis. Agric. 2014, 15, 57–79.

51. Vitzrabin, E.; Edan, Y. Changing task objectives for improved sweet pepper detection for robotic harvesting. IEEE Robot. Autom. Lett. 2016, 1, 578–584.

52. Lv, J.; Wang, Y.; Xu, L.; Gu, Y.; Zou, L.; Yang, B.; Ma, Z. A method to obtain the near-large fruit from apple image in orchard for single-arm apple harvesting robot. Sci. Hortic. 2019, 257, 108758.

53. Feng, Q.; Wang, X.; Li, J.; Cheng, W.; Chen, J. Image Color Correction Method for Greenhouse Tomato Plant Based on HDR Imaging. Trans. Chin. Soc. Agric. Mach. 2020, 51, 235–242.

54. Kondo, N.; Namba, K.; Nishiwaki, K.; Ling, P.P.; Monta, M. An illumination system for machine vision inspection of agricultural products. In Proceedings of the 2006 ASABE Annual International Meeting, Portland, OR, USA, 9–12 July 2006; p. 063078.

55. Gan, H.; Lee, W.; Alchanatis, V.; Ehsani, R.; Schueller, J.K. Immature green citrus fruit detection using color and thermal images. Comput. Electron. Agric. 2018, 152, 117–125.

56. Bac, C.; Hemming, J.; Henten, E. Robust pixel-based classification of obstacle for robotic harvesting of sweet-pepper. Comput. Electron. Agric. 2013, 96, 148–162.

57. Li, W.; Feng, Q.; Yuan, T. Spectral imaging for greenhouse cucumber fruit detection based on binocular stereovision. In Proceedings of the 2010 ASABE Annual International Meeting, Pittsburgh, PA, USA, 20–23 June 2010; p. 1009345.

58. Yuan, T.; Ji, C.; Chen, Y.; Li, W.; Zhang, J. Greenhouse Cucumber Recognition Based on Spectral Imaging Technology. Trans. Chin. Soc. Agric. Mach. 2011, 42, 172–176.

59. Sa, I.; Ge, Z.; Dayoub, F.; Upcroft, B.; Perez, T.; McCool, C. DeepFruits: A fruit detection system using deep neural networks. Sensors 2016, 16, 1222.

60. Fernández, R.; Salinas, C.; Montes, H.; Sarria, J. Multisensory system for fruit harvesting robots. Experimental testing in natural scenarios and with different kinds of crops. Sensors 2014, 14, 23885–23904.

61. Feng, Q.; Chen, J.; Cheng, W.; Wang, X. Multi-band image fusion method for visually identifying tomato plant’s organs with similar color. Smart Agric. 2020, 2, 126–134.

62. Liu, Z.; Wu, J.; Fu, L.; Majeed, Y.; Feng, Y.; Li, R.; Cui, Y. Improved kiwifruit detection using pre-trained VGG16 with RGB and NIR information fusion. IEEE Access 2019, 8, 2327–2336.

63. Choi, D.; Lee, W.S.; Schueller, J.K.; Ehsani, R.; Roka, F.; Diamond, J. A performance comparison of RGB, NIR, and depth images in immature citrus detection using deep learning algorithms for yield prediction. In Proceedings of the 2017 ASABE Annual International Meeting, Spokane, WA, USA, 16–19 July 2017; p. 1700076.

64. Feng, J.; Zeng, L.; He, L. Apple Fruit Recognition Algorithm Based on Multi-Spectral Dynamic Image Analysis. Sensors 2019, 19, 949.

65. Hamuda, E.; Glavin, M.; Jones, E. A survey of image processing techniques for plant extraction and segmentation in the field. Comput. Electron. Agric. 2016, 125, 184–199.

66. Giselsson, T.M.; Midtiby, H.S. Seedling discrimination with shape features derived from a distance transform. Sensors 2013, 13, 5585–5602.

67. Pastrana, J.C.; Rath, T. Novel image processing approach for solving the overlapping problem in agriculture. Biosyst. Eng. 2013, 115, 106–115.

68. Senthilnath, J.; Dokania, A.; Kandukuri, M.; Ramesh, K.N.; Anand, G.; Omkar, S.N. Detection of tomatoes using spectral-spatial methods in remotely sensed RGB images captured by UAV. Biosyst. Eng. 2016, 146, 16–32.

69. Vitzrabin, E.; Edan, Y. Adaptive thresholding with fusion using a RGB-D sensor for red sweet-pepper detection. Biosyst. Eng. 2016, 146, 45–56.

70. Barnea, E.; Mairon, R.; Ben-Shahar, O. Colour-agnostic shape-based 3D fruit detection for crop harvesting robots. Biosyst. Eng. 2016, 146, 57–70.

71. Rakun, J.; Stajnko, D.; Zazula, D. Detecting fruits in natural scenes by using spatial-frequency based texture analysis and multiview geometry. Comput. Electron. Agric. 2011, 76, 80–88.

72. Kurtulmus, F.; Lee, W.S.; Vardar, A. Green citrus detection using ‘eigenfruit’, color and circular Gabor texture features under natural outdoor conditions. Comput. Electron. Agric. 2011, 78, 140–149.

73. Song, Y.; Glasbey, C.A.; Horgan, G.W.; Polder, G.; Dieleman, J.A.; Van der Heijden, G.W.A.M. Automatic fruit recognition and counting from multiple images. Biosyst. Eng. 2014, 118, 203–215.

74. Ostovar, A.; Ringdahl, O.; Hellström, T. Adaptive image thresholding of yellow peppers for a harvesting robot. Robotics 2018, 7, 11.

75. Zhao, D.A.; Lv, J.D.; Ji, W.; Zhang, Y.; Chen, Y. Design and control of an apple harvesting robot. Biosyst. Eng. 2011, 110, 112–122.

76. Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How Transferable Are Features in Deep Neural Networks? In Proceedings of the 27th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 3320–3328.

77. Sun, H.; Li, S.; Li, M.; Liu, H.; Qing, L.; Zhang, Y. Research Progress of Image Sensing and Deep Learning in Agriculture. Trans. Chin. Soc. Agric. Mach. 2020, 51, 1–17.

78. Wan, S.; Goudos, S. Faster R-CNN for multi-class fruit detection using a robotic vision system. Comput. Netw. 2020, 168, 107036.

79. Zhao, D.; Wu, R.; Liu, X.; Zhao, Y. Apple positioning based on YOLO deep convolutional neural network for picking robot in complex background. Trans. Chin. Soc. Agric. Eng. 2019, 35, 164–173.

80. Yan, B.; Fan, P.; Lei, X.; Liu, Z.; Yang, F. A Real-Time Apple Targets Detection Method for Picking Robot Based on Improved YOLOv5. Remote Sens. 2021, 13, 1619.

81. Kounalakis, N.; Kalykakis, E.; Pettas, M.; Makris, A.; Kavoussanos, M.M.; Sfakiotakis, M.; Fasoulas, J. Development of a Tomato Harvesting Robot: Peduncle Recognition and Approaching. In Proceedings of the 2021 3rd International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA), Ankara, Turkey, 11–13 June 2021; pp. 1–6.

82. Birrell, S.; Hughes, J.; Cai, J.Y.; Iida, F. A field-tested robotic harvesting system for iceberg lettuce. J. Field Robot. 2020, 37, 225–245.

83. Yu, Y.; Zhang, K.; Liu, H.; Yang, L.; Zhang, D. Real-time visual localization of the picking points for a ridge-planting strawberry harvesting robot. IEEE Access 2020, 8, 116556–116568.

84. Kirk, R.; Cielniak, G.; Mangan, M. L*a*b*Fruits: A Rapid and Robust Outdoor Fruit Detection System Combining Bio-Inspired Features with One-Stage Deep Learning Networks. Sensors 2020, 20, 275.

85. Cui, Z.; Sun, H.M.; Yu, J.T.; Yin, R.N.; Jia, R.S. Fast detection method of green peach for application of picking robot. Appl. Intell. 2022, 52, 1718–1739.

86. Zhang, X.; Karkee, M.; Zhang, Q.; Whiting, M.D. Computer vision-based tree trunk and branch identification and shaking points detection in Dense-Foliage canopy for automated harvesting of apples. J. Field Robot. 2021, 38, 476–493.

87. Yu, Y.; Zhang, K.; Yang, L.; Zhang, D. Fruit detection for strawberry harvesting robot in non-structural environment based on Mask-RCNN. Comput. Electron. Agric. 2019, 163, 104846.

88. Jia, W.; Tian, Y.; Luo, R.; Zhang, Z.; Lian, J.; Zheng, Y. Detection and segmentation of overlapped fruits based on optimized mask R-CNN application in apple harvesting robot. Comput. Electron. Agric. 2020, 172, 105380.

89. Kaczmarek, A.L. Stereo vision with Equal Baseline Multiple Camera Set (EBMCS) for obtaining depth maps of plants. Comput. Electron. Agric. 2017, 135, 23–37.

90. Xiang, R.; Jiang, H.; Ying, Y. Recognition of clustered tomatoes based on binocular stereo vision. Comput. Electron. Agric. 2014, 106, 75–90.

91. Si, Y.; Liu, G.; Feng, J. Location of apples in trees using stereoscopic vision. Comput. Electron. Agric. 2015, 112, 68–74.

92. Eizentals, P.; Oka, K. 3D pose estimation of green pepper fruit for automated harvesting. Comput. Electron. Agric. 2016, 128, 127–140.

93. Gongal, A.; Silwal, A.; Amatya, S.; Karkee, M.; Zhang, Q.; Lewis, K. Apple crop-load estimation with over-the-row machine vision system. Comput. Electron. Agric. 2016, 120, 26–35.

94. Feng, Q.; Cheng, W.; Zhou, J.; Wang, X. Design of structured-light vision system for tomato harvesting robot. Int. J. Agric. Biol. Eng. 2014, 7, 19–26.

95. Lehnert, C.; English, A.; McCool, C.; Tow, A.W.; Perez, T. Autonomous sweet pepper harvesting for protected cropping systems. IEEE Robot. Autom. Lett. 2017, 2, 872–879.

96. Feng, Q.; Wang, X.; Zhang, M.; Zhang, Z.; Chen, J. Visual system with distant and close combined views for agricultural robot. Int. Agric. Eng. J. 2019, 28, 324–329.

97. Nguyen, T.T.; Vandevoorde, K.; Wouters, N. Detection of red and bicoloured apples on tree with an RGB-D camera. Biosyst. Eng. 2016, 146, 33–44.

98. Kang, H.; Zhou, H.; Wang, X.; Chen, C. Real-Time Fruit Recognition and Grasping Estimation for Robotic Apple Harvesting. Sensors 2020, 20, 5670.

99. Lehnert, C.; Tsai, D.; Eriksson, A.; McCool, C. 3D move to see: Multi-perspective visual servoing for improving object views with semantic segmentation. arXiv 2018, arXiv:1809.07896.

100. Mehta, S.S.; MacKunis, W.; Burks, T.F. Robust visual servo control in the presence of fruit motion for robotic citrus harvesting. Comput. Electron. Agric. 2016, 123, 362–375.

101. Barth, R.; Hemming, J.; Van Henten, E.J. Angle estimation between plant parts for grasp optimisation in harvest robots. Biosyst. Eng. 2019, 183, 26–46.

102. Hemming, J.; Ruizendaal, J.; Hofstee, J.W.; Van Henten, E.J. Fruit detectability analysis for different camera positions in sweet-pepper. Sensors 2014, 14, 6032–6044.

103. Bac, C.W.; Hemming, J.; Van Henten, E.J. Stem localization of sweet-pepper using the support wire as a visual cue. Comput. Electron. Agric. 2014, 105, 111–120.

104. Amatya, S.; Karkee, M.; Gongal, A.; Zhang, Q.; Whiting, M.D. Detection of cherry tree branches with full foliage in planar architecture for automated sweet-cherry harvesting. Biosyst. Eng. 2016, 146, 3–15.

105. Li, J.; Tang, Y.; Zou, X.; Lin, G.; Wang, H. Detection of fruit-bearing branches and localization of litchi clusters for vision-based harvesting robots. IEEE Access 2020, 8, 117746–117758.

106. Feng, Q.; Wang, X.; Liu, J.; Cheng, W.; Chen, J. Tracking and Measuring Method of Tomato Main-stem Based on Visual Servo. Trans. Chin. Soc. Agric. Mach. 2020, 51, 221–228.

107. Liu, J.; Zhou, F.Y.; Yin, L.; Wang, Y. A novel cloud platform for service robots. IEEE Access 2019, 7, 182951–182961.

| Fruit | Sensor | Visual Information | Field of View |
|---|---|---|---|
| Pepper | RGB-D [10,21], binocular camera [22] | Fruit color [21], 3D point cloud [21], spatial coordinates [21], fruit stalk posture [21], plant main stem morphology [10] | Detection range 200–600 mm [22], height range 1000 mm [23] |
| Strawberry | Laser ranging sensor [24], 3 CCD cameras [25], binocular camera [26], RGB-D [5], infrared sensor [5] | Fruit color [25], position [26] and stem posture [5] | Detection range 200–700 mm [26,27], width range 350–670 mm [26,27], height range 200–300 mm [28] |
| Tomato | Photoelectric sensor [29], binocular camera [30], laser sensor [9], RGB-D [31] | Fruit color [9], size [9] and position [30], fruit stalk posture [31] | Detection range 400–1000 mm [32], height range 600 mm [33] |
| Apple | Binocular camera [34], RGB-D [35] | Fruit color, size and position [34,35] | Detection range 1000–2000 mm [36], height range 1000–1500 mm [7] |
| Citrus | Binocular camera [37], RGB-D [38] | Fruit color, size and position [37,38] | Detection range 500–1000 mm [38], height range 1850 mm [39] |
| Kiwi | Monocular camera + infrared position switch [40], binocular camera [41], RGB-D [42] | Fruit color, size and position [40], trunk shape [43] and position [11] | Detection range 500–1000 mm [42], visible area 3170 mm × 968 mm [43] and 1250 mm × 1800 mm [11] |

| Fruit | Sensor | Optimal Imaging Wavelength |
|---|---|---|
| Green Citrus | Color camera + thermal camera [55] | 750–1400 nm [55]; 827–850 nm [63] |
| Green Pepper | CCD camera + 6 wavelength filters [56]; multispectral camera [59] | 447, 562, 624, 692 and 716 nm and 900–1000 nm [56]; 750–1400 nm [59] |
| Apple | Color camera + 2 band-pass interference filters [60]; thermal imager [64] | 635 and 880 nm [60]; 750–1400 nm [64] |
| Tomato | Near-infrared camera + filter wheel with 3 filters [61] | 450, 600 and 900 nm [61] |
| Kiwi | Near-infrared image from Kinect camera [63] | 827–850 nm [63] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, Y.; Feng, Q.; Li, T.; Xie, F.; Liu, C.; Xiong, Z. Advance of Target Visual Information Acquisition Technology for Fresh Fruit Robotic Harvesting: A Review. Agronomy 2022, 12, 1336. https://doi.org/10.3390/agronomy12061336
