Dynamic Mapping of Paddy Rice Using Multi-Temporal Landsat Data Based on a Deep Semantic Segmentation Model

Abstract

1. Introduction

- Variation among crops and across years makes it difficult to build a single unified model. The separability of rice from other classes changes between images acquired on different dates, and the phenological similarity of images from different years has not been exploited to identify rice [29];

- These methods rely on hand-engineered features, and most appearance descriptors depend on a set of free parameters that users commonly tune through trial and error or cross-validation [30];

2. Materials and Process

2.1. Study Area

2.2. Data Collection and Pre-Processing

2.2.1. Landsat Imagery

2.2.2. Reference Data

2.3. Landsat Time Series Construction

- Removing cloud-contaminated pixels

- Linear interpolation
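The two steps listed above can be sketched for a single pixel's time series. This is a minimal illustration, not the authors' implementation: it assumes that cloud-contaminated observations have already been flagged and are represented as `None`, that acquisition days are sorted in ascending order, and that gaps at either end of the series are filled with the nearest valid value. The function name `fill_gaps_linear` and the sample values are hypothetical.

```python
from typing import List, Optional


def fill_gaps_linear(days: List[int],
                     values: List[Optional[float]]) -> List[Optional[float]]:
    """Fill None entries (masked observations) by linear interpolation in time.

    `days` must be sorted in ascending order. Gaps before the first or after
    the last valid observation take the nearest valid value (an assumption).
    """
    valid = [(d, v) for d, v in zip(days, values) if v is not None]
    if not valid:
        # Nothing to interpolate from: return the series unchanged.
        return list(values)

    filled: List[Optional[float]] = []
    for d, v in zip(days, values):
        if v is not None:
            filled.append(v)
            continue
        before = [(dd, vv) for dd, vv in valid if dd < d]
        after = [(dd, vv) for dd, vv in valid if dd > d]
        if before and after:
            (d0, v0), (d1, v1) = before[-1], after[0]
            # Straight line between the two neighbouring clear observations.
            filled.append(v0 + (v1 - v0) * (d - d0) / (d1 - d0))
        elif before:
            filled.append(before[-1][1])
        else:
            filled.append(after[0][1])
    return filled


# Hypothetical near-infrared reflectance on a 14-day grid; None marks cloud.
days = [100, 114, 128, 142, 156]
nir = [0.10, None, 0.30, None, None]
filled_nir = fill_gaps_linear(days, nir)
```

Here the gap on day 114 is filled with the midpoint of its two clear neighbours, while the trailing gaps repeat the last clear value. In practice the same operation would be applied per pixel and per band.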

2.4. The Optimal Bands Combination Based on the OIF Index
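The Optimum Index Factor (OIF) is conventionally defined, following Chavez et al. (listed in the references), as the sum of the standard deviations of three bands divided by the sum of the absolute values of their pairwise correlation coefficients; a higher value suggests more information with less redundancy. The sketch below ranks three-band combinations under that definition. The function names, the toy pixel values, and the use of the population standard deviation are illustrative assumptions rather than the authors' code.

```python
from itertools import combinations
from math import sqrt
from typing import Dict, List, Sequence, Tuple


def _mean(xs: Sequence[float]) -> float:
    return sum(xs) / len(xs)


def _std(xs: Sequence[float]) -> float:
    """Population standard deviation (assumed; a sample estimate also works)."""
    m = _mean(xs)
    return sqrt(sum((x - m) ** 2 for x in xs) / len(xs))


def _pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two bands."""
    mx, my = _mean(xs), _mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)
    sx, sy = _std(xs), _std(ys)
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant band")
    return cov / (sx * sy)


def oif(bands: Dict[str, Sequence[float]], combo: Sequence[str]) -> float:
    """OIF = sum of band standard deviations / sum of |pairwise correlations|."""
    sum_std = sum(_std(bands[name]) for name in combo)
    sum_abs_r = sum(abs(_pearson(bands[a], bands[b]))
                    for a, b in combinations(combo, 2))
    return sum_std / sum_abs_r


def rank_combinations(bands: Dict[str, Sequence[float]],
                      k: int = 3) -> List[Tuple[Tuple[str, ...], float]]:
    """Score every k-band combination and sort from highest to lowest OIF."""
    scored = [(combo, oif(bands, combo)) for combo in combinations(bands, k)]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Toy pixel values for illustration only; these are not real reflectances.
toy_bands = {
    "red": [1.0, 2.0, 3.0, 4.0],
    "nir": [2.0, 4.0, 6.0, 8.0],
    "swir1": [1.0, -1.0, 1.0, -1.0],
    "green": [1.0, 3.0, 2.0, 5.0],
}
ranking = rank_combinations(toy_bands)
```

The first element of `ranking` is the combination with the highest OIF for the supplied pixels. Computing it separately for all pixels and for rice pixels only would require nothing more than passing a different set of samples.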

3. Methodology

3.1. U-Net Neural Network

3.1.1. Network Structure

3.1.2. Structure of Training and Test Samples

3.1.3. Data Augmentation and Training Parameters

3.2. Other Classifier

3.3. Experiment Design

3.4. Accuracy Assessment and t-SNE (t-Distributed Stochastic Neighbor Embedding) Visualization
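Overall accuracy and F1 score, the two metrics this paper reports for the rice planting area, can be computed from a binary confusion matrix. The sketch below assumes labels coded as 1 for rice and 0 for non-rice; the function names and the sample labels are hypothetical and do not reproduce the paper's data.

```python
from typing import Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int],
                     positive: int = 1) -> Tuple[int, int, int, int]:
    """Return (tp, fp, fn, tn) for a binary rice / non-rice labelling."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1
        elif p == positive:
            fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def overall_accuracy(tp: int, fp: int, fn: int, tn: int) -> float:
    """Share of all samples that were labelled correctly."""
    return (tp + tn) / (tp + fp + fn + tn)


def f1_score(tp: int, fp: int, fn: int) -> float:
    """Harmonic mean of precision and recall for the positive (rice) class."""
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical reference and predicted labels (1 = rice, 0 = non-rice).
reference = [1, 1, 1, 0, 0, 0, 0, 1]
predicted = [1, 1, 0, 0, 0, 1, 0, 1]
tp, fp, fn, tn = confusion_counts(reference, predicted)
oa = overall_accuracy(tp, fp, fn, tn)
f1 = f1_score(tp, fp, fn)
```

Because F1 ignores true negatives, it is less inflated than overall accuracy when non-rice pixels dominate the scene.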

4. Results

4.1. Seasonal Profiles of Rice Spectral Characteristics

4.2. U-Net and RF Classifications Comparisons

4.3. Dynamic Mapping of Paddy Rice

4.3.1. Influence of Band Selection

4.3.2. Influence of Time Series Sampling

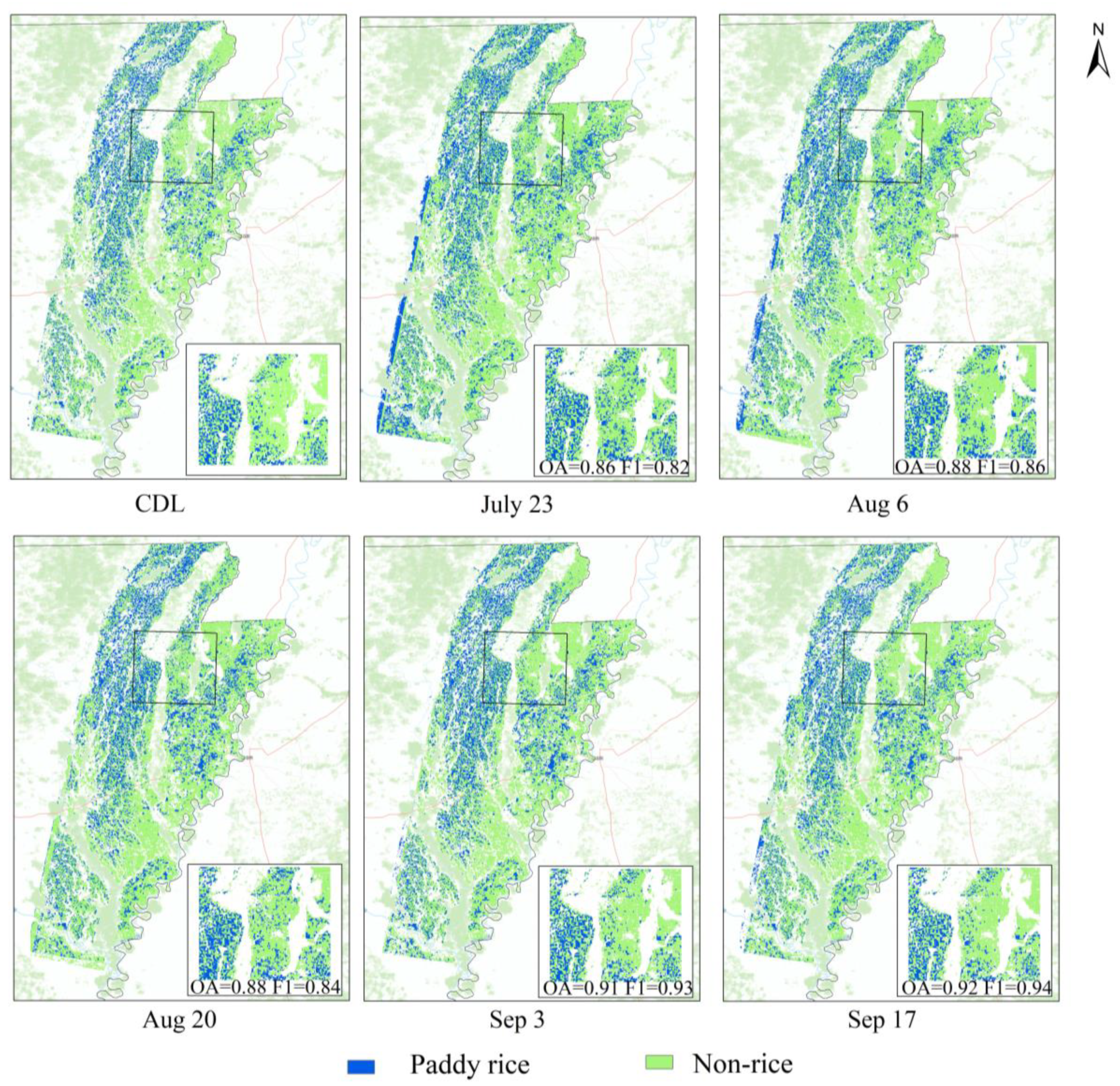

4.3.3. Dynamic Extraction of Paddy Rice

4.3.4. Dynamic Mapping and Comparison between the Paddy Rice Mapped and Statistical Data

5. Discussion

5.1. Comparison with Other Crop Extraction Studies

5.2. The Choice of Time Interval and Band Combination

5.3. Application of Dynamic Maps of Paddy Rice

6. Conclusions

- The end-to-end semantic segmentation model outperformed the traditional Random Forest classifier on multi-temporal remote sensing data;

- The band combination of red, near-infrared, and Swir-1 could identify rice and reduced the training time compared with using all Landsat bands;

- A time interval of fourteen days better captured the spectral characteristics of rice. Performance increased clearly as more years of data were included, and the gain plateaued after approximately five years;

- During early rice paddy growth, the heading phase proved to be an important time window for rice mapping. The overall accuracy and F1 score of the rice paddy planting area were 0.86 and 0.82, respectively.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Kuenzer, C.; Knauer, K. Remote sensing of rice crop areas. Int. J. Remote Sens. 2012, 34, 2101–2139.

- Kim, M.K.; Tejeda, H.; Yu, T.E. U.S. milled rice markets and integration across regions and types. Int. Food Agribus. Manag. Rev. 2017, 20, 623–636.

- Qin, Y.W.; Xiao, X.M.; Dong, J.W.; Zhou, Y.; Zhu, Z.; Zhang, G.; Du, G.; Jin, C.; Kou, W.; Wang, J.; et al. Mapping paddy rice planting area in cold temperate climate region through analysis of time series Landsat 8 (OLI), Landsat 7 (ETM+) and MODIS imagery. ISPRS J. Photogramm. Remote Sens. 2015, 105, 220–233.

- Dong, J.W.; Xiao, X.M.; Menarguez, M.A.; Zhang, G.; Qin, Y.; Thau, D.; Biradar, C.; Moore, B. Mapping paddy rice planting area in northeastern Asia with Landsat 8 images, phenology-based algorithm and Google Earth Engine. Remote Sens. Environ. 2016, 185, 142–154.

- Zhou, Y.T.; Xiao, X.M.; Qin, Y.W.; Dong, J.; Zhang, G.; Kou, W.; Jin, C.; Wang, J.; Li, X. Mapping paddy rice planting area in rice-wetland coexistent areas through analysis of Landsat 8 OLI and MODIS images. Int. J. Appl. Earth Obs. Geoinf. 2016, 46, 1–12.

- Espe, M.B.; Cassman, K.G.; Yang, H.; Guilpart, N.; Grassini, P.; Van Wart, J.; Anders, M.; Beighley, D.; Harrell, D.; Linscombe, S.; et al. Yield gap analysis of US rice production systems shows opportunities for improvement. Field Crops Res. 2016, 196, 276–283.

- FAO; IFAD; UNICEF; WFP; WHO. The State of Food Security and Nutrition in the World 2020; Food and Agriculture Organization of the United Nations: Rome, Italy, 2020; ISBN 978-92-5-132901-6.

- Dong, J.W.; Xiao, X.M. Evolution of regional to global paddy rice mapping methods: A review. ISPRS J. Photogramm. Remote Sens. 2016, 119, 214–227.

- Liu, L.; Xiao, X.M.; Qin, Y.W.; Wang, J.; Xu, X.; Hu, Y.; Qiao, Z. Mapping cropping intensity in China using time series Landsat and Sentinel-2 images and Google Earth Engine. Remote Sens. Environ. 2020, 239, 111624.

- Gandharum, L.; Mulyani, M.E.; Hartono, D.M.; Karsidi, A.; Ahmad, M. Remote sensing versus the area sampling frame method in paddy rice acreage estimation in Indramayu regency, West Java province, Indonesia. Int. J. Remote Sens. 2020, 42, 1738–1767.

- Rawat, A.; Kumar, A.; Upadhyay, P.; Kumar, S. Deep learning-based models for temporal satellite data processing: Classification of paddy transplanted fields. Ecol. Inform. 2021, 61, 101214.

- Gusso, A.; Guo, W.; Rolim, S.B.A. Reflectance-based model for soybean mapping in United States at common land unit scale with Landsat 8. Eur. J. Remote Sens. 2019, 52, 522–531.

- Jin, C.; Xiao, X.M.; Dong, J.W.; Qin, Y.; Wang, Z. Mapping paddy rice distribution using multi-temporal Landsat imagery in the Sanjiang Plain, northeast China. Front. Earth Sci. 2016, 10, 49–62.

- Xu, J.F.; Zhu, Y.; Zhong, R.H.; Lin, Z.X.; Xu, J.; Jiang, H.; Huang, J.; Li, H.; Lin, T. DeepCropMapping: A multi-temporal deep learning approach with improved spatial generalizability for dynamic corn and soybean mapping. Remote Sens. Environ. 2020, 247, 111946.

- Zhu, Z.; Woodcock, C.E. Continuous change detection and classification of land cover using all available Landsat data. Remote Sens. Environ. 2014, 144, 152–171.

- Inglada, J.; Arias, M.; Tardy, B.; Hagolle, O.; Valero, S.; Morin, D.; Dedieu, G.; Sepulcre, G.; Bontemps, S.; Defourny, P.; et al. Assessment of an operational system for crop type map production using high temporal and spatial resolution satellite optical imagery. Remote Sens. 2015, 7, 12356–12379.

- Xiao, X.M.; Boles, S.; Liu, J.Y.; Zhuang, D.; Frolking, S.; Li, C.; Salas, W.; Moore, B. Mapping paddy rice agriculture in southern China using multi-temporal MODIS images. Remote Sens. Environ. 2005, 95, 480–492.

- Singha, M.; Wu, B.F.; Zhang, M. Object-based paddy rice mapping using HJ-1A/B data and temporal features extracted from time series MODIS NDVI data. Sensors 2016, 17, 10.

- Wardlow, B.D.; Egbert, S.L. Large-area crop mapping using time-series MODIS 250 m NDVI data: An assessment for the U.S. Central Great Plains. Remote Sens. Environ. 2008, 112, 1096–1116.

- Yang, L.B.; Wang, L.M.; Abubakar, G.A.; Huang, J. High-resolution rice mapping based on SNIC segmentation and multi-source remote sensing images. Remote Sens. 2021, 13, 1148.

- You, N.S.; Dong, J.W. Examining earliest identifiable timing of crops using all available Sentinel 1/2 imagery and Google Earth Engine. ISPRS J. Photogramm. Remote Sens. 2020, 161, 109–123.

- Hao, P.Y.; Zhan, Y.L.; Wang, L.; Niu, Z.; Shakir, M. Feature selection of time series MODIS data for early crop classification using Random Forest: A case study in Kansas, USA. Remote Sens. 2015, 7, 5347–5369.

- Pelletier, C.; Valero, S.; Inglada, J.; Champion, N.; Dedieu, G. Assessing the robustness of Random Forests to map land cover with high resolution satellite image time series over large areas. Remote Sens. Environ. 2016, 187, 156–168.

- Chen, C.F.; Son, N.T.; Chen, C.R.; Chang, L.Y. Wavelet filtering of time-series moderate resolution imaging spectroradiometer data for rice crop mapping using support vector machines and maximum likelihood classifier. Med. Eng. Phys. 2011, 5, 3525.

- Ghassemi, B.; Dujakovic, A.; Zoltak, M.; Immitzer, M.; Atzberger, C.; Vuolo, F. Designing a European-wide crop type mapping approach based on machine learning algorithms using LUCAS field survey and Sentinel-2 data. Remote Sens. 2022, 14, 541.

- Son, N.T.; Chen, C.F.; Chen, C.R.; Minh, V.Q. Assessment of Sentinel-1A data for rice crop classification using random forests and support vector machines. Geocarto Int. 2018, 33, 587–601.

- Saini, R.; Ghosh, S.K. Crop classification in a heterogeneous agricultural environment using ensemble classifiers and single-date Sentinel-2A imagery. Geocarto Int. 2021, 36, 2141–2159.

- Belgiu, M.; Csillik, O. Sentinel-2 cropland mapping using pixel-based and object-based time-weighted dynamic time warping analysis. Remote Sens. Environ. 2018, 204, 509–523.

- Wei, P.L.; Chai, D.F.; Lin, T.; Tang, C.; Du, M.; Huang, J. Large-scale rice mapping under different years based on time-series Sentinel-1 images using deep semantic segmentation model. ISPRS J. Photogramm. Remote Sens. 2021, 174, 198–214.

- Du, Z.R.; Yang, J.Y.; Ou, C.; Zhang, T. Smallholder crop area mapped with a semantic segmentation deep learning method. Remote Sens. 2019, 11, 888.

- Zhang, W.C.; Liu, H.B.; Wu, W.; Zhan, L.; Wei, J. Mapping rice paddy based on machine learning with Sentinel-2 multi-temporal data: Model comparison and transferability. Remote Sens. 2020, 12, 1620.

- Ding, J.; Chen, B.; Liu, H.W.; Huang, M. Convolutional neural network with data augmentation for SAR target recognition. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1–5.

- Ji, S.P.; Zhang, Z.L.; Zhang, C.; Wei, S.; Lu, M.; Duan, Y. Learning discriminative spatiotemporal features for precise crop classification from multi-temporal satellite images. Int. J. Remote Sens. 2019, 41, 3162–3174.

- Li, F.; Zhang, C.M.; Zhang, W.W.; Xu, Z.; Wang, S.; Sun, G.; Wang, Z. Improved winter wheat spatial distribution extraction from high-resolution remote sensing imagery using semantic features and statistical analysis. Remote Sens. 2020, 12, 538.

- Hajj, M.E.; Baghdadi, N.; Zribi, M.; Bazzi, H. Synergic use of Sentinel-1 and Sentinel-2 images for operational soil moisture mapping at high spatial resolution over agricultural areas. Remote Sens. 2017, 9, 1292.

- Boryan, C.; Yang, Z.; Mueller, R.; Craig, M. Monitoring US agriculture: The US department of agriculture, national agricultural statistics service, Cropland Data Layer program. Geocarto Int. 2011, 26, 341–358.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Berlin/Heidelberg, Germany, 2015.

- Zhong, L.H.; Hu, L.N.; Zhou, H. Deep learning based multi-temporal crop classification. Remote Sens. Environ. 2019, 221, 430–443.

- Wang, J.; Xiao, X.M.; Liu, L.; Wu, X.; Qin, Y.; Steiner, J.L.; Dong, J. Mapping sugarcane plantation dynamics in Guangxi, China, by time series Sentinel-1, Sentinel-2 and Landsat images. Remote Sens. Environ. 2020, 247, 111951.

- Griffiths, P.; Nendel, C.; Hostert, P. Intra-annual reflectance composites from Sentinel-2 and Landsat for national-scale crop and land cover mapping. Remote Sens. Environ. 2019, 220, 135–151.

- Cai, Y.P.; Guan, K.Y.; Peng, J.; Wang, S.; Seifert, C.; Wardlow, B.; Li, Z. A high-performance and in-season classification system of field-level crop types using time-series Landsat data and a machine learning approach. Remote Sens. Environ. 2018, 210, 35–47.

- Motschenbacher, J.M.; Brye, K.R.; Anders, M.M.; Gbur, E.E.; Slaton, N.A.; Evans-White, M.A. Daily soil surface CO2 flux during non-flooded periods in flood-irrigated rice rotations. Agron. Sustain. Dev. 2014, 35, 771–782.

- Brouwer, C.; Prins, K.; Heibloem, M. Irrigation Water Management: Irrigation Scheduling; Training manual No. 4; FAO: Rome, Italy, 1989.

- Chavez, P.S.; Berlin, G.L.; Sowers, L.B. Statistical-method for selecting Landsat MSS ratios. J. Appl. Photogr. Eng. 1982, 8, 23–30.

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 39, 640–651.

- Chai, D.F.; Newsam, S.; Zhang, H.K.; Qiu, Y.F.; Huang, J. Cloud and cloud shadow detection in Landsat imagery based on deep convolutional neural networks. Remote Sens. Environ. 2019, 225, 307–316.

- Waldner, F.; Diakogiannis, F.I. Deep learning on edge: Extracting field boundaries from satellite images with a convolutional neural network. Remote Sens. Environ. 2020, 245, 111741.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Zhao, Y.Y.; Feng, D.L.; Yu, L.; Wang, X.Y.; Chen, Y.; Bai, Y.; Hernández, H.J.; Galleguillos, M.; Estades, C.; Biging, G.S.; et al. Detailed dynamic land cover mapping of Chile: Accuracy improvement by integrating multi-temporal data. Remote Sens. Environ. 2016, 183, 170–185.

- Belgiu, M.; Stein, A. Spatiotemporal image fusion in Remote Sensing. Remote Sens. 2019, 11, 818.

- Rodriguez-Galiano, V.F.; Chica-Olmo, M.; Abarca-Hernandez, F.; Atkinson, P.M.; Jeganathan, C. Random Forest classification of Mediterranean land cover using multi-seasonal imagery and multi-seasonal texture. Remote Sens. Environ. 2012, 121, 93–107.

- Van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

- Son, N.T.; Chen, C.F.; Chen, C.R.; Duc, H.N.; Chang, L.Y. A phenology-based classification of time-series MODIS data for rice crop monitoring in Mekong Delta, Vietnam. Remote Sens. 2013, 6, 135–156.

- Ding, M.J.; Guan, Q.H.; Li, L.H.; Zhang, H.; Liu, C.; Zhang, L. Phenology-based rice paddy mapping using multi-source satellite imagery and a fusion algorithm applied to the Poyang Lake Plain, Southern China. Remote Sens. 2020, 12, 1022.

- Linquist, B.A.; Marcos, M.; Adviento-Borbe, M.A.; Anders, M.; Harrell, D.; Linscombe, S.; Reba, M.L.; Runkle, B.R.K.; Tarpley, L.; Thomson, A. Greenhouse Gas Emissions and Management Practices that Affect Emissions in US Rice Systems. J. Environ. Qual. 2018, 47, 395–409.

- Yang, H.J.; Pan, B.; Wu, W.F.; Tai, J. Field-based rice classification in Wuhua county through integration of multi-temporal Sentinel-1A and Landsat-8 OLI data. Int. J. Appl. Earth Obs. Geoinf. 2018, 69, 226–236.

- Su, D.; Kong, H.; Qiao, Y.L.; Sukkarieh, S. Data augmentation for deep learning based semantic segmentation and crop-weed classification in agricultural robotics. Comput. Electron. Agric. 2021, 190, 106418.

- Giannopoulos, M.; Tsagkatakis, G.; Tsakalides, P. 4D U-Nets for multi-temporal remote sensing data classification. Remote Sens. 2022, 14, 634.

- Boulila, W.; Sellami, M.; Driss, M.; Al-Sarem, M.; Safaei, M.; Ghaleb, F.A. RS-DCNN: A novel distributed convolutional-neural-networks based-approach for big remote-sensing image classification. Comput. Electron. Agric. 2021, 182, 106014.

- Yaramasu, R.; Bandaru, V.; Pnvr, K. Pre-season crop type mapping using deep neural networks. Comput. Electron. Agric. 2020, 176, 105664.

- Li, H.; Fu, D.J.; Huang, C.; Su, F.Z.; Liu, Q.S.; Liu, G.H.; Wu, S. An approach to high-resolution rice paddy mapping using time-series Sentinel-1 SAR data in the Mun River Basin, Thailand. Remote Sens. 2020, 12, 3959.

- Vuolo, F.; Neuwirth, M.; Immitzer, M.; Atzberger, C.; Ng, W.-T. How much does multi-temporal Sentinel-2 data improve crop type classification? Int. J. Appl. Earth Obs. Geoinf. 2018, 72, 122–130.

- Yuan, Q.Q.; Shen, H.F.; Li, T.W.; Li, Z.; Li, S.; Jiang, Y.; Xu, H.; Tan, W.; Yang, Q.; Wang, J.; et al. Deep learning in environmental remote sensing: Achievements and challenges. Remote Sens. Environ. 2020, 241, 111716.

- Ozdogan, M.; Yang, Y.; Allez, G.; Cervantes, C. Remote sensing of irrigated agriculture: Opportunities and challenges. Remote Sens. 2010, 2, 2274–2304.

- Wang, F.L.; Wang, F.M.; Zhang, Y.; Hu, J.H.; Huang, J.F.; Xie, J.K. Rice yield estimation using Parcel-Level relative spectra variables from UAV-based hyperspectral imagery. Front. Plant Sci. 2019, 10, 453.

- Cheng, Y.X.; Huang, J.F.; Han, Z.L.; Guo, J.P.; Zhao, Y.X.; Wang, X.Z.; Guo, R.F. Cold damage risk assessment of Double Cropping Rice in Hunan, China. J. Integr. Agric. 2013, 12, 352–363.

- Singha, M.; Dong, J.W.; Sarmah, S.; You, N.S.; Zhou, Y.; Zhang, G.L.; Doughty, R.; Xiao, X.M. Identifying floods and flood-affected paddy rice fields in Bangladesh based on Sentinel-1 imagery and Google Earth Engine. ISPRS J. Photogramm. Remote Sens. 2020, 166, 278–293.

- Zhang, G.; Zhang, S.; Wang, L.; Xiao, Y.; Tang, W.; Chen, G.; Chen, L. Effects of high temperature at different times during the heading and filling periods on rice quality. Sci. Agric. Sin. 2013, 46, 2869–2879.

- Boschetti, M.; Nelson, A.; Nutini, F.; Manfron, G.; Busetto, L.; Barbieri, M.; Laborte, A.; Raviz, J.; Holecz, F.; Mabalay, M.R.O.; et al. Rapid assessment of crop status: An application of MODIS and SAR data to rice areas in Leyte, Philippines affected by typhoon Haiyan. Remote Sens. 2015, 7, 6535–6557.

- Fu, X.; Zhao, G.N.; Wu, W.C.; Xu, B.; Li, J.; Zhou, X.T.; Ke, X.X.; Li, Y.; Li, W.J.; Zhou, C.M.; et al. Assessing the impacts of natural disasters on rice production in Jiangxi, China. Int. J. Remote Sens. 2022, 43, 1919–1941.

- Skakun, S.; Franch, B.; Vermote, E.; Roger, J.C.; Becker-Reshef, I.; Justice, C.; Kussul, N. Early season large-area winter crop mapping using MODIS NDVI data, growing degree days information and a Gaussian mixture model. Remote Sens. Environ. 2017, 195, 244–258.

- McNairn, H.; Kross, A.; Lapen, D.; Caves, R.; Shang, J. Early season monitoring of corn and soybeans with TerraSAR-X and RADARSAT-2. Int. J. Appl. Earth Obs. Geoinf. 2014, 28, 252–259.

| | Blue | Green | Red | Nir | Swir-1 | Swir-2 |
|---|---|---|---|---|---|---|
| Blue | 1 | 0.973 | 0.973 | 0.159 | 0.831 | 0.903 |
| Green | 0.977 | 1 | 0.974 | 0.266 | 0.822 | 0.878 |
| Red | 0.971 | 0.989 | 1 | 0.139 | 0.840 | 0.906 |
| Nir | 0.824 | 0.872 | 0.847 | 1 | 0.451 | 0.294 |
| Swir-1 | 0.887 | 0.863 | 0.874 | 0.859 | 1 | 0.964 |
| Swir-2 | 0.906 | 0.869 | 0.880 | 0.780 | 0.978 | 1 |

| | | Blue/Nir/Swir-1 | Blue/Nir/Swir-2 | Green/Nir/Swir-1 | Green/Nir/Swir-2 | Red/Nir/Swir-1 | Red/Nir/Swir-2 |
|---|---|---|---|---|---|---|---|
| All pixels | OIF | 7069.6 | 6730.2 | 7266.5 | 6932.1 | 7433.4 | 7080.2 |
| Rice pixels | OIF | 4305.3 | 4088.5 | 4438.3 | 4221.6 | 4537.3 | 4320.1 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Du, M.; Huang, J.; Wei, P.; Yang, L.; Chai, D.; Peng, D.; Sha, J.; Sun, W.; Huang, R. Dynamic Mapping of Paddy Rice Using Multi-Temporal Landsat Data Based on a Deep Semantic Segmentation Model. Agronomy 2022, 12, 1583. https://doi.org/10.3390/agronomy12071583
