Precision Chemical Weed Management Strategies: A Review and a Design of a New CNN-Based Modular Spot Sprayer

Abstract

1. Introduction

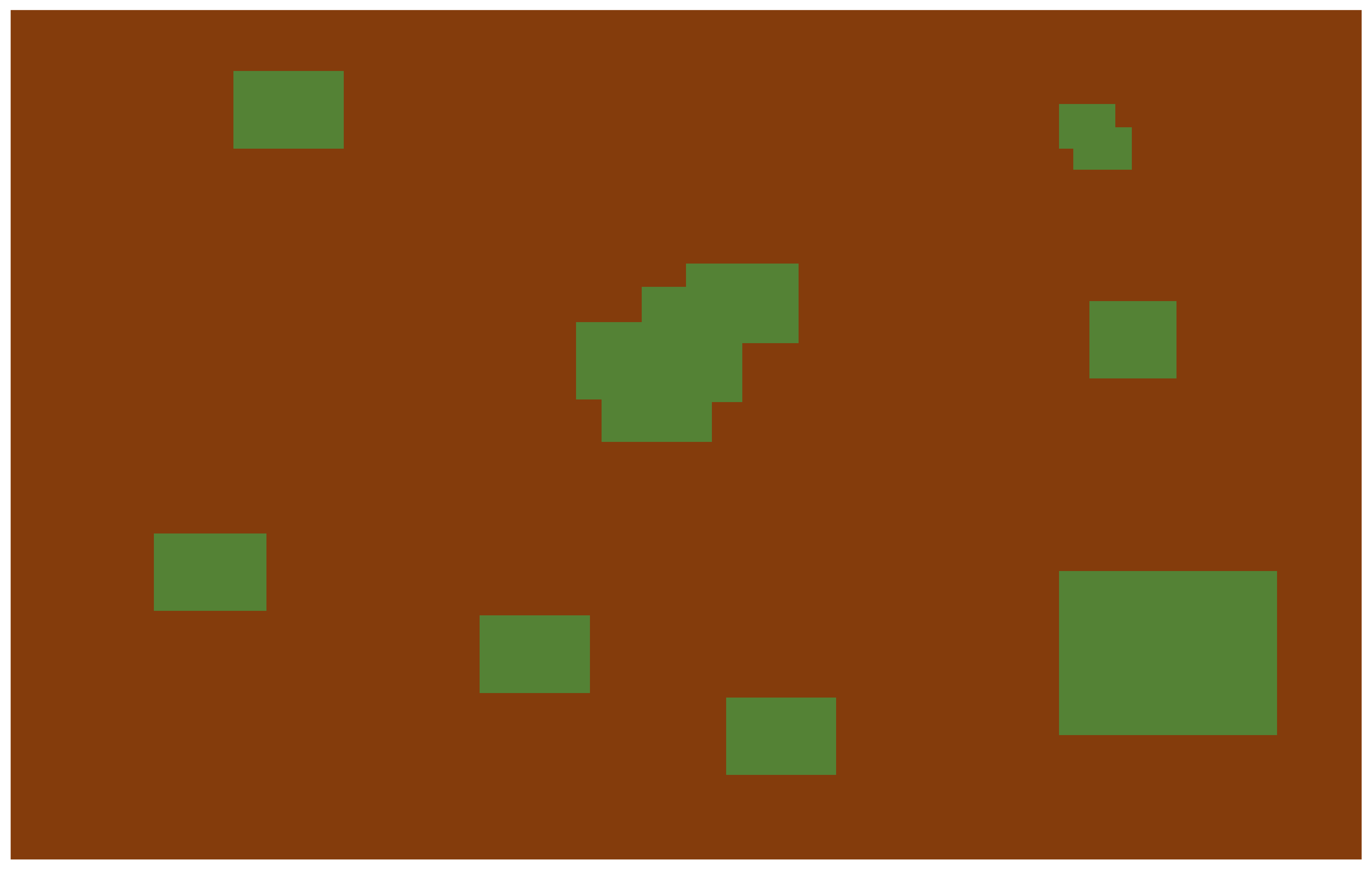

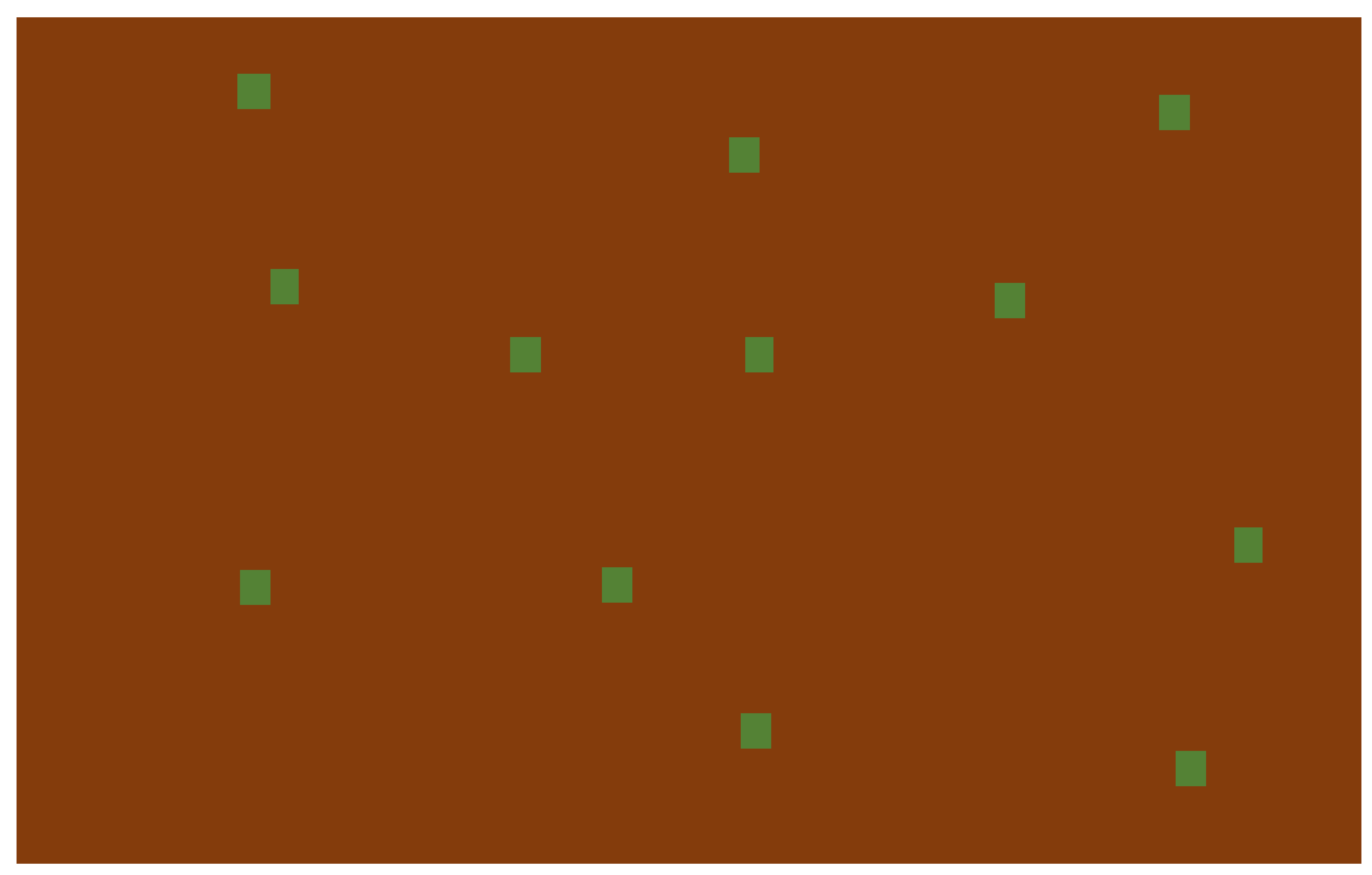

2. Patch Spraying

3. Spot Spraying

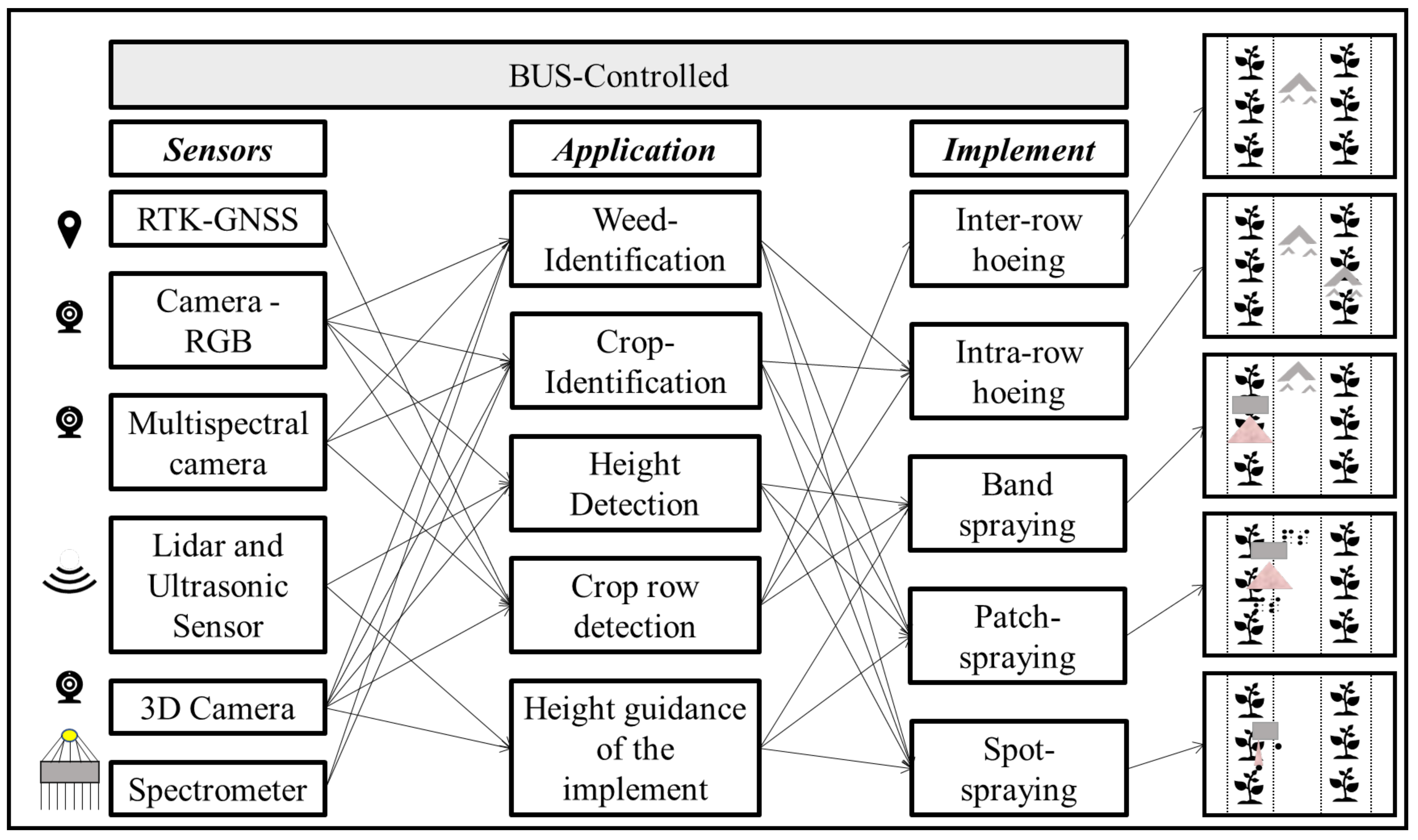

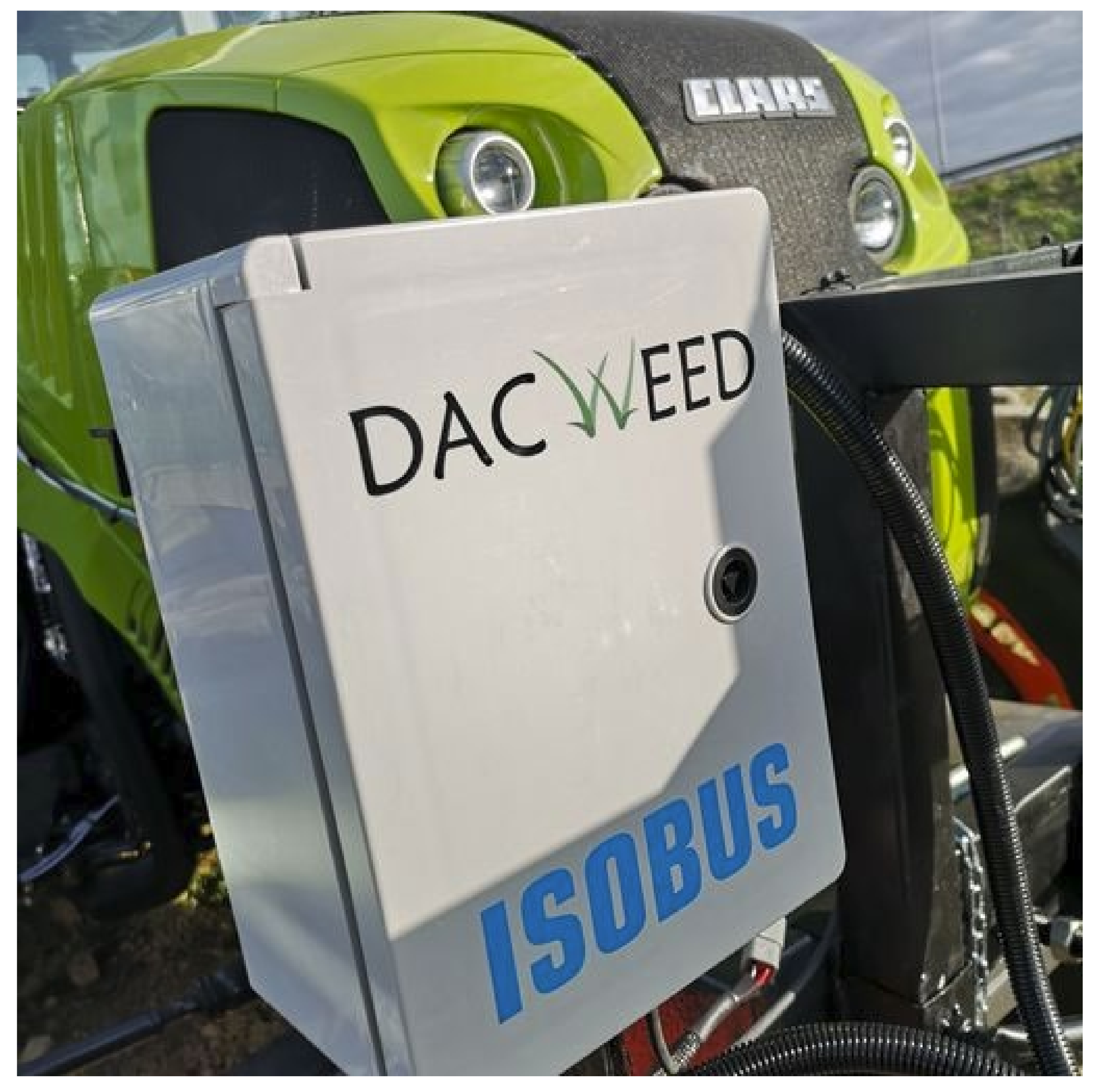

4. Integration of Precision Systems on Tractors

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GMOs | Genetically modified organisms |
| GoG | Green on Green |
| GoB | Green on Brown |
| DL | Deep Learning |
| CNN | Convolutional Neural Network |
| RICAP | Random Image Cropping and Patching |
| ILSVRC | ImageNet Large Scale Visual Recognition Challenge |
| ReLUs | Rectified Linear Units |


| Product/Trademark | Company | Technology | Sensors | Access | Herbicide Reduction | Application |
|---|---|---|---|---|---|---|
| Robotti | Agrointelli | Combining Deep Learning and Big Data | RTK-GPS, autonomous, Lidar, camera | Closed | 40–60% | Robot |
| ARA | Ecorobotix | CNN-based weed detection in sugar beet and spot spraying | Multi-camera vision system | Open | Up to 95% | Tractor-mounted |
| Bilberry | Bilberry | AI-based weed detection and spot spraying | RGB camera | Open | More than 80% | Robot |
| Weedseeker | Trimble Agriculture | Infrared sensors | High-resolution blue LED spectrometer | Open | 60–90% | Tractor-mounted |
| Weed-It | Weed-It | Detection of green vegetation | Blue LED lighting and spectrometer | Open | 95% (only in crop-free areas) | Tractor-mounted |
| FD20 | Farmdroid | RTK-GPS recorded position of crop seeds and spot spraying | RTK-GPS | Open | Unknown | Robot |
| H-Sensor | Agricon | AI-based weed detection in cereals and maize | Bi-spectral camera | Closed | 50% | Tractor-mounted |
| See & Spray | Blue River Technology | CNN-based weed detection in cotton and spot spraying | RGB cameras | Closed | Up to 90% | Tractor-mounted |
| EcoPatch | Dimensions Agri Technologies | AI-based weed detection and spot spraying | RGB camera | Closed | Unknown | Tractor-mounted |
| Kilter AX-1 | Kilter Systems | RTK-based crop detection and selective spraying in vegetables | robot | Open | Unknown | Robot |
| Greeneye | Greeneye Technology | AI-based weed detection and spot spraying | RGB camera | Open | Unknown | Tractor-mounted |
| Avirtech-MIMO | Avirtech | UAV-based weed mapping and patch spraying | 4D radar imaging | Closed | Unknown | Drone |
| Smart Spraying | BASF, Bosch, Amazone | Camera-based weed coverage measurement and spot spraying | Bi-spectral camera | Closed | 70% | Tractor-mounted |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Allmendinger, A.; Spaeth, M.; Saile, M.; Peteinatos, G.G.; Gerhards, R. Precision Chemical Weed Management Strategies: A Review and a Design of a New CNN-Based Modular Spot Sprayer. Agronomy 2022, 12, 1620. https://doi.org/10.3390/agronomy12071620

Allmendinger A, Spaeth M, Saile M, Peteinatos GG, Gerhards R. Precision Chemical Weed Management Strategies: A Review and a Design of a New CNN-Based Modular Spot Sprayer. Agronomy. 2022; 12(7):1620. https://doi.org/10.3390/agronomy12071620

Chicago/Turabian Style: Allmendinger, Alicia, Michael Spaeth, Marcus Saile, Gerassimos G. Peteinatos, and Roland Gerhards. 2022. "Precision Chemical Weed Management Strategies: A Review and a Design of a New CNN-Based Modular Spot Sprayer" Agronomy 12, no. 7: 1620. https://doi.org/10.3390/agronomy12071620

APA Style: Allmendinger, A., Spaeth, M., Saile, M., Peteinatos, G. G., & Gerhards, R. (2022). Precision Chemical Weed Management Strategies: A Review and a Design of a New CNN-Based Modular Spot Sprayer. Agronomy, 12(7), 1620. https://doi.org/10.3390/agronomy12071620