Surface Defect Detection of “Yuluxiang” Pear Using Convolutional Neural Network with Class-Balance Loss

Abstract

1. Introduction

2. Materials and Methods

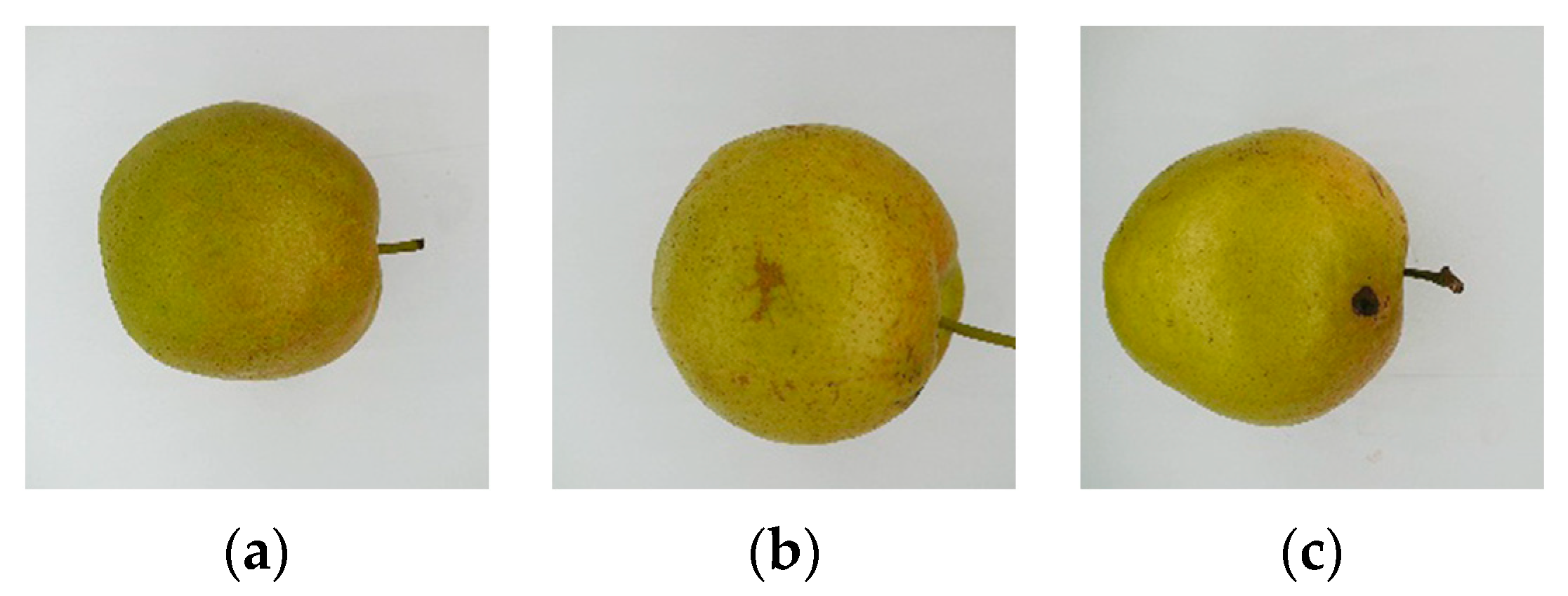

2.1. Dataset Construction

2.2. Class Balance

2.3. CNN Networks

2.4. Experimental Environment and Parameter

2.5. Evaluation Indicators

3. Results and Discussion

3.1. Effect of Class Balance Loss on Detection Models

3.1.1. Modeling Based on Class Balance Loss

3.1.2. Model Checking

3.2. Model Comparison

3.2.1. Comparison of CNN Models

3.2.2. Comparison of Traditional Machine Learning Models

3.3. Model Test

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Loss Function | Training Accuracy/% | Training Loss | Validation Accuracy/% | Validation Loss |
|---|---|---|---|---|
| SGM-CE | 82.27 | 0.5355 | 89.12 | 0.4906 |
| CB-SGM-CE | 100.00 | 0.0230 | 98.41 | 0.0950 |
| SM-CE | 92.76 | 0.2167 | 96.94 | 0.1849 |
| CB-SM-CE | 99.92 | 0.0160 | 98.64 | 0.0495 |
| FL | 91.89 | 0.0815 | 96.94 | 0.0593 |
| CB-FL | 100.00 | 0.0030 | 99.55 | 0.0428 |
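The "CB-" rows in the table above are the class-balanced variants of each loss. This extract does not spell out the weighting scheme, so the following is only a minimal sketch, assuming the widely used effective-number-of-samples formulation, in which the weight of a class with n samples is proportional to (1 - β) / (1 - βⁿ). The function names, the value of `beta`, and the per-class sample counts are all hypothetical and are not taken from the paper.

```python
import math


def class_balanced_weights(samples_per_class, beta=0.999):
    """Per-class weights proportional to (1 - beta) / (1 - beta ** n).

    Assumption: effective-number-of-samples weighting. The weights are
    rescaled so that they sum to the number of classes.
    """
    raw = [(1.0 - beta) / (1.0 - beta ** n) for n in samples_per_class]
    scale = len(raw) / sum(raw)
    return [w * scale for w in raw]


def cb_softmax_cross_entropy(logits, true_class, weights):
    """Softmax cross-entropy for one sample, scaled by its class weight."""
    m = max(logits)  # subtract the maximum for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return weights[true_class] * (log_sum_exp - logits[true_class])


if __name__ == "__main__":
    # Hypothetical training counts for a minority class and two larger classes.
    counts = [100, 1000, 2000]
    weights = class_balanced_weights(counts)
    print("weights:", [round(w, 3) for w in weights])
    # The same logits cost more when the true class is the minority class.
    print("minority-class loss:", cb_softmax_cross_entropy([0.2, 1.0, 0.5], 0, weights))
    print("majority-class loss:", cb_softmax_cross_entropy([1.0, 0.2, 0.5], 2, weights))
```

Under this weighting, a misclassified sample from a rare class contributes more to the loss than one from a common class, which is one plausible reading of why the minority-class results improve in the CB rows.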

| Loss Function | Precision/% (Russet) | Precision/% (Intact) | Precision/% (Canker) | Recall/% (Russet) | Recall/% (Intact) | Recall/% (Canker) | F1 Score (Russet) | F1 Score (Intact) | F1 Score (Canker) | Accuracy/% |
|---|---|---|---|---|---|---|---|---|---|---|
| SGM-CE | 100.00 | 85.93 | 92.48 | 42.85 | 100.00 | 100.00 | 0.600 | 0.924 | 0.961 | 90.55 |
| CB-SGM-CE | 100.00 | 100.00 | 98.27 | 94.56 | 100.00 | 100.00 | 0.972 | 1.000 | 0.991 | 99.10 |
| SM-CE | 99.26 | 99.29 | 97.63 | 91.16 | 100.00 | 99.78 | 0.950 | 0.996 | 0.987 | 98.43 |
| CB-SM-CE | 100.00 | 100.00 | 98.70 | 95.92 | 100.00 | 100.00 | 0.979 | 1.000 | 0.993 | 99.33 |
| FL | 99.25 | 98.58 | 97.63 | 89.80 | 100.00 | 99.78 | 0.943 | 0.993 | 0.987 | 98.20 |
| CB-FL | 100.00 | 100.00 | 99.56 | 98.64 | 100.00 | 100.00 | 0.993 | 1.000 | 0.998 | 99.78 |

| Network | Training Accuracy/% | Validation Accuracy/% | Test Accuracy/% |
|---|---|---|---|
| GoogLeNet | 100.00 | 99.55 | 99.78 |
| VGG 16 | 99.51 | 97.85 | 98.43 |
| AlexNet | 99.92 | 96.03 | 98.54 |
| SqueezeNet | 89.24 | 93.08 | 90.10 |
| MobileNet V2 | 99.51 | 95.81 | 98.99 |

| Network | Precision/% (Russet) | Precision/% (Intact) | Precision/% (Canker) | Recall/% (Russet) | Recall/% (Intact) | Recall/% (Canker) | F1 Score (Russet) | F1 Score (Intact) | F1 Score (Canker) |
|---|---|---|---|---|---|---|---|---|---|
| GoogLeNet | 100.00 | 100.00 | 99.56 | 98.64 | 100.00 | 100.00 | 0.993 | 1.000 | 0.998 |
| SqueezeNet | 96.83 | 84.82 | 92.86 | 41.50 | 99.30 | 100.00 | 0.581 | 0.915 | 0.963 |
| MobileNet V2 | 97.92 | 100.00 | 98.70 | 95.92 | 98.95 | 100.00 | 0.969 | 0.995 | 0.993 |
| VGG 16 | 98.54 | 99.65 | 97.64 | 91.84 | 99.65 | 99.78 | 0.951 | 0.997 | 0.987 |
| AlexNet | 97.14 | 99.65 | 98.30 | 93.79 | 98.61 | 100.00 | 0.954 | 0.991 | 0.991 |

| Model | Precision/% (Russet) | Precision/% (Intact) | Precision/% (Canker) | Recall/% (Russet) | Recall/% (Intact) | Recall/% (Canker) | F1 Score (Russet) | F1 Score (Intact) | F1 Score (Canker) | Accuracy/% |
|---|---|---|---|---|---|---|---|---|---|---|
| LS-SVM | 57.58 | 100.00 | 96.83 | 90.48 | 75.61 | 93.85 | 0.704 | 0.861 | 0.953 | 87.40 |
| BPNN | 54.55 | 93.55 | 98.41 | 85.71 | 70.73 | 95.39 | 0.667 | 0.806 | 0.969 | 85.83 |
| RF | 63.64 | 91.89 | 94.12 | 66.67 | 82.93 | 98.46 | 0.651 | 0.872 | 0.962 | 88.19 |
| PLS | 54.29 | 93.75 | 100.00 | 90.48 | 73.17 | 92.31 | 0.679 | 0.822 | 0.960 | 85.83 |
| DT | 42.86 | 91.89 | 81.58 | 28.57 | 82.93 | 95.39 | 0.343 | 0.872 | 0.879 | 80.32 |

| True Class | Predicted as Russet (No. of Samples) | Predicted as Intact (No. of Samples) | Predicted as Canker (No. of Samples) | Recall/% | F1 Score |
|---|---|---|---|---|---|
| Russet | 32 | 2 | 0 | 94.12 | 0.928 |
| Intact | 1 | 55 | 0 | 98.21 | 0.965 |
| Canker | 2 | 1 | 34 | 91.89 | 0.958 |
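The recall and F1 columns of the confusion matrix above follow directly from the sample counts. As a worked check, the sketch below recomputes them using the standard definitions (precision = TP / column total, recall = TP / row total, F1 = harmonic mean of the two). The function and variable names are illustrative and not from the paper.

```python
# Confusion matrix from the table above: rows are true classes, columns are
# predicted classes, both in the order Russet, Intact, Canker.
CLASSES = ["Russet", "Intact", "Canker"]
CONFUSION = [
    [32, 2, 0],
    [1, 55, 0],
    [2, 1, 34],
]


def per_class_metrics(matrix):
    """Return a list of (precision, recall, f1) tuples, one per class."""
    n = len(matrix)
    results = []
    for k in range(n):
        true_positive = matrix[k][k]
        row_total = sum(matrix[k])                       # samples truly in class k
        col_total = sum(matrix[i][k] for i in range(n))  # samples predicted as class k
        precision = true_positive / col_total if col_total else 0.0
        recall = true_positive / row_total if row_total else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        results.append((precision, recall, f1))
    return results


def overall_accuracy(matrix):
    """Fraction of all samples that lie on the diagonal."""
    correct = sum(matrix[k][k] for k in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


if __name__ == "__main__":
    for name, (p, r, f1) in zip(CLASSES, per_class_metrics(CONFUSION)):
        print(f"{name}: precision={p * 100:.2f}%  recall={r * 100:.2f}%  F1={f1:.3f}")
    print(f"overall accuracy={overall_accuracy(CONFUSION) * 100:.2f}%")
```

Rounded to the table's precision, the recomputed recall and F1 values agree with the reported ones; the per-class precision and overall accuracy are derived quantities that the table itself does not list.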

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sun, H.; Zhang, S.; Ren, R.; Su, L. Surface Defect Detection of “Yuluxiang” Pear Using Convolutional Neural Network with Class-Balance Loss. Agronomy 2022, 12, 2076. https://doi.org/10.3390/agronomy12092076
