Detection of the Grassland Weed Phlomoides umbrosa Using Multi-Source Imagery and an Improved YOLOv8 Network

Abstract

1. Introduction

- (1)

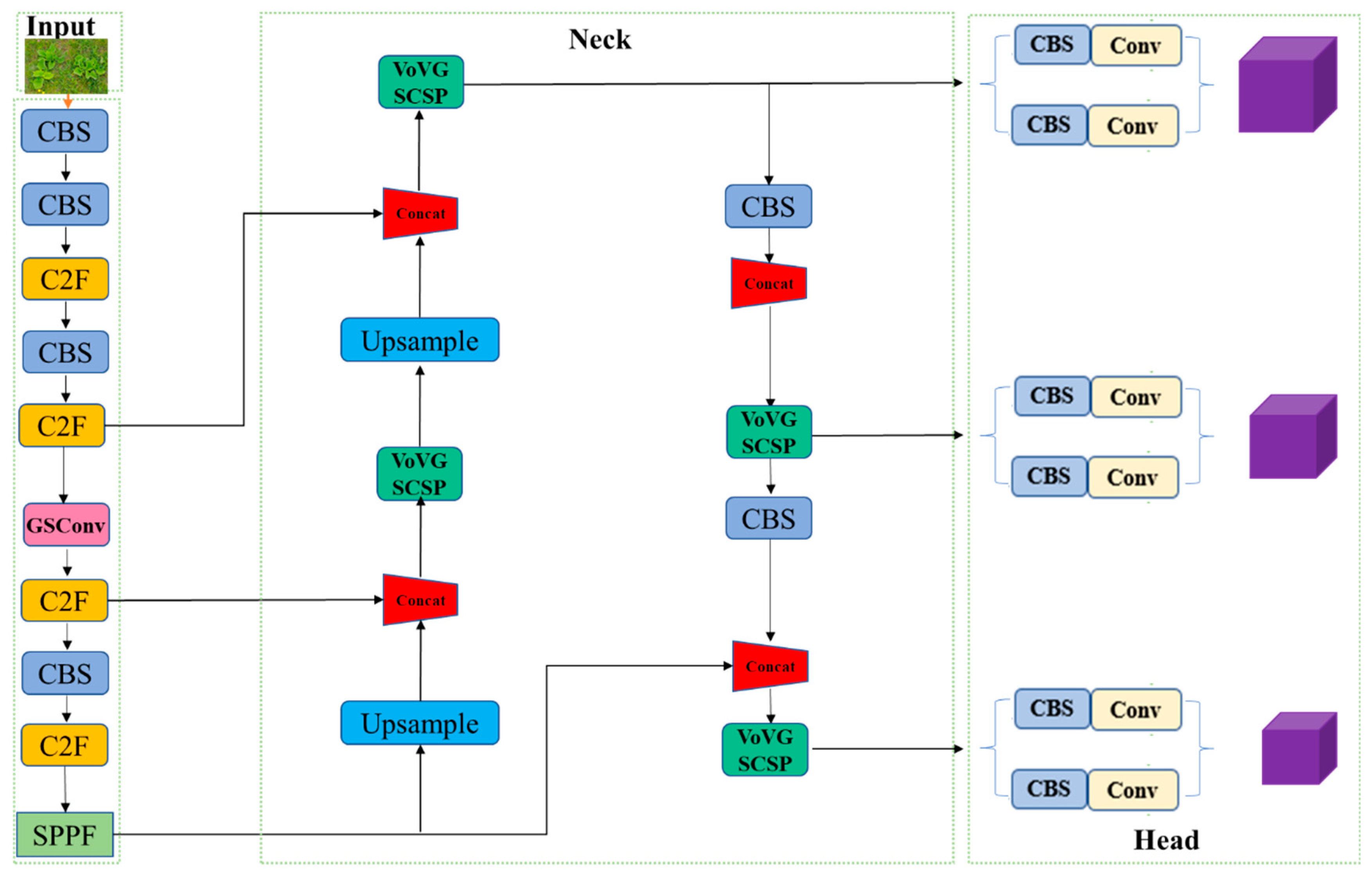

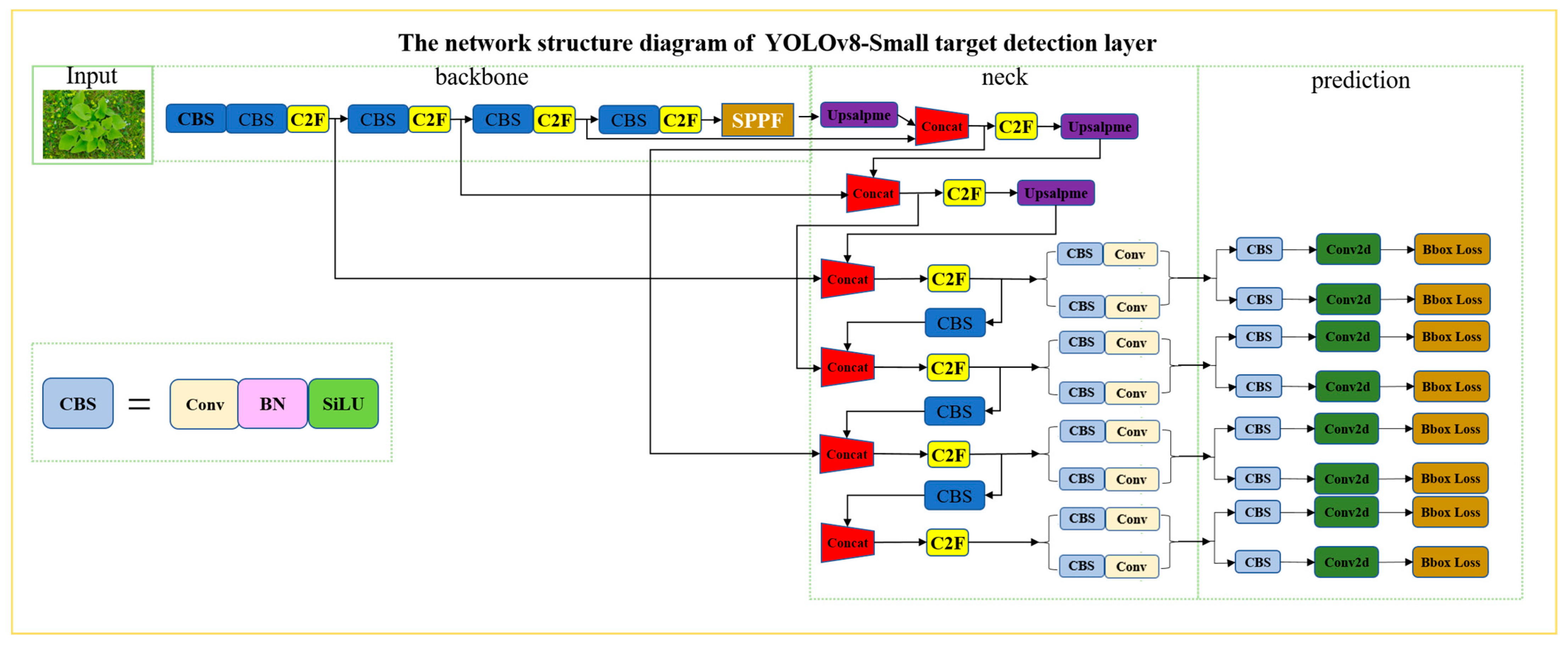

- In the neck structure of YOLOv8n, an additional small target detection layer with an output feature map scale of 160 × 160 is added to extract the details and features of P. umbrosa through a multi-receptive field, reducing the loss of small target feature information.

- (2)

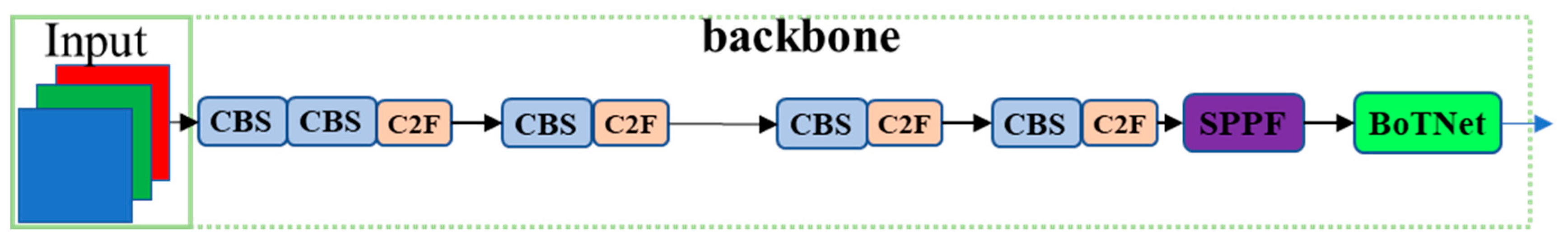

- Introduce the BoTNet module into the backbone network of YOLOv8n, combining convolution operations and a multi-head self-attention mechanism (MHSA) to address both local and global features, enhancing the richness and completeness of feature extraction.

- (3)

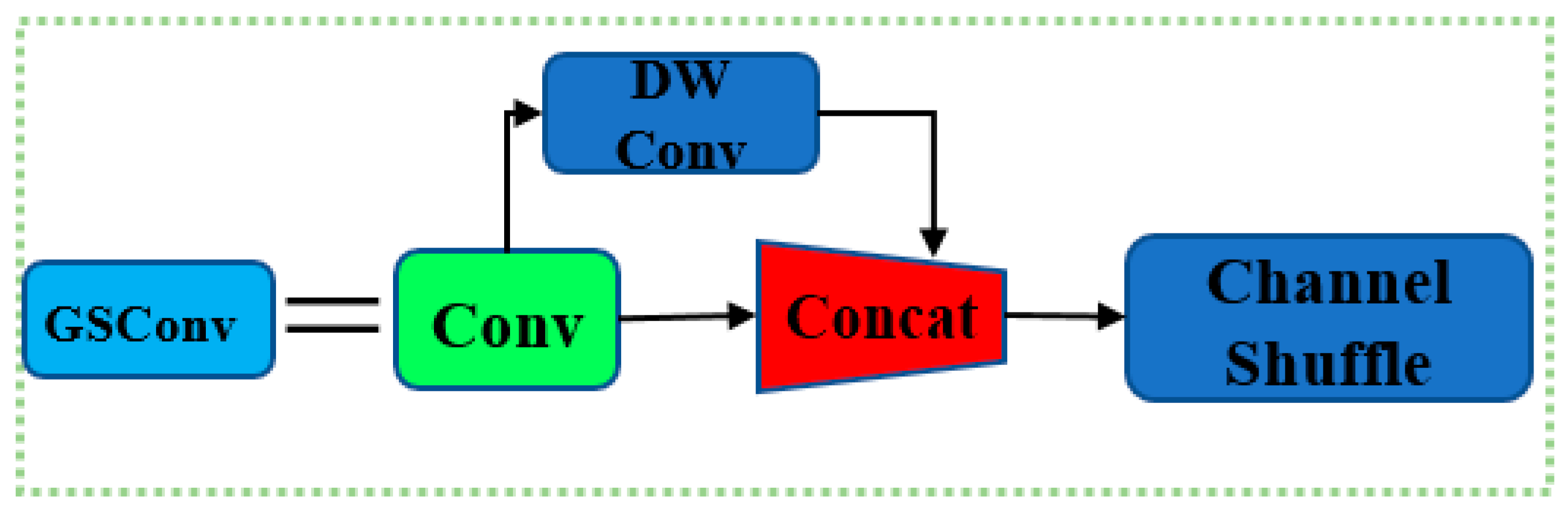

- GSConv is integrated into the YOLOv8n network to replace some standard convolutions. By fusing feature information extracted from standard convolution and depth-separable convolution, the computational complexity of the model is reduced and the diversity of feature extraction is increased, thereby improving the detection accuracy of the model.
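
The 160 × 160 output scale of the added detection layer can be related to the network stride with a few lines of arithmetic. The sketch below assumes a 640 × 640 network input (the common YOLOv8 default, not stated in this section) and the standard YOLOv8 head strides of 8, 16, and 32; under those assumptions the extra layer corresponds to a stride of 4. The names `INPUT_SIZE` and `feature_map_sizes` are illustrative only.

```python
# Assumption: square 640 x 640 network input (not stated in this section).
INPUT_SIZE = 640

# Strides of the three standard YOLOv8 detection heads.
DEFAULT_STRIDES = (8, 16, 32)
# Stride implied by a 160 x 160 output map under the 640-pixel assumption.
SMALL_TARGET_STRIDE = 4


def feature_map_sizes(input_size, strides):
    """Map each stride to the side length of its square output feature map."""
    return {stride: input_size // stride for stride in strides}


baseline = feature_map_sizes(INPUT_SIZE, DEFAULT_STRIDES)
improved = feature_map_sizes(INPUT_SIZE, (SMALL_TARGET_STRIDE,) + DEFAULT_STRIDES)

for stride, side in improved.items():
    # Each grid cell of a head covers stride x stride input pixels.
    print(f"stride {stride:>2}: {side} x {side} map, "
          f"one cell covers {stride} x {stride} input pixels")
```

Under these assumptions, one cell of the added layer covers 4 × 4 input pixels instead of 8 × 8 at the finest default head, which is why a layer at this scale can retain more detail for very small plants.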

2. Materials and Methods

2.1. Image Collection and Dataset Construction

2.1.1. Study Area and Data Collection

2.1.2. Image Preprocessing and Dataset Construction

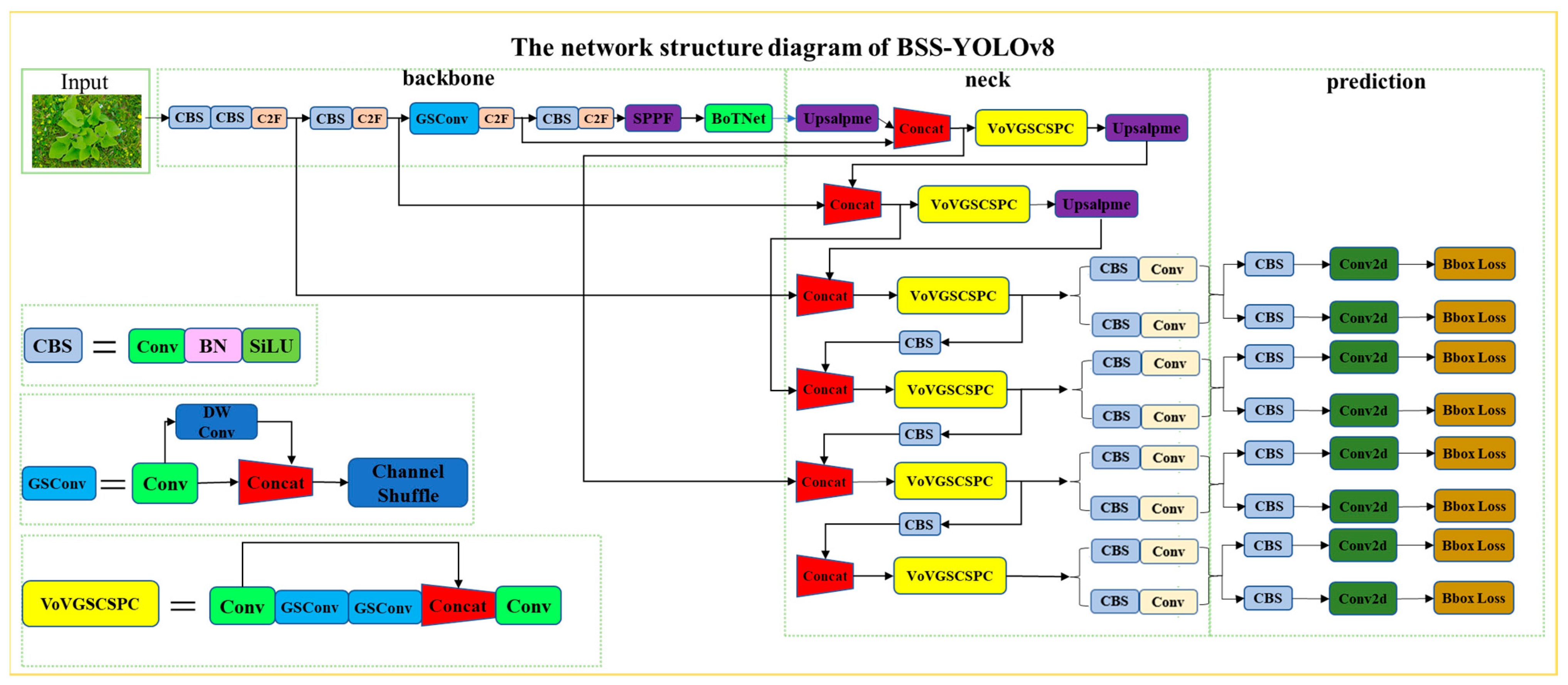

2.2. Construction of the BSS-YOLOv8 Network Model

2.2.1. YOLOv8 Network Model

2.2.2. YOLOv8-BoTNet

2.2.3. YOLOv8-GSConv

2.2.4. YOLOv8-Small Target Detection Layer

2.2.5. BSS-YOLOv8 Network Model

2.3. Experimental Design

2.4. Model Evaluation Metrics

3. Results

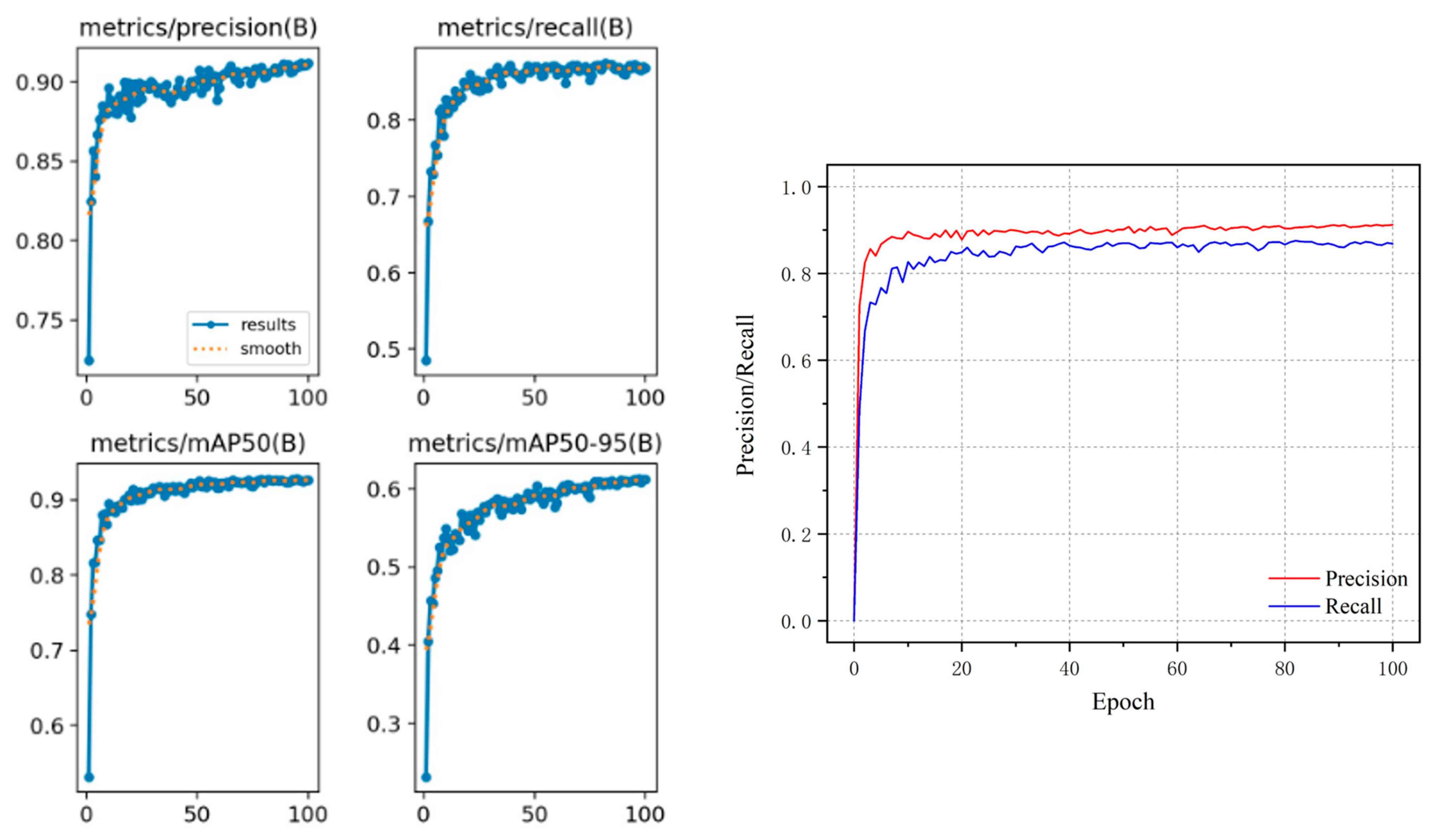

3.1. Improved BSS-YOLOv8 Object Detection Network

3.1.1. Loss Variations of BSS-YOLOv8

3.1.2. Detection Performance of BSS-YOLOv8

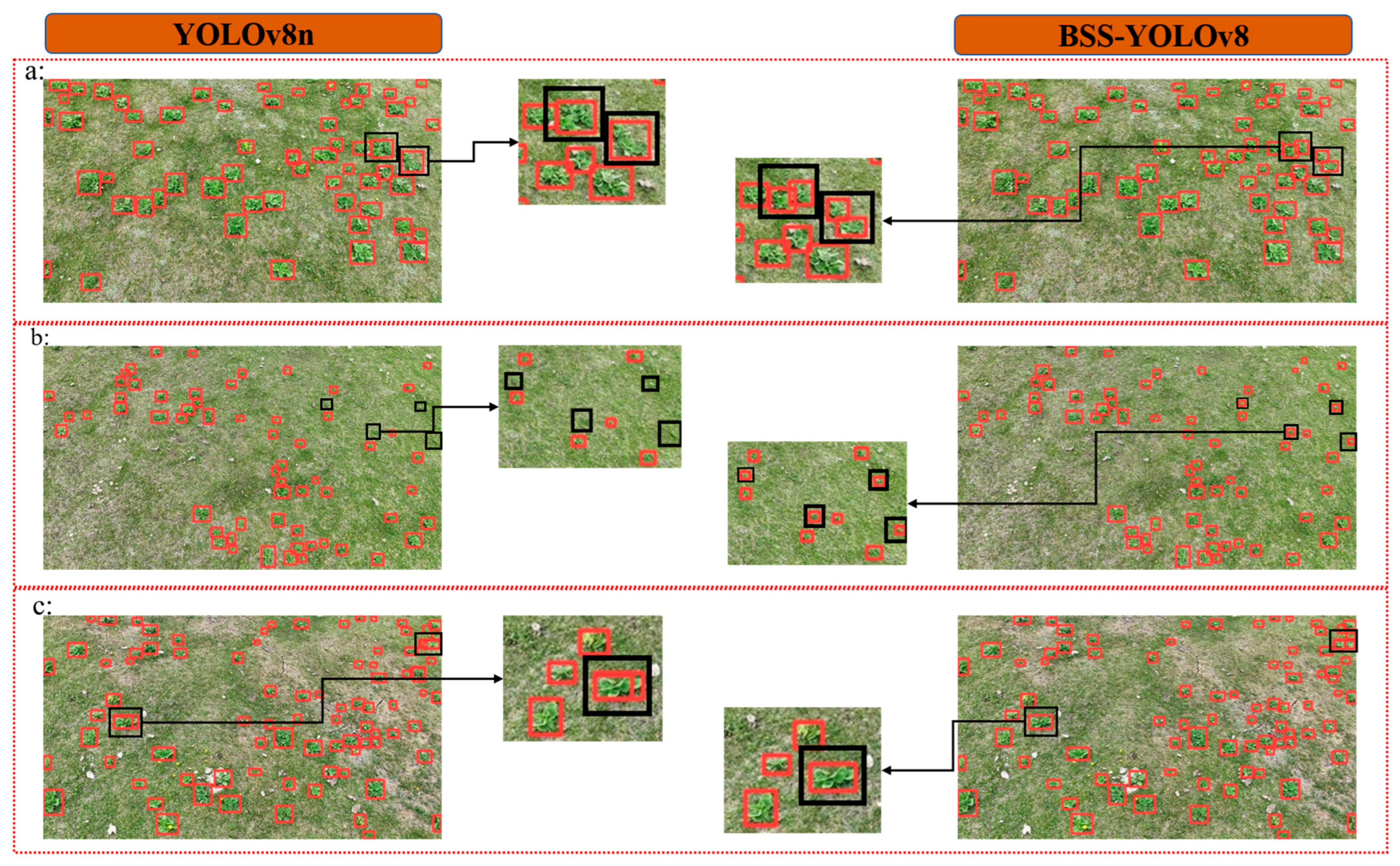

- (1) In the group a images, YOLOv8n identifies several densely distributed P. umbrosa plants as a single plant, whereas BSS-YOLOv8 accurately recognizes each individual plant. This indicates that, compared with YOLOv8n, the BSS-YOLOv8 model proposed in this study is better at detecting densely distributed and mutually occluded P. umbrosa.

- (2) In the group b images, BSS-YOLOv8 detects more P. umbrosa plants, including smaller ones, than YOLOv8n, reducing the likelihood of missing or misidentifying small-target P. umbrosa. This indicates that BSS-YOLOv8 improves the detection of small P. umbrosa seedlings.

- (3) In the group c images, some P. umbrosa plants grow lushly, with distinct stem and leaf branches. YOLOv8n incorrectly identifies different stem and leaf branches of the same plant as multiple P. umbrosa plants, resulting in duplicate detections, whereas BSS-YOLOv8 avoids this issue.
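
To make the notion of duplicate detections concrete, the following is a generic sketch of intersection over union (IoU) and greedy non-maximum suppression (NMS), the usual post-processing step in YOLO-style detectors. This section does not describe the post-processing actually used, so the box format `(x1, y1, x2, y2)`, the 0.5 threshold, and the example boxes are all assumptions chosen for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(detections, iou_threshold=0.5):
    """Greedy NMS over (box, score) pairs; returns kept pairs, best score first."""
    kept = []
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        # Keep a box only if it does not overlap strongly with a better one.
        if all(iou(box, kept_box) <= iou_threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept


# Hypothetical boxes: two near-identical boxes and one that barely overlaps.
detections = [
    ((0, 0, 100, 100), 0.9),
    ((5, 5, 105, 105), 0.8),   # strong overlap with the first box: suppressed
    ((90, 0, 190, 100), 0.7),  # weak overlap with the first box: kept
]
kept = nms(detections)
print(len(kept), "boxes kept")
```

In this sketch, boxes that overlap only slightly survive suppression. That is one way separate boxes on different branches of the same plant could persist as duplicates, so avoiding them depends on the network producing a single box per plant in the first place.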

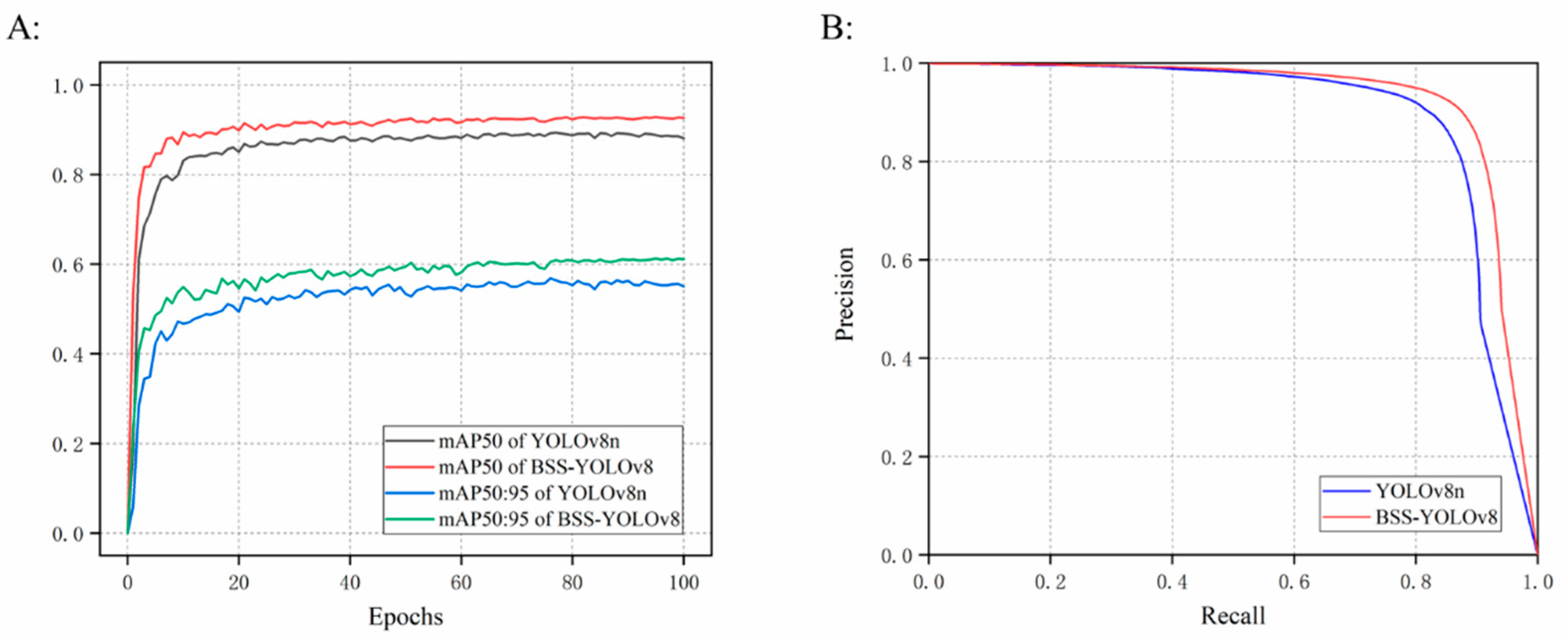

3.2. Results of Ablation Experiments

3.3. Comparison with Other Object Detection Models

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Category | Parameter | Value |
|---|---|---|
| Aircraft | Product type | quadcopter |
| | Product positioning | professional grade |
| | Flight time | 35 min |
| | Operating temperature | −10 to 40 °C |
| Sensor | Hasselblad camera | 4/3-inch CMOS, 20 million pixels |
| | Long-focus camera | 1/2-inch CMOS, 12 million pixels |
| Other parameters | Product weight | 899 g |
| | Control distance | 8000 m |

| Image Acquisition Equipment | Image Resolution (pixels) | Average Proportion of Image Pixels Occupied by Each P. umbrosa |
|---|---|---|
| Smartphone | 1890 × 1261 | 10.15% |
| Canon EOS Kiss X10 | 2400 × 1600 | 42.85% |
| DJI Mavic 3 | 3840 × 2160 | 0.093% |
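
The proportions in the table above can be converted into approximate pixel footprints per plant. The sketch below multiplies each image resolution by the average proportion and reports the side of an equivalent square. It also rescales that side to a 640-pixel-wide network input. That last step is an assumption for illustration only, since this section does not state how images were resized or tiled before training. The labels `smartphone`, `camera`, and `uav` are shorthand for the three devices in the table.

```python
import math

# (label, width, height, average share of image area per plant in percent)
SOURCES = [
    ("smartphone", 1890, 1261, 10.15),
    ("camera", 2400, 1600, 42.85),
    ("uav", 3840, 2160, 0.093),
]

# Assumption: images are resized to this width before detection.
ASSUMED_INPUT_WIDTH = 640


def plant_pixels(width, height, share_percent):
    """Average number of pixels covered by one plant."""
    return width * height * share_percent / 100.0


def equivalent_side(pixels):
    """Side length of a square with the same pixel area."""
    return math.sqrt(pixels)


sides = {}
for label, width, height, share in SOURCES:
    side = equivalent_side(plant_pixels(width, height, share))
    sides[label] = side
    scaled = side * ASSUMED_INPUT_WIDTH / width
    print(f"{label:>10}: about {side:7.1f} px per side, "
          f"about {scaled:6.1f} px after resizing to {ASSUMED_INPUT_WIDTH} px width")
```

Under these assumptions, a plant in the UAV imagery spans well under 100 pixels per side at full resolution and far fewer after downscaling, whereas plants in the handheld imagery span hundreds of pixels. This gap in object scale is the kind of situation a dedicated small target detection layer is meant to address.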

| Configuration | Parameter |
|---|---|
| CPU | Intel(R) Xeon(R) Gold 6330 |
| GPU | RTX 3090 |
| Operating system | Windows 10 |
| PyTorch version | PyTorch 1.9.0 |
| Python version | Python 3.8 |
| CUDA version | CUDA 11.1 |

| Model | Precision (%) | Recall (%) | mAP50 (%) | F1-Score (%) | mAP50:95 (%) |
|---|---|---|---|---|---|
| YOLOv8n | 88.6 | 82.9 | 89.2 | 85.66 | 56.9 |
| +BoTNet | 89.3 | 83.4 | 89.7 | 86.30 | 57.4 |
| +Small target detection layer | 90.2 | 84.7 | 91.3 | 87.36 | 58.9 |
| +GSConv | 89.4 | 85.5 | 91.1 | 87.35 | 59.3 |
| +GSConv+BoTNet | 89.4 | 84.9 | 91.3 | 87.09 | 59.5 |
| +GSConv+Small target detection layer | 90.5 | 83.7 | 92.3 | 86.97 | 60.4 |
| +Small target detection layer+BoTNet | 90.1 | 86.6 | 91.8 | 88.32 | 59.1 |
| BSS-YOLOv8 | 91.1 | 86.7 | 92.6 | 88.85 | 61.3 |
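
The F1-score column can be checked against the precision and recall columns using the standard definition of F1 as the harmonic mean of the two. The sketch below recomputes it for the first and last rows of the table above; the function name `f1_score` is illustrative. Values recomputed from the rounded precision and recall in other rows may differ from the tabulated F1 by a few hundredths, presumably because the table was derived from unrounded values.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %) taken from the ablation table.
ROWS = {
    "YOLOv8n": (88.6, 82.9),
    "BSS-YOLOv8": (91.1, 86.7),
}

for name, (precision, recall) in ROWS.items():
    print(f"{name}: F1 = {f1_score(precision, recall):.2f}%")
```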

| Model | Precision (%) | Recall (%) | mAP50 (%) | F1-Score (%) | mAP50:95 (%) |
|---|---|---|---|---|---|
| YOLOv8 | 88.6 | 82.9 | 89.2 | 85.66 | 56.9 |
| YOLOv3-tiny | 84.1 | 63.1 | 76.1 | 72.10 | 46.6 |
| YOLOv5 | 88.7 | 82.5 | 89.3 | 85.49 | 55.8 |
| YOLOv6 | 88.2 | 82.2 | 88.6 | 85.09 | 56.4 |
| Faster R-CNN | 82.4 | 71.6 | 81.2 | 85.20 | 50.3 |
| SSD | 78.5 | 69.2 | 74.1 | 73.56 | 45.2 |
| BSS-YOLOv8 | 91.1 | 86.7 | 92.6 | 88.85 | 61.3 |
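
The improvement of BSS-YOLOv8 over the YOLOv8 baseline can be read off the table above as differences in percentage points. The short sketch below computes them from the tabulated values; the names `BASELINE`, `IMPROVED`, and `gains` are illustrative only.

```python
METRICS = ("precision", "recall", "mAP50", "F1", "mAP50:95")

# Rows copied from the comparison table (all values in percent).
BASELINE = (88.6, 82.9, 89.2, 85.66, 56.9)   # YOLOv8
IMPROVED = (91.1, 86.7, 92.6, 88.85, 61.3)   # BSS-YOLOv8


def gains(baseline, improved):
    """Per-metric difference in percentage points, rounded to two decimals."""
    return {
        metric: round(new - old, 2)
        for metric, old, new in zip(METRICS, baseline, improved)
    }


result = gains(BASELINE, IMPROVED)
for metric, delta in result.items():
    print(f"{metric:>9}: +{delta} percentage points")
```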

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guo, B.; Ling, S.; Tan, H.; Wang, S.; Wu, C.; Yang, D. Detection of the Grassland Weed Phlomoides umbrosa Using Multi-Source Imagery and an Improved YOLOv8 Network. Agronomy 2023, 13, 3001. https://doi.org/10.3390/agronomy13123001
