Blueberry Ripeness Detection Model Based on Enhanced Detail Feature and Content-Aware Reassembly

Abstract

1. Introduction

- A blueberry dataset covering five maturity levels is constructed. The original dataset is expanded with data augmentation techniques, and the resulting dataset is named “Blueberry—Five Datasets”.

- An Enhanced Detail Feature Module (EDFM) is designed to strengthen detail feature extraction. It attends to both the spatial and channel dimensions to improve the extraction of blueberry detail features, and the experiments show that the module improves the detection performance of the network model.

- The MP module is redesigned with the space-to-depth operation to obtain a new module, MP-S. MP-S avoids the loss of fine-grained information caused by strided convolution and can learn more of the characteristic information of blueberries.

- By integrating EDFM, MP-S, RFB (Receptive Field Block), and CARAFE (Content-Aware ReAssembly of FEatures), a new blueberry ripeness detection model based on EDFM and content-aware reassembly is proposed, which provides a basis and a method for realizing automatic picking technology in the future.
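The space-to-depth rearrangement mentioned for MP-S can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `space_to_depth`, the block size of 2, the nested-list layout (height, width, channels), and the channel ordering are all assumptions chosen for illustration. The point it shows is that halving the spatial resolution this way moves every input value into the channel dimension instead of discarding it.

```python
def space_to_depth(x, block=2):
    """Rearrange an H x W x C nested list into
    (H/block) x (W/block) x (C*block*block) without dropping any value.

    Hypothetical helper for illustration; a real model would use tensor ops.
    """
    h, w = len(x), len(x[0])
    if h % block or w % block:
        raise ValueError("height and width must be divisible by block")
    out = []
    for i in range(0, h, block):
        row = []
        for j in range(0, w, block):
            cell = []
            # Stack the block x block neighbourhood along the channel axis
            # (row-major order inside the block is an assumption).
            for di in range(block):
                for dj in range(block):
                    cell.extend(x[i + di][j + dj])
            row.append(cell)
        out.append(row)
    return out


# Toy 4 x 4 feature map with one channel, values 0..15.
fmap = [[[r * 4 + c] for c in range(4)] for r in range(4)]
packed = space_to_depth(fmap)
print(len(packed), len(packed[0]), len(packed[0][0]))  # 2 2 4
print(packed[0][0])  # [0, 1, 4, 5]
```

In contrast, a stride-2 convolution or pooling step samples or aggregates each 2 × 2 neighbourhood, so some fine-grained detail cannot be recovered by later layers.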

2. Related Work

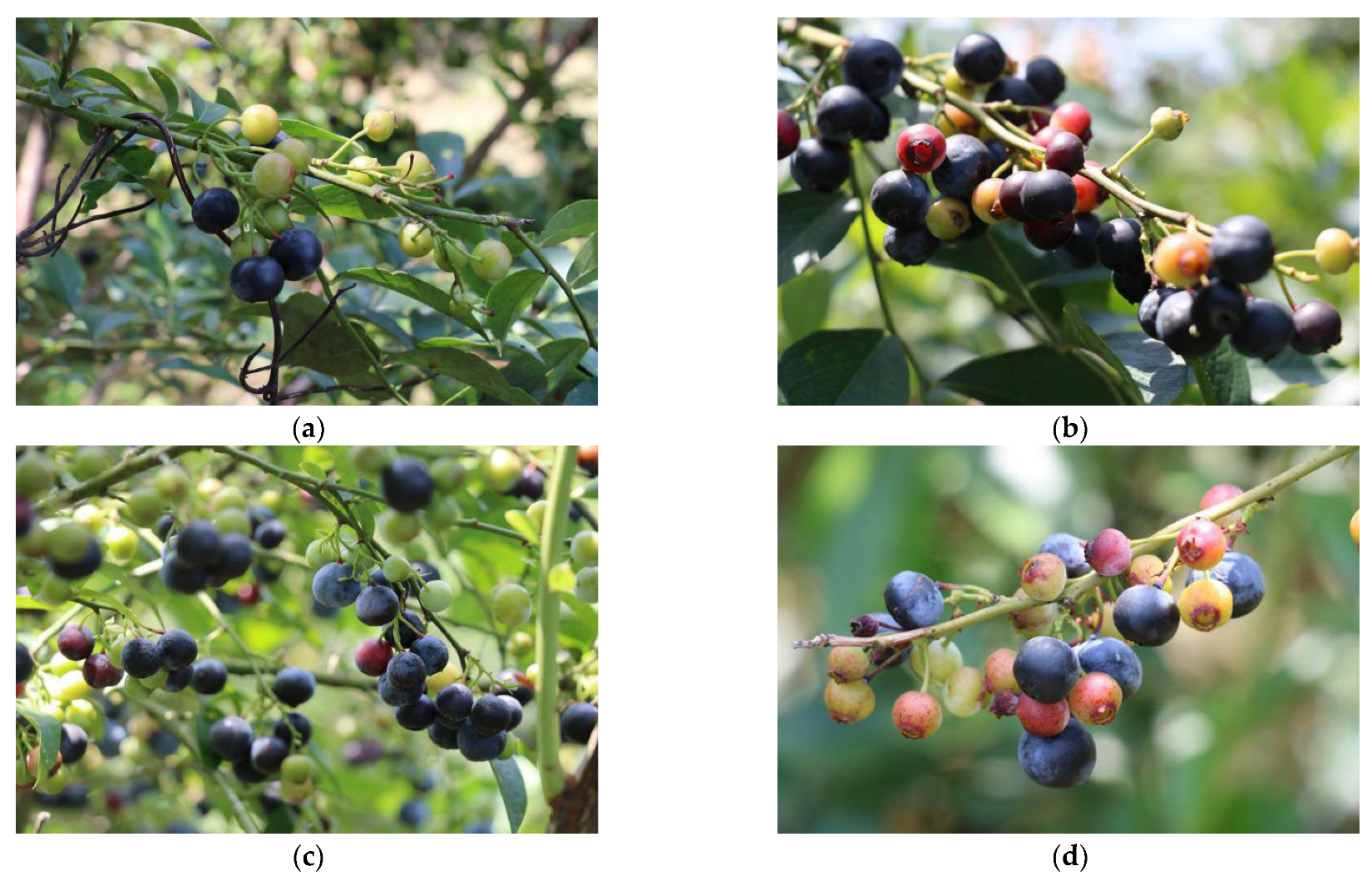

3. Blueberry Dataset Construction

3.1. Blueberry Image Collection

3.2. Data Preprocessing

3.3. Data Augmentation

4. The Proposed Method

4.1. YOLOv7 Original Network Structure

4.2. Our Proposed Network

4.2.1. Enhanced Detail Feature Module

4.2.2. Receptive Field Block

4.2.3. Design of MP-S Module

4.2.4. Content-Aware Reassembly of Features Module

5. Results and Analysis

5.1. Model Evaluation Metrics

5.2. Lab Environment

5.3. Experimental Results and Analysis

5.3.1. Model Selection

5.3.2. Comparison of Model Performance before and after Data Augmentation

5.3.3. The Impact of EDFM Module on Network Performance

5.3.4. Comparison of Performance of Different Enhanced Receptive Field Modules

5.3.5. Replacement Position of MP-S

5.3.6. Ablation Experiment

5.3.7. Comparison with Other Methods

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dataset | Percentage | Blueberry Ripeness | Number of Labels | Number of Images |
|---|---|---|---|---|
| train set | 60% | level 1 | 41,210 | 5120 |
| | | level 2 | 4440 | 2320 |
| | | level 3 | 4000 | 2490 |
| | | level 4 | 54,320 | 6000 |
| | | level 5 | 2090 | 970 |
| val set | 20% | level 1 | 13,730 | 1770 |
| | | level 2 | 1360 | 800 |
| | | level 3 | 1810 | 990 |
| | | level 4 | 17,240 | 2000 |
| | | level 5 | 630 | 290 |
| test set | 20% | level 1 | 15,110 | 1780 |
| | | level 2 | 1320 | 780 |
| | | level 3 | 1720 | 970 |
| | | level 4 | 19,650 | 2000 |
| | | level 5 | 620 | 350 |

| | Actual: 1 | Actual: 0 |
|---|---|---|
| Predicted: 1 | TP | FP |
| Predicted: 0 | FN | TN |
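The P and R columns reported in the result tables follow from these confusion-matrix counts through the standard definitions of precision, TP / (TP + FP), and recall, TP / (TP + FN). The sketch below applies those definitions; the function name and the example counts are hypothetical and are not taken from the paper's experiments.

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) from confusion-matrix counts.

    precision = TP / (TP + FP), recall = TP / (TP + FN).
    A zero denominator yields 0.0 by convention in this sketch.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical counts for one ripeness level (not from the paper).
p, r = precision_recall(tp=80, fp=20, fn=10)
print(f"P = {p:.3f}, R = {r:.3f}")  # P = 0.800, R = 0.889
```

TN does not enter either formula, which is why detection benchmarks can report P and R without counting true negatives.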

| Name | Value |
|---|---|
| Learning Rate | 0.01 |
| Image Size | 640 × 640 |
| Batch Size | 4 |

| Model | P (%) | R (%) | mAP (%) | Parameters | GFLOPS |
|---|---|---|---|---|---|
| YOLOv7-Tiny | 70.8 | 71.9 | 75.4 | 6,018,420 | 13.1 |
| YOLOv7 | 71.7 | 75.1 | 77.5 | 36,503,348 | 103.4 |
| YOLOv7x | 71.7 | 78.9 | 80.0 | 70,809,396 | 188.3 |

| Datasets | Train | Val | Test |
|---|---|---|---|
| Original | 600 | 200 | 200 |
| Data Augmentation | 6000 | 2000 | 2000 |
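The image counts above correspond to a 60%/20%/20% split of 1000 original and 10,000 augmented images. A minimal sketch of such a split follows. The random shuffle, the fixed seed, and the function name `split_indices` are assumptions made for illustration; the table does not state how images were assigned to each subset.

```python
import random


def split_indices(n_images, ratios=(0.6, 0.2, 0.2), seed=0):
    """Shuffle image indices and cut them into train/val/test lists.

    Hypothetical helper: the shuffle and seed are illustrative assumptions.
    """
    indices = list(range(n_images))
    random.Random(seed).shuffle(indices)
    n_train = round(n_images * ratios[0])
    n_val = round(n_images * ratios[1])
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


for total in (1000, 10000):
    train, val, test = split_indices(total)
    print(total, len(train), len(val), len(test))
# 1000 600 200 200
# 10000 6000 2000 2000
```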

| Datasets | Level 1 AP (%) | Level 2 AP (%) | Level 3 AP (%) | Level 4 AP (%) | Level 5 AP (%) | mAP (%) |
|---|---|---|---|---|---|---|
| Original | 84.7 | 43.0 | 62.2 | 84.1 | 34.5 | 61.7 |
| Data Augmentation | 88.5 | 72.3 | 81.2 | 89.9 | 55.9 | 77.5 |
| Improvement | 3.8 | 29.3 | 19.0 | 5.8 | 21.4 | 15.8 |
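The mAP column is the mean of the five per-level AP values, and the Improvement row is the per-column difference between the two dataset rows. The short check below recomputes both from the table values; the variable names are illustrative. The recomputed mean for the augmented dataset is about 77.56, which agrees with the reported 77.5 up to rounding of the published per-level figures.

```python
# Per-level AP (%) copied from the table above, levels 1 to 5.
ap_original = [84.7, 43.0, 62.2, 84.1, 34.5]
ap_augmented = [88.5, 72.3, 81.2, 89.9, 55.9]


def mean_ap(per_level_ap):
    """mAP as the unweighted mean of per-class AP values."""
    return sum(per_level_ap) / len(per_level_ap)


map_original = mean_ap(ap_original)
map_augmented = mean_ap(ap_augmented)

# Per-level gain from augmentation, rounded to one decimal like the table.
improvement = [round(a - o, 1) for o, a in zip(ap_original, ap_augmented)]

print(round(map_original, 2), round(map_augmented, 2))
print(improvement)  # [3.8, 29.3, 19.0, 5.8, 21.4]
```

The largest gains fall on levels 2, 3, and 5, which are also the levels with the fewest labels in the dataset table.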

| Model | P (%) | R (%) | mAP (%) |
|---|---|---|---|
| YOLOv7 | 71.7 | 75.1 | 77.5 |
| YOLOv7 + EDFM | 72.6 | 75.2 | 78.5 |

| Module | P (%) | R (%) | mAP (%) | Parameters | Time (ms) |
|---|---|---|---|---|---|
| SPPCSPC | 71.7 | 75.1 | 77.5 | 36,503,348 | 11.2 |
| SPP | 72.7 | 74.2 | 77.8 | 30,471,476 | 11.0 |
| SPPF | 73.8 | 73.7 | 78.6 | 30,471,476 | 10.9 |
| SimSPPF | 72.2 | 76.4 | 78.3 | 30,472,500 | 10.9 |
| ASPP | 71.5 | 75.5 | 78.3 | 45,415,732 | 12.9 |
| RFB | 73.9 | 75.1 | 78.8 | 33,237,428 | 11.0 |

| MP-S Replacement Position | mAP (%) |
|---|---|
| Original | 77.5 |
| Backbone | 78.1 |
| Neck | 78.5 |
| Backbone + Neck | 78.2 |

| No. | RFB | EDFM | MP-S | CARAFE | mAP (%) | Parameters |
|---|---|---|---|---|---|---|
| 1 | | | | | 77.5 | 36,503,348 |
| 2 | √ | | | | 78.8 | 33,237,428 |
| 3 | √ | √ | | | 79.6 | 70,052,692 |
| 4 | √ | √ | √ | | 80.4 | 69,643,092 |
| 5 | √ | √ | √ | √ | 80.7 | 70,514,428 |
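To read the ablation results as incremental steps, the sketch below recomputes the mAP gain from each row to the next and the overall parameter growth, using only the row numbers, mAP values, and parameter counts from the table. The variable names are illustrative.

```python
# (row number, mAP %, parameters) copied from the ablation table above.
rows = [
    (1, 77.5, 36_503_348),
    (2, 78.8, 33_237_428),
    (3, 79.6, 70_052_692),
    (4, 80.4, 69_643_092),
    (5, 80.7, 70_514_428),
]

# mAP gain of each row over the previous one, in percentage points.
step_gains = [
    (cur[0], round(cur[1] - prev[1], 1))
    for prev, cur in zip(rows, rows[1:])
]

total_gain = round(rows[-1][1] - rows[0][1], 1)
param_ratio = rows[-1][2] / rows[0][2]

print(step_gains)   # [(2, 1.3), (3, 0.8), (4, 0.8), (5, 0.3)]
print(total_gain)   # 3.2
print(f"{param_ratio:.2f}x parameters")  # 1.93x parameters
```

The full configuration gains 3.2 percentage points of mAP over the baseline while roughly doubling the parameter count, with most of the parameter increase appearing between rows 2 and 3.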

| Model | Level 1 AP (%) | Level 2 AP (%) | Level 3 AP (%) | Level 4 AP (%) | Level 5 AP (%) | mAP (%) |
|---|---|---|---|---|---|---|
| YOLOv5 | 83.2 | 68.0 | 76.2 | 83.5 | 39.2 | 70.0 |
| YOLOX | 83.0 | 67.2 | 75.2 | 85.1 | 40.4 | 70.2 |
| EfficientDet | 79.0 | 65.0 | 74.7 | 78.7 | 39.7 | 67.4 |
| Faster R-CNN | 53.8 | 55.9 | 60.4 | 66.8 | 39.6 | 55.3 |
| YOLOv7-GhostNet | 84.7 | 71.7 | 81.3 | 87.9 | 50.3 | 75.2 |
| YOLOv7-MobileNetV3 | 85.3 | 70.3 | 80.7 | 87.8 | 54.5 | 75.7 |
| YOLOv7 | 88.5 | 72.3 | 81.2 | 89.9 | 55.9 | 77.5 |
| Ours | 89.1 | 74.4 | 82.3 | 90.6 | 67.3 | 80.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, W.; Ma, X.; An, H. Blueberry Ripeness Detection Model Based on Enhanced Detail Feature and Content-Aware Reassembly. Agronomy 2023, 13, 1613. https://doi.org/10.3390/agronomy13061613
