YOLO-C: An Efficient and Robust Detection Algorithm for Mature Long Staple Cotton Targets with High-Resolution RGB Images

Abstract

1. Introduction

2. Materials and Methods

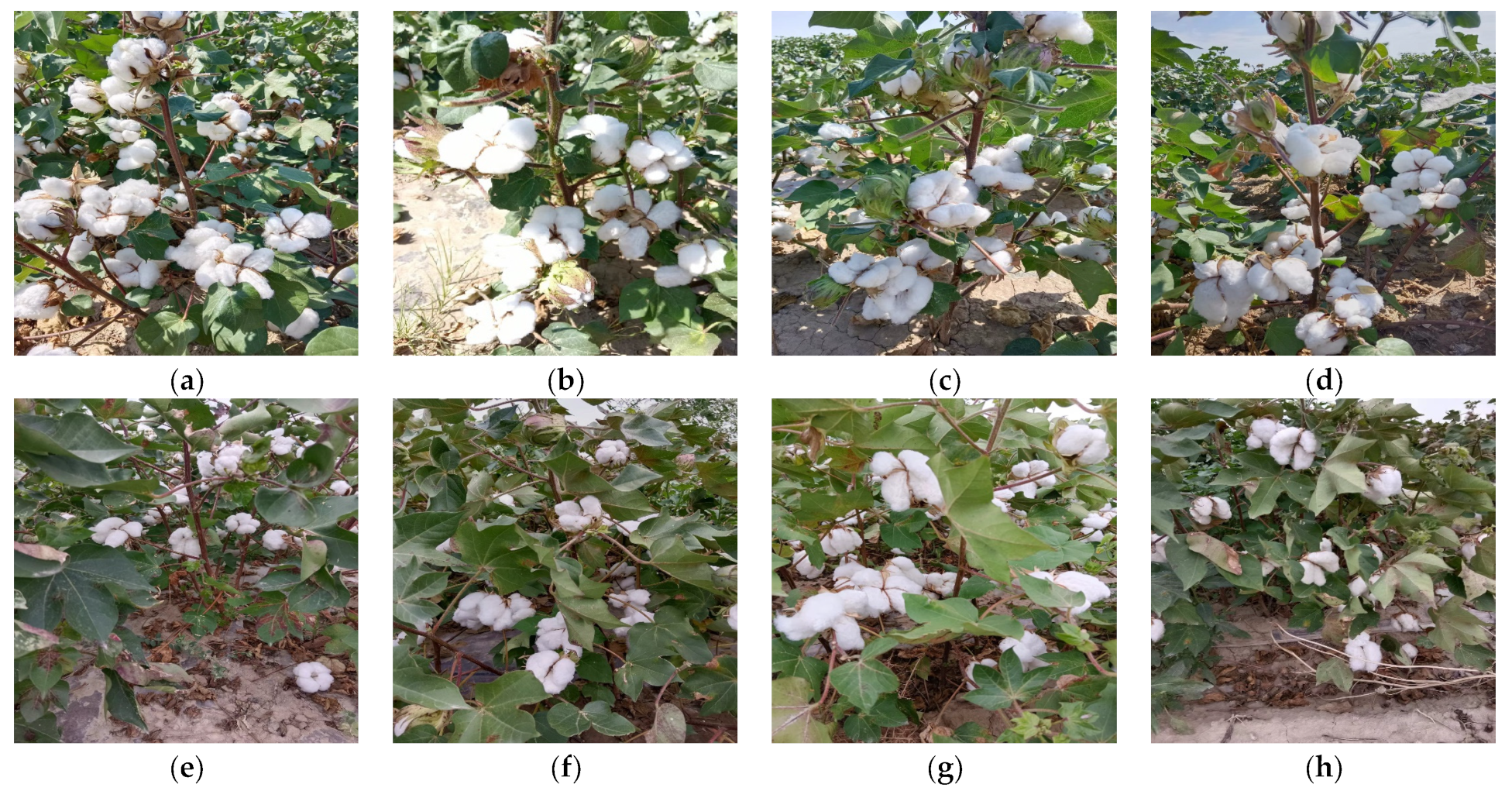

2.1. Data Acquisition

2.2. Data Preprocessing and Enhancement

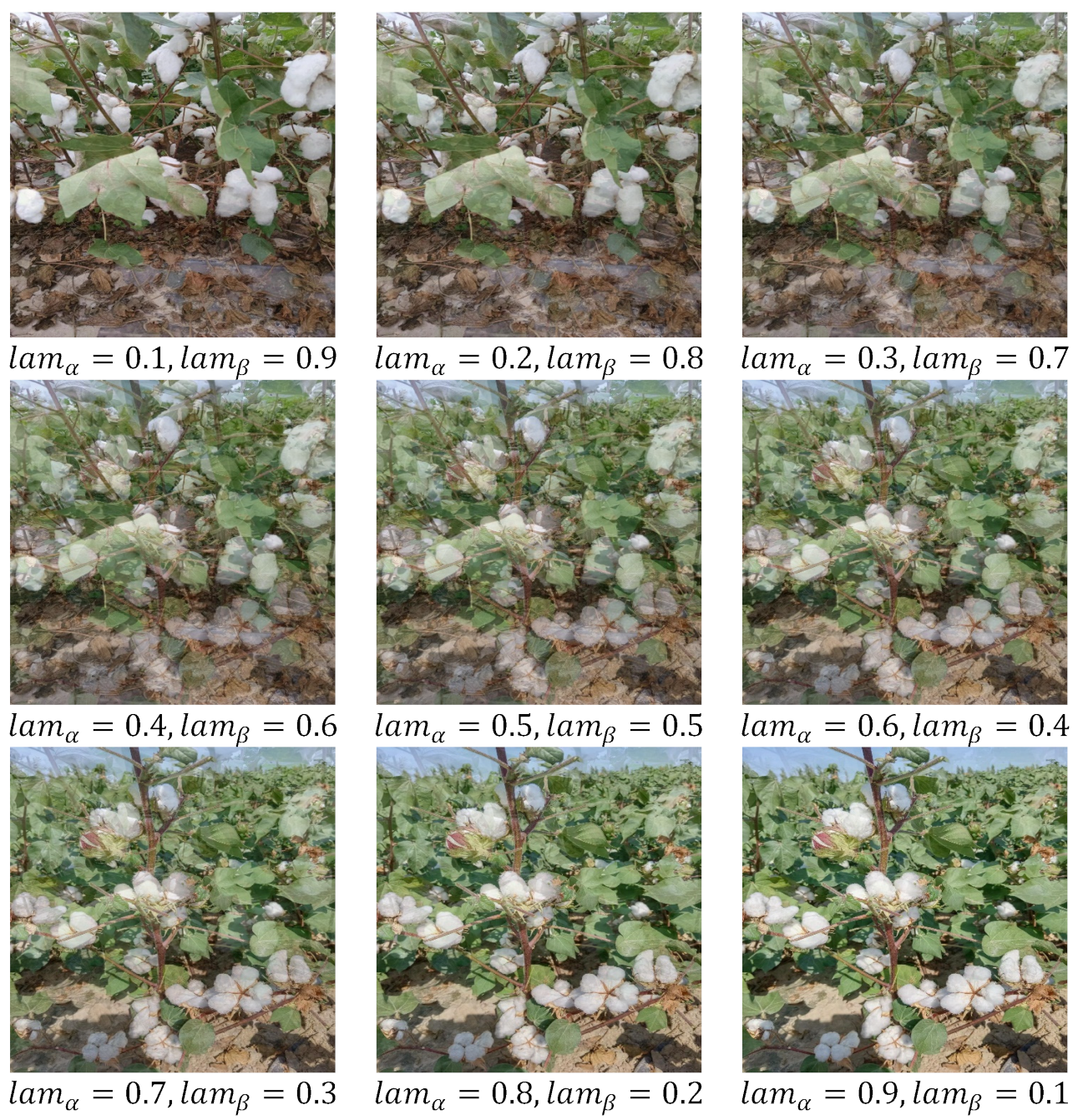

2.2.1. Mixup Data Enhancement

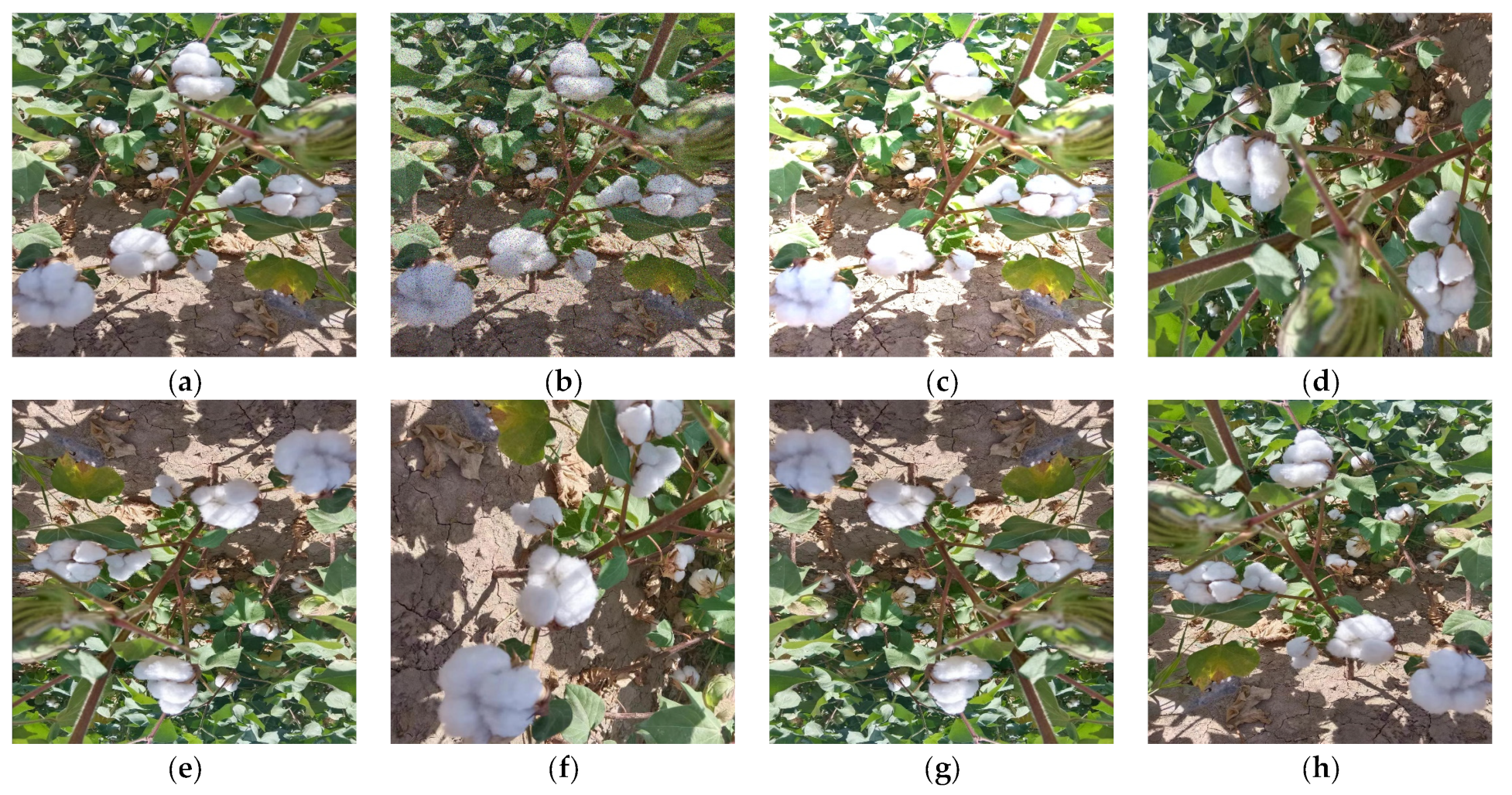

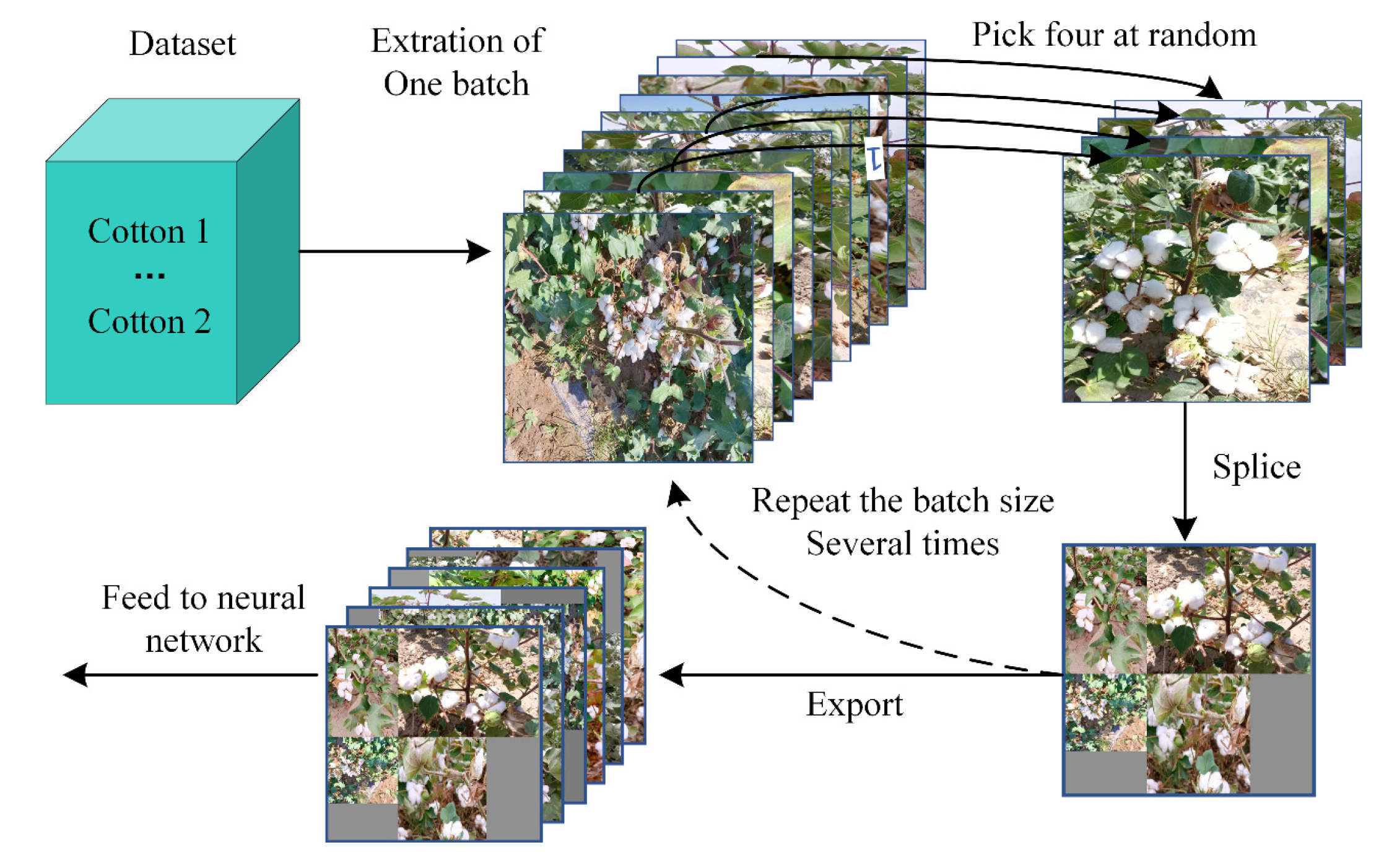
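As a rough illustration of the Mixup idea named in this subsection, the sketch below blends two images pixel by pixel with a weight `lam` drawn from a Beta distribution and keeps the bounding boxes of both images, as is common when Mixup is used for detection. It follows the general formulation x = lam * x_a + (1 - lam) * x_b from Zhang et al. (2017). The function name `mixup`, the toy image representation (nested lists of grayscale values), and the `alpha` value are assumptions made for illustration and are not taken from the authors' pipeline.

```python
import random


def mixup(img_a, img_b, boxes_a, boxes_b, alpha=1.5, rng=None):
    """Blend two equally sized grayscale images and merge their box lists.

    img_a, img_b: nested lists (rows of pixel values) with the same shape.
    boxes_a, boxes_b: lists of (x1, y1, x2, y2) boxes for each image.
    alpha: shape parameter of the Beta(alpha, alpha) distribution (assumed value).
    """
    rng = rng or random.Random()
    lam = rng.betavariate(alpha, alpha)  # mixing weight in (0, 1)
    mixed = [
        [lam * pa + (1.0 - lam) * pb for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(img_a, img_b)
    ]
    # For detection, the targets of both source images are kept.
    return mixed, boxes_a + boxes_b, lam


# Toy usage: an all-black and an all-white 2x2 image.
img_a = [[0.0, 0.0], [0.0, 0.0]]
img_b = [[1.0, 1.0], [1.0, 1.0]]
mixed, boxes, lam = mixup(
    img_a, img_b, [(0, 0, 1, 1)], [(1, 1, 2, 2)], rng=random.Random(0)
)
```

With a black and a white source image, every mixed pixel equals `1 - lam`, which makes the blending weight easy to inspect.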

2.2.2. Mosaic Data Enhancement

2.3. Experimental Environment

2.4. Evaluation Indicators

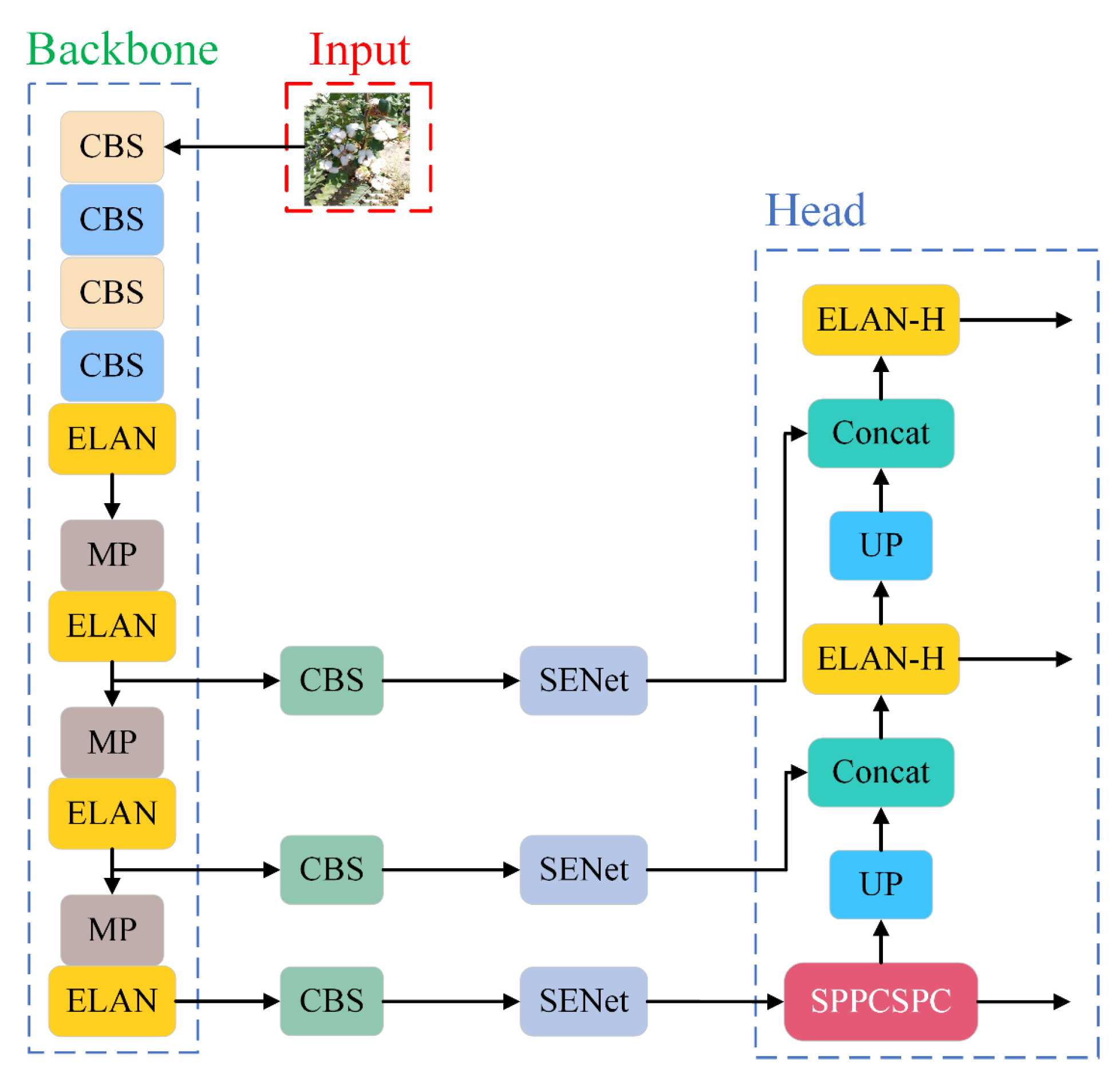
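The P, R, and F1 columns reported in the results tables follow the standard definitions of precision, recall, and their harmonic mean. The sketch below computes them from true-positive, false-positive, and false-negative counts; the function names and the example counts are hypothetical and serve only to make the definitions concrete.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F1) from detection counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall, f1_score(precision, recall)


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    total = precision + recall
    return 2.0 * precision * recall / total if total else 0.0


# Hypothetical counts: 90 correct detections, 10 false alarms, 30 misses.
p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)

# F1 recomputed from the P and R reported for YOLO-C (95.75%, 92.65%).
f1_yolo_c = f1_score(0.9575, 0.9265)
```

Applied to the precision and recall reported for YOLO-C, `f1_score` gives a value that rounds to 0.94, the F1 listed in the ablation table.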

2.5. Improved Algorithm Construction

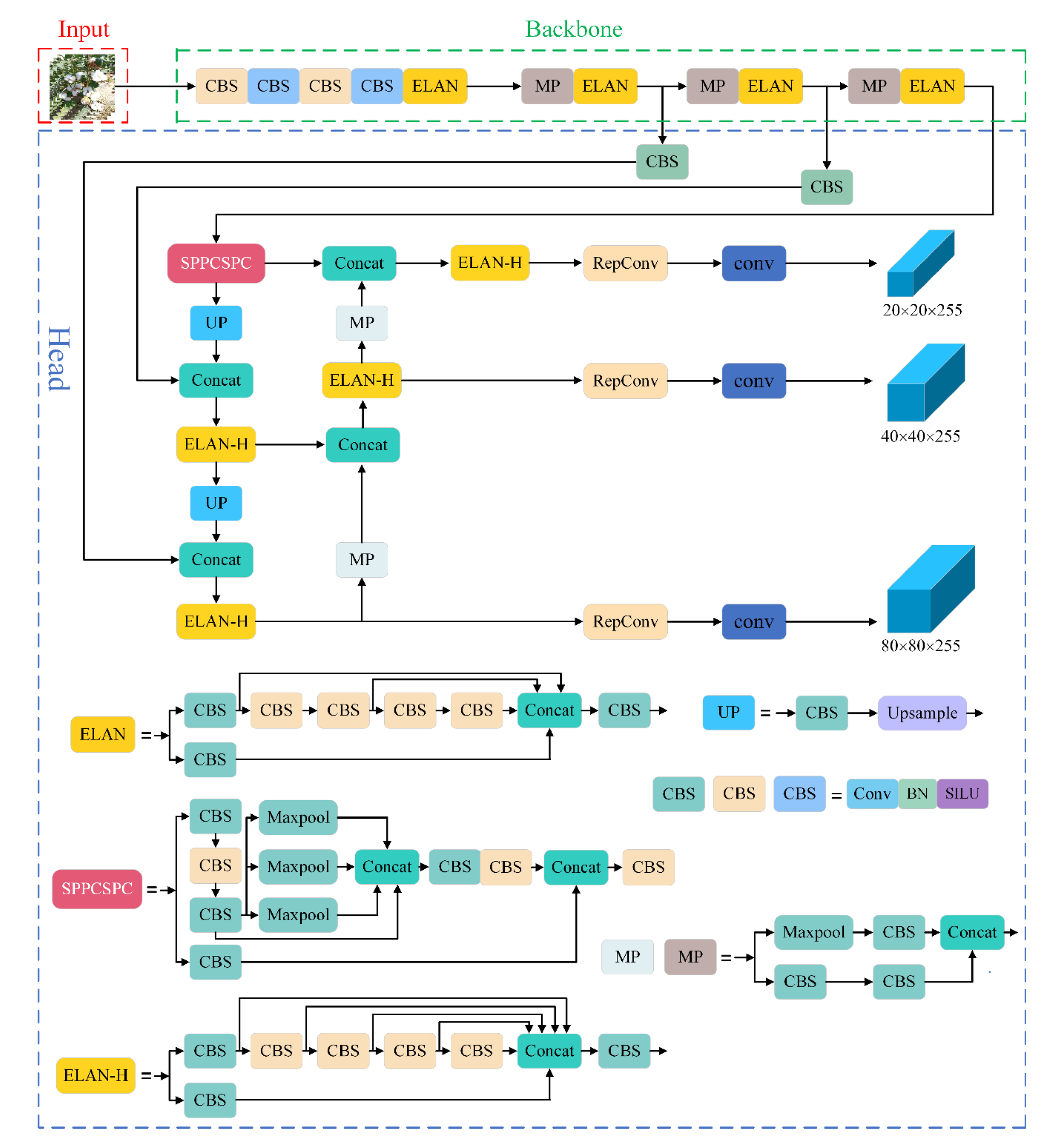

2.5.1. YOLOv7 Model Structure

2.5.2. Model Improvements

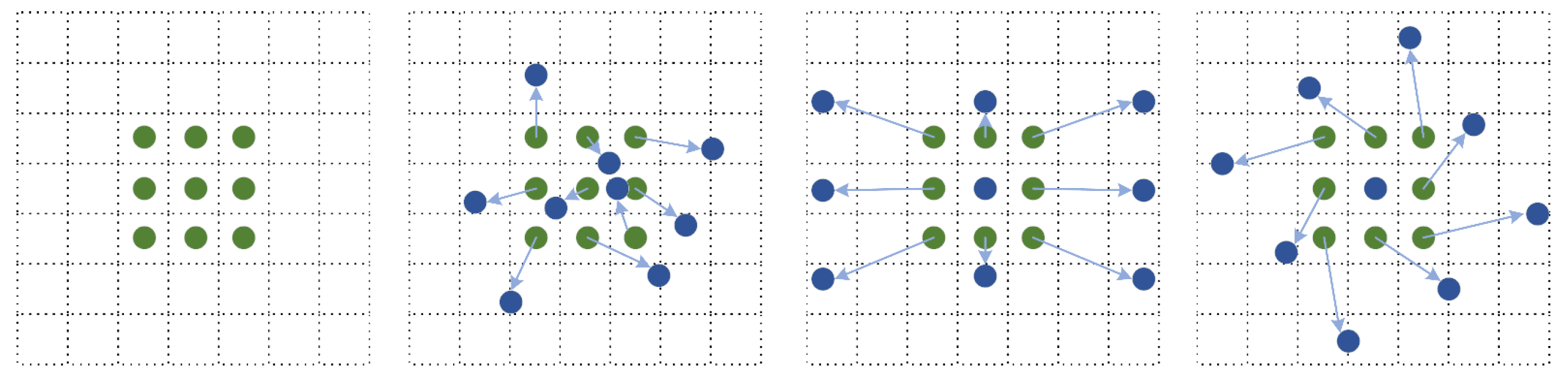

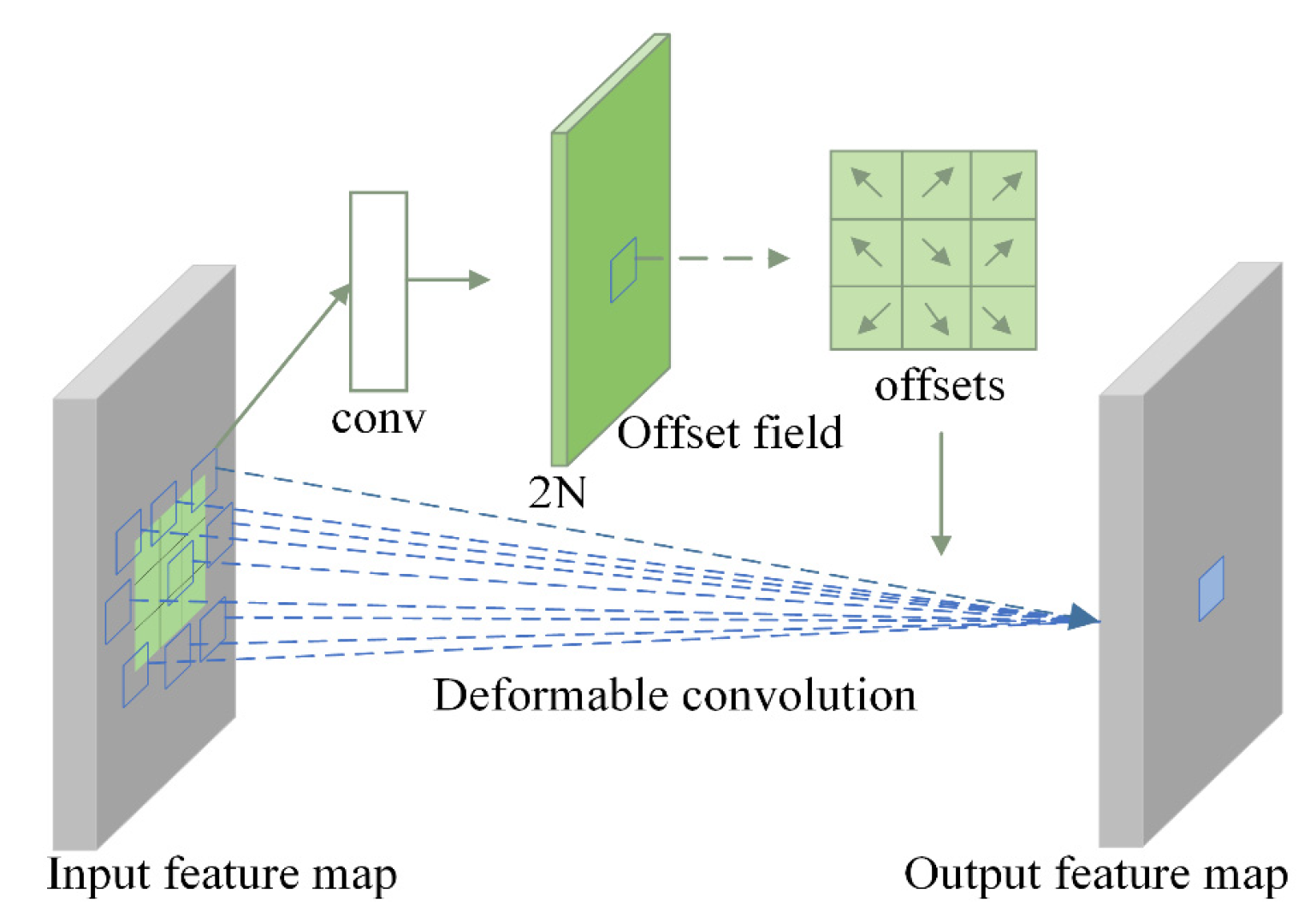

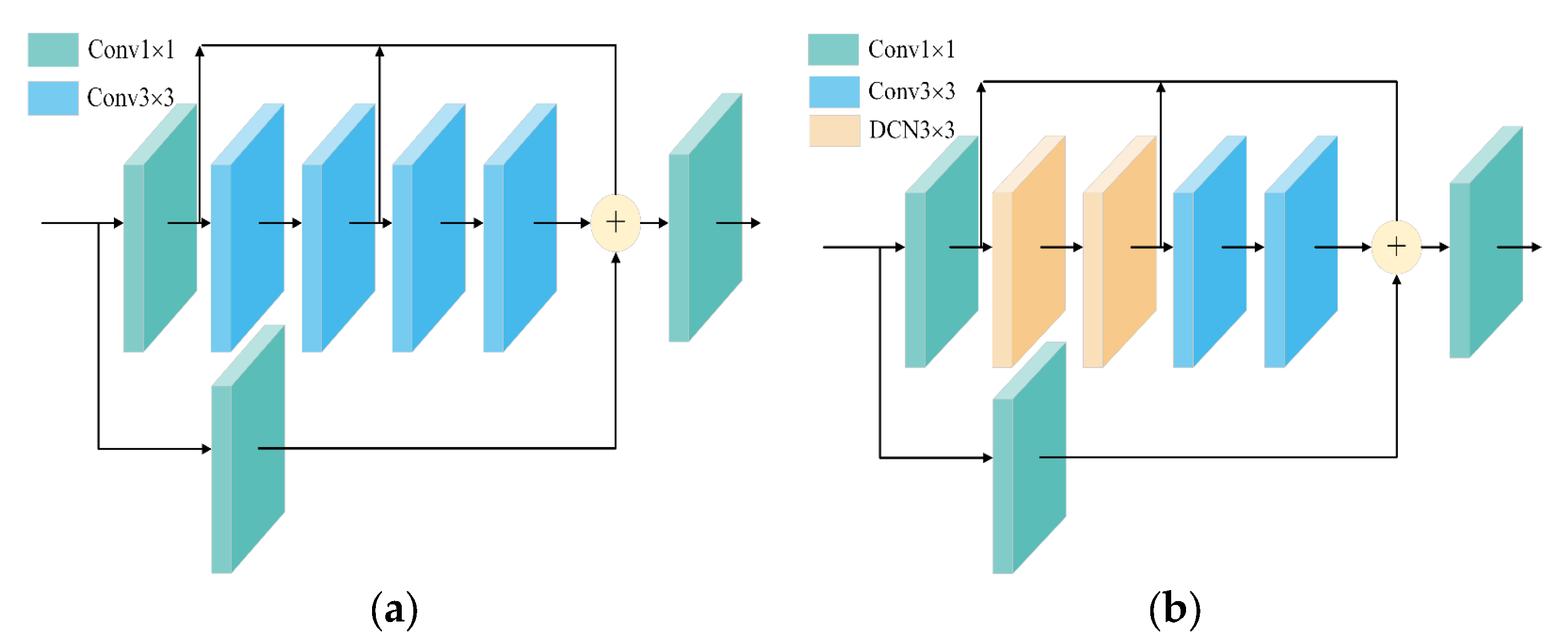

- (1)

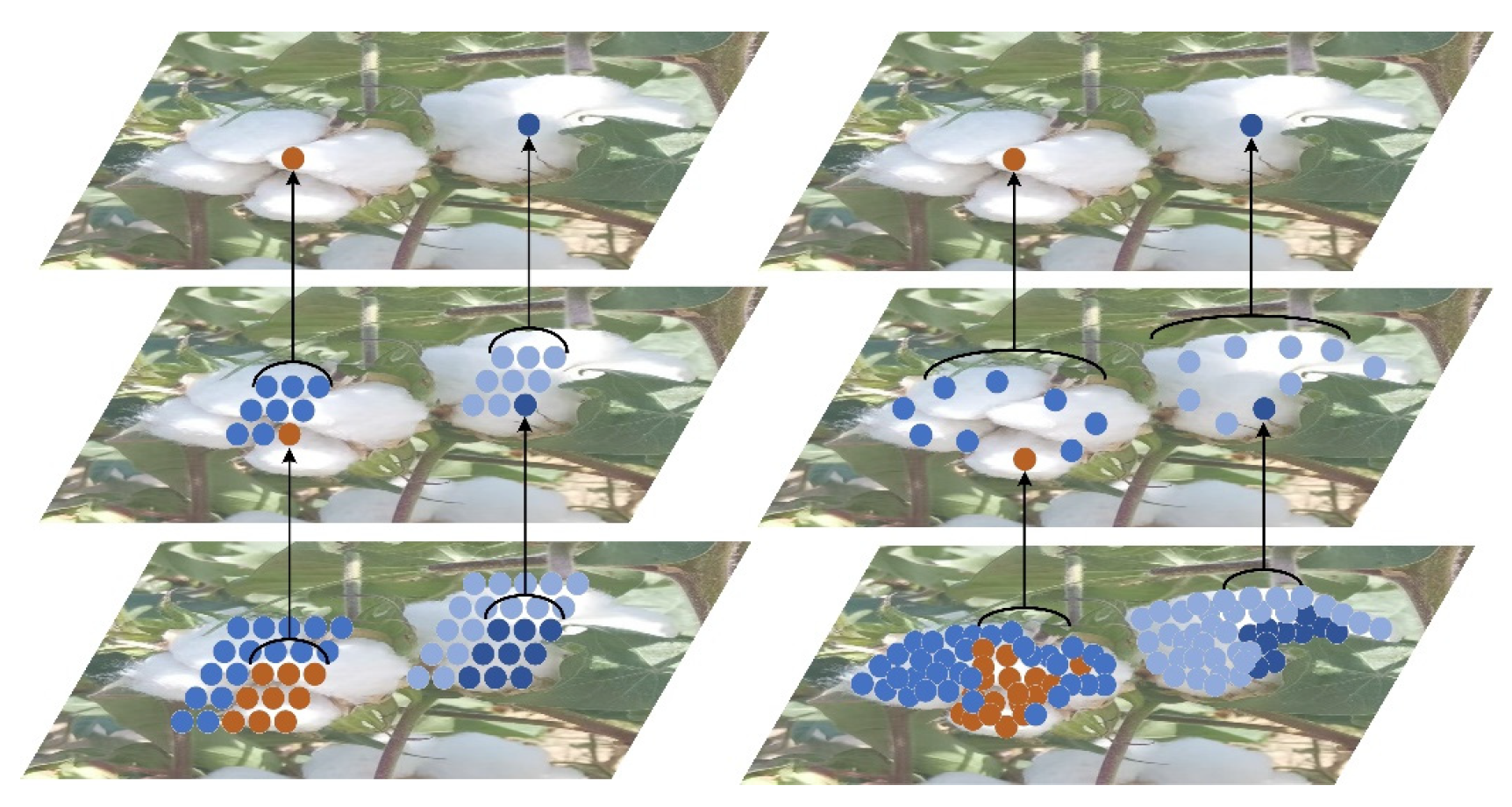

- Introducing deformable convolution

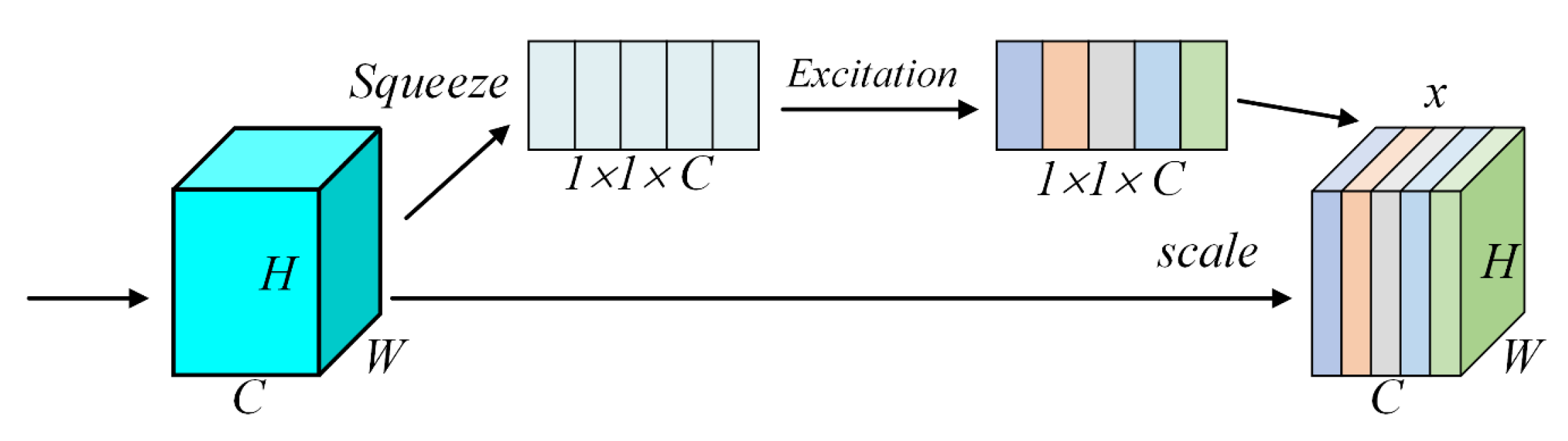

- (2) Introducing the SENet attention mechanism into YOLOv7

- (3) WIoU loss
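IoU-based regression losses, the family to which WIoU belongs, start from the overlap ratio between a predicted box and a ground-truth box. The sketch below computes plain IoU and the basic loss 1 - IoU for boxes given as (x1, y1, x2, y2). It deliberately omits the dynamic focusing weights that distinguish WIoU, and the function names are hypothetical rather than taken from the authors' code.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def iou_loss(pred, target):
    """Basic IoU loss: 0 for a perfect match, 1 for no overlap."""
    return 1.0 - iou(pred, target)


# Two 2x2 boxes offset by one unit share a 1x1 overlap, so IoU = 1/7.
example_iou = iou((0, 0, 2, 2), (1, 1, 3, 3))
example_loss = iou_loss((0, 0, 2, 2), (1, 1, 3, 3))
```

Losses such as WIoU reweight or extend this base term; the plain version is shown only to fix the notation.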

3. Results

3.1. Ablation Experiments

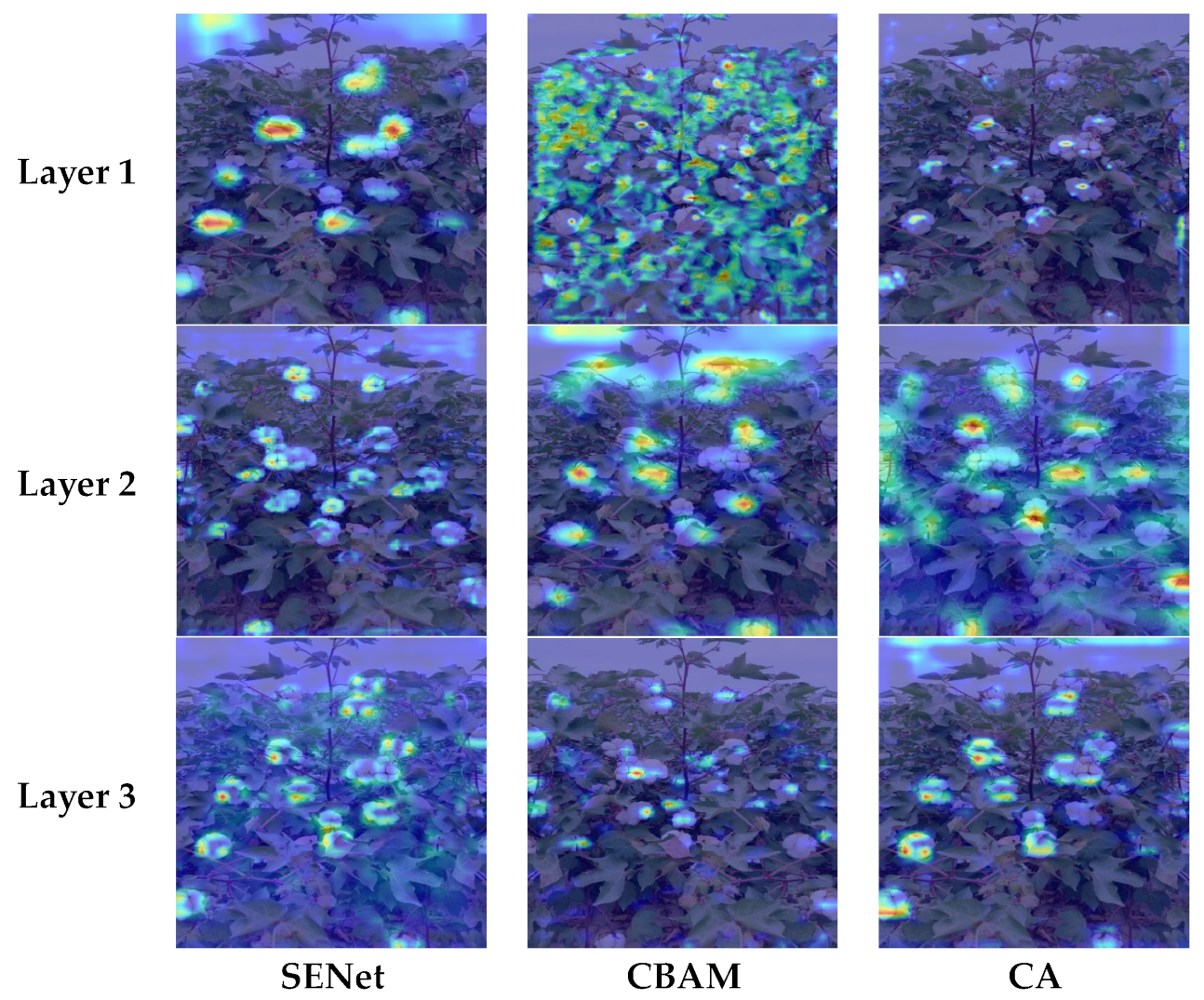

3.2. Comparison of Attention Mechanisms

3.3. Impact of Improved Methods on the Model

3.4. Comparison of the Improved Model with Other Models

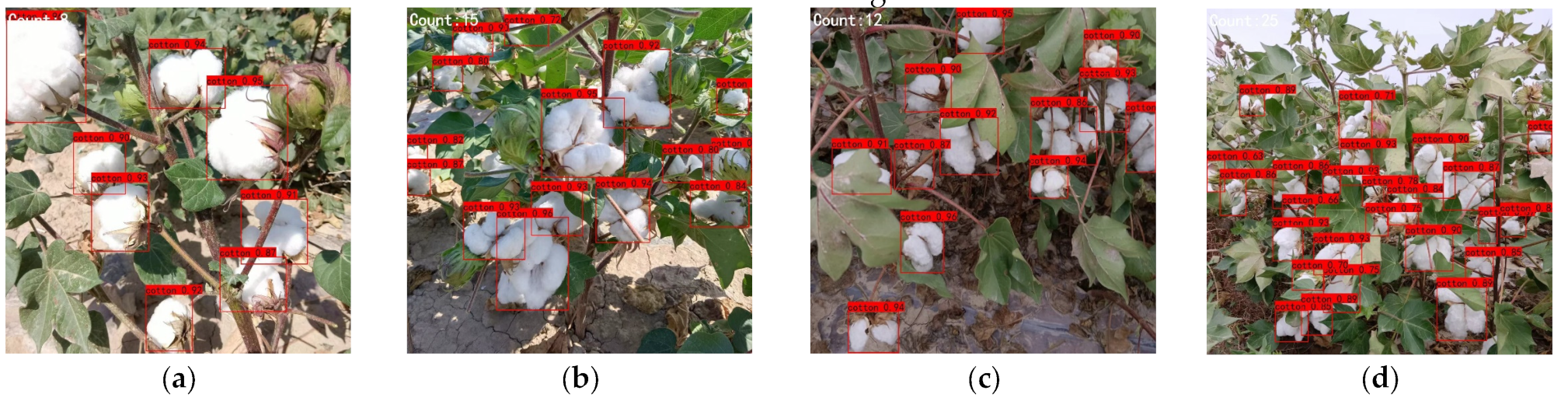

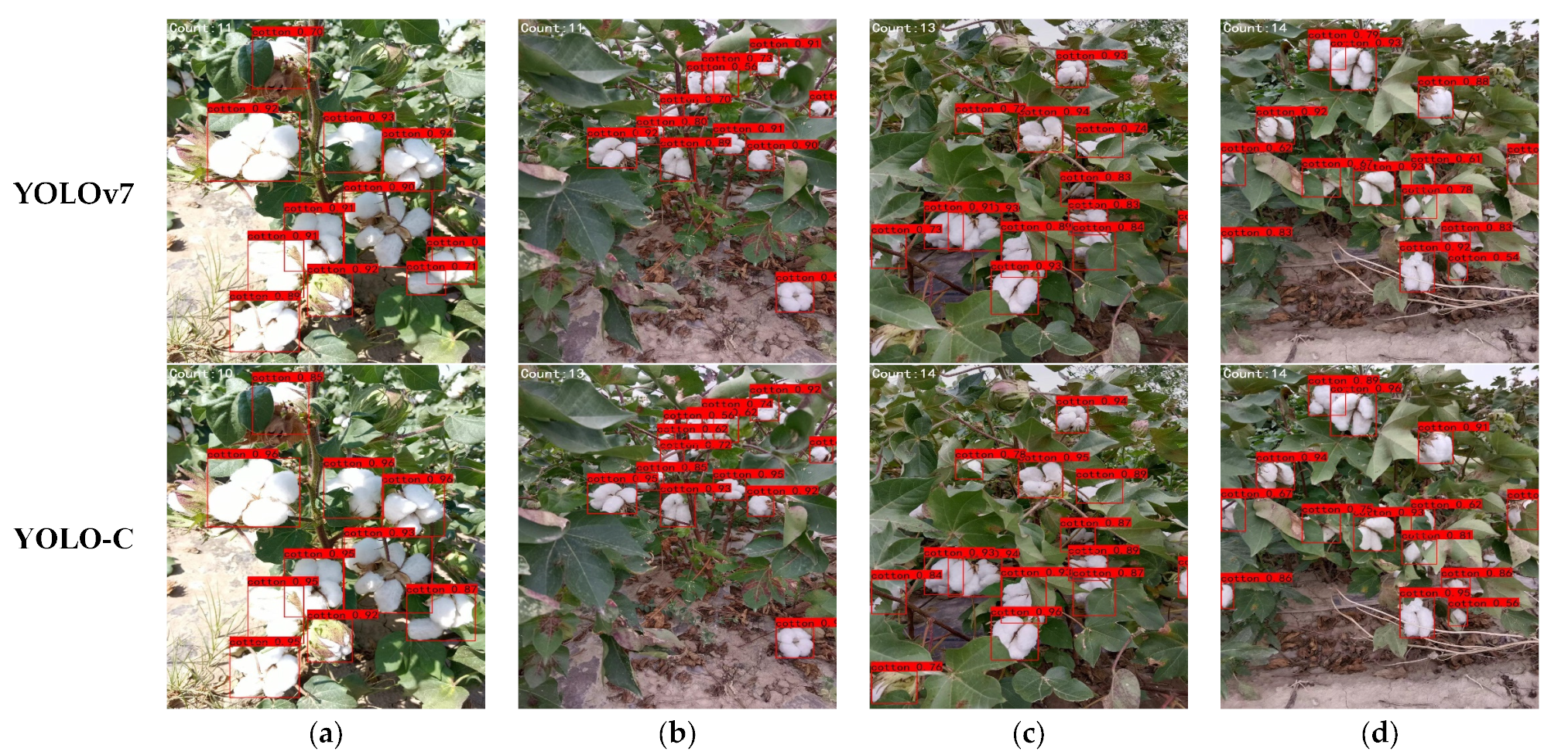

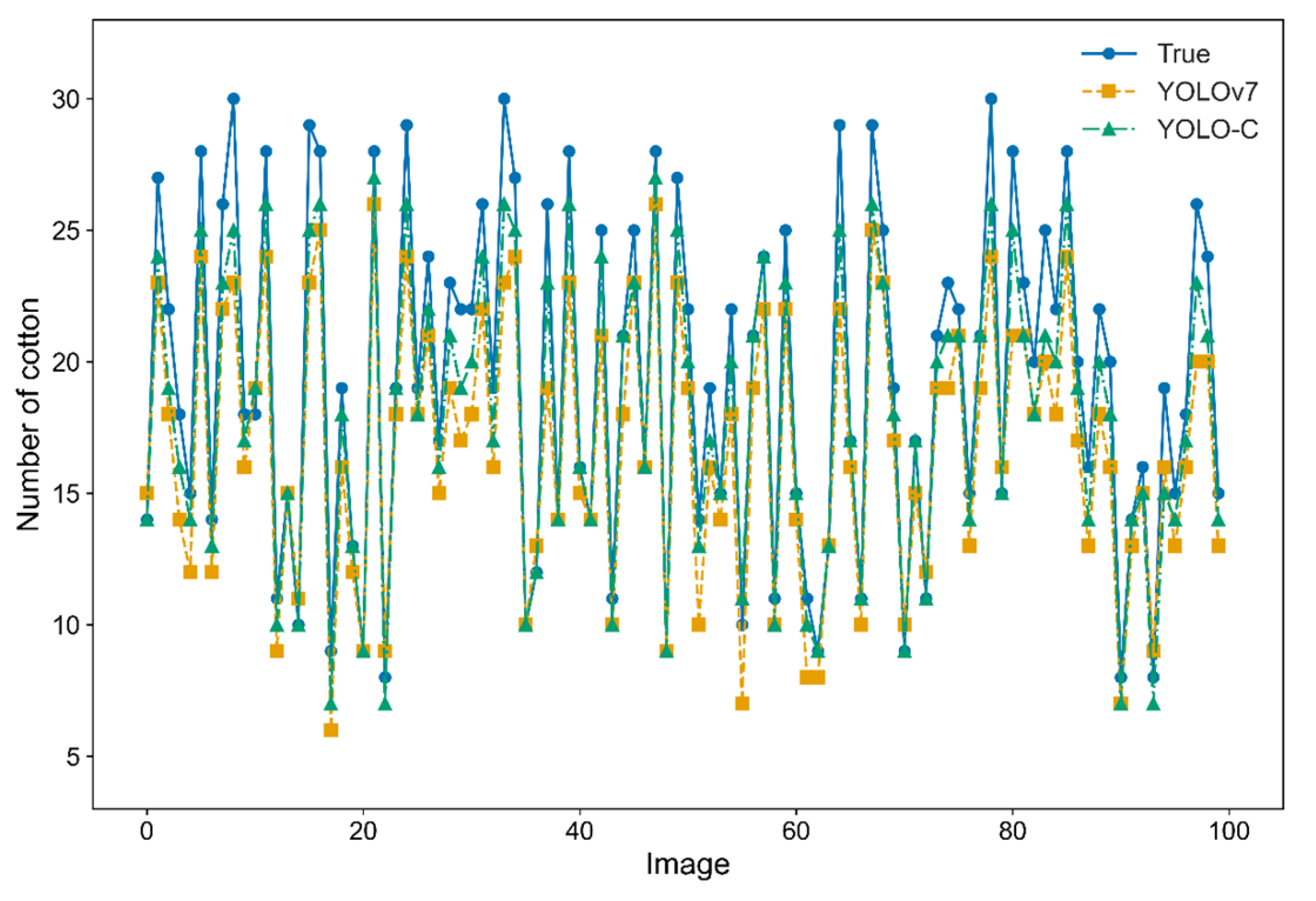

3.5. Evaluation of Experimental Results of Cotton Boll Testing

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Configuration | Parameter |
|---|---|
| CPU | Intel(R) Xeon(R) Platinum 8350C |
| GPU | NVIDIA GeForce RTX 3090 |
| Operating system | Windows 10 |
| Accelerated environment | CUDA 11.1 |
| Development environment | PyCharm 2021 |
| Libraries | PyTorch 1.11.0, Python 3.8 |

| Mosaic | Mixup | P | R | F1 | mAP@0.5 | mAP@0.5:0.95 | FPS |
|---|---|---|---|---|---|---|---|
| × | × | 93.30% | 91.64% | 0.92 | 95.66% | 63.12% | 44 |
| √ | × | 93.47% | 91.75% | 0.93 | 95.67% | 63.51% | 45 |
| × | √ | 93.58% | 91.64% | 0.93 | 95.30% | 62.45% | 45 |
| √ | √ | 93.82% | 91.64% | 0.93 | 95.59% | 63.52% | 48 |

| CBAM | SE | CA | P | R | F1 | mAP@0.5 | mAP@0.5:0.95 | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|
| × | × | × | 93.82% | 91.64% | 0.93 | 95.59% | 63.52% | 105.47 |
| √ | × | × | 93.38% | 91.53% | 0.92 | 95.50% | 63.12% | 105.49 |
| × | √ | × | 94.86% | 92.57% | 0.93 | 96.74% | 64.12% | 105.49 |
| × | × | √ | 93.57% | 91.75% | 0.93 | 95.43% | 63.22% | 105.49 |

| DCN | SENet | WIoU | P | R | F1 | mAP@0.5 | mAP@0.5:0.95 | FPS |
|---|---|---|---|---|---|---|---|---|
| × | × | × | 93.82% | 91.64% | 0.93 | 95.59% | 63.52% | 48 |
| √ | × | × | 94.34% | 91.53% | 0.94 | 95.17% | 63.10% | 46 |
| √ | √ | × | 95.25% | 92.44% | 0.94 | 96.90% | 64.17% | 48 |
| √ | √ | √ | 95.75% | 92.65% | 0.94 | 97.19% | 64.31% | 49 |

| Methods | P | R | mAP@0.5 | mAP@0.5:0.95 | FPS |
|---|---|---|---|---|---|
| Faster R-CNN | 85.74% | 86.12% | 89.43% | 58.68% | 13 |
| SSD | 78.18% | 76.40% | 80.05% | 50.40% | 26 |
| EfficientDet | 85.68% | 89.98% | 92.93% | 59.80% | 24 |
| RetinaNet | 86.02% | 87.17% | 91.06% | 56.35% | 19 |
| YOLOv5 | 90.28% | 85.08% | 90.84% | 59.19% | 49 |
| YOLOv7 | 93.82% | 91.64% | 95.59% | 63.52% | 48 |
| YOLOX | 89.52% | 84.92% | 91.16% | 59.67% | 51 |
| YOLO-C | 95.75% | 92.65% | 97.19% | 64.31% | 49 |

| Model | RMSE | MAE | MAPE | R² |
|---|---|---|---|---|
| YOLOv7 | 3.20 | 2.65 | 12.02% | 0.90 |
| YOLO-C | 1.88 | 1.42 | 6.72% | 0.96 |
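The counting errors in the table above (RMSE, MAE, MAPE) can be computed from paired manual and detected boll counts using their standard definitions, together with a coefficient of determination R², which commonly accompanies them. The sketch below is a minimal version; the function name and the example counts are hypothetical and are not the study's data.

```python
import math


def count_errors(true_counts, pred_counts):
    """Return RMSE, MAE, MAPE (in percent) and R^2 for paired counts.

    Assumes every true count is non-zero (required by MAPE) and that the
    true counts are not all identical (required by R^2).
    """
    n = len(true_counts)
    errors = [p - t for t, p in zip(true_counts, pred_counts)]
    ss_res = sum(e * e for e in errors)
    rmse = math.sqrt(ss_res / n)
    mae = sum(abs(e) for e in errors) / n
    mape = 100.0 * sum(abs(e) / t for e, t in zip(errors, true_counts)) / n
    mean_true = sum(true_counts) / n
    ss_tot = sum((t - mean_true) ** 2 for t in true_counts)
    r2 = 1.0 - ss_res / ss_tot
    return {"rmse": rmse, "mae": mae, "mape": mape, "r2": r2}


# Hypothetical per-image boll counts: manual count vs. detector output.
manual = [20, 25, 30, 40]
detected = [18, 26, 30, 37]
metrics = count_errors(manual, detected)
```

Lower RMSE, MAE, and MAPE and an R² closer to 1 indicate that the detected counts track the manual counts more closely, which is the direction of the difference between YOLOv7 and YOLO-C in the table.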


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liang, Z.; Cui, G.; Xiong, M.; Li, X.; Jin, X.; Lin, T. YOLO-C: An Efficient and Robust Detection Algorithm for Mature Long Staple Cotton Targets with High-Resolution RGB Images. Agronomy 2023, 13, 1988. https://doi.org/10.3390/agronomy13081988
