Detection and Positioning of Camellia oleifera Fruit Based on LBP Image Texture Matching and Binocular Stereo Vision

Abstract

1. Introduction

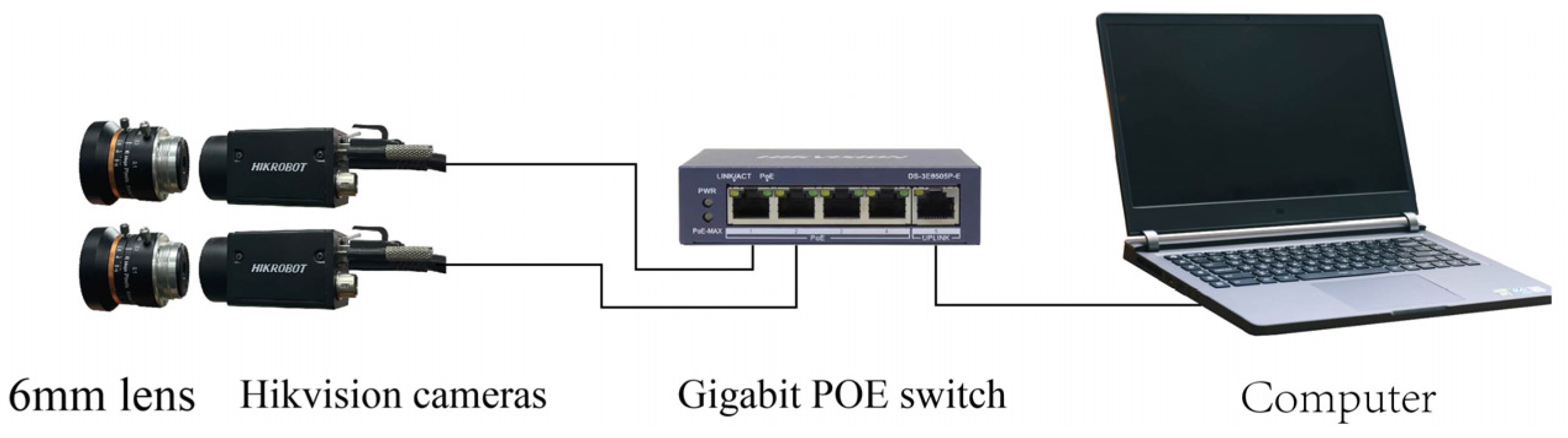

- Two industrial cameras are set up to form a binocular stereo vision system for recognizing and locating C. oleifera fruits.

- A robust detection model is obtained by training the YOLOv7 neural network on a dataset of C. oleifera fruits.

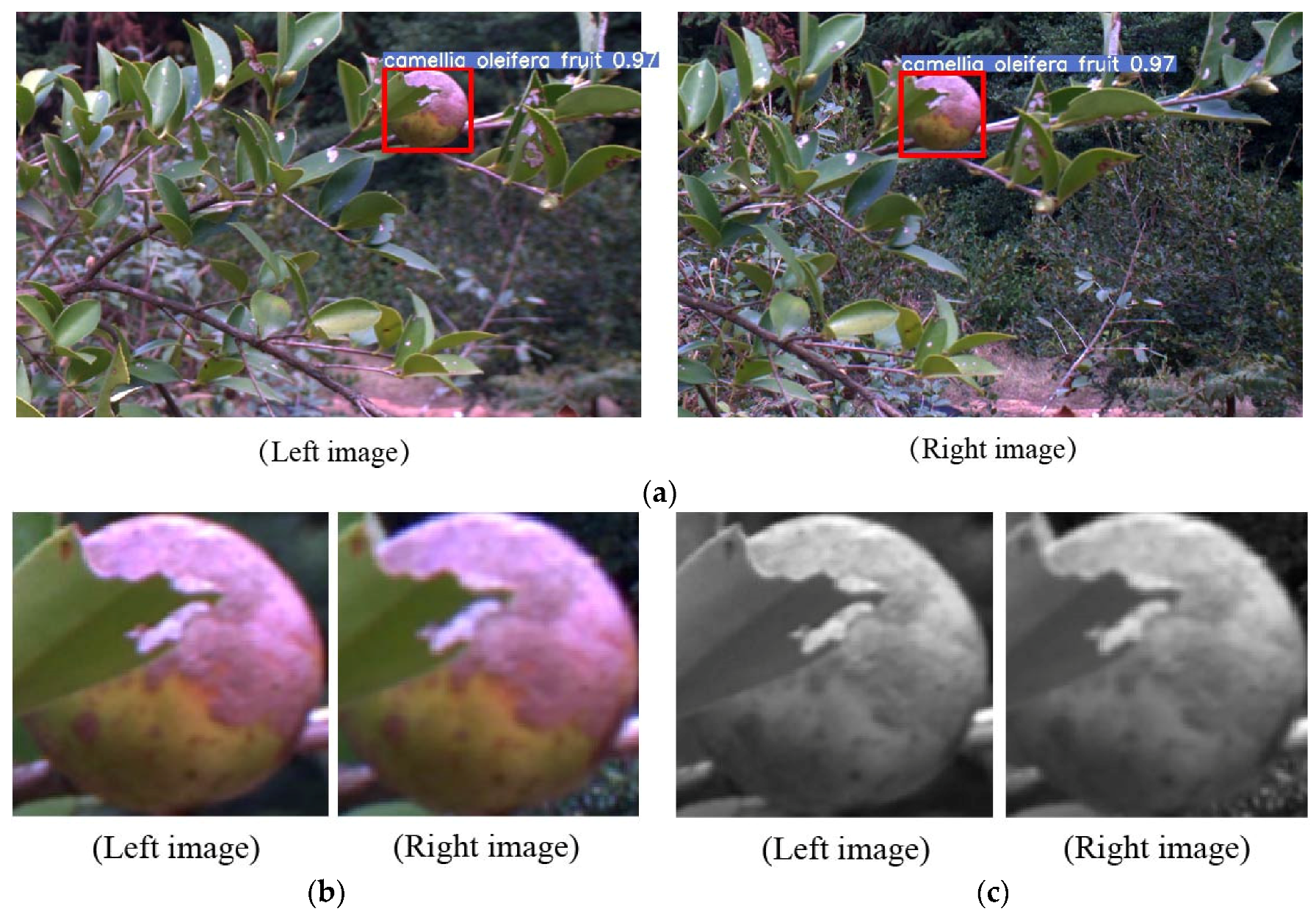

- A binocular vision localization algorithm is proposed that matches the left and right images using their local binary pattern (LBP) maps.
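The last point can be illustrated with a minimal sketch. It assumes a rectified, parallel-axis camera pair, a basic 3×3 LBP operator, and a simple count of mismatching LBP codes as the matching cost; none of these choices is confirmed as the authors' exact method. The function names, the focal length (1000 px), and the baseline (60 mm) are hypothetical values chosen only for illustration.

```python
import numpy as np

# Neighbour offsets (dy, dx), clockwise from the top-left pixel.
_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_map(gray):
    """Basic 3x3 LBP: one 8-bit code per interior pixel (border is dropped)."""
    g = np.asarray(gray, dtype=np.int32)
    h, w = g.shape
    center = g[1:h - 1, 1:w - 1]
    codes = np.zeros(center.shape, dtype=np.int32)
    for bit, (dy, dx) in enumerate(_OFFSETS):
        neighbour = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # Set this bit where the neighbour is at least as bright as the centre.
        codes |= (neighbour >= center).astype(np.int32) << bit
    return codes.astype(np.uint8)


def match_disparity(left_lbp, right_lbp, y, x, half=3, max_disp=64):
    """Slide a window along the same row of the right LBP map and return the
    disparity with the fewest mismatching codes (assumed cost function).
    The caller must keep (y, x) at least `half` pixels away from the borders."""
    template = left_lbp[y - half:y + half + 1, x - half:x + half + 1]
    best_d, best_cost = None, None
    for d in range(max_disp + 1):
        xr = x - d  # a point at x in the left image appears at x - d in the right
        if xr - half < 0:
            break
        window = right_lbp[y - half:y + half + 1, xr - half:xr + half + 1]
        cost = np.count_nonzero(template != window)
        if best_cost is None or cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity_px, focal_px, baseline_mm):
    """Parallel-axis triangulation: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_mm / disparity_px


# Synthetic check: the right image is the left image shifted by 5 pixels.
rng = np.random.default_rng(0)
left = rng.integers(0, 256, size=(40, 80))
right = np.roll(left, -5, axis=1)
d = match_disparity(lbp_map(left), lbp_map(right), y=20, x=30, max_disp=20)
z = depth_from_disparity(d, focal_px=1000.0, baseline_mm=60.0)  # hypothetical rig
```

In a full pipeline the matched point would presumably be the center of a detected fruit box, so only one small window per fruit needs to be searched rather than the whole image.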

2. Materials and Methods

2.1. Experimental Materials and Acquisition Equipment

2.2. Object Detection Algorithms of C. oleifera Fruit Based on YOLOv7

2.3. Extraction of Center Point Coordinates of C. oleifera Fruit

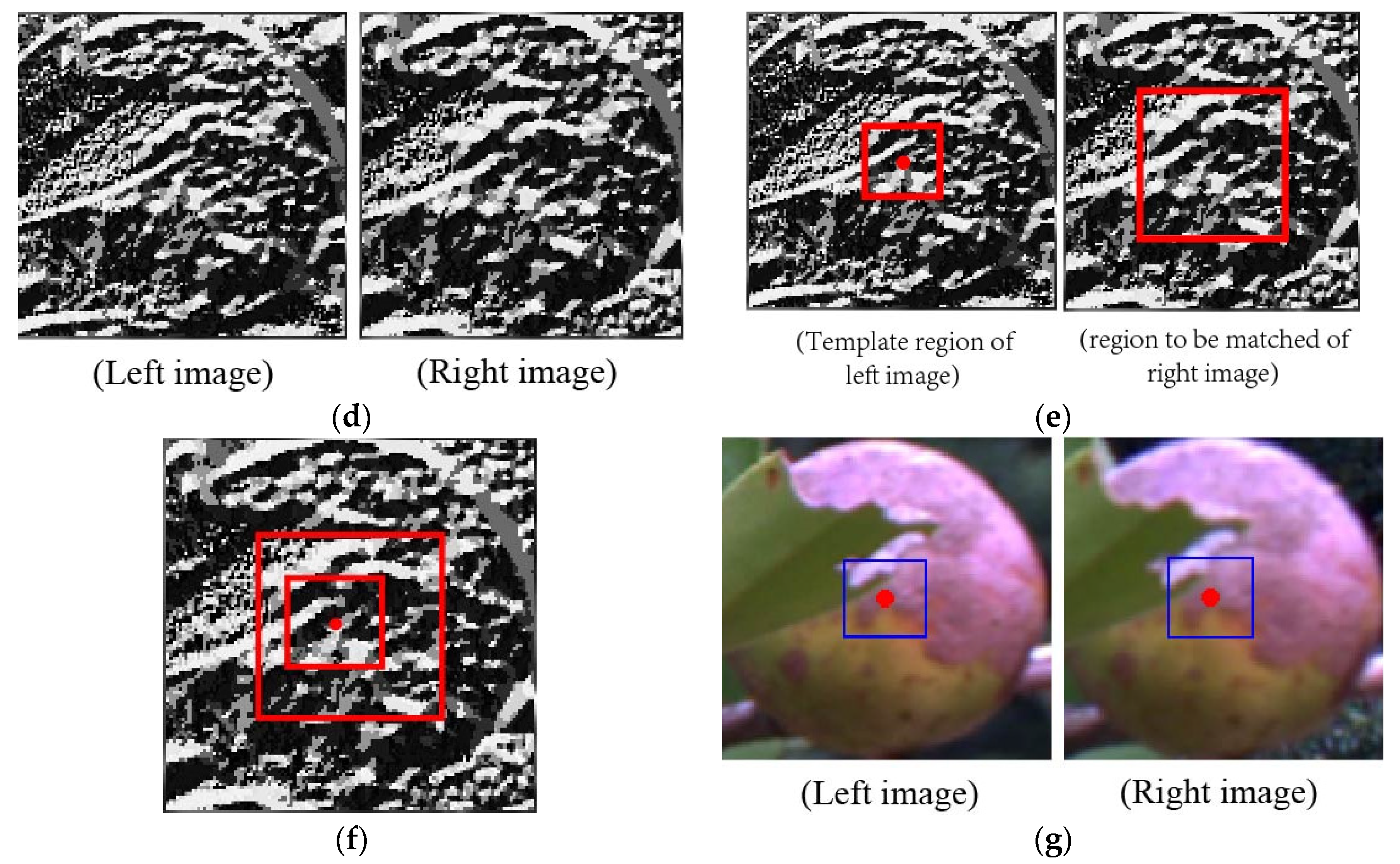

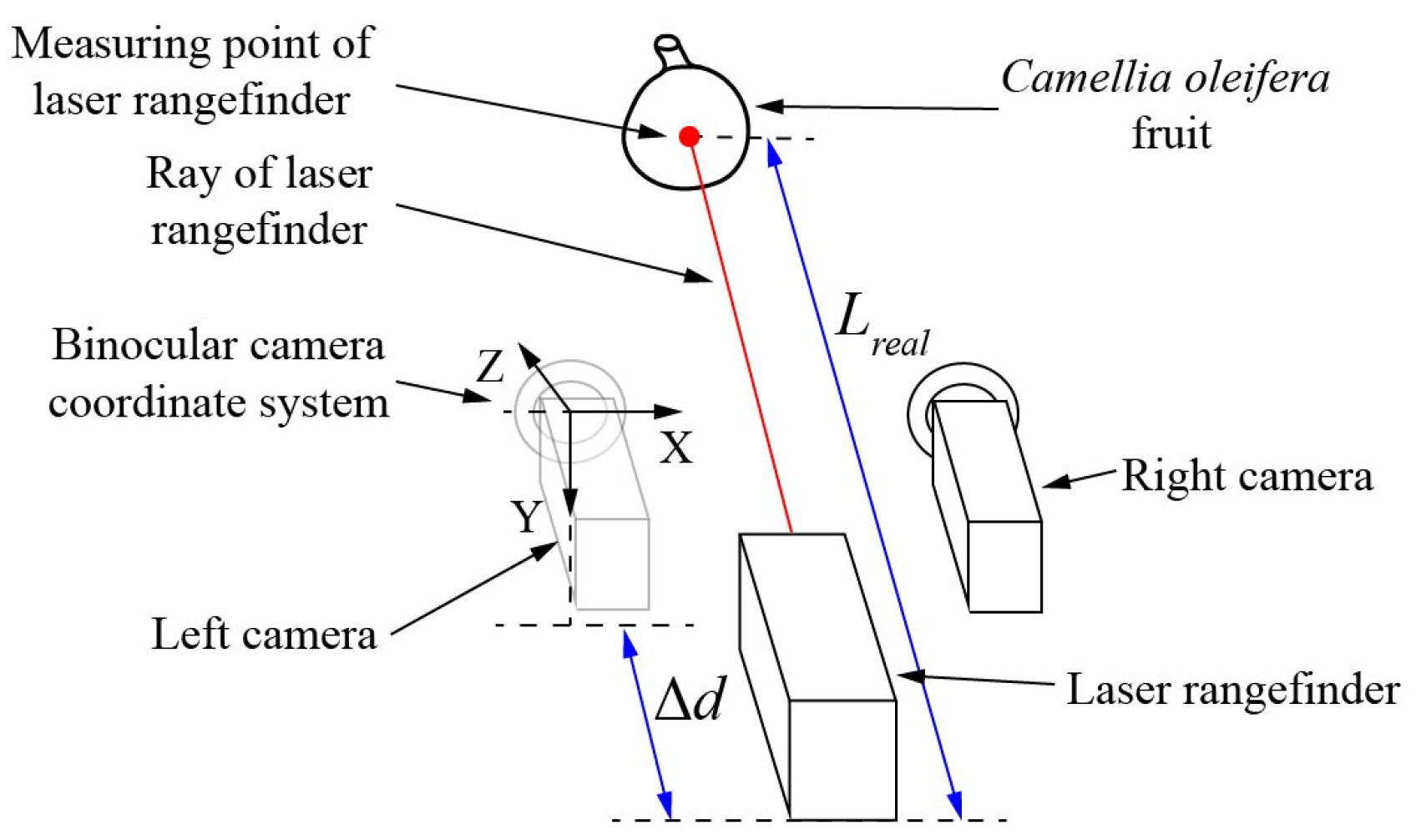

2.4. Binocular Stereo Vision Ranging Principle

3. Results

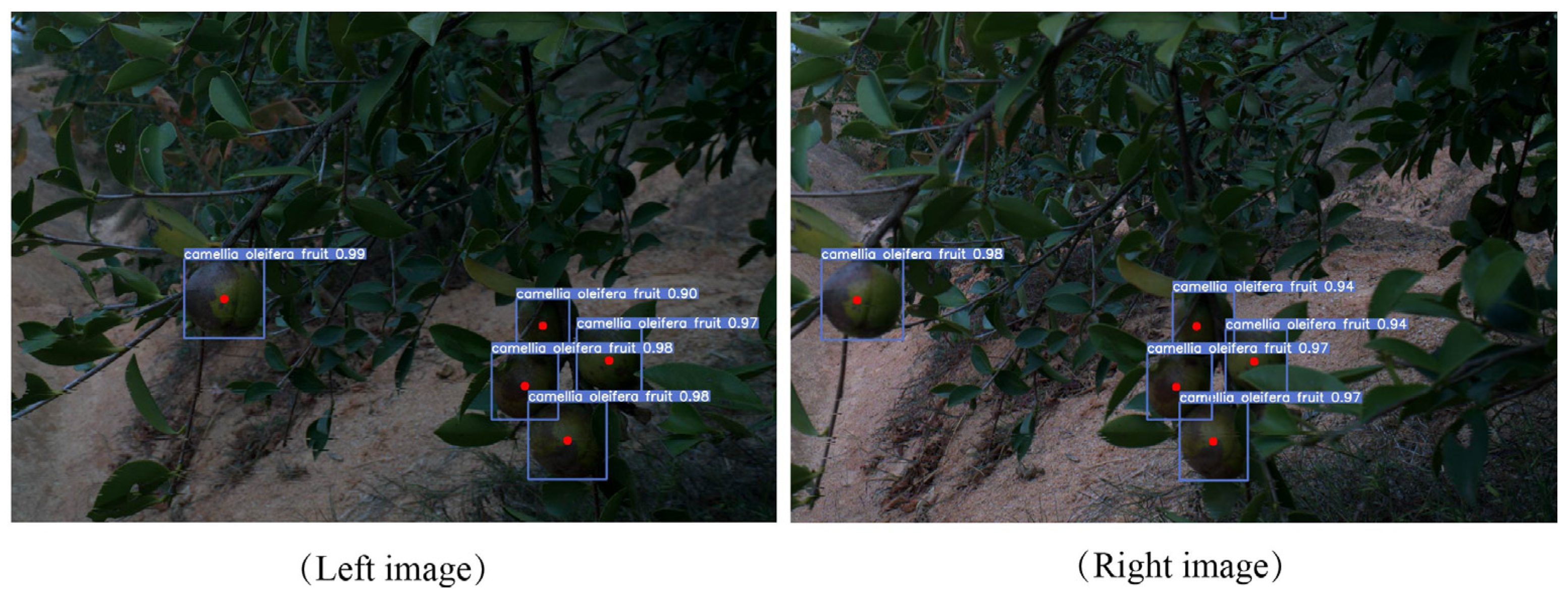

3.1. C. oleifera Fruit Recognition Experiment

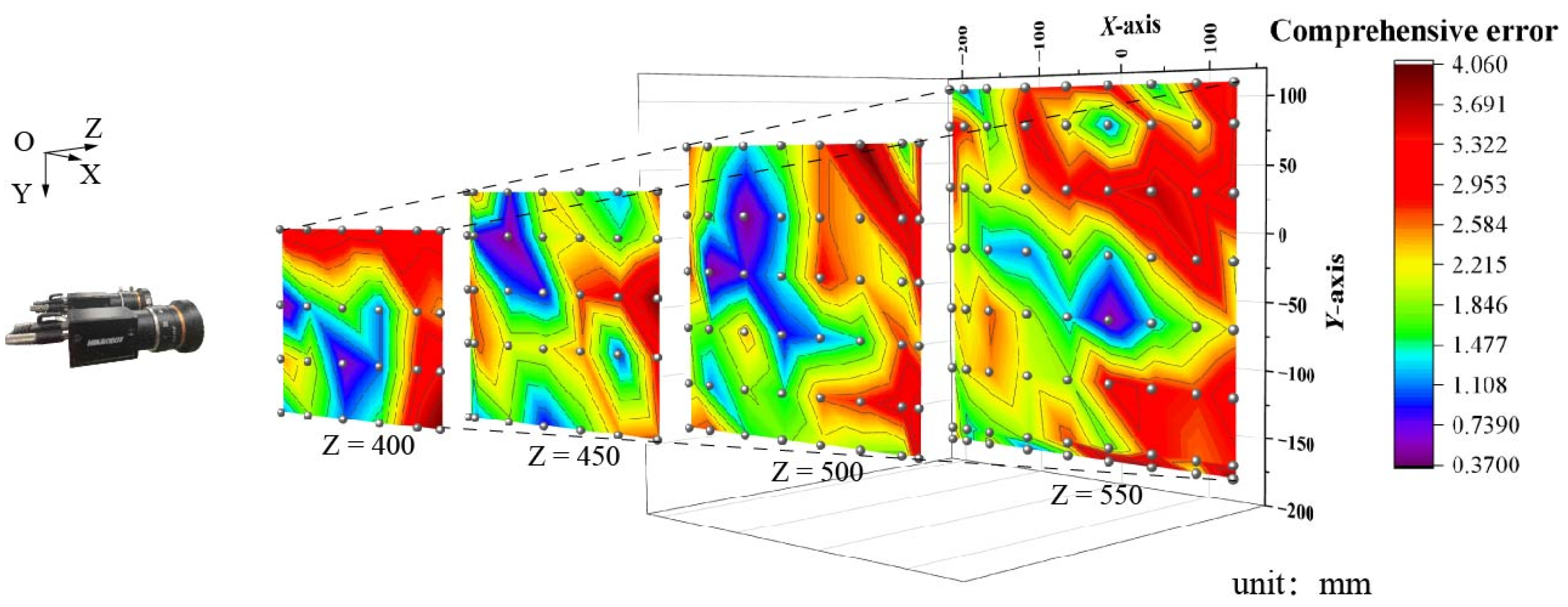

3.2. Experiment on Indoor Positioning Accuracy of Binocular Stereo Vision

3.3. Experiment in Natural Orchard Environment

4. Discussion

4.1. Analysis of the Recognition Results of C. oleifera Fruit

4.2. Analysis of the Location Results of C. oleifera Fruit

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| P (%) | R (%) | mAP (%) | F1 (%) | t (s/pic) |
|---|---|---|---|---|
| 97.3 | 97.6 | 97.7 | 97.4 | 0.021 |
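The F1 value in the table above can be cross-checked from the reported precision and recall. The sketch below assumes the standard definition of F1 as the harmonic mean of P and R; the function name is hypothetical.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


# Reported values from the table, in percent.
f1 = f1_score(97.3, 97.6)
print(f"F1 = {f1:.2f}%")
```

The result is close to 97.45%, which agrees with the tabulated 97.4% to within rounding of the last digit.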

| Degree of Occlusion | Number of Fruits Detected | Number of Actual Fruits | Recognition Rate |
|---|---|---|---|
| No occlusion | 141 | 142 | 99.29% |
| Slight occlusion | 95 | 102 | 93.13% |
| Severe occlusion | 88 | 117 | 75.21% |

| Light Conditions | Number of Fruits Detected | Number of Actual Fruits | Recognition Rate |
|---|---|---|---|
| Sunlight | 126 | 139 | 90.64% |
| Shading | 95 | 104 | 91.34% |
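The recognition rates in the occlusion and lighting tables follow from the detected and actual fruit counts. As a rough check, the sketch below assumes the rate is detected/actual and that the percentages were truncated (not rounded) to two decimals, which is what reproduces the tabulated figures; the helper name is hypothetical.

```python
def recognition_rate(detected, actual):
    """Detected/actual as a percentage, truncated to two decimals."""
    # Integer arithmetic avoids floating-point surprises in the truncation.
    return (detected * 10000 // actual) / 100


counts = {
    "No occlusion": (141, 142),
    "Slight occlusion": (95, 102),
    "Severe occlusion": (88, 117),
    "Sunlight": (126, 139),
    "Shading": (95, 104),
}
rates = {name: recognition_rate(d, a) for name, (d, a) in counts.items()}
for name, rate in rates.items():
    print(f"{name}: {rate:.2f}%")
```

With conventional rounding, three of the five values would differ in the last digit (for example, 141/142 would be 99.30%), so the differences are cosmetic.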

| | Preprocessing Stage | Recognition Stage | Positioning Stage |
|---|---|---|---|
| Average elapsed time | 0.012 s/pic | 0.021 s/pic | 0.028 s/fruit |

| Fruit 1: Measured Value (mm) | Fruit 1: Real Value (mm) | Fruit 1: Absolute Error (mm) | Fruit 2: Measured Value (mm) | Fruit 2: Real Value (mm) | Fruit 2: Absolute Error (mm) | Fruit 3: Measured Value (mm) | Fruit 3: Real Value (mm) | Fruit 3: Absolute Error (mm) | Fruit 4: Measured Value (mm) | Fruit 4: Real Value (mm) | Fruit 4: Absolute Error (mm) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 402.588 | 402 | 0.588 | 407.695 | 409 | 1.305 | 417.166 | 414 | 3.166 | 419.354 | 418 | 1.354 |
| 412.537 | 411 | 1.537 | 430.052 | 433 | 2.948 | 430.727 | 430 | 0.727 | 449.692 | 448 | 1.692 |
| 438.867 | 438 | 0.867 | 470.623 | 472 | 1.377 | 450.293 | 452 | 1.707 | 475.559 | 475 | 0.559 |
| 453.269 | 452 | 1.269 | 490.684 | 492 | 1.316 | 471.795 | 473 | 1.205 | 500.251 | 496 | 4.251 |
| 458.737 | 458 | 0.737 | 515.419 | 517 | 1.581 | 483.054 | 485 | 1.946 | 520.364 | 521 | 0.636 |
| 459.869 | 458 | 1.869 | 536.142 | 533 | 3.142 | 556.961 | 553 | 3.961 | 540.336 | 542 | 1.664 |
| 489.375 | 487 | 2.375 | 549.279 | 545 | 4.279 | 586.748 | 590 | 3.252 | 566.849 | 566 | 0.849 |
| 515.174 | 513 | 2.174 | 563.983 | 567 | 3.017 | 600.135 | 598 | 2.135 | 590.793 | 592 | 1.207 |
| 547.412 | 544 | 3.412 | 575.975 | 572 | 3.975 | 603.923 | 603 | 0.923 | 614.576 | 611 | 3.576 |
| 587.386 | 585 | 2.386 | 594.847 | 594 | 0.847 | 628.874 | 625 | 3.874 | 630.859 | 634 | 3.141 |

| Statistic | Fruit 1 | Fruit 2 | Fruit 3 | Fruit 4 |
|---|---|---|---|---|
| Standard deviation | 0.847 | 1.172 | 1.139 | 1.235 |
| Correlation coefficient R² | 0.9991 | 0.9985 | 0.9989 | 0.9990 |
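The summary statistics of the positioning table can be recomputed from its rows. The sketch below assumes that the reported standard deviation is the population standard deviation of the absolute errors, which is the reading that reproduces the tabulated values; the variable names are hypothetical. R² is not recomputed here because the source does not state how it was obtained.

```python
from statistics import mean, pstdev

# (measured, real) distances in mm, copied from the positioning table.
measurements = {
    "Fruit 1": [(402.588, 402), (412.537, 411), (438.867, 438), (453.269, 452),
                (458.737, 458), (459.869, 458), (489.375, 487), (515.174, 513),
                (547.412, 544), (587.386, 585)],
    "Fruit 2": [(407.695, 409), (430.052, 433), (470.623, 472), (490.684, 492),
                (515.419, 517), (536.142, 533), (549.279, 545), (563.983, 567),
                (575.975, 572), (594.847, 594)],
    "Fruit 3": [(417.166, 414), (430.727, 430), (450.293, 452), (471.795, 473),
                (483.054, 485), (556.961, 553), (586.748, 590), (600.135, 598),
                (603.923, 603), (628.874, 625)],
    "Fruit 4": [(419.354, 418), (449.692, 448), (475.559, 475), (500.251, 496),
                (520.364, 521), (540.336, 542), (566.849, 566), (590.793, 592),
                (614.576, 611), (630.859, 634)],
}

stats = {}
for fruit, pairs in measurements.items():
    errors = [abs(measured - real) for measured, real in pairs]
    stats[fruit] = {
        "mean_abs_error": mean(errors),
        "std": pstdev(errors),  # population standard deviation (assumed)
        "max_abs_error": max(errors),
    }

for fruit, s in stats.items():
    print(f"{fruit}: mean |e| = {s['mean_abs_error']:.3f} mm, "
          f"std = {s['std']:.3f} mm, max |e| = {s['max_abs_error']:.3f} mm")
```

Across all 40 measurements the largest absolute error is 4.279 mm (Fruit 2), over a measured range of roughly 400 to 630 mm.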

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lei, X.; Wu, M.; Li, Y.; Liu, A.; Tang, Z.; Chen, S.; Xiang, Y. Detection and Positioning of Camellia oleifera Fruit Based on LBP Image Texture Matching and Binocular Stereo Vision. Agronomy 2023, 13, 2153. https://doi.org/10.3390/agronomy13082153
