Integrating Unmanned Aerial Vehicle-Derived Vegetation and Texture Indices for the Estimation of Leaf Nitrogen Concentration in Drip-Irrigated Cotton under Reduced Nitrogen Treatment and Different Plant Densities

Abstract

1. Introduction

2. Materials and Methods

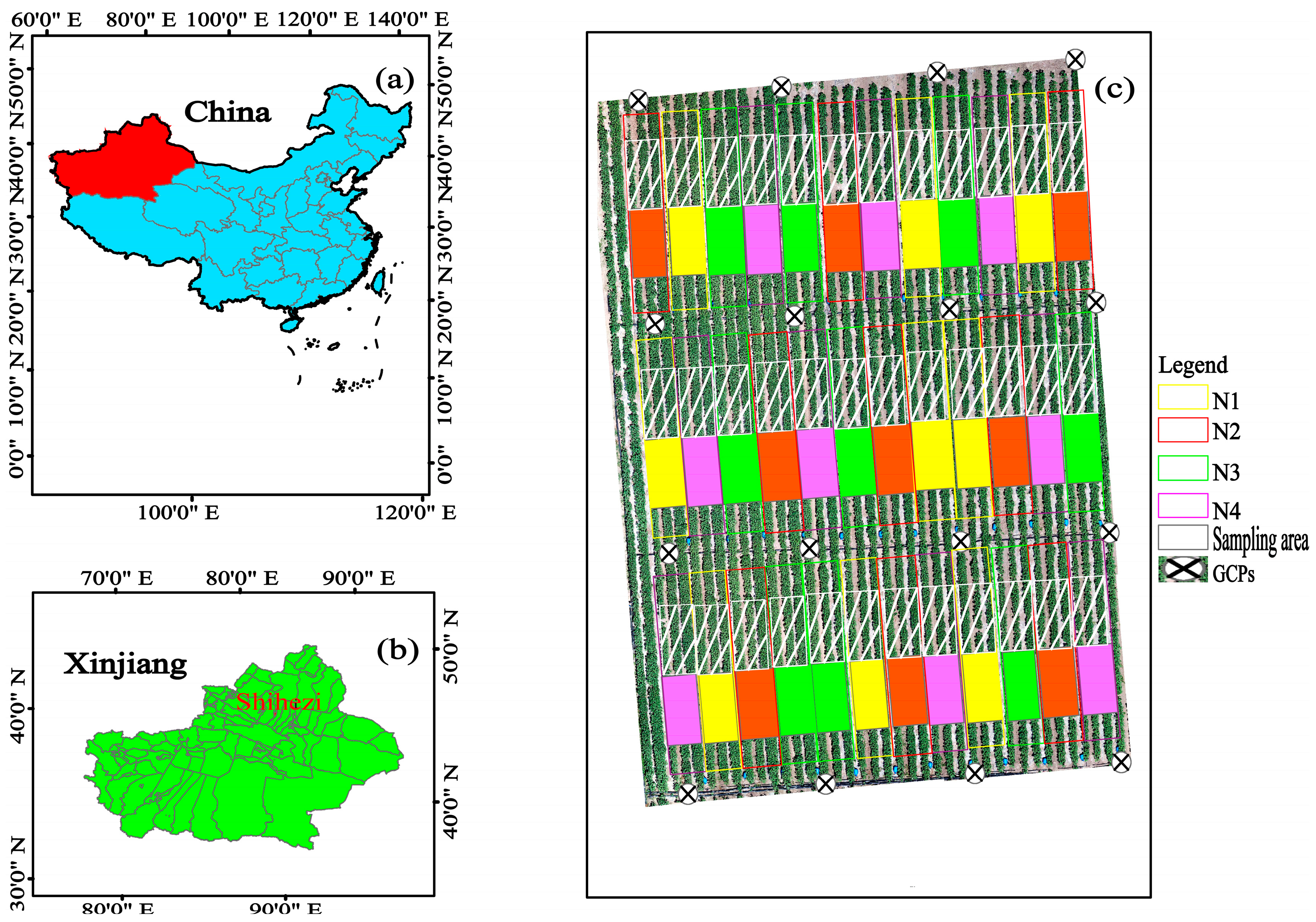

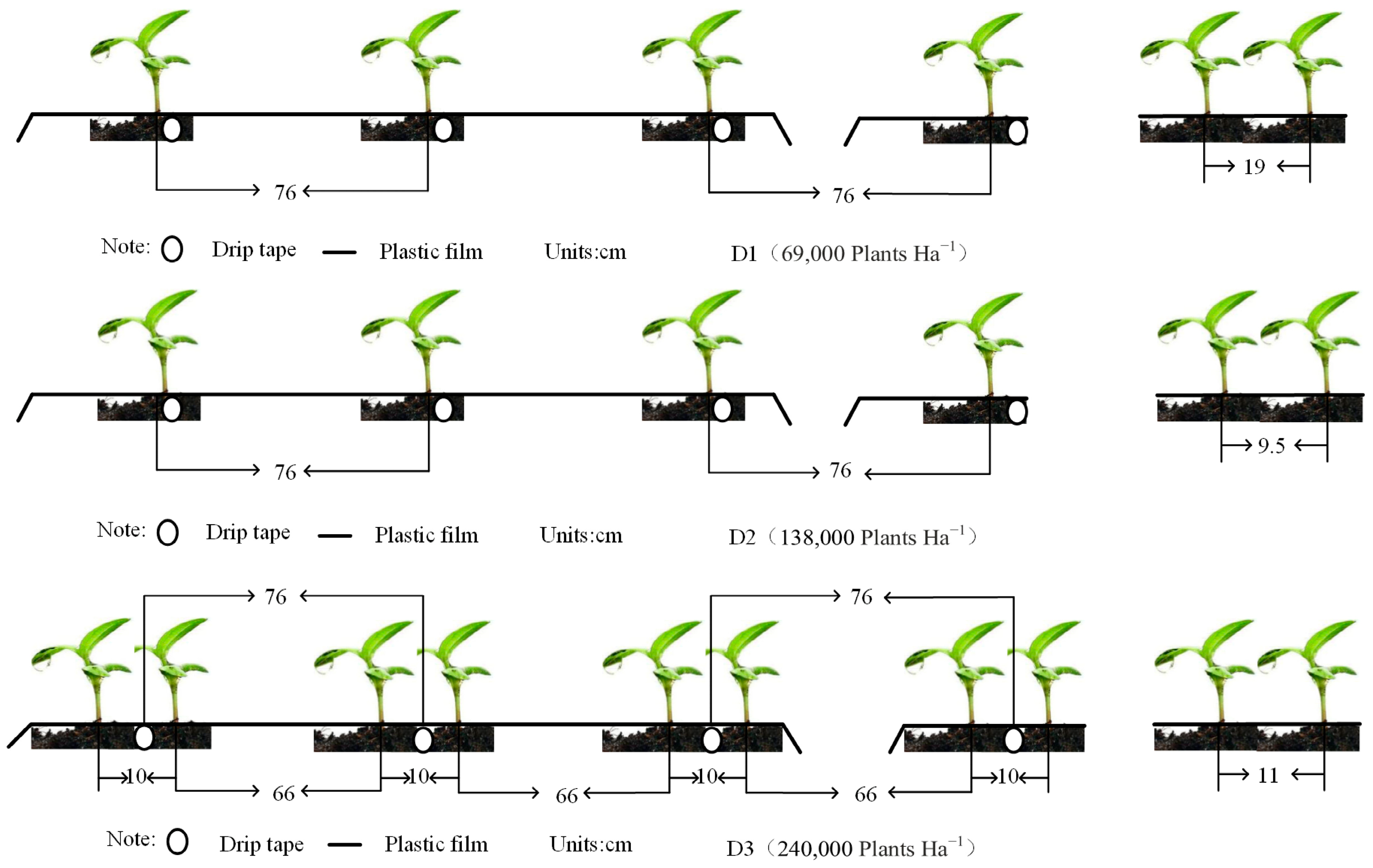

2.1. Experimental Design

2.2. Ground Sampling and UAV Data Acquisition and Pre-Processing

2.3. Selection of Image Textures

2.4. Selection of Vegetation Indices

2.5. Regression Modeling Methods

2.6. Accuracy Assessment

3. Results

3.1. Correlation between LNC, VIs, and Texture

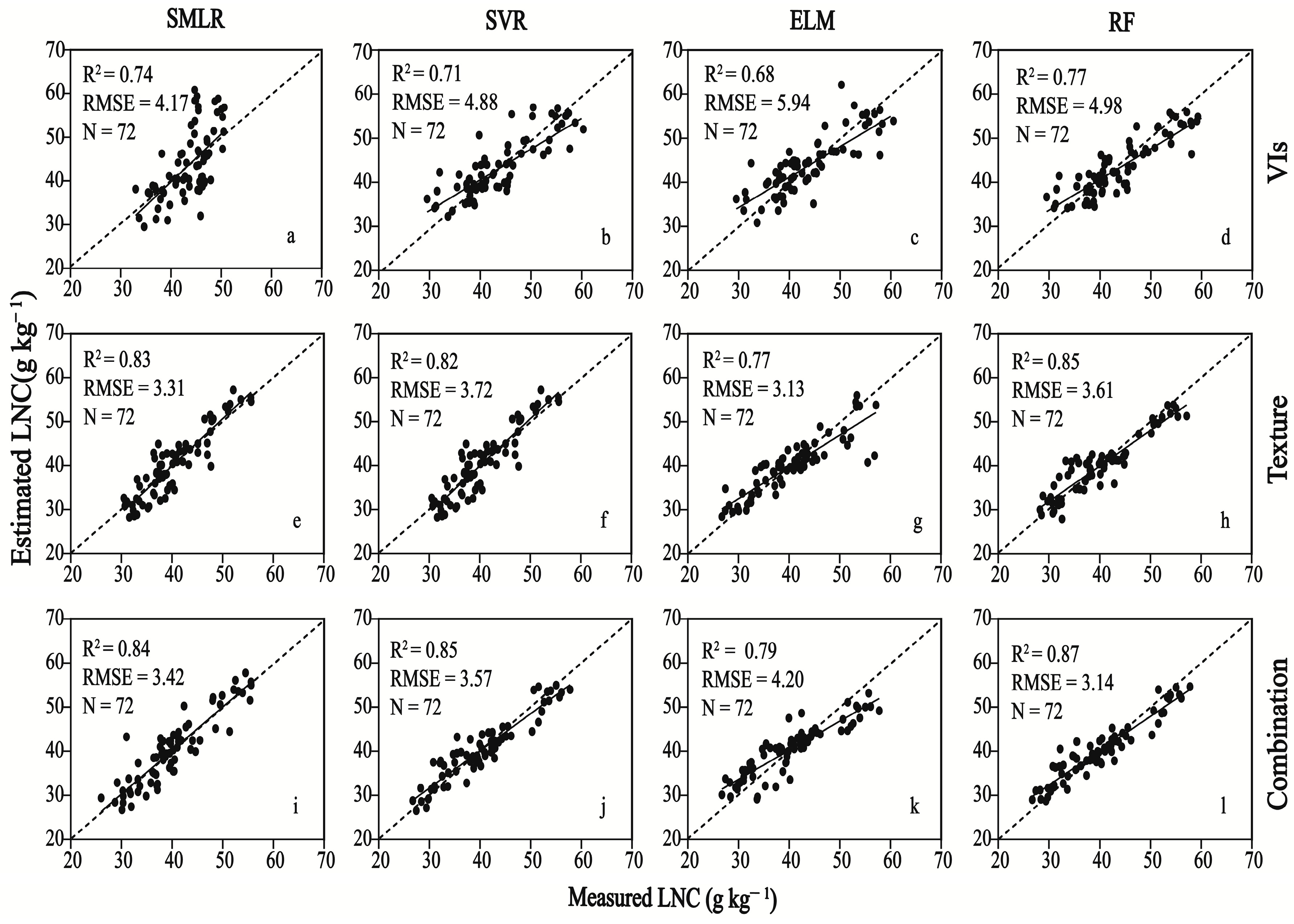

3.2. Comparison of LNC Estimation Performance among SMLR and Machine Learning Techniques

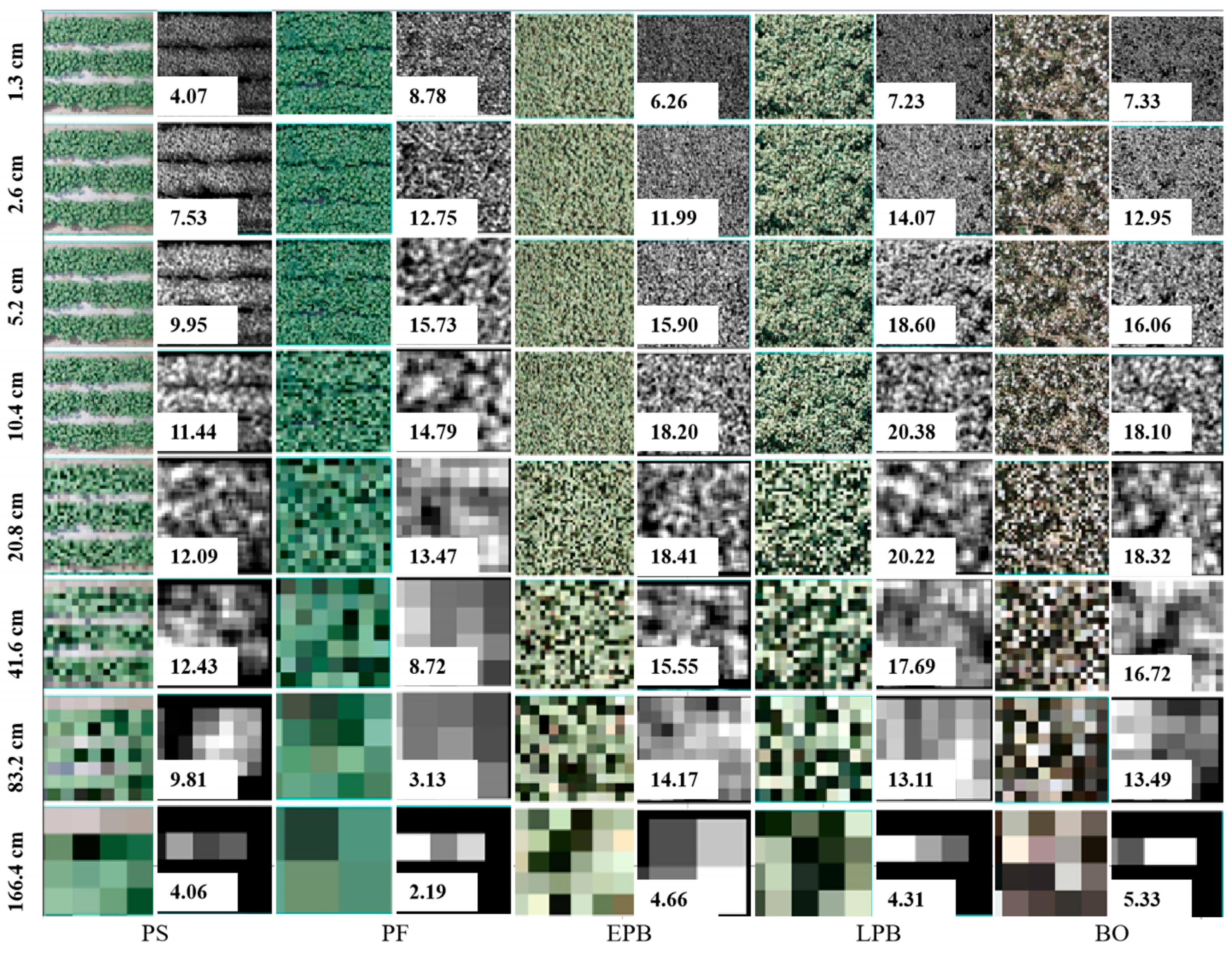

3.3. The Correlation of Image Textures at Different Ground Resolutions of the Image

3.4. The Effect of Different Ground-Resolution Images on LNC Estimation by Using RF

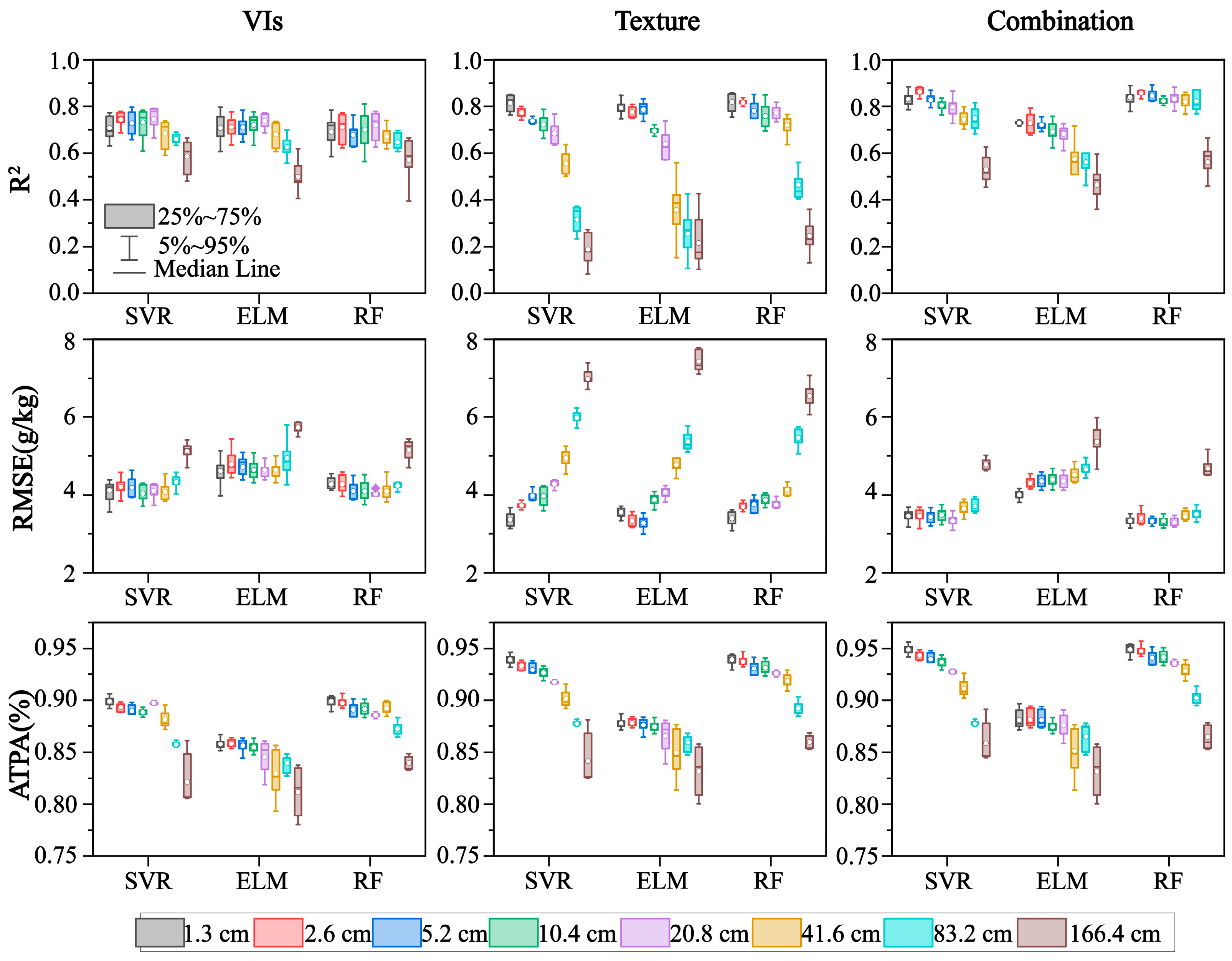

3.5. Performance of Three Machine Learning Techniques in LNC Estimation at Different Ground Resolutions

4. Discussion

4.1. The Combination of VIs and Texture Improves the Accuracy of LNC Estimation

4.2. Comparison of the Four Regression Methods

4.3. The Optimal Resolution for LNC Estimation

4.4. The Physiological Basis of VIs and Texture Features in LNC Estimation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Camera | Parameters | UAV | Parameters |
|---|---|---|---|
| Resolution | 4000 × 3000 | UAV name | DJI-Mavic Pro |
| Image DPI | 72 dpi | Flying height | 10 m |
| Bit depth | 8 | Flying speed | 2 m/s |
| Aperture | f/5 | Takeoff weight | 734 g |
| Exposure | 1/1250 s | | |
| ISO | ISO-1600 | | |
| Focal length | 26 mm | | |
| Field of view | 64° | | |

| Validation | MLT | Input Data Types | 1.3 cm | 2.6 cm | 5.2 cm | 10.4 cm | 20.8 cm | 41.6 cm | 83.2 cm | 166.4 cm |
|---|---|---|---|---|---|---|---|---|---|---|
| R² | SVR | VIs | 0.71 | 0.75 | 0.73 | 0.73 | 0.75 | 0.69 | 0.66 | 0.59 |
| | | Texture | 0.82 | 0.77 | 0.74 | 0.72 | 0.69 | 0.56 | 0.32 | 0.19 |
| | | Combination | 0.83 | 0.86 | 0.83 | 0.80 | 0.80 | 0.75 | 0.75 | 0.53 |
| | ELM | VIs | 0.71 | 0.71 | 0.71 | 0.72 | 0.74 | 0.68 | 0.63 | 0.50 |
| | | Texture | 0.79 | 0.78 | 0.79 | 0.70 | 0.64 | 0.36 | 0.26 | 0.22 |
| | | Combination | 0.73 | 0.73 | 0.72 | 0.69 | 0.68 | 0.58 | 0.56 | 0.46 |
| | RF | VIs | 0.69 | 0.71 | 0.68 | 0.70 | 0.72 | 0.67 | 0.65 | 0.57 |
| | | Texture | 0.82 | 0.82 | 0.78 | 0.76 | 0.77 | 0.72 | 0.46 | 0.25 |
| | | Combination | 0.84 | 0.86 | 0.85 | 0.83 | 0.83 | 0.82 | 0.82 | 0.56 |
| RMSE | SVR | VIs | 4.11 | 4.21 | 4.19 | 4.04 | 4.11 | 4.09 | 4.34 | 5.13 |
| | | Texture | 3.38 | 3.73 | 3.99 | 3.97 | 4.29 | 4.94 | 5.98 | 6.98 |
| | | Combination | 3.46 | 3.46 | 3.44 | 3.49 | 3.35 | 3.65 | 3.72 | 4.79 |
| | ELM | VIs | 4.62 | 4.79 | 4.72 | 4.65 | 4.65 | 4.65 | 4.95 | 5.74 |
| | | Texture | 3.56 | 3.34 | 3.29 | 3.89 | 4.07 | 4.78 | 5.39 | 7.43 |
| | | Combination | 4.01 | 4.31 | 4.35 | 4.40 | 4.36 | 4.52 | 4.67 | 5.38 |
| | RF | VIs | 4.28 | 4.28 | 4.10 | 4.09 | 4.03 | 4.10 | 4.25 | 5.17 |
| | | Texture | 3.40 | 3.71 | 3.76 | 3.87 | 3.75 | 4.14 | 5.47 | 6.54 |
| | | Combination | 3.34 | 3.41 | 3.33 | 3.34 | 3.30 | 3.46 | 3.51 | 4.68 |
| ATPA | SVR | VIs | 0.90 | 0.89 | 0.89 | 0.89 | 0.90 | 0.88 | 0.86 | 0.82 |
| | | Texture | 0.94 | 0.93 | 0.93 | 0.93 | 0.92 | 0.90 | 0.88 | 0.84 |
| | | Combination | 0.95 | 0.94 | 0.94 | 0.94 | 0.93 | 0.91 | 0.88 | 0.86 |
| | ELM | VIs | 0.86 | 0.86 | 0.86 | 0.85 | 0.85 | 0.83 | 0.84 | 0.81 |
| | | Texture | 0.88 | 0.88 | 0.88 | 0.87 | 0.87 | 0.85 | 0.86 | 0.83 |
| | | Combination | 0.88 | 0.88 | 0.88 | 0.87 | 0.88 | 0.85 | 0.87 | 0.83 |
| | RF | VIs | 0.90 | 0.90 | 0.89 | 0.89 | 0.89 | 0.89 | 0.87 | 0.84 |
| | | Texture | 0.94 | 0.94 | 0.93 | 0.93 | 0.93 | 0.92 | 0.89 | 0.86 |
| | | Combination | 0.95 | 0.95 | 0.94 | 0.94 | 0.94 | 0.93 | 0.90 | 0.87 |
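
The eight ground resolutions in the table above form a doubling series starting at 1.3 cm. The listing does not state how the coarser images were produced, so the following is only a minimal sketch that assumes plain block averaging of pixel values; `resolution_ladder` and `block_mean` are hypothetical helper names, not functions from the study.

```python
def resolution_ladder(base_cm=1.3, steps=8):
    """Ground resolutions obtained by repeatedly doubling the pixel size."""
    return [round(base_cm * 2 ** k, 1) for k in range(steps)]


def block_mean(band, factor):
    """Coarsen a 2-D band (list of rows) by averaging factor x factor blocks.

    Assumption: simple block averaging. Rows and columns that do not fill
    a complete block are dropped.
    """
    rows = len(band) // factor
    cols = len(band[0]) // factor
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            block = [
                band[r * factor + i][c * factor + j]
                for i in range(factor)
                for j in range(factor)
            ]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


if __name__ == "__main__":
    print(resolution_ladder())
    print(block_mean([[1, 3], [5, 7]], 2))
```

Under this assumption, a factor of 2 applied to the 1.3 cm orthomosaic would give the 2.6 cm image, a factor of 4 the 5.2 cm image, and so on up to a factor of 128 for 166.4 cm.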

| Year | Hydrolyzable N (mg kg−1) | Olsen-P (mg kg−1) | Available-K (mg kg−1) | Organic Matter (g kg−1) | pH |
|---|---|---|---|---|---|
| 2019 | 186.7 | 78.7 | 332.0 | 21.9 | 7.82 |
| 2020 | 44.3 | 19.0 | 486.0 | 15.5 | 8.17 |

References

- Hou, Z.N.; Li, P.F.; Li, B.G.; Gong, J.; Wang, Y.N. Effects of fertigation scheme on N uptake and N use efficiency in cotton. Plant Soil 2007, 290, 115–126.
- Ata-Ul-Karim, S.T.; Zhu, Y.; Cao, Q.; Rehmani, M.I.A.; Cao, W.X.; Tang, L. In-season assessment of grain protein and amylose content in rice using critical nitrogen dilution curve. Eur. J. Agron. 2017, 90, 139–151.
- Bodirsky, B.L.; Popp, A.; Lotze-Campen, H.; Dietrich, J.P.; Rolinski, S.; Weindl, I.; Schmitz, C.; Muller, C.; Bonsch, M.; Humpenoder, F.; et al. Reactive nitrogen requirements to feed the world in 2050 and potential to mitigate nitrogen pollution. Nat. Commun. 2014, 5, 38–58.
- Yao, X.; Zhu, Y.; Tian, Y.C.; Feng, W.; Cao, W.X. Exploring hyperspectral bands and estimation indices for leaf nitrogen accumulation in wheat. Int. J. Appl. Earth Obs. Geoinf. 2010, 12, 89–100.
- Li, S.Y.; Ding, X.Z.; Kuang, Q.L.; Ata-Ul-Karim, S.T.; Cheng, T.; Liu, X.J.; Tan, Y.C.; Zhu, Y.; Cao, W.X.; Cao, Q. Potential of UAV-Based Active Sensing for Monitoring Rice Leaf Nitrogen Status. Front. Plant Sci. 2018, 9, 1834.
- LaCapra, V.C.; Melack, J.M.; Gastil, M.; Valeriano, D. Remote sensing of foliar chemistry of inundated rice with imaging spectrometry. Remote Sens. Environ. 1996, 55, 50–58.
- Blaes, X.; Chome, G.; Lambert, M.J.; Traore, P.S.; Schut, A.G.T.; Defourny, P. Quantifying Fertilizer Application Response Variability with VHR Satellite NDVI Time Series in a Rainfed Smallholder Cropping System of Mali. Remote Sens. 2016, 8, 531.
- Boegh, E.; Soegaard, H.; Broge, N.; Hasager, C.B.; Jensen, N.O.; Schelde, K.; Thomsen, A. Airborne multispectral data for quantifying leaf area index, nitrogen concentration, and photosynthetic efficiency in agriculture. Remote Sens. Environ. 2002, 81, 179–193.
- Tilling, A.K.; O’Leary, G.J.; Ferwerda, J.G.; Jones, S.D.; Fitzgerald, G.J.; Rodriguez, D.; Belford, R. Remote sensing of nitrogen and water stress in wheat. Field Crops Res. 2007, 104, 77–85.
- Yao, X.; Ren, H.; Cao, Z.; Tian, Y.; Cao, W.; Zhu, Y.; Cheng, T. Detecting leaf nitrogen content in wheat with canopy hyperspectrum under different soil backgrounds. Int. J. Appl. Earth Obs. Geoinf. 2014, 32, 114–124.
- Schut, A.G.T.; Traore, P.C.S.; Blaes, X.; de By, R.A. Assessing yield and fertilizer response in heterogeneous smallholder fields with UAVs and satellites. Field Crops Res. 2018, 221, 98–107.
- Amaral, L.R.; Molin, J.P.; Portz, G.; Finazzi, F.B.; Cortinove, L. Comparison of crop canopy reflectance sensors used to identify sugarcane biomass and nitrogen status. Precis. Agric. 2015, 16, 15–28.
- Jiang, J.L.; Cai, W.D.; Zheng, H.B.; Cheng, T.; Tian, Y.C.; Zhu, Y.; Ehsani, R.; Hu, Y.Q.; Niu, Q.S.; Gui, L.J.; et al. Using Digital Cameras on an Unmanned Aerial Vehicle to Derive Optimum Color Vegetation Indices for Leaf Nitrogen Concentration Monitoring in Winter Wheat. Remote Sens. 2019, 11, 2667.
- Lu, N.; Zhou, J.; Han, Z.X.; Li, D.; Cao, Q.; Yao, X.; Tian, Y.C.; Zhu, Y.; Cao, W.X.; Cheng, T. Improved estimation of aboveground biomass in wheat from RGB imagery and point cloud data acquired with a low-cost unmanned aerial vehicle system. Plant Methods 2019, 15, 17.
- Prey, L.; Schmidhalter, U. Sensitivity of Vegetation Indices for Estimating Vegetative N Status in Winter Wheat. Sensors 2019, 19, 3712.
- Zhou, K.; Cheng, T.; Zhu, Y.; Cao, W.X.; Ustin, S.L.; Zheng, H.B.; Yao, X.; Tian, Y.C. Assessing the Impact of Spatial Resolution on the Estimation of Leaf Nitrogen Concentration Over the Full Season of Paddy Rice Using Near-Surface Imaging Spectroscopy Data. Front. Plant Sci. 2018, 9, 964.
- Zhao, B.; Ata-Ul-Karim, S.T.; Yao, X.; Tian, Y.C.; Cao, W.X.; Zhu, Y.; Liu, X.J. A New Curve of Critical Nitrogen Concentration Based on Spike Dry Matter for Winter Wheat in Eastern China. PLoS ONE 2016, 11, e0164545.
- Yue, J.B.; Yang, G.J.; Tian, Q.J.; Feng, H.K.; Xu, K.J.; Zhou, C.Q. Estimate of winter-wheat above-ground biomass based on UAV ultrahigh-ground-resolution image textures and vegetation indices. ISPRS J. Photogramm. Remote Sens. 2019, 150, 226–244.
- Zheng, H.B.; Ma, J.F.; Zhou, M.; Li, D.; Yao, X.; Cao, W.X.; Zhu, Y.; Cheng, T. Enhancing the Nitrogen Signals of Rice Canopies across Critical Growth Stages through the Integration of Textural and Spectral Information from Unmanned Aerial Vehicle (UAV) Multispectral Imagery. Remote Sens. 2020, 12, 957.
- Zhang, X.W.; Zhang, K.F.; Wu, S.Q.; Shi, H.T.; Sun, Y.Q.; Zhao, Y.D.; Fu, E.J.; Chen, S.; Bian, C.F.; Ban, W. An Investigation of Winter Wheat Leaf Area Index Fitting Model Using Spectral and Canopy Height Model Data from Unmanned Aerial Vehicle Imagery. Remote Sens. 2022, 14, 5087.
- Xu, C.; Ding, Y.L.; Zheng, X.M.; Wang, Y.Q.; Zhang, R.; Zhang, H.Y.; Dai, Z.W.; Xie, Q.Y. A Comprehensive Comparison of Machine Learning and Feature Selection Methods for Maize Biomass Estimation Using Sentinel-1 SAR, Sentinel-2 Vegetation Indices, and Biophysical Variables. Remote Sens. 2022, 14, 4083.
- Zheng, H.B.; Cheng, T.; Zhou, M.; Li, D.; Yao, X.; Tian, Y.C.; Cao, W.X.; Zhu, Y. Improved estimation of rice aboveground biomass combining textural and spectral analysis of UAV imagery. Precis. Agric. 2019, 20, 611–629.
- Singh, B.; Singh, Y.; Ladha, J.K.; Bronson, K.F.; Balasubramanian, V.; Singh, J.; Khind, C.S. Chlorophyll meter- and leaf color chart-based nitrogen management for rice and wheat in northwestern India. Agron. J. 2002, 94, 821–829.
- Niu, Y.X.; Zhang, L.Y.; Zhang, H.H.; Han, W.T.; Peng, X.S. Estimating Above-Ground Biomass of Maize Using Features Derived from UAV-Based RGB Imagery. Remote Sens. 2019, 11, 1261.
- Ma, Y.R.; Ma, L.L.; Zhang, Q.; Huang, C.P.; Yi, X.; Chen, X.Y.; Hou, T.Y.; Lv, X.; Zhang, Z. Cotton Yield Estimation Based on Vegetation Indices and Texture Features Derived from RGB Image. Front. Plant Sci. 2022, 13, 925986.
- Li, W.; Niu, Z.; Chen, H.Y.; Li, D.; Wu, M.Q.; Zhao, W. Remote estimation of canopy height and aboveground biomass of maize using high-resolution stereo images from a low-cost unmanned aerial vehicle system. Ecol. Indic. 2016, 67, 637–648.
- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.
- Mountrakis, G.; Im, J.; Ogole, C. Support vector machines in remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2011, 66, 247–259.
- Huang, G.B.; Zhu, Q.Y.; Siew, C.K. Extreme learning machine: Theory and applications. Neurocomputing 2006, 70, 489–501.
- Bremner, J.M. Recent research on problems in the use of urea as a nitrogen fertilizer. Fertil. Res. 1995, 42, 321–329.
- Haralick, R.M.; Sabaretnam, K. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.
- Kawashima, S.; Nakatani, M. An algorithm for estimating chlorophyll content in leaves using a video camera. Ann. Bot. 1998, 81, 49–54.
- Louhaichi, M.; Borman, M.M.; Johnson, D.E. Spatially Located Platform and Aerial Photography for Documentation of Grazing Impacts on Wheat. Geocarto Int. 2001, 16, 65–70.
- Meyer, G.E.; Neto, J.C. Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 2008, 63, 282–293.
- Johnson, D.E.; Harris, N.R.; Louhaichi, M.; Casady, G.M.; Borman, M.M. Mapping selected noxious weeds using remote sensing and geographic information systems. Abstr. Pap. Am. Chem. Soc. 2001, 221, 48.
- Woebbecke, D.M.; Meyer, G.E.; Vonbargen, K.; Mortensen, D.A. Color Indexes for Weed Identification under Various Soil, Residue, and Lighting Conditions. Trans. ASAE 1995, 38, 259–269.
- Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.
- Zheng, H.; Cheng, T.; Li, D.; Zhou, X.; Yao, X.; Tian, Y.; Cao, W.; Zhu, Y. Evaluation of RGB, Color-Infrared and Multispectral Images Acquired from Unmanned Aerial Systems for the Estimation of Nitrogen Accumulation in Rice. Remote Sens. 2018, 10, 824.
- Mutanga, O.; Adam, E.; Cho, M.A. High density biomass estimation for wetland vegetation using WorldView-2 imagery and random forest regression algorithm. Int. J. Appl. Earth Obs. Geoinf. 2012, 18, 399–406.
- Huang, G.B.; Zhou, H.M.; Ding, X.J.; Zhang, R. Extreme Learning Machine for Regression and Multiclass Classification. IEEE Trans. Syst. Man Cybern. Part B-Cybern. 2012, 42, 513–529.
- Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297.
- Gao, Y.K.; Lu, D.S.; Li, G.Y.; Wang, G.X.; Chen, Q.; Liu, L.J.; Li, D.Q. Comparative Analysis of Modeling Algorithms for Forest Aboveground Biomass Estimation in a Subtropical Region. Remote Sens. 2018, 10, 627.
- Lin, L.; Wang, F.; Xie, X.L.; Zhong, S.S. Random forests-based extreme learning machine ensemble for multi-regime time series prediction. Expert Syst. Appl. 2017, 83, 164–176.
- Jin, X.L.; Yang, G.J.; Xu, X.G.; Yang, H.; Feng, H.K.; Li, Z.H.; Shen, J.X.; Zhao, C.J.; Lan, Y.B. Combined Multi-Temporal Optical and Radar Parameters for Estimating LAI and Biomass in Winter Wheat Using HJ and RADARSAR-2 Data. Remote Sens. 2015, 7, 13251–13272.
- Grossman, Y.L.; Ustin, S.L.; Jacquemoud, S.; Sanderson, E.W.; Schmuck, G.; Verdebout, J. Critique of stepwise multiple linear regression for the extraction of leaf biochemistry information from leaf reflectance data. Remote Sens. Environ. 1996, 56, 182–193.
- Jia, F.F.; Liu, G.S.; Liu, D.S.; Zhang, Y.Y.; Fan, W.G.; Xing, X.X. Comparison of different methods for estimating nitrogen concentration in flue-cured tobacco leaves based on hyperspectral reflectance. Field Crops Res. 2013, 150, 108–114.
- Gleason, C.J.; Im, J. Forest biomass estimation from airborne LiDAR data using machine learning approaches. Remote Sens. Environ. 2012, 125, 80–91.
- Wang, L.A.; Zhou, X.D.; Zhu, X.K.; Dong, Z.D.; Guo, W.S. Estimation of biomass in wheat using random forest regression algorithm and remote sensing data. Crop J. 2016, 4, 212–219.
- Zarco-Tejada, P.J.; Diaz-Varela, R.; Angileri, V.; Loudjani, P. Tree height quantification using very high resolution imagery acquired from an unmanned aerial vehicle (UAV) and automatic 3D photo-reconstruction methods. Eur. J. Agron. 2014, 55, 89–99.
- Nasar, J.; Wang, G.Y.; Ahmad, S.; Muhammad, I.; Zeeshan, M.; Gitari, H.; Adnan, M.; Fahad, S.; Khalid, M.H.B.; Zhou, X.B.; et al. Nitrogen fertilization coupled with iron foliar application improves the photosynthetic characteristics, photosynthetic nitrogen use efficiency, and the related enzymes of maize crops under different planting patterns. Front. Plant Sci. 2022, 13, 988055.
- Zheng, J.C.; Zhang, H.; Yu, J.; Zhan, Q.W.; Li, W.Y.; Xu, F.; Wang, G.J.; Liu, T.; Li, J.C. Late Sowing and Nitrogen Application to Optimize Canopy Structure and Grain Yield of Bread Wheat in a Fluctuating Climate. Turk. J. Field Crops 2021, 26, 170–179.

| Experiment | Sowing Date | Date of UAV Flights | Date of Field Sampling | Growth Stage |
|---|---|---|---|---|
| #1 | 24 April 2019 | 1 July 2019 | 1 July 2019 | Full bud stage |
| | | 16 July 2019 | 16 July 2019 | Full flowering stage |
| | | 7 August 2019 | 7 August 2019 | Early full-bolling stage |
| | | 16 August 2019 | 16 August 2019 | Late full-bolling stage |
| | | 7 September 2019 | 8 September 2019 | Boll opening stage |
| #2 | 18 April 2020 | 14 June 2020 | 15 June 2020 | Full bud stage |
| | | 4 July 2020 | 4 July 2020 | Full flowering stage |
| | | 27 July 2020 | 27 July 2020 | Full-bolling stage |
| | | 21 August 2020 | 21 August 2020 | Late full-boll stage |
| | | 13 September 2020 | 13 September 2020 | Boll opening stage |

| Task | Parameter Setup |
|---|---|
| Aligning image | Accuracy: high; Pair selection: generic; Key points: 40,000; Tie points: 4000 |
| Building mesh | Surface type: height field; Source data: dense cloud; Face count: high |
| Positioning guided marker | Manual positioning of markers on the even 16 GCPs for all the photos |
| Optimizing cameras | Default settings |
| Building dense point cloud | Quality: high; Depth filtering: mild |
| Building texture | Mapping mode: Generic; Blending mode: Mosaic; Texture size/count: 4096 |
| Building DEM | Surface: Mesh; Other parameters: default |
| Building orthomosaic | Surface: Mesh; Other parameters: default |

| Textures and Abbreviations | Bands | Windows | Ground Resolutions |
|---|---|---|---|
| Variance (VAR), Entropy (EN), Correlation (COR), Homogeneity (HOM), Second Moment (SE), Dissimilarity (DIS), Contrast (CON), Mean (MEA) | R, G, B | 3 × 3 | 1.3 cm, 2.6 cm, 5.2 cm, 10.4 cm, 20.8 cm, 41.6 cm, 83.2 cm, 166.4 cm |
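
The eight textures listed above are the usual grey-level co-occurrence matrix (GLCM) statistics. As a rough illustration only, the sketch below computes them for one small quantised window using the standard GLCM definitions. The pixel offset (one pixel to the right), the symmetric normalisation, and the number of grey levels are assumptions, since the table specifies only the 3 × 3 window; `glcm` and `texture_features` are hypothetical names.

```python
import math


def glcm(patch, levels, dx=1, dy=0):
    """Symmetric, normalised co-occurrence matrix for one pixel offset.

    Assumes the patch holds integers in [0, levels) and contains at least
    one valid pixel pair for the chosen offset.
    """
    counts = [[0.0] * levels for _ in range(levels)]
    n_rows, n_cols = len(patch), len(patch[0])
    for r in range(n_rows):
        for c in range(n_cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < n_rows and 0 <= c2 < n_cols:
                i, j = patch[r][c], patch[r2][c2]
                counts[i][j] += 1  # pair (i, j)
                counts[j][i] += 1  # mirrored pair keeps the matrix symmetric
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def texture_features(p):
    """Eight GLCM statistics, keyed by the abbreviations used in the table."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mean = sum(i * v for i, _, v in cells)
    var = sum((i - mean) ** 2 * v for i, _, v in cells)
    cov = sum((i - mean) * (j - mean) * v for i, j, v in cells)
    return {
        "MEA": mean,
        "VAR": var,
        "HOM": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "CON": sum((i - j) ** 2 * v for i, j, v in cells),
        "DIS": sum(abs(i - j) * v for i, j, v in cells),
        "EN": -sum(v * math.log(v) for _, _, v in cells if v > 0),
        "SE": sum(v * v for _, _, v in cells),
        # Convention chosen here: a constant window has zero correlation.
        "COR": cov / var if var > 0 else 0.0,
    }


if __name__ == "__main__":
    window = [[0, 0, 1], [0, 0, 1], [2, 2, 2]]  # 3 x 3 window, 3 grey levels
    for name, value in texture_features(glcm(window, levels=3)).items():
        print(f"{name}: {value:.3f}")
```

In practice the statistic would be evaluated in a moving window over each of the R, G, and B bands, then averaged per plot; that aggregation step is not shown here.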

| Index | Name | Formulation | References |
|---|---|---|---|
| IKAW | Kawashima Index | | [31] |
| RGBVI | Red Green Blue Vegetation Index | | [32] |
| MGRVI | Modified Green Red Vegetation Index | | [19] |
| GLI | Green Leaf Index | | [33] |
| ExGR | Excess Green minus Excess Red | | [34] |
| GRVI | Green Red Vegetation Index | | [35] |
| ExG | Excess Green Index | | [36] |
| VARI | Visible Atmospherically Resistant Index | | [37] |
| GBRI | Green blue ratio index | | [38] |
| GRRI | Green red ratio index | | [39] |
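
The formulation column of the table above did not survive in this copy. For orientation, the sketch below uses the definitions of these RGB indices that are commonly given in the remote sensing literature; they are assumptions here and may differ in detail from the formulas the authors applied (in particular, the direction of the GBRI and GRRI ratios varies between papers). The function name `rgb_indices` is hypothetical.

```python
def rgb_indices(R, G, B):
    """Common RGB vegetation indices from mean band values of one plot.

    Assumes R + G + B > 0 and that no individual denominator is zero.
    """
    total = R + G + B
    r, g, b = R / total, G / total, B / total  # normalised chromatic coordinates
    exg = 2 * g - r - b                        # Excess Green
    exr = 1.4 * r - g                          # Excess Red
    return {
        "IKAW": (R - B) / (R + B),
        "RGBVI": (G * G - B * R) / (G * G + B * R),
        "MGRVI": (G * G - R * R) / (G * G + R * R),
        "GLI": (2 * G - R - B) / (2 * G + R + B),
        "ExGR": exg - exr,
        "GRVI": (G - R) / (G + R),
        "ExG": exg,
        "VARI": (G - R) / (G + R - B),
        "GBRI": G / B,  # assumed direction: green over blue
        "GRRI": G / R,  # assumed direction: green over red
    }


if __name__ == "__main__":
    for name, value in rgb_indices(100, 150, 50).items():
        print(f"{name}: {value:.4f}")
```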

| Input Variables | Technique | Calibration R² (N = 288) | Calibration RMSE (g/kg) | Calibration AIC | Validation R² (N = 72) | Validation RMSE (g/kg) | Validation rRMSE (%) |
|---|---|---|---|---|---|---|---|
| VIs | SMLR | 0.72 | 4.11 | 680.61 | 0.74 | 4.17 | 12.78 |
| | SVR | 0.70 | 4.12 | 410.19 | 0.71 | 4.88 | 9.43 |
| | ELM | 0.68 | 4.25 | 408.50 | 0.68 | 5.94 | 9.72 |
| | RF | 0.76 | 3.72 | 414.83 | 0.77 | 4.98 | 8.51 |
| Textures | SMLR | 0.83 | 3.21 | 678.53 | 0.83 | 3.31 | 8.05 |
| | SVR | 0.82 | 3.17 | 386.96 | 0.82 | 3.72 | 7.89 |
| | ELM | 0.77 | 3.66 | 425.28 | 0.77 | 3.13 | 9.10 |
| | RF | 0.85 | 2.85 | 378.59 | 0.85 | 3.61 | 7.09 |
| VIs and Textures | SMLR | 0.84 | 3.11 | 678.53 | 0.84 | 3.42 | 7.75 |
| | SVR | 0.83 | 3.13 | 386.96 | 0.85 | 3.57 | 7.79 |
| | ELM | 0.78 | 3.63 | 425.28 | 0.79 | 4.20 | 9.04 |
| | RF | 0.87 | 2.80 | 378.59 | 0.87 | 3.14 | 7.00 |
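
For readers who want to reproduce the accuracy columns, the sketch below gives the textbook definitions of R², RMSE, and rRMSE. It is an assumption that the authors used exactly these forms (for example, rRMSE is taken here as RMSE divided by the mean observed value), and the function name `accuracy_metrics` and the sample values are hypothetical.

```python
import math


def accuracy_metrics(observed, predicted):
    """Return (R2, RMSE, rRMSE in percent) for paired observations.

    Assumed definitions:
      R2    = 1 - SS_res / SS_tot
      RMSE  = sqrt(mean squared residual)
      rRMSE = 100 * RMSE / mean(observed)
    """
    n = len(observed)
    mean_obs = sum(observed) / n
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    r2 = 1 - ss_res / ss_tot
    rmse = math.sqrt(ss_res / n)
    rrmse = 100 * rmse / mean_obs
    return r2, rmse, rrmse


if __name__ == "__main__":
    # Hypothetical LNC values in g/kg, for illustration only.
    obs = [30.0, 32.0, 34.0, 36.0]
    pred = [31.0, 31.0, 35.0, 35.0]
    r2, rmse, rrmse = accuracy_metrics(obs, pred)
    print(f"R2={r2:.2f}  RMSE={rmse:.2f} g/kg  rRMSE={rrmse:.2f}%")
```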


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, M.; Liu, Y.; Lu, X.; Jiang, J.; Ma, X.; Wen, M.; Ma, F. Integrating Unmanned Aerial Vehicle-Derived Vegetation and Texture Indices for the Estimation of Leaf Nitrogen Concentration in Drip-Irrigated Cotton under Reduced Nitrogen Treatment and Different Plant Densities. Agronomy 2024, 14, 120. https://doi.org/10.3390/agronomy14010120
