Assessment of the Performance of a Field Weeding Location-Based Robot Using YOLOv8

Abstract

1. Introduction

2. Materials and Methods

2.1. Field and Crop

2.2. Robot

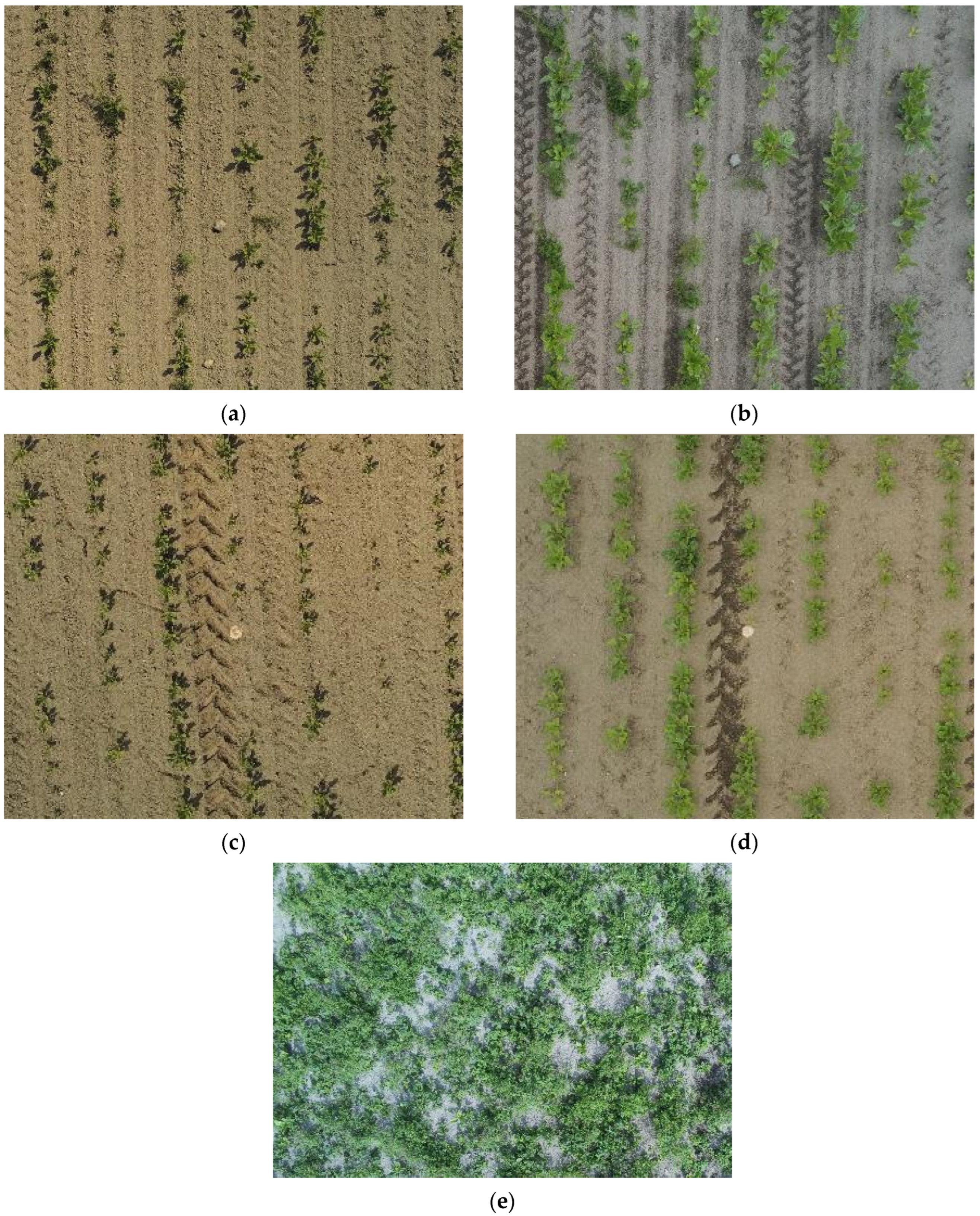

2.3. Image Recording

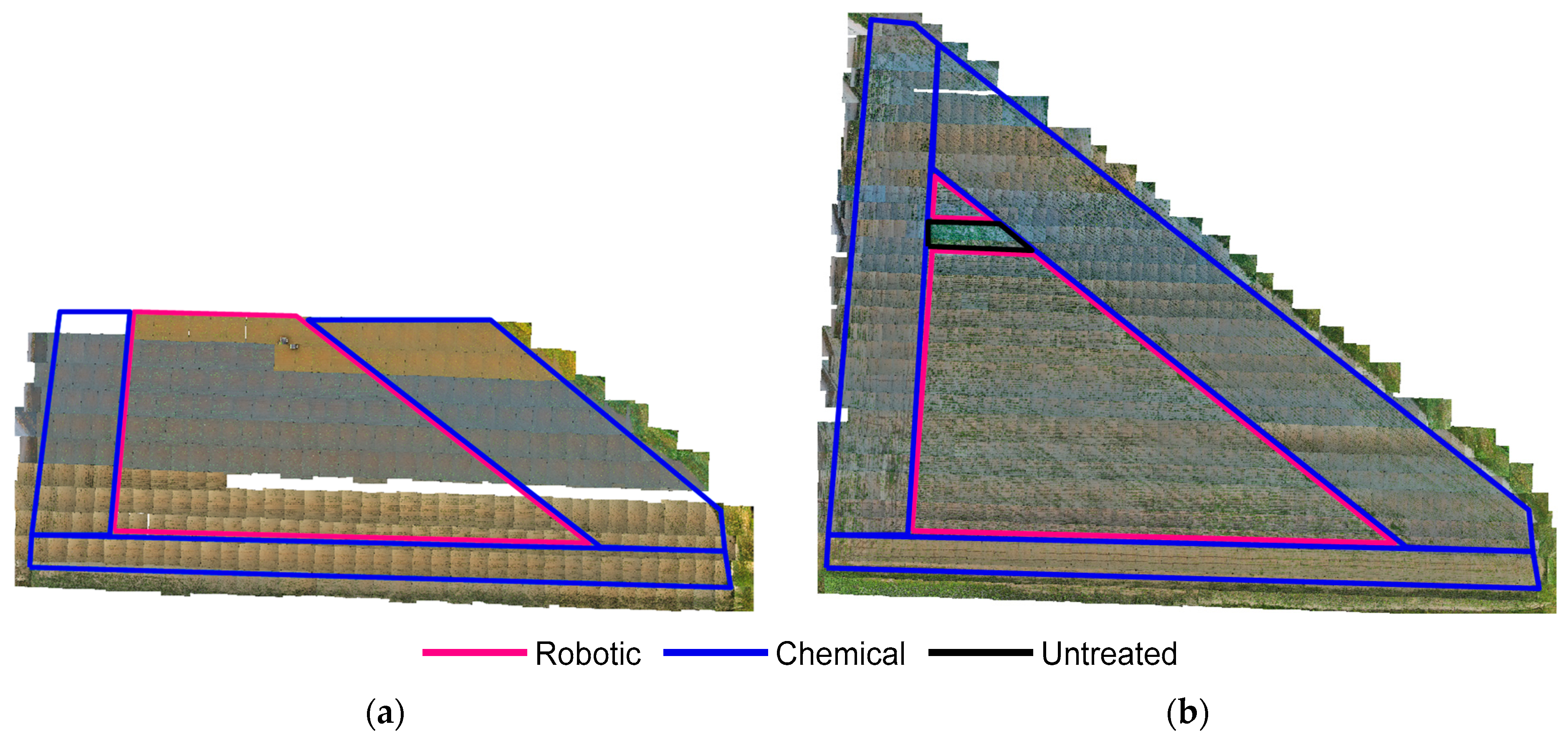

2.4. Field Mapping

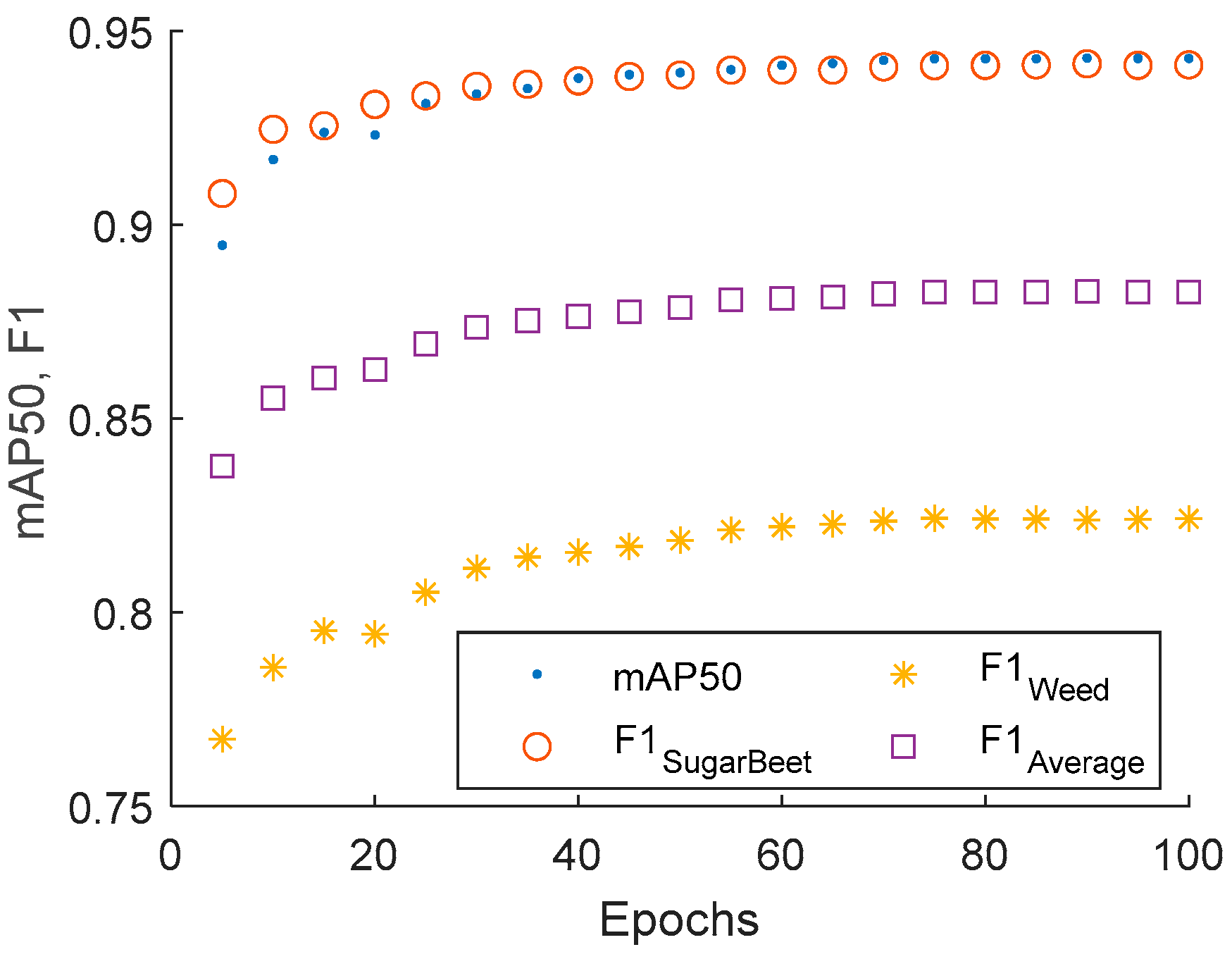

2.5. Plant Detection

2.6. Robot Performance Analysis

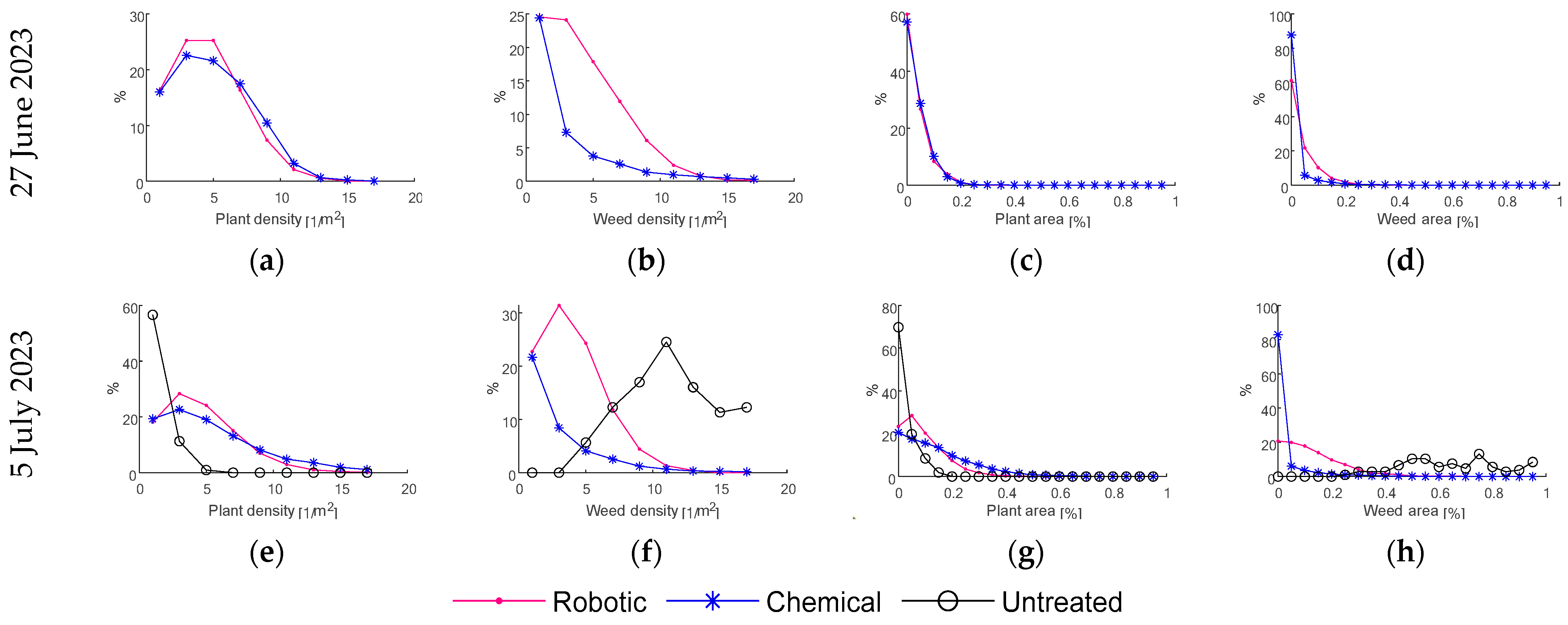

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | Robotically Weeded, 27 June 2023 | Robotically Weeded, 5 July 2023 | Chemically Weeded, 27 June 2023 | Chemically Weeded, 5 July 2023 | Untreated, 27 June 2023 | Untreated, 5 July 2023 |
|---|---|---|---|---|---|---|
| Plant density, 1/m² | 5.0 ± 2.7 | 5.0 ± 2.9 | 5.3 ± 3.0 | 5.7 ± 4.0 | NA | 1.5 ± 1.3 |
| Weed density, 1/m² | 4.3 ± 3.1 | 4.3 ± 2.5 | 1.7 ± 3.4 | 1.4 ± 2.7 | NA | 12.0 ± 3.7 |
| Plant area, % | 5.4 ± 4.6 | 11.4 ± 8.2 | 5.6 ± 4.6 | 17.3 ± 14.2 | NA | 4.4 ± 4.2 |
| Weed area, % | 5.5 ± 5.6 | 15.2 ± 12.7 | 2.2 ± 5.9 | 3.5 ± 9.0 | NA | 69.3 ± 25.6 |
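One way to read the table is to express the weed density and weed area in each treated plot relative to the untreated plot on 5 July 2023, the only date with untreated measurements. The sketch below is an illustrative calculation, not one taken from the paper. It uses only the tabulated mean values and ignores the standard deviations, which are large, so the percentages should be read as rough indications. The names `relative_reduction` and `summarize` are hypothetical helpers introduced here.

```python
# Mean values on 5 July 2023, copied from the table above.
# Assumption: standard deviations are ignored; only the means are compared.
weed_density = {"robotic": 4.3, "chemical": 1.4, "untreated": 12.0}  # 1/m^2
weed_area = {"robotic": 15.2, "chemical": 3.5, "untreated": 69.3}    # %


def relative_reduction(treated: float, control: float) -> float:
    """Return the reduction of `treated` relative to `control`, in percent."""
    return 100.0 * (control - treated) / control


def summarize(values: dict[str, float]) -> dict[str, float]:
    """Compare every treatment with the untreated control (hypothetical helper)."""
    control = values["untreated"]
    return {
        name: round(relative_reduction(value, control), 1)
        for name, value in values.items()
        if name != "untreated"
    }


density_reduction = summarize(weed_density)
area_reduction = summarize(weed_area)

print("Weed density reduction vs. untreated, %:", density_reduction)
print("Weed area reduction vs. untreated, %:", area_reduction)
```

Because the untreated plot has no measurement on 27 June 2023 (NA in the table), the same comparison cannot be made for the earlier date.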

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Palva, R.; Kaila, E.; García-Pascual, B.; Bloch, V. Assessment of the Performance of a Field Weeding Location-Based Robot Using YOLOv8. Agronomy 2024, 14, 2215. https://doi.org/10.3390/agronomy14102215