Research on the Recognition Method of Tobacco Flue-Curing State Based on Bulk Curing Barn Environment

Abstract

1. Introduction

- (1) A color correction matrix was used to process the image data and construct a dataset of tobacco flue-curing state images in a bulk curing barn environment, providing a rich resource for model training and evaluation.

- (2)

- (3)

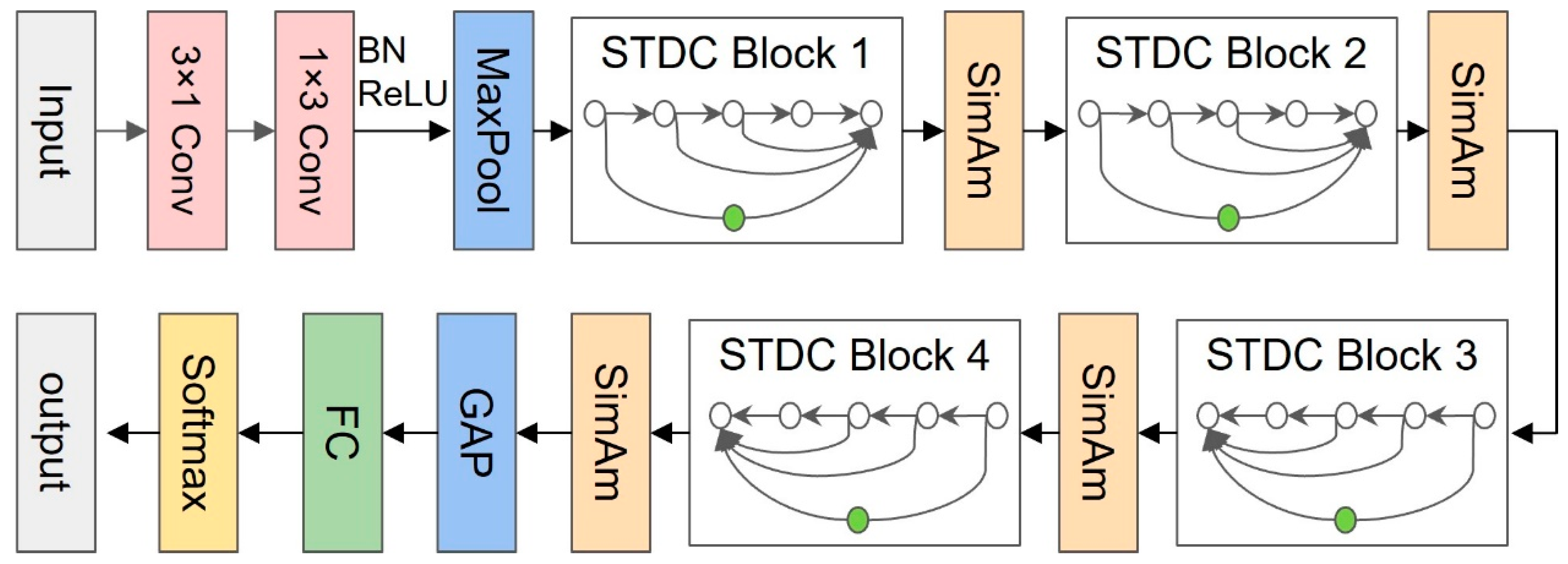

- The input layer of the model uses spatially separable convolution (a 3 × 1 convolution and a 1 × 3 convolution). Combining the SimAm attention module [25] with different dilation rates [26] for the Depthwise Separable Convolution raised the recognition accuracy of the model to 98.71% in the ablation experiment (Section 3.3).
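As a minimal sketch of the spatially separable convolution named in the last item, the snippet below applies a 3 × 1 kernel and then a 1 × 3 kernel to a small single-channel image and checks that the result matches one 3 × 3 kernel built as their outer product. The kernel weights, the test image, and the helper `conv2d_valid` are illustrative assumptions, not values or code from the paper; channels, padding, and learned weights are ignored.

```python
def conv2d_valid(image, kernel):
    """Plain 2D cross-correlation without padding ("valid" mode)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hypothetical integer weights, chosen only so the comparison is exact.
col_kernel = [[1], [2], [1]]   # 3 x 1 kernel: 3 weights
row_kernel = [[1, 0, -1]]      # 1 x 3 kernel: 3 weights

# The equivalent 3 x 3 kernel is the outer product of the two: 9 weights.
full_kernel = [[a[0] * b for b in row_kernel[0]] for a in col_kernel]

# Small synthetic 6 x 6 "image".
image = [[(r * 5 + c) % 7 for c in range(6)] for r in range(6)]

separable = conv2d_valid(conv2d_valid(image, col_kernel), row_kernel)
direct = conv2d_valid(image, full_kernel)

print(separable == direct)
```

The two passes use 6 weights instead of 9. The equivalence holds only for 3 × 3 kernels that factor into an outer product, so a learned 3 × 1 plus 1 × 3 pair is a restricted form of a full 3 × 3 convolution.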

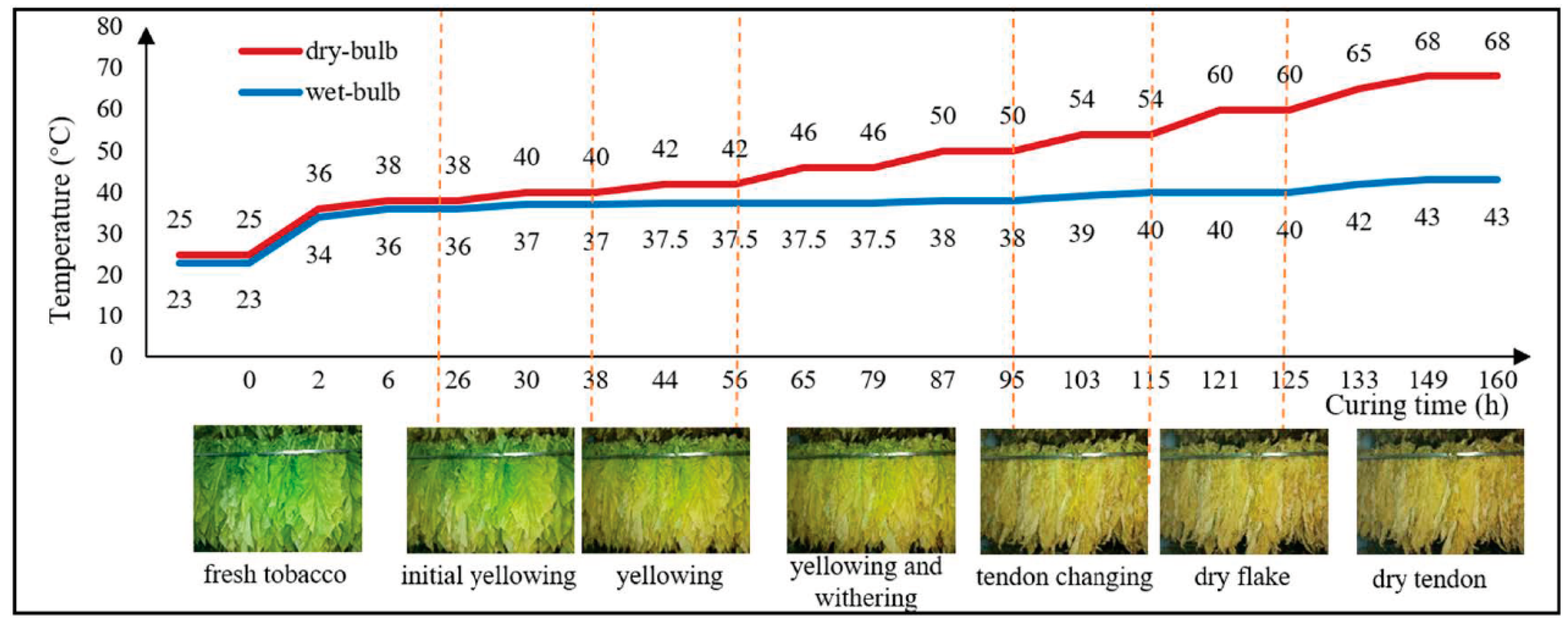
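The color correction step mentioned in contribution (1) can be illustrated with a short sketch: a 3 × 3 color correction matrix maps each camera RGB triple to a corrected RGB triple. The matrix values below are hypothetical, the function name `apply_ccm` is an assumption, and the source does not say how its matrix was estimated or in which color space it was applied.

```python
def apply_ccm(pixel, ccm):
    """Multiply one RGB pixel (0-255) by a 3 x 3 matrix and clip to 0-255."""
    corrected = []
    for row in ccm:
        value = sum(weight * channel for weight, channel in zip(row, pixel))
        corrected.append(max(0, min(255, round(value))))
    return tuple(corrected)


# Hypothetical matrix: each row sums to 1, so neutral grays are preserved
# while saturation is increased. These are not the paper's values.
EXAMPLE_CCM = [
    [1.2, -0.1, -0.1],
    [-0.1, 1.2, -0.1],
    [-0.1, -0.1, 1.2],
]

IDENTITY_CCM = [
    [1, 0, 0],
    [0, 1, 0],
    [0, 0, 1],
]

gray = apply_ccm((128, 128, 128), EXAMPLE_CCM)      # stays neutral
leaf = apply_ccm((180, 160, 60), EXAMPLE_CCM)       # a yellowish pixel
unchanged = apply_ccm((10, 20, 30), IDENTITY_CCM)   # identity leaves it as-is
```

In practice such a matrix is usually fitted from a reference color chart photographed under the same lighting, for example by least squares between measured and reference patch colors.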

2. Materials and Methods

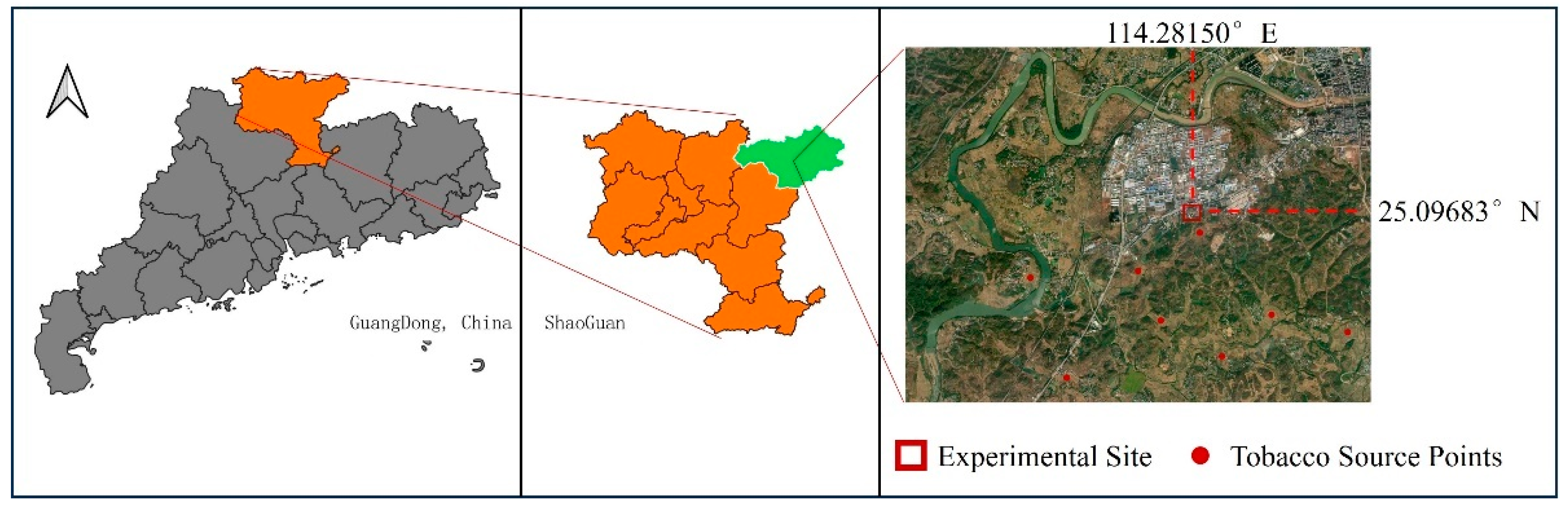

2.1. Image Acquisition

2.2. Building the Dataset

2.3. Building the Model

2.3.1. Improved Short-Term Dense Concatenate Module

2.3.2. Depthwise Separable Convolution

2.3.3. Dilated Convolution

2.3.4. SimAm Attention Module

3. Results

3.1. Test Environment and Evaluation Metrics

3.2. Influence of Color Calibration on Experimental Results

3.3. Ablation Experiment

3.4. Comparison of Results Using Different Attention Modules

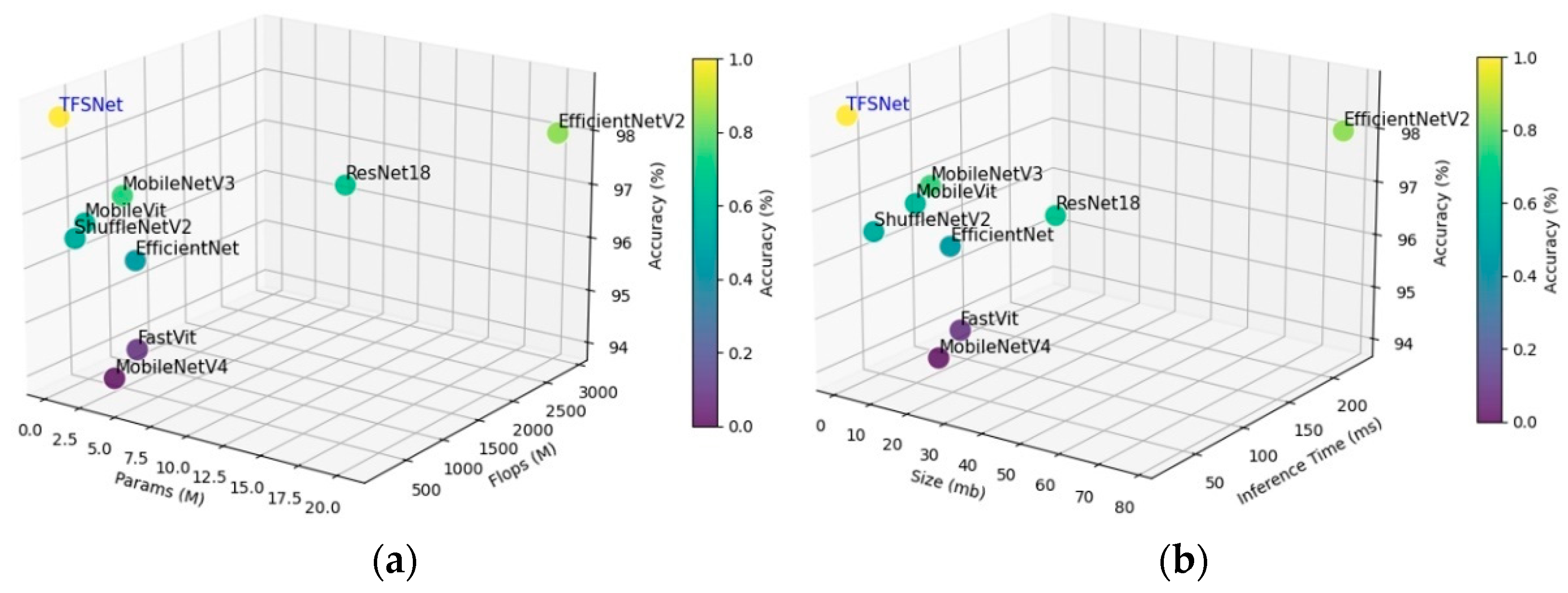

3.5. Comparative Analysis of the Different Models

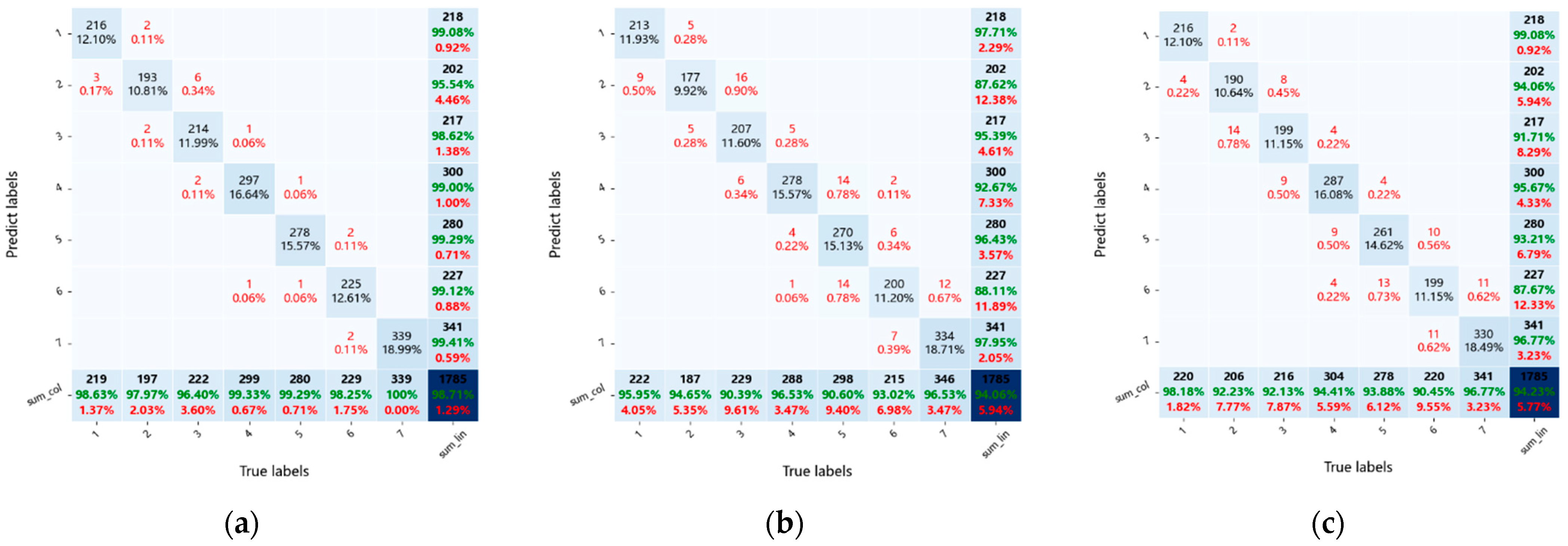

3.6. Results of Identification of Different States of Tobacco Flue-Curing

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| STDC | Short-Term Dense Concatenate |
| SSC | Spatially Separable Convolution |
| DSC | Depthwise Separable Convolution |
| DC | Dilated Convolution |
| TFSNet | Tobacco Flue-Curing State Recognition Network |
| CA | Coordinate Attention |
| SE | Squeeze and Excitation |
| CBAM | Convolutional Block Attention Module |
| ECA | Efficient Channel Attention |
| SimAm | A Simple, Parameter-Free Attention Module |

References

- Fu, L.; Ye, L. China Statistical Yearbook 2023; China Statistics Press: Beijing, China, 2023; pp. 327–404. ISBN 978-7-5230-0190-5.

- Wu, J.; Yang, S.X.; Tian, F. An adaptive neuro-fuzzy approach to bulk tobacco flue-curing control process. Dry. Technol. 2017, 35, 465–477.

- Zhu, W.; Wang, Y.; Chen, L.; Wang, Z.; Li, B.; Wang, B. Effect of two-stage dehydration on retention of characteristic flavor components of flue-cured tobacco in rotary dryer. Dry. Technol. 2016, 34, 1621–1629.

- Lu, X.R.; Li, L.G. The factors of affecting tobacco baking quality by using the three-step technology in Guizhou. Guizhou Agric. Sci. 2009, 7, 41–42.

- Sumner, P.E.; Moore, J.M. Harvesting and Curing Flue-Cured Tobacco; University of Georgia: Athens, GA, USA, 2009.

- Rehman, T.U.; Mahmud, M.S.; Chang, Y.K.; Jin, J.; Shin, J. Current and future applications of statistical machine learning algorithms for agricultural machine vision systems. Comput. Electron. Agric. 2019, 156, 585–605.

- Zou, C.; Hu, X.; Huang, W.; Zhao, G.; Yang, X.; Jin, Y.; Gu, H.; Yan, F.; Li, Y.; Wu, Q.; et al. Different yellowing degrees and the industrial utilization of flue-cured tobacco leaves. Sci. Agric. 2019, 76, 1–9.

- Lu, X.; Zhao, C.; Qin, Y.; Xie, L.; Wang, T.; Wu, Z.; Xu, Z. The application of hyperspectral images in the classification of fresh leaves’ maturity for flue-curing tobacco. Processes 2023, 11, 1249.

- Ma, X.; Shen, J.; Liu, R.; Zhai, H. Choice of tobacco leaf features based on selected probability of particle swarm algorithm. In Proceedings of the 2016 Chinese Control and Decision Conference (CCDC), Yinchuan, China, 28–30 May 2016; pp. 3041–3044.

- Yin, Y.; Xiao, Y.; Yu, H. An image selection method for tobacco leave grading based on image information. Eng. Agric. Environ. Food 2015, 8, 148–154.

- He, Y.; Wang, H.; Zhu, S.; Zeng, T.; Zhuang, Z.; Zuo, Y.; Zhang, K. Method for grade identification of tobacco based on machine vision. Trans. ASABE 2018, 61, 1487–1495.

- Wang, H.; Jiang, H.; Xu, C.; Wang, D.; Yang, C.; Wang, B. Three-Stage Six-Step Flue-Curing Technology for Virginia Tobacco Leaves and Its Application in China. J. Agric. Sci. Technol. A 2016, 6, 232–238.

- Wu, J.; Yang, S.X. Intelligent control of bulk tobacco curing schedule using LS-SVM- and ANFIS-based multi-sensor data fusion approaches. Sensors 2019, 19, 1778.

- Wang, Y.; Qin, L. Research on state prediction method of tobacco curing process based on model fusion. J. Ambient Intell. Humaniz. Comput. 2022, 13, 2951–2961.

- Wang, L.; Cheng, B.; Li, Z.; Liu, T.; Li, J. Intelligent tobacco flue-curing method based on leaf texture feature analysis. Optik 2017, 150, 117–130.

- Condorí, M.; Albesa, F.; Altobelli, F.; Duran, G.; Sorrentino, C. Image processing for monitoring of the cured tobacco process in a bulk-curing stove. Comput. Electron. Agric. 2020, 168, 105113.

- Wu, J.; Yang, S.X.; Tian, F. A novel intelligent control system for flue-curing barns based on real-time image features. Biosyst. Eng. 2014, 123, 77–90.

- Chen, D.; Zhang, Y.; He, Z.; Deng, Y.; Zhang, P.; Hai, W. Feature-reinforced dual-encoder aggregation network for flue-cured tobacco grading. Comput. Electron. Agric. 2023, 210, 107887.

- Lu, M.; Wang, C.; Wu, W.; Zhu, D.; Zhou, Q.; Wang, Z.; Chen, T.; Jiang, S.; Chen, D. Intelligent Grading of Tobacco Leaves Using an Improved Bilinear Convolutional Neural Network. IEEE Access 2023, 11, 68153–68170.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Xin, X.; Gong, H.; Hu, R.; Ding, X.; Pang, S.; Che, Y. Intelligent large-scale flue-cured tobacco grading based on deep densely convolutional network. Sci. Rep. 2023, 13, 11119.

- Wu, J.; Yang, S.X. Modeling of the bulk tobacco flue-curing process using a deep learning-based method. IEEE Access 2021, 9, 140424–140436.

- Fan, M.; Lai, S.; Huang, J.; Wei, X.; Chai, Z.; Luo, J.; Wei, X. Rethinking bisenet for real-time semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 9716–9725.

- Sifre, L.; Mallat, S. Rigid-motion scattering for texture classification. arXiv 2014, arXiv:1403.1687.

- Yang, L.; Zhang, R.Y.; Li, L.; Xie, X. Simam: A simple, parameter-free attention module for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 11863–11874.

- Yu, F.; Koltun, V.; Funkhouser, T. Dilated residual networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 472–480.

- Weicai, Z.; Miaowen, Q.; Huihong, L.; Xiuling, J.; Shuhua, C. Breeding and Selection of Flue-cured Tobacco New Variety Yueyan97 and its Characteristics. Chin. Tob. Sci. 2010, 31, 10–14.

- Kucuk, A.; Finlayson, G.D.; Mantiuk, R.; Ashraf, M. Performance Comparison of Classical Methods and Neural Networks for Colour Correction. J. Imaging 2023, 9, 214.

- Finlayson, G.D.; Mohammadzadeh Darrodi, M.; Mackiewicz, M. The alternating least squares technique for nonuniform intensity color correction. Color Res. Appl. 2015, 40, 232–242.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Tan, M.; Le, Q. Efficientnetv2: Smaller models and faster training. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 10096–10106.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for mobilenetv3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Qin, D.; Leichner, C.; Delakis, M.; Fornoni, M.; Luo, S.; Yang, F.; Wang, W.; Banbury, C.; Ye, C.; Akin, B.; et al. MobileNetV4-Universal Models for the Mobile Ecosystem. arXiv 2024, arXiv:2404.10518.

- Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. Shufflenet v2: Practical guidelines for efficient cnn architecture design. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 116–131.

- Mehta, S.; Rastegari, M. Mobilevit: Light-weight, general-purpose, and mobile-friendly vision transformer. arXiv 2021, arXiv:2110.02178.

- Vasu, P.K.A.; Gabriel, J.; Zhu, J.; Tuzel, O.; Ranjan, A. FastViT: A fast hybrid vision transformer using structural reparameterization. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 5785–5795.

- Songfeng, W.; Aihua, W.; Jinliang, W.; Zhikun, G.; Sen, C.; Fushan, S.; Xiaohua, L.; Xiuhong, X.; Chuanyi, W.; Jie, R. Effect of Rising Temperature Rate at Leaf-drying Stage on Physiological-biochemistry Characters and Quality during Bulk Curing Process of Tobacco Leaves. Chin. Tob. Sci. 2012, 33, 48–53.

- Zhang, B.L.; Bai, T.; Han, G. Analysis on the threshold of heating rate during leaf-drying early stage and bulk curing on quality of upper flue-cured tobacco leaves. Chin. Tob. Sci. 2023, 51, 160–164.

| State | Features |
|---|---|
| fresh tobacco | One-third of the leaves turn yellow with green veins at all levels. |
| initial yellowing | About 80% of the leaves turn yellow; the leaf base, main veins, and secondary branch veins contain green, and the leaves lose water and become soft. |
| yellowing | 90% of the leaves have turned yellow and are fully withered and collapsed; more than 1/2 of the main veins have softened. |
| yellowing and withering | Yellow flakes and yellow tendons form on the surface of the tobacco leaves. At the end of the 45 °C–47 °C period, leaf dehydration reaches the hooked tips and curled edges. At the end of the 48 °C–50 °C period, the leaf blades are dehydrated, and 1/3 of the leaf surface has dried to form small rolls. |
| tendon changing | Tobacco leaves are dehydrated to more than 2/3, with dry, large-rolled leaves. |
| dry flake | Tobacco leaf blades are completely dry, and 1/3 to 1/2 of the main veins are dry. |
| dry tendon | The main veins of the tobacco are sufficiently dry throughout the curing barn. |

| States | Precision P1 (%) | Precision P2 (%) | Recall R1 (%) | Recall R2 (%) | F1 Score F1-1 (%) | F1 Score F1-2 (%) |
|---|---|---|---|---|---|---|
| S1 | 94.0 | 100.0 | 100.0 | 96.3 | 96.9 | 98.1 |
| S2 | 89.5 | 92.9 | 93.0 | 97.0 | 91.2 | 94.9 |
| S3 | 95.5 | 96.3 | 87.1 | 96.8 | 91.1 | 96.5 |
| S4 | 97.3 | 99.0 | 97.3 | 97.7 | 97.3 | 98.3 |
| S5 | 96.7 | 96.8 | 95.7 | 97.5 | 96.2 | 97.1 |
| S6 | 93.9 | 96.5 | 95.2 | 96.5 | 94.5 | 96.5 |
| S7 | 98.0 | 99.1 | 99.4 | 99.1 | 98.7 | 99.1 |
| Mean | 95.6 | 97.2 | 95.4 | 97.3 | 95.5 | 97.3 |
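The F1 columns in the table above are consistent with the standard harmonic mean of precision and recall. A minimal sketch, assuming that standard definition (the source text here does not show its formula), recomputes a few per-state entries from the P1/R1 and P2/R2 columns:

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs copied from the table above.
pairs = {
    "S1 (P1, R1)": (94.0, 100.0),
    "S1 (P2, R2)": (100.0, 96.3),
    "S2 (P1, R1)": (89.5, 93.0),
    "S3 (P1, R1)": (95.5, 87.1),
}

for label, (p, r) in pairs.items():
    print(label, round(f1_score(p, r), 1))
```

Rounded to one decimal, these give 96.9, 98.1, 91.2, and 91.1, matching the corresponding F1-1 and F1-2 entries.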

| Model | Accuracy/% | Params | FLOPs/M | Size/MB | Inference Time/ms |
|---|---|---|---|---|---|
| STDC + 3 × 3 Conv | 97.42 | 763,495 | 338.29 | 2.91 | 20 |
| STDC + SSC | 97.87 | 756,610 | 343.67 | 2.89 | 24 |
| STDC + DC | 98.32 | 757,159 | 370.52 | 2.89 | 28 |
| STDC + DSC | 96.86 | 203,607 | 162.24 | 0.78 | 16 |
| STDC + SSC + DC | 98.26 | 756,622 | 344.35 | 2.89 | 30 |
| STDC + SSC + DSC | 97.19 | 203,058 | 172.02 | 0.78 | 19 |
| STDC + DSC + DC | 97.53 | 203,607 | 162.24 | 0.78 | 18 |
| STDC + SSC + DSC + DC | 97.87 | 203,058 | 172.39 | 0.78 | 21 |
| STDC + SSC + DSC + DC + SimAm | 98.71 | 203,058 | 172.39 | 0.78 | 21 |
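The drop in parameter count between the 3 × 3 convolution row and the DSC rows follows from how a depthwise separable convolution factors a standard convolution. The sketch below shows the per-layer arithmetic only; the channel sizes are hypothetical and are not taken from TFSNet, and bias terms are ignored.

```python
def standard_conv_params(c_in, c_out, k=3):
    """Weights in a standard k x k convolution (no bias)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k=3):
    """Weights in a depthwise k x k conv plus a pointwise 1 x 1 conv (no bias)."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 conv that mixes channels
    return depthwise + pointwise


# Hypothetical layer: 64 input channels, 128 output channels.
standard = standard_conv_params(64, 128)
separable = depthwise_separable_params(64, 128)
print(standard, separable, round(standard / separable, 1))
```

For this hypothetical layer the standard convolution has 73,728 weights and the separable version 8,768, roughly an 8.4-fold reduction. A dilation rate changes the sampling grid of a kernel but not its number of weights, which is consistent with the STDC + DSC and STDC + DSC + DC rows sharing the same parameter count.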

| Model | Accuracy/% | Precision/% | Recall/% | Params | FLOPs/M | Size/MB | Inference Time/ms |
|---|---|---|---|---|---|---|---|
| STDC + SSC + DSC + DC + SE | 97.65 | 97.47 | 97.57 | 203,058 | 172.39 | 0.78 | 24 |
| STDC + SSC + DSC + DC + CA | 97.59 | 97.51 | 97.47 | 280,002 | 173.77 | 1.07 | 27 |
| STDC + SSC + DSC + DC + CBAM | 96.80 | 96.59 | 96.60 | 254,650 | 173.58 | 1.17 | 31 |
| STDC + SSC + DSC + DC + ECA | 97.14 | 97.08 | 97.10 | 203,070 | 173.07 | 0.78 | 26 |
| STDC + SSC + DSC + DC + SimAm | 98.71 | 98.56 | 98.57 | 203,058 | 172.39 | 0.78 | 21 |

| Model | Accuracy/% | Precision/% | Recall/% | Params/M | FLOPs/M | Size/MB | Inference Time/ms |
|---|---|---|---|---|---|---|---|
| ResNet18 | 97.14 | 97.03 | 97.14 | 11.18 | 1823.53 | 42.65 | 69 |
| EfficientNet | 96.18 | 95.93 | 96.16 | 4.02 | 411.56 | 15.32 | 66 |
| EfficientNetV2 | 97.98 | 97.83 | 97.87 | 20.32 | 2924.08 | 77.53 | 231 |
| MobileNetV3 | 97.48 | 97.85 | 97.33 | 4.23 | 228.38 | 16.15 | 45 |
| MobileNetV4 | 94.00 | 94.20 | 93.54 | 2.99 | 305.72 | 11.4 | 67 |
| ShuffleNetV2 | 96.58 | 96.49 | 96.56 | 1.26 | 151.69 | 4.81 | 30 |
| FastViT | 94.39 | 94.17 | 94.19 | 3.26 | 550.3 | 12.43 | 85 |
| MobileViT | 96.80 | 96.64 | 96.76 | 1.33 | 263.44 | 5.08 | 69 |
| TFSNet | 98.71 | 98.56 | 98.57 | 0.203 | 172.39 | 0.78 | 21 |
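The Size column in the table above tracks the Params column closely. A rough sketch, assuming weights are stored as 32-bit floats and sizes are reported in binary megabytes (neither is stated in the source), estimates model size from the parameter count alone:

```python
BYTES_PER_FLOAT32 = 4


def estimated_size_mib(num_params):
    """Approximate on-disk size in MiB for float32 weights only."""
    return num_params * BYTES_PER_FLOAT32 / (1024 ** 2)


# Parameter counts taken from the tables above.
resnet18 = estimated_size_mib(11.18e6)   # table reports 42.65
tfsnet = estimated_size_mib(203_058)     # table reports 0.78

print(round(resnet18, 2), round(tfsnet, 2))
```

The estimate reproduces the ResNet18 entry and comes within about 0.01 of the TFSNet entry. Small gaps are expected because saved checkpoints can also hold non-trainable buffers and metadata.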


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xiong, J.; Hou, Y.; Wang, H.; Tang, K.; Liao, K.; Yao, Y.; Liu, L.; Zhang, Y. Research on the Recognition Method of Tobacco Flue-Curing State Based on Bulk Curing Barn Environment. Agronomy 2024, 14, 2347. https://doi.org/10.3390/agronomy14102347
