Leveraging Zero-Shot Detection Mechanisms to Accelerate Image Annotation for Machine Learning in Wild Blueberry (Vaccinium angustifolium Ait.)

Abstract

1. Introduction

2. Materials and Methods

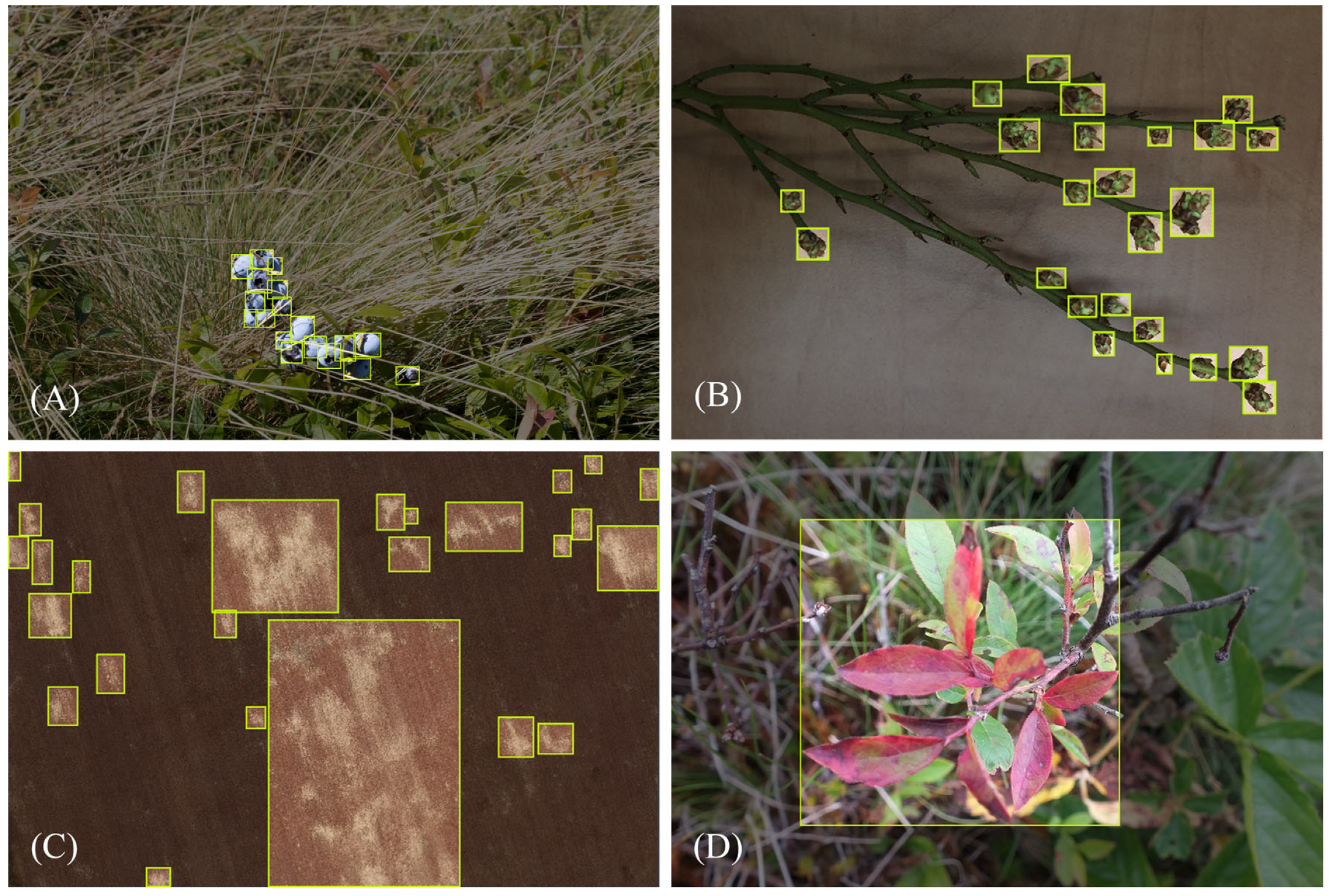

2.1. Dataset Preparation

2.2. Model Selection

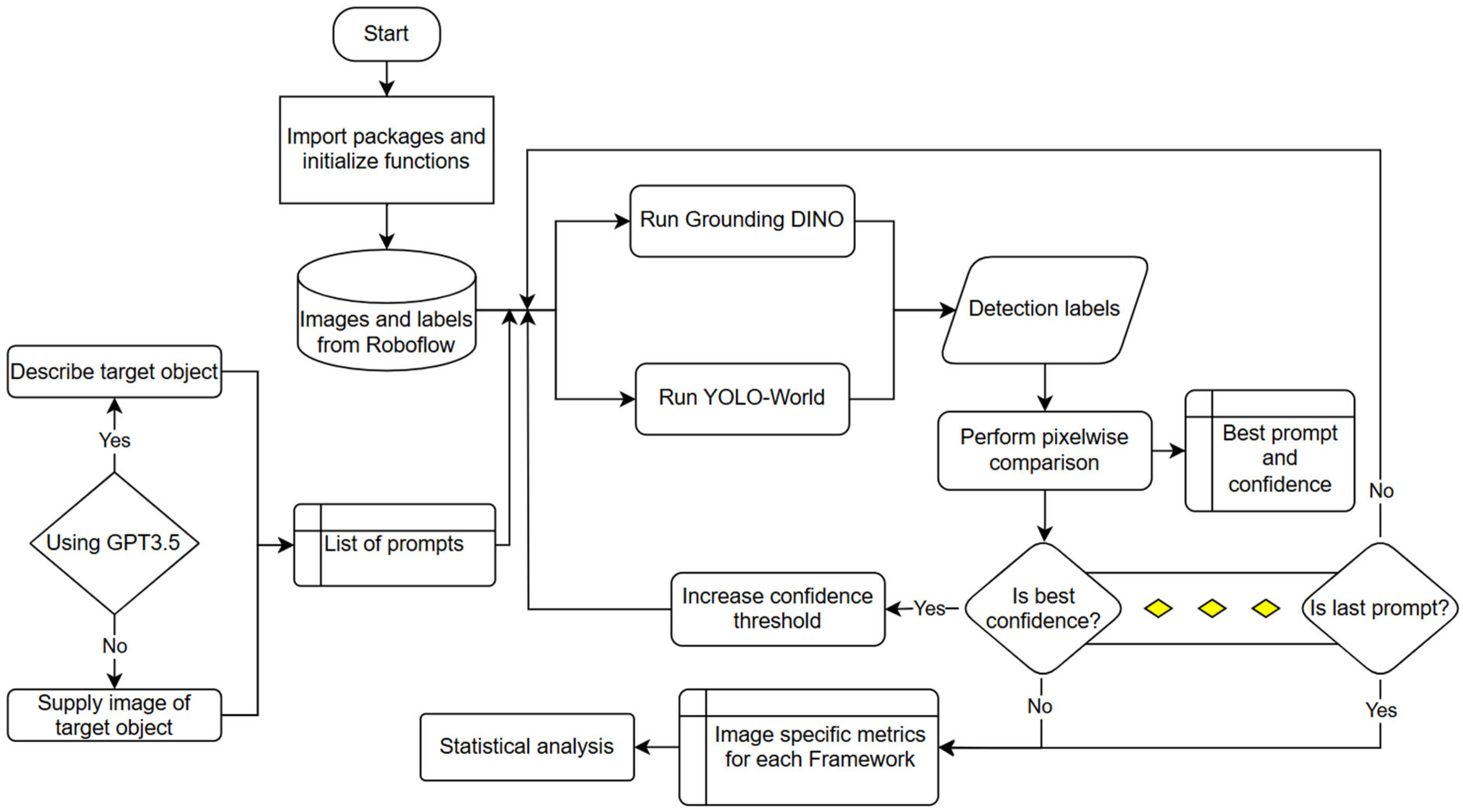

2.3. Evaluation Procedure

2.4. Statistical Analysis

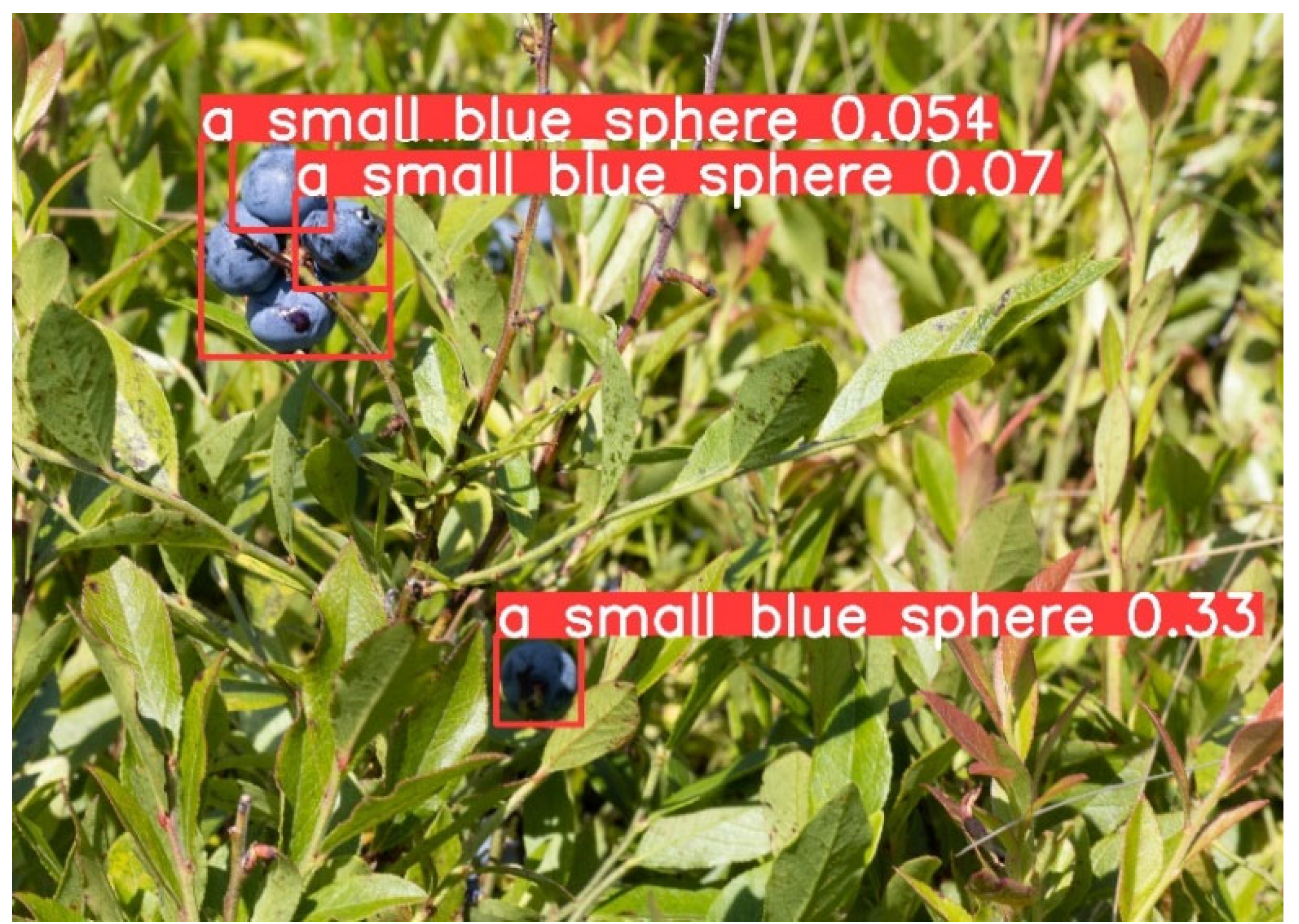

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Dataset | Field | Latitude | Longitude | Date |
|---|---|---|---|---|
| Red leaf disease | 1 | 45.500750 | −63.107650 | 2 June 2022 |
| Ripe wild blueberries | 2 | 45.440839 | −63.542934 | 17 August 2023 |
| Hair fescue | 3 | 45.913421 | −64.472220 | 8 May 2024 |
| Hair fescue | 4 | 45.763845 | −64.486173 | 8 May 2024 |
| Hair fescue | 5 | 45.492640 | −62.988993 | 5 May 2024 |
| Hair fescue | 6 | 45.405000 | −63.670521 | 10 May 2024 |
| Hair fescue | 7 | 45.481963 | −63.574070 | 10 May 2024 |
| Wild blueberry buds | 8 | 45.493991 | −62.990759 | 13 May 2024 |
| Wild blueberry buds | 9 | 45.472361 | −63.631111 | 13 May 2024 |

| Dataset | Metric | Grounding DINO | YOLO-World | p-Value |
|---|---|---|---|---|
| Ripe wild blueberries | IoU | 0.642 ± 0.148 | 0.516 ± 0.114 | <0.001 |
| Ripe wild blueberries | Precision | 0.689 ± 0.146 | 0.648 ± 0.111 | 0.038 |
| Ripe wild blueberries | Recall | 0.901 ± 0.091 | 0.733 ± 0.161 | <0.001 |
| Ripe wild blueberries | F1 score | 0.772 ± 0.108 | 0.673 ± 0.099 | <0.001 |
| Wild blueberry buds | IoU | 0.629 ± 0.161 | 0.408 ± 0.169 | <0.001 |
| Wild blueberry buds | Precision | 0.682 ± 0.175 | 0.535 ± 0.217 | <0.001 |
| Wild blueberry buds | Recall | 0.919 ± 0.135 | 0.709 ± 0.245 | <0.001 |
| Wild blueberry buds | F1 score | 0.760 ± 0.125 | 0.558 ± 0.176 | <0.001 |
| Red leaf disease | IoU | 0.921 ± 0.135 | 0.567 ± 0.286 | 0.020 |
| Red leaf disease | Precision | 0.923 ± 0.134 | 0.568 ± 0.286 | 0.020 |
| Red leaf disease | Recall | 0.998 ± 0.002 | 0.997 ± 0.004 | 0.518 |
| Red leaf disease | F1 score | 0.954 ± 0.083 | 0.686 ± 0.252 | 0.030 |
| Hair fescue | IoU | 0.735 ± 0.213 | 0.232 ± 0.106 | <0.001 |
| Hair fescue | Precision | 0.802 ± 0.215 | 0.252 ± 0.133 | <0.001 |
| Hair fescue | Recall | 0.912 ± 0.129 | 0.874 ± 0.146 | 0.302 |
| Hair fescue | F1 score | 0.832 ± 0.142 | 0.366 ± 0.136 | <0.001 |
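
The four metrics in the table above are closely related, and a short sketch may help when reading them side by side. This section does not reproduce the evaluation procedure, so the Python below assumes the textbook definitions based on true positives (TP), false positives (FP), and false negatives (FN). The `Counts` container, the helper names, and the example counts are hypothetical and are not taken from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Counts:
    """Hypothetical per-image totals of true positives, false positives, and false negatives."""
    tp: float
    fp: float
    fn: float


def safe_div(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def metrics(c: Counts) -> dict[str, float]:
    """Compute IoU, precision, recall, and F1 from TP/FP/FN totals (assumed definitions)."""
    precision = safe_div(c.tp, c.tp + c.fp)
    recall = safe_div(c.tp, c.tp + c.fn)
    return {
        "iou": safe_div(c.tp, c.tp + c.fp + c.fn),
        "precision": precision,
        "recall": recall,
        "f1": safe_div(2 * precision * recall, precision + recall),
    }


if __name__ == "__main__":
    example = Counts(tp=90, fp=40, fn=10)  # made-up counts for illustration only
    for name, value in metrics(example).items():
        print(f"{name}: {value:.3f}")
```

Under these definitions, IoU approaches precision as recall approaches 1, because the FN term in the IoU denominator vanishes. Whether the reported values were computed per box or per pixel is not stated here, so the sketch should be read as an aid to interpretation rather than a reconstruction of the authors' pipeline.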

| Dataset | Manual Annotation (s/Image) | Grounding DINO (s/Image) | YOLO-World (s/Image) |
|---|---|---|---|
| Ripe wild blueberries | 91.0 ± 53.3 a | 25.2 ± 25.0 b | 82.2 ± 44.2 ab |
| Wild blueberry buds | 57.7 ± 12.7 a | 24.8 ± 11.2 b | 40.3 ± 19.9 ab |
| Red leaf disease | 24.7 ± 4.7 a | 4.3 ± 2.1 c | 13.2 ± 5.0 b |
| Hair fescue | 21.8 ± 6.4 a | 6.8 ± 3.1 b | 28.2 ± 4.4 a |
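
One way to read the timing table above is as a relative saving over manual annotation. The sketch below computes that percentage from the mean times copied from the table. The percentages it prints are derived arithmetic, not figures reported in this section, and the dictionary keys and function names are hypothetical.

```python
# Mean annotation times in seconds per image, copied from the table above.
MEAN_SECONDS = {
    "Ripe wild blueberries": {"manual": 91.0, "grounding_dino": 25.2, "yolo_world": 82.2},
    "Wild blueberry buds": {"manual": 57.7, "grounding_dino": 24.8, "yolo_world": 40.3},
    "Red leaf disease": {"manual": 24.7, "grounding_dino": 4.3, "yolo_world": 13.2},
    "Hair fescue": {"manual": 21.8, "grounding_dino": 6.8, "yolo_world": 28.2},
}


def percent_reduction(manual: float, assisted: float) -> float:
    """Percent of manual time saved; a negative value means the assisted workflow was slower."""
    return 100.0 * (manual - assisted) / manual


def summarize(times: dict[str, dict[str, float]]) -> dict[str, dict[str, float]]:
    """Return the percent reduction versus manual annotation for every non-manual method."""
    return {
        dataset: {
            method: percent_reduction(row["manual"], seconds)
            for method, seconds in row.items()
            if method != "manual"
        }
        for dataset, row in times.items()
    }


if __name__ == "__main__":
    for dataset, savings in summarize(MEAN_SECONDS).items():
        parts = ", ".join(f"{method}: {value:+.1f}%" for method, value in savings.items())
        print(f"{dataset}: {parts}")
```

Because these are ratios of means, they ignore the spread shown by the ± values, which is large for some datasets. The significance letters in the table remain the appropriate basis for judging whether a difference is meaningful.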

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mullins, C.C.; Esau, T.J.; Zaman, Q.U.; Toombs, C.L.; Hennessy, P.J. Leveraging Zero-Shot Detection Mechanisms to Accelerate Image Annotation for Machine Learning in Wild Blueberry (Vaccinium angustifolium Ait.). Agronomy 2024, 14, 2830. https://doi.org/10.3390/agronomy14122830
