Algorithm for Corn Crop Row Recognition during Different Growth Stages Based on ST-YOLOv8s Network

Abstract

1. Introduction

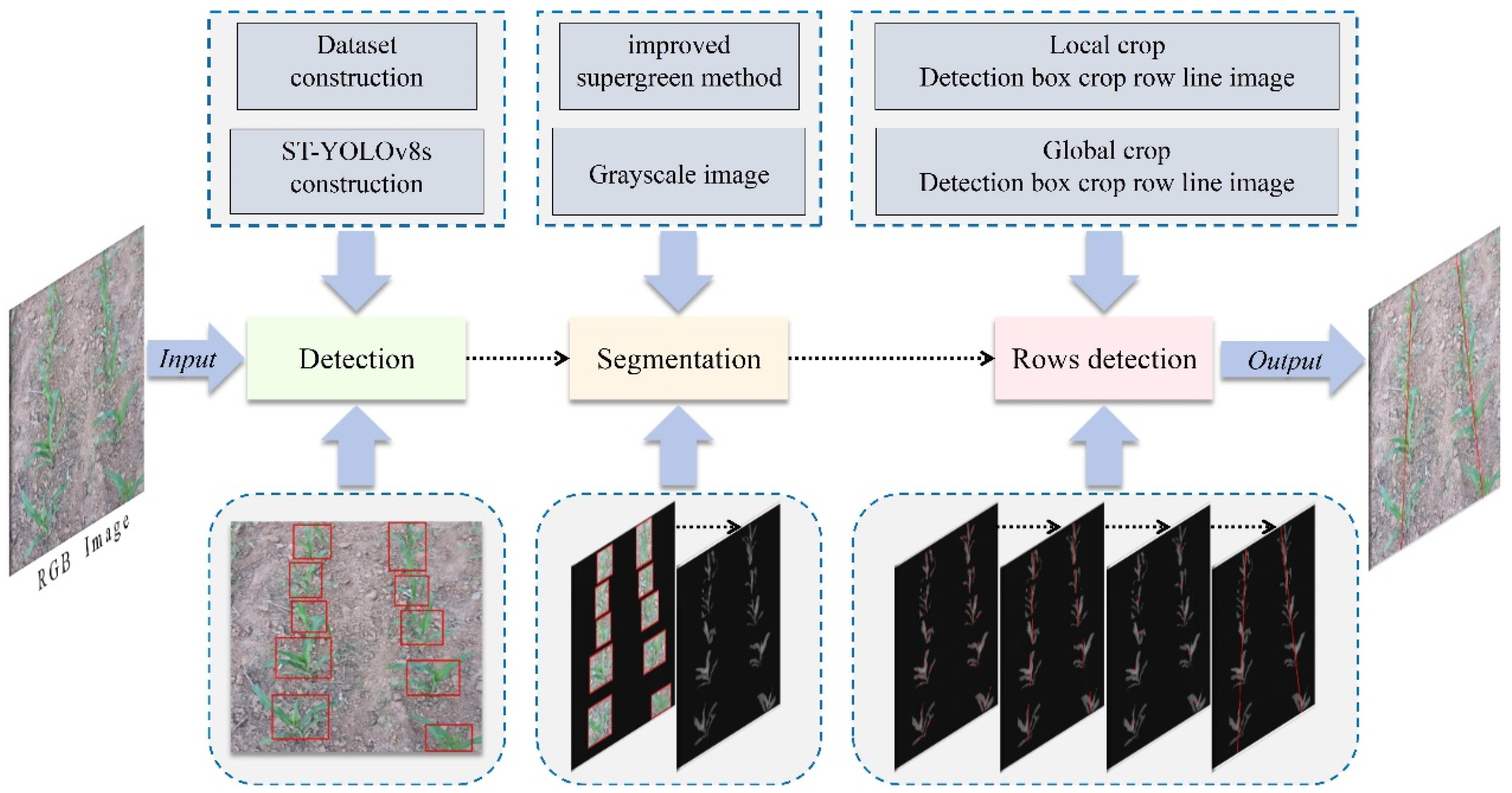

2. Materials and Methods

2.1. Dataset Construction

2.2. Model Construction

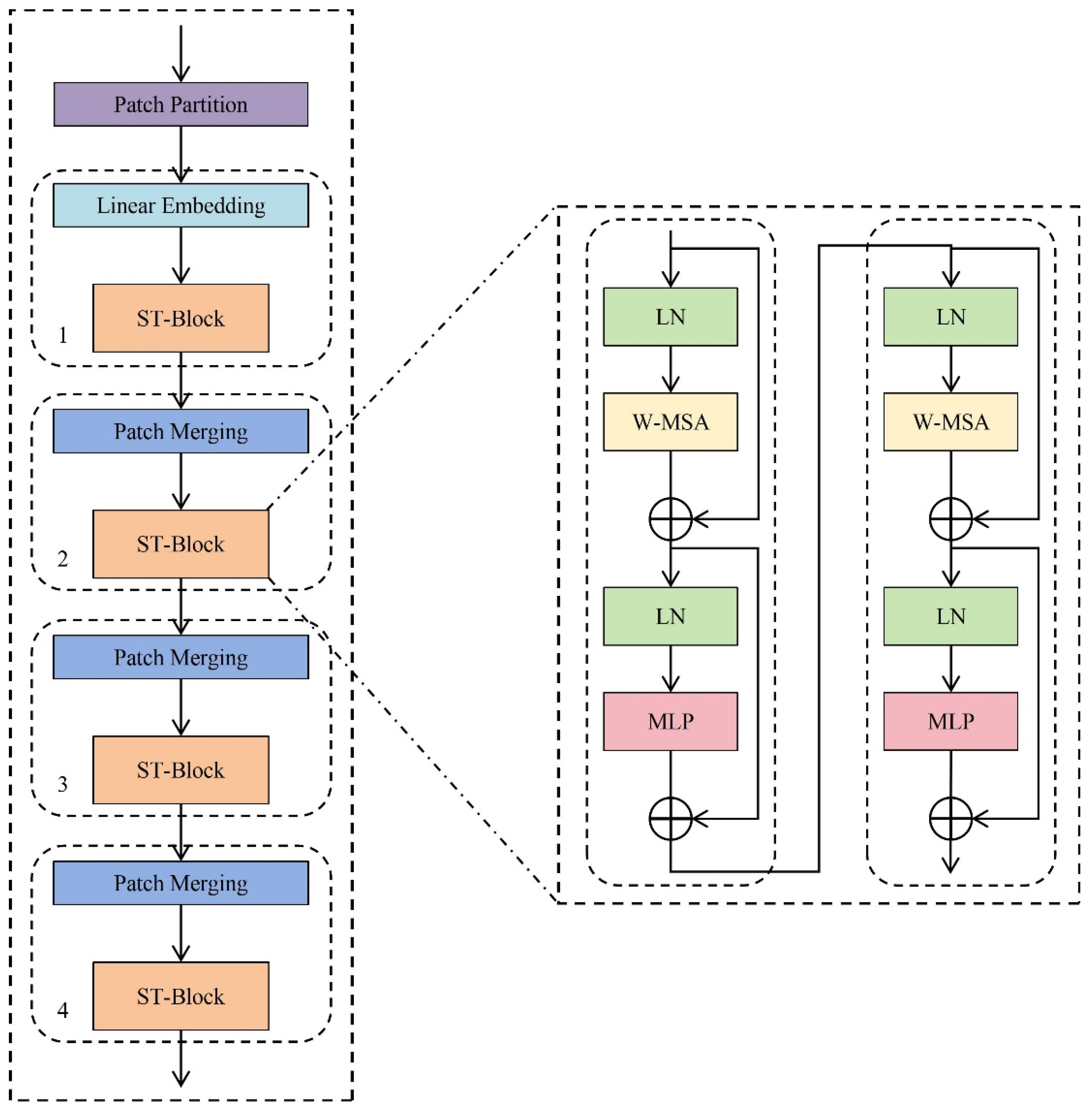

2.2.1. Swin Transformer Model Construction

2.2.2. ST-YOLOv8s Model Construction

2.3. Improved Supergreen Method

2.4. Local–Global Detection Method

- (a) First, based on the crop row segmentation results, the left and right edge centerline method is used to locate the local crop row feature points. The coordinates of a local crop row feature point are (xk, yk). xk is defined in Equation (2), where xi and xj are the horizontal coordinates of the points where the centerline of each horizontal strip intersects the edges of the crop row. An intersection point is identified by a change in gray value: where the centerline crosses an edge of the crop row, the gray value changes from 0 to 1.9G-R-B or from 1.9G-R-B to 0, and the edge point at that position is taken as the intersection point. yk is the vertical coordinate of these intersection points.

- (b) Second, the local crop row centerline is fitted to the local feature points using the least squares method.

- (c) Next, the local crop row line detection results are used to locate the global crop row feature points. The coordinates of a global crop row feature point are (xg, yg), where xg and yg are defined in Equations (3) and (4), respectively; xe and xf are the horizontal coordinates of the endpoints of the local crop row line, and ye and yf are the vertical coordinates of those endpoints.

- (d) Finally, the global crop row centerline is fitted to the global feature points using the least squares method.
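Steps (a) to (d) can be illustrated with a minimal NumPy sketch. It is not the authors' implementation, and it rests on several assumptions because Equations (2) to (4) are not reproduced in this text: xk is taken as the midpoint of xi and xj, (xg, yg) is taken as the midpoint of the two endpoints of a local line, negative 1.9G-R-B responses are clipped to 0, each local region contains a single crop row, and lines are fitted as x = a·y + b because crop rows run roughly vertically in the image. All function names are hypothetical.

```python
import numpy as np

def improved_exg(rgb):
    """Gray image 1.9G - R - B; clipping negatives to 0 is an assumption."""
    r, g, b = (rgb[..., i].astype(float) for i in range(3))
    return np.clip(1.9 * g - r - b, 0.0, None)

def strip_feature_points(gray, n_strips):
    """Step (a): one (xk, yk) point per horizontal strip.

    xi and xj are the first and last nonzero pixels on the strip centerline,
    i.e. the 0 -> nonzero and nonzero -> 0 gray-value transitions, assuming
    a single crop row in the region. xk = (xi + xj) / 2 is assumed.
    """
    bounds = np.linspace(0, gray.shape[0], n_strips + 1).astype(int)
    points = []
    for top, bottom in zip(bounds[:-1], bounds[1:]):
        yk = (top + bottom) // 2              # centerline row of this strip
        nonzero = np.flatnonzero(gray[yk] > 0)
        if nonzero.size == 0:                 # no crop pixels on this centerline
            continue
        xi, xj = nonzero[0], nonzero[-1]
        points.append(((xi + xj) / 2.0, float(yk)))
    return np.array(points)

def fit_line(points):
    """Steps (b) and (d): least-squares fit of x = a*y + b."""
    a, b = np.polyfit(points[:, 1], points[:, 0], 1)
    return a, b

def segment_midpoint(a, b, y_top, y_bottom):
    """Step (c): midpoint of the endpoints (xe, ye), (xf, yf) of a local line (assumed form)."""
    xe, xf = a * y_top + b, a * y_bottom + b
    return (xe + xf) / 2.0, (y_top + y_bottom) / 2.0

# Synthetic local region: a 7-pixel-wide crop row centered on x = y + 10.
gray = np.zeros((40, 60))
for y in range(40):
    gray[y, y + 7:y + 14] = 1.0

local_pts = strip_feature_points(gray, n_strips=4)
a, b = fit_line(local_pts)
xg, yg = segment_midpoint(a, b, 0, 39)
print(local_pts, (a, b), (xg, yg))
```

In a full pipeline, the midpoints obtained from all local segments belonging to the same crop row would be stacked into one array and passed to `fit_line` again to obtain the global centerline.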

3. Results and Discussion

3.1. Experimental Platform

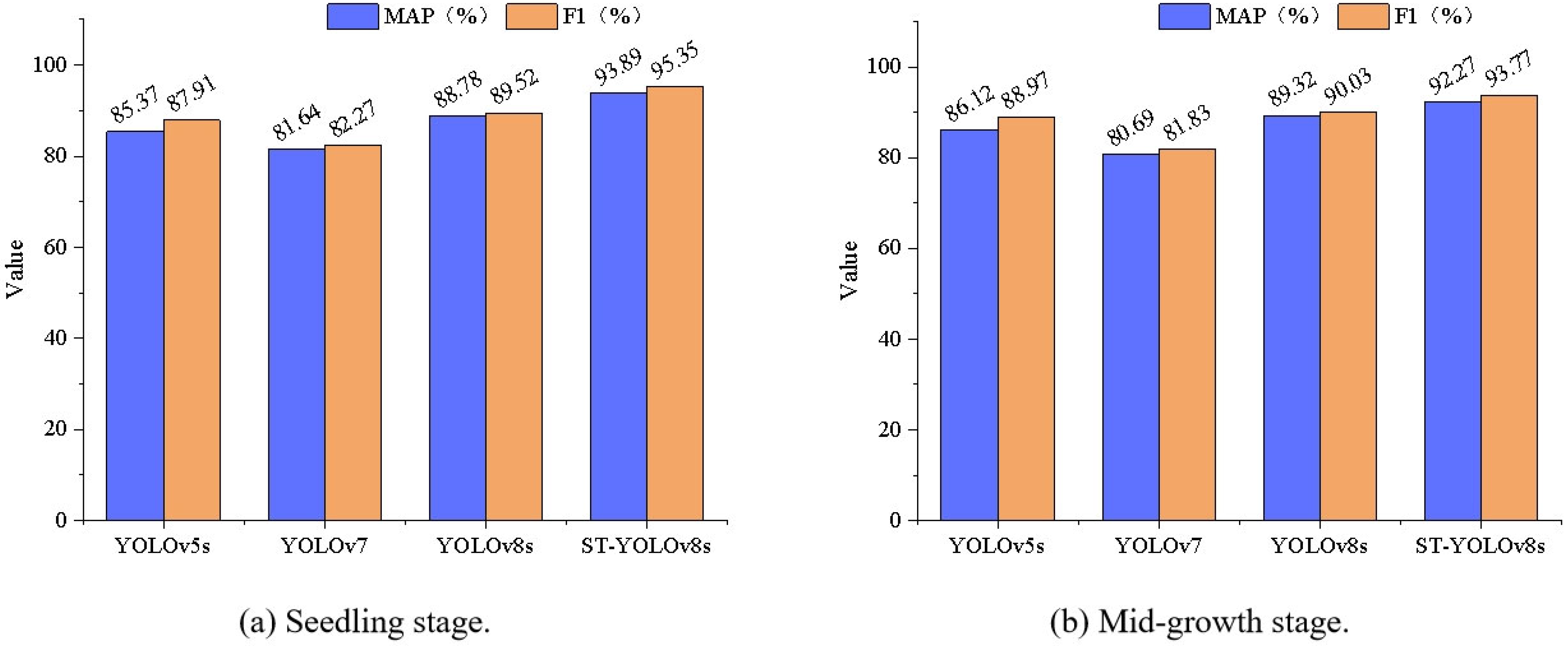

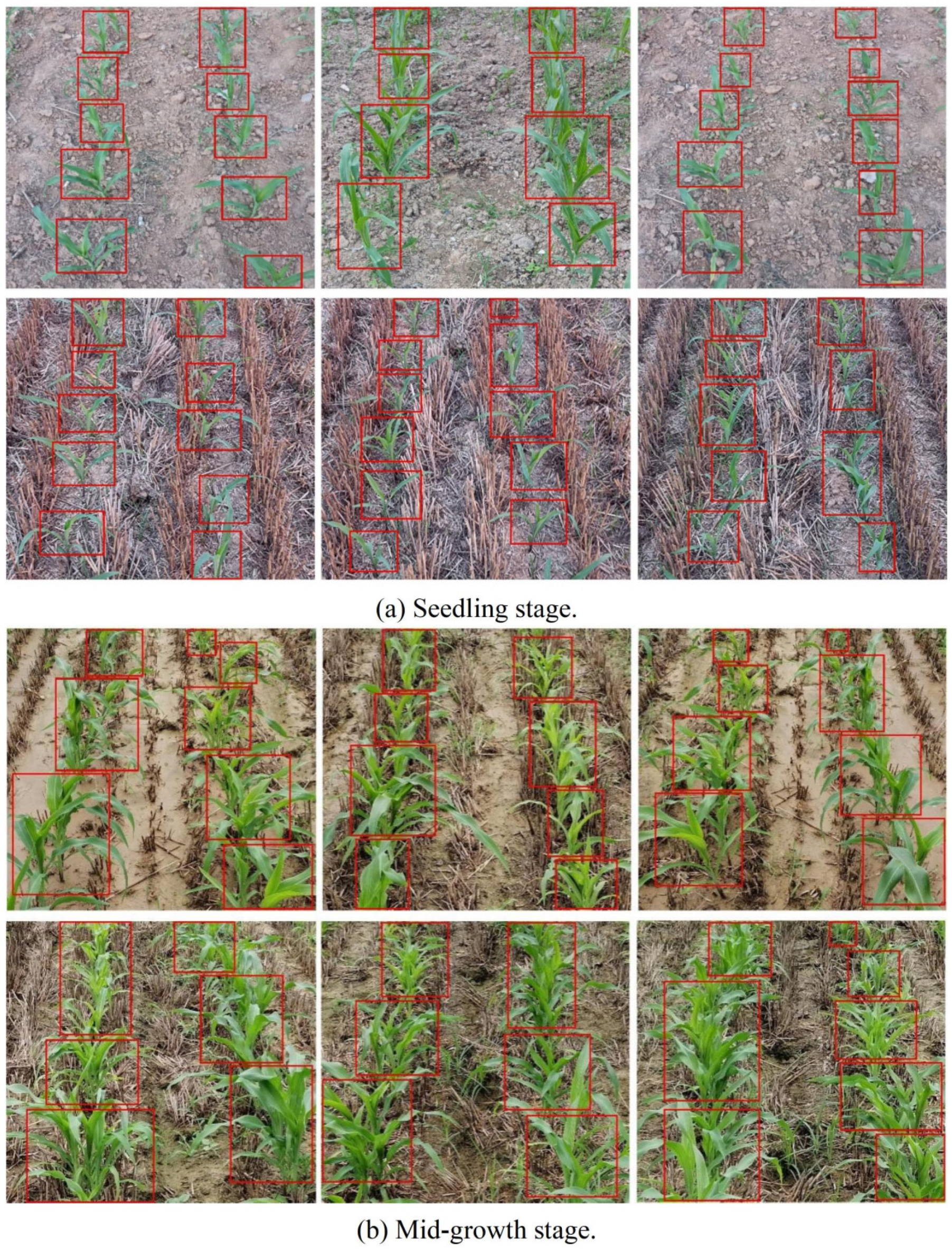

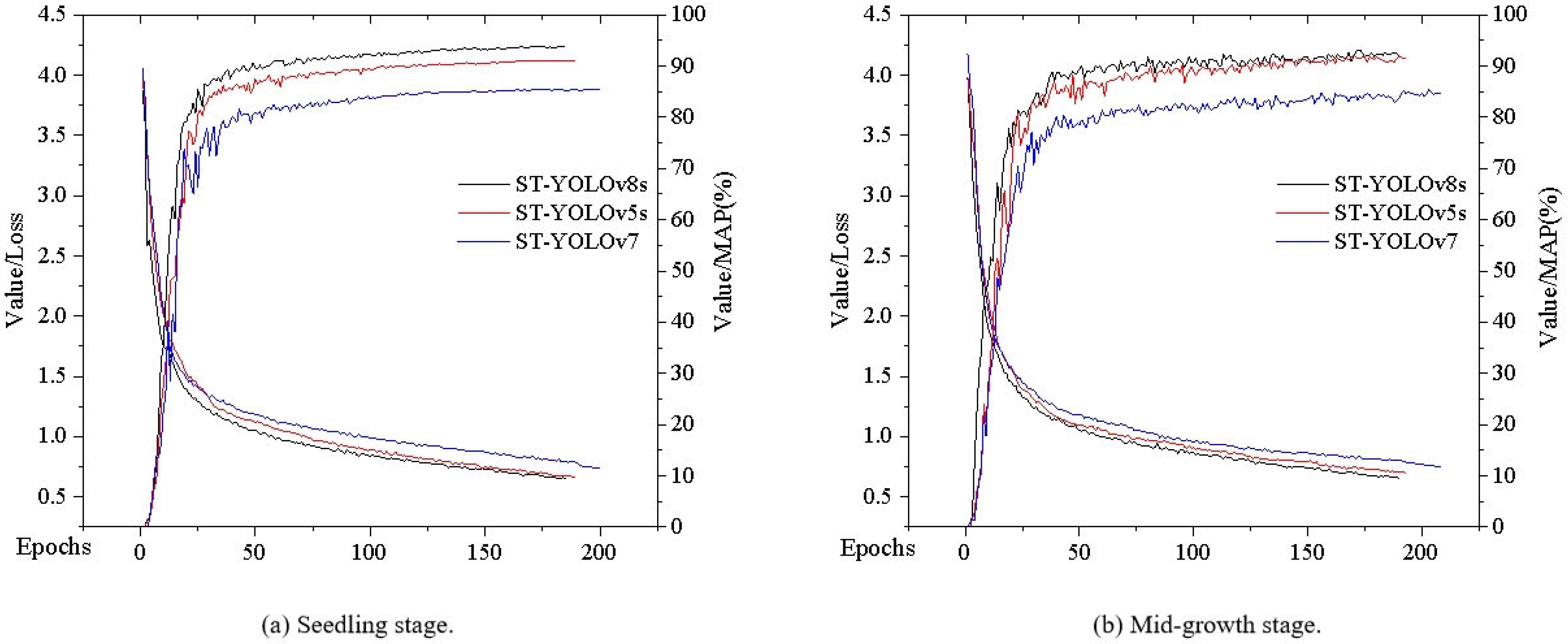

3.2. Crop Row Segment Detection Experiment

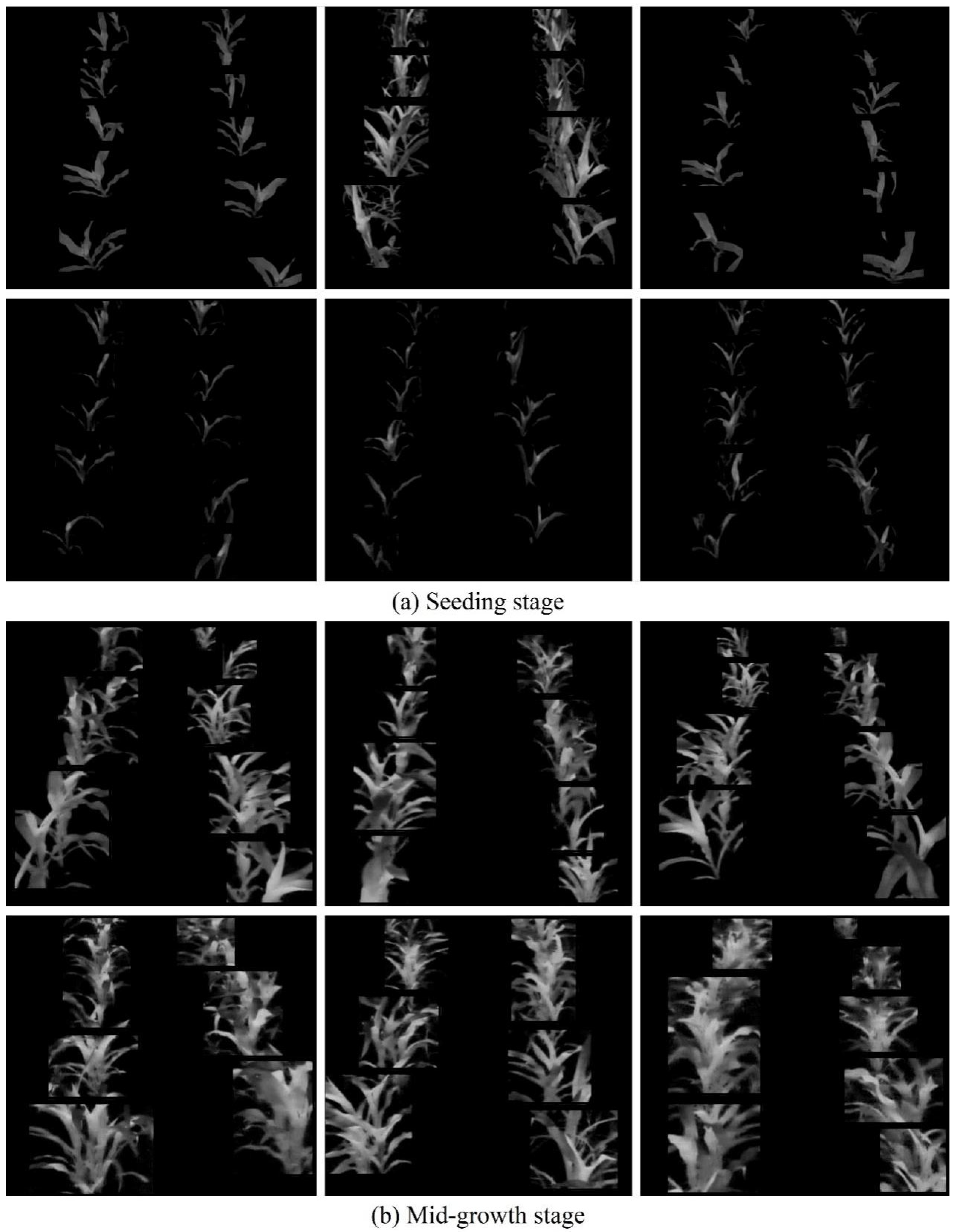

3.3. Crop Row Segment Segmentation Experiment

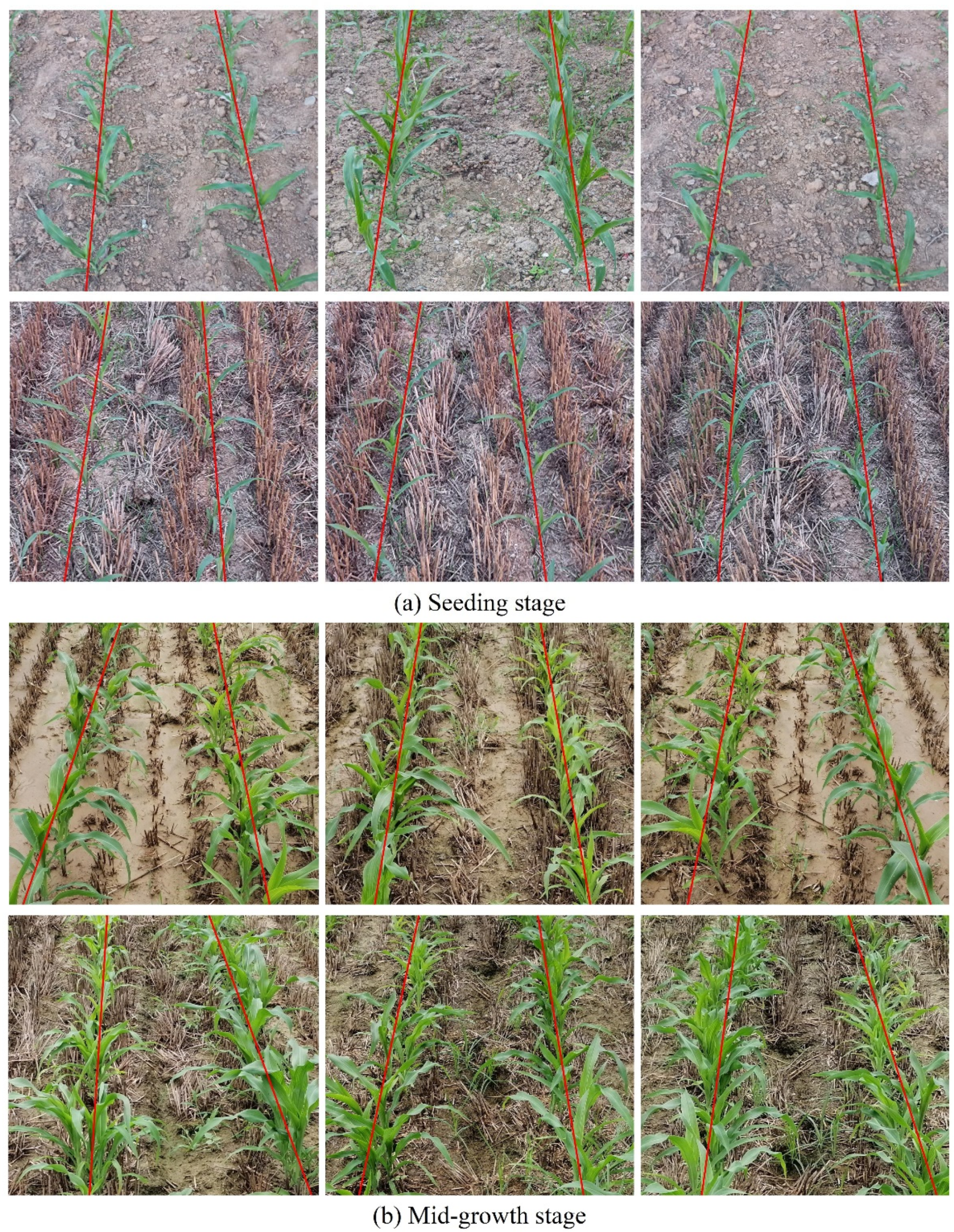

3.4. Crop Row Line Detection Experiment

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Diao, Z.H.; Yan, J.N.; He, Z.D.; Zhao, S.N.; Guo, P.L. Corn seedling recognition algorithm based on hyperspectral image and lightweight-3D-CNN. Comput. Electron. Agric. 2022, 201, 107343.

- Bai, Y.H.; Zhang, B.H.; Xu, N.M.; Zhou, J.; Shi, J.Y.; Diao, Z.H. Vision-based navigation and guidance for agricultural autonomous vehicles and robots: A review. Comput. Electron. Agric. 2023, 205, 107584.

- Wang, T.H.; Chen, B.; Zhang, Z.Q.; Li, H.; Zhang, M. Applications of machine vision in agricultural robot navigation: A review. Comput. Electron. Agric. 2022, 198, 107085.

- Li, Y.; Guo, Z.Q.; Shuang, F.; Zhang, M.; Li, X.H. Key technologies of machine vision for weeding robots: A review and benchmark. Comput. Electron. Agric. 2022, 196, 106880.

- Solimani, F.; Cardellicchio, A.; Dimauro, G.; Petrozza, A.; Summerer, S.; Cellini, F.; Renò, V. Optimizing tomato plant phenotyping detection: Boosting YOLOv8 architecture to tackle data complexity. Comput. Electron. Agric. 2024, 218, 108728.

- Xiao, B.J.; Nguyen, M.; Yan, W.Q. Fruit ripeness identification using YOLOv8 model. Multimed. Tools Appl. 2024, 83, 28039–28056.

- Liu, S.C.; Huang, Z.; Xu, Z.H.; Zhao, F.J.; Xiong, D.L.; Peng, S.B.; Huang, J.L. High-throughput measurement method for rice seedling based on improved UNet model. Comput. Electron. Agric. 2024, 219, 108770.

- Yu, J.Y.; Zhang, J.Y.; Shu, A.J.; Chen, Y.J.; Chen, J.N.; Yang, Y.J.; Tang, W.; Zhang, Y.C. Study of convolutional neural network-based semantic segmentation methods on edge intelligence devices for field agricultural robot navigation line extraction. Comput. Electron. Agric. 2023, 209, 107811.

- Li, D.F.; Li, B.L.; Long, S.F.; Feng, H.Q.; Xi, T.; Kang, S.; Wang, J. Rice seedling row detection based on morphological anchor points of rice stems. Biosyst. Eng. 2023, 226, 71–85.

- He, Y.; Zhang, X.Y.; Zhang, Z.Q.; Fang, H. Automated detection of boundary line in paddy field using MobileV2-UNet and RANSAC. Comput. Electron. Agric. 2022, 194, 106697.

- Liu, F.C.; Yang, Y.; Zeng, Y.M.; Liu, Z.Y. Bending diagnosis of rice seedling lines and guidance line extraction of automatic weeding equipment in paddy field. Mech. Syst. Signal Process. 2020, 142, 106791.

- Quan, L.Z.; Feng, H.Q.; Lv, Y.J.; Wang, Q.; Zhang, C.B.; Liu, J.G.; Yuan, Z.Y. Maize seedling detection under different growth stages and complex field environments based on an improved Faster R–CNN. Biosyst. Eng. 2019, 184, 1–23.

- Yang, Y.; Zhou, Y.; Yue, X.; Zhang, G.; Wen, X.; Ma, B.; Xu, L.Y.; Chen, L.Q. Real-time detection of crop rows in maize fields based on autonomous extraction of ROI. Expert Syst. Appl. 2023, 213, 118826.

- Jiang, H.H.; Zhang, C.Y.; Qiao, Y.L.; Zhang, Z.; Zhang, W.J.; Song, C.Q. CNN feature based graph convolutional network for weed and crop recognition in smart farming. Comput. Electron. Agric. 2020, 174, 105450.

- Diao, Z.H.; Guo, P.L.; Zhang, B.H.; Zhang, D.Y.; Yan, J.N.; He, Z.D.; Zhao, S.N.; Zhao, C.J. Maize crop row recognition algorithm based on improved UNet network. Comput. Electron. Agric. 2023, 210, 107940.

- Zhang, Q.; Wang, J.H.; Li, B. Extraction method for centerlines of rice seedings based on YOLOv3 target detection. Trans. CSAM 2020, 51, 34–43.

- Yang, R.B.; Zhai, Y.M.; Zhang, J.; Zhang, H.; Tian, G.B.; Zhang, J.; Huang, P.C.; Li, L. Potato visual navigation line detection based on deep learning and feature midpoint adaptation. Agriculture 2022, 12, 1363.

- Hu, Y.; Huang, H. Extraction method for centerlines of crop row based on improved lightweight yolov4. In Proceedings of the 2021 6th International Symposium on Computer and Information Processing Technology (ISCIPT), Changsha, China, 11–13 June 2021; pp. 127–132.

- Bah, M.D.; Hafiane, A.; Canals, R. CRowNet: Deep network for crop row detection in UAV images. IEEE Access 2019, 8, 5189–5200.

- Wang, S.S.; Yu, S.S.; Zhang, W.Y.; Wang, X.S.; Li, J. The seedling line extraction of automatic weeding machinery in paddy field. Comput. Electron. Agric. 2023, 205, 107648.

- Everingham, M.; Gool, L.V.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes (voc) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Zhu, Y.J.; Li, S.S.; Du, W.S.; Du, Y.P.; Liu, P.; Li, X. Identification of table grapes in the natural environment based on an improved Yolov5 and localization of picking points. Precis. Agric. 2023, 24, 1333–1354.

- Diao, Z.H.; Guo, P.L.; Zhang, B.K.; Yan, J.N.; He, Z.D.; Zhao, S.N.; Zhao, C.J.; Zhang, J.C. Spatial-spectral attention-enhanced Res-3D-OctConv for corn and weed identification utilizing hyperspectral imaging and deep learning. Comput. Electron. Agric. 2023, 212, 108092.

- Gallo, I.; Rehman, A.U.; Dehkordi, R.H.; Landro, N.; Grassa, R.L.; Boschetti, M. Deep object detection of crop weeds: Performance of YOLOv7 on a real case dataset from UAV images. Remote Sens. 2023, 15, 539.

- Lee, S.H.; Chan, C.S.; Wilkin, P.; Remagnino, P. Deep-plant: Plant identification with convolutional neural networks. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 452–456.

- Wang, S.S.; Zhang, W.Y.; Wang, X.S.; Yu, S.S. Recognition of rice seedling rows based on row vector grid classification. Comput. Electron. Agric. 2021, 190, 106454.

- Liu, G.X.; Nouaze, J.C.; Touko Mbouembe, P.L.; Kim, J.H. YOLO-tomato: A robust algorithm for tomato detection based on YOLOv3. Sensors 2020, 20, 2145.

- Diao, Z.H.; Guo, P.L.; Zhang, B.H.; Zhang, D.Y.; Yan, J.N.; He, Z.D.; Zhao, S.N.; Zhao, C.J.; Zhang, J.C. Navigation line extraction algorithm for corn spraying robot based on improved YOLOv8s network. Comput. Electron. Agric. 2023, 212, 108049.

- Liu, Z.; Lin, Y.T.; Cao, Y.; Hu, H.; Wei, Y.X.; Zhang, Z.; Lin, S.; Guo, B.N. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

- Touvron, H.; Cord, M.; Douze, M.; Massa, F.; Sablayrolles, A.; Jégou, H. Training data-efficient image transformers & distillation through attention. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual Event, 18–24 July 2021; pp. 10347–10357.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Tan, M.X.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

| Method | Advantage | Disadvantage |
|---|---|---|
| GNSS | Global coverage and high accuracy | Poor stability and signal susceptibility to environmental influences |
| LiDAR | Highly accurate and unaffected by the environment | Higher cost and complexity of operation |
| Computer vision | Good autonomy and real time | Sensitivity to environmental factors |

| Target | Method | Accuracy | Author |
|---|---|---|---|
| - | ENet | 84.94% | Yu et al. (2023) |
| Rice | Transformer | 92.93% | Li et al. (2023) |
| Rice | UNet + MobileNet V2 | 90.8% | He et al. (2022) |
| Rice | SSD + MobileNet | 92.8% | Liu et al. (2020) |
| Corn | Faster-RCNN + VGG19 | 97.71% | Quan et al. (2019) |
| Corn | YOLOv5 | 97.8% | Yang et al. (2023) |
| - | GCN + ResNet-101 | 97.5% | Jiang et al. (2020) |
| Corn | UNet + ASPP | 90.18% | Diao et al. (2023) |
| Rice | YOLOv3 | 91.47% | Zhang et al. (2020) |
| Potato | UNet + VGG16 | 97.29% | Yang et al. (2022) |
| - | YOLOv4 + MobileNet V3 | 93.6% | Hu et al. (2021) |
| - | SegNet | 93.58% | Bah et al. (2019) |
| Rice | YOLOv5 + PFocal Loss | - | Wang et al. (2023) |

| Category | Number |
|---|---|
| Seedling stage | 5328 |
| Mid-growth stage | 4672 |

| Name | Related Configuration |
|---|---|
| Operating system | Windows 10 (64 bit) |
| CPU | Intel(R) Xeon(R) CPU E5-2678 v3 @ 2.50 GHz |
| GPU | NVIDIA GeForce RTX 3080 Ti |
| Software and environment | PyCharm 2021.3, Python 3.8, PyTorch 1.10 |

| Growth Stage | Network | mAP |
|---|---|---|
| Seedling stage | ST-YOLOv5s | 91.13% |
| Seedling stage | ST-YOLOv7 | 85.36% |
| Seedling stage | ST-YOLOv8s | 93.89% |
| Mid-growth stage | ST-YOLOv5s | 91.78% |
| Mid-growth stage | ST-YOLOv7 | 84.77% |
| Mid-growth stage | ST-YOLOv8s | 92.27% |

| Evaluation Metrics | Detection Frame Midpoint + Least Squares Method | FAST Corner Point Detection + Least Squares Method | SUSAN Corner Point Detection + Least Squares Method | This Paper |
|---|---|---|---|---|
| Accuracy W | 79.41% | 86.32% | 90.80% | 96.79% |
| Average angle error N | 4.36° | 2.19° | 1.28° | 0.58° |
| Average fitting time T | 52.30 ms | 65.00 ms | 80.77 ms | 47.00 ms |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Diao, Z.; Ma, S.; Zhang, D.; Zhang, J.; Guo, P.; He, Z.; Zhao, S.; Zhang, B. Algorithm for Corn Crop Row Recognition during Different Growth Stages Based on ST-YOLOv8s Network. Agronomy 2024, 14, 1466. https://doi.org/10.3390/agronomy14071466
