Abstract

The identification and counting of peach seedling fruits are pivotal in precision agriculture, influencing both yield estimation and agronomic practices. Traditional identification methods are labor-intensive and error-prone. To improve the scientific rigor and operational efficiency of orchard management, this study introduces YOLO-Peach, a lightweight YOLOv8-based model for the automatic detection and counting of peach seedling fruits. A dataset was curated to capture the characteristics and diversity of peach seedling fruits through high-resolution imagery taken at various times and locations, and it was then preprocessed to ensure data quality. The YOLOv8s model underwent a series of lightweight optimizations. These include adopting MobileNetV3 as its backbone, the p2BiFPN architecture, spatial and channel reconstruction convolution (ScConv), and a coordinate attention mechanism, which together improved the model's ability to detect small targets precisely. YOLO-Peach achieves a precision and recall of 0.979, an mAP50 of 0.993, and an mAP50-95 of 0.867, with a much smaller computational footprint than the YOLOv8s baseline, indicating its suitability for peach seedling fruit recognition. Ablation studies clarify the role of each component. MobileNetV3 reduces the model's complexity and computational load, while the p2BiFPN architecture, ScConv, and the coordinate attention mechanism collectively improve feature extraction and detection precision for small targets.
This research offers a novel approach to peach seedling fruit recognition and may serve as a blueprint for identifying the young fruits of other fruit species. The work has theoretical and practical value for the broader field of agricultural automation.

1. Introduction

In recent years, the growing global population has driven a surging demand for fruit [1]. The fruit industry has grown rapidly, and peach cultivation has expanded notably: peaches are now the third most popular temperate fruit, after apples and pears [2,3]. Beyond careful water and fertilizer management, thinning young peach fruits is an essential practice for improving peach yield and quality [4,5]. Thinning not only increases the size and quality of the remaining fruits but also reduces biennial bearing, a pattern in which a season of abundant crops is followed by one of scarcity [6,7]. The identification and counting of peach seedling fruits are therefore pivotal. They provide a reference for thinning and contribute substantially to accurate orchard yield forecasting and to the development of more sophisticated orchard management strategies [8].

In the current peach production process, thinning techniques are predominantly executed through manual labor, mechanical devices, or chemical agents. Manual thinning stands out as the most accurate technique, albeit one that requires substantial human and material resources, as well as the expertise of trained personnel, leading to increased costs [9]. Mechanical thinning offers the advantage of being more expeditious and cost-efficient compared to manual methods, but it poses the risk of damaging fruit trees and causing the development of scar tissue, which can impede the growth of the fruit [10]. Chemical thinning presents a broad spectrum of benefits, particularly in reducing costs and ensuring the timeliness of intervention, with its effectiveness varying according to the specific chemicals employed [11]. Thus, the evolution toward smart orchards hinges on the automation of precise thinning, making the development of a method for the accurate and swift quantification of young fruits an indispensable component.

Traditional methodologies grounded in image processing techniques have long relied on color thresholds, shape characteristics, and textural details to segment and identify fruits [12,13,14]. These approaches, however, often demand specialized image processing algorithms that are finely tuned to particular environments, thereby limiting their broader utility and necessitating a significant degree of technical expertise from operators [15,16]. Deep-learning-based technologies offer a compelling alternative, capable of automatically extracting and learning the intrinsic features of fruits through the training of advanced neural network models, which results in enhanced precision in identification and counting [17,18]. As deep learning continues to make strides, it has become the method of choice for many researchers in the field of fruit recognition, ushering in a new era of technological advancement [19,20,21,22].

Basri et al. [23] applied the Faster R-CNN framework with a MobileNet model to the multi-fruit detection and classification of mangoes and pitayas, attaining an accuracy of nearly 99%. Gao et al. [24] proposed a multi-class apple detection strategy based on Faster R-CNN. It distinguishes unoccluded, leaf-occluded, branch/wire-occluded, and fruit-occluded apples, with average precisions of 0.909, 0.899, 0.858, and 0.848, respectively. Xu et al. [25] developed the EMA-YOLO model, which incorporates an attention mechanism and the EIoU loss function. It achieved a 4.2% mAP increase over the YOLOv8n baseline in detecting immature yellow peaches for orchard management.

Vasconez et al. [26] applied deep learning to diverse orchards in Chile and the United States, comparing two convolutional neural network architectures: Faster R-CNN with Inception V2 and SSD with MobileNet. Faster R-CNN with Inception V2 reached a fruit counting accuracy of up to 93%, while SSD with MobileNet reached 90%. Jia et al. [27] proposed a two-stage instance segmentation technique based on Mask R-CNN for segmenting green fruits in complex orchard environments. It uses a lightweight MobileNetV3 backbone and a Boundary Patch Refinement (BPR) post-processing module. On a persimmon dataset, the optimized Mask R-CNN achieved a mean average precision (mAP) of 76.3% and a mean average recall (mAR) of 81.1%, improvements of 3.1% and 3.7% over the original Mask R-CNN, respectively.

Gai et al. [28] presented a cherry detection algorithm built on an improved YOLOv4 model. It replaces the backbone with DenseNet and uses circular bounding boxes adapted to the contours of cherry fruits, and the mAP of the revised model exceeded that of the original YOLOv4 by 0.15. Wang and He [29] introduced an apple fruitlet detection method for use before fruit thinning, based on a channel-pruned YOLOv5s. It achieved a recall of 87.6%, a precision of 95.8%, an F1 score of 91.5%, and a false detection rate of 4.2%. Bai et al. [30] developed an algorithm for the fast and accurate detection of greenhouse strawberry seedling flowers and fruits by integrating a Swin Transformer prediction head and a GS-ELAN optimization module into YOLOv7. Its precision (P), recall (R), and mean average precision (mAP) reached 92.6%, 89.6%, and 92.1%, respectively, exceeding those of YOLOv7 by 3.2%, 2.7%, and 4.6%.

At present, deep learning techniques for fruit detection fall largely into two groups [31,32]:

- two-stage object detection methods, predominantly based on R-CNN;
- single-stage object detection algorithms, primarily derived from SSD and the YOLO series.

Two-stage methods improve accuracy through region proposal networks. However, their slower processing speeds and demanding hardware requirements make them impractical for mobile applications [33,34]. Single-stage algorithms may be slightly less accurate, but they process images faster and are therefore better suited for deployment on mobile devices. The YOLO series, in particular, has been widely adopted and has performed strongly across many fields [35].
However, the deployment of these algorithms on mobile devices with constrained resources continues to pose a formidable challenge. There is a clear imperative to refine these algorithms to achieve a balance between lightweight design and precision. Furthermore, the application of current fruit detection algorithms to peaches is relatively scarce. Consequently, the pursuit of making the YOLO series algorithms both lightweight and accurate is essential for propelling research in the realms of peach thinning management and yield estimation.

This research is dedicated to harnessing the potential of the lightweight YOLOv8s in the identification of peach seedling fruits, with the goal of refining the YOLOv8 algorithm to create a specialized, streamlined model for this niche. The scope of the study involves the meticulous construction of a dataset designed to facilitate the recognition of peach seedling fruits, the strategic enhancement of the YOLOv8 model through lightweight innovations, and rigorous testing and evaluation within authentic orchard conditions. The aspiration is to contribute an efficient and highly accurate solution for the identification of peach seedling fruits, thereby fostering progress in the domain of agricultural automation and the evolution of precision farming. The objectives pursued in this research are as follows:

- To develop a peach seedling fruit detection model: To build a detection model for peach seedling fruits based on YOLOv8s that improves the precision of thinning practices and provides reliable preliminary yield estimates in peach orchards.

- To advance model lightweighting: To devise a cost-effective, streamlined YOLOv8s model that can be seamlessly integrated into the agricultural landscape, even within the scope of limited resources.

- To improve the precision of the lightweight model: To integrate advanced deep learning methods into the optimized YOLOv8s algorithm, substantially improving the accuracy of peach seedling fruit detection and reducing both missed detections and false positives.

The significance of this research transcends the development of a novel technique for peach seedling fruit identification; it also establishes a valuable reference for the recognition of young fruits across a spectrum of fruit trees. This study bears profound theoretical and practical implications for driving forward the broader field of agricultural automation.

2. Materials and Methods

2.1. Establishment of the Peach Seedling Fruit Dataset

Reliable recognition of peach seedling fruits required a carefully constructed dataset, which was built in several stages.

- Image capture: Images were taken with an iQOO 12 Pro smartphone. Its rear main camera has a 50-megapixel sensor and an f/1.75 aperture, which ensured high-resolution and diverse imagery.
- Collection site and lighting: Images were collected in an orchard in Luoyang City, Henan Province. To ensure dataset diversity, photography sessions were scheduled at different times of the day to cover a wide range of natural lighting conditions. The camera's high dynamic range (HDR) mode and low-light performance allowed high-quality images to be captured under all of these conditions.
- Preprocessing: The images were cropped to focus on the fruits, resized to a uniform 640 × 640 pixels, and normalized so that pixel values fall in the range 0 to 1.
- Annotation: The peach seedling fruits were annotated in LabelImg with rectangular bounding boxes to keep labeling consistent and precise.
- Augmentation: Data augmentation was applied to enrich the dataset, using rotation (within ±30 degrees), scaling (0.8 to 1.2 times the original size), horizontal and vertical flipping, and additive Gaussian noise (mean 0, standard deviation 0.01). These techniques increase the variability of the dataset and improve the model's adaptability to real-world variations.

The final dataset comprises 2270 images, partitioned into training, validation, and test sets in an 8:1:1 ratio.
A selection of these images is illustrated in Figure 1, where red-colored peaches denote those basking in direct sunlight, the green-hued fruits are shaded by leaves or positioned on the shadowed side of the tree, and those with black edges, color variations, and mosaic patterns are indicative of our randomized augmentation techniques.
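
The preprocessing, flipping, noise, and split steps described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' pipeline. The file names are hypothetical, and labels are assumed to be in normalized YOLO format (x_center, y_center, width, height) after export from LabelImg. Noise is assumed to be added to pixels already scaled to [0, 1] and then clipped. The split uses a seeded shuffle. Rotation and scaling are omitted because they require recomputing box corners.

```python
import random

def normalize_pixels(values):
    """Scale 8-bit pixel values (0-255) into the [0, 1] range."""
    return [v / 255.0 for v in values]

def add_gaussian_noise(values, std=0.01, rng=None):
    """Add zero-mean Gaussian noise to [0, 1] pixels, then clip back into range.

    Clipping is an assumption; the text only specifies mean 0 and std 0.01.
    """
    rng = rng or random.Random(0)
    return [min(1.0, max(0.0, v + rng.gauss(0.0, std))) for v in values]

def hflip_box(box):
    """Horizontal flip of a normalized YOLO-format box (x_c, y_c, w, h)."""
    x_c, y_c, w, h = box
    return (1.0 - x_c, y_c, w, h)

def vflip_box(box):
    """Vertical flip of a normalized YOLO-format box (x_c, y_c, w, h)."""
    x_c, y_c, w, h = box
    return (x_c, 1.0 - y_c, w, h)

def split_dataset(items, ratios=(8, 1, 1), seed=0):
    """Shuffle items reproducibly and split them by integer ratios.

    Any remainder left by integer division goes to the last (test) split.
    """
    items = list(items)
    random.Random(seed).shuffle(items)
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    return (items[:n_train],
            items[n_train:n_train + n_val],
            items[n_train + n_val:])

if __name__ == "__main__":
    # Hypothetical file names standing in for the 2270 dataset images.
    names = [f"peach_{i:04d}.jpg" for i in range(2270)]
    train, val, test = split_dataset(names)
    print(len(train), len(val), len(test))  # 1816 227 227
    print(hflip_box((0.25, 0.40, 0.10, 0.12)))  # (0.75, 0.4, 0.1, 0.12)
```

With 2270 images, the 8:1:1 ratio divides evenly into 1816, 227, and 227 images, so no remainder has to be reassigned.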

Figure 1.

Sample images from a dataset portion.

2.2. Improvement of the YOLOv8s Model

2.2.1. The Basic Network Architecture of YOLOv8s

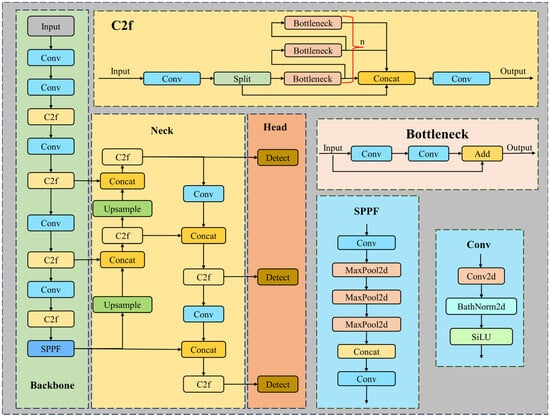

YOLOv8 is a single-stage object detection algorithm comprising a backbone network, a neck, and a detection head [36], as illustrated in Figure 2. Building on YOLOv5, the YOLOv8 backbone replaces the C3 module with the C2f module. C2f combines the Cross Stage Partial Network (CSP) concept with the ELAN module from YOLOv7, enriching gradient flow while keeping the network efficient. YOLOv8 also retains the SPPF module, which handles targets of different scales by passing features through three sequential 5 × 5 max pooling layers, enlarging the receptive field at low computational cost. For feature fusion, YOLOv8 uses PAN-FPN to combine information from feature layers of different scales, which improves the detection of multi-scale targets. The neck of YOLOv8 consists of two upsampling layers and a series of C2f modules. It feeds a decoupled head that separates the classification and regression tasks, improving detection precision.
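
The SPPF behavior can be checked with a small example. The sketch below uses plain Python and a 1D signal to stay dependency-free. It assumes stride-1 max pooling with "same" padding (padding = kernel // 2, with padded positions ignored, as with PyTorch's implicit negative-infinity padding). It shows that applying a 5-wide pool three times in sequence gives the same outputs as parallel pools of widths 5, 9, and 13. Because a max over a square window equals the max of the row-wise maxima, the same argument carries over to 5 × 5 pools in 2D.

```python
def max_pool_1d(xs, k):
    """Stride-1 max pooling with 'same' padding; out-of-range positions are skipped."""
    pad = k // 2
    n = len(xs)
    return [max(xs[max(0, i - pad):min(n, i + pad + 1)]) for i in range(n)]

def sppf_outputs(xs, k=5, repeats=3):
    """SPPF-style: pool the previous output again, keeping every intermediate result."""
    outs = [xs]
    for _ in range(repeats):
        outs.append(max_pool_1d(outs[-1], k))
    return outs

def spp_outputs(xs, kernels=(5, 9, 13)):
    """SPP-style: pool the same input in parallel with increasingly large kernels."""
    return [xs] + [max_pool_1d(xs, k) for k in kernels]

def effective_kernel(k, repeats):
    """Receptive field of `repeats` stacked stride-1 pools of width k."""
    return 1 + repeats * (k - 1)

if __name__ == "__main__":
    signal = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5, 8, 9, 7, 9, 3, 2, 3, 8, 4]
    print(sppf_outputs(signal) == spp_outputs(signal))  # True
    print([effective_kernel(5, r) for r in (1, 2, 3)])  # [5, 9, 13]
```

The sequential form reuses each intermediate result, which is why it is cheaper than running three independent large pools.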

Figure 2.

YOLOv8 network architecture diagram.

2.2.2. Enhancing the YOLOv8 Backbone Network Using MobileNetV3

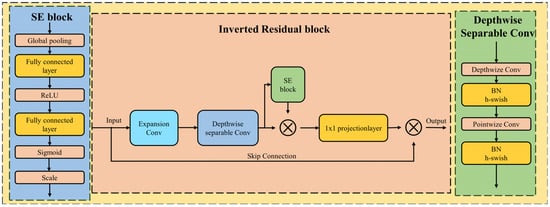

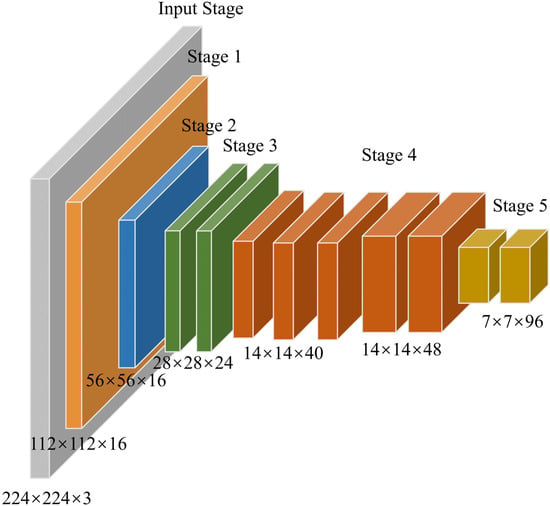

MobileNetV3 is a lightweight deep learning model introduced by a Google team in 2019 and optimized specifically for mobile and embedded devices [37]. It uses depthwise separable convolution to reduce computational load and model size, and inverted residual blocks with linear bottlenecks to strengthen feature representation [38]. MobileNetV3 also incorporates the Squeeze-and-Excitation (SE) module to model inter-channel relationships. To improve model performance, it uses h-swish (hard swish), a computationally cheap, non-monotonic approximation of the swish activation function. The Inverted Residual module of MobileNetV3 is illustrated in Figure 3. The MobileNetV3 architecture was obtained with Neural Architecture Search (NAS) techniques. In this study, we chose the Small version of MobileNetV3 to suit resource-constrained mobile deployments, and its backbone structure is shown in Figure 4. In that figure, the output stage is a Conv_bn_h-swish module composed of Conv2d, BatchNorm2d, and the h-swish activation function, and the remaining stages are Inverted Residual blocks.
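
Two of the MobileNetV3 ingredients mentioned above can be stated concretely in a few lines. The sketch below is plain Python and is a minimal illustration, not the model code. It defines h-swish as x · ReLU6(x + 3) / 6, together with its companion hard sigmoid ReLU6(x + 3) / 6. It then compares the weight count of a standard k × k convolution with that of a depthwise separable one (a k × k depthwise convolution followed by a 1 × 1 pointwise convolution), ignoring biases and batch-normalization parameters. The 64-channel layer in the example is hypothetical.

```python
def relu6(x):
    """ReLU clipped at 6."""
    return min(max(x, 0.0), 6.0)

def hard_sigmoid(x):
    """Piecewise-linear approximation of the sigmoid: ReLU6(x + 3) / 6."""
    return relu6(x + 3.0) / 6.0

def h_swish(x):
    """Hard swish: x * ReLU6(x + 3) / 6. Zero for x <= -3, identity for x >= 3."""
    return x * relu6(x + 3.0) / 6.0

def standard_conv_params(k, c_in, c_out):
    """Weights of a k x k standard convolution (bias ignored)."""
    return k * k * c_in * c_out

def depthwise_separable_params(k, c_in, c_out):
    """k x k depthwise conv (one filter per input channel) + 1 x 1 pointwise conv."""
    return k * k * c_in + c_in * c_out

if __name__ == "__main__":
    print([h_swish(x) for x in (-1.5, 0.0, 3.0, 4.0)])  # [-0.375, 0.0, 3.0, 4.0]
    std = standard_conv_params(3, 64, 64)
    dws = depthwise_separable_params(3, 64, 64)
    print(std, dws, round(dws / std, 4))  # 36864 4672 0.1267
```

The negative value at x = -1.5 shows that h-swish is non-monotonic, and its kinks at x = ±3 show that it is only piecewise smooth. The cost ratio of the separable layer equals 1/c_out + 1/k², roughly an 8× saving for a 3 × 3 kernel.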

Figure 3.

Diagram of the Inverted Residual with linear bottleneck module and SE module.

Figure 4.

Backbone structure diagram of the MobileNetV3 network.

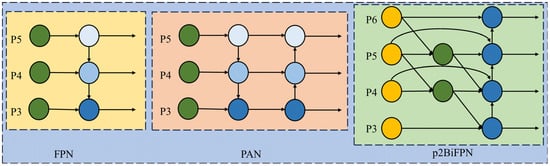

2.2.3. Improving YOLOv8s Small Object Detection Capability with the BiFPN Structure

Although the PAN-FPN structure of YOLOv8 enhances the fusion of semantic and localization information, it may lose information when handling large-scale feature maps. This loss degrades detection quality and limits feature reuse during upsampling and downsampling [39]. In this study, we adopted the Bidirectional Feature Pyramid Network (BiFPN) structure and combined it with a small object detection layer to enhance the neck of YOLOv8. The aim was to improve the ability of YOLOv8s to detect small objects, especially occluded ones. BiFPN combines top-down and bottom-up pathways with additional cross-scale connections, including extra connections from input to output nodes at the same level. This strengthens information exchange between feature levels and provides richer contextual information for small objects. BiFPN also uses weighted feature fusion, learning the contribution of features at each scale so that the model can focus on the features that matter most for the current task. To further improve small object detection, we added a dedicated small object detection head at the P2 feature level, built on BiFPN. In BiFPN, the feature fusion process can be expressed as follows:

$$O = \sum_{i} \frac{w_i}{\epsilon + \sum_{j} w_j} \cdot I_i$$

where $I_i$ represents the input features, $O$ represents the output features, and $w_i$ and $w_j$ are learnable weights. The ReLU activation function is applied to each weight to keep it non-negative, so each normalized weight lies in the range [0, 1]. To ensure numerical stability, $\epsilon$ is set to a small value. The structure of the resulting p2BiFPN is shown in Figure 5.
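
The weighted fusion in BiFPN can be illustrated with a short sketch. It assumes the fast normalized fusion form introduced with BiFPN in EfficientDet: O = sum_i w_i * I_i / (eps + sum_j w_j), with w_i = ReLU(raw weight). The plain-Python function below fuses feature maps that are assumed to have already been resized to a common resolution and flattened to lists. The default eps = 1e-4 is an assumed value, and the feature names in the example are hypothetical. In a real network the raw weights are trainable parameters.

```python
def fast_normalized_weights(raw_weights, eps=1e-4):
    """Clamp raw weights to be non-negative with ReLU, then normalize them.

    Each normalized weight lies in [0, 1), and their sum stays below 1.
    """
    w = [max(0.0, r) for r in raw_weights]
    denom = eps + sum(w)
    return [wi / denom for wi in w]

def fast_normalized_fusion(inputs, raw_weights, eps=1e-4):
    """Fuse same-shaped (flattened) feature maps with fast normalized weights."""
    if len(inputs) != len(raw_weights):
        raise ValueError("need one weight per input feature map")
    if len({len(feat) for feat in inputs}) != 1:
        raise ValueError("input feature maps must share the same shape")
    norm = fast_normalized_weights(raw_weights, eps)
    return [sum(n * feat[k] for n, feat in zip(norm, inputs))
            for k in range(len(inputs[0]))]

if __name__ == "__main__":
    p3_td = [1.0, 2.0, 3.0]   # hypothetical top-down feature values
    p3_in = [3.0, 2.0, 1.0]   # hypothetical lateral input feature values
    print(fast_normalized_fusion([p3_td, p3_in], [1.0, 1.0], eps=0.0))   # [2.0, 2.0, 2.0]
    print(fast_normalized_fusion([p3_td, p3_in], [-0.5, 2.0], eps=0.0))  # [3.0, 2.0, 1.0]
```

The second call shows the role of ReLU: a negative raw weight is clamped to zero, so that input is effectively switched off rather than subtracted.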

Figure 5.

The structure of the p2BiFPN network.

2.2.4. The Lightweight Spatial and Channel Reconstruction Convolution

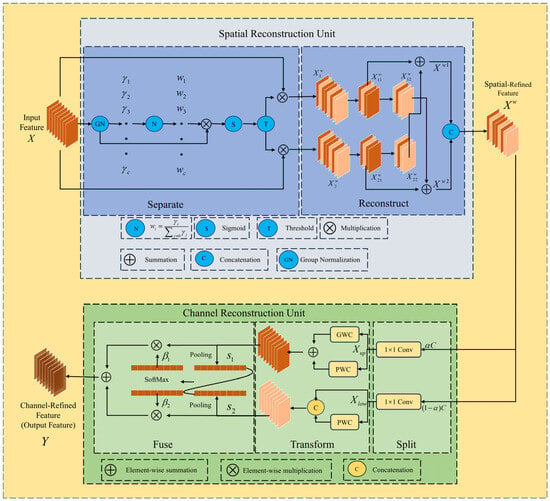

The Spatial and Channel Reconstruction Convolution (ScConv) is an efficient convolutional module designed for Convolutional Neural Networks (CNN). It enhances computational efficiency and reduces model complexity by reducing spatial and channel redundancy in feature maps [40]. The ScConv module comprises two main components: the Spatial Reconstruction Unit (SRU) and the Channel Reconstruction Unit (CRU) [41]. The main structural diagram of ScConv is depicted in Figure 6.

Figure 6.

The structure of ScConv.

The purpose of the SRU is to reduce spatial redundancy in feature maps through separation and reconstruction operations. It first uses the scaling factors of the Group Normalization layer to evaluate the amount of information in different feature maps. Then, it separates the feature maps with high information content from those with low information content through a gating operation. The SRU further combines these two parts of the feature maps through a cross-reconstruction operation to enhance feature representation and reduce spatial redundancy. The CRU aims to reduce channel redundancy in feature maps. It adopts a Split-Transform-and-Fuse strategy, first dividing the channels of the feature map into two parts, and then using lightweight operations such as 1 × 1 convolution and Group-wise Convolution to extract representative features. Finally, it adaptively merges the feature maps from different transformation stages using a feature importance vector, further reducing channel redundancy.
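
The SRU steps described above can be sketched in plain Python on tiny lists. This is a simplified, hypothetical illustration of one reading of the published design, not the module used in this study. Its assumptions are:

- Group Normalization uses one channel per group.
- Each channel's informativeness is its GN scale divided by the sum of all scales.
- The gate threshold defaults to an assumed 0.5.
- Weights above the threshold become 1 in the informative branch and 0 in the less-informative branch.
- The CRU is omitted.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def group_norm_one_channel_per_group(x, gamma, beta, eps=1e-5):
    """Group Normalization with one channel per group (a simplifying assumption).

    x is a list of channels, each a flat list of spatial values.
    """
    out = []
    for c, feat in enumerate(x):
        mean = sum(feat) / len(feat)
        var = sum((v - mean) ** 2 for v in feat) / len(feat)
        scale = gamma[c] / math.sqrt(var + eps)
        out.append([(v - mean) * scale + beta[c] for v in feat])
    return out

def spatial_reconstruction_unit(x, gamma, beta, gate_threshold=0.5):
    """Simplified SRU: separate informative/less-informative parts, then cross-reconstruct."""
    num_c = len(x)
    if num_c % 2:
        raise ValueError("cross-reconstruction needs an even number of channels")
    gn_x = group_norm_one_channel_per_group(x, gamma, beta)
    total = sum(gamma)
    w_gamma = [g / total for g in gamma]  # larger GN scale -> more informative channel
    x_info, x_less = [], []
    for c in range(num_c):
        weights = [sigmoid(w_gamma[c] * v) for v in gn_x[c]]
        # Gate: weights above the threshold become 1 in the informative branch ...
        x_info.append([(1.0 if w > gate_threshold else w) * v for w, v in zip(weights, x[c])])
        # ... and 0 in the less-informative branch; other weights are kept in both.
        x_less.append([(0.0 if w > gate_threshold else w) * v for w, v in zip(weights, x[c])])
    half = num_c // 2
    # Cross-reconstruction: add each half of one branch to the opposite half of the other.
    first = [[a + b for a, b in zip(x_info[c], x_less[half + c])] for c in range(half)]
    second = [[a + b for a, b in zip(x_info[half + c], x_less[c])] for c in range(half)]
    return first + second

if __name__ == "__main__":
    x = [[0.2, -0.4, 1.0, 0.6], [0.1, 0.3, -0.2, 0.5],
         [0.9, -0.1, 0.4, 0.0], [-0.3, 0.8, 0.2, 0.7]]  # 4 channels, 2x2 maps flattened
    gamma, beta = [1.0, 0.5, 0.25, 0.25], [0.0] * 4
    y = spatial_reconstruction_unit(x, gamma, beta)
    print(len(y), [len(ch) for ch in y])  # 4 [4, 4, 4, 4]
```

The output has the same shape as the input, which is what lets an SRU-style block replace a convolution inside an existing module without changing the surrounding layers.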

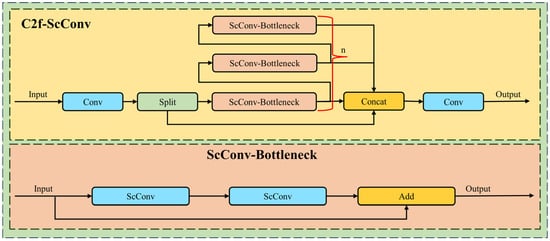

This study employs the ScConv convolution module to replace the convolution in the C2f module, capturing spatial and channel information of the target more effectively during the feature extraction phase, thereby enhancing the performance of the C2f module. The structure of the improved C2f-ScConv module is shown in Figure 7.

Figure 7.

The structure diagram of C2f-ScConv module.

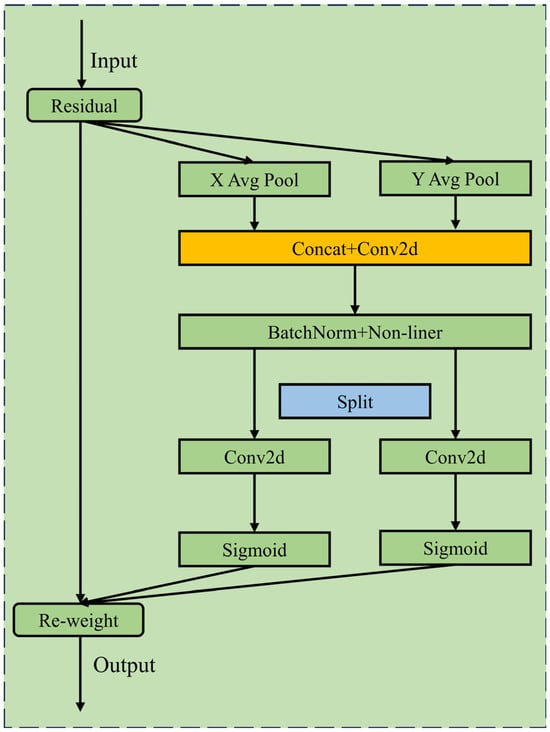

2.2.5. Coordinate Attention Mechanism

In this study, we integrated a coordinate attention (CA) module at the junction of the neck and the detection head. This enables the model to focus more effectively on key areas of the image, especially those containing small objects. Rather than pooling each channel into a single global value, CA aggregates features separately along the two spatial directions. The resulting attention weights therefore retain positional information about where a target lies along each axis. This coordinate-sensitive representation captures the spatial context of targets more accurately, which helps improve detection accuracy. The CA mechanism consists of two steps, coordinate information embedding and coordinate attention generation [42], and its main structure is shown in Figure 8.

Figure 8.

The structure of the CA attention mechanism.

Coordinate Information Embedding: The CA module first encodes spatial information by applying two parallel one-dimensional (1D) global average pooling operations to the input feature map X, one along the horizontal direction and one along the vertical direction. This produces two direction-aware feature maps that retain the positional cues critical for target localization.

Coordinate Attention Generation: The two direction-aware feature maps are concatenated and passed through a shared 1 × 1 convolution, batch normalization, and a nonlinear activation function. The output is then split back into two tensors along the spatial dimension, and each tensor is transformed by its own 1 × 1 convolution to match the channel dimension of X. A sigmoid function then yields the final attention weights for each direction, and these are multiplied with the input feature map to produce the enhanced output.
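
The two CA steps described above can be sketched in plain Python on nested lists. This is a minimal illustration, not the module used in this study. The weight matrices are hypothetical inputs, and batch normalization is assumed to be folded into the shared 1 × 1 convolution. ReLU stands in for the nonlinearity for brevity, which may differ from the activation used in the model.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def coordinate_attention(x, w_reduce, w_h, w_w):
    """Minimal coordinate attention on nested lists.

    x: C x H x W feature map.
    w_reduce: (C/r) x C weights of the shared 1x1 conv (BN assumed folded in).
    w_h, w_w: C x (C/r) weights of the two direction-specific 1x1 convs.
    """
    C, H, W = len(x), len(x[0]), len(x[0][0])
    mid = len(w_reduce)
    # 1) Directional 1D average pooling: one value per row and one per column.
    z_h = [[sum(x[c][i]) / W for i in range(H)] for c in range(C)]
    z_w = [[sum(x[c][i][j] for i in range(H)) / H for j in range(W)] for c in range(C)]
    # 2) Concatenate along the spatial axis (length H + W); shared 1x1 conv + ReLU.
    cat = [z_h[c] + z_w[c] for c in range(C)]
    f = [[max(0.0, sum(w_reduce[m][c] * cat[c][p] for c in range(C)))
          for p in range(H + W)] for m in range(mid)]
    # 3) Split into height and width parts; map each back to C channels + sigmoid.
    a_h = [[sigmoid(sum(w_h[c][m] * f[m][i] for m in range(mid))) for i in range(H)]
           for c in range(C)]
    a_w = [[sigmoid(sum(w_w[c][m] * f[m][H + j] for m in range(mid))) for j in range(W)]
           for c in range(C)]
    # 4) Reweight every position by its row weight and its column weight.
    return [[[x[c][i][j] * a_h[c][i] * a_w[c][j] for j in range(W)]
             for i in range(H)] for c in range(C)]

if __name__ == "__main__":
    C, H, W, mid = 4, 2, 3, 2   # hypothetical sizes (reduction ratio r = 2)
    x = [[[float(c + i + j) for j in range(W)] for i in range(H)] for c in range(C)]
    w_reduce = [[0.1 * (m + 1)] * C for m in range(mid)]
    zeros = [[0.0] * mid for _ in range(C)]
    y = coordinate_attention(x, w_reduce, zeros, zeros)
    print(y[3][1][2], x[3][1][2] * 0.25)  # 1.5 1.5
```

With zero direction-specific weights, every attention value is sigmoid(0) = 0.5, so each position is scaled by 0.25. Learned weights instead make the scaling depend on the row and column, which is how CA injects positional information.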

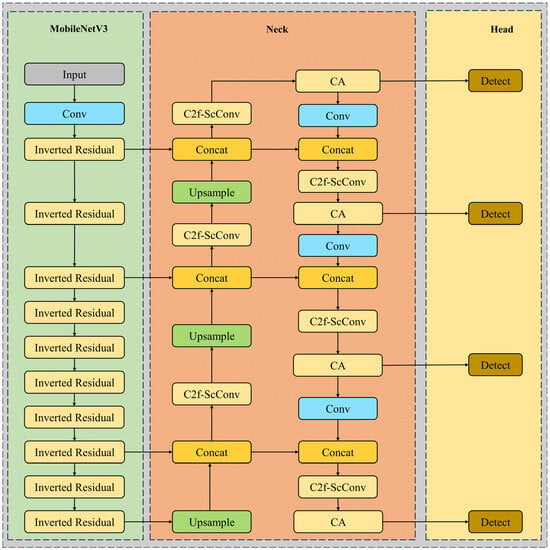

2.2.6. The Improved Network Structure Based on YOLOv8s

This study optimized the YOLOv8s structure by using MobileNetV3 as the backbone network to enhance lightweight design and computational efficiency. The introduction of the p2BiFPN structure enhances small object detection, while the combination of ScConv convolution and the C2f module improves model accuracy and reduces parameters. Additionally, the inclusion of the coordinate attention mechanism further improves target localization accuracy. The YOLO-Peach network structure constructed in this study is shown in Figure 9.

Figure 9.

The structure of the YOLO-Peach network.

2.3. The Experimental Setup

To validate the performance of the proposed model, we built an experimental platform running Windows 11 with PyTorch 2.0 as the deep learning framework. The platform uses an Intel Core i5-1350P CPU with 16 GB of RAM and a GeForce RTX 4050 GPU with 6 GB of VRAM.

2.4. Evaluation Index

In this study, we adopted four evaluation metrics to assess the performance of the lightweight YOLOv8 model in young peach fruit recognition: Precision (P), Recall (R), mean Average Precision at an IoU threshold of 0.5 (mAP50), and mean Average Precision averaged over IoU thresholds from 0.5 to 0.95 (mAP50-95). Precision measures the proportion of true positives among all positive predictions. Recall measures the proportion of actual positive instances that the model detects. mAP50 is the average precision over all categories at an IoU threshold of 50%. mAP50-95 averages the precision over IoU thresholds from 50% to 95% in steps of 5%. It therefore rewards tighter localization and gives a stricter evaluation than mAP50. The formulas for these evaluation metrics are as follows:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

$$AP = \int_{0}^{1} P(R)\,dR$$

$$\mathrm{mAP50} = \frac{1}{N}\sum_{i=1}^{N} AP_i^{50}, \qquad \mathrm{mAP50\text{-}95} = \frac{1}{10}\sum_{t \in \{0.50, 0.55, \ldots, 0.95\}} \mathrm{mAP}_t$$

The symbols are defined as follows:

- TP, FP, and FN are the numbers of true positives, false positives, and false negatives.
- $P(R)$ is the precision as a function of recall.
- N is the number of categories.
- $AP_i^{50}$ is the average precision for the i-th category at an IoU threshold of 0.5.
- $\mathrm{mAP}_t$ is the mAP at IoU threshold t.
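
The metric definitions above can be made concrete with a small single-class sketch in plain Python. It is one common convention, not necessarily the evaluation code used here. Detections are matched greedily to unmatched ground-truth boxes in order of descending score. AP uses all-point interpolation, the area under the monotone precision envelope. Evaluation toolkits differ in matching and interpolation details (COCO-style evaluation uses 101 recall points), so values may differ slightly from reported ones. Boxes are assumed to be (x1, y1, x2, y2) corners.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2) corner coordinates."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def match_detections(detections, ground_truths, iou_threshold=0.5):
    """Label detections (score, box) as TP (1) or FP (0), highest score first.

    Each ground-truth box can be matched at most once.
    """
    used = [False] * len(ground_truths)
    flags = []
    for _, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(ground_truths):
            if not used[j]:
                iou = box_iou(box, gt)
                if iou > best_iou:
                    best_iou, best_j = iou, j
        if best_j >= 0 and best_iou >= iou_threshold:
            used[best_j] = True
            flags.append(1)
        else:
            flags.append(0)
    return flags

def precision_recall(flags, num_gt):
    """P = TP / (TP + FP), R = TP / (TP + FN) from score-sorted TP flags."""
    tp = sum(flags)
    fp = len(flags) - tp
    fn = num_gt - tp
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def average_precision(flags, num_gt):
    """All-point interpolated AP: area under the monotone precision envelope."""
    if num_gt == 0:
        return 0.0
    tp = fp = 0
    recalls, precisions = [], []
    for f in flags:
        tp += f
        fp += 1 - f
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    mrec = [0.0] + recalls + [1.0]
    mpre = [1.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):  # make precision non-increasing
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))

def map50_95_single_class(detections, ground_truths):
    """Average the AP over IoU thresholds 0.50, 0.55, ..., 0.95 for one class."""
    thresholds = [0.5 + 0.05 * k for k in range(10)]
    aps = [average_precision(match_detections(detections, ground_truths, t),
                             len(ground_truths)) for t in thresholds]
    return sum(aps) / len(aps)

if __name__ == "__main__":
    print(round(box_iou((0, 0, 2, 2), (1, 1, 3, 3)), 4))  # 0.1429
    print(average_precision([1, 1, 0, 1], num_gt=4))      # 0.6875
```

For a single category such as peach seedling fruit, N = 1, so mAP50 reduces to the AP at an IoU of 0.5.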

3. Results

3.1. The Comparison of Experimental Results with Other Models

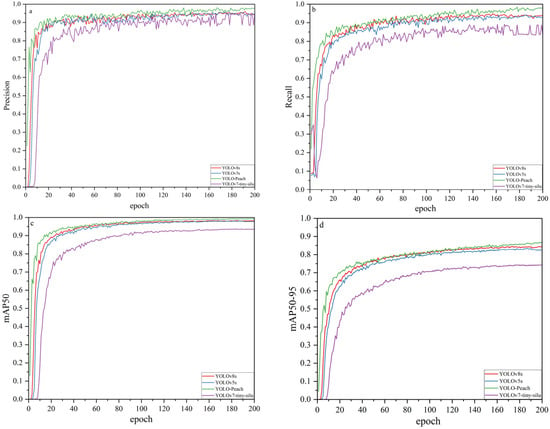

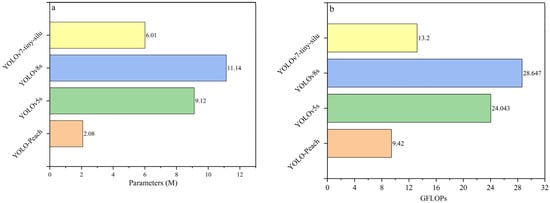

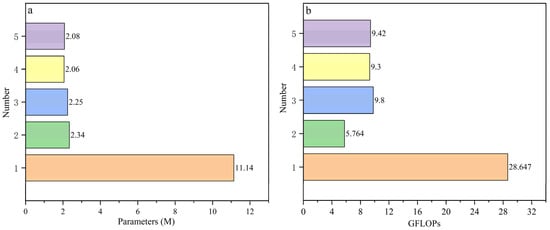

In this study, we built the YOLO-Peach model for detecting and counting young peach fruits on the basis of the YOLOv8s single-stage object detection algorithm. The model was obtained through four changes:

- replacing the backbone with a lightweight network;
- adopting the p2BiFPN neck with a small object detection layer;
- combining the ScConv and C2f modules to reduce parameters and floating-point operations while improving accuracy;
- introducing the coordinate attention mechanism to strengthen the model's focus on key targets.

To evaluate its performance, we compared YOLO-Peach with three existing YOLO series models: YOLOv5s, YOLOv7-tiny-silu, and YOLOv8s. The training curves are shown in Figure 10, and the accuracy, parameter count, and computational cost of each model are presented in Table 1 and Figure 11.

Figure 10.

Training curves of the different models: (a) precision over training iterations, (b) recall over training iterations, (c) mAP50 (mean Average Precision at 50% Intersection over Union) across iterations, and (d) mAP50-95, which measures performance over Intersection over Union thresholds from 50% to 95%.

Table 1.

The precision of different models.

Figure 11.

The number of parameters and GFLOPs diagrams for different models: (a) represents the model’s parameter count, and (b) illustrates the model’s GFLOPs.

In Figure 10, the YOLO-Peach curves rise sharply in the early epochs, indicating that YOLO-Peach adapts quickly and that its detection accuracy improves rapidly at the start of training. As training progresses, the improvement gradually slows and the curves flatten, indicating that performance has stabilized at a high level of detection accuracy. In the later stages of training, the YOLO-Peach curves remain above those of the other models, underscoring its strong performance in detecting young peach fruits. The curves of YOLOv8s and YOLOv5s also rise with the number of iterations, but their overall performance stays below that of YOLO-Peach. YOLOv7-tiny-silu shows the largest gap relative to YOLO-Peach.

As shown in Table 1 and Figure 11, YOLO-Peach achieves the best results on all accuracy metrics: a precision (P) of 0.979, a recall (R) of 0.979, an mAP50 of 0.993, and an mAP50-95 of 0.867. It has 2,077,050 parameters and a computational cost of 9.42 GFLOPs, so it keeps model complexity and computational requirements low while maintaining high accuracy.

- YOLOv8s ranks second in accuracy, with a P of 0.952, an R of 0.936, an mAP50 of 0.982, and an mAP50-95 of 0.843. However, it has the highest parameter count and computational cost of the models compared, at 11,135,987 parameters and 28.647 GFLOPs.
- YOLOv5s is slightly less accurate than YOLOv8s overall, although its recall is marginally higher (a P of 0.947, an R of 0.937, an mAP50 of 0.981, and an mAP50-95 of 0.834). It has fewer parameters than YOLOv8s, but its parameter count and computational cost (9,122,579 parameters and 24.043 GFLOPs) remain much higher than those of YOLO-Peach.
- YOLOv7-tiny-silu achieves a precision of 0.945, a recall of 0.836, an mAP50 of 0.935, and an mAP50-95 of 0.743, with 6,014,038 parameters and 13.2 GFLOPs. Although it is relatively balanced in parameter count and computational cost, it falls short of YOLO-Peach in accuracy.
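
The relative savings implied by the YOLO-Peach and YOLOv8s figures quoted above can be worked out directly. The short Python calculation below uses only the values reported in this section. It is arithmetic on those reported numbers, not a re-measurement.

```python
# Values as reported in the text (Table 1 and Figure 11).
yolo_peach = {"P": 0.979, "R": 0.979, "mAP50": 0.993, "mAP50-95": 0.867,
              "params": 2_077_050, "gflops": 9.42}
yolov8s = {"P": 0.952, "R": 0.936, "mAP50": 0.982, "mAP50-95": 0.843,
           "params": 11_135_987, "gflops": 28.647}

def relative_reduction(new, old):
    """Fraction by which `new` is smaller than `old`."""
    return 1.0 - new / old

param_cut = relative_reduction(yolo_peach["params"], yolov8s["params"])
flops_cut = relative_reduction(yolo_peach["gflops"], yolov8s["gflops"])
# Absolute gain in each accuracy metric, rounded to the reported precision.
gains = {k: round(yolo_peach[k] - yolov8s[k], 3) for k in ("P", "R", "mAP50", "mAP50-95")}

if __name__ == "__main__":
    print(f"parameters reduced by {param_cut:.1%}")  # parameters reduced by 81.3%
    print(f"GFLOPs reduced by {flops_cut:.1%}")      # GFLOPs reduced by 67.1%
    print(gains)
```

Put differently, YOLO-Peach uses roughly one fifth of the parameters and one third of the computation of YOLOv8s. At the same time, it gains 0.027 in precision, 0.043 in recall, 0.011 in mAP50, and 0.024 in mAP50-95.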

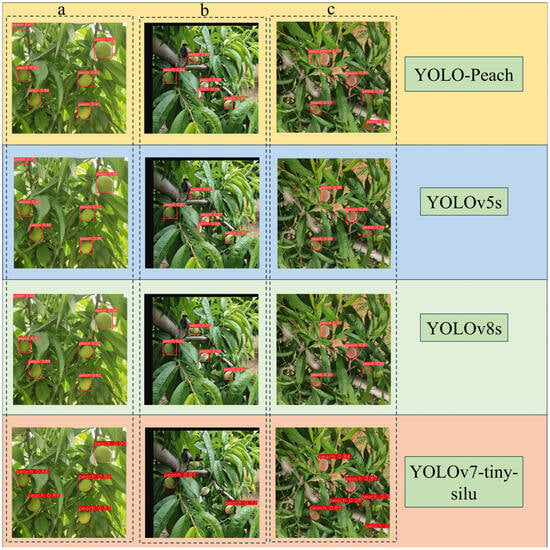

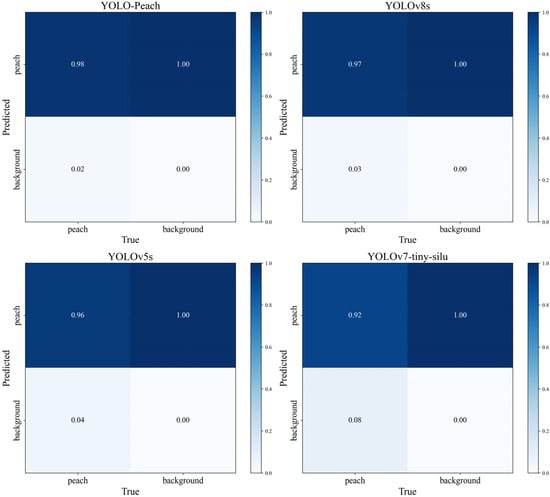

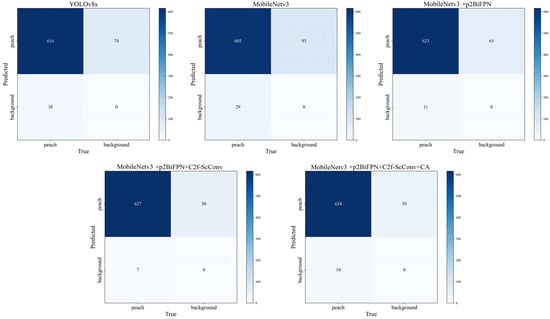

To present the detection performance of the different models more intuitively, we show their detection results in Figure 12 and their normalized confusion matrices in Figure 13. In Figure 13, YOLO-Peach has the highest proportion of correctly detected peaches, 0.98, indicating the best detection performance. YOLOv8s follows with 0.97, and YOLOv5s ranks third with 0.96. YOLOv7-tiny-silu performs worst, with a correct detection proportion of 0.92.

Figure 12.

The detection results of different models: (a) images captured under backlight conditions, (b) images processed by augmentation, and (c) images taken under front-light conditions. Each ☐ box in the images marks a detected peach seedling fruit, and the number following the class label is the confidence score of that detection box.

Figure 13.

The normalized confusion matrices for different models.

3.2. Ablation Experiments

In this study, YOLOv8s was adopted as the baseline and improved for peach fruit recognition by incorporating four key components: MobileNetV3, p2BiFPN, C2f-ScConv, and Coordinate Attention. A series of ablation experiments quantified the impact of each component on model performance. The results are presented in Table 2, and the parameter count and GFLOPs of each model are shown in Figure 14.

Table 2.

Results of ablation experiments.

Figure 14.

The number of parameters and GFLOPs of the models in the ablation experiments: (a) the parameter count and (b) the GFLOPs.

Table 2 and Figure 14 show how each added component changed performance:

- MobileNetV3 backbone: Accuracy fell slightly relative to the YOLOv8s baseline. Precision dropped to 0.944, recall to 0.924, mAP50 to 0.975, and mAP50-95 to 0.821.
- MobileNetV3 with P2 + BiFPN: Performance improved substantially, to a precision of 0.965, a recall of 0.952, an mAP50 of 0.989, and an mAP50-95 of 0.855. This indicates that P2 + BiFPN strengthens the model's feature fusion.
- Adding C2f-ScConv: Precision rose further to 0.978, recall to 0.965, mAP50 to 0.991, and mAP50-95 to 0.860.
- Complete YOLO-Peach model (with coordinate attention): Performance was best, with a precision and recall of 0.979, an mAP50 of 0.993, and an mAP50-95 of 0.867.

These results support the view that the components act synergistically to improve overall performance.

To assess the effect of each enhancement more directly, we used confusion matrices, shown in Figure 15.

- MobileNetV3 in the backbone: The number of correctly identified peach seedling fruits fell by 11, and the number of false background identifications rose by 19.
- MobileNetV3 with P2 + BiFPN: The model outperformed the baseline YOLOv8s, with 7 more correct identifications and 13 fewer false background identifications.
- Adding the ScConv and C2f modules on top of MobileNetV3 and P2 + BiFPN: Compared with the baseline YOLOv8s, the model made 11 more correct identifications and 24 fewer false background identifications.
- Adding the CA attention mechanism: The number of correct identifications decreased slightly, by 3, but the number of false background identifications decreased substantially, by 11.

Figure 15. Confusion matrices for the ablation experiments.
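The counts discussed above come from matching predictions to ground-truth boxes. For a single class such as peach fruit, the sketch below shows one common way to derive them: predictions are ranked by confidence and greedily matched to still-unmatched ground-truth boxes at IoU ≥ 0.5. A matched prediction counts as a correct identification, an unmatched prediction as a background region falsely detected as fruit, and an unmatched ground-truth box as a missed fruit. The IoU threshold, the (x1, y1, x2, y2) box format, and the function names are assumptions for illustration; the matrices in Figure 15 were produced by the authors' own pipeline, which may differ in detail (for example, in confidence filtering).

```python
def box_iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def confusion_counts(preds, gts, iou_thr=0.5):
    """preds: list of (box, score); gts: list of boxes; single class (hypothetical helper)."""
    matched = set()
    tp = 0
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = None, iou_thr
        for j, gt in enumerate(gts):
            if j in matched:
                continue  # each ground-truth fruit can be matched only once
            iou = box_iou(box, gt)
            if iou >= best_iou:
                best_j, best_iou = j, iou
        if best_j is not None:
            matched.add(best_j)
            tp += 1
    return {
        "correct": tp,                        # fruit predicted as fruit
        "background_false": len(preds) - tp,  # background predicted as fruit
        "missed": len(gts) - tp,              # fruit predicted as background
    }

gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [((1, 1, 10, 10), 0.9), ((50, 50, 60, 60), 0.8), ((0, 0, 10, 10), 0.7)]
print(confusion_counts(preds, gts))
# → {'correct': 1, 'background_false': 2, 'missed': 1}
```

In the toy example the third box duplicates an already matched fruit, so it is counted as a background false detection; suppressing such duplicates is one way a detector can lower that count.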

4. Discussion

The YOLO-Peach model developed in this study contributes to agricultural automation, specifically to the identification and counting of young peach fruits. Adopting MobileNetV3 as the backbone reduced the model's parameter count and computational load without a substantial loss of detection accuracy, in line with studies that advocate lightweight architectures where computational resources are limited [43,44]. The P2 + BiFPN neck improved precision, albeit at the cost of additional parameters and computation [45], consistent with research showing that feature pyramid networks strengthen object detection [46]. Embedding ScConv in the C2f module of the neck, on top of the MobileNetV3 and P2 + BiFPN framework, reduced the computational footprint while further improving accuracy, reflecting the broader trend toward more efficient and compact network architectures. Finally, the CA attention mechanism improved the model's robustness to background interference, as reflected in the 11 fewer background false detections in the ablation study; this agrees with the growing body of work showing that attention mechanisms improve the accuracy of object detectors [47].
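To make the feature-fusion argument concrete, the sketch below shows the fast normalized fusion used at each BiFPN node in EfficientDet (Tan et al.), where learnable non-negative weights decide how much each input level contributes: O = Σ_i w_i · I_i / (ε + Σ_j w_j). Here a high-resolution P2 map is fused with an upsampled P3 map, the kind of path that an added P2 level provides for small targets. It is a NumPy illustration under stated assumptions: channel counts are assumed to be aligned already (the 1×1 convolutions, the convolution after fusion, and the training of the weights are omitted), nearest-neighbour upsampling is assumed, and the shapes and names are hypothetical rather than taken from YOLO-Peach.

```python
import numpy as np

def fast_normalized_fusion(features, raw_weights, eps=1e-4):
    """BiFPN-style weighted fusion of same-shape feature maps."""
    w = np.maximum(np.asarray(raw_weights, dtype=float), 0.0)  # ReLU keeps weights non-negative
    w = w / (eps + w.sum())                                    # normalize without a softmax
    return sum(wi * f for wi, f in zip(w, features))

def upsample2x(f):
    """Nearest-neighbour 2x upsampling of a (C, H, W) feature map."""
    return f.repeat(2, axis=1).repeat(2, axis=2)

# Hypothetical shapes: P2 has stride 4 and P3 stride 8, so P3 is half the size of P2
p2 = np.ones((8, 16, 16))
p3 = 3.0 * np.ones((8, 8, 8))
fused = fast_normalized_fusion([p2, upsample2x(p3)], raw_weights=[1.0, 1.0])
print(fused.shape, round(float(fused[0, 0, 0]), 3))  # → (8, 16, 16) 2.0
```

With equal weights the node returns (almost exactly) the average of its inputs; a negative raw weight is clipped to zero, so that input is ignored. In a trained network these weights are learned separately for every fusion node.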

The implications of these findings extend beyond the identification of young peach fruits. Because the design of YOLO-Peach is lightweight enough for low-cost hardware, it offers practical benefits for agricultural applications with constrained resources. Its high precision and recall (both 0.979) and its mAP50 of 0.993 indicate its potential for real-world deployment in precision farming.
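The parameter savings behind this lightweight design come largely from depthwise separable convolutions, the building block that MobileNetV3 inherits from earlier MobileNets. The arithmetic below compares a standard 3×3 convolution with a depthwise 3×3 plus pointwise 1×1 pair. The 128-to-256 channel sizes are hypothetical, bias terms are ignored, and the results are not the parameter counts of YOLO-Peach (MobileNetV3 blocks also contain expansion layers and squeeze-and-excitation modules that are not counted here).

```python
def standard_conv_params(c_in, c_out, k):
    """Weights in a standard k x k convolution (bias omitted)."""
    return c_in * c_out * k * k

def depthwise_separable_params(c_in, c_out, k):
    """Depthwise k x k conv (one filter per input channel) followed by a pointwise 1x1 conv."""
    return c_in * k * k + c_in * c_out

std = standard_conv_params(128, 256, 3)        # 128 * 256 * 9 = 294,912
sep = depthwise_separable_params(128, 256, 3)  # 1,152 + 32,768 = 33,920
print(std, sep, round(std / sep, 2))           # → 294912 33920 8.69
```

The ratio equals 1 / (1/c_out + 1/k²), so for 3×3 kernels the saving approaches ninefold as the number of output channels grows.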

Future research should adapt the YOLO-Peach model to a broader range of fruit species and environmental conditions, extending its utility in agricultural automation and precision farming and contributing to more sustainable agriculture. Integrating the model with other sensing platforms, such as drones or IoT devices, could further enhance its capabilities and broaden its applicability across agricultural scenarios.

In summary, the YOLO-Peach model, built on the lightweight YOLOv8 framework, performed strongly in identifying young peach fruits. Its efficiency and precision, confirmed by the experimental results, make it a practical tool for improving orchard management. The findings support the model's suitability for real-world applications and provide a foundation for developing similar models. As the agricultural sector continues to evolve, demand for solutions such as YOLO-Peach is likely to grow, moving the field toward more sustainable and efficient practices.

5. Conclusions

This research developed a lightweight YOLOv8-based model for identifying and counting young peach fruits, with the goal of making orchard management more scientific and efficient. By combining deep learning with a lightweight design, the proposed YOLO-Peach model identifies and counts young peach fruits efficiently and is well suited to agricultural environments with limited resources. Experimental results show that YOLO-Peach achieves a precision and recall of 0.979, an mAP50 of 0.993, and an mAP50-95 of 0.867, confirming its efficiency and precision for real-world applications. Using MobileNetV3 as the backbone substantially reduces the parameter count and computational demand while maintaining high detection accuracy, confirming the value of lightweight design in resource-restricted settings. The P2 + BiFPN structure in the neck markedly strengthens the detection of small targets, especially under occlusion and scale variation, by improving the exchange and fusion of feature information. Combining ScConv with the C2f module further improves feature extraction by reducing spatial and channel redundancy, which increases the precision of target localization. The CA attention mechanism directs the model toward critical image regions, particularly those containing small targets, improving detection accuracy and robustness. In conclusion, YOLO-Peach offers a robust solution for improving the precision and efficiency of agricultural management practices.
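The statement that CA helps the model concentrate on small targets rests on how it encodes position. Unlike channel attention that pools the whole feature map into one value per channel, coordinate attention (Hou et al.) pools separately along the height and width axes, so the resulting gates vary by row and by column. The NumPy sketch below follows that structure for a single (C, H, W) feature map, writing the 1×1 convolutions as matrix products. Batch normalization is omitted, h-swish is assumed for the non-linearity, the weights are random placeholders rather than trained values, and the reduction ratio r = 4 and all names are hypothetical.

```python
import numpy as np

def h_swish(x):
    return x * np.clip(x + 3.0, 0.0, 6.0) / 6.0

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def coordinate_attention(x, w_reduce, w_h, w_w):
    """x: (C, H, W); w_reduce: (C_mid, C); w_h, w_w: (C, C_mid). BN omitted."""
    _, h, _ = x.shape
    z_h = x.mean(axis=2)                    # (C, H): pool along width
    z_w = x.mean(axis=1)                    # (C, W): pool along height
    y = np.concatenate([z_h, z_w], axis=1)  # (C, H + W)
    f = h_swish(w_reduce @ y)               # shared 1x1 conv + non-linearity -> (C_mid, H + W)
    f_h, f_w = f[:, :h], f[:, h:]           # split back into the two directions
    g_h = sigmoid(w_h @ f_h)                # (C, H) row-wise gates
    g_w = sigmoid(w_w @ f_w)                # (C, W) column-wise gates
    return x * g_h[:, :, None] * g_w[:, None, :]

rng = np.random.default_rng(0)
C, H, W, r = 16, 8, 12, 4
x = rng.standard_normal((C, H, W))
out = coordinate_attention(
    x,
    0.1 * rng.standard_normal((C // r, C)),
    0.1 * rng.standard_normal((C, C // r)),
    0.1 * rng.standard_normal((C, C // r)),
)
print(out.shape)  # → (16, 8, 12)
```

Because every gate lies between 0 and 1, the block can only re-weight features; after training, rows and columns that contain small fruits can keep larger gates than the surrounding background.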
For future research, we advocate for the model’s adaptation to diverse fruit species and environmental contexts, aiming to extend its utility in the realm of agricultural automation and precision farming, thus broadening its impact on sustainable agricultural advancements.

Author Contributions

Conceptualization, Y.S. and S.Q.; methodology, Y.S.; software, S.Q.; validation, Y.S., S.Q. and L.Z.; formal analysis, F.W.; investigation, F.W.; resources, Y.S.; data curation, X.Y. and M.Q.; writing—original draft preparation, S.Q.; writing—review and editing, Y.S.; visualization, X.Y. and M.Q.; supervision, Y.S.; project administration, Y.S.; funding acquisition, Y.S. and L.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (No. 52309050), Key R&D and Promotion Projects in Henan Province (Science and Technology Development) (No. 232102110264, No. 222102110452), PhD research startup foundation of Henan University of Science and Technology (No. 13480033), and Key Scientific Research Projects of Colleges and Universities in Henan Province (No. 24B416001).

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

We would like to thank a fruit orchard located in Luoyang City, Henan Province, China, for providing the database used in this study.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

1. Wang, Q.; Wu, D.; Sun, Z.; Zhou, M.; Cui, D.; Xie, L.; Hu, D.; Rao, X.; Jiang, H.; Ying, Y. Design, integration, and evaluation of a robotic peach packaging system based on deep learning. Comput. Electron. Agric. 2023, 211, 108013.

2. Byrne, D.H.; Raseira, M.B.; Bassi, D.; Piagnani, M.C.; Gasic, K.; Reighard, G.L.; Moreno, M.A.; Pérez, S. Peach. Fruit Breed. 2012, 505–569.

3. Sun, L.; Yao, J.; Cao, H.; Chen, H.; Teng, G. Improved YOLOv5 Network for Detection of Peach Blossom Quantity. Agriculture 2024, 14, 126.

4. Lakso, A.N.; Robinson, T.L. Principles of orchard systems management optimizing supply, demand and partitioning in apple trees. Acta Hortic. 1997, 451, 405–416.

5. Costa, G.; Botton, A.; Vizzotto, G. Fruit thinning: Advances and trends. Hortic. Rev. 2018, 46, 185–226.

6. Sutton, M.; Doyle, J.; Chavez, D.; Malladi, A. Optimizing fruit-thinning strategies in peach (Prunus persica) production. Horticulturae 2020, 6, 41.

7. Vanheems, B. How to Thin Fruit for a Better Harvest. 2015. Available online: https://www.growveg.com/guides/how-to-thin-fruit-for-a-better-harvest/ (accessed on 2 June 2024).

8. Kukunda, C.B.; Duque-Lazo, J.; González-Ferreiro, E.; Thaden, H.; Kleinn, C. Ensemble classification of individual Pinus crowns from multispectral satellite imagery and airborne LiDAR. Int. J. Appl. Earth Obs. Geoinf. 2018, 65, 12–23.

9. Costa, G.; Botton, A. Thinning in peach: Past, present and future of an indispensable practice. Sci. Hortic. 2022, 296, 110895.

10. Bhattarai, U.; Zhang, Q.; Karkee, M. Design, integration, and field evaluation of a robotic blossom thinning system for tree fruit crops. J. Field Robot. 2024, 41, 1366–1385.

11. Southwick, S.M.; Weis, K.G.; Yeager, J.T. Bloom Thinning ‘Loadel’ Cling Peach with a Surfactant. J. Am. Soc. Hortic. Sci. 1996, 121, 334–338.

12. Henila, M.; Chithra, P. Segmentation using fuzzy cluster-based thresholding method for apple fruit sorting. IET Image Process. 2020, 14, 4178–4187.

13. Hussain, M.; He, L.; Schupp, J.; Lyons, D.; Heinemann, P. Green fruit segmentation and orientation estimation for robotic green fruit thinning of apples. Comput. Electron. Agric. 2023, 207, 107734.

14. Tian, M.; Zhang, J.; Yang, Z.; Li, M.; Li, J.; Zhao, L. Detection of early bruises on apples using near-infrared camera imaging technology combined with adaptive threshold segmentation algorithm. J. Food Process Eng. 2024, 47, e14500.

15. Zhang, F.; Zhang, P.; Wang, L.; Cao, R.; Wang, X.; Huang, J. Research on lightweight crested ibis detection algorithm based on YOLOv5s. J. Xi’an Jiaotong Univ. 2023, 57, 110–121.

16. Zhang, Z.; Luo, M.; Guo, S.; Liu, G.; Li, S.; Zhang, Y. Cherry fruit detection method in natural scene based on improved YOLO v5. Trans. Chin. Soc. Agric. Mach. 2022, 53, 232–240.

17. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

18. Lin, Y.; Huang, Z.; Liang, Y.; Liu, Y.; Jiang, W. AG-YOLO: A Rapid Citrus Fruit Detection Algorithm with Global Context Fusion. Agriculture 2024, 14, 114.

19. Chakraborty, S.K.; Subeesh, A.; Dubey, K.; Jat, D.; Chandel, N.S.; Potdar, R.; Rao, N.G.; Kumar, D. Development of an optimally designed real-time automatic citrus fruit grading–sorting machine leveraging computer vision-based adaptive deep learning model. Eng. Appl. Artif. Intell. 2023, 120, 105826.

20. Mirbod, O.; Choi, D.; Heinemann, P.H.; Marini, R.P.; He, L. On-tree apple fruit size estimation using stereo vision with deep learning-based occlusion handling. Biosyst. Eng. 2023, 226, 27–42.

21. Rajasekar, L.; Sharmila, D.; Rameshkumar, M.; Yuwaraj, B.S. Performance Analysis of Fruits Classification System Using Deep Learning Techniques. In Advances in Machine Learning and Computational Intelligence; Patnaik, S., Yang, X.S., Sethi, I., Eds.; Algorithms for Intelligent Systems; Springer: Singapore, 2021.

22. Xiao, F.; Wang, H.; Xu, Y.; Zhang, R. Fruit detection and recognition based on deep learning for automatic harvesting: An overview and review. Agronomy 2023, 13, 1625.

23. Basri, H.; Syarif, I.; Sukaridhoto, S. Faster R-CNN implementation method for multi-fruit detection using tensorflow platform. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 7166–7172.

24. Gao, F.; Fu, L.; Zhang, X.; Majeed, Y.; Li, R.; Karkee, M.; Zhang, Q. Multi-class fruit-on-plant detection for apple in SNAP system using Faster R-CNN. Comput. Electron. Agric. 2020, 176, 105634.

25. Xu, D.; Xiong, H.; Liao, Y.; Wang, H.; Yuan, Z.; Yin, H. EMA-YOLO: A Novel Target-Detection Algorithm for Immature Yellow Peach Based on YOLOv8. Sensors 2024, 24, 3783.

26. Vasconez, J.P.; Delpiano, J.; Vougioukas, S.; Cheein, F.A. Comparison of convolutional neural networks in fruit detection and counting: A comprehensive evaluation. Comput. Electron. Agric. 2020, 173, 105348.

27. Jia, W.; Wei, J.; Zhang, Q.; Pan, N.; Niu, Y.; Yin, X.; Ding, Y.; Ge, X. Accurate segmentation of green fruit based on optimized mask RCNN application in complex orchard. Front. Plant Sci. 2022, 13, 955256.

28. Gai, R.; Chen, N.; Yuan, H. A detection algorithm for cherry fruits based on the improved YOLO-v4 model. Neural Comput. Appl. 2023, 35, 13895–13906.

29. Wang, D.; He, D. Channel pruned YOLO V5s-based deep learning approach for rapid and accurate apple fruitlet detection before fruit thinning. Biosyst. Eng. 2021, 210, 271–281.

30. Bai, Y.; Yu, J.; Yang, S.; Ning, J. An improved YOLO algorithm for detecting flowers and fruits on strawberry seedlings. Biosyst. Eng. 2024, 237, 1–12.

31. Liu, G.; Hu, Y.; Chen, Z.; Guo, J.; Ni, P. Lightweight object detection algorithm for robots with improved YOLOv5. Eng. Appl. Artif. Intell. 2023, 123, 106217.

32. Zeng, S.; Yang, W.; Jiao, Y.; Geng, L.; Chen, X. SCA-YOLO: A new small object detection model for UAV images. Vis. Comput. 2024, 40, 1787–1803.

33. Arifando, R.; Eto, S.; Wada, C. Improved YOLOv5-based lightweight object detection algorithm for people with visual impairment to detect buses. Appl. Sci. 2023, 13, 5802.

34. Deng, L.; Bi, L.; Li, H.; Chen, H.; Duan, X.; Lou, H.; Zhang, H.; Bi, J.; Liu, H. Lightweight aerial image object detection algorithm based on improved YOLOv5s. Sci. Rep. 2023, 13, 7817.

35. Zhang, H.; Zhou, X.; Shi, Y.; Guo, X.; Liu, H. Object Detection Algorithm of Transmission Lines Based on Improved YOLOv5 Framework. J. Sens. 2024, 2024, 5977332.

36. Yang, G.; Wang, J.; Nie, Z.; Yang, H.; Yu, S. A lightweight YOLOv8 tomato detection algorithm combining feature enhancement and attention. Agronomy 2023, 13, 1824.

37. Howard, A.; Sandler, M.; Chu, G.; Chen, L.-C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V. Searching for MobileNetV3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

38. Tan, M.; Chen, B.; Pang, R.; Vasudevan, V.; Sandler, M.; Howard, A.; Le, Q.V. MnasNet: Platform-aware neural architecture search for mobile. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2820–2828.

39. Wang, X.; Gao, H.; Jia, Z.; Li, Z. BL-YOLOv8: An improved road defect detection model based on YOLOv8. Sensors 2023, 23, 8361.

40. Li, J.; Wen, Y.; He, L. SCConv: Spatial and channel reconstruction convolution for feature redundancy. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 6153–6162.

41. Ju, Z.; Zhou, Z.; Qi, Z.; Yi, C. H2MaT-Unet: Hierarchical hybrid multi-axis transformer based Unet for medical image segmentation. Comput. Biol. Med. 2024, 174, 108387.

42. Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13713–13722.

43. Guo, G.; Zhang, Z. Road damage detection algorithm for improved YOLOv5. Sci. Rep. 2022, 12, 15523.

44. Jia, L.; Wang, T.; Chen, Y.; Zang, Y.; Li, X.; Shi, H.; Gao, L. MobileNet-CA-YOLO: An improved YOLOv7 based on the MobileNetV3 and attention mechanism for Rice pests and diseases detection. Agriculture 2023, 13, 1285.

45. Shang, J.; Wang, J.; Liu, S.; Wang, C.; Zheng, B. Small target detection algorithm for UAV aerial photography based on improved YOLOv5s. Electronics 2023, 12, 2434.

46. Juanjuan, Z.; Xiaohan, H.; Zebang, Q.; Guangqiang, Y. Small Object Detection Algorithm Combining Coordinate Attention Mechanism and P2-BiFPN Structure. In Proceedings of the International Conference on Computer Engineering and Networks, Wuxi, China, 3–5 November 2023; Springer Nature: Singapore, 2023; pp. 268–277.

47. Li, G.; Shi, G.; Jiao, J. YOLOv5-KCB: A new method for individual pig detection using optimized K-means, CA attention mechanism and a bi-directional feature pyramid network. Sensors 2023, 23, 5242.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).